UCLA Extension Short Course

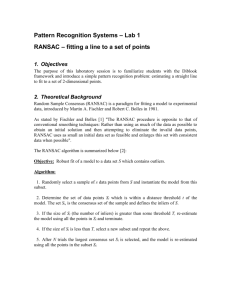

EM and RANSAC
Jana Kosecka, CS 223b

EM (Expectation Maximization)
Brief tutorial by example:
EM is a well-known statistical technique for estimating models from data.
Set up: given a set of data points generated by multiple models, estimate the parameters of the models and the assignment of the data points to the models.
Here: a set of points in the plane with coordinates (x, y), and two lines with parameters (a1, b1) and (a2, b2).
1. Guess the line parameters and estimate the error of each point with respect to the current model.
2. Estimate the expectation (a weight for each point).

EM
Maximization step:
Traditional least squares:
Here weighted least squares:
Iterate until no change.
Problems: local minima; how many models?

EM - example

Difficulty in motion estimation using wide-baseline matching

Least-squares estimator can't tolerate any outlier
[Figure: two plots of the same points, labeled Inliers and Outlier; one shows the erroneous line, the other the correct line.]
A robust technique is needed to solve the problem.

Robust estimators for dealing with outliers
• Use robust objective functions
  • The M-estimator and the Least Median of Squares (LMedS) estimator
  • Neither of them can tolerate more than 50% outliers
• The RANSAC (RANdom SAmple Consensus) algorithm
  • Proposed by Fischler and Bolles
  • The most popular technique used in the computer vision community
  • It can tolerate more than 50% outliers

The RANSAC algorithm
• Generate M (a predetermined number) model hypotheses, each computed from a minimal subset of points.
• Evaluate each hypothesis:
  • Compute its residuals with respect to all data points.
  • Points with residuals less than some threshold are classified as its inliers.
• The hypothesis with the maximal number of inliers is chosen. Then re-estimate the model parameters using its identified inliers.
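
The RANSAC steps above can be sketched for the simplest case, fitting a single line y = a*x + b to points in the plane. This is a minimal illustration, not the implementation used in the course: the function names (`fit_line`, `ransac_line`), the default values for M and the threshold, the use of vertical distance as the residual, and the toy data are all assumptions made for the example.

```python
import random


def fit_line(points):
    """Ordinary least-squares fit of y = a*x + b; returns (a, b)."""
    n = len(points)
    mean_x = sum(x for x, _ in points) / n
    mean_y = sum(y for _, y in points) / n
    sxx = sum((x - mean_x) ** 2 for x, _ in points)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in points)
    a = sxy / sxx
    return a, mean_y - a * mean_x


def ransac_line(points, num_hypotheses=100, threshold=0.5, seed=0):
    """Return ((a, b), inliers) for the hypothesis with the most inliers.

    num_hypotheses plays the role of M; threshold is the residual cutoff.
    Both defaults are illustrative assumptions.
    """
    rng = random.Random(seed)
    best_inliers = []
    for _ in range(num_hypotheses):
        # Minimal subset for a line: two points.
        (x1, y1), (x2, y2) = rng.sample(points, 2)
        if x1 == x2:
            continue  # vertical line cannot be written as y = a*x + b
        a = (y2 - y1) / (x2 - x1)
        b = y1 - a * x1
        # Residual (assumed here): vertical distance of each point to the line.
        inliers = [(x, y) for x, y in points if abs(y - (a * x + b)) < threshold]
        if len(inliers) > len(best_inliers):
            best_inliers = inliers
    if len(best_inliers) < 2:
        raise ValueError("no usable hypothesis found")
    # Re-estimate the model from the inliers of the best hypothesis.
    return fit_line(best_inliers), best_inliers


# Toy data: ten points on y = 2x + 1 plus two gross outliers.
points = [(float(x), 2.0 * x + 1.0) for x in range(10)] + [(3.0, 20.0), (7.0, -5.0)]
(a, b), inliers = ransac_line(points)
print(f"a = {a:.3f}, b = {b:.3f}, inliers = {len(inliers)} of {len(points)}")
```

The final least-squares refit uses only the consensus set, so the two outliers no longer influence the estimate.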

RANSAC – Practice
The theoretical number of samples needed to ensure 95% confidence that at least one outlier-free sample is obtained.
It has been noticed that the theoretical estimates are wildly optimistic.
Usually the actual number of required samples is almost an order of magnitude more than the theoretical estimate.

More correspondences and robust matching
Select a set of putative correspondences.
Repeat:
1. Select at random a set of 8 successful matches.
2. Compute the fundamental matrix.
3. Determine the subset of inliers; compute the distance to the epipolar line.
4. Count the number of points in the consensus set.

RANSAC in action
[Figure panels: Inliers, Outliers]

Epipolar Geometry
• Epipolar geometry in two views
• Refined epipolar geometry using nonlinear estimation of F

The difficulty in applying RANSAC
Drawbacks of the standard RANSAC algorithm:
• Requires a large number of samples for data with many outliers (exactly the data that we are dealing with)
• Needs to know the outlier ratio to estimate the number of samples
• Requires a threshold for determining whether points are inliers
Various improvements to standard approaches [Torr’99, Murray’02, Nister’04, Matas’05, Sutter’05 and many others]:
• Still rely on finding outlier-free samples.

Robust technique – result
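
The theoretical sample count mentioned under "RANSAC – Practice" is commonly derived as follows: if a fraction e of the data are outliers and each minimal sample has s points, a sample is outlier-free with probability (1 - e)^s, so N = log(1 - p) / log(1 - (1 - e)^s) samples give confidence p that at least one is clean. The sketch below evaluates this standard formula; the function name and the printed outlier ratios are assumptions for illustration, and the slides' own table of values is not reproduced here.

```python
import math


def required_samples(confidence, outlier_ratio, sample_size):
    """Samples needed so that, with probability `confidence`,
    at least one minimal sample contains no outliers."""
    if not 0.0 < confidence < 1.0:
        raise ValueError("confidence must be strictly between 0 and 1")
    if not 0.0 <= outlier_ratio < 1.0:
        raise ValueError("outlier_ratio must be in [0, 1)")
    # Probability that one random minimal sample is outlier-free.
    p_clean = (1.0 - outlier_ratio) ** sample_size
    if p_clean >= 1.0:
        return 1  # no outliers: a single sample suffices
    if p_clean == 0.0:
        raise ValueError("outlier-free sample probability underflowed to zero")
    # log1p(-p_clean) = log(1 - p_clean), computed accurately for small p_clean.
    return math.ceil(math.log(1.0 - confidence) / math.log1p(-p_clean))


# 8-point samples (as in the fundamental-matrix loop), 95% confidence.
for ratio in (0.1, 0.3, 0.5, 0.7):
    print(f"outlier ratio {ratio:.1f}: {required_samples(0.95, ratio, 8)} samples")
```

The count grows steeply with both the outlier ratio and the sample size, which is the first drawback listed under "The difficulty in applying RANSAC".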