n - Department of Electrical Engineering & Computer Science

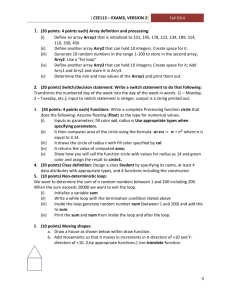

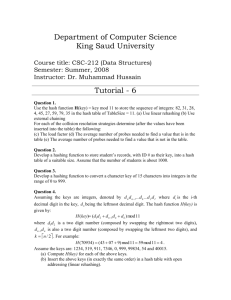

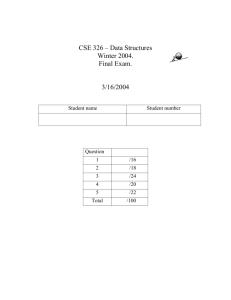

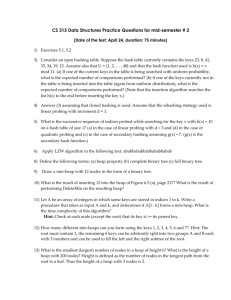

advertisement

Midterm Review

Jeff Edmonds

York University

Abstract Data Types

Positions and Pointers

Loop Invariants

System Invariants

Time Complexity

Classifying Functions

Adding Made Easy

Understand Quantifiers

Recursion

Balanced Trees

Heaps

Huffman Codes

Hash Tables

Graphs

Paradigms

COSC 20111

Midterm Review

• Review slides.

2

Midterm Review

• Review slides.

3

Midterm Review

• Review slides.

4

Midterm Review

• Review slides.

5

Midterm Review

• Review slides.

6

Midterm Review

• Review slides.

• Review the assignment notes and solutions!

7

Midterm Review

• Review slides.

• Review the assignment notes and solutions!

• Review 3101

• Steps0: Basic Math

• First Order Logic

• Time Complexity

• Logs and Exp

• Growth Rates

• Adding Made Easy

• Recurrence Relations

8

Midterm Review

• Review slides.

• Review the assignment notes and solutions!

• Review 3101

• Steps0: Basic Math

• Steps1: Loop Invariants

• Steps2: Recursion

9

Abstract Data Types

Abstractions (Hierarchy)

Elements

Sets

Lists, Stacks, & Queues

Trees

Graphs

Iterators

Abstract Positions/Pointers

Lecture 1

Jeff Edmonds

York University

COSC 2011

10

Software Engineering

• Software must be:

– Readable and understandable

• Allows correctness to be verified, and software to be easily updated.

– Correct and complete

• Works correctly for all expected inputs

– Robust

• Capable of handling unexpected inputs.

– Adaptable

• All programs evolve over time. Programs should be designed so that

re-use, generalization and modification is easy.

– Portable

• Easily ported to new hardware or operating system platforms.

– Efficient

• Makes reasonable use of time and memory resources.

James Elder

11

Abstraction

Abstract Data Types (ADTs)

• An ADT is a model of a data structure that specifies

– The type of data stored

– Operations supported on these data

• An ADT does not specify how the data are stored or how

the operations are implemented.

• The abstraction of an ADT facilitates

– Design of complex systems. Representing complex data

structures by concise ADTs, facilitates reasoning about and

designing large systems of many interacting data structures.

– Encapsulation/Modularity. If I just want to use an object / data

structure, all I need to know is its ADT (not its internal workings).

James Elder

Abstract Data Types

Restricted Data Structure:

Some times we limit what operation can be done

• for efficiency

• understanding

Stack: A list, but elements can only be

pushed onto and popped from the top.

Queue: A list, but elements can only be

added at the end and removed from

the front.

• Important in handling jobs.

Priority Queue: The “highest priority” element

is handled next.

13

Data Structures Implementations

• Array List

– (Extendable) Array

• Node List

– Singly or Doubly Linked List

• Stack

– Array

– Singly Linked List

• Queue

– Circular Array

– Singly or Doubly Linked List

• Priority Queue

– Unsorted doubly-linked list

– Sorted doubly-linked list

– Heap (array-based)

• Adaptable Priority Queue

– Sorted doubly-linked list with

location-aware entries

– Heap with location-aware entries

• Tree

– Linked Structure

• Binary Tree

– Linked Structure

– Array

14

Positions and Pointers

Abstract Positions/Pointers

Positions in an Array

Pointers in C

References in Java

Implementing Positions in Trees

Building Trees

Lecture 2

Jeff Edmonds

York University

COSC 2011

15

High Level Positions/Pointers

Positions: Given a data structure,

we want to have one or more current elements

that we are considering.

Conceptualizations:

• Fingers in pies

• Pins on maps

• Little girl dancing there

• Me

See Goodrich Sec 7.3 Positional Lists

16

Implementations of Positions/Pointers

Positions/Pointers: Now lets redo it in Java.

head .next .next

= head .next;

5

2182

element next

2039

2182

element next

2182

2039

head

The right hand side of the “=” specifies a memory location.

So does its left hand side.

The action is to put the value contained in the first

into the second.

17

Implementing Positions in Trees

class LinkedBinaryTree {

class Node

{

E element;

Node parent;

Node left;

Node right;

}

private Node root = null;

tree

18

Implementing Positions in Trees

…

class LinkedBinaryTree {

tree

Position sibling(Position p) {

Node n=p;

if( n.parent != null )

if( n.parent.right = n )

return n.parent.left;

else

return n.parent.right;

else

} throw new IllegalArgumentException(“p is the root");

p1

p2

p3

}

At any time the user can move a position to the sibling.

p3 = tree.sibling(p2);

19

Implementing Positions in Trees

…

class LinkedBinaryTree {

tree

Position addRight(Position p,E e) {

Node n=p;

if( n.right = null )

n.right = new Node(e,n,null,null);

return n.right;

else

throw new IllegalArgumentException(

p1

p2

p3

Toronto

"p already has a right child");

}

At any time the user can add a position/node

to the right of a position.

p3 = tree.addRight(p2,“Toronto”);

20

Implementing Positions in Trees

class LinkedTree {

tree

Defining the class of trees

nodes can have many children.

We use the data structure Set or List

to store the Positions of a node’s

children.

21

Contracts, Assertions, and Invariants

Contracts

Assertions

Loop Invariants

The Sum of Objects

Insertion and Selection Sort

Binary Search Like Examples

Bucket (Quick) Sort for Humans

Reverse Polish Notation (Stack)

Whose Blocking your View (Stack)

Parsing (Stack)

Data Structure Invariants

Stack and Queue in an Array

Linked Lists

Lecture 3

Jeff Edmonds

York University

COSC 2011

22

On Step At a Time

Precondition

Postcondition

It can be difficult to understand

where computation go.

I implored you to not worry

about the entire computation.

0

i-1

next

i

Trust who passes you

the baton

and go around once

23

Iterative Algorithm with Loop Invariants

• Precondition:

What is true

about input

• Post condition:

What is true

about output.

24

Iterative Algorithm with Loop Invariants

Goal: Your goal is to prove that

• no matter what the input is,

as long as it meets the precondition,

• and no matter how many times your algorithm iterates,

as long as eventually the exit condition is met,

• then the post condition is guarantee to be achieved.

Proves that IF the program terminates then it works

<PreCond> & <code> <PostCond>

25

Iterative Algorithm with Loop Invariants

• Loop Invariant:

Picture of what is true at top of loop.

26

Iterative Algorithm with Loop Invariants

• Establishing the Loop Invariant.

• Our computation has just begun.

• All we know is that we have an input instance that

meets the Pre Condition.

• Being lazy, we want to do the minimum work.

• And to prove that it follows that the Loop Invariant is

then made true.

Establishing Loop Invariant

<preCond>

codeA

<loop-invariant>

27

Iterative Algorithm with Loop Invariants

• Maintaining the loop invariant

Exit

79 km

75 km

(while making progress)

• We arrived at the top of the loop knowing only

• the Loop Invariant is true

• and the Exit Condition is not.

• We must take one step (iteration)

(making some kind of progress).

• And then prove that the Loop Invariant will be

true when we arrive back at the top of the loop.

Maintaining Loop Invariant

Exit

<loop-invariantt>

¬<exit Cond>

codeB

<loop-invariantt+1>

28

Iterative Algorithm with Loop Invariants

• Obtain the Post Condition:

Exit

• We know the Loop Invariant is true

because we have maintained it.

We know the Exit Condition is true

because we exited.

• We do a little extra work.

• And then prove that it follows that the Post

Condition is then true.

Obtain the Post Condition

Exit

<loop-invariant>

<exit Cond>

codeC

<postCond>

29

Iterative Algorithm with Loop Invariants

• Precondition:

What is true

about input

• Post condition:

What is true

about output.

Insertion Sort

88 52

14

31

25 98

30

23

62

79

14,23,25,30,31,52,62,79,88,98

30

Iterative Algorithm with Loop Invariants

• Loop Invariant:

Picture of what is true at top of loop.

23,31,52,88

30 14

25 62

98

79

Sorted sub-list

31

Iterative Algorithm with Loop Invariants

• Making progress while

Maintaining the loop invariant

23,31,52,88

Exit

79 km

75 km

30 14

25 62

98

79

6 elements

to school

23,31,52,62,88

30 14

25

98

79

32

Iterative Algorithm with Loop Invariants

• Beginning & Ending

km

0 km

Exit

Exit

88 52

31

14

25 62 30

23

98

79

88 52

31

14

25 62 30

23

98

79

n elements

to school

0 elements

14,23,25,30,31,52,62,79,88,98

to school

14,23,25,30,31,52,62,79,88,98

33

Iterative Algorithm with Loop Invariants

• Running Time

= 1+2+3+…+n = (n2)

n

n

n

n

n

n

n+1 n+1

n+1

n+1 n+1

34

Iterative Algorithm with Loop Invariants

Define Problem

Define Loop

Invariants

Define Measure of

Progress

79 km

to school

Define Step

Define Exit Condition Maintain Loop Inv

Exit

Exit

Make Progress

Initial Conditions

Ending

Exit

79 km

75 km

km

0 km

Exit

Exit

35

Iterative Algorithm with Loop Invariants

Establishing Loop Invariant

<preCond>

codeA

<loop-invariant>

Maintaining Loop Invariant

Exit

<loop-invariantt>

¬<exit Cond>

codeB

<loop-invariantt+1>

Clean up loose ends

<loop-invariant>

<postCond>

<exit Cond>

Exit

codeC

Proves that IF the program terminates then it works

<PreCond> & <code> <PostCond>

36

Iterative Algorithm with Loop Invariants

• Precondition:

What is true

about input

• Post condition:

What is true

about output.

Binary Search

key 25

3

5

6

13 18 21 21 25 36 43 49 51 53 60 72 74 83 88 91 95

37

Iterative Algorithm with Loop Invariants

• Loop Invariant:

Picture of what is true at top of loop.

• If the key is contained in the original list,

then the key is contained in the sub-list.

key 25

3

5

6

13 18 21 21 25 36 43 49 51 53 60 72 74 83 88 91 95

38

Iterative Algorithm with Loop Invariants

• Making progress while

Maintaining the loop invariant

Exit

79 km

75 km

key 25

3

5

6

13 18 21 21 25 36 43 49 51 53 60 72 74 83 88 91 95

If key ≤ mid,

then key is in

left half.

If key > mid,

then key is in

right half.

39

Iterative Algorithm with Loop Invariants

• Running Time

The sub-list is of size n, n/2, n/4, n/8,…,1

Each step (1) time.

Total = (log n)

key 25

3

5

6

13 18 21 21 25 36 43 49 51 53 60 72 74 83 88 91 95

If key ≤ mid,

then key is in

left half.

If key > mid,

then key is in

right half.

40

Iterative Algorithm with Loop Invariants

• Beginning & Ending

km

0 km

Exit

Exit

key 25

3

5

6

13 18 21 21 25 36 43 49 51 53 60 72 74 83 88 91 95

41

Parsing with a Stack

Input: A string of brackets.

Output: Each “(”, “{”, or “[” must be paired

with a matching “)”, “}”, or “[”.

Loop Invariant: Prefix has been read.

• Matched brackets are matched and removed.

• Unmatched brackets are on the stack.

[( ) ( ( ) ) { }])

Stack

[((

Opening Bracket:

• Push on stack.

Closing Bracket:

• If matches that on stack

pop and match.

• else return(unmatched)

42

Data Structure Invariants

Dude! You have been teaching 3101 too long.

This is not an course on Algorithms,

but on Data Structures!

The importance of invariants is the same.

Differences:

1. An algorithm must terminate with an answer,

while systems and data structures may run

forever.

2. An algorithm gets its full input at the beginning,

while data structures gets a continuous stream

of instructions from the user.

•Both have invariants that must be maintained.

43

Data Structure Invariants

Maintaining Loop Invariant

Exit

InvariantsData Struc t

Push Operation

preCondPush

InvariantsData Struc t+1

postCondPush

Assume we fly in from Mars and InvariantsData Struc t is true:

Assume the user correctly calls the Push Operation:

preCondPush The input is info for a new element.

Implementer must ensure:

postCondPush The element is pushed on top of the stack.

InvariantsData Struc t+1

44

Data Structure Invariants

Maintaining Loop Invariant

Exit

InvariantsData Struc t

Push Operation

preCondPush

InvariantsData Struc t+1

postCondPush

top = top + 1;

A[top] = info;

45

Data Structure Invariants

Queue: Add and Remove

from opposite ends.

Algorithm dequeue()

if isEmpty() then

throw EmptyQueueException

else

info A[bottom]

bottom (bottom + 1) mod N

return e

46

Data Structure Invariants

InvariantsData Struc t

preCondPush

Don’t panic.

Just draw the pictures

and move the pointers.

postCondPush

InvariantsData Struc t+1

47

Data Structure Invariants

InvariantsData Struc t

preCondPush

postCondPush

InvariantsData Struc t+1

48

Data Structure Invariants

InvariantsData Struc t

Special Case: Empty

preCondPush

postCondPush

InvariantsData Struc t+1

49

Data Structure Invariants

InvariantsData Struc t

preCondRemove Rear

Is it so easy???

How about removing an

element from the rear?

last must point at the

second last element.

How do we find it?

You have to walk there from first!

time # of elements

instead of constant

postCondRemove Rear

InvariantsData Struc t+1

50

Data Structure Invariants

Stack: Add and Remove

from same end.

Actually, for a Stack

the last pointer is not needed.

Front

Rear

Add Element

Time Constant

Time Constant

Remove Element

Time Constant

Time n

51

Data Structure Invariants

Stack: Add and Remove

from same end.

Queue: Add and Remove

from opposite ends.

Front

Rear

Add Element

Time Constant

Time Constant

Remove Element

Time Constant

Time n

52

Data Structure Invariants

Doubly-linked lists allow more flexible list

nodes/positions

header

trailer

elements

Front

Rear

Add Element

Time Constant

Time Constant

Remove Element

Time Constant

Time n

Time Constant

53

Data Structure Invariants

Exit

54

Asymptotic Analysis of Time Complexity

History of Classifying Problems

Growth Rates

Time Complexity

Linear vs Constant Time

Binary Search Time (logn)

Insertion Sort Time (Quadratic)

Don't Redo Work

Test (linear) vs Search (Exponential)

Multiplying (Quadratic vs Exponential)

Bits of Input

Cryptography

Amortized Time Complexity

Worst Case Input

Classifying Functions (BigOh)

Adding Made Easy

Logs and Exponentials

Understand Quantifiers

Lecture 4

Jeff Edmonds

York University

COSC 2011

55

Some Math

Classifying Functions

f(i) = nQ(n)

Time

Time Complexity

t(n) = Q(n2)

Input Size

Adding Made Easy

∑i=1 f(i).

Logic Quantifiers

g "b Loves(b,g)

"b g Loves(b,g)

Logs and Exps

2a × 2b = 2a+b

2log n = n

Recurrence Relations

T(n) = a T(n/b) + f(n)

56

The Time Complexity of an Algorithm

• Specifies how the running time

• depends on the size of the input.

A function mapping

“size” of input

“time” T(n) executed .

Work for me to

give you the instance.

Work for you to

solve it.

57

History of Classifying Problems

Impossible

Mathematicians’ dream

Brute Force

(Infeasible)

Considered Feasible

Slow sorting

Fast sorting

Look at input

Binary Search

Time does not depend on input.

Halting

Computable

Exp = 2n

Poly = nc

Quadratic = n2

nlogn

Linear = n

log n

Constant

58

Growth Rates

2n

t

i

m

e

n2

n

log n

5

input size

Brute Force

(Infeasible)

Slow sorting

Look at input

Binary Search

Time does not depend on input.

Exp = 2n

Quadratic = n2

Linear = n

log n

Constant

59

Linear vs Constant Time

Search:

• Input: A linked list.

• Output: Find the end.

• Alg: Walk there.

• Time # of records = n.

Insert Front:

• Input: A linked list.

• Output: Add record to front.

• Alg: Play with pointers.

• Time = 4

60

Linear vs Constant Time

a Java Program J, an integer k,

" inputs I, Time(J,I) ≤ k

Is this “Constant time”

= O(1)?

Time

Yes because bounded

by a constant

• Time = 4 = Constant time = O(1)

Time does not “depend” on input.

n

61

Test vs Search

F

T

F

x1

x2

x3

AND

AND

F

OR

F

NOT

OR

F

F

OR

F

Test/Evaluate:

• Input: Circuit & Assignment

• Output: Value at output.

• Alg: Let values percolate down.

• Time: # of gates.

Search/Satisfiablity:

T

• Input: Circuit

• Output: An assignment

giving true:

• Alg: Try all assignments.

(Brute Force)

• Time: 2n

62

Running Time

Grade School

n2

********

********

********

********

********

********

********

********

********

********

****************

vs Kindergarten

a × b = a + a + a + ... + a

b

T(n) = Time multiply

T(n) = Time multiply

= θ(n2) = quadratic time.

= θ(b) = linear time.

Which is faster?

92834765225674897 × 838839775901103948759

63

Size of Input Instance

5

83920

1’’

2’’

•

•

•

•

Size of paper

# of bits

# of digits

Value

- n = 2 in2

- n = 17 bits

- n = 5 digits

- n = 83920

•

•

•

•

Intuitive

Formal

Reasonable

Unreasonable

# of bits = log2(Value)

Value = 2# of bits

64

The Time Complexity of an Algorithm

• Specifies how the running time

• depends on the size of the input.

A function mapping

“size” of input

“time” T(n) executed .

Work for me to

give you the instance.

Work for you to

solve it.

65

Running Time

Grade School

n2

********

********

********

********

********

********

********

********

********

********

****************

vs Kindergarten

a × b = a + a + a + ... + a

b

T(n) = Time multiply

T(n) = Time multiply

= θ(n2) = quadratic time.

= θ(b) = linear time.

Which is faster?

92834765225674897 × 8388397759011039475

b = value = 8388397759011039475

n = # digits = 20

Time ≈ 8388397759011039475 66

Time ≈ 202 ≈ 400

Running Time

Grade School

n2

********

********

********

********

********

********

********

********

********

********

****************

vs Kindergarten

a × b = a + a + a + ... + a

b

T(n) = Time multiply

T(n) = Time multiply

= θ(n2) = quadratic time.

= θ(b) = linear time.

Which is faster?

92834765225674897 × 8388397759011039475

b = value ≈ 10n

n = # digits = 20

n ≈ exponential!!!

2

Time

≈

10

Time ≈ 20 ≈ 400

67

Running Time

Grade School

n2

********

********

********

********

********

********

********

********

********

********

****************

vs Kindergarten

a × b = a + a + a + ... + a

b

T(n) = Time multiply

T(n) = Time multiply

= θ(n2) = quadratic time.

= θ(b) = linear time.

Which is faster?

92834765225674897 × 8388397759011039475 9

Adding a single digit

n = # digits = 20

multiplies the time by 10! 68

Time ≈ 202 ≈ 400

Time Complexity of Algorithm

The time complexity of an algorithm is

the largest time required on any input

of size n.

O(n2): Prove that for every input of size n,

the algorithm takes no more than cn2 time.

Ω(n2): Find one input of size n, for which the

algorithm takes at least this much time.

θ (n2): Do both.

69

Time Complexity of Problem

The time complexity of a problem is

the time complexity of the fastest algorithm

that solves the problem.

O(n2): Provide an algorithm that solves the

problem in no more than this time.

Ω(n2): Prove that no algorithm can solve it faster.

θ (n2): Do both.

70

Classifying Functions

Functions

nθ(1)

2θ(n)

Double Exp

(log n)θ(1)

25n <<

Exp

θ(1)

Exponential

Polynomial

Poly Logarithmic

Constant

5 << (log n)5 << n5 <<

n5

25n

2

2

<<

nθ(1)

2

2θ(n)

2

71

Classifying Functions

Polynomial = nθ(1)

?

Others

θ(n2)

Cubic

Quadratic

Linear

θ(n)

θ(n3)

θ(n4)

θ(n3 log7(n))

log(n) not absorbed

because not

Mult-constant

72

BigOh and Theta?

•5n2 + 8n + 2log n = Q(n2)

•5n2 log n + 8n + 2log n = Q(n2 log n)

Drop low-order terms.

Drop multiplicative constant.

73

Notations

Theta

f(n) = θ(g(n))

f(n) ≈ c g(n)

BigOh

f(n) = O(g(n))

f(n) ≤ c g(n)

Omega

f(n) = Ω(g(n))

f(n) ≥ c g(n)

Little Oh

f(n) = o(g(n)) f(n) << c g(n)

Little Omega

f(n) = ω(g(n)) f(n) >> c g(n)

74

Definition of Theta

f(n) = θ(g(n))

c1, c2, n0, " n n0, c1 g( n) f ( n ) c2 g( n)

75

Definition of Theta

f(n) = θ(g(n))

c1, c2, n0, " n n0, c1 g( n) f ( n ) c2 g( n)

f(n) is sandwiched between c1g(n) and c2g(n)

76

Definition of Theta

f(n) = θ(g(n))

c1, c2, n0, " n n0, c1 g( n) f ( n ) c2 g( n)

f(n) is sandwiched between c1g(n) and c2g(n)

for some sufficiently small c1 (= 0.0001)

for some sufficiently large c (= 1000)

77

Definition of Theta

f(n) = θ(g(n))

c1, c2, n0, " n n0, c1 g( n) f ( n ) c2 g( n)

For all sufficiently large n

78

Definition of Theta

f(n) = θ(g(n))

c1, c2, n0, " n n0, c1 g( n) f ( n ) c2 g( n)

For all sufficiently large n

For some definition of “sufficiently large”

79

Adding Made Easy

Arithmetic Sum

Gauss

∑i=1..n i = 1 + 2 + 3 + . . . + n

= Q(# of terms · last term)

n

n

n

n

n

n

80

Adding Made Easy

Arithmetic Sum

Gauss

∑i=1..n i = 13 + 23 + 33 + . . . + n3

= Q(# of terms · last term)

True when ever terms increase slowly

81

Adding Made Easy

Geometric Increasing

∑i=0..n

=

ri = r0 + r1 + r2 +. . . + rn

Q(biggest term)

82

Adding Made Easy

• Geometric Like: If f(n) 2Ω(n),

then ∑i=1..n f(i) = θ(f(n)).

• Arithmetic Like: If f(n) = nθ(1)-1, then ∑i=1..n f(i) = θ(n · f(n)).

• Harmonic:

If f(n) = 1/n ,

then ∑i=1..n f(i) = logen + θ(1).

• Bounded Tail:

If f(n) n-1-Ω(1), then ∑i=1..n f(i) = θ(1).

(For +, -, , , exp, log

functions f(n))

This may seem confusing, but it is really not.

It should help you compute most sums easily.

83

Logs and Exp

• properties of logarithms:

logb(xy) = logbx + logby

logb (x/y) = logbx - logby

logbxa = alogbx

logba = logxa/logxb

• properties of exponentials:

a(b+c) = aba c

abc = (ab)c

ab /ac = a(b-c)

b = a logab

bc = a c*logab

84

Understand Quantifiers!!!

Say, I have a game for you.

We will each choose an integer.

You win if yours is bigger.

I am so nice, I will even let you go first.

Easy.

I choose a trillion trillion.

Well done. That is big!

But I choose a trillion trillion and one

so I win.

85

Understand Quantifiers!!!

You laugh but this is

a very important game

in theoretical computer science.

You choose the size of your Java program.

Then I choose the size of the input.

Likely |I| >> |J|

So you better be sure your Java program

can handle such long inputs.

86

Understand Quantifiers!!!

The first order logic we can state

that I win the game:

"x, y, y>x

The proof:

Let x be an arbitrary integer.

Let y = x+1

Note y = x+1 >x

Good game.

Let me try again. I will win this time!

87

Understand Quantifiers!!!

Sam

One politician

Bob

John

Layton

Fred

politician, "voters, Loves(v, p)

"voters, politician, Loves(v, p)

Sam

Could be a

different politician.

Bob

Harper

John

Layton

Fred

88

Understand Quantifiers!!!

“There is a politician that is loved by everyone.”

This statement is “about” a politician.

The existence of such a politician.

We claim that this politician is “loved by everyone”.

Sam

Bob

John

Layton

Fred

politician,["voters, Loves(v, p) ]

"voters,[ politician, Loves(v, p) ]

Sam

“Every voter loves some politician.”

This statement is “about” voters.

Something is true about every voter.

We claim that he “loves some politician.”

Bob

Harper

John

Layton

Fred

89

Understand Quantifiers!!!

A Computational Problem P states

•for each possible input I

•what the required output P(I) is.

Eg: Sorting

An Algorithm/Program/Machine M is

•a set of instructions

(described by a finite string “M”)

•on a given input I

•follow instructions and

•produces output M(I)

•or runs for ever.

Eg: Insertion Sort

90

Understand Quantifiers!!!

Problem P is

computable if

M, "I, M(I)=P(I)

There exists is a single algorithm/machine

that solves P for every input

Play the following game to prove it!

91

Understand Quantifiers!!!

Problem P is

computable if

M, "I, M(I)=P(I)

Two players:

a prover and a disprover.

92

Understand Quantifiers!!!

Problem P is

computable if

M, "I, M(I)=P(I)

They read the statement left to right.

I produce the object when it is a .

I produce the object when it is a ".

I can always win

if and only if

statement is true.

The order the players go

REALY matters.

93

Understand Quantifiers!!!

Problem P is

computable if

M, "I, M(I)=P(I)

I have a machine M that I

claim works.

Oh yeah, I have an input I for

which it does not.

I win if M on input I gives

the correct output

What we have been doing all along.

94

Understand Quantifiers!!!

Problem P is

computable if

M, "I, M(I)=P(I)

Problem P is

uncomputable if

"M, I, M(I) P(I)

I have a machine M that I

claim works.

I find one counter example

input I for which

his machine M fails us.

I win if M on input I gives

the wrong output

Generally very hard to do.

95

Understand Quantifiers!!!

Problem P is

Problem P is

uncomputable if true

true computable if

M, "I, M(I)=Sorting(I) "M, I, M(I) Halting(I)

"I, M, M(I) =Halting(I)

The order the players go

REALY matters.

If you don’t know if it is

true or not, trust the game.

96

Understand Quantifiers!!!

Problem P is

Problem P is

uncomputable if true

true computable if

M, "I, M(I)=Sorting(I) "M, I, M(I) Halting(I)

"I, M, M(I) =Halting(I) true

I give you an input I.

Given I either

A tricky

one.

Halting(I) = yes or

Halting(I) = no.

"I, Myes(I) says yes

"I, Mno(I) says no

I don’t know which, but

one of these does the trick.

97

Understand Quantifiers!!!

• Problem P is computable in polynomial time.

M, c, n0,"I, M(I)=P(I) & (|I| < n0 or Time(M,I) ≤ |I|c)

•Problem P is not computable in polynomial time.

" M, c, n0, I, M(I)≠P(I) or (|I| ≥ n0 & Time(M,I) > |I|c)

• Problem P is computable in exponential time.

M, c, n0,"I, M(I)=P(I) & (|I| < n0 or Time(M,I) ≤ 2c|I|)

•The computational class “Exponential Time"

is strictly bigger than the computational

class “Polynomial Time”.

P, [" M, c, n0, I, M(I)≠P(I) or (|I| ≥ n0 & Time(M,I) > |I|c)]

& [ M, c, n0,"I, M(I)=P(I) & (|I| < n0 or Time(M,I) ≤ 2c|I|)]

98

Recursion

Jeff Edmonds

York University

Lecture 5

One Step at a Time

Stack of Stack Frames

Friends and Strong Induction

Recurrence Relations

Towers of Hanoi

Check List

Merge & Quick Sort

Simple Recursion on Trees

Binary Search Tree

Things not to do

Heap Sort & Priority Queues

Trees Representing Equations

Pretty Print

Parsing

Iterate over all s-t Paths

Recursive Images

Ackermann's Function

COSC 2011

99

On Step At a Time

Precondition

Postcondition

It can be difficult to understand

where computation go.

I implored you to not worry

about the entire computation.

Strange(x,y):

x1 = x/4; y1 = 3y; f1 = Strange( x1, y1 );

x2 = x - 3x1; y2 = y; f2 = Strange( x2, y2 );

return( f1+f2 );

next

x/4 3y

(x-3x1) y

100

Friends & Strong Induction

Strange(x,y):

If x < 4 then return( xy );

x1 = x/4; y1 = 3y;? f1 = Strange( x1, y1 ); •Know Precond: ints x,y

Postcond: ???

x2 = x - 3x1; y2 = y; f2 = Strange( x2, y2 );

return( f1+f2 );

x = 30; y = 5

x1 = 7; y1 = 15

f1 = 105

x2 = 9; y2 = 5

f2 = 45

return 150

X=7

Y = 15

XY = 105

X=9

Y=5

XY = 45

•Consider your input instance

•If it is small enough solve it on your own.

•Allocate work

–Construct one or more sub-instances

• It must be smaller

• and meet the precondition

–Assume by magic your friends

give you the answer for these.

•Use this help to solve your own instance.

•Do not worry about anything else.

– Micro-manage friends by tracing out what

they and their friend’s friends do.

101

– Who your boss is.

Recurrence Relations

Time of Recursive Program

T(1) = 1

T(n) = a T(n/b) + nc

procedure Eg(In)

n = |In|

if(n1) then

• n is the “size” of our input.

• a is the number of “friends”

put “Hi”

• n/b is the “size” of friend’s input.

else

c is the work I personally do.

•

n

c

loop i=1..n

put “Hi”

loop i=1..a

In/b = In cut in b pieces

Eg(In/b)

102

T(n)

n/2

n/2

n/4

n/4

n/4

n

=

n/4

n/4

n/4

n/4

n/2

n/4

n/4

n/4

n/4

n/2

n/4

n/4

n/4

n/4

n/4

11111111111111111111111111111111 . . . . ……………………... . 111111111111111111111111111111111

103

Evaluating: T(n) = aT(n/b)+f(n)

Level

Instance

size

Work

in stack

frame

# stack

frames

Work in

Level

1 · f(n)

a · f(n/b)

0

1

n

f(n)

n/b

f(n/b)

1

a

2

n/b2

f(n/b2)

a2

a2 · f(n/b2)

i

n/bi

f(n/bi)

ai

ai · f(n/bi)

h = log n/log b

n/bh

T(1)

log a/

log b

n

log a/

log b ·

n

T(1)

Total Work T(n) = ∑i=0..h ai×f(n/bi)

104

Evaluating: T(n) = aT(n/b)+f(n)

105

Evaluating: T(n) = aT(n/b) + nc

= 4T(n/2) + n

Time for top level: n = n1 c = 1 log 4

log a/

/log 2

log b

) = θ(n2)

) = θ(n

Time for base cases: θ(n

Dominated?: c = 1 < 2 = log a/log b

Hence, T(n) == ?θ(base cases) = θ(n

log a/

log b

) = θ(n2).

If we reduce the number of friends from 4 to 3

is the savings just 25%?

106

Evaluating: T(n) = aT(n/b) + nc

= 3T(n/2) + n

Time for top level: n = n1 c = 1 log 3

log a/

/log 2

log b

) = θ(n1.58)

) = θ(n

Time for base cases: θ(n

Dominated?: c = 1 < 1.58 = log a/log b

Hence, T(n) == ?θ(base cases) = θ(n

log a/

log b

) = θ(n1.58).

Not just a 25% savings!

θ(n2) vs θ(n1.58..)

107

Evaluating: T(n) = aT(n/b) + nc

= 3T(n/2) + n2

Time for top level: n2, c=2

log 3/

log a/

log 2

log b

θ(n

) = θ(n1.58)

)=

Time for base cases: θ(n

Dominated?: c = 2 > 1.58 = log a/log b

Hence, T(n) == ?θ(top level) = θ(n2).

108

Evaluating: T(n) = aT(n/b) + nc

= 3T(n/2) + n1.58

Time for top level: n1.58, c=1.58

log 3/

log a/

log 2

log b

θ(n

) = θ(n1.58)

)=

Time for base cases: θ(n

Dominated?: c = 1.58 = log a/log b

Hence, all θ(logn) layers require a total of θ(n1.58) work.

The sum of these layers is no longer geometric.

Hence T(n) = θ(n1.58 logn)

109

Evaluating: T(n) = aT(n/b)+f(n)

Level

Instance

size

Work

in stack

frame

# stack

frames

Work in

Level

1 · f(n)

a · f(n/b)

0

1

n

f(n)

n/b

f(n/b)

1

a

2

n/b2

f(n/b2)

a2

a2 · f(n/b2)

i

n/bi

f(n/bi)

ai

ai · f(n/bi)

h = log n/log b

n/bh

T(1)

log a/

log b

n

log a/

log b ·

n

T(1)

All levels the same: Top Level to Base Cases

110

Evaluating: T(n) = aT(n/b)+f(n)

111

Check Lists for Recursive Programs

This is the format of “all” recursive programs.

Don’t deviate from this.

Or else!

112

Merge Sort

88 52

14

31

25 98

30

23

62

79

Get one friend to

sort the first half.

25,31,52,88,98

Split Set into Two

(no real work)

Get one friend to

sort the second half.

14,23,30,62,79

113

Merge Sort

Merge two sorted lists into one

25,31,52,88,98

14,23,25,30,31,52,62,79,88,98

14,23,30,62,79

114

Java Implementation

115

Andranik Mirzaian

115

Java Implementation

116

Andranik Mirzaian

116

Quick Sort

Partition set into two using

randomly chosen pivot

88 52

14

31

25 98

30

23

62

79

14

31 30 23

25

88

≤ 52 ≤

98

62

79

117

Quick Sort

14

31 30 23

25

Get one friend to

sort the first half.

14,23,25,30,31

88

≤ 52 ≤

98

62

79

Get one friend to

sort the second half.

62,79,98,88

118

Quick Sort

14,23,25,30,31

52

62,79,98,88

Glue pieces together.

(No real work)

14,23,25,30,31,52,62,79,88,98

Faster Because all done

“in place”

ie in the input array

119

Java Implementation

120

Andranik Mirzaian

120

Recursion on Trees

(define)

A binary tree is:

- the empty tree

- a node with a right and a left sub-tree.

3

8

1

3

6

2

2

5

4

7

9

1

121

Recursion on Trees

(friends)

number of nodes = ?

Get help from friends

5

3

6

8

1

3

6

2

2

5

4

7

9

1

122

Recursion on Trees

(friends)

number of nodes

= number on left + number on right + 1

= 6 + 5 + 1 = 12

5

3

6

8

1

3

6

2

2

5

4

7

9

1

123

Recursion on Trees

(base case)

number of nodes

Base Case ?

3

8

1

3

6

2

2

0

5

4

7

Base case!

9

1

124

Recursion on Trees(communication

Being lazy, I will only consider

my root node and my communication with my friends.

I will never look at my children subtrees

but will trust them to my friends.

3

6

8

1

3

6

2

2

5

4

7

9

1

125

Recursion on Trees

(code)

126

Recursion on Trees

(cases)

Designing Program/Test Cases

Try same code

n 1 + n2 + 1

n1+0+1

3

3

Same code

works!

n1

generic

n2

generic

Try same code

0+0+1=1

3

Same code

works!

0

0

0

n1

generic

0

Base Case

127

Recursion on Trees

Time: T(n) =

=

(time)

∑stack frame Work done by stack frame

Q(n)

×

Q(1) =

Q(n)

One stack frame

•for each node in the tree

•and for empty trees hang off

And constant work per stack frame.

128

Recursion on

(mult-children)

Trees (friends)

Many Children

number of nodes = 4 + 2 + 4 + 2 + 1 = 13

One friend for each sub-tree.

2

3

4

1

3

4

2

8

5

2

2

7

6

1

4

4

9

129

Recursion on

(mult-children)

Trees (cases)

Designing Program/Test Cases

n 1 + n2 + n3 + 1

n1 + 1 Try same code

3

3

Same code works!

n1

n2

n3

generic

generic

generic

n1

generic

But is this needed

Try same code

+1=1

3

Same code works!

(if not the input)

0

130

Recursion on

(mult-children)

Trees (code)

131

Recursion on

Time: T(n) =

=

(mult-children)

Trees (time)

∑stack frame Work done by stack frame

∑stack frame Q(# subroutine calls)

= ∑node Q(# edge in node)

=Q(edgeTree) = Q(nodeTree) =Q(n)

132

Recursion on Trees

class LinkedBinaryTree {

class Node

{

E element;

Node parent;

Node left;

Node right;

}

Node root = null;

Tree

We pass the recursive

program a “binary tree”.

But what type is it really?

This confused Jeff at first.

133

Recursion on Trees

class LinkedBinaryTree {

class Node

{

E element;

Node parent;

Node left;

Node right;

}

Node root = null;

Tree

(LinkedBinaryTree tree)

One would think tree is of

type LinkedBinaryTree.

Then getting its left subtree,

would be confusing.

left_Tree

134

Recursion on Trees

class LinkedBinaryTree {

class Node

{

E element;

Node parent;

Node left;

Node right;

}

Node root = null;

Tree

(LinkedBinaryTree tree)

One would think tree is of

type LinkedBinaryTree.

Then getting its left subtree,

would be confusing.

left_Tree

135

Recursion on Trees

class LinkedBinaryTree {

class Node

{

E element;

Node parent;

Node left;

Node right;

}

Node root = null;

Tree

(Node tree)

It is easier to have tree be of

type Node.

But it is thought of as the

subtree rooted at the node

pointed at.

The left child is then tree.left or

or

tree

Tree.leftSub(tree)

tree.Getleft()

or

leftSub(tree)

136

Recursion on Trees

class LinkedBinaryTree {

class Node

{

E element;

Node parent;

Node left;

Node right;

}

Node root = null;

Tree

tree

private int NumberNodesRec (Node tree)

public int NumberNodes() {

return NumberNodesRec( root );

}

NumberNodesRec

NumberNodesRec

But the outside user does not

know about pointers to nodes.

137

Balanced Trees

Dictionary/Map ADT

Binary Search Trees

Insertions and Deletions

AVL Trees

Rebalancing AVL Trees

Union-Find Partition

Heaps & Priority Queues

Communication & Hoffman Codes

(Splay Trees)

Lecture 6

Jeff Edmonds

York University

COSC 2011

138

Input

key, value

k1,v1

k2,v2

k3,v3

k4,v4

Dictionary/Map ADT

Problem: Store value/data

associated with keys.

Examples:

• key = word, value = definition

• key = social insurance number

value = person’s data

139

Input

key, value

k1,v1

k2,v2

k3,v3

k4,v4

Dictionary/Map ADT

Array

Problem: Store value/data

associated with keys.

0

1

2

3

4

k5,v5

5

6

7

Unordered Array

…

Implementations:

Insert

Search

O(1)

O(n)

140

Input

key, value

2,v3

4,v4

7,v1

9,v2

Dictionary/Map ADT

Array

0

1

2

Problem: Store value/data

associated with keys.

6,v5

3

4

5

6

7

Unordered Array

Ordered Array

…

Implementations:

Insert

O(1)

O(n)

Search

O(n)

O(logn)

141

6,v5

entries

Implementations:

Problem: Store value/data

associated with keys.

trailer

nodes/positions

2,v3

4,v4

7,v1

9,v2

Dictionary/Map ADT

header

Input

key, value

Insert

Search

Unordered Array

O(1)

O(n)

Ordered Array

O(n)

O(logn)

Ordered Linked List

O(n)

O(n)

Inserting is O(1) if you have the spot.

but O(n) to find the spot.

142

Input

key, value

2,v3

4,v4

7,v1

9,v2

Dictionary/Map ADT

Problem: Store value/data

associated with keys.

38

25

17

51

31

42

63

4 21 28 35 40 49 55 71

Implementations:

Unordered Array

Ordered Array

Binary Search Tree

Insert

O(1)

O(n)

O(logn)

Search

O(n)

O(logn)

O(logn)

143

Input

key, value

Dictionary/Map ADT

2,v3

4,v4

7,v1

9,v2

Implementations:

Problem: Store value/data

associated with keys.

Heaps are good

for Priority Queues.

Insert

Unordered Array

O(1)

Ordered Array

O(n)

Binary Search Tree

O(logn)

Heaps

Faster: O(logn)

Search

O(n)

O(logn)

O(logn)

O(n)

Max O(1)

144

Input

key, value

2,v3

4,v4

7,v1

9,v2

Dictionary/Map ADT

Problem: Store value/data

associated with keys.

Hash Tables are very fast,

but keys have no order.

Implementations:

Insert

Unordered Array

O(1)

Ordered Array

O(n)

Binary Search Tree

O(logn)

Heaps

Faster: O(logn)

Hash Tables

Avg: O(1)

Search

O(n)

O(logn)

O(logn)

Max O(1)

O(1)

Next

O(1)

O(1)

O(n)

(Avg)

145

Balanced Trees

Unsorted

List

Sorted

List

Balanced

Trees

Splay

Trees

(Static)

•Search

O(n)

Heap

Hash

Tables

(Priority

Queue) (Dictionary)

Worst case

O(n)

O(log(n))

O(log(n)) Practice O(log(n)) O(1)

•Insert

•Delete

O(1)

O(n)

O(n)

•Find Max

O(n)

O(1)

O(log(n))

O(1)

O(n)

•Find Next

in Order

O(n)

O(1)

O(log(n))

O(n)

O(n)

better

O(log(n))

Amortized

O(1)

better

146

From binary search to

Binary Search Trees

147

Binary Search Tree

All nodes in left subtree ≤ Any node ≤ All nodes in right subtree

38

≤

25

≤

17

4

51

31

21

28

42

35

40

63

49

55

71

Iterative Algorithm

Move down the tree.

Loop Invariant:

If the key is contained in the original tree,

then the key is contained in the sub-tree

rooted at the current node.

Algorithm TreeSearch(k, v)

v = T.root()

loop

if T.isExternal (v)

return “not there”

if k < key(v)

v = T.left(v)

else if k = key(v)

return v

else { k > key(v) }

v = T.right(v)

end loop

38

key 17

25

51

17

4

31

21

28

42

35

40

63

49

55

71

Recursive Algorithm

If the key is not at the root, ask a friend to look for it in the

appropriate subtree.

Algorithm TreeSearch(k, v)

if T.isExternal (v)

key 17

return “not there”

if k < key(v)

return TreeSearch(k, T.left(v))

else if k = key(v)

17

return v

else { k > key(v) }

return TreeSearch(k, T.right(v))

4

38

25

51

31

21

28

42

35

40

63

49

55

71

Insertions/Deletions

To insert(key, data):

Insert 10

1

We search for key.

v

3

2

Not being there,

we end up in an empty tree.

11

9

w

8

10

4

6

5

12

7

Insert the key there.

Insertions/Deletions

To Delete(keydel, data):

Delete 4

1

If it does not have two children,

v

3

point its one child at its parent.

2

11

9

w

keydel

8

10

4

6

5

>

7

12

Insertions/Deletions

To Delete(keydel, data):

Delete 3

else find the next keynext in order

keydel

1

3

right left left left …. to empty tree

2

11

9

w

keynext

8

10

4

6

5

>

7

12

Replace keydel to delete with keynext

point keynext’s one child at its parent.

Performance

find, insert and remove take O(height) time

In a balanced tree, the height is O(log n)

In the worst case, it is O(n)

Thus it worthwhile to balance the tree (next topic)!

AVL Trees

AVL trees are “mostly” balanced.

Tree is said to be an AVL Tree if and only if

heights of siblings differ by at most 1.

balanceFactor(v)

= height(rightChild(v)) - height(leftChild(v))

{ -1,0,1 }.

Claim: The height of an AVL tree storing n keys is ≤ O(log n).

balanceFactor = 2-3 = -1 44

subtree

height

2

subtree

height

17

32

78

88

50

48

3

62

Rebalancing after an Insertion

Cases

y £x£z

x£y £z

+2

height

=h

h-1

h-2

x

+1

y

h-3

T1

one is h-3 & one is h-4

h-1

T3

height

=h

z

-1

y

h-2

h-3

T2

T0

h-3

+2

height

=h

z

z£y £x

h-3

T3

T0

z£x£y

-2

height

=h

z

-1

y

h-3

h-1

T0

x

x

h-3

-2

z

+1

y

h-3

x

h-2

h-2

T1

T0

T1

T2

one is h-3 & one is h-4

h-1

h-3

T3

T2

T3

one is h-3 & one is h-4

T1

T2

one is h-3 & one is h-4

Rebalancing after an Insertion

Inserting new leaf 6 in to AVL tree

+2

7

-1

4

3

Problem!

8

-1

5

0

6

balanceFactor

Increases heights

along path from leaf to root.

Rebalancing after an Insertion

Inserting new leaf 6 in to AVL tree

+2

7

-1

4

3

Problem!

8

-1

5

Denote

6

z = the lowest imbalanced node

y = the child of z with highest subtree

x = the child of y with highest subtree

Rebalancing after an Insertion

Inserting new leaf 6 in to AVL tree

z

y≤x≤z

y

T4

x

T1

z

T2

T3

x

y

Rebalancing after an Insertion

Inserting new leaf 6 in to AVL tree

z

x

y≤x≤z

y

T4

x

T1

z

T2

T3

x

y

y

z

Rebalancing after an Insertion

Inserting

new leaf 6 in to AVL tree

Rest of Tree

Rest of Tree

z

x

z

y

y

T4

x

T1

T1

T2

T3

T2

T3

T4

• This subtree is balanced.

• And shorter by one.

• Hence the whole is an AVL Tree

Rebalancing after an Insertion

Example: Insert 12

5

7

3

2

1

1

3

13

2

3

2

9

1

4

0

0

1

11

0

0

0

2

31

1

15

1

8

0

0

4

19

0

1

23

0

0

0

0

0

0

162

Rebalancing after an Insertion

Example: Insert 12

5

7

3

2

1

1

3

13

2

3

2

9

1

4

0

0

1

11

0

0

0

2

31

1

15

1

8

0

0

4

19

0

1

23

0

0

w

0

0

0

0

Step 1.1: top-down search

163

Rebalancing after an Insertion

Example: Insert 12

5

7

3

2

1

1

3

13

2

3

2

9

1

4

0

0

0

0

0

1 w

12

0

1

23

0

1

11

0

0

2

31

1

15

1

8

0

0

4

19

0

0

0

0

Step 1.2: expand 𝒘 and insert new item in it

164

Rebalancing after an Insertion

Example: Insert 12

5

7

3

2

1

1

4

13

2

3

1

4

0

0

0

0

4

19

0

3

1

imbalance

9

15

2

1

11

8

0

0

1 w

12

0

0 0

0

2

31

1

23

0

0

0

0

Step 2.1: move up along ancestral path of 𝒘; update

ancestor heights; find unbalanced node.

165

Rebalancing after an Insertion

Example: Insert 12

5

7

3

2

1

1

4

z

13

2

3

3

y 9

1

4

0

0

0

1

0

0

2

31

1

15

2

x 11

1

8

0

0

4

19

0

0

1

23

0

0

0

0

0

12

0

Step 2.2: trinode discovered (needs double rotation)

166

Rebalancing after an Insertion

Example: Insert 12

5

7

3

2

1

1

3

x

11

2

3

2

y 9

1

4

0

0

1

8

0

0

1

4

19

2

31

2

13 z

1

23

0

1

15

12

0

0

0

0

0

0

00

0

Step 2.3: trinode restructured; balance restored. DONE!

167

0

Rebalancing after a deletion

Very similar to before.

Unfortunately, trinode restructuring may

reduce the height of the subtree, causing

another imbalance further up the tree.

Thus this search and repair process must

in the worst case be repeated until we

reach the root.

See text for implementation.

Midterm Review

End

169

Union-Find Data structure.

Average Time = Akerman’s-1(E)

4

170

Heaps, Heap Sort, &

Priority Queues

J. W. J. Williams, 1964

171

Abstract Data Types

Restricted Data Structure:

Some times we limit what operation can be done

• for efficiency

• understanding

Stack: A list, but elements can only be

pushed onto and popped from the top.

Queue: A list, but elements can only be

added at the end and removed from

the front.

• Important in handling jobs.

Priority Queue: The “highest priority” element

is handled next.

172

Priority Queues

Sorted

List

Unsorted

List

Heap

•Items arrive

with a priority.

O(n)

O(1)

O(logn)

•Item removed is

that with highest

priority.

O(1)

O(n)

O(logn)

173

Heap Definition

•Completely Balanced Binary Tree

•The value of each node

each of the node's children.

•Left or right child could be larger.

Where can 9 go?

Where can 1 go?

Where can 8 go?

Maximum is at root.

174

Heap Data Structure

Completely Balanced Binary Tree

Implemented by an Array

175

Heap

Pop/Push/Changes

With Pop, a Priority Queue

returns the highest priority data item.

This is at the root.

21

21

176

Heap

Pop/Push/Changes

But this is now the wrong shape!

To keep the shape of the tree,

which space should be deleted?

177

Heap

Pop/Push/Changes

What do we do with the element that was there?

Move it to the root.

3

3

178

Heap

Pop/Push/Changes

But now it is not a heap!

The left and right subtrees still are heaps.

3

3

179

Heap

Pop/Push/Changes

But now it is not a heap!

The 3 “bubbles” down until it finds its spot.

3

The max of these

three moves up.

3

Time = O(log n)

180

Heap

Pop/Push/Changes

When inserting a new item,

to keep the shape of the tree,

which new space should be filled?

21

21

181

Heap

Pop/Push/Changes

But now it is not a heap!

The 21 “bubbles” up until it finds its spot.

30

The max of these

two moves up.

30

21

21

Time = O(log n)

182

Adaptable Heap

Pop/Push/Changes

But now it is not a heap!

The 39 “bubbles” down or up until it finds its spot.

27

2139

39

21 c

Suppose some outside user

knows about

some data item c

and remembers where

it is in the heap.

And changes its priority

from 21 to 39

183

Adaptable Heap

Pop/Push/Changes

But now it is not a heap!

The 39 “bubbles” down or up until it finds its spot.

39

27

27 f

39

21 c

Suppose some outside user

also knows about

data item f and its location

in the heap just changed.

The Heap must be able

to find this outside user

and tell him it moved.

Time = O(log n)

184

Heap Implementation

• A location-aware heap entry

is an object storing

2 d

4 a

6 b

key

value

position of the entry in the

underlying heap

• In turn, each heap position

stores an entry

• Back pointers are updated

during entry swaps

Last Update: Oct 23,

2014

8 g

Andy

5 e

9 c

185

185

Selection Sort

Selection

Largest i values are sorted on side.

Remaining values are off to side.

3

Exit

79 km

Exit

75 km

5

1

4

<

6,7,8,9

2

Max is easier to find if a heap.

186

Heap Sort

Largest i values are sorted on side.

Remaining values are in a heap.

Exit

79 km

Exit

75 km

187

Communication & Entropy

Use a Huffman Code

described by a binary tree.

001000101

Claude Shannon

(1948)

I first get

, the I start over to get

0

0

0

0

1

1

1

0

0

1

1

0

1

0

1

1

0

1

0

1

188

Communication & Entropy

Objects that are more likely

will have shorter codes.

I get it.

I am likely to answer .

so you give it a 1 bit code.

Claude Shannon

(1948)

0

0

0

0

1

1

1

0

0

1

1

0

1

0

1

1

0

1

0

1

189

Hash Tables

Dictionary/Map ADT

Direct Addressing

Hash Tables

Random Algorithms and Hash

Functions

Key to Integer

Separate Chaining

Probe Sequence

Put, Get, Del, Iterators

Running Time

Simpler Schemes

Lecture 7

Jeff Edmonds

York University

COSC 2011

190

Random Balls in Bins

Throw m/2 balls (keys)

randomly

into m bins (array cells)

The balls get spread out reasonably well.

- Exp( # a balls a ball shares a bin with ) = O(1)

- O(1) bins contain O(logn) balls.

191

Input

key, value

k1,v1

k2,v2

k3,v3

k4,v4

Dictionary/Map ADT

Problem: Store value/data

associated with keys.

Examples:

• key = word, value = definition

• key = social insurance number

value = person’s data

192

Dictionary/Map ADT

• Map ADT methods:

– put(k, v): insert entry (k, v) into the map M.

• If there is a previous value associated with k return it.

• (Multi-Map allows multiple values with same key)

– get(k): returns value v associated with key k. (else null)

– remove(k): remove key k and its associated value.

– size(), isEmpty()

– Iterator:

• keys(): over the keys k in M

• values(): over the values v in M

• entries(): over the entries k,v in M

193

Input

Dictionary/Map ADT

key, value Array

k1,v1

k2,v2

k3,v3

k4,v4

Problem: Store value/data

associated with keys.

0

1

2

3

4

k5,v5

5

6

7

…

Implementations:

Unordered Array

Insert

Search

O(1)

O(n)

194

Input

Dictionary/Map ADT

key, value Array

2,v3

4,v4

7,v1

9,v2

0

1

2

Problem: Store value/data

associated with keys.

6,v5

3

4

5

6

7

…

Implementations:

Unordered Array

Ordered Array

Insert

O(1)

O(n)

Search

O(n)

O(logn)

195

6,v5

entries

Implementations:

Problem: Store value/data

associated with keys.

trailer

nodes/positions

2,v3

4,v4

7,v1

9,v2

Dictionary/Map ADT

header

Input

key, value

Insert

Search

Unordered Array

O(1)

O(n)

Ordered Array

O(n)

O(logn)

Ordered Linked List

O(n)

O(n)

Inserting is O(1) if you have the spot.

but O(n) to find the spot.

196

Input

key, value

2,v3

4,v4

7,v1

9,v2

Dictionary/Map ADT

Problem: Store value/data

associated with keys.

38

25

17

51

31

42

63

4 21 28 35 40 49 55 71

Implementations:

Unordered Array

Ordered Array

Ordered Linked List

Binary Search Tree

Insert

O(1)

O(n)

O(n)

O(logn)

Search

O(n)

O(logn)

O(n)

O(logn)

197

Input

key, value

5,v1

9,v2

2,v3

7,v4

Dictionary/Map ADT

Problem: Store value/data

associated with keys.

Hash Tables are very fast,

but keys have no order.

Implementations:

Insert

Unordered Array

O(1)

Ordered Array

O(n)

Ordered Linked List

O(n)

Binary Search Tree

O(logn)

Hash Tables

Avg: O(1)

Search

O(n)

O(logn)

O(n)

O(logn)

O(1)

Next

O(n)

O(1)

O(1)

O(1)

O(n)

(Avg)

198

Input

key, value

Hash Tables

Consider an array

# items stored.

Universe

of Keys

Universe of keys

is likely huge.

(eg social insurance

numbers)

0

1

2

3

4

5

6

7

8

9

0

1

2

3

4

5

6

7

8

The Mapping

from key

to Array Cell

is many to one

Called

Hash Function

Hash(key) = i

9

…

199

Input

key, value

5,v1

9,v2

2,v3

7,v4

5,?

4,v5

Collisions

are a problem.

Hash Tables

Universe

of Keys

0

1

2

3

4

5

6

7

8

9

…

Implementations:

Hash Function

Consider an array

# items stored.

0

1

2

3

4

5

6

7

8

The Mapping

from key

to Array Cell

is many to one

Called

Hash Function

Hash(key) = i

9

Insert

O(1)

Search

O(1)

200

Understand Quantifiers!!!

Problem P is computable if

A, "I, A(I)=P(I) & Time(A,I) ≤ T

I have a algorithm A that I claim works.

Oh yeah, I have a worst case

input I for which it does not.

Actually my algorithm always gives the right answer.

Ok, but I found in set of keys

I = key1,…, keyn

for which lots of collisions happen

and hence the time is bad.

201

Understand Quantifiers!!!

Problem P is computable by a random algorithm if

A, "I, " R, AR(I)=P(I)

Remember

ExpectedR Time(AR,I) ≤ T

Quick Sort

I have a random algorithm A that I claim works.

I know the algorithm A, but not its random coin flips R.

I do my best to give you a worst case input I.

The random coin flips R are There are worst case coin flips

independent of the input.

but not worst case inputs.

Actually my algorithm always gives the right answer.

And for EVERY input I,

the expected running time (over choice of R)

is great.

202

Input

key, value

287 005,v1

923 005,v2

394 005,v3

482 005,v4

287 005,?

193 005,v5

Random Hash Functions

Fix the worst case input I

Universe

of Keys

0

1

287 005

2

3

482 005

4

Choose a random

mapping

Hash(key) = i

We don’t expect there

to be a lot of collisions.

5

193 005

6

7

394 005

923 005

8

9

(Actually, the random

Hash function likely is

chosen and fixed before the

input comes, but the key is

that the worst case input

does not “know” the hash

function.)

203

Input

key, value

287 005,v1

923 005,v2

394 005,v3

482 005,v4

287 005,?

193 005,v5

Random Hash Functions

Throw m/2 balls (keys)

randomly

into m bins (array cells)

Universe

of Keys

0

1

287 005

2

3

482 005

4

5

193 005

6

7

8

394 005

9 spread out reasonably well.

923 The

005 balls get

- Exp( # a balls a ball shares a bin with ) = O(1)

- O(1) bins contain O(logn) balls.

204

Random Hash Functions

Choose a random mapping

Hash(key) = i

Universe

of Keys

0

1

2

3

4

5

6

7

8

9

We want Hash to be

computed in O(1) time.

0

1

Theory people use

2

3

Hash(key) = (akey mod p) mod N

4

N = size of array

p is a prime > |U|

a is randomly chosen [1..p-1]

n is the number of data items.

5

6

7

8

9

…

a adds just

enough

randomness.

The integers mod p The mod N ensures

form a finite field the result indexes a

similar to the reals. cell in the array.205

Random Hash Functions

Choose a random mapping

Hash(key) = i

Universe

of Keys

0

1

2

3

4

5

6

7

8

9

0

1

2

We want Hash to be

computed in O(1) time.

Theory people use

3

Hash(key) = (akey mod p) mod N

4

N = size of array

p is a prime > |U|

a is randomly chosen [1..p-1]

n is the number of data items.

5

6

7

8

9

Pairwise Independence

"k1 & k2 Pra( Hasha(k1)=Hasha(k2) ) = 1/N

…

Proof: Fix distinct k1&k2 U. Because p > |U|, k2-k1 mod p0. Because p is prime, every

nonzero element has an inverse, eg 23mod5=1. Let e=(k2-k1)-1. Let D = a (k2-k1) mod p, a

= De mod p, and d = D mod N. k1&k2 collide iff d=0 iff D = jN for j[0..p/N]

iff a = jNe mod p. The probability a has one of these p/N values is 1/p p/N = 1/N. 206

Random Hash Functions

Choose a random mapping

Hash(key) = i

Universe

of Keys

0

1

2

3

4

5

6

7

8

9

0

1

2

We want Hash to be

computed in O(1) time.

Theory people use

3

Hash(key) = (akey mod p) mod N

4

N = size of array

p is a prime > |U|

a is randomly chosen [1..p-1]

n is the number of data items.

5

6

7

8

9

Pairwise Independence

"k1 & k2 Pra( Hasha(k1)=Hasha(k2) ) = 1/N

…

Insert key k. Exp( #other keys in its cell )

= Exp( k1,k collision ) = k ,k Exp(collision)

1

= n 1/N = O(1).

207

Random Hash Functions

Choose a random mapping

Hash(key) = i

Universe

of Keys

0

1

2

3

4

5

6

7

8

9

0

1

2

We want Hash to be

computed in O(1) time.

Theory people use

3

Hash(key) = (akey mod p) mod N

4

N = size of array

p is a prime > |U|

a is randomly chosen [1..p-1]

n is the number of data items.

5

6

7

8

9

Pairwise Independence

"k1 & k2 Pra( Hasha(k1)=Hasha(k2) ) = 1/N

…

Not much more independence. Knowing that Hasha(k1)=Hasha(k2)

decreases the range of a from p to p/N values. Doing this logp/logN

times, likely determines a, and hence all further collisions.

208

Random Hash Functions

Choose a random mapping

Hash(key) = i

Universe

of Keys

0

1

2

3

4

5

6

7

8

9

0

1

2

We want Hash to be

computed in O(1) time.

Theory people use

3

Hash(key) = (akey mod p) mod N

4

N = size of array

p is a prime > |U|

a is randomly chosen [1..p-1]

n is the number of data items.

5

6

7

8

9

…

This is usually written akey+b.

The b adds randomness to which cells get hit,

but does not help with collisions.

209

Handling Collisions

Input

key, value

Handling Collisions

394,v1 Universe

When different data

482,v2 of Keys

items are mapped to

583,v3

0

1

2

3

4

5

6

7

8

9

the same cell

0

1

2

3

4

5 394,v1

583, v3

6

7 482,v2

8

9

…

10

210

Separate Chaining

Input

key, value

Separate Chaining

394,v1 Universe

Each cell uses external

482,v2 of Keys

memory to store all the data

583,v3

0

items hitting that cell.

0

1

2

3

4

5

6

7

8

9

1

2

3

4

5 394,v1

583, v3

394,v1

583,v3

6

7 482,v2

482,v2

8

9

…

10

Simple

but requires

additional memory 211

A Sequence of Probes

Input

key, value

Open addressing

394,v1 Universe

The colliding item is placed

482,v2 of Keys

in a different cell of the table

583,v3

0

1

2

3

4

5

6

7

8

9

0

1

2

3

4

5 394,v1

583, v3

6

7 482,v2

8

9

…

10

212

A Sequence of Probes

Input

key, value

Cells chosen by a

103,v6 Universe

sequence of probes.

of Keys

put(key k, value v)

0

0 583, v3

1

2

3

4

5

6

7

8

9

1

i1 = (ak mod p) mod N

5

2

3 290,v4

4

5 394,v1

6

7 482,v2

Theory people use

i1 = Hash(key) = (akey mod p) mod N

N = size of array

11

1009

p is a prime > |U|

a[1,p-1] is randomly chosen 832

8 903,v5

9

…

10

akey = 832103 = 85696

85696 mod 1009 = 940

940 mod 11 = 5 = i1

213

A Sequence of Probes

Input

key, value

Cells chosen by a

103,v66 Universe

103,v

sequence of probes.

of Keys

put(key k, value v)

0

0 583, v3

1

2

3

4

5

6

7

8

9

i1 = (ak mod p) mod N

1

5

2

3 290,v4

4

5 394,v1

6

7 482,v2

8 903,v5

1

This was our first in the

sequence of probes.

9

…

10

214

A Sequence of Probes

Input

key, value

Double Hash

103,v6 Universe

to get sequence distance d.

of Keys

put(key k, value v)

0

0 583, v3

1

2

3

4

5

6

7

8

9

i1 = (ak mod p) mod N

d = (bk mod q) + 1

1

2

5

3

3 290,v4

4

5 394,v1

1

6

7 482,v2

8 903,v5

9

…

10

215

A Sequence of Probes

Input

key, value

Double Hash

103,v6 Universe

to get sequence distance d.

of Keys

put(key k, value v)

0

3

0 583, v3

1

2

3

4

5

6

7

8

9

5

i1 = (ak mod p) mod N

3

d = (bk mod q) + 1

for j = 1..N

i = i1 + (j-1)d mod N

1

2

3 290,v4

4

4

5 394,v1

6

7 482,v2

8 903,v5

1

5 d=3

2

9

If N is prime,

this sequence will reach

each cell.

…

d=3

10

3

216

A Sequence of Probes

Input

Stop this sequence of

key, value

probes when:

103,v66 Universe

103,v

Cell is empty

of Keys

or key already there