SureMail: Notification Overlay for Email Reliability

Sharad Agarwal

Venkat Padmanabhan

Dilip A. Joseph

8 March 2006

Outline

• Email loss problem

• Design philosophy

• SureMail design

• SureMail robustness to security attacks

• SureMail implementation

8.MAR.2006


What is Email Loss?

• Email loss: sent email not received

• Silent email loss

– Loss w/o notification (no bounceback / DSN)

• Why?

– Aggressive spam filters

• 90% of corp. emails thrown away (blacklist)

• AOL’s strict whitelist rules (must send 100/day)

• Bouncebacks contribute to spam

– Complex mail architecture upgrades / failures

• SMTP reliability is per hop, not end-to-end


How Much Email Loss?

• Even loss of 1 email / user / year is bad

– If it’s an important email

• To really measure loss

– Monitor many users’ send & receive habits

– Count how many sent emails were not received

– Count how many bouncebacks received

– Difficult to find enough willing participants who email each other across multiple domains


Prior Work

• “The State of the Email Address”

– Afergan & Beverly, ACM CCR 01.2005

• Rely on bouncebacks; similar to “dictionary” attack

• 25% of tested domains send bouncebacks

• 1 sender

• 0.1% to 5% loss, across 1468 servers, 571 domains

• “Email dependability”

– Lang, UNSW B.E. thesis 11.2004

• 40 accounts, 16 domains receive emails from 1 sender

• Empty body, sequence number as subject

• 0.69% silent loss


Our Email Loss Study

• Methodology

– Controller composes and sends emails

– Our own code for SMTP sending

– Outlook for receiving (both inbox & junk mail)

– Parse sent and received emails into SQL DB

– Match on {sender, receiver, subject, attachment}

– Heuristics for parsing bouncebacks

• Want

– Many sending, receiving accounts

– Real email content
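The matching step in the methodology can be sketched as a multiset difference between sent and received emails. This is a minimal illustration, not the study's code: it assumes each email has already been parsed into the four matching fields (an empty string when there is no attachment), it replaces the SQL database with an in-memory Counter, and it ignores bounceback parsing. EmailKey, lost_emails and loss_rate are hypothetical names.

```python
from collections import Counter
from typing import Iterable, NamedTuple


class EmailKey(NamedTuple):
    """The four fields used for matching (hypothetical representation)."""
    sender: str
    receiver: str
    subject: str
    attachment: str  # "" when the email had no attachment


def lost_emails(sent: Iterable[EmailKey], received: Iterable[EmailKey]) -> Counter:
    """Return sent emails that have no matching received copy.

    Counter subtraction works per key and drops non-positive counts, so
    surplus received copies of one email (e.g. duplicates) are ignored.
    "Received" should include inbox and junk-folder copies alike.
    """
    return Counter(sent) - Counter(received)


def loss_rate(sent: list[EmailKey], received: list[EmailKey]) -> float:
    """Fraction of sent emails that were never received."""
    if not sent:
        return 0.0
    return sum(lost_emails(sent, received).values()) / len(sent)


if __name__ == "__main__":
    sent = [
        EmailKey("a@x.com", "b@y.com", "budget", ""),
        EmailKey("a@x.com", "b@y.com", "slides", "vijay.ppt"),
        EmailKey("a@x.com", "c@z.com", "budget", ""),
    ]
    received = [EmailKey("a@x.com", "b@y.com", "budget", "")]
    print(loss_rate(sent, received))  # 2 of the 3 sent emails are unmatched
```

Matching on all four fields, rather than on subject alone, keeps the same Enron subject sent to different receivers from being counted as one email.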


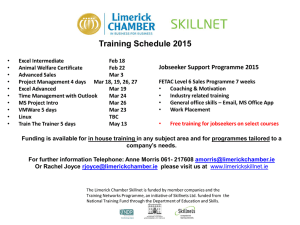

Experiment Details

• Email accounts

– 36 sending, 42 receiving accounts

– Junk filters off if possible

• Email subject & body

– Enron corpus subset

– 1266 emails, spam removed

• Email attachment

– 70% of emails sent without attachment

– jpg, gif, ppt, doc, pdf, zip, htm

– marketing, technical, funny

| Domain | Type | Receive accounts | Send accounts |
|---|---|---|---|
| microsoft.com | Exchange | 1 | 1 |
| fusemail.com | IMAP | 2 | 2 |
| aim.com | IMAP | 2 | 2 |
| yahoo.co.uk | POP | 2 | 2 |
| yahoo.com | POP | 2 | 2 |
| hotmail.com | HTTP | 1 | X |
| gawab.com | POP | 2 | 2 |
| bluebottle.com | POP | 2 | 2 |
| orcon.net.nz | POP | 2 | 2 |
| nerdshack.com | POP | 2 | 2 |
| gmail.com | POP | 2 | 2 |
| eecs.berkeley.edu | IMAP | 2 | 2 |
| cs.columbia.edu | IMAP | 2 | 2 |
| cc.gatech.edu | POP | 2 | 2 |
| nms.lcs.mit.edu | POP | 2 | 2 |
| cs.princeton.edu | POP | 2 | 2 |
| cs.ucla.edu | POP | 2 | X |
| cubinlab.ee.mu.oz.au | POP | 2 | X |
| usc.edu | POP | 2 | 2 |
| cs.utexas.edu | POP | 2 | 2 |
| cs.uwaterloo.ca | POP | 2 | 2 |
| cs.wisc.edu | IMAP | 2 | 2 |

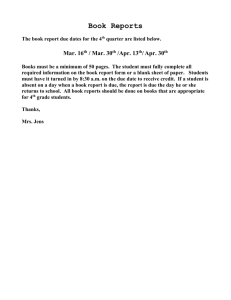

Email Loss Results

| Metric | Value |
|---|---|
| Start date | 11/18/2005 |
| End date | 1/11/2006 |
| Days | 54 |
| Emails sent | 138944 |
| Emails received | 144949 |
| Emails lost | 2530 |
| Total loss rate | 1.82% |
| Bouncebacks received | 982 |
| Matched bouncebacks | 878 |
| Unmatched bouncebacks | 104 |
| Emails lost silently | 1548 |
| Silent loss rate | 1.11% |
| Hard failures | 565 |
| Conservative silent loss | 0.71% |
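The derived rows of the results table follow from the raw counts. The sketch below reproduces the table's percentages under an inferred reading that the slide does not state outright: emails lost silently are lost emails minus all bouncebacks received (matched or unmatched), and the conservative figure additionally removes hard failures. LossCounts is a hypothetical name.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LossCounts:
    """Raw counts for one measurement period (hypothetical structure)."""
    sent: int
    lost: int
    bouncebacks: int    # all bouncebacks received, matched or unmatched
    hard_failures: int

    @property
    def silent_lost(self) -> int:
        # Lost emails not accounted for by any bounceback.
        return self.lost - self.bouncebacks

    def rates(self) -> dict[str, float]:
        """Loss rates in percent, rounded to two decimals as on the slide."""
        def pct(n: int) -> float:
            return round(100 * n / self.sent, 2)

        return {
            "total": pct(self.lost),
            "silent": pct(self.silent_lost),
            # Assumed: hard failures are removed from the silent losses.
            "conservative_silent": pct(self.silent_lost - self.hard_failures),
        }


study = LossCounts(sent=138944, lost=2530, bouncebacks=982, hard_failures=565)
print(study.silent_lost, study.rates())
# 1548 {'total': 1.82, 'silent': 1.11, 'conservative_silent': 0.71}
```

The same definitions also reproduce the later notification study: 653 lost, 406 bouncebacks and 70 hard failures out of 19435 sent give 3.36%, 1.27% and 0.91%.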

Loss Rates by Account

| Account | Send loss (%) | Receive loss (%) |
|---|---|---|
| a@microsoft.com | 1.2 | 0.1 |
| a@fusemail.com | 0.8 | 1.5 |
| b@fusemail.com | 0.4 | 1.8 |
| a@aim.com | 1.6 | 0.2 |
| b@aim.com | 1.2 | 0.2 |
| a@yahoo.co.uk | 0.8 | 0.2 |
| b@yahoo.co.uk | 0.8 | 0.1 |
| a@yahoo.com | 0.7 | 0.1 |
| b@yahoo.com | 0.8 | 0.2 |
| a@hotmail.com | X | 5 |
| a@gawab.com | 1.3 | 0 |
| b@gawab.com | 1.1 | 0.2 |
| a@bluebottle.com | 1.4 | 8 |
| b@bluebottle.com | 2.3 | 8.1 |
| a@orcon.net.nz | 5.3 | 5.7 |
| b@orcon.net.nz | 5.9 | 6.7 |
| a@nerdshack.com | 0.6 | 0.2 |
| b@nerdshack.com | 0.7 | 0.3 |
| a@gmail.com | 2.6 | 2.7 |
| b@gmail.com | 2.3 | 2.3 |
| a@eecs.berkeley.edu | 0.9 | 1.3 |
| b@eecs.berkeley.edu | 0.5 | 0.8 |
| a@cs.columbia.edu | 1.3 | 0 |
| b@cs.columbia.edu | 1 | 0 |
| a@cc.gatech.edu | 0.7 | 0 |
| b@cc.gatech.edu | 0.6 | 0.1 |
| a@nms.lcs.mit.edu | 0.7 | 0.2 |
| b@nms.lcs.mit.edu | 0.6 | 0.2 |
| a@cs.princeton.edu | 0.9 | 0.4 |
| b@cs.princeton.edu | 0.5 | 0.1 |
| a@cs.ucla.edu | X | 0.3 |
| b@cs.ucla.edu | X | 0.4 |
| a@cubinlab.ee.mu.oz.au | X | 0.1 |
| b@cubinlab.ee.mu.oz.au | X | 0.1 |
| a@usc.edu | 0.8 | 0.1 |
| b@usc.edu | 0.7 | 0.2 |
| a@cs.utexas.edu | 0.7 | 0.4 |
| b@cs.utexas.edu | 0.4 | 0.2 |
| a@cs.uwaterloo.ca | 0.6 | 0.2 |
| b@cs.uwaterloo.ca | 0.6 | 0.1 |
| a@cs.wisc.edu | 0.6 | 0.1 |
| b@cs.wisc.edu | 0.7 | 0.3 |

– Loss rate 1.82% to 0.82%

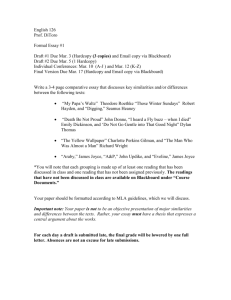

Loss Rates by Attachment

| Attachment | Size (B) | Type | Emails sent | Emails not received | Loss rate (%) |
|---|---|---|---|---|---|
| (none) | | | 96631 | 1062 | 1.1 |
| 1nag_jpg2.jpg | 48634 | JPEG | 1489 | 28 | 1.9 |
| Nehru_01.jpg | 21192 | JPEG | 2632 | 55 | 2.1 |
| home_main.gif | 35106 | GIF | 2723 | 65 | 2.4 |
| phd050305s.gif | 65077 | GIF | 2905 | 43 | 1.5 |
| ActiveXperts_Network_Monitor2.ppt | 105984 | MS PowerPoint | 2935 | 43 | 1.5 |
| vijay.ppt | 48640 | MS PowerPoint | 1327 | 18 | 1.4 |
| CfpA4v10.doc | 55808 | MS Word | 2900 | 43 | 1.5 |
| CHANG_1587051095.doc | 25088 | MS Word | 2711 | 15 | 0.6 |
| SSA_PRODUCTSTRATEGY_final.doc | 91136 | MS Word | 2822 | 33 | 1.2 |
| 34310344.pdf | 32211 | PDF | 2867 | 25 | 0.9 |
| f1040v.pdf | 47875 | PDF | 2805 | 42 | 1.5 |
| CHANG_1587051095.zip | 4987 | Zip | 2940 | 19 | 0.6 |
| SSA_PRODUCTSTRATEGY_final.zip | 43511 | Zip | 2776 | 64 | 2.3 |
| IMC_2005_-_Call_for_Papers.htm | 10377 | HTML | 2773 | 32 | 1.2 |
| ActivePerl_5.8_-_Online_Docs___Getting_Started.htm | 24598 | HTML | 2757 | 23 | 0.8 |
| BBC_NEWS___Entertainment___Space_date_set_for_Scotty's_ashes.htm | 32776 | HTML | 2951 | 42 | 1.4 |

• No attachment type stands out

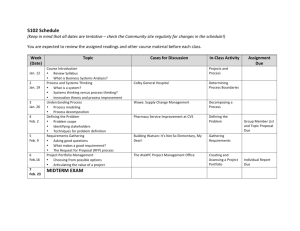

Loss Rates by Subject/Body

[Figure: loss % (y-axis, 0–40) by subject/body (x-axis: subject)]

• ~50–250 emails sent per subject

• Excluding the one subject with 35% loss, the loss rate drops only from 1.82% to 1.79%

Summary of Findings

• Email loss rates are high

– 1.82% loss

– 0.71% conservative silent loss (about 1 in 140 emails)

• Difficult to disambiguate cause of loss

– Loss differs between domains (filters or servers?)

– No difference between mailboxes

– No difference between attachments

– Only 1 body had abnormally high loss


We Found Email Loss; Now What?

• Can try to fix email architecture, but

– Hard to know exactly what the problem is

– Spam filters continually evolve; not perfect

– Some architectures are very complicated

– How many email systems are out there?

– The current system mostly works


Fixing the Architecture

• Improve email delivery infrastructure

– more reliable servers

• e.g., cluster-based (Porcupine [Saito ’00])

– server-less systems

• e.g., DHT-based (POST [Mislove ’03])

– total switchover might be risky

• “Smarter” spam filtering

– moving target: mistakes inevitable

– non-content-based filtering still needed to cope with spam load


Email Notifications

• DSN / bouncebacks

– Most spam filters don’t generate DSN on drop

– Bogus DSNs due to spam w/ bogus sender

– Some MTAs block DSN for privacy

– MTA crash may not generate DSN

– No DSN for loss between MTA and MUA

• MDN / read receipts

– Expose private info (when read, when online)

– Can help spammers


Notification Design Requirements

• Cause minimal MTA/MUA disruption

• Cause minimal user disruption

• Preserve asynchronous operation

• Preserve user privacy

• Preserve repudiability

• Maintain spam and virus defenses

• Minimize traffic overhead


SureMail Design Requirements

• Cause minimal MTA/MUA disruption

– No MTA modification; no Outlook modification

• Cause minimal user disruption

– User notified only on loss

• Preserve asynchronous operation

• Preserve user privacy

– Only receiver is notified of loss

• Preserve repudiability

– No PKI / authentication

• Maintain spam and virus defenses

– Emails not modified

• Minimize traffic overhead

– 85-byte notification per email

Basic Operation

• Sender S sends email to receiver R

– S also posts notification to overlay

• R periodically downloads new email

– R also downloads notifications from overlay

• Notification without a matching email indicates loss

– Must allow for delivery delay: median 26 s, mean 276 s, max 36.6 hrs
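Receiver-side loss detection can be sketched as follows. Because an email can arrive long after its notification, a notification is only flagged once it has gone unmatched for a grace period. Assumptions not on the slide: H1 is modeled as domain-separated SHA-256 over the same canonical message bytes at both ends, and grace_s is a free, hypothetical parameter; h1, Notification and find_lost are illustrative names.

```python
import hashlib
from dataclasses import dataclass
from typing import Iterable


def h1(message: bytes) -> bytes:
    """Hypothetical stand-in for SureMail's H1 (domain-separated SHA-256)."""
    return hashlib.sha256(b"H1|" + message).digest()


@dataclass(frozen=True)
class Notification:
    msg_hash: bytes   # H1(M) as posted by the sender
    posted_at: float  # posting time T, in seconds


def find_lost(notifications: Iterable[Notification],
              received: Iterable[bytes],
              now: float,
              grace_s: float) -> list[Notification]:
    """Notifications with no matching received email after grace_s seconds."""
    seen = {h1(m) for m in received}
    return [n for n in notifications
            if n.msg_hash not in seen and now - n.posted_at >= grace_s]


if __name__ == "__main__":
    notes = [Notification(h1(b"arrived"), 0.0),
             Notification(h1(b"missing"), 0.0),
             Notification(h1(b"in flight"), 3000.0)]
    lost = find_lost(notes, [b"arrived"], now=3600.0, grace_s=1800.0)
    print(len(lost))  # only the "missing" email is reported
```

Choosing grace_s is a trade-off: the delays above suggest a short grace period would raise false alarms, while a very long one delays the "lost mail" warning.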


SureMail Overview

[Figure: Sender S and Recipient R; S posts the notification H1(Mnew), H1(Mold), T, MAC([T, H1(Mnew)], H2(Mold)) at Dnot = H1(R); registration node Dreg = H2(R); labels “You’ve Lost Mail!” and “Request lost message”]

SureMail Overview

• Emails, MTAs, MUAs unmodified

• Parallel notification overlay system

– Decentralized; assumes limited collusion

– Agnostic to actual implementation

• end-host-based (e.g., always-on user desktops)

• infrastructure-based (e.g., “NX servers”)

• Prevent notification snooping & spam

– Email-based registration

– Reply-based shared secret


Email-Based Registration

• Goal: prevent hijacking of R’s notifications

– Only R can receive emails sent to R

– Limited collusion among notification nodes

• One-time operation for initial registration

– R sends registration request to H2(R), H3(R)

– H2(R), H3(R) email registration secrets to R

• To retrieve notifications at H1(R)

– R presents its registration secrets to H1(R); H1(R) verifies them with H2(R) and H3(R), then sends back the notifications

– None of H1(R), H2(R), H3(R) can associate notifications with R unless they collude
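The registration flow can be sketched with two registrar objects standing in for H2(R) and H3(R) and one notification node standing in for H1(R). This is a minimal sketch under assumptions the slide does not state: secrets are random 16-byte tokens, registrars index them by the opaque notification key H1(R) rather than by R's address, and the email that delivers each secret to R is replaced by a return value. All names (h, Registrar, NotificationNode) are hypothetical.

```python
import hashlib
import hmac
import secrets
from typing import Optional


def h(i: int, address: str) -> bytes:
    """Hypothetical H_i: domain-separated SHA-256 of an email address."""
    return hashlib.sha256(f"H{i}|{address}".encode()).digest()


class Registrar:
    """Plays H2(R) or H3(R): issues a secret to R and later vouches for it."""

    def __init__(self) -> None:
        self._issued: dict[bytes, bytes] = {}

    def register(self, address: str) -> bytes:
        secret = secrets.token_bytes(16)
        # Indexed by the opaque key H1(R), so H1(R) can ask about it
        # without being told R's address.
        self._issued[h(1, address)] = secret
        # SureMail emails the secret to R; returning it stands in for that.
        return secret

    def verify(self, key: bytes, secret: bytes) -> bool:
        issued = self._issued.get(key)
        return issued is not None and hmac.compare_digest(issued, secret)


class NotificationNode:
    """Plays H1(R): stores notifications under an opaque key."""

    def __init__(self, registrars: list[Registrar]) -> None:
        self.registrars = registrars
        self._store: dict[bytes, list[bytes]] = {}

    def post(self, key: bytes, notification: bytes) -> None:
        self._store.setdefault(key, []).append(notification)

    def retrieve(self, key: bytes, presented: list[bytes]) -> Optional[list[bytes]]:
        """Release notifications only if every registrar confirms its secret."""
        if len(presented) != len(self.registrars):
            return None
        if all(r.verify(key, s) for r, s in zip(self.registrars, presented)):
            return list(self._store.get(key, []))
        return None


if __name__ == "__main__":
    r = "r@example.com"
    reg2, reg3 = Registrar(), Registrar()
    s2, s3 = reg2.register(r), reg3.register(r)   # one-time registration
    node = NotificationNode([reg2, reg3])
    node.post(h(1, r), b"notification-1")         # posted by some sender
    print(node.retrieve(h(1, r), [s2, s3]))       # [b'notification-1']
    print(node.retrieve(h(1, r), [s2, bytes(16)]))  # None
```

Requiring both registrars to vouch mirrors the slide's collusion argument: a single compromised registrar cannot, on its own, unlock R's notifications.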


Reply-Based Shared Secret

• Goal: prevent notification spoofing & spam

– Only R & S know their email conversations

– S rarely converses with spammers

• Reply detection

– S sends Mold to R, R replies with M’old

– S uses H(Mold) to “prove” identity to R in future

• Notification for Mnew from S to R

– H1(Mnew), H1(Mold), T, MAC([T, H1(Mnew)], H2(Mold))

– Only R can identify S

– Shared secret can be continually refreshed
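The notification format H1(Mnew), H1(Mold), T, MAC([T, H1(Mnew)], H2(Mold)) can be sketched with HMAC. Assumptions not taken from the slides: H1 and H2 are domain-separated SHA-256, the MAC is HMAC-SHA256, and T is an integer packed into 8 bytes. These sizes are illustrative and do not reproduce the 85-byte figure quoted earlier. Notification, make_notification and Recipient are hypothetical names.

```python
import hashlib
import hmac
import struct
from typing import NamedTuple


def h1(m: bytes) -> bytes:
    return hashlib.sha256(b"H1|" + m).digest()


def h2(m: bytes) -> bytes:
    return hashlib.sha256(b"H2|" + m).digest()


class Notification(NamedTuple):
    h_new: bytes  # H1(Mnew): lets R match the notification to an email
    h_old: bytes  # H1(Mold): tells R which shared secret to use
    t: int        # timestamp T
    tag: bytes    # MAC([T, H1(Mnew)], H2(Mold))


def _mac(key: bytes, t: int, h_new: bytes) -> bytes:
    return hmac.new(key, struct.pack(">Q", t) + h_new, hashlib.sha256).digest()


def make_notification(m_new: bytes, m_old: bytes, t: int) -> Notification:
    """Sender S: prove knowledge of Mold without revealing it."""
    return Notification(h1(m_new), h1(m_old), t, _mac(h2(m_old), t, h1(m_new)))


class Recipient:
    """Recipient R: remembers H1(Mold) -> H2(Mold) for replied-to emails."""

    def __init__(self) -> None:
        self._secrets: dict[bytes, bytes] = {}

    def record_reply(self, m_old: bytes) -> None:
        self._secrets[h1(m_old)] = h2(m_old)

    def accept(self, n: Notification) -> bool:
        key = self._secrets.get(n.h_old)
        if key is None:
            return False  # unknown conversation: treat as spam
        return hmac.compare_digest(_mac(key, n.t, n.h_new), n.tag)
```

An overlay node sees only hashes and a MAC; only R, which holds the H1(Mold) to H2(Mold) mapping, can link the notification to S.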


Attacks Defeated by Design

• X (an attacker) cannot retrieve R’s notifications from H1(R)

• H1(R) cannot identify R

• H2(R), H3(R) cannot see R’s notifications

– If they don’t collude; can increase to 3 nodes

• X, H(R) cannot identify S

• X, H(R) cannot learn Mnew, Mold

• X cannot annoy R with bogus notifications

• X cannot post to H1(R) masquerading as S


First Time Sender

• What if a first-time sender’s (FTS) email is lost?

• FTS & spammer generally indistinguishable

• But perhaps FTS knows an intermediary I who knows R

– Email networks have small-world properties

– I shares a secret SI with all parties it knows

– FTS sends email to R

• Posts multiple notifications

• One for every SI it has learned
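The first-time-sender workaround can be sketched in the same style as the reply-based notification: the FTS posts one notification per intermediary secret SI it holds, and R accepts whichever one it can verify with a secret it shares. As an assumption not on the slide, each notification names its secret by a hash of SI so that R knows which key to try; the hash and MAC choices and all names are hypothetical.

```python
import hashlib
import hmac
import struct
from typing import Iterable, NamedTuple


def h1(data: bytes) -> bytes:
    return hashlib.sha256(b"H1|" + data).digest()


class FtsNotification(NamedTuple):
    h_new: bytes      # H1(Mnew)
    secret_id: bytes  # H1(SI): which intermediary's secret was used
    t: int
    tag: bytes        # MAC([T, H1(Mnew)], SI)


def _mac(secret: bytes, t: int, h_new: bytes) -> bytes:
    return hmac.new(secret, struct.pack(">Q", t) + h_new, hashlib.sha256).digest()


def post_as_fts(m_new: bytes, learned: Iterable[bytes], t: int) -> list[FtsNotification]:
    """One notification for every SI the first-time sender has learned."""
    h_new = h1(m_new)
    return [FtsNotification(h_new, h1(s), t, _mac(s, t, h_new)) for s in learned]


def recipient_accepts(n: FtsNotification, known: Iterable[bytes]) -> bool:
    """R verifies against the SI of any intermediary it also knows."""
    by_id = {h1(s): s for s in known}
    s = by_id.get(n.secret_id)
    return s is not None and hmac.compare_digest(_mac(s, n.t, n.h_new), n.tag)
```

The posting overhead grows with the number of secrets the FTS holds, which is the cost of not knowing which intermediary it shares with R.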


Other Issues

• Reply-detection:

– “In-Reply-To” header may not always help

– indirect checks based on text similarity

• Reducing overhead:

– post notifications only for “important” emails

– delay posting in hope of receiving an implicit ACK (reply) or NACK (bounceback)

• Mobility:

– reply-based shared secret can be regenerated

– web-mail

• Can support mailing lists
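A reply-detection heuristic along the lines listed under Other Issues might first check the threading headers and then fall back to text similarity. This is a hypothetical sketch, not the prototype's heuristic: it assumes single-part plain-text messages, and the 0.6 coverage threshold is an arbitrary illustration. It uses only the standard `email` and `difflib` modules.

```python
import difflib
from email.message import EmailMessage


def is_reply_to(reply: EmailMessage, original: EmailMessage,
                threshold: float = 0.6) -> bool:
    """Heuristically decide whether `reply` answers `original`."""
    # Direct check: In-Reply-To / References name the original Message-ID.
    msg_id = original.get("Message-ID")
    if msg_id:
        for header in ("In-Reply-To", "References"):
            value = reply.get(header)
            if value and str(msg_id) in str(value):
                return True

    # Indirect check: how much of the original text reappears in the reply,
    # after stripping leading "> " quote markers.
    orig_text = original.get_content().strip()
    reply_text = "\n".join(line.lstrip("> ")
                           for line in reply.get_content().splitlines())
    if not orig_text:
        return False
    matcher = difflib.SequenceMatcher(None, orig_text, reply_text, autojunk=False)
    covered = sum(block.size for block in matcher.get_matching_blocks())
    return covered / len(orig_text) >= threshold


if __name__ == "__main__":
    orig = EmailMessage()
    orig["Message-ID"] = "<abc@example.com>"
    orig.set_content("Can we meet on Tuesday at 3pm to discuss the draft?")
    quoted = EmailMessage()
    quoted.set_content("Sure.\n> Can we meet on Tuesday at 3pm to discuss the draft?\n")
    print(is_reply_to(quoted, orig))  # matched via quoted text, not headers
```

The header check is cheap and exact when present; the similarity fallback covers clients that drop threading headers, at the risk of false positives on heavily templated mail.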


SureMail Implementation

• Reply detection heuristic for shared secret

• Notification service

– Centralized server (running)

– Chord-based DHT (running)

• Notification posting, retrieving

– Grab inbound/outbound email via Outlook MAPI calls

• No modification to Outlook binaries

– XML notification put/get commands

– Simple Win32 GUI
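In a Chord-style DHT, a key such as H1(R) is stored on its successor: the first node whose identifier is at or after the key's identifier on the ring. The sketch below shows only that successor rule, using a globally known sorted node list; real Chord reaches the successor in O(log N) hops via finger tables, which are omitted here. The 32-bit identifier space and the node names are hypothetical (Chord itself uses 160-bit SHA-1 identifiers).

```python
import bisect
import hashlib

ID_BITS = 32  # hypothetical small identifier space


def ring_id(name: str) -> int:
    """Hash a node name or key onto the identifier ring with SHA-1."""
    digest = hashlib.sha1(name.encode()).digest()
    return int.from_bytes(digest, "big") % (2 ** ID_BITS)


class Ring:
    def __init__(self, nodes: list[str]) -> None:
        self.by_id = {ring_id(n): n for n in nodes}
        self.ids = sorted(self.by_id)

    def successor(self, key_id: int) -> str:
        """First node whose identifier is >= key_id, wrapping past the top."""
        i = bisect.bisect_left(self.ids, key_id)
        return self.by_id[self.ids[i % len(self.ids)]]


if __name__ == "__main__":
    ring = Ring(["node-a", "node-b", "node-c", "node-d"])
    # Notifications for R would be stored on this node (Dnot = H1(R)).
    print(ring.successor(ring_id("H1|r@example.com")))
```

Because placement depends only on hashes, the same code path serves either deployment option on the overview slide: end-host nodes or infrastructure servers simply join the ring.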


SureMail GUI

• Client UI shows emails and posts & retrieves notifications

• E.g., running on two machines: netprofa@microsoft.com and netprofa@gmail.com

Notification Results

| Metric | Value |
|---|---|
| Start date | 1/11/2006 |
| End date | 2/8/2006 |
| Days | 29 |
| Emails sent | 19435 |
| Emails lost | 653 |
| Total loss rate | 3.36% |
| Bouncebacks received | 406 |
| Matched bouncebacks | 378 |
| Unmatched bouncebacks | 28 |
| Emails lost silently | 247 |
| Silent loss rate | 1.27% |
| Hard failures | 70 |
| Conservative silent loss | 0.91% |
| Notifications received | 19435 |

Summary

• Email does get lost!

– ~40 accounts, ~158,000 emails, 0.71%–0.91% conservative silent loss

• SureMail

– Client-based: unmodified emails, servers, and clients; no PKI

– User intervention only on lost email

– Preserves repudiability, privacy, asynchronous operation, and spam & virus defenses

• Separate notification overlay is robust

– Simple, small message format

– No virus, malware, spam filters needed

– Provides failure independence

• Status

– ACM HotNets 2005; ACM SIGCOMM 2006 submission

– Prototype implementation
