PowerPoint 8-20-13 - Sullivan County BOCES

advertisement

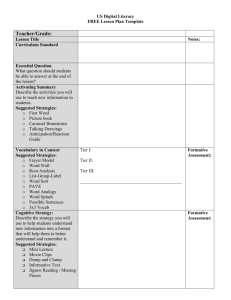

1st Annual Data Summit: Comprehensive Assessment Systems & Formative Assessment
David Abrams
Sullivan County BOCES
8/20/2013

Introduction
● Validity is a process, not a product.
● Design assessments to function as a lever for good instructional practices.
● Consciously design assessments so that the data produced can be used to: inform instruction; provide evidence of student achievement and/or growth; and facilitate the transition to the Common Core College & Career Ready Standards.
● Document the process.

Assessment System Design: Key Questions/Bright Lines
1. What do I want to learn from this assessment?
2. Who will use the information gathered from this assessment?
3. What action steps will be taken as a result of this assessment?
4. What professional development or support structures should be in place to ensure the action steps are taken appropriately?
5. How will student learning improve as a result of using this assessment, and will it improve more than if the assessment were not used?
(Perie, Marion, & Gong, 2009)

Comprehensive Assessment System: Components
Summative: given one time at the end of the semester or school year to evaluate students’ performance against a defined set of content standards. Can be used for accountability or to inform policy, and/or can be teacher-administered for grading purposes (Perie, Marion, & Gong, 2009).

Comprehensive Assessment System: Components
Interim: assessments administered during instruction to evaluate students’ knowledge and skills relative to a specific set of academic goals in order to inform policymaker or educator decisions at the classroom, school, or district level. The specific interim assessment designs are driven by the purposes and intended uses, but the results of any interim assessment must be reported in a manner allowing aggregation across students, occasions, or concepts (Perie, Marion, & Gong, 2009).
Comprehensive Assessment System: Components
Interim (cont.): Key components of interim assessments are: 1) they evaluate students’ knowledge and skills relative to a specific set of academic goals; and 2) they are designed to inform decisions both at the classroom level and beyond it (Perie, Marion, & Gong, 2009).

Comprehensive Assessment System: Components
Formative: used by teachers to diagnose where students are in their learning and where gaps in knowledge and understanding exist, and to help teachers and students improve student learning. The assessment is embedded within the learning activity and linked directly to the current unit of instruction.

Comprehensive Assessment System: Components
Formative assessments are used most frequently and have the smallest scope and shortest cycle, while summative assessments are administered least frequently and have the largest scope and longest cycle. Interim assessments fall between the two (Perie, Marion, & Gong, 2009).

Formative Assessment: Table Discussion-Information Processing
● How is Formative Assessment being used in your district, schools, and/or classrooms?
● What constitutes a “quality” formative assessment, and how do you know?
● What are your goals for implementing Formative Assessment in your school?
● Does your district have Professional Learning Communities/Whole Faculty Study Groups, vertical and horizontal, in place?
● Are you comfortable using data to inform instruction?

Formative Assessment: Perie, Marion, Gong
● Formative assessment is used by classroom teachers to diagnose where students are in their learning, where gaps in knowledge and understanding exist, and how to help teachers and students improve student learning.
● The assessment is embedded within the learning activity and linked directly to the current unit of instruction.
● The tasks presented may vary from one student to another depending on the teacher’s judgment.
Formative Assessment: Perie, Marion, Gong
● Providing corrective feedback, modifying instruction to improve the student’s understanding, or indicating areas of further instruction are essential aspects of a classroom formative assessment.

Formative Assessment
● …true meaning of formative assessment: an activity designed to give meaningful feedback to students and teachers and to improve professional practice and student achievement (Reeves, 2009).
● Three things must occur for an assessment to be formative: the assessment is used to identify students who are experiencing difficulty; those students are provided additional time and support to acquire the intended skill or concept; and the students are given another opportunity to demonstrate what they have learned (DuFour, Eaker, & Karhanek, 2010).

Formative Assessment
● Formative assessment is a systematic process to continuously gather evidence and provide feedback about learning while instruction is underway.
● The feedback identifies the gap between a student’s current level of learning and a desired learning goal.
● Teachers elicit evidence about student learning using a variety of methods and strategies, e.g., observation, questioning, dialogue, demonstration, and written response.
(Heritage et al., 2009)

Formative Assessment
● Teachers must examine the evidence from the perspective of what it shows about student conceptions, misconceptions, skills, and knowledge.
● They need to infer the gap between students’ current learning and desired instructional goals, identifying students’ emerging understanding or skills so they can modify instruction.
● For assessment to be formative, action must be taken to close the gap based on the evidence elicited.
(Heritage et al., 2009)

Formative Assessment: What We Know
● It is not a kind of test.
● Formative Assessment practice, when implemented effectively, can have powerful effects on learning.
● Formative Assessment involves teachers making adjustments to their instruction based on evidence collected, and providing students with feedback that advances learning.
● Students participate in the practice of formative assessment through self- and peer-assessment.
(Heritage, 2011)

Formative Assessment: Teacher’s Role
● Formative assessment is effective when teachers are clear about the intended learning goals for a lesson: this means focusing on what students will learn, as opposed to what they will do.
● Teachers need to share the learning goal with students.
● Teachers need to communicate the indicators of progress toward the learning goal.
● There is no single way to collect formative evidence, because formative assessment is not a specific kind of test.
(Heritage, 2011)

Formative Assessment: Student’s Role
● The student’s role begins when students have a clear conception of the learning target.
● In self-assessment, students engage in metacognitive activity: thinking about their own learning while they are learning.
● They generate feedback that allows them to make adjustments to their learning strategies.

Formative Assessment: Student’s Role
● It is important to include peer-assessment, in which students give feedback to their classmates.
● Students use the feedback; it is important that students both reflect on their learning and use the feedback to advance learning.
(Heritage, 2011)

Formative Assessment: Evidence Collection
● Whatever methods teachers use to elicit evidence of learning, the methods should yield information that is actionable by teachers and their students.
● Evidence collection is a systematic process and needs to be planned so that teachers have a constant stream of information tied to indicators of progress.
(Heritage, 2011)

Formative Assessment: Feedback
● Feedback obtained from planned or spontaneous evidence is an essential resource for teachers to shape new learning through adjustments in their instruction.
● Feedback that teachers provide to students is also an essential resource, enabling students to take active steps to advance their own learning.
(Heritage, 2011)

Common Formative Assessment
● Common assessments are those given by teacher teams who teach the same content or grade level, that is, teams with “collective responsibility for the learning of a group of students who are expected to acquire the same knowledge and skills.”
● No teacher can opt out of the process: common assessments use the same instrument or a common process utilizing the same criteria for determining the quality of student work.
(DuFour et al., 2010)

Common Formative Assessment: Benefits
● Promote efficiency for teachers
● Promote equity for students
● Provide an effective strategy for determining whether the guaranteed curriculum is being taught and learned
● Inform the practice of individual teachers
● Build a team’s capacity to improve its program
● Facilitate a systematic, collective response to students who are experiencing difficulty
● Serve as a tool for changing adult behavior and practice
(Bailey & Jakicic, 2012)

Formative Assessment: Table Discussion-Information Processing
● Do these views of Formative Assessment converge with yours? Why or why not?
● What strikes you as most important, given the key points regarding Formative Assessment?
● How do you see implementing assessment strategies that effectively utilize the crucial aspects of Formative Assessment?
● Are you comfortable designing Common Formative Assessments?

Formative Assessment: Evidence to Action-G-Study
● Heritage et al. determined that there is little research evaluating teachers’ ability to adapt instruction based on assessment of student knowledge and understanding.
● Research in mathematics has shown that moving from evidence to action may not always be the seamless process formative assessment demands.
● G-study results show that teachers do better at drawing inferences about students’ levels of understanding from assessment evidence than at deciding on next instructional steps.

Formative Assessment: Evidence to Action-G-Study
● Heritage et al. conducted a G-study using 3 mathematical concepts as the instructional learning goal.
● The teachers’ pedagogical knowledge in mathematics was the object of measurement. The study was designed to provide information about potential sources of variation in measuring teachers’ pedagogical knowledge in mathematics.
● The study design implies three sources of score variability: rater, mathematics principle, and type of task.

Formative Assessment: Evidence to Action-G-Study
● Rater: The study used performance tasks; one source of variance was the possibility of score variation between raters due to their interpretation and application of the rubric and how stringent or lenient a rater may be.
● Principle: different domain-specific principles may cause variance because, depending on a given teacher’s preparation, a teacher may have more knowledge about one principle than others. (The study evaluated 3 principles: distributive property, solving equations, and rational number equivalence.)

Formative Assessment: Evidence to Action-G-Study
Task: another potential source of variability. The study focused on 3 types of tasks: identifying key principles; evaluating student understanding; and planning the next instructional step based on the evaluation of student understanding.

Formative Assessment: Evidence to Action-G-Study Results
● Main effects of principle and rater are minimal. Teachers knew the concepts and knew how to evaluate student work to determine learning.
● Important finding: regardless of the math principle, determining next instructional steps based on the examination of student responses tends to be more difficult for teachers.
● If teachers are not clear about what the next steps are to move learning forward, then the promise of Formative Assessment to improve student learning is undermined.

Formative Assessment: Evidence to Action-G-Study Results & Teacher Support
● Teachers need clear conceptions of how learning progresses in a domain; they need to know what the precursor skills and understandings are for a specific instructional goal, what a good performance of the desired goal looks like, and how the skill increases in sophistication from the level students have currently reached.
● Learning progressions describe how concepts and skills increase in sophistication in a domain from the most basic to the highest level, showing the trajectory of learning along which students are expected to progress.

Formative Assessment: Evidence to Action-G-Study Results & Teacher Support
● From a learning progression, teachers can access the big picture of what students need to learn and grasp the key building blocks of the domain, while having sufficient detail for planning instruction to meet short-term goals.
● Teachers are able to connect Formative Assessment opportunities to short-term goals as a means to track student learning.
● Learning progressions alone will not be sufficient.

Formative Assessment: Evidence to Action-G-Study Results & Teacher Support
● Teachers need to know what a good performance of their specific short-term learning goal looks like. They must also know what good performance does not look like.
● Key finding: using assessment information to plan subsequent instruction tends to be the most difficult task for teachers compared with the other tasks. This finding raises the question: can teachers always use formative evidence to effectively “form” action?

Formative Assessment: Instructional Support
Marzano, Pickering, and Pollock (2001): 9 highly effective, research-based strategies
1. Identifying similarities and differences
2. Summarizing and note-taking
3. Reinforcing effort and providing recognition
4. Homework and practice
5. Nonlinguistic representations
6. Cooperative learning
7. Setting objectives & providing feedback
8. Generating & testing hypotheses
9. Cues, questions, and advance organizers

Formative Assessment: Table Discussion-Information Processing
● How can you use Professional Learning Communities to support teachers and students when implementing Formative Assessment?
● Based on Heritage et al.’s findings, what type of professional development will you need to support Formative Assessment in your district?

Formative Assessment: Validity Framework Review
Nichols, Meyers, & Burling (2009), Validity Framework for a Formative System:
● Figure 1: General Framework for Evaluating Validity
● Figure 2: Framework using the identification of specific procedural errors
● Figure 3: Framework for a system of reteaching
Validity: The degree to which accumulated evidence and theory support specific interpretations of test scores entailed by proposed uses of a test (Joint Standards glossary).

Formative Assessment: Design
● Unwrap standards or evaluate State testing data to determine demonstrated areas of instructional need.
● Define and align to State and District expectations.
Review Bailey Figure 4.1: 5 Steps
1. Focus on key words
2. Map it out
3. Analyze the target
4. Determine big ideas
5. Establish guiding questions for instruction

Formative Assessment: Design
● Assessments must provide information about important learning targets that are clear to students and teacher teams.
● Assessments provide timely information for both students and teacher teams.
● Assessments must provide information that tells students and teacher teams what to do next.
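The G-study slides describe splitting score variance among facets (rater, principle, task) with teachers' pedagogical knowledge as the object of measurement. As a simplified illustration only, the Python sketch below handles a one-facet design: teachers fully crossed with raters, one score per cell. It estimates variance components from two-way ANOVA mean squares, then computes relative and absolute generalizability coefficients for a chosen number of raters. This is not the multi-facet analysis Heritage et al. ran; the function names and the scores are hypothetical.

```python
from statistics import mean


def g_study_p_by_r(scores):
    """Variance components for a persons x raters design, one score per cell.

    scores[p][r] is rater r's score for person p (here, a teacher).
    Returns (var_person, var_rater, var_residual).
    """
    n_p, n_r = len(scores), len(scores[0])
    grand = mean(x for row in scores for x in row)
    person_means = [mean(row) for row in scores]
    rater_means = [mean(scores[p][r] for p in range(n_p)) for r in range(n_r)]

    # Mean squares from a two-way ANOVA without replication
    ms_p = n_r * sum((m - grand) ** 2 for m in person_means) / (n_p - 1)
    ms_r = n_p * sum((m - grand) ** 2 for m in rater_means) / (n_r - 1)
    ss_res = sum(
        (scores[p][r] - person_means[p] - rater_means[r] + grand) ** 2
        for p in range(n_p)
        for r in range(n_r)
    )
    ms_res = ss_res / ((n_p - 1) * (n_r - 1))

    # Solve the expected-mean-square equations; negative estimates are set to 0
    var_res = ms_res
    var_p = max((ms_p - ms_res) / n_r, 0.0)
    var_r = max((ms_r - ms_res) / n_p, 0.0)
    return var_p, var_r, var_res


def g_coefficients(var_p, var_r, var_res, n_raters):
    """Relative (generalizability) and absolute (dependability) coefficients."""
    relative = var_p / (var_p + var_res / n_raters)
    absolute = var_p / (var_p + (var_r + var_res) / n_raters)
    return relative, absolute


if __name__ == "__main__":
    # Hypothetical rubric scores: 4 teachers (rows) x 2 raters (columns)
    scores = [[4, 3], [3, 3], [2, 1], [1, 1]]
    components = g_study_p_by_r(scores)
    print(components, g_coefficients(*components, n_raters=2))
```

With these made-up scores the teacher component (4/3) is far larger than the rater component (1/12), the pattern a G-study reports as a minimal rater main effect. Modeling principle and task as well would require a larger crossed ANOVA model.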
Formative Assessment: Design
● Determine assessment types: selected response; constructed response; performance task
● Determine the number and balance of items
● Select/design the assessment
● Administer, evaluate responses, and redesign instructional strategies

Formative Assessment: Design
● Assess again (in the original assessment design, create enough items to have more than one assessment)
● Evaluate depth and breadth of rigor: Webb’s Depth of Knowledge/Bloom’s Taxonomy
● Utilize PLCs to support the process and data discussions

Cognitive Response Demands: Reading Load
Reading Load: The amount and complexity of the textual and visual information provided with an item that an examinee must process and understand in order to respond successfully to the item (Ferrara, 2011).

Cognitive Response Demands: Reading Load
● Low Reading Load: May include a small amount of text.
● Moderate Reading Load: Lower amounts of text and visuals and less complex text.
● High Reading Load: May include a large amount of text, much of which is complex linguistically or complex because of the content-area concepts and terminology used.
(Ferrara, 2011)

Cognitive Response Demands: Mathematical Complexity Ranges for NAEP
● Low Complexity: Items may require examinees to recall a property or recognize a concept; these are straightforward, single-operation items.
● Moderate Complexity: Items may require examinees to make connections between multiple concepts and multiple operations, and to display flexibility in thinking as they decide how to approach a problem.
● High Complexity: Items may require examinees to analyze assumptions made in a mathematical model or to use reasoning, planning, judgment, and creative thought; this level assumes that students are familiar with the mathematics concepts and skills required by an item.
(Ferrara, 2011)
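The design steps call for determining the number and balance of items and evaluating rigor with Webb's DOK, and the item map records each item's type, points, and DOK level. A minimal Python sketch, assuming an item map reduced to those fields, tallies items by type and the share of points at each DOK level. The `Item` fields, the function names, and the 30% threshold for DOK 3-4 are illustrative assumptions, not recommendations from the presentation.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass
class Item:
    number: int
    item_type: str  # "MC", "CRQ", or "ERQ", as in the item map template
    points: int
    dok: int        # Webb's Depth of Knowledge level, 1-4


def blueprint_summary(items):
    """Return item counts by type, points by DOK level, and total points."""
    counts_by_type = Counter(item.item_type for item in items)
    points_by_dok = Counter()
    for item in items:
        points_by_dok[item.dok] += item.points
    total_points = sum(item.points for item in items)
    return counts_by_type, points_by_dok, total_points


def dok_shares(points_by_dok, total_points):
    """Fraction of total points at each DOK level (1-4); missing levels count as 0."""
    return {level: points_by_dok[level] / total_points for level in range(1, 5)}


def meets_rigor_target(shares, min_high_dok=0.30):
    """Hypothetical check: at least min_high_dok of the points come from DOK 3-4."""
    return shares[3] + shares[4] >= min_high_dok


if __name__ == "__main__":
    # Made-up five-item map for illustration
    items = [
        Item(1, "MC", 1, 1),
        Item(2, "MC", 1, 2),
        Item(3, "MC", 1, 2),
        Item(4, "CRQ", 2, 3),
        Item(5, "ERQ", 4, 3),
    ]
    counts, points, total = blueprint_summary(items)
    shares = dok_shares(points, total)
    print(dict(counts), total, shares, meets_rigor_target(shares))
```

Weighting by points rather than by item count is a deliberate choice here: one extended-response item can carry more of the score than several multiple-choice items.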
Common Core Transition: Academic Language
Academic language refers to the form of language expected in contexts such as the exposition of topics in the school curriculum, making arguments, defending propositions, and synthesizing information (Snow, 2010). (See Coxhead’s High-Incidence Academic Word List)

Bailey & Jakicic Sample Assessment Plan
Cognitive levels in the plan: Knowledge; Application; Analysis; Evaluation
● Learning target: Identify similes & metaphors from text. Items: five matching
● Learning target: Explain the meaning of common similes & metaphors. Items: four multiple-choice
● Learning target: Develop a narrative paragraph with both. Items: one constructed response

Revised Item Map for Locally Developed Assessments-Formative Assessments
Sample Item Map Template: Prior to Assessment Administration
Columns: Quest #; Type (MC, CRQ, ERQ); Point(s); Learning Standard PI; CC Literacy Standard; Reading or Math Load; Lexile or Other Reading Formula; AL*; Webb DOK
Sample Item Map Template: Post Assessment Administration
Columns: Quest #; Type (MC, CRQ, ERQ); Point(s); Learning Std PI; CC Literacy Std; Reading or Math Load; Lexile or Other Reading Formula; AL; Webb DOK; Item Data
*AL: Academic Language

Multiple Measures-Joint Standards
13.7: In educational settings, a decision or characterization that will have major impact on a student should not be made on the basis of a single test score. Other relevant information should be taken into account if it will enhance the overall validity of the decision.

Multiple Measures
Multiple measures are intended to improve the quality of high-stakes decision making so that decisions are not based on one single measure. The definition of multiple measures, the criteria for evaluating each measure, and how these measures should be combined for use in decision making are not clear.
(Henderson-Montero, Julian, & Yen, 2003)

Multiple Measures: Examples
● Test more than one content area
● Assess a content area using a combination of MC and CRQ formats
● Assess a content area using an on-demand test and a class-based portfolio (writing)
● Assess school performance using a combination of academic tests and other indicators
● Make progressively “higher-stakes” decisions about schools using a combination of accountability scores and other reviews
● Use for promotion/graduation processes by meeting certain criteria, even if the student does not pass a State’s on-demand test
(Gong & Hill, 2001)

Multiple Measures: Examples
● Have several assessment instruments that can be used by students with various proficiency levels or presentation/response needs
● Allow students multiple opportunities to retake the test to determine whether they meet minimum cut scores
● Allow for promotion/graduation
● Double-score every constructed response item on tests used for high school student accountability
● Assess school performance using an average of at least two years of data
● Assess school performance using as many grades of students as practical
(Gong & Hill, 2001)

Multiple Measures
● A framework is needed for combining multiple measures. There are four categories of rules: 1. Conjunctive, 2. Compensatory, 3. Mixed conjunctive-compensatory, and 4. Confirmatory (Henderson-Montero, Julian, & Yen, 2003; Chester, 2003).

Multiple Measures
● Conjunctive: attainment of a minimum standard (e.g., meeting a designated cut score on a specific exam).
● Compensatory: weaker performance on one measure can be offset by stronger performance on another. Performance on two or more measures is required before they are combined into a compensatory rule.
● Mixed conjunctive-compensatory: uses a combination of conjunctive and compensatory approaches; e.g., a minimal performance level is required across measures, but beyond that minimal level, poorer performance on one measure can be counterbalanced by better performance on other measures.

Multiple Measures
● Confirmatory: employs information from one measure to validate or compare information from another, independent measure (e.g., statewide reading performance compared to NAEP). Generally applies a conjunctive rule in which minimum performance on the independent measure is required (e.g., Regents alternatives such as an AP score of 3).
(Henderson-Montero, Julian, & Yen, 2003; Chester, 2003)

Multiple Measures: Example
Philadelphia's approach to combining multiple measures to reach elementary promotion decisions, organized by rule (Conjunctive, Compensatory, Complementary) and by type of measure (Measures of Different Constructs; Different Measures of the Same Constructs; Multiple Opportunities; Accommodations & Alternate Assessments):
● Conjunctive (AND): Measures of different constructs: test scores in reading & math; satisfactory teacher marks in ALL subject areas; successful completion of multidisciplinary & service learning projects. Different measures of the same constructs: satisfactory teacher marks AND test scores.
● Compensatory (+/-): Different measures of the same constructs: multiple-choice and open-ended sections of the SAT-9.
● Complementary (OR): Different measures of the same constructs: SAT-9 OR citywide test. Multiple opportunities: summer school; retest on the citywide test. Accommodations & alternate assessments: SAT-9 OR Spanish-language Aprenda; citywide test in English OR Spanish.
(Chester, 2003)

Multiple Measures: Challenges
● Technical issues in combining specific assessments and indicators: use of a common scale and/or index (e.g., combining test scores from NRT & CRT; state and local measures (NRT, CRT, other); combining test scores and other indicators; combining nonstandard and/or different combinations of measures).
● Sufficient data for characterizing school(s).
● “Valid” interpretations and uses of accountability for effective and fair school improvement & services to individual students (disaggregation of data).
(Gong & Hill, 2003)
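The combination rules above (conjunctive, compensatory, mixed, confirmatory, plus the complementary "OR" rule in the Philadelphia example) are easiest to compare as small decision functions. The Python sketch below is illustrative only: it assumes every measure has already been placed on a common scale, which is itself one of the technical challenges listed, and the measure names, weights, and cut scores are hypothetical.

```python
def conjunctive(scores, cuts):
    """Pass only if every measure meets its own minimum cut score."""
    return all(scores[m] >= cut for m, cut in cuts.items())


def compensatory(scores, weights, composite_cut):
    """Pass if the weighted composite meets the cut; strengths offset weaknesses."""
    composite = sum(w * scores[m] for m, w in weights.items())
    return composite >= composite_cut


def mixed(scores, floors, weights, composite_cut):
    """Require a floor on every measure, then apply a compensatory composite."""
    return conjunctive(scores, floors) and compensatory(scores, weights, composite_cut)


def confirmatory(primary_pass, independent_score, independent_min):
    """Accept a result only if an independent measure also meets its minimum."""
    return primary_pass and independent_score >= independent_min


def complementary(attempts, cut):
    """Pass if any of several opportunities (e.g., a retest) meets the cut."""
    return any(score >= cut for score in attempts)


if __name__ == "__main__":
    # Hypothetical student: weak in reading, strong in math (common 0-100 scale)
    scores = {"reading": 62, "math": 78}
    weights = {"reading": 0.5, "math": 0.5}
    print(conjunctive(scores, {"reading": 65, "math": 65}))            # fails reading cut
    print(compensatory(scores, weights, 65))                           # composite is 70
    print(mixed(scores, {"reading": 60, "math": 60}, weights, 65))     # floors met
    print(complementary([58, 63, 66], 65))                             # third attempt passes
```

The same student passes under the compensatory and mixed rules but fails the conjunctive rule, which illustrates why the choice of combination logic, not just the number of measures, drives the decision.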
Use of multiple measures does not necessarily improve the reliability and validity of the decisions that are made; it is the logic by which the measures are combined that determines the accuracy and appropriateness of the high-stakes decisions that are made (Chester, 2003).

Appendix Materials

Cognitive Response Item Coding: Bloom’s Taxonomy
Level: Description
● Level 1, Knowledge: Recall & recognition
● Level 2, Comprehension: Translate, interpret, & extrapolate
● Level 3, Application: Use of generalizations in specific instances
● Level 4, Analysis: Determine relationships
● Level 5, Synthesis: Create new relationships
● Level 6, Evaluation: Exercise of learned judgment

Cognitive Response Item Coding: Webb’s Depth of Knowledge
Level: Description
● Level 1, Recall: recall of a fact, information, or procedure
● Level 2, Skill/Concept: use of information or conceptual knowledge, two or more steps, etc.
● Level 3, Strategic Thinking: requires reasoning, developing a plan or a sequence of steps, some complexity, more than one possible answer
● Level 4, Extended Thinking: requires an investigation, time to think, and processing of multiple conditions of the problem

Cognitive Response Item Coding: Bloom & Webb: Side-by-Side (www.wcer.wisc.edu)
● Bloom: Knowledge (recall) and Comprehension (low-level processing). Webb’s DOK: Recall.
● Bloom: Application (use of abstractions in concrete situations). Webb’s DOK: Basic Application of Skill/Concept (use of information, conceptual knowledge, multiple steps).
● Bloom: Analysis (breakdown of a situation into component parts). Webb’s DOK: Strategic Thinking (requires reasoning, a plan, or multi-step processes).
● Bloom: Synthesis & Evaluation (putting together elements and parts to form a whole). Webb’s DOK: Extended Thinking (requires investigation, time to think, and processing of multiple conditions of the task).

Common Core Transition: Academic Language
Academic language is designed to be concise, precise, and authoritative.
To achieve these goals, it uses sophisticated words and complex grammatical constructions that can disrupt reading comprehension and block learning. Students need help in learning academic vocabulary and how to process academic language if they are to become independent learners (Snow, 2010). (See Coxhead’s High-Incidence Academic Word List)

Common Core Transition: Academic Language
● Maintaining an impersonal, authoritative stance creates a distanced tone that is often puzzling to adolescent readers and difficult for them to use in their own writing.
● Students must have access to the all-purpose academic vocabulary that is used to talk about knowledge and that they will need in making their own arguments and evaluating others’ arguments (Snow, 2010).

Common Core Transition: Academic Language
Formal Register (Formal Academic Tone): the language found in most academic, business, and serious nonfiction writing. It is characterized by:
● Emotional distance between writer and audience;
● Emotional distance between writer and topic;
● No colloquial language, slang, regionalisms, or dialects;
● Careful attention to the conventions of edited American English; and
● Attention to the logical relationships among words and ideas
(The St. Martin’s Handbook)

Item Types: Key Term
Item Format: The variety of test item structures or types that can be used to measure examinees’ knowledge, skills, and abilities; these include multiple-choice or selected response; open-ended or constructed response; essay; and performance task (Perie, 2010).

Item Types: Key Terms
Selected Response Items: Items or tasks in which students choose from among response or answer choices that are presented to them, e.g., multiple-choice, true/false, matching, cloze (Carr & Harris, 2001).

Item Types: Conventional MC
Three Parts: Stem, Correct Answer, and Distractors (Haladyna, 1999).
● Stem: the stimulus for the response; it should provide a complete idea of the problem to be solved in selecting the right answer. The stem can also be phrased in a partial-sentence format. Whether the stem appears as a question or a partial sentence, it can also present a problem that has several right answers, with one option clearly being the best of the right answers.

Item Types: Conventional MC
Three Parts: Stem, Correct Answer, and Distractors
● Correct Answer: the one and only right answer; it can be a word, phrase, or sentence.
● Distractors: wrong answers. Each distractor must be plausible to test-takers who have not yet learned the content the item is supposed to measure. To those who have learned the content, the distractors are clearly wrong choices. Distractors should resemble the correct choice in grammatical form, style, and length. Subtle or blatant clues that give away the correct choice should be avoided.

Item Types: Key Terms
Constructed Response Item: An exercise for which examinees must create their own responses or products rather than choose a response from an enumerated set. Short-answer items require a few words or a number as an answer, whereas extended-response items require at least a few sentences (Joint Standards, 1999).

Item Types: Key Terms
Constructed Response Items (cont.): Constructed response items or tasks are used to assess processes or procedural knowledge or to probe for students’ understanding of knowledge and information. Constructed response tasks are often contrasted with selected response items or tasks (Carr & Harris, 2001).

Item Types: Key Terms
Performance Assessments: Product- and behavior-based measurements based on settings designed to emulate real-life contexts or conditions in which specific knowledge or skills are actually applied (Joint Standards, 1999).
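Haladyna's three-part anatomy of a conventional MC item (stem, correct answer, distractors) maps naturally onto a small data structure. The Python sketch below is a hypothetical review aid, not a tool from the presentation: it flags an empty stem, missing distractors, duplicate options (which can create more than one right answer), and a key much longer than every distractor, one kind of clue the guidelines warn against. The class name and the 1.5 length ratio are arbitrary assumptions.

```python
from dataclasses import dataclass, field


@dataclass
class MultipleChoiceItem:
    stem: str
    correct_answer: str
    distractors: list[str] = field(default_factory=list)

    def options(self):
        """All answer choices, key first (a real form would shuffle them)."""
        return [self.correct_answer, *self.distractors]

    def review(self, max_length_ratio=1.5):
        """Return a list of possible flaws; an empty list means none were found."""
        issues = []
        if not self.stem.strip():
            issues.append("stem is empty")
        if not self.distractors:
            issues.append("no distractors")
        normalized = [option.strip().lower() for option in self.options()]
        if len(set(normalized)) != len(normalized):
            issues.append("duplicate options: more than one right answer is possible")
        # Length cue: a key far longer than every distractor can give the answer away
        longest = max((len(d) for d in self.distractors), default=0)
        if self.distractors and len(self.correct_answer) > max_length_ratio * longest:
            issues.append("correct answer is much longer than every distractor")
        return issues


if __name__ == "__main__":
    item = MultipleChoiceItem(
        "Which figure of speech compares two unlike things using 'like' or 'as'?",
        "simile",
        ["metaphor", "hyperbole", "idiom"],
    )
    print(item.review())
```

A check like this only catches mechanical flaws; whether each distractor is plausible to students who have not yet learned the content still requires teacher judgment.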
Item Types: Key Terms
Key Components of CR Items:
● Task: a specific item, problem, question, prompt, or assignment
● Response: any kind of performance to be evaluated, including short/extended answer, essay, presentation, & demonstration
● Rubric: scoring criteria used to evaluate responses
● Scorers: people who evaluate responses
(ETS, 2005)

Item Types: Key Terms
Task-Specific Rubric: A set of scoring guidelines specific to a particular task. The criteria are addressed and described in terms of specific content or capacities that can be demonstrated in terms of particular, identified content relevant to the task (Carr & Harris, 2001).

Item Types: Key Terms
High-Inference Constructed Response Items: The format requires expert judgment about the trait being observed, and rubrics are used to evaluate responses. Many abstract qualities are evaluated this way, e.g., writing ability, organization, style, word choice, and complex mathematical problem solving (e.g., proofs, quadratic equations) (Haladyna, 1999).

Item Types: Key Terms
Low-Inference Constructed Response Items: The format simply involves observation, because there is some behavior or answer in mind that is either present or absent (e.g., scaffolded questions, writing conventions, measurements) (Haladyna, 1999).
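When two scorers apply the same rubric to the same constructed responses (double scoring also appears among the multiple-measures examples), a quick agreement check shows whether rater error may be a problem, especially for high-inference items. A minimal Python sketch, assuming integer rubric scores; the function names and the rule of routing score gaps larger than one point to a third reader are hypothetical conventions, not procedures from the presentation.

```python
def agreement(scores_a, scores_b):
    """Exact and exact-or-adjacent (within one point) agreement rates."""
    if not scores_a or len(scores_a) != len(scores_b):
        raise ValueError("need two non-empty score lists of equal length")
    pairs = list(zip(scores_a, scores_b))
    exact = sum(a == b for a, b in pairs) / len(pairs)
    adjacent = sum(abs(a - b) <= 1 for a, b in pairs) / len(pairs)
    return exact, adjacent


def needs_third_read(scores_a, scores_b, max_gap=1):
    """Indices of responses whose two scores differ by more than max_gap points."""
    return [
        i for i, (a, b) in enumerate(zip(scores_a, scores_b)) if abs(a - b) > max_gap
    ]


if __name__ == "__main__":
    # Hypothetical 0-4 rubric scores from two scorers on six responses
    scorer_1 = [4, 3, 2, 4, 1, 3]
    scorer_2 = [4, 2, 2, 2, 1, 3]
    print(agreement(scorer_1, scorer_2), needs_third_read(scorer_1, scorer_2))
```

Low-inference items would be expected to show near-perfect exact agreement; persistent disagreement on a high-inference item is usually a signal to revisit the rubric or scorer training.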
Item Types: High- & Low-Inference CR
Attributes compared (High-Inference vs. Low-Inference):
● Type of Behavior Measured: High-inference: usually abstract, most valued in education. Low-inference: usually concrete.
● Ease of Construction: High-inference: item design is complex, involving a command or question, conditions for performance, and rubrics. Low-inference: item design is not as involved, involving a command or question, conditions for performance, and a simple mechanism.
● Cost of Scoring: High-inference: involves training; expensive. Low-inference: scoring is not as complex, but costs can still be high.

Item Types: High- & Low-Inference CR
● Reliability: High-inference: inter-rater reliability can be a problem. Low-inference: results can be very reliable due to the concrete nature of the items.
● Objectivity: High-inference: can be subjective. Low-inference: more objective.
● Bias (Systematic Error): High-inference: possible threats to validity from over- or underrating. Low-inference: seldom yields biased observations.
(Haladyna, 1999)

Choosing an Item Format (MC v. CR)
● The most efficient and reliable way to measure knowledge is with the MC format.
● The most direct way to measure skill is via performance, but many mental skills can be tested via MC with a high degree of proximity (the statistical relation between CR and MC items measuring an isolated skill). If the skill is critical to the ultimate interpretation, CR is preferable to MC.

Choosing an Item Format (MC v. CR)
● When measuring a fluid ability or intelligence, the complexity of such human traits favors complex (high-inference) CR item formats (Haladyna, 1999).

Choosing an Item Format: Conclusions about Criterion Measurement
Criterion: Conclusion about MC & CR
● Declarative Knowledge: Most MC formats provide the same information as essay, short-answer, or completion formats.
● Critical Thinking: MC formats involving vignettes or scenarios provide a good basis for forms of critical thinking. The MC format has good fidelity to the more realistic open-ended behavior elicited by CR.
(Haladyna, 1999)

Choosing an Item Format: Conclusions about Criterion Measurement
Criterion: Conclusion about MC & CR
● Problem Solving Ability: MC item sets provide a good basis for testing problem solving; however, more research is still needed.
● Creative Thinking Ability: The MC format is limited.
● School Abilities (e.g., writing, reading, & mathematics): Performance has the highest fidelity to the criterion for these school abilities. MC is good for measuring foundational aspects of fluid abilities, such as declarative knowledge or knowledge of skills.
(Haladyna, 1999)

Test Validation: Data Collection & Analysis
● Answer sheet design & scanning procedures (work with BOCES & RIC)
● Utilize item banking software
● Depth of data collection: student demographics, scores, and item-level data, if possible
● Evaluate/revise
● Generate trend data
● Review against other data to verify & audit rigor
● Document & save analyses

Test Validation: Data Collection & Analysis
● Parallel NYSTP test reporting approaches.
● Report general performance on the test; recommendation: report the percentages of students at Levels 1-4 (place 3 cuts into the instrument).
● Run data for all student groups and then run the Title I disaggregation: race/special populations (special education & ELLs).
● If you have multiple Need Resources Capacity districts/schools, run the analysis by category.
● Further analysis: disaggregate by building and class/instructor, if possible.
● For shared exams, try to run a comprehensive analysis of all participating CSDs for general trends and Title I disaggregation (allows for possible benchmarking).

Test Validation: Data Collection & Analysis
Psychometric Analysis: See the Classical Test Analysis in the SED ELA/Math 3-8 Technical Manuals. Use baseline measures to inform your analysis.
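Several of the reporting and classical test analysis steps in this section can be computed from a scored student-by-item matrix. The Python sketch below is a simplified illustration, not the procedure in the SED technical manuals: it assumes dichotomously scored (0/1) items, treats a blank response (`None`) as an omit scored 0, and uses three ascending raw-score cuts to assign Levels 1-4. It computes the percentage of students at each level, item difficulty (p-values), omit rates, a corrected item-total correlation as a discrimination index, and Cronbach's alpha. The Feldt-Raju coefficient and DIF are not covered; all names and data are hypothetical.

```python
from bisect import bisect_right
from statistics import mean, pvariance


def score(responses):
    """Replace omitted responses (None) with 0; return the scored matrix."""
    return [[0 if x is None else x for x in row] for row in responses]


def omit_rates(responses):
    """Proportion of students who left each item blank (a speededness signal)."""
    n = len(responses)
    return [sum(row[j] is None for row in responses) / n for j in range(len(responses[0]))]


def item_difficulty(scored):
    """p-value per item: proportion of students answering correctly."""
    n = len(scored)
    return [sum(row[j] for row in scored) / n for j in range(len(scored[0]))]


def pearson(x, y):
    """Pearson correlation; returns nan when either variable is constant."""
    mx, my = mean(x), mean(y)
    cov = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sx = sum((a - mx) ** 2 for a in x) ** 0.5
    sy = sum((b - my) ** 2 for b in y) ** 0.5
    return float("nan") if sx == 0 or sy == 0 else cov / (sx * sy)


def discrimination(scored):
    """Corrected item-total correlation: each item vs. total on the other items."""
    result = []
    for j in range(len(scored[0])):
        item = [row[j] for row in scored]
        rest = [sum(row) - row[j] for row in scored]
        result.append(pearson(item, rest))
    return result


def cronbach_alpha(scored):
    """alpha = k/(k-1) * (1 - sum of item variances / variance of total scores)."""
    k = len(scored[0])
    item_var = sum(pvariance([row[j] for row in scored]) for j in range(k))
    total_var = pvariance([sum(row) for row in scored])
    return k / (k - 1) * (1 - item_var / total_var)


def level_percentages(totals, cuts):
    """Percent of students at Levels 1-4; a score equal to a cut reaches that level."""
    counts = [0] * (len(cuts) + 1)
    for t in totals:
        counts[bisect_right(cuts, t)] += 1
    return [100 * c / len(totals) for c in counts]


if __name__ == "__main__":
    # Hypothetical responses: 5 students x 3 items; None marks an omitted item
    responses = [[1, 1, 1], [1, 0, 1], [1, 1, 0], [0, 0, None], [1, 0, 0]]
    scored = score(responses)
    totals = [sum(row) for row in scored]
    print(level_percentages(totals, cuts=[1, 2, 3]))
    print(item_difficulty(scored), omit_rates(responses))
    print(discrimination(scored), cronbach_alpha(scored))
```

Running the same functions separately for each student group gives a first pass at the disaggregations described above; real reporting would use far more students than this toy example.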
Analysis includes 4 primary elements:
1) item-level statistical information (item response patterns, item difficulty, and item discrimination);
2) test-level data (raw score statistics: mean & SD) and test reliability measures (Cronbach’s alpha & Feldt-Raju coefficient);
3) test speededness (omit rates); and
4) bias through DIF (differential item functioning).
Use p-values to complete Item Maps for use with administrative and instructional staff.

Sources
American Educational Research Association, American Psychological Association, & National Council on Measurement in Education. (1999). Standards for Educational and Psychological Testing. Washington, DC: American Educational Research Association.
Bailey, Kim, & Jakicic, C. (2012). Common Formative Assessment. Bloomington, IN: Solution Tree Press.
Gong, Brian, & Hill, Richard. (2001). Some Considerations of Multiple Measures in Assessment and School Accountability. CCSSO & US DOE Accountability Conference, March 23-24, 2001, Washington, DC.
Haladyna, Thomas M. (1999). Developing and Validating Multiple-Choice Test Items. Mahwah, NJ: Lawrence Erlbaum Associates.
Henderson-Montero, Dianne, Julian, Marc C., & Yen, Wendy M. (2003). Multiple Perspectives and Multiple Measures: Alternative Design and Analysis Models. Educational Measurement: Issues and Practice, 22(2), 7-12.
Heritage, Margaret, Kim, J., Vendlinski, T., & Herman, J. (2009). From Evidence to Action: A Seamless Process in Formative Assessment? Educational Measurement: Issues and Practice, 28(3), 24-31.
Heritage, Margaret. (2011). Formative Assessment: An Enabler of Learning. Retrieved from www.cse.ucla.edu.
Herman, Joan L., & Choi, Kilchan. (2012). Validation of ELA and Mathematics Assessments: A General Approach. Retrieved from www.cse.ucla.edu/products/states_schools/ValidationELA_FINAL.pdf
Nichols, Paul, Meyers, J., & Burling, K. (2009).
A Framework for Evaluating and Planning Assessments Intended to Improve Student Achievement. Educational Measurement: Issues and Practice, 28(3), 14-23.
Perie, Marianne, Marion, S., & Gong, B. (2009). Moving Toward a Comprehensive Assessment System: A Framework for Considering Interim Assessments. Educational Measurement: Issues and Practice, 28(3), 5-13.

David Abrams
dabrams5@nycap.rr.com