action models

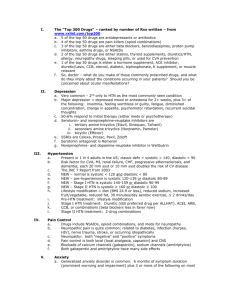

Learning Applicability Conditions in AI Planning from Partial Observations

Hankz Hankui Zhuo (a), Derek Hao Hu (a), Chad Hogg (b), Qiang Yang (a) and Hector Munoz-Avila (b)
a: Hong Kong University of Science & Technology, b: Lehigh University

Motivation

- Modeling applicability conditions is difficult, especially for PDDL and HTN descriptions.
- Some learning algorithms exist, but they rely on complete state information.
- In some domains, state information is often partial and noisy, e.g., batch commands in operating systems; activity recognition; …
- Our work focuses on learning STRIPS models from partial and noisy state information, and then extends this to PDDL and HTN model learning.

Applicability Conditions Learning Hierarchies

- STRIPS model: ARMS system
- PDDL model: LAMP system
- HTN model: HTN-learner

Notations

- A planning domain: $\Sigma = (S, A, \gamma)$, with $\gamma : S \times A \to S$
- A planning problem: $P = (\Sigma, s_0, g)$
- A plan trace: $T = (s_0, a_0, s_1, \ldots, a_n, g)$

The problem of learning applicability conditions is:

- Input: a set of plan traces
- Output: a set of applicability conditions such that the plan traces are able to proceed.
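To make "plan traces are able to proceed" concrete, the following is a minimal Python sketch, not the authors' implementation. It assumes ground STRIPS actions represented as sets of propositions and fully observed states (the setting in the slides is partial and noisy). The names `Action`, `apply_action` and `trace_proceeds` are hypothetical and introduced only for illustration.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    """A ground STRIPS action: preconditions, add list, delete list."""
    name: str
    pre: frozenset
    add: frozenset
    delete: frozenset


def apply_action(state, action):
    """Return the successor state, or None if a precondition is missing."""
    if not action.pre <= state:
        return None
    return (state - action.delete) | action.add


def trace_proceeds(s0, actions, goal):
    """Check that every action is applicable in turn and the goal holds at the end."""
    state = frozenset(s0)
    for action in actions:
        state = apply_action(state, action)
        if state is None:
            return False
    return frozenset(goal) <= state


# Hand-written blocks-world actions (assumed here, not learned models).
pick_up_a = Action(
    "pick-up A",
    pre=frozenset({"clear A", "ontable A", "handempty"}),
    add=frozenset({"holding A"}),
    delete=frozenset({"clear A", "ontable A", "handempty"}),
)
stack_a_b = Action(
    "stack A B",
    pre=frozenset({"holding A", "clear B"}),
    add=frozenset({"on A B", "clear A", "handempty"}),
    delete=frozenset({"holding A", "clear B"}),
)

s0 = {"clear A", "clear B", "ontable A", "ontable B", "handempty"}
goal = {"on A B"}

ok = trace_proceeds(s0, [pick_up_a, stack_a_b], goal)
bad = trace_proceeds(s0, [stack_a_b, pick_up_a], goal)
```

In this sketch a learned set of applicability conditions would be judged by whether `trace_proceeds` holds for the observed traces; with partial observations, only the observed propositions could be checked.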

ARMS: STRIPS Models

- Input: predicates, action schemas, a set of plan traces
- Output: STRIPS action models

ARMS: STRIPS Models (procedure)

- Inputs: types, predicates, action schemas; plan traces
- Build constraints, e.g.:
  - The relation p must be generated by an action prior to p in the plan trace
  - The last action before p should not delete p
  - …
- Solve with weighted MAXSAT: calculate weights using a frequent set mining algorithm, and solve these weighted constraints to finally attain action models
- Output: action models

LAMP: PDDL models

- Input: predicates, action schemas, a set of plan traces
- Output: action models with quantifiers and implications, e.g., …

LAMP: PDDL models (procedure)

- Inputs: types, predicates, action schemas; plan traces (a set of propositions)
- Generate candidate formulas, e.g., "at" is a precondition of "move"
- Learn weights of candidate formulas using MLNs
- Select formulas whose weights are larger than a threshold
- Convert the selected formulas to action models, e.g., …
- Output: action models

HTN-learner: HTN models

- Input: a set of decomposition trees with partial observations, e.g., …
- Output: action models and method preconditions.
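
ARMS and HTN-learner both pass their weighted constraints to a weighted MAXSAT solver. The following Python sketch illustrates the idea on a toy instance by brute-force enumeration. It is an illustration only: the real systems use dedicated solvers, and the variable names and integer weights below are hypothetical (in ARMS the weights come from frequent set mining, as noted in the slides).

```python
from itertools import product


def weighted_maxsat(variables, clauses):
    """Brute-force weighted MAX-SAT.

    clauses: list of (weight, literals), where literals is a list of
    (variable, polarity) pairs; a clause is satisfied if any literal matches.
    Returns the best total weight and one assignment that achieves it.
    """
    best_score, best_assign = -1, None
    for values in product([False, True], repeat=len(variables)):
        assign = dict(zip(variables, values))
        score = sum(
            weight
            for weight, literals in clauses
            if any(assign[var] == polarity for var, polarity in literals)
        )
        if score > best_score:
            best_score, best_assign = score, assign
    return best_score, best_assign


# Hypothetical Boolean variables about the model of "pick-up":
#   add_holding   : (holding ?x) is in the add list
#   del_holding   : (holding ?x) is in the delete list
#   pre_handempty : (handempty) is a precondition
variables = ["add_holding", "del_holding", "pre_handempty"]

clauses = [
    (9, [("add_holding", True)]),       # holding often appears after pick-up
    (10, [("add_holding", False), ("del_holding", False)]),  # not both added and deleted
    (3, [("del_holding", True)]),       # weak, conflicting evidence
    (7, [("pre_handempty", True)]),     # handempty often holds before pick-up
]

score, model = weighted_maxsat(variables, clauses)
```

The variables set to true in `model` would then be read back as preconditions and effects of the action. Enumeration is exponential in the number of variables, so it is only viable for toy instances like this one.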

HTN-learner: HTN models (procedure)

- Inputs:
  - Decomposition trees: tasks' and states' information
  - HTN schemata: names, parameters, and relation information
- Build constraints:
  - State constraints: relations between states and methods/actions
  - Decomposition constraints: relations between methods and actions
  - Action constraints: constraints imposed on an action's preconditions & effects
- Solve constraints: using weighted MAXSAT
- Output: HTN model

Experimental result (ARMS)

    (:action pick-up (?x - block)
     :precondition (and (clear ?x) (ontable ?x) (handempty))
     :effect (and (not (ontable ?x)) (not (clear ?x)) (clear ?x)
                  (not (handempty)) (handempty) (holding ?x)))

    (:action put-down (?x - block)
     :precondition (holding ?x) (clear ?x)
     :effect (and (not (holding ?x)) (clear ?x) (handempty)
                  (not (clear ?x)) (ontable ?x)))

    (:action stack (?x - block ?y - block)
     :precondition (and (holding ?x) (clear ?y) (ontable ?y))
     :effect (and (not (holding ?x)) (not (clear ?y)) (clear ?x)
                  (handempty) (not (ontable ?y)) (on ?x ?y)))

    (:action unstack (?x - block ?y - block)
     :precondition (and (on ?x ?y) (clear ?x) (handempty))
     :effect (and (holding ?x) (clear ?y) (not (clear ?x))
                  (not (handempty)) (on ?x ?y) (not (on ?x ?y))))

Experimental Result (LAMP)

    (:action pick-up (?x - block)
     :precondition (and (clear ?x) (handempty) (holding ?x))
     :effect (and (not (handempty)) (not (clear ?x)) (holding ?x)
                  (when (ontable ?x) (not (ontable ?x)))
                  (forall (?y - block) (when (on ?x ?y) (clear ?y)))
                  (forall (?y - block) (when (on ?x ?y) (holding ?y)))
                  (forall (?y - block) (when (on ?x ?y) (not (on ?x ?y))))))

    (:action put-down (?x - block)
     :precondition (holding ?x) (clear ?x) (handempty)
     :effect (and (not (holding ?x)) (clear ?x) (handempty) (ontable ?x)
                  (forall (?y - block) (when (not (clear ?y)) (ontable ?x)))
                  (forall (?y - block) (when (clear ?y) (on ?x ?y)))))

    (:action stack (?x - block ?y - block)
     :precondition (and (holding ?x) (clear ?y) (handempty))
     :effect (and (not (holding ?x)) (not (clear ?y)) (clear ?x)
                  (handempty) (on ?x ?y)
                  (when (clear ?y) (on ?x ?y))
                  (when (ontable ?y) (on ?x ?y))
                  (when (ontable ?y) (not (clear ?y)))
                  (when (not (clear ?y)) (ontable ?x))))

    (:action unstack (?x - block ?y - block)
     :precondition (and (clear ?x) (holding ?x) (handempty))
     :effect (and (not (handempty)) (not (clear ?x)) (ontable ?y)
                  (clear ?x) (holding ?x)
                  (when (on ?x ?y) (clear ?y))
                  (when (ontable ?y) (clear ?y))
                  (when (ontable ?x) (not (ontable ?x)))
                  (when (on ?x ?y) (not (on ?x ?y)))))

Experimental Result (HTN-learner)

    (:method makestack_from_table_iter
     :parameters (?x - block ?y - block ?z - block)
     :task (stack_from_table ?x - block ?y - block)
     :preconditions (and (ontable ?x) (clear ?z) (holding ?z)
                         (clear ?y) (on ?z ?x))
     :subtasks (and (clean ?x ?z) (pick-up ?x) (stack ?x ?y)))

Other methods …, and action models such as "pick-up", …

Related works

- Action model learning: Benson, 1995; Wang, 1995; Schmill et al., 2000; Pasula et al., 2007; Walsh and Littman, 2008; Yang et al., 2007; …
- Markov Logic Networks (MLNs): Domingos, 2005; Poon and Domingos, 2007; …
- HTN learning: Ilghami et al., 2005; Xu and Muñoz-Avila, 2005; Hogg et al., 2008; Yang et al., 2007; …

Conclusion

- We have given an overview of several novel approaches to learning applicability conditions in AI planning, including STRIPS action models, PDDL models with quantifiers and logical implications, and HTN models including action models and method preconditions.
- Our LAMP algorithm enumerates all possible preconditions and effects according to our specific correctness constraints. In the future, we wish to add some form of domain knowledge to filter out "impossible" candidate formulas beforehand, thereby making the algorithm much more efficient.
- We wish to extend the action model learning algorithm to more elaborate action models that explicitly represent resources and functions.
- We will also apply our algorithms to more challenging tasks in real-world planning applications.

Thank You!