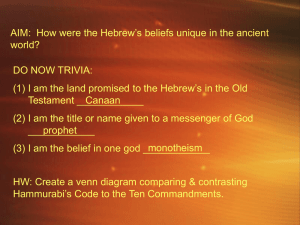

Find an irregular motion trajectory (surveillance application)

advertisement

Threading Local Kernel Functions:

Local versus Holistic Representations

Amnon Shashua

Tamir Hazan

School of Computer Science & Eng.

The Hebrew University

Hebrew University

1

Conventional Signal Representation for

Purposes of Machine Learning:

Given a training set of examples:

( x1 , y1 )

,..., ( xl , yl )

Find a classification function

xi X

yi 1

f ( x ) 1

which is approximately consistent

with the training examples and which “generalizes” well.

The instance space, typically

X Rn ,

is a 1D signal

Goal in this work:

Generalize this concept where the instance space

is a set – for example, a set of vectors with varying cardinality

Hebrew University

2

Examples for Learning over Sets

Conventional: identify a face from a single image

Model images

Novel image

Each picture is represented as a 1D vector.

Hebrew University

3

Examples for Learning over Sets

Example I

A better way: identify a face from a collection of images

Model images

input set

Hebrew University

4

Examples for Learning over Sets

Example II

Find an irregular motion trajectory (surveillance application)

An instance: a video clip of people in motion along a certain period of time.

A possible representation: each trajectory is a column vector of (x,y) positions over

time (rows represent time and columns represent people.

Represented by a matrix with 3 columns and 2n rows

Hebrew University

5

Examples for Learning over Sets

Example II

Find an irregular motion trajectory (surveillance application)

An instance: a video clip of people in motion along a certain period of time.

A possible representation: each trajectory is a column vector of (x,y) positions over

time (rows represent time and columns represent people.

For instance matrix

where

Ai

associate a label

yi 1

yi 1

if

Ai

contains an “irregular” trajectory

yi 1

if

Ai

does not

Hebrew University

6

Examples for Learning over Sets

Example II

Find an irregular motion trajectory (surveillance application)

Given a training set of examples:

( A1 , y1 )

,..., ( Al , yl ) Ai R nk yi 1

Find a classification function

where

f ( A) 1

if

f ( A) 1

A

contains an “irregular” trajectory

Hebrew University

7

Examples for Learning over Sets

Example II

Find an irregular motion trajectory (surveillance application)

Say, we want to use an “optimal margin” classification algorithm like the SVM.

1 T

max i M

2

i

subject to

0 i C ,

y

i

i

0

i

M ij yi y j h( Ai , A j )

where

h( Ai , A j )

is a positive definite kernel

Not enough to find “some” measure of similarity on a pair of matrices

It must also form an inner-product space

h( A, A' ) ( A)T ( A' )

Hebrew University

8

Another Example: will be used as running example

Holistic Representation: measurements form a single vector

• Typical: straightforward representation, advanced inference engine (SVM..)

• When vector is in high dimension - unreasonable demands on the inference engine

• Down-sampling blurs the distinction near the boundary - loss of accuracy

• Sensitivity to occlusions, local and global transformations, non-rigidity of local parts,..

Local Parts Representation:

• Measurements form a collection (ordered or unordered) of vectors

• Typical: advanced (well thought off) data modeling

but straightforward inference engine (nearest neighbor)

• Robust to local changes of appearance and occlusions

• Number of local parts may vary from one instance to the next.

Hebrew University

9

Problem Statement

Local Parts Representation:

• Measurements form a collection (ordered or unordered) of vectors

• Typical: advanced (well thought off) data modeling

but straightforward inference engine (nearest neighbor)

• Robust to local changes of appearance and occlusions

• Number of local parts may vary from one instance to the next.

Goal: Endow advanced algorithms (SVM..) with ability

to work with local representations.

Key: similarity function over instances must be positive definite (one can form

a metric from it).

Hebrew University

10

Problem Statement: similarity over sets of varying cardinality

Given two sets of vectors

A {a1 ,..., ak } R n

B {b1,...,bq } R

find a similarity measure

k q

n

sim(A,B)

T

an inner-product, i.e., sim(A,B) (A) (B)

sim(A,B) is

• sim(A,B) is built over local kernel functions (ai ,b j )

•

• column cardinality (rank) may

vary, n is fixed but k,q are arbitrary

• parameters of sim(A,B) should induce

invariance to order, robustness

under occlusions, degree of interaction between local parts, and local transformations

Hebrew University

11

Existing Approaches

• Fit a distribution to the set of local parts (column vectors) followed by a match

over distributions like KL-divergence.

Shakhnarovich, Fisher, Darrell ECCV02

Kondor, Jebara ICML 03

Vasconcelos, Ho, Moreno ECCV04

• Pros: number of parts can vary

• Cons: variation could be complex and mot likely to fit a known distribution,

sample size (number of parts) could be not sufficiently large for a fit.

• Direct (algebraic)

Wolf, Shashua JMLR03 - set cardinality must be equal.

Wallraven, Caputo, Graf ICCV03 - heuristic, not positive definite

Hebrew University

12

Inner-Products over Matrices: Linear Family

Generally, the inner-product

A R nk

a R nk

A,B aT Sˆb where

B R nq

nq

b R

vector representations via column-wise concatenation

n 2 n 2

ˆS

is the upper-left nk nq sub-matrix of some positive definite S R

by representing

We will begin

A,B a Sˆb

T

in a more convenient form

allowing explicit access to the columns of A,B

Hebrew University

13

Inner-Products over Matrices: Linear Family

We will begin by representing A,B a Sˆb

allowing explicit access to the columns of A,B

T

Assume for now linear local kernels:

Any

SR

n 2 n 2

in a more convenient form

(ai,b j ) aTi b j

can be represented as a sum over tensor products:

p

S Gr Fr

r1

Where

Gr ,Fr

are

nn

matrices.

Hebrew University

14

Tensor Product Space

vector space

is the

dimensional dual space of the space of all multilinear functions

of pairs of vectors from ,

is a basis of

is a basis (reverse lexicographical order) of

If G,F are linear maps from V onto itself, then

onto itself.

f11G ...

.

.

G F

.

.

f n1G ...

is a linear map from

f1n G

.

.

f nnG

Hebrew University

15

Inner-Products over Matrices: Linear Family

We will begin by representing A,B a Sˆb

allowing explicit access to the columns of A,B

T

in a more convenient form

p

Any

S Rn

2

n

2

can be represented as a sum over tensor products:

n n matrices.

Where Gr ,Fr are

S Gr Fr

r1

Hebrew University

16

Inner-Products over Matrices: Linear Family

Where

is of size

is the

with the (i,j) entry equal to

upper-left sub-matrix of

Hebrew University

17

Inner-Products over Matrices: Linear Family

Where

is of size

is the

with the (i,j) entry equal to

upper-left sub-matrix of

p

What are the necessary conditions on

such that S Gr Fr is pos. def.?

r1

This is difficult (NP-hard, Gurvits 2003).

Sufficient conditions: If both

are p.d. then so is

p

S Gr Fr

r1

Another condition:

must “distribute with the local kernel”

Hebrew University

18

Inner-Products over Matrices: Linear Family

Where

is of size

is the

with the (i,j) entry equal to

upper-left sub-matrix of

The role of

is to perform local coordinate change (mix the entries of the columns)

We will assume that

and as a result:

Where

are part of the upper-left block with appropriate dimensions

of a fixed p.d. matrix

Hebrew University

19

Inner-Products over Matrices: Linear Family

Where

are part of the upper-left block with appropriate dimensions

of a fixed p.d. matrix

The choices of F will determined the type of invariances one could obtain:

Examples:

parts are assumed aligned, take a weighted sum of local matches.

permutation invariance, take a weighted sum of all matches

decaying interaction among local parts

Hebrew University

20

Lifting matrices to higher dimensions:

The non-linear family sim(A,B)

forms a linear super-position of local kernels

Where

maps matrices onto higher dimensional

matrices (possibly in a non-linear manner)

will form non-linear super-positions of the local kernels

Hebrew University

21

Lifting matrices to higher dimensions:

The non-linear family sim(A,B)

There are two algebraic generic ways to “lift” the matrices: by applying symmetric

operators on the tensor product space:

the l-fold alternating tensor

the l-fold symmetric tensor

These liftings introduce the determinant and permanent operations on

blocks of

(whose entries are

)

Hebrew University

22

The Alternating Tensor

The l-fold alternating tensor is a multilinear map of

where

Example:

If

forms a basis of

Where

of

, then the

elements

form a basis of the l-fold alternating tensor product

, denoted by

Hebrew University

23

The Alternating Tensor: example

Example: n=3, l=2

In

Let

we have 3 such elements:

be the standard basis of

Hebrew University

24

The Alternating Tensor: example

Hebrew University

25

The Alternating Tensor: example

Hebrew University

26

The Alternating Tensor: example

Note that the basic units are the 2x2 minors of A

Hebrew University

27

The l-fold Alternating Tensor

is a linear map

is a linear map

The matrix representation of

The

is called the “l’th compound matrix”

entry has the value

has dimensions

whose entries are equal to the

Hebrew University

minors of A

28

The l-fold Alternating Tensor

has dimensions

whose entries are equal to the

minors of A

Finally, from the identity

we conclude

which is known as the Binet-Cauchy theorem.

Hebrew University

29

The l-fold Alternating Kernel

Recall,

Where

is of size

is the

with the (i,j) entry equal to

upper-left sub-matrix of

In particular, when

Define:

where

with dimension

with dimension

upper-left block of

Hebrew University

with dimension

30

The l-fold Alternating Kernel

In particular, when

is a linear weighted combination of the

Since the entries of

are

minors of

we get that:

is a non-linear combination of the local

Hebrew University

31

The l-fold Alternating Kernel: Geometric Interpretation

Let

The “QR” factorization of A (and B)

can be computed using only the elements

(Wolf & Shashua, JMLR’03)

Therefore, without loss of generality we can assume that the input matrices

fed into

are unitary.

Hebrew University

32

The l-fold Alternating Kernel: Geometric Interpretation

Therefore, without loss of generality we can assume that the input matrices

fed into

are unitary.

The determinant of an

block of

is equal to the product of the cosine

principal angles between the corresponding pair of l-dimensional subspaces

spanned by:

Reminder:

cos( j )

(recall that

max

ucol(A ),v col(B )

uT v

u T u vT v 1

)

Hebrew University

u T ui vT vi 0

i 1,..., j 1

33

The l-fold Alternating Kernel: The role of F

The choices of F will determined the type of invariances one could obtain:

Examples:

sum of product cos. prin. ang. between matching subspaces

and

only a subset of matching subspaces are considered

(say, a sliding window arrangement)

sum of product cos. prin. ang. between all pairs of subspaces

and

Hebrew University

34

The Symmetric Tensor and Kernel

The points in the l-fold symmetric tensor space

The

points

are defined:

where

form a basis for

The analogue of

is the “l’th power matrix”

whose

entry has the value

is

whose entries are equal to the

Hebrew University

permanents of A

35

Compound Kernels: Example

Define:

and obtain the “square l-fold alternating kernel”:

(with an appropriate sparse F).

Hebrew University

36

Pedestrian Detection using Local Parts

Nine local regions, each providing 22 measurements

Hebrew University

37

Holistic Down-sampled Images

Raw

linear

Poly d=2 Poly d=6 RBF

20x20

78%

83%

84%

86%

32x32

78%

84%

85%

82%

72%

77%

76.5%

occlusion 73.5%

* The % is the ratio between the sum of true positives and true negatives and the total number of test examples

* SVM with 4000 training and 4000 test sets were used

Hebrew University

38

Sim(A,B) Kernels

Local

kernel

linear

90.8%

85%

90.6%

88%

RBF

91.2%

85%

90.4%

90%

* The % is the ratio between the sum of true positives and true negatives and the total number of test examples

* SVM with 4000 training and 4000 test sets were used

Hebrew University

39

Sim(A,B) Kernels with Occlusions

Local kernel

linear

62%

87%

RBF

83%

88%

* The % is the ratio between the sum of true positives and true negatives and the total number of test examples

* SVM with 4000 training and 4000 test sets were used

Hebrew University

40

END

Hebrew University

41