Presentation

advertisement

HPC Tutorial

Manoj Nambiar,

Performance Engineering Innovation Labs

Parallelization and Optimization CoE

www.cmgindia.org

Computer Measurement Group, India

0

A Common Expectation

Our ERP application has

slowed down. All the

departments are

complaining.

Let’s use HPC

Computer Measurement Group, India

-1-

1

Agenda

• Part – I

– A sample domain problem

– Hardware & Software

• Part – II Performance Optimization Case Studies

– Online Risk Management

– Lattice Boltzmann implementation

– OpenFOAM - CFD application (if time permits)

Computer Measurement Group, India

2

Designing an Airplane for performance ……

Thank You

Problem: Calculate Total Lift and Drag on the plane for a wind-speed of 150 m/s

Computer Measurement Group, India

3

Performance Assurance – Airplanes vs Software

Assurance

Approach

Testing

Airplane

Wind Tunnel Testing

Software

Load Testing with virtual

users

Simulation CFD Simulation

Discrete Event Simulation

Analytical

MVA, BCMP, M/M/k etc

None

Cost

Accuracy

Computer Measurement Group, India

4

CFD Example – Problem Decomposition

Methodology

1.Partition volume into cells

2. For a number of time steps

Thank You

2.a For each cell

2.a.1 calculate velocities

2.a.2 calculate pressure

2.a.3 calculate turbulence

All cells have to be in equilibrium

with each other.

Becomes a large AX=b problem.

This problem is partitioned into groups of cells which are assigned to CPUs

Each CPU can compute in parallel but the also have to communicate to each other

Computer Measurement Group, India

5

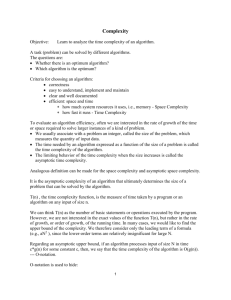

A serial algorith for Ax = B

Compute Complexity – O(n2)

Computer Measurement Group, India

6

What kind of H/W and S/W do we need

•

Take an example Ax=b solver

– Order of computational complexity is n2

– Where n is the number of cells in which the domain is divided

•

Higher the number of cells – Higher the accuracy

•

Typical number of cells

– In 10’s of millions

•

Very prohibitive to run sequentially

•

Increase in memory requirements will need proportionally higher number of servers

Parallel implementation is needed on a large cluster or servers

Computer Measurement Group, India

7

Software

• Lets look at the software aspect first

– Then we look at the hardware

Computer Measurement Group, India

8

Work Load Balancing

•

After solving Ax=B

– Some elements of x need to be exchanged with neighbor groups

– Every group (process) has to send and receive values with its neighbors

• For the next Guass Seidel iteration

Also need to check that all values of x have converged

Should this using TCP/IP or 3 tier web/app/database architecture?

Computer Measurement Group, India

9

Why TCP/IP wont suffice

•

Philosophically – NO

– These parallel programs are peers

– No one process is client or server

•

Technically – NO

– There can be as much as 10000 parallel processes

• Need to keep a directory of public server IP and port for each process

– TCP is a stream oriented protocol

• Applications need to pass messages

•

Changing the size of the cluster is tedious

Computer Measurement Group, India

10

Why 3 tier application will not suffice?

•

3 tier applications are meant to serve end user transactions

– This application is not transactional

•

Database is not needed for these applications

– No need to first persist and then read data

• This kind of I/O will impact performance significantly

• Better to store data in RAM

– ACID properties of the database are not required

• Applications are not transactional in nature

– SQL is a major overhead considering data velocity requirements

•

Managed Frameworks like J2EE, .NET not optimal for such requirements

Computer Measurement Group, India

11

MPI to the rescue

•

A message oriented interface

•

Has an API sparring 300 functions

– Support complex messaging requirements

•

A very simple interface for parallel programming

•

Also portable regardless of the size of the deployment cluster

Computer Measurement Group, India

12

MPI_Functions

• MPI_Send

• MPI_Recv

• MPI_Wait

• MPI_Reduce

–

–

–

–

SUM

MIN

MAX

….

Computer Measurement Group, India

13

Not so intuitive MPI calls

• MPI_Allgather(v)

• MPI_Scatter(v)

• MPI_Gather(v)

• MPI_All_to_All(v)

Computer Measurement Group, India

14

Not so intuitive MPI calls

• MPI_Allgather(v)

• MPI_Scatter(v)

• MPI_Gather(v)

• MPI_All_to_All(v)

Computer Measurement Group, India

15

Not so intuitive MPI calls

• MPI_Allgather(v)

• MPI_Scatter(v)

• MPI_Gather(v)

• MPI_All_to_All(v)

Computer Measurement Group, India

16

Not so intuitive MPI calls

• MPI_Allgather(v)

• MPI_Scatter(v)

• MPI_Gather(v)

• MPI_All_to_All(v)

Computer Measurement Group, India

17

Sample MPI program – parallel addition of a large array

Computer Measurement Group, India

18

MPI – Send, Recv and Wait

If you have some computation to be done

while waiting to receive a message from a peer

This is the place to do it

Computer Measurement Group, India

19

Hardware

• Lets look at the Hardware

–

–

–

–

Clusters

Servers

Coprocessors

Parallel File System

Computer Measurement Group, India

20

HPC Cluster

Not very different from regular data center clusters

Computer Measurement Group, India

21

Now lets look inside a server

NUMA

Coprocessor’s

go here

Computer Measurement Group, India

22

Parallelism in Hardware

• Multi-server/Multi-node

• Multi-sockets

• Multi-core

Mult-socket server board

• Co-processors

– Many Core

– GPU

• Vector Processing

Multi-core CPU

Computer Measurement Group, India

23

Coprocessor - GPU

PCIE Card

•

•

•

SM – Streaming Multi-processor

Device RAM – high speed GDDR5 RAM

Extreme multi-threading – thousands of threads

Computer Measurement Group, India

24

Inside a GPU streaming multiprocessor (SM)

•

An SM can be compared to a CPU core

•

A GPU core is essentially an ALU

•

All cores execute the same instruction at a time

– What happens to “if-then-else”?

•

A warp is software equivalent of a CPU thread.

– Scheduled independently

– A warp instruction executed by all cores at a time

•

Many warps can be scheduled on an SM

– Just like many threads on a CPU

– When 1 warp is scheduled to run other warps are moving data

•

A collection of warps concurrently running on an SM make a block

– Conversely an SM can run only one block at a time

Efficiency is achieved when there is one warp in 1 stage

of the execution pipeline

Computer Measurement Group, India

25

How S/W runs on the GPU

1.

A CPU process/thread initiates data transfer from CPU memory to GPU memory

2.

The CPU invokes a function (kernel) that runs on the GPU

– CPU specifies the number of blocks and blocks per thread

– Each block is scheduled on one SM

– After all blocks complete execution CPU is woken

This is known as offload mode of execution

3.

CPU fetches the kernel output from the GPU memory

Computer Measurement Group, India

26

Co-Processor – Many Integrated Core (MiC)

•

•

Cores are same as Intel Pentium CPU’s

– With vector processing instructions

L2 Level cache is accessible by all the cores

Execution Modes

• Native

• Offload

• Symmetric

Computer Measurement Group, India

27

What is vector processing?

for(i=1; i< 8; i++) c[i] = a[i]+b[i];

A

B

ALU in an ordinary CPU core

ADD C, A, B

1 arithmetic operation

per instruction cycle

C

Vector registers

A1 A2 A3 A4 A5 A6 A7 A8

VADD C, A, B

ALU in an CPU core with vector processing

B1 B2 B3 B4 B5 B6 B7 B8

8 arithmetic operations

per instruction cycle

C1 C2 C3 C4 C5 C6 C7 C8

Computer Measurement Group, India

28

HPC Networks – Bandwidth and Latency

Computer Measurement Group, India

29

Hierarchical network

End of row switch

Top of rack

•

The most intuitive design of a network

– Not uncommon in data centers

•

What happens when the 1st 8 nodes need to communicate to the next 8?

– Remember that all links have the same bandwidth

Computer Measurement Group, India

30

Clos Network

•

Can be likened to a replicated hierarchical network

– All nodes can talk to all other nodes

– Dynamic routing capability essential in the switches

Computer Measurement Group, India

31

Common HPC Network Technology - Infiniband

•

Technology used for building high throughput low latency network

– Competes with Ethernet

•

To use Infiniband

– You need a separate NiC on the server

– An Infiniband switch

– An Infiniband Cable

•

Messaging supported in Infiniband

– a direct memory access read from or, write to, a remote node

• (RDMA).

– a channel send or receive

– a transaction-based operation (that can be reversed)

– a multicast transmission.

– an atomic operation

Computer Measurement Group, India

32

Parallel File Systems - Lustre

•

•

Parallel file systems give the same file system interface to legacy applications

Can be built out of commodity hardware and storage.

Computer Measurement Group, India

33

HPC Applications - Modeling and Simulation

• Aerodynamics

Prototype

– Vehicular design

• Energy and

Resources

Simulation

– Seismic Analysis

– Geo-Physics

– Mining

Physical Experimentation

Lab Verification

Final Design

• Molecular Dynamics

– Drug Discovery

– Structural Biology

• Weather Forecasting

HPC or

no HPC?

Accuracy

Speed

Power

Cost

From Natural Science to Software

Computer Measurement Group, India

34

Relatively Newer & Upcoming Applications

•

•

•

Finance

– Risk Computations

– Options Pricing

– Fraud Detection

– Low Latency trading

Image Processing

– Medical Imaging

– Image Analysis

– Enhancement and Restoration

Video Analytics

– Face Detection

– Surveillance

Internet of Things

– Smart City

– Smart Water

– eHealth

Bio-Informatics

– Genomics

Knowledge of core algorithms is key

Computer Measurement Group, India

35

Technology Trends Impacting Performance &

Availability

• Multi-Core, Speeds not increasing

• Memory Evolution

– Lower memory per core

– Relatively Low Memory Bandwidth

– Deep Cache & Memory Hierarchies

• Heterogeneous Computing

– Coprocessors.

• Vector Processing

Temperature fluctuation induced

slowdowns

Memory error induced

slowdowns

Network communication errors

Large sized cluster

– Increased failure probability

Algorithms need to be re-engineered to make best use of trends

Computer Measurement Group, India

36

Knowing Performance Bounds

• Amdahl’s Law

– Maximum speed up achievable sp = (s + (1-s)/p)-1

– Where s is the fraction of code that has to run sequentially

Also Important to take problem size into account

when estimating speedups

Compute/Communication ratio is key.

Typically – Higher the problem size

- higher the the ratio

- Better the speed up

Computer Measurement Group, India

37

Quick Hardware Recap

FLOPS Bound

Bandwidth Bound

What about server clusters?

Computer Measurement Group, India

38

FLOPS and Bandwidth dependencies

• FLOPS – Floating operations per second

–

–

–

–

–

Frequency

No of CPU sockets

No of cores/per socket

No of Hyper-threads per core

No of vector units per core / hyperthead

• Bandwidths (Bytes/sec)

– Level in the hierarchy – Registers, L1, L2, L3, DRAM

– Serial / Parallel

– Memory attached to same CPU socket or another CPU

Why are we not talking about memory latencies?

Computer Measurement Group, India

39

Know your performance bounds

GPU

•

•

Above information can also be obtained from product data sheets

What do you gain by knowing performance bounds?

Computer Measurement Group, India

40

Other ways to gauge performance

• CPU speed

– SPEC – integer and floating point benchmark

• Memory Bandwidth

– Streams benchmark

Computer Measurement Group, India

41

Basic Problem

• Consider the following code

– double a[N], b[N], c[N], d[N];

– int i;

– for (i = 0; i < N-1; i++) a[i] = b[i] + c[i]*d[i];

• If N = 1012

• And the code has to complete in 1 second?

– How many Xeon E5-2670 CPU sockets would you need?

– Is this memory bound or CPU bound?

Computer Measurement Group, India

42

General guiding principles for performance optimization

•

Minimize communication requirements between parallel processes / threads

•

If communication is essential then

– Hide communication delays by overlapping compute and communication

•

Maximize data locality

– Helps caching

– Good NUMA page placement

•

Do not forget to use compiler optimization flags

•

Implement weighted decomposition of workload

– In a cluster with heterogeneous compute capabilities

Let your profiling results guide you on the next steps

Computer Measurement Group, India

43

Optimization Guidelines for GPU platforms

•

Minimize use of “if-then-else” or any other branching

– they cause divergence

•

Tune the number of threads per block

– Too many will exhaust caches and registers in the SM

– Too few will underutilize GPU capacity

•

Use device memory for constants

•

Use shared memory for frequently accessed data

•

Use sequential memory access instead of strided

•

Coalesce memory accesses

•

Use streams to overlap compute and communications

Computer Measurement Group, India

44

Steps in designing parallel programs

Data Structure

• Partitioning

Primitive Tasks

• Communication

• Agglomeration

• Mapping

Computer Measurement Group, India

45

Steps in designing parallel programs

• Partitioning

• Communication

• Combine sender and receiver

• Eliminate communication

• Increase Locality

• Agglomeration

• Combine senders and receivers

• Reduces number of message transmissions

• Mapping

Computer Measurement Group, India

46

Steps in designing parallel programs

• Partitioning

• Communication

• Agglomeration

NODE 1 NODE 2 NODE 3

• Mapping

Computer Measurement Group, India

47

Agenda

• Part – I

– A sample domain problem

– Hardware & Software

• Part – II Performance Optimization Case Studies

– Online Risk Management

– Lattice Boltzmann implementation

– OpenFOAM - CFD application Xeon Phi (if time permits)

Computer Measurement Group, India

48

Multi-core Performance Enhancement: Case Study

Computer Measurement Group, India

49

Background

• Risk Management in a commodities exchange

• Risk computed post trade

– Clearing and settlement – T+2

• Risk details updated on screen

– Alerting is controlled by human operators

Computer Measurement Group, India

50

Commodities Exchange: Online Risk Management

Trading

System

Clearing Member

Client1

ClientK

Online

Trades

Risk

Management

System

Alerts

Prevent

Client/Clearing

Member from

Trading

Collateral

Falls

Short

Initial Deposit of Collateral

Long/Short Positions on Contracts

Contract/Commodity Price Changes

Risk Parameters Change during day

Computer Measurement Group, India

51

Will standard architecture on commodity servers suffice?

Application Server

2 CPU

Database Server

2 CPU

Risk Management System?

Computer Measurement Group, India

52

Commodities Exchange: Online Risk Management

Computations:

• Position Monitoring, Mark to Market, P&L, Open Interest,

Exposure Margins

• SPAN: Initial Margin (Scanning Risk), Inter-Commodity

Spread Charge, Inter-Month Spread Charge, Short Option

Margin, Net Option Value

• Collateral Management

Functionality is complex

Let’s look at a simpler problem that reflects the same computational

challenge & come back later

Computer Measurement Group, India

53

Workload Requirements

• Trades/Day : 10 Million

• Peak Trades/Sec : 300

• Traders : 1 Million

Computer Measurement Group, India

54

P&L Computation

Trader A

Time

Txn

Stock

Quantity

Price

t1

BUY

Cisco

100

950

Total

Amount

95,000

t2

BUY

IBM

200

30

6000

t3

SELL

Cisco

40

975

39,000

t4

SELL

IBM

200

31

6200

Profit(Cisco, t4) = -95000 + 39000 + (100-40)*970

= -56000 + 58200 = 2200

Current

Cisco

price is

970

Biggest culprit

In general Profit on a given stock S at time t:

= sum of txn values up to time t +

(netpositions on stock at time t) * price of stock at time t

Buy txns take –ve value, sell +ve value

Computer Measurement Group, India

55

P&L Computation

int

int

int

int

profit[MAXTRADERS]; // array of trader profits

netpositions[MAXTRADERS][MAXSTOCKS]; // net positions per stock

sumtxnvalue[MAXTRADERS][MAXSTOCKS]; // net transaction values

profitperstock[MAXTRADERS][MAXSTOCKS];

loop forever

t = get_next_trade();

sumtxnvalue[t.buyer][t.stock] − = t.quantity * t.price;

sumtxnvalue[t.seller][t.stock] + = t.quantity * t.price;

netpositions[t.buyer][t.stock] + = t.quantity;

netpositions[t.seller][t.stock] − = t.quantity;

loop for all traders r

profit[r] = profit[r] – profitperstock[r][t.stock];

profitperstock[r][t.stock] = sumtxnvalue[r][t.stock] +

netpositions[r][t.stock] * t.price;

profit[r] = profit[r]+ profitperstock[r][t.stock];

end loop

end loop

Computer Measurement Group, India

56

P&L Computational Analysis

• Profit has to be kept updated for every price change

– For all traders

• Inner Loop: 8 Computations

– 4 Computations (+ + * +)

– Loop Counter

– 3 Assignments

• Actual Computational Complexity

– 20 times as complex as displayed algorithm

• Number of traders: 1 million

Computer Measurement Group, India

57

P&L Computational Analysis

• SLA Expectation: 300 trades / sec

• Computations/trade

– 8 computations x 1 million traders x 20 = 160 million

• Computations/sec = 160 million x 300 trades/sec

– 48 billion computations/sec!

• Out of reach of contemporary servers that time!

Can we deliver

within

an

IT

budget?

Computer Measurement Group, India

58

Test Environment

• Server

• 8 Xeon 5560 cores

• 2.8 GHz

• 8 GB RAM

• OS: Centos 5.3

• Linux kernel 2.6.18

• Programming Language : C

• Compilers: gcc and icc

Computer Measurement Group, India

59

Test Inputs

Number of Trades

1 Million

Number of Traders

Number of Stocks

Trade File Size

100,000

100

20 MB

Trade Distribution

Trades %

20%

Stock %

30%

20 %

60%

60%

10%

Computer Measurement Group, India

60

P&L Computation: Baselining

Trades/sec

Baseline Performance gcc

190

gcc –O3

323

Overall Gain

Computer Measurement Group, India

70%

61

P&L Computation: Transpose

int

int

int

int

profit[MAXTRADERS]; // array of trader profits

netpositions[MAXTRADERS][MAXSTOCKS]; // net positions per stock

sumtxnvalue[MAXTRADERS][MAXSTOCKS]; // net transaction values

profitperstock[MAXTRADERS][MAXSTOCKS];

loop forever

t = get_next_trade();

Trader

sumtxnvalue[t.buyer][t.stock] − = t.quantity * t.price;

r1

sumtxnvalue[t.seller][t.stock] + = t.quantity * t.price;

netpositions[t.buyer][t.stock] + = t.quantity;

Stock

s1

Trade t

Stock

si

r2

r3

netpositions[t.seller][t.stock] − = t.quantity;

Very Poor Caching

loop for all traders r

profit[r] = profit[r] – profitperstock[r][t.stock];

profitperstock[r][t.stock] = sumtxnvalue[r][t.stock] +

netpositions[r][t.stock] * t.price;

profit[r] = profit[r]+ profitperstock[r][t.stock];

end loop

end loop

Computer Measurement Group, India

62

62

Matrix Layout

Trader

Stock

s1

.

Stock

si

r1

r2

r3

Memory Layout

Trader r1

S S

1 2

Trader r2

S S S

i 1 2

Trader r3

S S S

i 1 2

Trader r4

S S S

i 1 2

Computer Measurement Group, India

S

i

63

Matrix Layout - Optimized

Stock

Trader

r1

Trader

r2

Trader

r3

S1

S2

S3

Optimized Memory Layout

Stock S1

r1r2

Stock S2

rn r1r2

Stock S3

rnr1r2

Stock S4

rnr1r2

Computer Measurement Group, India

rn

64

P&L Computation: Transpose

int

int

int

int

profit[MAXTRADERS]; // array of trader profits

netpositions[MAXSTOCKS][MAXTRADERS]; // net positions per stock

sumtxnvalue[MAXSTOCKS][MAXTRADERS]; // net transaction values

profitperstock[MAXSTOCKS][MAXTRADERS];

loop forever

Stock

t = get_next_trade();

sumtxnvalue[t.buyer][t.stock] − = t.quantity * t.price;

Trade

t s1

sumtxnvalue[t.seller][t.stock] + = t.quantity

* t.price;

Trader

r1

Trader

ri

netpositions[t.buyer][t.stock] + = t.quantity;

Good Caching si

netpositions[t.seller][t.stock] −Very

= t.quantity;

loop for all traders r

profit[r] = profit[r] – profitperstock[t.stock][r];

profitperstock[t.stock][r] = sumtxnvalue[t.stock][r] +

netpositions[t.stock][r] * t.price;

profit[r] = profit[r]+ profitperstock[t.stock][r];

end loop

end loop

Computer Measurement Group, India

65

P&L Computation: Transpose

Trades/

sec

Overall Gain

Baseline

Performance gcc

190

gcc –O3

323

1.7X

4750

25X

Transpose of

Trader/Stock

Immediate Gain

14.7X

Intel Compiler

icc –fast (not –O3)

Trades/

sec

Overall Gain

Immediate Gain

6850

36X

37%

Computer Measurement Group, India

66

P&L Computation: Use of Partial Sums

int

int

int

int

profit[MAXTRADERS]; // array of trader profits

netpositions [MAXSTOCKS] [MAXTRADERS]; // net positions per stock

sumtxnvalue [MAXSTOCKS] [MAXTRADERS]; // net transaction values

profitperstock[MAXSTOCKS] [MAXTRADERS];

loop forever

This can be

maintained

sumtxnvalue[t.buyer][t.stock] − = t.quantity * t.price;

cumulatively for the

trader. Need not be

sumtxnvalue[t.seller][t.stock] + = t.quantity * t.price;

per stock.

netpositions[t.buyer][t.stock] + = t.quantity;

t = get_next_trade();

netpositions[t.seller][t.stock] − = t.quantity;

loop for all traders r

profit[r] = profit[r] – profitperstock[r][t.stock];

profitperstock[r][t.stock] = sumtxnvalue[r][t.stock] +

netpositions[r][t.stock] * t.price;

profit[r] = profit[r]+ profitperstock[r][t.stock];

end loop

end loop

Computer Measurement Group, India

67

P&L Computation: Use of Partial sums

int

int

int

int

int

profit[MAXTRADERS]; // array of trader profits

netpositions [MAXSTOCKS] [MAXTRADERS]; // net positions per stock

sumtxnvalue [MAXTRADERS]; // net transaction values

sumposvalue [MAXTRADERS]; // sum of netpositions * stock price

ltp[MAXSTOCKS]; // latest stock price (last traded price)

Monetary

loop forever

Value of all

t = get_next_trade();

stock

sumtxnvalue[t.buyer] − = t.quantity * t.price;

positions

time of trade

sumtxnvalue[t.seller] + = t.quantity * t.price;

netpositions[t.buyer][t.stock] + = t.quantity;

netpositions[t.seller][t.stock] − = t.quantity;

sumposvalue[t.buyer] + = t.quantity * ltp[t.stock];

sumposvalue[t.seller] − = t.quantity * ltp[t.stock];

loop for all traders r

sumposvalue[r] + = netpositions[t.stock] [r]* (t.price − ltp[t.stock]);

profit[r] = sumtxnvalue[r] + sumposvalue[r];

Trades/

Overall Gain

end loop

sec

Computer Measurement Group, India

ltp[t.stock] =Use

t.price;

of Partial Sum

9650

50X

Immediate

Gain

68

68

41%

P&L Computation: Skip Zero Values

Majority of the

values of this

matrix are 0,

thanks to hot

stocks

int netpositions [MAXSTOCKS] [MAXTRADERS];

loop for all traders r

if (netpositions[t.stock][r] != 0)

sumposvalue[r] + = netpositions[t.stock][r] * (t.price − ltp[t.stock]);

profit[r] = sumtxnvalue[r] + sumposvalue[r];

endif

end loop

Skip Zero Values

Trades/

sec

Overall Gain

Immediate

Gain

10800

56X

12%

Computer Measurement Group, India

69

P&L Computation: Cold Stocks

• There is a large percentage of cold stocks

– Those which are held by very few traders

• In the last optimization an “if” check was added to avoid

computation

– If the trader does not hold the traded stock

• Is there any benefit if the trader record is not accessed at all?

– We are computing for 100,000 traders

Computer Measurement Group, India

70

P&L Computation: Sparse Matrix Representation

Flags Table – This

Stock owned by who?

Stock

A

B

C

D

E

s1

1

1

0

0

0

s2

1

1

1

0

0

s3

1

0

0

1

1

Updated in outer loop

Traversed in outer loop

Stock

Count

T0

T1

T2

.

.

s1

2

A

B

0

0

0

s2

3

A

C

B

0

0

s3

3

A

E

D

0

0

Traders Indexes/stock

Computer Measurement Group, India

71

P&L Computation: Sparse Matrix Representation

int

int

int

int

int

profit[MAXTRADERS]; // array of trader profits

netpositions [MAXSTOCKS] [MAXTRADERS]; // net positions per stock

sumtxnvalue [MAXTRADERS]; // net transaction values

sumposvalue [MAXTRADERS]; // sum of netpositions * stock price

ltp[MAXSTOCKS]; // latest stock price (last traded price)

loop forever

t = get_next_trade();

sumtxnvalue[t.buyer] − = t.quantity * t.price;

sumtxnvalue[t.seller]

+ = t.quantity * t.price;

netpositions[t.buyer][t.stock] + = t.quantity;

netpositions[t.seller][t.stock] − = t.quantity;

sumposvalue[t.buyer] + = t.quantity * ltp[t.stock];

sumposvalue[t.seller] − = t.quantity * ltp[t.stock];

loop for all traders r

Traverse list

of trader

count for

stock less

than

threshold

sumposvalue[r] + = netpositions[t.stock]

[r]* (t.price

Trades/

Overall

Gain − ltp[t.stock]);

Immediate

profit[r] = sumtxnvalue[r] + sumposvalue[r];

sec

Gain

end loop

Computer Measurement

72

Sparse Matrix

36000 Group, India

189X

3.24X

72

P&L Computation: Clustering

int profit[MAXTRADERS];

int sumtxnvalue

[MAXTRADERS];

int sumposvalue

[MAXTRADERS];

struct

{

int

int

int

}

Poor caching for sparse matrix lists

TraderRecord

profit;

sumtxnvalue

sumposvalue;

Clustering

Better caching performance!

Trades/

sec

Overall Gain

Immediate

Gain

70000

368X

94%

Computer Measurement Group, India

73

P&L Computation: Precompute Price Difference

int netpositions [MAXSTOCKS] [MAXTRADERS];

Loop Invariant:

Move to outside

the loop

loop for all traders r

if (netpositions[t.stock][r] != 0)

sumposvalue[r] + = netpositions[t.stock][r] * (t.price − ltp[t.stock]);

profit[r] = sumtxnvalue[r] + sumposvalue[r];

end loop

Trades/sec

Clustering

75000

Overall Gain Immediate Gain

394X

Computer Measurement Group, India

7%

74

P&L Computation: Loop Unrolling

#pragma unroll

loop for all traders r

if (netpositions[t.stock][r] != 0)

sumposvalue[r] + = netpositions[t.stock][r] * (t.price − ltp[t.stock]);

profit[r] = sumtxnvalue[r] + sumposvalue[r];

end loop

Clustering

Trades/

sec

Overall Gain

Immediate Gain

80000

421X

7%

Computer Measurement Group, India

75

Commodities Exchange: Online Risk Management

Trading

System

Clearing Member

Client1

ClientK

Online

Trades

Risk

Management

System

Alerts

Prevent

Client/Clearing

Member from

Trading

Collateral

Falls

Short

Initial Deposit of Collateral

Long/Short Positions on Contracts

Contract/Commodity Price Changes

Risk Parameters

Change during day76

Computer Measurement

Group, India

P&L Computation: Batching of Trades

Batch n trades and use ltp of last trade // increases risk by a small delay

loop for all traders r

if (netpositions[t.stock][r] != 0)

sumposvalue[r] + = netpositions[t.stock][r] * (t.price − ltp[t.stock]);

profit[r] = sumtxnvalue[r] + sumposvalue[r];

end loop

Trades/sec

Overall Gain Immediate Gain

Batching of 100 trades

150000

789X

1.88X

Batching of 1000 trades

400000

2105X

2.67X

So far all this is with only one thread!!!

Computer Measurement Group, India

77

P&L Computation: Use of Parallel Processing

#pragma openmp with chunks (32 threads on 8 core Intel server)

loop for all traders r

if (netpositions[t.stock][r] != 0)

sumposvalue[r] + = netpositions[t.stock][r] * (t.price − ltp[t.stock]);

profit[r] = sumtxnvalue[r] + sumposvalue[r];

end loop

OpenMP

Trades/sec

Overall

Gain

Immediate

Gain

1.2 million

5368X

2.55X

Computer Measurement Group, India

78

P&L Computation: Summary of Optimizations

Optimization

Trades/sec

Baseline gcc

190

gcc –O3

320

Immediate

Gain

Overall

Gain

1.70X

1.7X

Transpose of Trader/Stock

4750

14.70X

25X

Intel Compiler icc –fast

6850

1.37X

36X

Use of Partial Sums

9650

1.41X

50X

Skip Zero Values

10,800

1.12X

56X

Sparse Matrix

36,000

3.24X

189X

Clustering of Arrays

70,000

1.94X

368X

Precompute Price Diff

75,000

1.07X

394X

Loop Unrolling

80,000

1.07X

421X

Batching of 100 Trades

150,000

1.88X

Batching of 1000 Trades

400,000

2.67X

1,020,000

2.55X

OpenMP

Computer Measurement Group, India

789X Single

2105X Thread

5368X 8 CPU,

32 Thread

79

Lattice Boltzmann on GPU

BACKGROUND

Computer Measurement Group, India

80

2-D Square Lid Driven Cavity Problem

U

Moving Top Lid

Fluid

L

Y

X

L

Flow is generated by continuously moving top lid at a constant

velocity.

Computer Measurement Group, India

81

Level 1

Time (ms)

MGUPS

520727.1

5.034192

Remarks

Simply ported CPU code to GPU.

Structure Node & Lattice in GPU Global Memory.

/*CPU Code*/

for(y=0; y<(ny-2); y++)

{

for(x=0; x<(nx-2); x++)

{

-}

}

/*GPU Code*/

/*for(int y=0; y<(ny-2); y++){*/

if(tid < (ny-2))

{

for(x=0; x<(nx-2); x++)

{

-}

}

Replace outer loop Iterations with threads.

Total Threads=(ny-2), Each thread working on (nx-2) grid points.

MGUPS = (GridSize x TimeIterations) / (Time x 1000000)

Computer Measurement Group, India

82

Level 1 (Cont.)

Computer Measurement Group, India

83

Level 2

Time (ms)

MGUPS

115742

22.64899

Remarks

Loop Collapsing

/*GPU Code Level 1*/

/*GPU Code with Loop Fusion*/

if(tid < (ny-2))

{

for(x=0; x<(nx-2); x++)

{

for(y=0; y<(ny-2); y++)-}

}

if(tid < ((ny-2)*(nx-2)))

{

y = (tid/(nx-2))+1;

x = (tid%(nx-2))+1;

-}

Collapsing of 2 nested loops into one to exhibit massive

parallelism.

Total threads=[(ny-2)*(nx-2)], Now each thread working on 1 grid

point.

Computer Measurement Group, India

84

About GPU Constant Memory

Tesla C2075

SM 1

SM 2

SM 14

Constant Memory

Global Memory

Can be used for data that will not change over the course of kernel

execution.

Define constant memory using __constant__

cudMemcpyToSymbol will copy data to constant memory.

Constant memory is cached.

Constant memory is read-only.

Just 64 KB.

Computer Measurement Group, India

85

Level 3

Time (ms)

MGUPS

113061.8

23.186

Remarks

Copied Lattice Structure in GPU Constant Memory

typedef struct Lattice{

int Cs[9];

int Lattice_velocities[9][2];

real_dt Lattice_constants[9][4];

real_dt ek_i[9][9];

real_dt w_k[9];

real_dt ac_i[9];

real_dt gamma9[9];

}Lattice;

__constant__ Lattice lattice_dev_const[1];

cudaMemcpyToSymbol(lattice_dev_const, lattice, sizeof(Lattice));

Computer Measurement Group, India

86

Level 4

Time (ms)

MGUPS

Remarks

40044.5

65.5

Coalesced Memory Access pattern for Node Structure

typedef struct Node /* AoS, (ny*nx) elements */

{

int Type;

real_dt Vel[2];

real_dt Density;

real_dt F[9];

real_dt Ftmp[9];

}Node;

Typ

e

Vel[2]

Densit

y

Grid Point 0

F[9]

Ftmp[9

]

Typ

e

Vel[2]

Densit

y

F[9]

Ftmp[9

]

Grid Point 1

Computer Measurement Group, India

87

Level 4 (Cont.)

Computer Measurement Group, India

88

Level 4 (Cont.)

T-0

T-1

(All Threads simultaneously accessing

Density)

Stride

Typ

e

Vel[2]

Densit

y

Grid Point 0

F[9]

Ftmp[9

]

Typ

e

Vel[2]

Densit

y

F[9]

Ftmp[9

]

Grid Point 1

Computer Measurement Group, India

89

Level 4 (Cont.)

T-0

Typ

e

T-1

Vel[2]

(All Threads simultaneously accessing

Density)

Stride Inefficient access of global memory

Densit

y

F[9]

Ftmp[9

]

Typ

e

Grid Point 0

T-0

T-1

Densit

y

Vel[2]

F[9]

Ftmp[9

]

Grid Point 1

(All Threads simultaneously accessing

Density)

Coalesced Access pattern

Typ

e

Typ

e

Vel[2]

Vel[2]

Densit

y

Densit

y

F[9]

F[9]

Ftmp[9

]

Ftmp[9

]

Efficient access of global memory

Computer Measurement Group, India

90

Level 4 (Cont.)

typedef struct Node /* AoS, (ny*nx) elements */

{

int Type;

real_dt Vel[2];

real_dt Density;

typedef struct Type{

real_dt F[9];

int *val;

real_dt Ftmp[9];

}Type;

}Node;

typedef struct Vel{

real_dt *val;

}Vel;

typedef struct Node_map{

typedef struct Density{

Type type;

real_dt *val;

Vel vel[2];

}Density;

Density density;

typedef struct F{

F f[9];

real_dt *val;

Ftmp ftmp[9];

}F;

}Node_dev;

typedef struct Ftmp{

real_dt *val;

}Ftmp;

Computer Measurement Group, India

91

Level 5

Time (ms)

MGUPS

14492.6

180.9

Remarks

Arithmetic Optimizations

for(int k=3; k<SPEEDS; k++){

//mk[k] = lattice_dev_const->gamma9[k]*mk[k];

//mk[k] = lattice_dev_const->gamma9[k] * mk[k] /

lattice_dev_const->w_k[k];

mk[k] = lattice_dev_const->gamma9_div_wk[k]*mk[k];

}

for(int i=0; i<SPEEDS; i++){

f_neq = 0.0;

for(int k=0; k<SPEEDS; k++)

{

//f_neq += ((lattice_dev_const->ek_i[k][i] * mk[k]) /

lattice_dev_const->w_k[k]);

f_neq += lattice_dev_const->ek_i[k][i]*mk[k];

}

}

Computer Measurement Group, India

92

Level 5 (Cont.)

Computer Measurement Group, India

93

Level 6

Time (ms)

MGUPS

8309.662109

315.468903

Remarks

Algorithmic Optimization

Global

barrier

Fn , vn , n

Collision

Ftmpn

Streaming

Fn

vn , n

Collision stores Ftmp to GPU Global Memory.

Streaming loads Ftmp from GPU Global Memory.

Global Memory Load/Store operations are expensive.

Computer Measurement Group, India

94

Level 6 (Cont.)

Collision

Streaming

Pull Ftmp from Neighbors needs Synchronization.

Computer Measurement Group, India

95

Level 6 (Cont.)

Collision

Streaming

Instead Push Ftmp to Neighbors – No need of

Synchronization

Computer Measurement Group, India

96

Level 6 (Cont.)

Fn , vn , n

Ftmpn

vn , n

Ftmpn

Fn

Collision & Streaming can be one kernel.

Saves one Load/Store from/to Global Memory.

Computer Measurement Group, India

97

Optimizations Achieved on GPU using CUDA

Levels

Time (ms)

MGUPS

(Million Grid

Updates Per

Second)

1

520727.1

5.034192

2

115742

22.64899

3

113061.8

23.186

4

40044.5

65.5

5

14492.6

180.9

6

8309.662109

315.468903

Remarks

Simply ported CPU code to GPU.

Structure Node & Lattice in GPU Global Memory.

Loop Collapsing

Copied Lattice Structure in GPU Constant

Memory

Coalesced Memory Access pattern for Node

Structure

Arithmetic Optimizations

Algorithmic Optimization

CUDA Card: Tesla C2075 (448 Cores, 14 SM, Fermi, Compute 2.0)

Computer Measurement Group, India

98

Recap

• Part – I

– A sample domain problem

– Hardware & Software

• Part – II Performance Optimization Case Studies

– Online Risk Management

– Lattice Boltzmann implementation

Computer Measurement Group, India

- 99 -

99

Closing Comments

•

OLTP applications seldom require HPC technologies

– Unless it is an application that needs to respond in microseconds

• Algo trading etc

•

Can HPC technologies be used to speed up my data-transformation (ETL/ELT) and reporting

workloads?

– Sure – you have to let go the ease of using 3rd party products & databases

• If you don’t want to –customizing a specific bottleneck process could help

– Stay tuned to companies innovating in this space –

• e.g SQREAM – implements databases operations on GPU’s

•

Investing in an HPC cluster and technologies not enough

– Also investing people who understand

• Underlying technologies

• Applications

Computer Measurement Group, India

- 100 -

100

Q&A

m.nambiar@tcs.com

www.cmgindia.org

Computer Measurement Group, India

101