Goal-Driven Autonomy Learning for Long-Duration Missions

advertisement

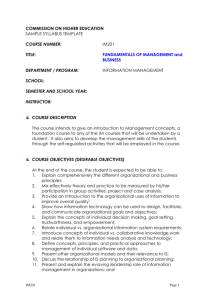

Héctor Muñoz-Avila

Goal-Driven Autonomy (GDA)
GDA is a model of introspective reasoning in which an agent revises its own goals. Key concepts:
- Expectation: the state the agent expects after executing an action.
- Discrepancy: a mismatch between the expected state and the actual state.
- Explanation: a reason for the mismatch.
- Goal: a desired state or set of desired states.
Where does the GDA knowledge come from?

Objectives
- Enable greater autonomy and flexibility for unmanned systems.
- A key requirement of the project is for autonomous systems to be robust over long periods of time.
- It is very difficult to encode responses to all possible circumstances in advance (i.e., months ahead).
- Conditions (e.g., environmental ones) change over time.
- Hence the need for learning to adapt to a changing environment: GDA knowledge needs to adapt to uncertain and dynamic environments while the system performs long-duration activities.

Goal-Driven Autonomy (GDA)
Concrete objective: learn and adapt the GDA knowledge elements for each of the four components, at three levels:
- at the object (TREX) level,
- at the meta-cognition (MIDCA) level, and
- at the integrative object and meta-reasoning (MIDCA+TREX) level.
[Figure: MIDCA architecture. Meta-level: Meta-Level Control and Introspective Monitoring over the Mental Domain (Ω), using the object level's reasoning trace. Object level: Problem Solving (Goal Management, Intend, Plan, Act (& Speak)) and Comprehension (Perceive (& Listen), Interpret, Evaluate) over the World (Ψ). Goal operations at both levels: goal change, goal input, goal insertion, subgoal.]

Goal Management Learning
An initial set of priorities can be set at the beginning of the deployment, but for a long-term mission these priorities will need to be adjusted automatically as the environment changes.
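The GDA concepts above (expectation, discrepancy, explanation, goal) and the automatic priority adjustment just described can be tied together in a single cycle. The Python below is a minimal sketch under simplifying assumptions, not the project's implementation: states are flat dicts of facts, explanations come from a hypothetical rule table, and the learned per-goal weight in the hypothetical `GoalManager` is an exponential moving average standing in for whatever adaptation scheme is actually used.

```python
from dataclasses import dataclass

# Assumption (not from the slides): a state is a flat dict of named facts.
State = dict


@dataclass
class Goal:
    name: str
    priority: float  # base priority, e.g., set at deployment time


def expect(state: State, effects: State) -> State:
    """Expectation: the state predicted after an action, assuming its effects are known."""
    return {**state, **effects}


def detect_discrepancies(expected: State, actual: State) -> dict:
    """Discrepancy: every fact whose observed value differs from the expected value."""
    return {k: (v, actual.get(k)) for k, v in expected.items() if actual.get(k) != v}


def explain(discrepancies: dict, rules: dict) -> list:
    """Explanation: look up a reason for each discrepant fact in a hypothetical rule table."""
    return [rules.get(fact, "unexplained") for fact in discrepancies]


def formulate_goals(explanations: list, templates: dict) -> list:
    """Goal formulation: create a new goal for each explanation that has a goal template."""
    return [Goal(templates[e], priority=1.0) for e in explanations if e in templates]


class GoalManager:
    """Goal management: pursue the pending goal with the highest adjusted priority.

    Hypothetical adaptation scheme: each goal name carries a learned weight,
    an exponential moving average of an outcome signal observed after pursuing it.
    """

    def __init__(self, learning_rate: float = 0.2):
        self.learning_rate = learning_rate
        self.weights: dict = {}  # goal name -> learned priority multiplier
        self.pending: list = []

    def add(self, goal: Goal) -> None:
        self.pending.append(goal)

    def score(self, goal: Goal) -> float:
        return goal.priority * self.weights.get(goal.name, 1.0)

    def select(self):
        """Remove and return the best pending goal, or None if there is none."""
        if not self.pending:
            return None
        best = max(self.pending, key=self.score)
        self.pending.remove(best)
        return best

    def update(self, goal_name: str, outcome: float) -> None:
        """Move the goal's weight toward the observed outcome value."""
        w = self.weights.get(goal_name, 1.0)
        self.weights[goal_name] = w + self.learning_rate * (outcome - w)


# One GDA cycle: predict, observe, compare, explain, formulate, manage.
before = {"heading": 90, "contact_ahead": False}
expected = expect(before, {"heading": 90})
observed = {"heading": 90, "contact_ahead": True}
discrepancies = detect_discrepancies(expected, observed)
explanations = explain(discrepancies, {"contact_ahead": "unknown_contact"})
manager = GoalManager()
manager.add(Goal("survey_area", priority=0.5))
for goal in formulate_goals(explanations, {"unknown_contact": "identify_contact"}):
    manager.add(goal)
chosen = manager.select()
```

The `update` call is where adaptation would happen in this sketch: after a goal is pursued, an outcome signal (how it is measured is left open here) nudges that goal's future priority, so priorities set at deployment drift with experience.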
For example, by default we might prioritize sonar sensory goals, such as determining potentially hazardous conditions surrounding the UUV.

Goal Management Learning - Example
Situation: four unknown contacts in the area.
- Default: identifying each contact can be set as a goal, and each goal can be assigned a priority as a function of the contact's distance to the UUV.
- Each unknown contact has some initial sensor readings.
- Adaptation: once the contacts have been identified, the system might change the priority of future contacts with the same sensor readings, e.g., giving higher priority to a contact that could be a rapidly moving vessel.
- The initial sensor readings for the same target might change as environmental conditions change.

Goal Formulation Learning
New goals can be formulated depending on:
- the discrepancies encountered,
- the explanation generated, and
- the observations from the state.
Example: as before, an unknown contact has some initial sensor readings. The contact turns out to be a large mass that moves very close to the vehicle, forcing a trajectory change. New goal: keep a distance from contacts with those initial readings.

Explanation Learning
- Explanations are assumed to be deterministic: Discrepancy → Explanation.
- However, these explanations frequently assume perfect observability; this assumption must be relaxed to handle sensor information.
- Associate priorities with explanations; these priorities need to be adapted over time.
- Prior work studied the underpinnings, but sensor readings still need to be considered.

Expectation Learning
- Expectations need to consider time intervals: they must take into account the plan look-ahead and latency.
- These two factors can be adapted over time by reasoning at the integrative object and meta-reasoning (MIDCA+TREX) level.

Conclusions
- Operating autonomously over long periods of time is a challenging task: it is too difficult to pre-define all circumstances in advance, and conditions change over time.
- Our vision is for UUVs that adapt to uncertain and dynamic environments while performing long-duration activities, by learning and refining GDA knowledge.
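The explanation-learning slide calls for replacing a deterministic Discrepancy → Explanation mapping with priorities that are adapted over time and take sensor readings into account. The Python below is a minimal sketch of that idea, not the project's method: the hypothetical `signature` discretization, the counting scheme with a pseudo-count `prior`, and the exponential `decay` of old evidence (to cope with readings that shift as conditions change) are all illustrative assumptions.

```python
from collections import defaultdict


def signature(readings, step=5.0):
    """Hypothetical discretization of raw sensor readings into a hashable key,
    so contacts with similar initial readings share learned knowledge."""
    return tuple(int(r // step) for r in readings)


class ExplanationLearner:
    """Ranks candidate explanations for a discrepancy by how often each was
    later confirmed (e.g., once a contact was identified) for the same
    sensor signature, instead of using a fixed one-to-one mapping."""

    def __init__(self, prior: float = 1.0, decay: float = 0.9):
        self.prior = prior  # pseudo-count: unseen explanations keep a nonzero priority
        self.decay = decay  # values < 1.0 fade old evidence as conditions change
        self.counts = defaultdict(lambda: defaultdict(float))

    def rank(self, sig, candidates):
        """Return (explanation, priority) pairs, highest priority first."""
        counts = self.counts[sig]
        weights = {c: counts[c] + self.prior for c in candidates}
        total = sum(weights.values())
        return sorted(((c, w / total) for c, w in weights.items()),
                      key=lambda pair: pair[1], reverse=True)

    def confirm(self, sig, explanation):
        """Record that `explanation` turned out to be correct for this signature."""
        counts = self.counts[sig]
        for e in counts:
            counts[e] *= self.decay  # older confirmations count for less
        counts[explanation] += 1.0


# Usage: two identified contacts with the same initial readings were fast vessels.
learner = ExplanationLearner(prior=1.0, decay=1.0)
sig = signature([12.3, 4.9])
for _ in range(2):
    learner.confirm(sig, "fast_vessel")
ranking = learner.rank(sig, ["stationary_object", "fast_vessel"])
```

With `decay=1.0` all evidence counts equally; lowering it lets recent identifications outweigh older ones, which is one simple way to reflect the slides' point that initial sensor readings for the same target can change with environmental conditions.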