Resource Analysis Based On System Architecture Behavior

Monica Farah-Stapleton

Professor Ray Madachy

Professor Mikhail Auguston

Professor Kristin Giammarco

November 2015

Overview of Today’s Presentation

• Pervasive Challenge and Response
• Research Alignments
• Applying FP Methodology To MP Model
• Relating Activities
• Closing Thoughts

We would like to acknowledge Lori Holmes-Limbacher for her contributions as an FPA subject matter expert, and the Q/P Management Group, Inc. for the Tee Time System example.


Pervasive Challenge and Response

Challenge

• Information Technology (IT) systems are large, challenged by the rate of change of commercial IT, and represent a significant investment in time and resources
– Introduction of new system/capability results in unintended or unexpected system/environment behaviors
– Operational and financial impacts often assessed after the fact
– Resourcing decisions and precise architectural descriptions of system/environment often minimally related

Approach

• Leverage precise behavioral modeling using Monterey Phoenix (MP) to assess architectural design decisions and their impacts prior to, during, and after deployment
• Relate architectural modeling to resourcing through analysis of behaviors and Unadjusted Function Point Analysis (FPA) counts, leveraging complexity and size metrics, e.g. Data Element Type (DET) and File Type Referenced (FTR)
• Align activities with Systems Engineering Research Center (SERC) Ilities Tradespace and Affordability Program (iTAP)


iTAP Research Objectives

• Total Ownership Cost (TOC) modeling to enable affordability tradeoffs with integrated software-hardware-human factors
• Current shortfalls for ilities tradespace analysis
– Models/tools are incomplete with respect to TOC phases, activities, disciplines, SoS aspects
– No integration with physical design space analysis tools, system modeling, or each other
• New aspects
– Integrated costing of systems, software, hardware and human factors across full lifecycle operations
– Extensions and consolidations for DoD application domains
– Tool interoperability and tailorability (service-oriented)
• Can improve affordability-related decisions across all joint services
• Current Phase 4 Plans – Extract:
– Assess Monterey Phoenix (MP) for automatically providing cost information from architectural models
– MP will extract software sizing cost model inputs to compute costs, and we will assess mapping MP architectural elements into systems engineering cost model inputs

Please See Our Demo At the Tools Fair 5:00-6:30 PM


Applying FPA Methodology to MP Architecture Model

Step 1: Identify problem statement, i.e. typical questions to be answered, determine type of count
Step 2: Describe behaviors of system and environment in natural language, identify scope boundary
Step 3: Unambiguously represent behaviors using MP, extract use cases/initial views from MP model
Step 4: Relate MP system/environment behaviors to Function Point behaviors
Step 5: Identify coefficients that inform complexity and scale, identify counting rules
Step 6: Determine Unadjusted Function Point (UFP) count
Step 7: Assess effort using MP-COCOMO II/III tool and extracting coefficients
Step 8: Visualize results in views specific to stakeholders


Basic Concepts for Monterey Phoenix (MP) Behavioral Modeling

• Event - any detectable action in the system's or environment's behavior
• Event trace - set of events with two basic partial ordering relations, precedence (PRECEDES) and inclusion (IN)
• Event grammar - specifies the structure of possible event traces

Innovations:

• Uniform Framework: Describe behaviors and interactions of the system AND environment using the same framework
• Leverage Small Scope Hypothesis: Exhaustive search through all possible scenarios (up to the scope limit), expecting that most flaws in models can be demonstrated on small counterexamples
• Separation of System Interaction from System Behavior: Specify behavior of each system's components separately from interactions between those components
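The event-trace definition above can be made concrete with a small sketch. The Python below is illustrative only and is not part of MP or its tooling: the `EventTrace` class and its method names are hypothetical. It stores a trace as a set of events plus PRECEDES and IN pairs, using event names from the EQ State Drop Down example that appears later in this deck.

```python
from dataclasses import dataclass, field


@dataclass
class EventTrace:
    """Hypothetical container: events plus the two MP ordering relations."""
    events: set = field(default_factory=set)
    precedes: set = field(default_factory=set)  # (a, b) means a PRECEDES b
    inside: set = field(default_factory=set)    # (a, b) means a IN b

    def add_precedes(self, a, b):
        self.events.update((a, b))
        self.precedes.add((a, b))

    def add_in(self, child, parent):
        self.events.update((child, parent))
        self.inside.add((child, parent))

    def comes_before(self, a, b):
        """True if b is reachable from a through one or more PRECEDES pairs."""
        seen, frontier = set(), [a]
        while frontier:
            current = frontier.pop()
            for x, y in self.precedes:
                if x == current and y not in seen:
                    if y == b:
                        return True
                    seen.add(y)
                    frontier.append(y)
        return False


trace = EventTrace()
# Inclusion: the two user-side events are parts of the composite inquiry event.
trace.add_in("Click_state_arrow_dropdown", "Inquire_on_state_data")
trace.add_in("Receive_state_list_display", "Inquire_on_state_data")
# Precedence: the click leads to the prompt, the prompt to the reply, the reply to the display.
trace.add_precedes("Click_state_arrow_dropdown", "Receive_state_arrow_prompt")
trace.add_precedes("Receive_state_arrow_prompt", "Send_state_list_display")
trace.add_precedes("Send_state_list_display", "Receive_state_list_display")

print(trace.comes_before("Click_state_arrow_dropdown", "Receive_state_list_display"))
```

Keeping the two relations as separate sets mirrors the definition: PRECEDES orders events in time, while IN records which events are parts of a larger one.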


What Does “Separation of System Interaction from System Behavior” Mean?


Function Point Analysis

Functionality From User’s Perspective

Determine the Type of Count and Boundary: Development Project, Enhancement Project, Application

Terminology:

• External Inputs (EI): Data that is entering a system
• External Outputs (EO) and External Inquiries (EQ): Data that is leaving the system
• Internal Logical Files (ILF): Data that is processed and stored within the system
• External Interface Files (EIF): Data that is maintained outside the system but is necessary to satisfy a particular process requirement

Function Point Analysis Practice

• Count Data and Transactional Function Types
• Determine Unadjusted FP Count
• Determine the Value Adjustment Factor (N/A)
• Calculate final Adjusted FP Count (N/A)

Sources: IFPUG

Function Points:

• Normalized metric used to evaluate software deliverables
• Measure size based on well-defined functional characteristics of the software system
• Must be defined around components that can be identified in a well-written specification


Relating FPA and MP

• An Unadjusted Function Point (UFP) is a unit of measurement that expresses the amount of functionality in a system, and can be used to estimate system cost. Of specific interest are the input/output activities of the system.
• The MP architecture model is based on behavior modeling, providing a bridge between the requirements and high-level design, and supports cost estimates early in the design phase.
• The concept of an event in MP is an abstraction for activity within the system. It is rendered as pseudo-code, appropriate for capturing the functional aspects of requirements, and supports refinement.
• UFPs can be identified in the MP architecture model as interaction abstractions (i.e. COORDINATE or SHARE ALL constructs).
• The structure and the complexity of interactions in MP provide a source for assigning weights contributing to the UFP count.
• Since an MP model is precise and formal, FP metrics can be identified by automated tools such as http://csse.usc.edu/tools/MP_COCOMO


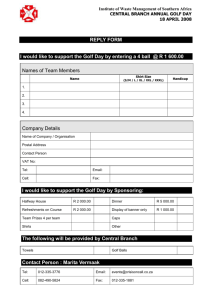

Tee Time System Example

UFP Count Synopsis


Relating Activities:

Tee Time System -- Golf Courses List, EQ: State Drop Down

Transactional Function: EQ (View or Display Retrieval of Data)

• Click Button from Main Screen, navigate to Golf Courses list. No EP.
• Exit button: Navigation, no EP.

EQ -- View/Display State Drop Down
• Click on state arrow
• State list display returned
• Stop
• 1 FTR (Golf Courses ILF), 2 DET (Arrow Click, State field)

EQ -- View/Display City Drop Down
• State data entered
• Click on City arrow
• City list display returned
• Stop
• 1 FTR (Golf Courses ILF), 3 DET (State data, Arrow Click, City field)

EQ -- View/Display Golf Courses List
• Enter State and City
• Click Display button
• Name displayed back, don’t count state, city.
• Stop
• 1 FTR (Golf Course ILF), 4 DET (State, City, Name, Click Display)

| EP | Description | ILF/EIF | FTR/DET | Complexity | UFP |
|---|---|---|---|---|---|
| EQ | State Drop Down | Golf Courses (I) | (1,2) | Low | 3 |

© Copyright 2010. Q/P Management Group, Inc. All Rights Reserved.
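The three EQs on this slide are each rated from their FTR and DET counts. A minimal sketch of that lookup follows. The matrix thresholds and the Average/High weights are written from the standard IFPUG EO/EQ complexity matrix as commonly published and should be checked against the IFPUG Counting Practices Manual; this deck itself only confirms the "0-1 FTR, 1-5 DET is Low" cell and the Low weight of 3. The function and variable names are hypothetical.

```python
# Assumed IFPUG complexity matrix for EO/EQ. Only the "0-1 FTR, 1-5 DET -> Low"
# cell and the Low weight of 3 are stated in this deck; verify the rest against
# the IFPUG Counting Practices Manual.
EQ_MATRIX = (
    ("Low", "Low", "Average"),    # 0-1 FTR
    ("Low", "Average", "High"),   # 2-3 FTR
    ("Average", "High", "High"),  # 4+ FTR
)
EQ_WEIGHTS = {"Low": 3, "Average": 4, "High": 6}


def eq_complexity(ftr, det):
    """Map FTR and DET counts to a complexity rating (columns: 1-5, 6-19, 20+ DET)."""
    row = 0 if ftr <= 1 else 1 if ftr <= 3 else 2
    col = 0 if det <= 5 else 1 if det <= 19 else 2
    return EQ_MATRIX[row][col]


def eq_ufp(ftr, det):
    """Return the unadjusted function points for one External Inquiry."""
    return EQ_WEIGHTS[eq_complexity(ftr, det)]


# (FTR, DET) pairs taken from the three elementary processes on this slide.
tee_time_eqs = {
    "State Drop Down": (1, 2),
    "City Drop Down": (1, 3),
    "Golf Courses List": (1, 4),
}
for name, (ftr, det) in tee_time_eqs.items():
    print(name, eq_complexity(ftr, det), eq_ufp(ftr, det))
```

All three inquiries fall in the first row and first column of the matrix, which is why each contributes 3 UFP despite having different DET counts.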


MP Model for EQ State Drop Down

Nested COORDINATE

    01 SCHEMA EQ_State_Drop_Down
    02 ROOT User_GCL: Inquire_on_state_data something_else;
    03      Inquire_on_state_data: Click_state_arrow_dropdown Receive_state_list_display;
    04 ROOT Golfcourses_ILF: Get_result anything_else;
    05      Get_result: Receive_state_arrow_prompt Send_state_list_display;
    06 COORDINATE $x: Inquire_on_state_data FROM User_GCL,
    07            $y: Get_result FROM Golfcourses_ILF
    08 DO
    09   COORDINATE $xx: Click_state_arrow_dropdown FROM $x,
    10              $yy: Receive_state_arrow_prompt FROM $y,
    11              $x11: Receive_state_list_display FROM $x,
    12              $y11: Send_state_list_display FROM $y
    13   DO
    14     ADD $xx PRECEDES $yy;
    15     ADD $y11 PRECEDES $x11;
    16   OD;
    17 OD;

• Line 01: Schema name for the EQ COORDINATE
• Lines 02-05 represent the behaviors of ROOTs (Actors)
• Lines 06-17 represent the behaviors of the EQ: State Drop Down COORDINATE
• Lines 09-16 represent behaviors of the nested COORDINATE, DETs and FTRs. The nested interactions (ADD) influence the weight of this EQ COORDINATE

MP Calculation

• 1 FTR and 1 nested COORDINATE (COORDINATE & 2 ADDs) correspond to 1 FTR and 2 DETs and a functional complexity weighting of 3
• EQ State Drop Down = (1 COORDINATE) * 3 UFP/COORDINATE = 3 UFPs
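The counting in the MP Calculation above could be sketched as a simple scan of the model text. This is an illustration only and not the algorithm of the MP-COCOMO tool: a real tool would parse the MP grammar rather than use regular expressions. The helper name `count_constructs` is hypothetical, and treating every ROOT whose name ends in `_ILF` or `_EIF` as one FTR is a naming convention assumed for this example.

```python
import re

MP_MODEL = """
SCHEMA EQ_State_Drop_Down
ROOT User_GCL: Inquire_on_state_data something_else;
  Inquire_on_state_data: Click_state_arrow_dropdown Receive_state_list_display;
ROOT Golfcourses_ILF: Get_result anything_else;
  Get_result: Receive_state_arrow_prompt Send_state_list_display;
COORDINATE $x: Inquire_on_state_data FROM User_GCL,
           $y: Get_result FROM Golfcourses_ILF
DO
  COORDINATE $xx: Click_state_arrow_dropdown FROM $x,
             $yy: Receive_state_arrow_prompt FROM $y,
             $x11: Receive_state_list_display FROM $x,
             $y11: Send_state_list_display FROM $y
  DO
    ADD $xx PRECEDES $yy;
    ADD $y11 PRECEDES $x11;
  OD;
OD;
"""


def count_constructs(model_text):
    """Count top-level and nested COORDINATEs, ADDs (as DETs) and data ROOTs (as FTRs)."""
    counts = {"top_level_coordinates": 0, "nested_coordinates": 0, "dets": 0, "ftrs": 0}
    depth = 0  # current DO ... OD nesting depth
    for token in re.findall(r"\b(COORDINATE|DO|OD|ADD)\b", model_text):
        if token == "COORDINATE":
            key = "top_level_coordinates" if depth == 0 else "nested_coordinates"
            counts[key] += 1
        elif token == "DO":
            depth += 1
        elif token == "OD":
            depth -= 1
        else:  # ADD: each added ordering is counted as one DET, as on the slide
            counts["dets"] += 1
    roots = re.findall(r"^\s*ROOT\s+(\w+)\s*:", model_text, flags=re.MULTILINE)
    # Assumed convention for this example: data-function ROOTs end in _ILF or _EIF.
    counts["ftrs"] = sum(1 for name in roots if name.endswith(("_ILF", "_EIF")))
    return counts


counts = count_constructs(MP_MODEL)
LOW_EQ_WEIGHT = 3  # 1 FTR and 2 DETs rate Low, which the slide weights at 3 UFP
ufp = counts["top_level_coordinates"] * LOW_EQ_WEIGHT
print(counts, ufp)
```

Tracking DO/OD depth is what separates the outer EQ COORDINATE, which is counted as the transaction, from the nested COORDINATE, whose ADDs supply the DET count.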


EQ State Drop Down Use Case

Sequence Diagram Automatically Generated by Firebird, Scope 2

• Monterey Phoenix and Related Work: http://faculty.nps.edu/maugusto
• MP Wiki: https://wiki.nps.edu/display/MP
• Public MP server with MP editor, trace generator, and trace graph visualization: http://firebird.nps.edu/


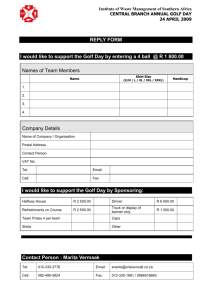

FPA Calculation

External Inquiry (EQ)

| EP | Description | ILF/EIF | FTR/DET | Complexity | UFP |
|---|---|---|---|---|---|
| EQ | State Drop Down | Golf Courses (I) | (1,2) | Low | 3 |
| EQ | City Drop Down | Golf Courses (I) | (1,3) | Low | 3 |
| EQ | Golf Course List | Golf Courses (I) | (1,4) | Low | 3 |
| EQ | Golf Course Detail | Golf Courses (I) | (1,12) | Low | 3 |
| EQ | Scoreboard Display | Scoreboard (I) | (1,6) | Low | 3 |
| EQ | Maintain Golf Course Display (by ID) | Golf Courses (I) | (1,13) | Low | 3 |
| EQ | Tee Times Reservation Display | Tee Times (I) | (1,11) | Low | 3 |
| EQ | Tee Times Shopping Display | Merchandise (E) | (1,3) | Low | 3 |
| EQ | Product View Picture | Merchandise (E) | (1,3) | Low | 3 |

Source: IFPUG Counting Manual Part 2, p. 7-19


MP and FPA UFP Calculation

EQ State Drop Down

| EP | Description | ILF/EIF | FTR/DET | Complexity | UFP |
|---|---|---|---|---|---|
| EQ | State Drop Down | Golf Courses (I) | (1,2) | Low | 3 |

UFP Calculation: FPA Manual Count

• 1 FTR and 2 DETs identified from the behavior of the State Drop Down EQ
• 0-1 FTRs and 1-5 DETs correspond to a Low functional complexity rating
• A Low functional complexity rating corresponds to 3 UFPs

UFP Calculation: Extracted From MP

• 1 COORDINATE interaction associated with State Drop Down EQ behaviors
• State Drop Down EQ COORDINATE contains a nested COORDINATE (2 ADDs)
• The 2 ADDs relate to 2 DETs
• ROOT Golfcourses_ILF relates to 1 FTR
• 0-1 FTRs and 1-5 DETs correspond to a Low functional complexity rating
• A Low functional complexity rating corresponds to 3 UFPs
• EQ State Drop Down is equal to 1 COORDINATE with a weight of 3, or 3 UFPs


MP-COCOMO Tool

Collaboration In Progress

Source: http://csse.usc.edu/tools/MP_COCOMO/
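Step 7 of the methodology assesses effort from the UFP count. As a rough illustration of the kind of calculation involved, the sketch below applies the general COCOMO II effort form, PM = A * Size^E * product(EM) with E = B + 0.01 * sum(SF). It does not describe the internals of the MP-COCOMO tool. The constants A = 2.94 and B = 0.91 and the nominal scale-factor sum of 18.97 are taken to be the COCOMO II.2000 calibration and should be verified against the COCOMO II model definition; the SLOC-per-UFP ratio is language dependent and the value 53 used here is a placeholder assumption. The function name is hypothetical.

```python
import math

A = 2.94  # multiplicative constant (assumed COCOMO II.2000 calibration)
B = 0.91  # base exponent (assumed COCOMO II.2000 calibration)


def cocomo_ii_effort(ufp, sloc_per_ufp, scale_factor_sum=18.97, effort_multipliers=()):
    """Estimate effort in person-months from an unadjusted function point count.

    scale_factor_sum defaults to an assumed all-nominal rating; an empty
    effort_multipliers tuple means every cost driver is nominal (product of 1).
    """
    ksloc = ufp * sloc_per_ufp / 1000.0
    exponent = B + 0.01 * scale_factor_sum
    return A * ksloc ** exponent * math.prod(effort_multipliers)


# Placeholder conversion ratio; pick the value for the actual implementation language.
effort = cocomo_ii_effort(ufp=3, sloc_per_ufp=53)
print(round(effort, 3))
```

A single 3-UFP inquiry is far below the size range such models are calibrated for, so the call above only shows the mechanics; a meaningful estimate would use the UFP total for the whole application.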


Closing Thoughts

• Resourcing is a socio-technical problem
– Methodology and MP Framework synchronize concepts, architectures, system/software implementation, business processes, organizational dynamics
– Relevant in the System of Systems problem space
– Make the tools and methods user-friendly, enforce across the enterprise
• Next Steps
– Refine weights for each Transactional Function
– Refine relationship between steps of an FPA Elementary Process and MP descriptions
  • Nested COORDINATEs
  • ILF and EIF behavioral representations in MP
– Apply methodology to iTAP UAV case study and IFPUG case study


Back Up


FPA Calculation

External Input (EI)

| EP | Description | ILF/EIF | FTR/DET | Complexity | UFP |
|---|---|---|---|---|---|
| EI | Scoreboard (ADD) | Scoreboard (I) | (1,7) | Low | 3 |
| EI | Scoreboard (CHANGE) | Scoreboard (I) | (1,7) | Low | 3 |
| EI | Scoreboard (DELETE) | Scoreboard (I) | (1,3) | Low | 3 |
| EI | Maintain Golf Course (ADD) | Golf Courses (I) | (1,13) | Low | 3 |
| EI | Maintain Golf Course (CHANGE) | Golf Courses (I) | (1,13) | Low | 3 |
| EI | Maintain Golf Course (DELETE) | Golf Courses (I), Tee Times | (2,3) | Low | 3 |
| EI | Tee Times Reservations (ADD) | Tee Times (I) | (1,12) | Low | 3 |
| EI | Tee Times Reservations (CHANGE) | Tee Times (I) | (1,12) | Low | 3 |
| EI | Tee Times Reservations (DELETE) | Tee Times (I) | (1,6) | Low | 3 |

Source: IFPUG Counting Manual Part 2, p. 7-19


FPA Calculation

External Output (EO)

| EP | Description | ILF/EIF | FTR/DET | Complexity | UFP |
|---|---|---|---|---|---|
| EO | Shopping Display (Calculation) | Merchandise | (1,7) | Low | 4 |
| EO | Buy – Send to Purchasing (Calculation) | Merchandise | (1,15) | Low | 4 |

Source: IFPUG Counting Manual Part 2, p. 7-19


FPA Calculation

Data Functions

| Description | ILF/EIF | RET/DET | Complexity | UFP |
|---|---|---|---|---|
| Golf Course | ILF | (1,11) | Low | 7 |
| Tee Times | ILF | (1,10) | Low | 7 |
| Scoreboard | ILF | (1,5) | Low | 7 |
| Merchandise | EIF | (1,3) | Low | 5 |

Source: IFPUG Counting Manual Part 2, p. 7-19
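The UFP columns of the EQ, EI, EO and data function tables in this deck can be tallied into a single unadjusted count. The sketch below does that arithmetic; the rows are transcribed from those tables, and the total is computed here rather than quoted from the UFP Count Synopsis slide, whose figure is only available as an image. Variable names are hypothetical.

```python
from collections import defaultdict

# (function type, description, UFP) rows transcribed from the tables in this deck.
ROWS = [
    ("EQ", "State Drop Down", 3),
    ("EQ", "City Drop Down", 3),
    ("EQ", "Golf Course List", 3),
    ("EQ", "Golf Course Detail", 3),
    ("EQ", "Scoreboard Display", 3),
    ("EQ", "Maintain Golf Course Display (by ID)", 3),
    ("EQ", "Tee Times Reservation Display", 3),
    ("EQ", "Tee Times Shopping Display", 3),
    ("EQ", "Product View Picture", 3),
    ("EI", "Scoreboard (ADD)", 3),
    ("EI", "Scoreboard (CHANGE)", 3),
    ("EI", "Scoreboard (DELETE)", 3),
    ("EI", "Maintain Golf Course (ADD)", 3),
    ("EI", "Maintain Golf Course (CHANGE)", 3),
    ("EI", "Maintain Golf Course (DELETE)", 3),
    ("EI", "Tee Times Reservations (ADD)", 3),
    ("EI", "Tee Times Reservations (CHANGE)", 3),
    ("EI", "Tee Times Reservations (DELETE)", 3),
    ("EO", "Shopping Display (Calculation)", 4),
    ("EO", "Buy - Send to Purchasing (Calculation)", 4),
    ("ILF", "Golf Course", 7),
    ("ILF", "Tee Times", 7),
    ("ILF", "Scoreboard", 7),
    ("EIF", "Merchandise", 5),
]

# Subtotal per function type, then the overall unadjusted count.
subtotals = defaultdict(int)
for function_type, _description, ufp in ROWS:
    subtotals[function_type] += ufp

total_ufp = sum(subtotals.values())
print(dict(subtotals))  # {'EQ': 27, 'EI': 27, 'EO': 8, 'ILF': 21, 'EIF': 5}
print(total_ufp)        # 88
```

Keeping the rows as data makes it straightforward to swap in counts extracted from an MP model and compare them with the manual count row by row.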


iTAP Phase 4 Plans – Task 2

• Collaboration with AFIT for a joint Intelligence, Surveillance and Reconnaissance (ISR) mission application involving heterogeneous teams of autonomous and cooperative agents.
• NPS will provide cost modeling expertise, tools and Monterey Phoenix (MP) modeling support. A focus will be on translations between models/tools in MBSE, specifically mapping architectural elements into cost model inputs.
• Approach
– Develop a baseline operational and system architecture to capture a set of military scenarios.
– Transition the baseline architecture to the MP environment.
– Utilize the executable architecture modeling framework of MP to perform automated assertion checking and find counterexamples of behavior that violate the expected correctness of the system.
  • Operational scenarios will be cycled through the MP modeling process, whereby alternate events are captured for each actor in each scenario. This will produce a superset of scenario variants from the behavior models, suitable for input to tradespace analysis and cost models.
– Design and demonstrate an ISR UAV tradespace.
– Develop cost model interfaces for components of the architecture in order to evaluate cost effectiveness in an uncertain future environment.


iTAP Phase 4 Plans – Task 3

• Continue extending the scope and tradespace interoperability of cost models and tools from previous phases.
• Cost modeling will engage domain experts for Delphi estimates, evolve baseline definitions of cost driver parameters and rating scales for use in data collection, gather empirical data and determine areas needing further research to account for differences between estimated and actual costs.
– Prototype cost models and tools will be extended accordingly for piloting and refinement.
• For tool interoperability we will integrate cost models in different ways with MBSE architectural modeling approaches and as web services. We will also automate systems and software risk advisors that operate in conjunction with the cost models.
• NPS will provide domain expertise for SysML cost model integration with Georgia Tech and USC to add software cost model formulas and the risk assessment capabilities.
– This is also allied with Task 2 where we will assess Monterey Phoenix (MP) for automatically providing cost information from architectural models. MP will extract software sizing cost model inputs to compute costs, and we will assess mapping MP architectural elements into systems engineering cost model inputs.


Systems Engineering Research Center

• A US Department of Defense University-Affiliated Research Center (UARC)
• The networked national resource to further systems research and its impact on issues of national and global significance

The Systems Research and Impact Network


SERC: 22 Collaborators, Led by Stevens Institute
