Introductory Seminar on Research talk 2011

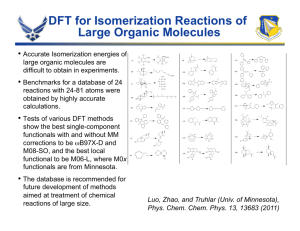

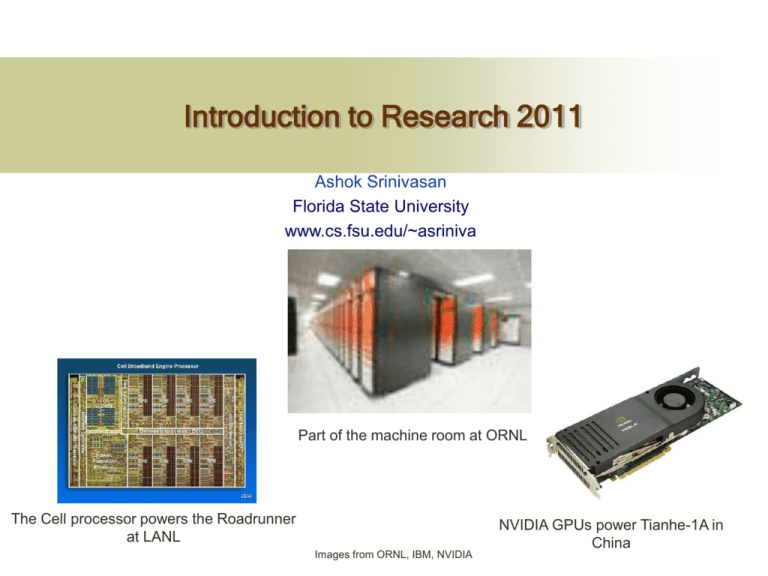

Introduction to Research 2011
Ashok Srinivasan, Florida State University
www.cs.fsu.edu/~asriniva

Image captions (images from ORNL, IBM, NVIDIA):

- Part of the machine room at ORNL
- The Cell processor powers the Roadrunner at LANL
- NVIDIA GPUs power Tianhe-1A in China

Outline

- Research
  - High Performance Computing Applications and Software
  - Multicore processors
  - Massively parallel processors
  - Computational nanotechnology
  - Simulation-based policy making
- Potential Research Topics

Research Areas

- High Performance Computing, Applications in Computational Sciences, Scalable Algorithms, Mathematical Software
- Current topics: Computational Nanotechnology, HPC on Multicore Processors, Massively Parallel Applications
- New topics: Simulation-based policy analysis
- Old topics: Computational Finance, Parallel Random Number Generation, Monte Carlo Linear Algebra, Computational Fluid Dynamics, Image Compression

Importance of Supercomputing

- Fundamental scientific understanding
- Solution of bigger problems
- Automobile crash tests
- Solutions with time constraints
- Climate modeling
- More accurate solutions
- Nano-materials, drug design
- Disaster mitigation
- Study of complex interactions for policy decisions
- Urban planning

Some Applications

- Increasing relevance to industry
  - In 1993, fewer than 30% of the top 500 supercomputers were commercial; now, 57% are commercial
- A variety of application areas
  - Commercial: finance and insurance, medicine, aerospace and automobiles, telecom, oil exploration, shoes (Nike)!, potato chips!, toys!
  - Scientific: weather prediction, earthquake modeling, epidemic modeling, materials, energy, computational biology, astrophysics

Supercomputing Power

- The amount of parallelism is also increasing, with the high end having over 200,000 cores

Geographic Distribution

- North America has over half of the top 500 systems
- However, Europe and East Asia also have a significant share
- China is determined to be a supercomputing superpower
  - Two of its national supercomputing centers have top-five supercomputers
- Japan has the top machine and two in the top five
  - Planning a $1.3 billion exascale supercomputer in 2020

Asian Supercomputing Trends

Challenges in Supercomputing

- Hardware can be obtained with enough money
- But obtaining good performance on large systems is difficult
  - Some DOE applications ran at 1% efficiency on 10,000 cores
  - They will have to deal with a million threads soon, and with a billion at the exascale
- Don’t think of supercomputing as a means of solving current problems faster, but as a means of solving problems we earlier thought we could not solve
- Development of software tools to make use of the machines easier

Architectural Trends

- Massive parallelism
  - 10K-processor systems will be commonplace
  - The high end already has over 500K processors
- Single-chip multiprocessing
  - All processors will be multicore
- Heterogeneous multicore processors
  - Cell, used in the PS3
  - GPGPU
- 80-core processor from Intel
- Processors with hundreds of cores are already commercially available
- Distributed environments, such as the Grid
- But it is hard to get good performance on these systems

Accelerating Applications with GPUs

- Over a hundred cores per GPU
- Hide memory latency with thousands of threads
- Can accelerate a traditional computer to a teraflop
- GPU cluster at FSU
- Quantum Monte Carlo applications
- Algorithms: linear algebra, FFT, compression, etc.

Small Discrete Fourier Transforms (DFT) on GPUs

- GPUs are effective for large DFTs, but not for small DFTs
- However, they can be effective for a large number of small DFTs
  - Useful for AFQMC
- We use the asymptotically slow matrix-multiplication-based DFT for very small sizes
- We combine it with mixed-radix for larger sizes
- We use asynchronous memory transfer to deal with host-device data transfer overhead

Comparison of DFT Performance

Comparison of 512 simultaneous DFTs without host-device data transfer. All times are in µs/DFT.

2-D DFTs

| Method | N = 4 | N = 8 | N = 12 | N = 16 | N = 20 | N = 24 |
|---|---|---|---|---|---|---|
| Mixed Radix | 0.043 | 0.214 | 0.550 | 1.14 | 1.96 | 3.19 |
| Matrix Multiplication | 0.038 | 0.206 | 0.716 | 1.95 | 3.09 | 6.71 |
| Cooley Tukey | 0.115 | 0.353 | | 1.96 | | |
| CUFFT | 18.3 | 23.5 | 45.8 | 35.4 | 47.6 | 46.4 |
| FFTW | 2.87 | 3.41 | 4.78 | 6.81 | 11.2 | 17.1 |

3-D DFTs

| Method | N = 4 | N = 8 | N = 12 | N = 16 | N = 20 | N = 24 |
|---|---|---|---|---|---|---|
| Mixed Radix | 0.621 | 4.04 | 12.4 | 34.8 | 71.9 | 138 |
| Matrix Multiplication | 0.578 | 3.43 | 12.7 | 42.9 | 77.5 | 172 |
| Cooley Tukey | 1.06 | 6.01 | | 58.2 | | |
| CUFFT | 50.1 | 84.7 | 327 | 836 | 566 | 678 |
| FFTW | 3.57 | 12.2 | 38.3 | 92.6 | 230 | 513 |

Petascale Quantum Monte Carlo

- Originally a DOE-funded project involving collaboration between ORNL, UIUC, Cornell, UTK, CWM, and NCSU
- Now funded by ORAU/ORNL
- Scale Quantum Monte Carlo applications to petascale (one million gigaflops) machines
- Load balancing, fault tolerance, other optimizations

Load Balancing

- In current implementations, such as QWalk and QMCPack, cores send excess walkers to cores with fewer walkers
- In the new algorithm (alias method), cores may send more than their excess, and receive walkers even if they originally had an excess
  - Load can be balanced with each core receiving from at most one other core
  - Also optimal in the maximum number of walkers received
  - Total number of walkers sent may be twice the optimal

Performance Comparison

- Comparisons with QWalk
- Figure panels: mean number of walkers migrated; maximum number of receives

Process-Node Affinity

- Node allocation is not necessarily ideal for minimizing communication
- Process-node affinity can, therefore, be important
- Figure: allocated nodes for a 12,000-core run on Jaguar

Load Balancing with Affinity

- Renumbering the nodes improves load balancing and AllGather time
- Figure panels: basic load balancing; load balancing after renumbering

Results on Jaguar

Potential Research Topics

- High Performance Computing on Multicore Processors
  - Algorithms, applications, and libraries on GPUs
- Applications on Massively Parallel Processors
  - Quantum Monte Carlo applications
  - Load balancing and communication optimizations
- Simulation-based policy decisions
  - Combine scientific computing with models of social interactions to help make policy decisions
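
A note on the matrix-multiplication-based DFT mentioned under Small Discrete Fourier Transforms (DFT) on GPUs: the idea can be illustrated with a minimal CPU-side sketch in plain Python. This is not the GPU implementation from the talk. The function names `dft_matrix` and `batched_dft` are hypothetical, and the sketch only shows how one small DFT matrix, built once, can be applied to many signals of the same length. It costs O(N^2) work per transform, which is asymptotically slower than an FFT but has very regular structure for tiny N.

```python
import cmath


def dft_matrix(n):
    """Return the n x n DFT matrix W, with W[j][k] = exp(-2*pi*i*j*k/n)."""
    return [[cmath.exp(-2j * cmath.pi * j * k / n) for k in range(n)]
            for j in range(n)]


def batched_dft(signals):
    """Transform every signal in the batch with one shared DFT matrix.

    All signals are assumed to have the same length n. Each transform is a
    plain matrix-vector product, so the cost is O(n^2) per signal.
    """
    n = len(signals[0])
    w = dft_matrix(n)  # built once and reused for the whole batch
    return [[sum(w[j][k] * x[k] for k in range(n)) for j in range(n)]
            for x in signals]


# Two length-4 signals: a unit impulse and a constant signal.
batch = [[1, 0, 0, 0], [1, 1, 1, 1]]
spectra = batched_dft(batch)
```

In this sketch the impulse transforms to a flat spectrum and the constant signal concentrates in the zero-frequency bin, up to floating-point rounding. On a GPU, the same structure would map naturally to applying one small matrix to a large batch of vectors, which is where processing many small DFTs at once can pay off.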