Statistics 846.3(02) Statistics 349.3(02) Lecture Notes

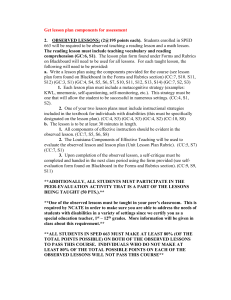


Brief Review

Probability and Statistics

Probability distributions

Continuous distributions

Defn (density function)

Let x denote a continuous random variable. Then f(x) is called the density function of x if

1) $f(x) \ge 0$

2) $\int_{-\infty}^{\infty} f(x)\,dx = 1$

3) $\int_a^b f(x)\,dx = P[a \le x \le b]$

Defn (Joint density function)

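Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables. Then

$$f(\mathbf{x}) = f(x_1, x_2, x_3, \dots, x_n)$$

is called the joint density function of $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ if

1) $f(\mathbf{x}) \ge 0$

2) $\int f(\mathbf{x})\,d\mathbf{x} = 1$

3) $\int_R f(\mathbf{x})\,d\mathbf{x} = P[\mathbf{x} \in R]$

Note:

$$\int f(\mathbf{x})\,d\mathbf{x} = \int_{-\infty}^{\infty}\cdots\int_{-\infty}^{\infty} f(x_1, x_2, \dots, x_n)\,dx_1\,dx_2\cdots dx_n$$

$$\int_R f(\mathbf{x})\,d\mathbf{x} = \int\cdots\int_R f(x_1, x_2, \dots, x_n)\,dx_1\,dx_2\cdots dx_n$$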

Defn (Marginal density function)

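The marginal density of $\mathbf{x}_1 = (x_1, x_2, x_3, \dots, x_p)$ $(p < n)$ is defined by:

$$f_1(\mathbf{x}_1) = \int f(\mathbf{x})\,d\mathbf{x}_2 = \int f(\mathbf{x}_1, \mathbf{x}_2)\,d\mathbf{x}_2$$

where $\mathbf{x}_2 = (x_{p+1}, x_{p+2}, x_{p+3}, \dots, x_n)$.

The marginal density of $\mathbf{x}_2 = (x_{p+1}, x_{p+2}, x_{p+3}, \dots, x_n)$ is defined by:

$$f_2(\mathbf{x}_2) = \int f(\mathbf{x})\,d\mathbf{x}_1 = \int f(\mathbf{x}_1, \mathbf{x}_2)\,d\mathbf{x}_1$$

where $\mathbf{x}_1 = (x_1, x_2, x_3, \dots, x_p)$.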

Defn (Conditional density function)

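The conditional density of $\mathbf{x}_1$ given $\mathbf{x}_2$ (as defined above, $p < n$) is defined by:

$$f_{1|2}(\mathbf{x}_1 \mid \mathbf{x}_2) = \frac{f(\mathbf{x})}{f_2(\mathbf{x}_2)} = \frac{f(\mathbf{x}_1, \mathbf{x}_2)}{f_2(\mathbf{x}_2)}$$

The conditional density of $\mathbf{x}_2$ given $\mathbf{x}_1$ is defined by:

$$f_{2|1}(\mathbf{x}_2 \mid \mathbf{x}_1) = \frac{f(\mathbf{x})}{f_1(\mathbf{x}_1)} = \frac{f(\mathbf{x}_1, \mathbf{x}_2)}{f_1(\mathbf{x}_1)}$$

Marginal densities describe how the subvector $\mathbf{x}_i$ behaves ignoring $\mathbf{x}_j$.

Conditional densities describe how the subvector $\mathbf{x}_i$ behaves when the subvector $\mathbf{x}_j$ is held fixed.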

Defn (Independence)

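The two subvectors ($\mathbf{x}_1$ and $\mathbf{x}_2$) are called independent if:

$$f(\mathbf{x}) = f(\mathbf{x}_1, \mathbf{x}_2) = f_1(\mathbf{x}_1)\,f_2(\mathbf{x}_2) = \text{product of marginals}$$

or, equivalently, if the conditional density of $\mathbf{x}_i$ given $\mathbf{x}_j$ equals the marginal density of $\mathbf{x}_i$:

$$f_{i|j}(\mathbf{x}_i \mid \mathbf{x}_j) = f_i(\mathbf{x}_i)$$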

Example (p-variate Normal)

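The random vector $\mathbf{x}$ $(p \times 1)$ is said to have the p-variate Normal distribution with mean vector $\boldsymbol\mu$ $(p \times 1)$ and covariance matrix $\Sigma$ $(p \times p)$ (written $\mathbf{x} \sim N_p(\boldsymbol\mu, \Sigma)$) if:

$$f(\mathbf{x}) = \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}}\exp\left[-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)\right]$$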

Example (bivariate Normal)

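The random vector $\mathbf{x} = \begin{pmatrix}x_1\\ x_2\end{pmatrix}$ is said to have the bivariate Normal distribution with mean vector $\boldsymbol\mu = \begin{pmatrix}\mu_1\\ \mu_2\end{pmatrix}$ and covariance matrix

$$\Sigma = \begin{pmatrix}\sigma_{11} & \sigma_{12}\\ \sigma_{12} & \sigma_{22}\end{pmatrix} = \begin{pmatrix}\sigma_1^2 & \rho\sigma_1\sigma_2\\ \rho\sigma_1\sigma_2 & \sigma_2^2\end{pmatrix}$$

if

$$f(\mathbf{x}) = \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}}\exp\left[-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)\right]$$

with $p = 2$, i.e.

$$f(x_1, x_2) = \frac{1}{2\pi|\Sigma|^{1/2}}\exp\left[-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)\right] = \frac{1}{2\pi\left(\sigma_{11}\sigma_{22} - \sigma_{12}^2\right)^{1/2}}\exp\left[-\frac12 Q(x_1, x_2)\right]$$

where

$$Q(x_1, x_2) = (\mathbf{x}-\boldsymbol\mu)'\begin{pmatrix}\sigma_{11} & \sigma_{12}\\ \sigma_{12} & \sigma_{22}\end{pmatrix}^{-1}(\mathbf{x}-\boldsymbol\mu) = \frac{\sigma_{22}(x_1-\mu_1)^2 - 2\sigma_{12}(x_1-\mu_1)(x_2-\mu_2) + \sigma_{11}(x_2-\mu_2)^2}{\sigma_{11}\sigma_{22} - \sigma_{12}^2}$$

Equivalently,

$$f(x_1, x_2) = \frac{1}{2\pi\sigma_1\sigma_2\sqrt{1-\rho^2}}\exp\left[-\frac12 Q(x_1, x_2)\right]$$

with

$$Q(x_1, x_2) = \frac{1}{1-\rho^2}\left[\left(\frac{x_1-\mu_1}{\sigma_1}\right)^2 - 2\rho\left(\frac{x_1-\mu_1}{\sigma_1}\right)\left(\frac{x_2-\mu_2}{\sigma_2}\right) + \left(\frac{x_2-\mu_2}{\sigma_2}\right)^2\right]$$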
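As a numerical sanity check of the two equivalent forms of the bivariate density above, the following minimal Python sketch evaluates $f(x_1, x_2)$ once from $\sigma_{11}, \sigma_{22}, \sigma_{12}$ and once from $\sigma_1, \sigma_2, \rho$. The function names and the parameter values ($\sigma_1 = 2$, $\sigma_2 = 3$, $\rho = 0.5$, so $\sigma_{11} = 4$, $\sigma_{22} = 9$, $\sigma_{12} = 3$) are illustrative assumptions, not part of the notes.

```python
import math

def bvn_pdf_cov(x1, x2, mu1, mu2, s11, s22, s12):
    """Bivariate normal density from sigma_11, sigma_22, sigma_12."""
    det = s11 * s22 - s12 ** 2                                        # |Sigma|
    d1, d2 = x1 - mu1, x2 - mu2
    q = (s22 * d1 ** 2 - 2 * s12 * d1 * d2 + s11 * d2 ** 2) / det     # Q(x1, x2)
    return math.exp(-0.5 * q) / (2 * math.pi * math.sqrt(det))

def bvn_pdf_rho(x1, x2, mu1, mu2, sd1, sd2, rho):
    """The same density written with sigma_1, sigma_2 and rho."""
    z1, z2 = (x1 - mu1) / sd1, (x2 - mu2) / sd2
    q = (z1 ** 2 - 2 * rho * z1 * z2 + z2 ** 2) / (1 - rho ** 2)
    return math.exp(-0.5 * q) / (2 * math.pi * sd1 * sd2 * math.sqrt(1 - rho ** 2))

# sigma_1 = 2, sigma_2 = 3, rho = 0.5  ->  sigma_11 = 4, sigma_22 = 9, sigma_12 = 3
a = bvn_pdf_cov(1.0, -0.5, 0.0, 1.0, 4.0, 9.0, 3.0)
b = bvn_pdf_rho(1.0, -0.5, 0.0, 1.0, 2.0, 3.0, 0.5)
```

The two values agree up to floating-point rounding, since $\sigma_{11}\sigma_{22} - \sigma_{12}^2 = \sigma_1^2\sigma_2^2(1-\rho^2)$.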

Theorem (Transformations)

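Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables with joint density function $f(x_1, x_2, x_3, \dots, x_n) = f(\mathbf{x})$. Let

$$\begin{aligned} y_1 &= \phi_1(x_1, x_2, x_3, \dots, x_n)\\ y_2 &= \phi_2(x_1, x_2, x_3, \dots, x_n)\\ &\ \ \vdots\\ y_n &= \phi_n(x_1, x_2, x_3, \dots, x_n)\end{aligned}$$

define a one-to-one transformation of $\mathbf{x}$ into $\mathbf{y}$.

Then the joint density of $\mathbf{y}$ is $g(\mathbf{y})$ given by:

$$g(\mathbf{y}) = f(\mathbf{x})\,|J|$$

where $\mathbf{x}$ is expressed as a function of $\mathbf{y}$ and

$$J = \frac{\partial(\mathbf{x})}{\partial(\mathbf{y})} = \frac{\partial(x_1, x_2, x_3, \dots, x_n)}{\partial(y_1, y_2, y_3, \dots, y_n)} = \det\begin{pmatrix}\frac{\partial x_1}{\partial y_1} & \frac{\partial x_2}{\partial y_1} & \cdots & \frac{\partial x_n}{\partial y_1}\\ \frac{\partial x_1}{\partial y_2} & \frac{\partial x_2}{\partial y_2} & \cdots & \frac{\partial x_n}{\partial y_2}\\ \vdots & \vdots & & \vdots\\ \frac{\partial x_1}{\partial y_n} & \frac{\partial x_2}{\partial y_n} & \cdots & \frac{\partial x_n}{\partial y_n}\end{pmatrix}$$

= the Jacobian of the transformation.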

Corollary (Linear Transformations)

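Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables with joint density function $f(x_1, x_2, x_3, \dots, x_n) = f(\mathbf{x})$. Let

$$\begin{aligned} y_1 &= a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \dots + a_{1n}x_n\\ y_2 &= a_{21}x_1 + a_{22}x_2 + a_{23}x_3 + \dots + a_{2n}x_n\\ &\ \ \vdots\\ y_n &= a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \dots + a_{nn}x_n\end{aligned}$$

(i.e. $\mathbf{y} = A\mathbf{x}$) define a one-to-one transformation of $\mathbf{x}$ into $\mathbf{y}$.

Then the joint density of $\mathbf{y}$ is $g(\mathbf{y})$ given by:

$$g(\mathbf{y}) = f(\mathbf{x})\frac{1}{|\det(A)|} = f(A^{-1}\mathbf{y})\frac{1}{|\det(A)|}$$

where

$$A = \begin{pmatrix}a_{11} & a_{12} & \cdots & a_{1n}\\ a_{21} & a_{22} & \cdots & a_{2n}\\ \vdots & \vdots & & \vdots\\ a_{n1} & a_{n2} & \cdots & a_{nn}\end{pmatrix}$$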

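Corollary (Linear Transformations for Normal Random variables)

Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables having an n-variate Normal distribution with mean vector $\boldsymbol\mu$ and covariance matrix $\Sigma$, i.e.

$$\mathbf{x} \sim N_n(\boldsymbol\mu, \Sigma)$$

Let

$$\begin{aligned} y_1 &= a_{11}x_1 + a_{12}x_2 + a_{13}x_3 + \dots + a_{1n}x_n\\ y_2 &= a_{21}x_1 + a_{22}x_2 + a_{23}x_3 + \dots + a_{2n}x_n\\ &\ \ \vdots\\ y_n &= a_{n1}x_1 + a_{n2}x_2 + a_{n3}x_3 + \dots + a_{nn}x_n\end{aligned}$$

(i.e. $\mathbf{y} = A\mathbf{x}$) define a one-to-one transformation of $\mathbf{x}$ into $\mathbf{y}$.

Then

$$\mathbf{y} = (y_1, y_2, y_3, \dots, y_n) \sim N_n(A\boldsymbol\mu, A\Sigma A')$$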
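The two corollaries can be checked against each other: for a normal $\mathbf{x}$ and $\mathbf{y} = A\mathbf{x}$, the change-of-variables density $f(A^{-1}\mathbf{y})/|\det A|$ should coincide with the $N(A\boldsymbol\mu, A\Sigma A')$ density. The sketch below does this in plain Python for $n = 2$; the values of $\boldsymbol\mu$, $\Sigma$, $A$ and the evaluation point $\mathbf{y}$ are arbitrary assumptions chosen for illustration.

```python
import math

def mvn2_pdf(x, mu, S):
    """Bivariate normal density N2(mu, S) at the point x."""
    det = S[0][0] * S[1][1] - S[0][1] * S[1][0]
    d = (x[0] - mu[0], x[1] - mu[1])
    # d' S^{-1} d using the explicit 2x2 inverse (1/det) [[S11, -S01], [-S10, S00]]
    q = (S[1][1] * d[0] ** 2 - (S[0][1] + S[1][0]) * d[0] * d[1] + S[0][0] * d[1] ** 2) / det
    return math.exp(-0.5 * q) / (2 * math.pi * math.sqrt(det))

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(r) for r in zip(*A)]

mu = [1.0, 2.0]
S = [[4.0, 3.0], [3.0, 9.0]]
A = [[1.0, 1.0], [0.0, 2.0]]
detA = A[0][0] * A[1][1] - A[0][1] * A[1][0]
Ainv = [[A[1][1] / detA, -A[0][1] / detA], [-A[1][0] / detA, A[0][0] / detA]]

y = [2.5, 3.0]
x = [Ainv[0][0] * y[0] + Ainv[0][1] * y[1], Ainv[1][0] * y[0] + Ainv[1][1] * y[1]]

# Change of variables: g(y) = f(A^{-1} y) / |det A|
g_jacobian = mvn2_pdf(x, mu, S) / abs(detA)

# Normal corollary: y ~ N2(A mu, A S A')
A_mu = [A[0][0] * mu[0] + A[0][1] * mu[1], A[1][0] * mu[0] + A[1][1] * mu[1]]
ASAt = matmul(matmul(A, S), transpose(A))
g_normal = mvn2_pdf(y, A_mu, ASAt)
```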

Defn (Expectation)

Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables with joint density function

$$f(\mathbf{x}) = f(x_1, x_2, x_3, \dots, x_n).$$

Let $U = h(\mathbf{x}) = h(x_1, x_2, x_3, \dots, x_n)$.

Then

$$E[U] = E[h(\mathbf{x})] = \int h(\mathbf{x})\,f(\mathbf{x})\,d\mathbf{x}$$

Defn (Conditional Expectation)

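Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n) = (\mathbf{x}_1, \mathbf{x}_2)$ denote a vector of continuous random variables with joint density function

$$f(\mathbf{x}) = f(x_1, x_2, x_3, \dots, x_n) = f(\mathbf{x}_1, \mathbf{x}_2).$$

Let $U = h(\mathbf{x}_1) = h(x_1, x_2, x_3, \dots, x_p)$.

Then the conditional expectation of U given $\mathbf{x}_2$ is

$$E[U \mid \mathbf{x}_2] = E[h(\mathbf{x}_1) \mid \mathbf{x}_2] = \int h(\mathbf{x}_1)\,f_{1|2}(\mathbf{x}_1 \mid \mathbf{x}_2)\,d\mathbf{x}_1$$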

Defn (Variance)

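Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables with joint density function

$$f(\mathbf{x}) = f(x_1, x_2, x_3, \dots, x_n).$$

Let $U = h(\mathbf{x}) = h(x_1, x_2, x_3, \dots, x_n)$.

Then

$$\operatorname{Var}[U] = \sigma_U^2 = E\left[(U - E[U])^2\right] = E\left[\left(h(\mathbf{x}) - E[h(\mathbf{x})]\right)^2\right]$$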

Defn (Conditional Variance)

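Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n) = (\mathbf{x}_1, \mathbf{x}_2)$ denote a vector of continuous random variables with joint density function

$$f(\mathbf{x}) = f(x_1, x_2, x_3, \dots, x_n) = f(\mathbf{x}_1, \mathbf{x}_2).$$

Let $U = h(\mathbf{x}_1) = h(x_1, x_2, x_3, \dots, x_p)$.

Then the conditional variance of U given $\mathbf{x}_2$ is

$$\operatorname{Var}[U \mid \mathbf{x}_2] = E\left[\left(h(\mathbf{x}_1) - E[h(\mathbf{x}_1) \mid \mathbf{x}_2]\right)^2 \,\middle|\, \mathbf{x}_2\right]$$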

Defn (Covariance, Correlation)

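Let $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ denote a vector of continuous random variables with joint density function

$$f(\mathbf{x}) = f(x_1, x_2, x_3, \dots, x_n).$$

Let $U = h(\mathbf{x}) = h(x_1, x_2, x_3, \dots, x_n)$ and $V = g(\mathbf{x}) = g(x_1, x_2, x_3, \dots, x_n)$.

Then the covariance of U and V is

$$\operatorname{Cov}[U, V] = E\left[(U - E[U])(V - E[V])\right] = E\left[\left(h(\mathbf{x}) - E[h(\mathbf{x})]\right)\left(g(\mathbf{x}) - E[g(\mathbf{x})]\right)\right]$$

and

$$\rho_{UV} = \frac{\operatorname{Cov}[U, V]}{\sqrt{\operatorname{Var}[U]\operatorname{Var}[V]}} = \text{correlation}$$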

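Properties

• Expectation
• Variance
• Covariance
• Correlation

1. $E[a_1x_1 + a_2x_2 + a_3x_3 + \dots + a_nx_n] = a_1E[x_1] + a_2E[x_2] + a_3E[x_3] + \dots + a_nE[x_n]$, or $E[\mathbf{a}'\mathbf{x}] = \mathbf{a}'E[\mathbf{x}]$

2. $E[UV] = E[h(\mathbf{x}_1)g(\mathbf{x}_2)] = E[U]E[V] = E[h(\mathbf{x}_1)]E[g(\mathbf{x}_2)]$ if $\mathbf{x}_1$ and $\mathbf{x}_2$ are independent

3. $\operatorname{Var}[a_1x_1 + a_2x_2 + a_3x_3 + \dots + a_nx_n] = \sum_{i=1}^n a_i^2\operatorname{Var}[x_i] + 2\sum_{i<j} a_ia_j\operatorname{Cov}[x_i, x_j]$, or $\operatorname{Var}[\mathbf{a}'\mathbf{x}] = \mathbf{a}'\Sigma\mathbf{a}$, where

$$\Sigma = \begin{pmatrix}\operatorname{Var}(x_1) & \operatorname{Cov}(x_1, x_2) & \cdots & \operatorname{Cov}(x_1, x_n)\\ \operatorname{Cov}(x_2, x_1) & \operatorname{Var}(x_2) & \cdots & \operatorname{Cov}(x_2, x_n)\\ \vdots & \vdots & \ddots & \vdots\\ \operatorname{Cov}(x_n, x_1) & \operatorname{Cov}(x_n, x_2) & \cdots & \operatorname{Var}(x_n)\end{pmatrix}$$

4. $\operatorname{Cov}[a_1x_1 + a_2x_2 + \dots + a_nx_n,\ b_1x_1 + b_2x_2 + \dots + b_nx_n] = \sum_{i=1}^n a_ib_i\operatorname{Var}[x_i] + \sum_{i \ne j} a_ib_j\operatorname{Cov}[x_i, x_j]$, or $\operatorname{Cov}[\mathbf{a}'\mathbf{x}, \mathbf{b}'\mathbf{x}] = \mathbf{a}'\Sigma\mathbf{b}$

5. $E[U] = E_{\mathbf{x}_2}\left[E[U \mid \mathbf{x}_2]\right]$

6. $\operatorname{Var}[U] = E_{\mathbf{x}_2}\left[\operatorname{Var}[U \mid \mathbf{x}_2]\right] + \operatorname{Var}_{\mathbf{x}_2}\left[E[U \mid \mathbf{x}_2]\right]$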
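Properties 3 and 4 can be illustrated with a small sketch that computes $\mathbf{a}'\Sigma\mathbf{a}$ and $\mathbf{a}'\Sigma\mathbf{b}$ directly and compares the former with the expanded double sum. The covariance matrix and the coefficient vectors below are hypothetical values chosen only for illustration.

```python
def quad_form(a, S, b):
    """a' S b for vectors a, b and a square matrix S (plain lists)."""
    n = len(a)
    return sum(a[i] * S[i][j] * b[j] for i in range(n) for j in range(n))

def var_linear_expanded(a, S):
    """Var[a'x] = sum_i a_i^2 Var[x_i] + 2 sum_{i<j} a_i a_j Cov[x_i, x_j]."""
    n = len(a)
    diag = sum(a[i] ** 2 * S[i][i] for i in range(n))
    off = sum(2 * a[i] * a[j] * S[i][j] for i in range(n) for j in range(i + 1, n))
    return diag + off

S = [[4, 2, 1], [2, 9, 3], [1, 3, 16]]   # a hypothetical covariance matrix
a = [1, -1, 2]
b = [0, 1, 1]
var_a = quad_form(a, S, a)               # Var[a'x] = a' S a
cov_ab = quad_form(a, S, b)              # Cov[a'x, b'x] = a' S b
```

Here $\mathbf{a}'\Sigma\mathbf{a} = 65$ by both routes and $\mathbf{a}'\Sigma\mathbf{b} = 29$.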

Multivariate distributions

The Normal distribution

1. The Normal distribution, with parameters μ and σ (or σ²)

Comment: If μ = 0 and σ = 1 the distribution is called the standard normal distribution.

[Figure: Normal distribution with μ = 50 and σ = 15, and Normal distribution with μ = 70 and σ = 20; horizontal axis from 0 to 120, vertical axis from 0 to 0.03.]

The probability density of the normal distribution:

$$f(x) = \frac{1}{\sqrt{2\pi}\,\sigma}e^{-\frac{(x-\mu)^2}{2\sigma^2}}, \quad -\infty < x < \infty$$

If a random variable, X, has a normal distribution with mean μ and variance σ² then we will write:

$$X \sim N(\mu, \sigma^2)$$
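A minimal sketch of the normal density, assuming the two parameter pairs plotted above ($\mu = 50, \sigma = 15$ and $\mu = 70, \sigma = 20$): the peak height is $1/(\sigma\sqrt{2\pi})$, and a midpoint-rule sum over a wide interval should be very close to 1. The step size and the interval are illustrative choices.

```python
import math

def normal_pdf(x, mu, sigma):
    """N(mu, sigma^2) density."""
    return math.exp(-((x - mu) ** 2) / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma)

# Peak heights of the two curves in the figure
peak_a = normal_pdf(50, 50, 15)   # 1 / (15 sqrt(2 pi)), roughly 0.0266
peak_b = normal_pdf(70, 70, 20)   # 1 / (20 sqrt(2 pi)), roughly 0.0199

# Midpoint-rule approximation of the total area for mu = 50, sigma = 15 over [-100, 200]
h = 0.01
area = sum(normal_pdf(-100 + (i + 0.5) * h, 50, 15) * h for i in range(int(300 / h)))
```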

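The multivariate Normal distribution

Let

$$\mathbf{x} = \begin{pmatrix}x_1\\ \vdots\\ x_p\end{pmatrix} = \text{a random vector}$$

Let

$$\boldsymbol\mu = \begin{pmatrix}\mu_1\\ \vdots\\ \mu_p\end{pmatrix} = \text{a vector of constants (the mean vector)}$$

Let

$$\Sigma = \begin{pmatrix}\sigma_{11} & \cdots & \sigma_{1p}\\ \vdots & \ddots & \vdots\\ \sigma_{1p} & \cdots & \sigma_{pp}\end{pmatrix} = \text{a } p \times p \text{ positive definite matrix}$$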

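Definition

The matrix A is positive semi-definite if

$$\mathbf{x}'A\mathbf{x} \ge 0 \quad\text{for all } \mathbf{x}$$

Further, the matrix A is positive definite if, in addition,

$$\mathbf{x}'A\mathbf{x} = 0 \quad\text{only if } \mathbf{x} = \mathbf{0}$$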

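Suppose that the joint density of the random vector $\mathbf{x}$ is:

$$f(\mathbf{x}) = f(x_1, \dots, x_p) = \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}}e^{-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)}$$

The random vector $\mathbf{x} = [x_1, x_2, \dots, x_p]'$ is then said to have a p-variate normal distribution with mean vector $\boldsymbol\mu$ and covariance matrix $\Sigma$.

We will write:

$$\mathbf{x} \sim N_p(\boldsymbol\mu, \Sigma)$$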

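Example: the Bivariate Normal distribution

$$f(x_1, x_2) = \frac{1}{2\pi|\Sigma|^{1/2}}e^{-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)}$$

with

$$\boldsymbol\mu = \begin{pmatrix}\mu_1\\ \mu_2\end{pmatrix}\quad\text{and}\quad\Sigma = \begin{pmatrix}\sigma_{11} & \sigma_{12}\\ \sigma_{12} & \sigma_{22}\end{pmatrix} = \begin{pmatrix}\sigma_1^2 & \rho\sigma_1\sigma_2\\ \rho\sigma_1\sigma_2 & \sigma_2^2\end{pmatrix}$$

Now

$$|\Sigma| = \sigma_{11}\sigma_{22} - \sigma_{12}^2 = \sigma_1^2\sigma_2^2\left(1 - \rho^2\right)$$

and

$$(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu) = \left(x_1-\mu_1,\ x_2-\mu_2\right)\begin{pmatrix}\sigma_{11} & \sigma_{12}\\ \sigma_{12} & \sigma_{22}\end{pmatrix}^{-1}\begin{pmatrix}x_1-\mu_1\\ x_2-\mu_2\end{pmatrix}$$

$$= \frac{1}{\sigma_{11}\sigma_{22} - \sigma_{12}^2}\left(x_1-\mu_1,\ x_2-\mu_2\right)\begin{pmatrix}\sigma_{22} & -\sigma_{12}\\ -\sigma_{12} & \sigma_{11}\end{pmatrix}\begin{pmatrix}x_1-\mu_1\\ x_2-\mu_2\end{pmatrix}$$

$$= \frac{\sigma_{22}(x_1-\mu_1)^2 - 2\sigma_{12}(x_1-\mu_1)(x_2-\mu_2) + \sigma_{11}(x_2-\mu_2)^2}{\sigma_{11}\sigma_{22} - \sigma_{12}^2}$$

$$= \frac{\sigma_2^2(x_1-\mu_1)^2 - 2\rho\sigma_1\sigma_2(x_1-\mu_1)(x_2-\mu_2) + \sigma_1^2(x_2-\mu_2)^2}{\sigma_1^2\sigma_2^2\left(1-\rho^2\right)}$$

$$= \frac{1}{1-\rho^2}\left[\left(\frac{x_1-\mu_1}{\sigma_1}\right)^2 - 2\rho\left(\frac{x_1-\mu_1}{\sigma_1}\right)\left(\frac{x_2-\mu_2}{\sigma_2}\right) + \left(\frac{x_2-\mu_2}{\sigma_2}\right)^2\right]$$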

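Hence

$$f(x_1, x_2) = \frac{1}{2\pi|\Sigma|^{1/2}}e^{-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)} = \frac{1}{2\pi\sigma_1\sigma_2\sqrt{1-\rho^2}}e^{-\frac12 Q(x_1, x_2)}$$

where

$$Q(x_1, x_2) = \frac{1}{1-\rho^2}\left[\left(\frac{x_1-\mu_1}{\sigma_1}\right)^2 - 2\rho\left(\frac{x_1-\mu_1}{\sigma_1}\right)\left(\frac{x_2-\mu_2}{\sigma_2}\right) + \left(\frac{x_2-\mu_2}{\sigma_2}\right)^2\right]$$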

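Note:

$$f(x_1, x_2) = \frac{1}{2\pi\sigma_1\sigma_2\sqrt{1-\rho^2}}e^{-\frac12 Q(x_1, x_2)}$$

is constant when

$$Q(x_1, x_2) = \frac{1}{1-\rho^2}\left[\left(\frac{x_1-\mu_1}{\sigma_1}\right)^2 - 2\rho\left(\frac{x_1-\mu_1}{\sigma_1}\right)\left(\frac{x_2-\mu_2}{\sigma_2}\right) + \left(\frac{x_2-\mu_2}{\sigma_2}\right)^2\right]$$

is constant.

This is true when $(x_1, x_2)$ lies on an ellipse centered at $(\mu_1, \mu_2)$.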
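To see the ellipse claim concretely, one can generate points $\mathbf{x} = \boldsymbol\mu + L\mathbf{u}$ with $\Sigma = LL'$ (a Cholesky factor) and $\mathbf{u}$ on the circle $\mathbf{u}'\mathbf{u} = c$; for every such point $Q(x_1, x_2) = \mathbf{u}'\mathbf{u} = c$, so the density is constant along the curve. The sketch below uses hypothetical values $\mu_1 = 1$, $\mu_2 = -2$, $\sigma_1 = 2$, $\sigma_2 = 1$, $\rho = -0.6$ and $c = 2$.

```python
import math

def q_form(x1, x2, mu1, mu2, sd1, sd2, rho):
    """Q(x1, x2) for the bivariate normal, written with sigma_1, sigma_2 and rho."""
    z1, z2 = (x1 - mu1) / sd1, (x2 - mu2) / sd2
    return (z1 ** 2 - 2 * rho * z1 * z2 + z2 ** 2) / (1 - rho ** 2)

def contour_points(c, mu1, mu2, sd1, sd2, rho, k=12):
    """k points on the curve Q(x1, x2) = c, built as x = mu + L u with Sigma = L L'."""
    # Lower-triangular Cholesky factor of Sigma = [[sd1^2, rho sd1 sd2], [rho sd1 sd2, sd2^2]]
    l11, l21, l22 = sd1, rho * sd2, sd2 * math.sqrt(1 - rho ** 2)
    pts = []
    for i in range(k):
        t = 2 * math.pi * i / k
        u1, u2 = math.sqrt(c) * math.cos(t), math.sqrt(c) * math.sin(t)   # u'u = c
        pts.append((mu1 + l11 * u1, mu2 + l21 * u1 + l22 * u2))
    return pts

pts = contour_points(2.0, 1.0, -2.0, 2.0, 1.0, -0.6)
q_values = [q_form(x1, x2, 1.0, -2.0, 2.0, 1.0, -0.6) for x1, x2 in pts]
```

Every entry of `q_values` equals $c = 2$ up to rounding, i.e. all generated points lie on one contour ellipse.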

[Figure: Surface plots of the bivariate Normal distribution]

[Figure: Contour plots of the bivariate Normal distribution]

[Figure: Scatter plots of data from the bivariate Normal distribution]

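Trivariate Normal distribution: contour map

The contours

$$(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu) = \text{const}$$

are ellipsoids centered at the mean vector

$$\boldsymbol\mu = \begin{pmatrix}\mu_1\\ \mu_2\\ \mu_3\end{pmatrix}$$

[Figures: Trivariate Normal distribution contour surfaces on axes x1, x2, x3, shown from three viewpoints.]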

Example

In the following study, data were collected for a sample of n = 183 females on the variables

• Age,
• Height (Ht),
• Weight (Wt),
• Birth control pill use (Bpl: 1 = no pill, 2 = pill)

and the following blood chemistry measurements

• Cholesterol (Chl),
• Albumin (Alb),
• Calcium (Ca) and
• Uric Acid (UA).

The data are tabulated below:

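| Age | Ht | Wt | Bpl | Chl | Alb | Ca | UA |
|---|---|---|---|---|---|---|---|
| 22 | 67 | 144 | 1 | 200 | 43 | 98 | 54 |
| 25 | 62 | 128 | 1 | 243 | 41 | 104 | 33 |
| 25 | 68 | 150 | 2 | 50 | 38 | 96 | 30 |
| 19 | 64 | 125 | 1 | 158 | 41 | 99 | 47 |
| 19 | 67 | 130 | 2 | 255 | 45 | 105 | 83 |
| 20 | 64 | 118 | 1 | 210 | 39 | 95 | 40 |
| 20 | 64 | 118 | 1 | 210 | 39 | 95 | 40 |
| 20 | 65 | 119 | 2 | 192 | 38 | 93 | 50 |
| 21 | 60 | 107 | 1 | 246 | 42 | 101 | 52 |
| 21 | 65 | 135 | 2 | 245 | 34 | 106 | 48 |
| 21 | 63 | 100 | 1 | 208 | 38 | 98 | 54 |
| 21 | 64 | 120 | 2 | 260 | 47 | 106 | 38 |
| 21 | 67 | 134 | 1 | 204 | 40 | 108 | 34 |
| 21 | 67 | 145 | 2 | 192 | 39 | 95 | 49 |
| 21 | 63 | 138 | 1 | 280 | 41 | 102 | 41 |
| 21 | 64 | 113 | 2 | 230 | 39 | 99 | 38 |
| 21 | 63 | 160 | 1 | 215 | 39 | 96 | 39 |
| 21 | 64 | 115 | 2 | 225 | 44 | 105 | 44 |
| 21 | 68 | 125 | 1 | 165 | 48 | 105 | 28 |
| 21 | 62 | 106 | 2 | 200 | 38 | 95 | 40 |
| 21 | 68 | 150 | 1 | 220 | 47 | 102 | 75 |
| 21 | 64 | 130 | 2 | 255 | 34 | 102 | 40 |
| 22 | 62 | 135 | 1 | 263 | 43 | 98 | 47 |
| 22 | 62 | 110 | 2 | 173 | 42 | 97 | 37 |
| 22 | 57 | 105 | 1 | 170 | 46 | 98 | 45 |
| 22 | 64 | 120 | 2 | 290 | 37 | 98 | 59 |
| 22 | 64 | 115 | 1 | 263 | 42 | 102 | 47 |
| 22 | 59 | 94 | 2 | 220 | 47 | 105 | 46 |
| 22 | 67 | 125 | 1 | 200 | 43 | 100 | 44 |
| 22 | 62 | 97 | 2 | 192 | 38 | 95 | 43 |
| 22 | 58 | 100 | 1 | 247 | 42 | 104 | 52 |
| 22 | 66 | 130 | 2 | 175 | 44 | 106 | 58 |
| 22 | 60 | 100 | 1 | 155 | 41 | 96 | 45 |
| 22 | 60 | 100 | 1 | 155 | 41 | 96 | 45 |
| 22 | 65 | 135 | 2 | 215 | 40 | 93 | 43 |
| 22 | 60 | 95 | 1 | 200 | 47 | 99 | 34 |
| 22 | 67 | 124 | 2 | 247 | 44 | 102 | 45 |
| 23 | 63 | 125 | 1 | 220 | 32 | 92 | 42 |
| 23 | 64 | 105 | 2 | 207 | 42 | 100 | 40 |
| 23 | 63 | 125 | 1 | 266 | 42 | 103 | 47 |
| 23 | 63 | 120 | 2 | 240 | 43 | 101 | 39 |
| 24 | 68 | 125 | 1 | 195 | 49 | 106 | 52 |
| 24 | 64 | 130 | 2 | 250 | 39 | 103 | 46 |
| 24 | 64 | 130 | 2 | 250 | 39 | 103 | 46 |
| 24 | 65 | 130 | 1 | 225 | 50 | 108 | 39 |
| 24 | 65 | 148 | 2 | 200 | 37 | 104 | 49 |
| 24 | 64 | 135 | 1 | 180 | 37 | 96 | 49 |
| 24 | 71 | 156 | 2 | 240 | 42 | 102 | 51 |
| 25 | 62 | 107 | 1 | 330 | 48 | 101 | 53 |
| 25 | 67 | 175 | 2 | 175 | 39 | 93 | 51 |
| 25 | 66 | 112 | 1 | 205 | 46 | 101 | 33 |
| 25 | 63 | 120 | 2 | 235 | 44 | 103 | 40 |
| 54 | 67 | 127 | 2 | 260 | 44 | 106 | 57 |
| 25 | 67 | 135 | 1 | 295 | 46 | 106 | 47 |
| 25 | 67 | 141 | 2 | 230 | 38 | 101 | 52 |
| 26 | 66 | 135 | 1 | 240 | 48 | 103 | 51 |
| 26 | 64 | 118 | 2 | 238 | 40 | 99 | 46 |
| 26 | 65 | 125 | 1 | 198 | 44 | 96 | 43 |
| 26 | 65 | 120 | 2 | 196 | 38 | 95 | 43 |
| 36 | 65 | 121 | 2 | 270 | 43 | 98 | 35 |
| 53 | 65 | 140 | 2 | 220 | 40 | 107 | 46 |
| 27 | 64 | 120 | 1 | 172 | 43 | 98 | 60 |
| 27 | 64 | 180 | 2 | 317 | 37 | 98 | 84 |
| 27 | 69 | 137 | 1 | 195 | 46 | 101 | 42 |
| 27 | 64 | 125 | 2 | 185 | 36 | 94 | 54 |
| 27 | 63 | 125 | 1 | 168 | 42 | 97 | 41 |
| 27 | 64 | 124 | 2 | 200 | 40 | 96 | 52 |
| 27 | 60 | 140 | 1 | 250 | 36 | 98 | 68 |
| 27 | 65 | 155 | 2 | 280 | 42 | 103 | 52 |
| 28 | 65 | 108 | 1 | 260 | 48 | 106 | 51 |
| 28 | 62 | 110 | 2 | 250 | 44 | 105 | 38 |
| 28 | 65 | 120 | 1 | 175 | 48 | 100 | 47 |
| 28 | 66 | 113 | 2 | 305 | 41 | 93 | 24 |
| 28 | 62 | 135 | 1 | 200 | 43 | 97 | 37 |
| 28 | 65 | 160 | 2 | 235 | 42 | 101 | 41 |
| 29 | 61 | 142 | 1 | 177 | 39 | 99 | 46 |
| 29 | 61 | 115 | 2 | 235 | 45 | 98 | 47 |
| 29 | 68 | 155 | 1 | 226 | 38 | 94 | 43 |
| 29 | 65 | 118 | 2 | 230 | 44 | 99 | 44 |
| 30 | 66 | 143 | 1 | 198 | 45 | 107 | 65 |
| 30 | 63 | 110 | 2 | 295 | 45 | 98 | 46 |
| 30 | 61 | 99 | 1 | 230 | 43 | 99 | 39 |
| 30 | 63 | 132 | 2 | 200 | 37 | 96 | 34 |
| 30 | 62 | 125 | 1 | 230 | 46 | 104 | 48 |
| 30 | 63 | 110 | 2 | 262 | 33 | 99 | 41 |
| 30 | 64 | 135 | 1 | 174 | 40 | 95 | 35 |
| 30 | 66 | 112 | 2 | 250 | 44 | 100 | 35 |
| 30 | 64 | 160 | 1 | 217 | 35 | 95 | 31 |
| 31 | 65 | 125 | 1 | 250 | 43 | 98 | 39 |
| 31 | 66 | 120 | 2 | 237 | 34 | 91 | 49 |
| 31 | 65 | 115 | 1 | 270 | 41 | 111 | 64 |
| 31 | 63 | 110 | 2 | 280 | 44 | 99 | 49 |
| 31 | 66 | 123 | 1 | 238 | 37 | 96 | 33 |
| 31 | 67 | 136 | 2 | 218 | 38 | 95 | 42 |
| 32 | 67 | 132 | 1 | 185 | 39 | 103 | 37 |
| 32 | 68 | 203 | 2 | 235 | 38 | 99 | 37 |
| 32 | 62 | 155 | 1 | 262 | 37 | 99 | 43 |
| 32 | 65 | 126 | 2 | 160 | 41 | 97 | 40 |
| 32 | 63 | 125 | 1 | 189 | 40 | 94 | 40 |
| 32 | 71 | 170 | 2 | 205 | 37 | 90 | 60 |
| 32 | 62 | 120 | 1 | 260 | 43 | 107 | 38 |
| 32 | 62 | 145 | 2 | 240 | 45 | 108 | 42 |
| 32 | 66 | 140 | 1 | 197 | 44 | 106 | 58 |
| 32 | 68 | 133 | 2 | 180 | 32 | 95 | 40 |
| 54 | 67 | 140 | 2 | 245 | 39 | 104 | 56 |
| 33 | 64 | 115 | 1 | 205 | 47 | 100 | 54 |
| 33 | 60 | 118 | 2 | 260 | 38 | 99 | 38 |
| 33 | 67 | 137 | 1 | 243 | 41 | 106 | 55 |
| 33 | 68 | 130 | 2 | 195 | 40 | 95 | 58 |
| 33 | 65 | 130 | 1 | 203 | 44 | 101 | 48 |
| 33 | 69 | 138 | 2 | 222 | 40 | 104 | 42 |
| 34 | 62 | 112 | 1 | 197 | 37 | 93 | 44 |
| 34 | 63 | 125 | 2 | 245 | 38 | 95 | 41 |
| 35 | 62 | 115 | 1 | 180 | 40 | 91 | 59 |
| 35 | 67 | 125 | 2 | 223 | 40 | 100 | 37 |
| 35 | 66 | 138 | 1 | 254 | 39 | 107 | 41 |
| 35 | 66 | 140 | 2 | 245 | 39 | 105 | 56 |
| 36 | 62 | 135 | 1 | 247 | 34 | 90 | 44 |
| 36 | 67 | 120 | 2 | 175 | 46 | 103 | 39 |
| 36 | 66 | 112 | 1 | 215 | 43 | 104 | 42 |
| 50 | 66 | 140 | 2 | 390 | 46 | 97 | 55 |
| 54 | 66 | 158 | 1 | 305 | 42 | 103 | 48 |
| 37 | 67 | 125 | 2 | 200 | 45 | 99 | 66 |
| 37 | 65 | 116 | 1 | 270 | 42 | 100 | 48 |
| 37 | 63 | 129 | 2 | 230 | 36 | 91 | 22 |
| 38 | 64 | 165 | 1 | 255 | 44 | 102 | 62 |
| 38 | 65 | 151 | 2 | 275 | 38 | 94 | 46 |
| 39 | 64 | 135 | 1 | 210 | 40 | 95 | 46 |
| 39 | 64 | 108 | 2 | 198 | 44 | 90 | 38 |
| 39 | 63 | 195 | 1 | 260 | 40 | 108 | 42 |
| 39 | 69 | 132 | 2 | 180 | 39 | 94 | 30 |
| 39 | 62 | 100 | 1 | 210 | 45 | 91 | 27 |
| 39 | 62 | 110 | 2 | 235 | 41 | 99 | 35 |
| 40 | 63 | 110 | 1 | 196 | 39 | 97 | 42 |
| 40 | 64 | 151 | 2 | 305 | 39 | 99 | 48 |
| 40 | 65 | 145 | 1 | 170 | 45 | 100 | 43 |
| 40 | 66 | 140 | 2 | 276 | 46 | 100 | 55 |
| 40 | 65 | 140 | 1 | 272 | 41 | 91 | 44 |
| 40 | 65 | 137 | 2 | 315 | 37 | 96 | 99 |
| 40 | 67 | 130 | 1 | 300 | 40 | 106 | 52 |
| 40 | 62 | 117 | 2 | 290 | 42 | 99 | 42 |
| 41 | 62 | 116 | 1 | 320 | 44 | 111 | 61 |
| 41 | 68 | 215 | 2 | 255 | 43 | 105 | 45 |
| 41 | 64 | 125 | 1 | 306 | 45 | 98 | 62 |
| 41 | 69 | 170 | 2 | 324 | 40 | 99 | 55 |
| 42 | 60 | 105 | 1 | 240 | 41 | 101 | 51 |
| 42 | 63 | 129 | 2 | 210 | 40 | 100 | 46 |
| 43 | 66 | 167 | 1 | 210 | 40 | 100 | 52 |
| 43 | 68 | 145 | 2 | 250 | 36 | 98 | 42 |
| 43 | 66 | 138 | 1 | 335 | 44 | 105 | 58 |
| 43 | 66 | 132 | 2 | 230 | 42 | 98 | 48 |
| 43 | 64 | 125 | 1 | 285 | 45 | 105 | 50 |
| 43 | 62 | 113 | 2 | 200 | 40 | 93 | 36 |
| 43 | 64 | 126 | 1 | 280 | 45 | 106 | 38 |
| 43 | 65 | 148 | 2 | 276 | 41 | 105 | 50 |
| 55 | 64 | 124 | 1 | 275 | 40 | 98 | 53 |
| 55 | 64 | 165 | 2 | 298 | 36 | 100 | 63 |
| 44 | 62 | 118 | 1 | 253 | 43 | 94 | 44 |
| 44 | 63 | 133 | 2 | 242 | 47 | 104 | 49 |
| 45 | 67 | 180 | 1 | 160 | 38 | 97 | 59 |
| 45 | 65 | 140 | 2 | 263 | 45 | 107 | 52 |
| 46 | 67 | 145 | 2 | 320 | 40 | 101 | 37 |
| 46 | 63 | 138 | 1 | 257 | 40 | 90 | 61 |
| 46 | 62 | 118 | 2 | 190 | 38 | 95 | 43 |
| 46 | 62 | 103 | 1 | 230 | 43 | 102 | 33 |
| 46 | 65 | 190 | 2 | 265 | 41 | 108 | 85 |
| 47 | 67 | 135 | 1 | 297 | 42 | 100 | 45 |
| 47 | 67 | 143 | 2 | 255 | 41 | 100 | 40 |
| 47 | 61 | 132 | 1 | 257 | 39 | 96 | 38 |
| 47 | 59 | 94 | 2 | 257 | 41 | 103 | 53 |
| 48 | 62 | 120 | 1 | 300 | 39 | 94 | 51 |
| 48 | 66 | 143 | 2 | 225 | 40 | 100 | 62 |
| 48 | 67 | 143 | 1 | 216 | 40 | 96 | 47 |
| 48 | 65 | 134 | 2 | 248 | 42 | 102 | 42 |
| 48 | 65 | 164 | 1 | 306 | 44 | 100 | 78 |
| 48 | 66 | 120 | 2 | 235 | 36 | 97 | 35 |
| 48 | 60 | 125 | 1 | 195 | 41 | 95 | 53 |
| 48 | 64 | 138 | 2 | 338 | 37 | 100 | 58 |
| 49 | 64 | 126 | 1 | 255 | 41 | 102 | 48 |
| 49 | 69 | 158 | 2 | 217 | 36 | 106 | 65 |
| 50 | 69 | 135 | 1 | 295 | 43 | 105 | 63 |
| 52 | 62 | 107 | 2 | 265 | 46 | 104 | 64 |
| 54 | 60 | 170 | 2 | 220 | 35 | 88 | 63 |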

[Figure: 3D scatterplot of Wt, Ht and Age]

[Figure: 3D scatterplot of Alb, Chl and Bpl]

Marginal and Conditional distributions

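Theorem: (Woodbury)

$$(A + CBD)^{-1} = A^{-1} - A^{-1}C\left(B^{-1} + DA^{-1}C\right)^{-1}DA^{-1}$$

Proof:

$$(A + CBD)\left[A^{-1} - A^{-1}C\left(B^{-1} + DA^{-1}C\right)^{-1}DA^{-1}\right]$$

$$= I + CBDA^{-1} - C\left(B^{-1} + DA^{-1}C\right)^{-1}DA^{-1} - CBDA^{-1}C\left(B^{-1} + DA^{-1}C\right)^{-1}DA^{-1}$$

$$= I + CBDA^{-1} - CB\left(B^{-1} + DA^{-1}C\right)\left(B^{-1} + DA^{-1}C\right)^{-1}DA^{-1}$$

$$= I + CBDA^{-1} - CBDA^{-1} = I$$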

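Example:

$$\left(I_n + bJ_n\right)^{-1} = I_n - \frac{b}{1 + nb}J_n$$

where $J_n = \mathbf{1}\mathbf{1}'$ is the $n \times n$ matrix of ones.

Solution:

Use

$$(A + CBD)^{-1} = A^{-1} - A^{-1}C\left(B^{-1} + DA^{-1}C\right)^{-1}DA^{-1}$$

with $A = I_n$, $C = \mathbf{1}$, $D = \mathbf{1}'$, $B = b$ $(1 \times 1)$. Hence

$$\left(I_n + \mathbf{1}b\mathbf{1}'\right)^{-1} = I_n - I_n\mathbf{1}\left(b^{-1} + \mathbf{1}'I_n\mathbf{1}\right)^{-1}\mathbf{1}'I_n = I_n - \frac{1}{b^{-1} + n}\mathbf{1}\mathbf{1}' = I_n - \frac{b}{1 + nb}J_n$$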
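The closed form in the example can be verified exactly with rational arithmetic: the sketch below multiplies $I_n + bJ_n$ by $I_n - \frac{b}{1+nb}J_n$ and checks that the product is the identity. The choices $n = 4$ and $b = 1/2$ are arbitrary, and $1 + nb \ne 0$ is assumed.

```python
from fractions import Fraction

def identity(n):
    return [[Fraction(int(i == j)) for j in range(n)] for i in range(n)]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def i_plus_bj(n, b):
    """I_n + b J_n, where J_n is the n x n matrix of ones."""
    return [[Fraction(int(i == j)) + b for j in range(n)] for i in range(n)]

def i_plus_bj_inverse(n, b):
    """Closed form obtained from the Woodbury identity: I_n - b / (1 + n b) J_n."""
    c = b / (1 + n * b)
    return [[Fraction(int(i == j)) - c for j in range(n)] for i in range(n)]

n, b = 4, Fraction(1, 2)
product = matmul(i_plus_bj(n, b), i_plus_bj_inverse(n, b))
```

The identity holds because $J_n^2 = nJ_n$, so the coefficient of $J_n$ in the product is $b - \frac{b}{1+nb}(1 + nb) = 0$.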

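Theorem: (Inverse of a partitioned symmetric matrix)

Let

$$A = \begin{pmatrix}A_{11} & A_{12}\\ A_{12}' & A_{22}\end{pmatrix}\quad\text{and}\quad B = A^{-1} = \begin{pmatrix}B_{11} & B_{12}\\ B_{12}' & B_{22}\end{pmatrix}$$

Then

$$B_{11} = \left(A_{11} - A_{12}A_{22}^{-1}A_{12}'\right)^{-1} = A_{11}^{-1} + A_{11}^{-1}A_{12}\left(A_{22} - A_{12}'A_{11}^{-1}A_{12}\right)^{-1}A_{12}'A_{11}^{-1}$$

$$B_{22} = \left(A_{22} - A_{12}'A_{11}^{-1}A_{12}\right)^{-1} = A_{22}^{-1} + A_{22}^{-1}A_{12}'\left(A_{11} - A_{12}A_{22}^{-1}A_{12}'\right)^{-1}A_{12}A_{22}^{-1}$$

$$B_{12} = -\left(A_{11} - A_{12}A_{22}^{-1}A_{12}'\right)^{-1}A_{12}A_{22}^{-1} = -A_{11}^{-1}A_{12}\left(A_{22} - A_{12}'A_{11}^{-1}A_{12}\right)^{-1}$$

Proof:

Let A and $B = A^{-1}$ be partitioned as above, with $A_{11}$ of order $q \times q$ and $A_{22}$ of order $(p-q) \times (p-q)$. Then

$$I_p = AB = \begin{pmatrix}A_{11}B_{11} + A_{12}B_{12}' & A_{11}B_{12} + A_{12}B_{22}\\ A_{12}'B_{11} + A_{22}B_{12}' & A_{12}'B_{12} + A_{22}B_{22}\end{pmatrix} = \begin{pmatrix}I_q & 0\\ 0 & I_{p-q}\end{pmatrix}$$

or

$$A_{11}B_{11} + A_{12}B_{12}' = I_q, \qquad A_{12}'B_{11} + A_{22}B_{12}' = 0$$

$$A_{11}B_{12} + A_{12}B_{22} = 0, \qquad A_{12}'B_{12} + A_{22}B_{22} = I_{p-q}$$

hence

$$B_{11} = A_{11}^{-1} - A_{11}^{-1}A_{12}B_{12}', \qquad B_{12}' = -A_{22}^{-1}A_{12}'B_{11}$$

$$B_{12} = -A_{11}^{-1}A_{12}B_{22}, \qquad B_{22} = A_{22}^{-1} - A_{22}^{-1}A_{12}'B_{12}$$

and

$$B_{11} = A_{11}^{-1} + A_{11}^{-1}A_{12}A_{22}^{-1}A_{12}'B_{11}$$

or

$$\left(I - A_{11}^{-1}A_{12}A_{22}^{-1}A_{12}'\right)B_{11} = A_{11}^{-1}$$

and

$$\left(A_{11} - A_{12}A_{22}^{-1}A_{12}'\right)B_{11} = I$$

hence

$$B_{11} = \left(A_{11} - A_{12}A_{22}^{-1}A_{12}'\right)^{-1}$$

Similarly

$$B_{22} = \left(A_{22} - A_{12}'A_{11}^{-1}A_{12}\right)^{-1}$$

and

$$B_{12}' = -A_{22}^{-1}A_{12}'\left(A_{11} - A_{12}A_{22}^{-1}A_{12}'\right)^{-1}, \qquad B_{12} = -A_{11}^{-1}A_{12}\left(A_{22} - A_{12}'A_{11}^{-1}A_{12}\right)^{-1}$$

The second expressions for $B_{11}$ and $B_{22}$ then follow from the Woodbury identity.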

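Theorem: (Determinant of a partitioned symmetric matrix)

Let

$$A = \begin{pmatrix}A_{11} & A_{12}\\ A_{12}' & A_{22}\end{pmatrix}$$

Then

$$|A| = |A_{11}|\,\left|A_{22} - A_{12}'A_{11}^{-1}A_{12}\right| = |A_{22}|\,\left|A_{11} - A_{12}A_{22}^{-1}A_{12}'\right|$$

Proof:

Note

$$A = \begin{pmatrix}A_{11} & A_{12}\\ A_{12}' & A_{22}\end{pmatrix} = \begin{pmatrix}A_{11} & 0\\ A_{12}' & I\end{pmatrix}\begin{pmatrix}I & A_{11}^{-1}A_{12}\\ 0 & A_{22} - A_{12}'A_{11}^{-1}A_{12}\end{pmatrix}$$

and

$$\begin{vmatrix}B & C\\ 0 & D\end{vmatrix} = \begin{vmatrix}B & 0\\ C^* & D\end{vmatrix} = |B|\,|D|$$

Hence $|A| = |A_{11}|\,\left|A_{22} - A_{12}'A_{11}^{-1}A_{12}\right|$; the second expression follows in the same way with the roles of $A_{11}$ and $A_{22}$ interchanged.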
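Both partition results can be checked with exact Fraction arithmetic. The sketch below partitions the $4 \times 4$ covariance matrix used in the numerical example of these notes ($q = 2$), inverts it by Gauss-Jordan elimination, and compares the blocks and the determinant with the formulas above. The helper functions are written for this illustration only.

```python
from fractions import Fraction as F

def inverse(M):
    """Gauss-Jordan inverse with exact Fraction arithmetic (assumes M is nonsingular)."""
    n = len(M)
    aug = [[F(x) for x in row] + [F(int(i == j)) for j in range(n)] for i, row in enumerate(M)]
    for c in range(n):
        piv = next(r for r in range(c, n) if aug[r][c] != 0)    # first nonzero pivot
        aug[c], aug[piv] = aug[piv], aug[c]
        p = aug[c][c]
        aug[c] = [x / p for x in aug[c]]
        for r in range(n):
            if r != c and aug[r][c] != 0:
                factor = aug[r][c]
                aug[r] = [a - factor * b for a, b in zip(aug[r], aug[c])]
    return [row[n:] for row in aug]

def det(M):
    """Determinant by Gaussian elimination with Fractions."""
    A = [[F(x) for x in row] for row in M]
    n, d = len(A), F(1)
    for c in range(n):
        piv = next((r for r in range(c, n) if A[r][c] != 0), None)
        if piv is None:
            return F(0)
        if piv != c:
            A[c], A[piv] = A[piv], A[c]
            d = -d
        d *= A[c][c]
        for r in range(c + 1, n):
            factor = A[r][c] / A[c][c]
            A[r] = [a - factor * b for a, b in zip(A[r], A[c])]
    return d

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def block(M, rows, cols):
    return [[M[i][j] for j in cols] for i in rows]

def sub(A, B):
    return [[a - b for a, b in zip(ra, rb)] for ra, rb in zip(A, B)]

S = [[4, 2, 4, 2], [2, 17, 6, 5], [4, 6, 14, 6], [2, 5, 6, 7]]
i1, i2 = [0, 1], [2, 3]
A11, A12, A22 = block(S, i1, i1), block(S, i1, i2), block(S, i2, i2)
A21 = block(S, i2, i1)                                        # = A12'

schur22 = sub(A22, matmul(matmul(A21, inverse(A11)), A12))    # A22 - A12' A11^{-1} A12
schur11 = sub(A11, matmul(matmul(A12, inverse(A22)), A21))    # A11 - A12 A22^{-1} A12'

B = inverse(S)
B11_formula = inverse(schur11)
B22_formula = inverse(schur22)
B12_formula = [[-x for x in row] for row in matmul(matmul(inverse(A11), A12), inverse(schur22))]
```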

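Theorem: (Marginal distributions for the Multivariate Normal distribution)

Let

$$\mathbf{x} = \begin{pmatrix}\mathbf{x}_1\\ \mathbf{x}_2\end{pmatrix}\begin{matrix}q\\ p-q\end{matrix}$$

have a p-variate Normal distribution with mean vector

$$\boldsymbol\mu = \begin{pmatrix}\boldsymbol\mu_1\\ \boldsymbol\mu_2\end{pmatrix}\begin{matrix}q\\ p-q\end{matrix}$$

and covariance matrix

$$\Sigma = \begin{pmatrix}\Sigma_{11} & \Sigma_{12}\\ \Sigma_{12}' & \Sigma_{22}\end{pmatrix}$$

Then the marginal distribution of $\mathbf{x}_i$ is the $q_i$-variate Normal distribution ($q_1 = q$, $q_2 = p - q$) with mean vector $\boldsymbol\mu_i$ and covariance matrix $\Sigma_{ii}$.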

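Theorem: (Conditional distributions for the Multivariate Normal distribution)

Let

$$\mathbf{x} = \begin{pmatrix}\mathbf{x}_1\\ \mathbf{x}_2\end{pmatrix}\begin{matrix}q\\ p-q\end{matrix}$$

have a p-variate Normal distribution with mean vector

$$\boldsymbol\mu = \begin{pmatrix}\boldsymbol\mu_1\\ \boldsymbol\mu_2\end{pmatrix}\begin{matrix}q\\ p-q\end{matrix}$$

and covariance matrix

$$\Sigma = \begin{pmatrix}\Sigma_{11} & \Sigma_{12}\\ \Sigma_{12}' & \Sigma_{22}\end{pmatrix}$$

Then the conditional distribution of $\mathbf{x}_i$ given $\mathbf{x}_j$ is the $q_i$-variate Normal distribution with mean vector

$$\boldsymbol\mu_{i\cdot j} = \boldsymbol\mu_i + \Sigma_{ij}\Sigma_{jj}^{-1}\left(\mathbf{x}_j - \boldsymbol\mu_j\right)$$

and covariance matrix

$$\Sigma_{ii\cdot j} = \Sigma_{ii} - \Sigma_{ij}\Sigma_{jj}^{-1}\Sigma_{ij}'$$

where $\Sigma_{21} = \Sigma_{12}'$.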

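Proof: (of the previous two theorems)

The joint density of $\mathbf{x} = \begin{pmatrix}\mathbf{x}_1\\ \mathbf{x}_2\end{pmatrix}$ is

$$f(\mathbf{x}) = f(\mathbf{x}_1, \mathbf{x}_2) = \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}}e^{-\frac12(\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu)} = \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}}e^{-\frac12 Q(\mathbf{x}_1, \mathbf{x}_2)}$$

where

$$\boldsymbol\mu = \begin{pmatrix}\boldsymbol\mu_1\\ \boldsymbol\mu_2\end{pmatrix}\begin{matrix}q\\ p-q\end{matrix}, \qquad \Sigma = \begin{pmatrix}\Sigma_{11} & \Sigma_{12}\\ \Sigma_{12}' & \Sigma_{22}\end{pmatrix}$$

and

$$Q(\mathbf{x}_1, \mathbf{x}_2) = (\mathbf{x}-\boldsymbol\mu)'\Sigma^{-1}(\mathbf{x}-\boldsymbol\mu) = \left((\mathbf{x}_1-\boldsymbol\mu_1)',\ (\mathbf{x}_2-\boldsymbol\mu_2)'\right)\begin{pmatrix}\Sigma^{11} & \Sigma^{12}\\ \Sigma^{21} & \Sigma^{22}\end{pmatrix}\begin{pmatrix}\mathbf{x}_1-\boldsymbol\mu_1\\ \mathbf{x}_2-\boldsymbol\mu_2\end{pmatrix}$$

$$= (\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma^{11}(\mathbf{x}_1-\boldsymbol\mu_1) + 2(\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma^{12}(\mathbf{x}_2-\boldsymbol\mu_2) + (\mathbf{x}_2-\boldsymbol\mu_2)'\Sigma^{22}(\mathbf{x}_2-\boldsymbol\mu_2)$$

where

$$\Sigma^{-1} = \begin{pmatrix}\Sigma^{11} & \Sigma^{12}\\ \Sigma^{21} & \Sigma^{22}\end{pmatrix}$$

and, by the theorem on the inverse of a partitioned symmetric matrix,

$$\Sigma^{22} = \left(\Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}\right)^{-1}$$

$$\Sigma^{11} = \Sigma_{11}^{-1} + \Sigma_{11}^{-1}\Sigma_{12}\left(\Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}\right)^{-1}\Sigma_{12}'\Sigma_{11}^{-1}$$

$$\Sigma^{12} = -\Sigma_{11}^{-1}\Sigma_{12}\left(\Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}\right)^{-1} = \left(\Sigma^{21}\right)'$$

Also

$$|\Sigma| = |\Sigma_{11}|\,\left|\Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}\right|$$

and, writing $A = \Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}$,

$$Q(\mathbf{x}_1, \mathbf{x}_2) = (\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1) + (\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}\Sigma_{12}A^{-1}\Sigma_{12}'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)$$
$$- 2(\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}\Sigma_{12}A^{-1}(\mathbf{x}_2-\boldsymbol\mu_2) + (\mathbf{x}_2-\boldsymbol\mu_2)'A^{-1}(\mathbf{x}_2-\boldsymbol\mu_2)$$

Hence

$$Q(\mathbf{x}_1, \mathbf{x}_2) = (\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1) + \left[\mathbf{x}_2-\boldsymbol\mu_2-\Sigma_{12}'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)\right]'A^{-1}\left[\mathbf{x}_2-\boldsymbol\mu_2-\Sigma_{12}'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)\right]$$

$$= Q_1(\mathbf{x}_1) + Q_2(\mathbf{x}_1, \mathbf{x}_2)$$

where

$$Q_1(\mathbf{x}_1) = (\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)$$

and

$$Q_2(\mathbf{x}_1, \mathbf{x}_2) = (\mathbf{x}_2-\mathbf{b})'A^{-1}(\mathbf{x}_2-\mathbf{b})$$

where

$$\mathbf{b} = \boldsymbol\mu_2 + \Sigma_{12}'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1) \quad\text{and}\quad A = \Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}$$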

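now

$$f(\mathbf{x}) = f(\mathbf{x}_1, \mathbf{x}_2) = \frac{1}{(2\pi)^{p/2}|\Sigma|^{1/2}}e^{-\frac12 Q(\mathbf{x}_1, \mathbf{x}_2)}$$

$$= \frac{1}{(2\pi)^{p/2}|\Sigma_{11}|^{1/2}\left|\Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}\right|^{1/2}}e^{-\frac12\left[Q_1(\mathbf{x}_1) + Q_2(\mathbf{x}_1, \mathbf{x}_2)\right]}$$

$$= \frac{1}{(2\pi)^{q/2}|\Sigma_{11}|^{1/2}}e^{-\frac12(\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)}\cdot\frac{1}{(2\pi)^{(p-q)/2}|A|^{1/2}}e^{-\frac12(\mathbf{x}_2-\mathbf{b})'A^{-1}(\mathbf{x}_2-\mathbf{b})}$$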

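The marginal distribution of $\mathbf{x}_1$ is

$$f_1(\mathbf{x}_1) = \int f(\mathbf{x}_1, \mathbf{x}_2)\,d\mathbf{x}_2$$

$$= \frac{1}{(2\pi)^{q/2}|\Sigma_{11}|^{1/2}}e^{-\frac12(\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)}\int\frac{1}{(2\pi)^{(p-q)/2}|A|^{1/2}}e^{-\frac12(\mathbf{x}_2-\mathbf{b})'A^{-1}(\mathbf{x}_2-\mathbf{b})}\,d\mathbf{x}_2$$

$$= \frac{1}{(2\pi)^{q/2}|\Sigma_{11}|^{1/2}}e^{-\frac12(\mathbf{x}_1-\boldsymbol\mu_1)'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1)}$$

since the last integrand is a $(p-q)$-variate Normal density and integrates to 1. This is the $N_q(\boldsymbol\mu_1, \Sigma_{11})$ density.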

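The conditional distribution of $\mathbf{x}_2$ given $\mathbf{x}_1$ is:

$$f_{2|1}(\mathbf{x}_2 \mid \mathbf{x}_1) = \frac{f(\mathbf{x}_1, \mathbf{x}_2)}{f_1(\mathbf{x}_1)} = \frac{1}{(2\pi)^{(p-q)/2}|A|^{1/2}}e^{-\frac12(\mathbf{x}_2-\mathbf{b})'A^{-1}(\mathbf{x}_2-\mathbf{b})}$$

where

$$\mathbf{b} = \boldsymbol\mu_2 + \Sigma_{12}'\Sigma_{11}^{-1}(\mathbf{x}_1-\boldsymbol\mu_1) \quad\text{and}\quad A = \Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}$$

i.e. the $N_{p-q}(\mathbf{b}, A)$ density.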

The matrix

$$\Sigma_{2\cdot1} = \Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12}$$

is called the matrix of partial variances and covariances.

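The $(i, j)$th element of the matrix $\Sigma_{2\cdot1}$,

$$\sigma_{ij\cdot1,2,\dots,q}$$

is called the partial covariance (variance if $i = j$) between $x_i$ and $x_j$ given $x_1, \dots, x_q$.

$$\rho_{ij\cdot1,2,\dots,q} = \frac{\sigma_{ij\cdot1,2,\dots,q}}{\sqrt{\sigma_{ii\cdot1,2,\dots,q}\,\sigma_{jj\cdot1,2,\dots,q}}}$$

is called the partial correlation between $x_i$ and $x_j$ given $x_1, \dots, x_q$.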

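The matrix

$$\Sigma_{12}'\Sigma_{11}^{-1}$$

is called the matrix of regression coefficients for predicting $x_{q+1}, x_{q+2}, \dots, x_p$ from $x_1, \dots, x_q$.

The mean vector of $x_{q+1}, x_{q+2}, \dots, x_p$ given $x_1, \dots, x_q$ is:

$$\boldsymbol\mu_{2\cdot1} = \boldsymbol\alpha + \Sigma_{12}'\Sigma_{11}^{-1}\mathbf{x}_1 \quad\text{where}\quad \boldsymbol\alpha = \boldsymbol\mu_2 - \Sigma_{12}'\Sigma_{11}^{-1}\boldsymbol\mu_1$$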

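Example:

Suppose that

$$\mathbf{x} = \begin{pmatrix}x_1\\ x_2\\ x_3\\ x_4\end{pmatrix}$$

is 4-variate normal with

$$\boldsymbol\mu = \begin{pmatrix}10\\ 15\\ 6\\ 14\end{pmatrix} \quad\text{and}\quad \Sigma = \begin{pmatrix}4 & 2 & 4 & 2\\ 2 & 17 & 6 & 5\\ 4 & 6 & 14 & 6\\ 2 & 5 & 6 & 7\end{pmatrix}$$

The marginal distribution of $\mathbf{x}_1 = \begin{pmatrix}x_1\\ x_2\end{pmatrix}$ is bivariate normal with

$$\boldsymbol\mu_1 = \begin{pmatrix}10\\ 15\end{pmatrix} \quad\text{and}\quad \Sigma_{11} = \begin{pmatrix}4 & 2\\ 2 & 17\end{pmatrix}$$

The marginal distribution of $\mathbf{x}_1 = \begin{pmatrix}x_1\\ x_2\\ x_3\end{pmatrix}$ is trivariate normal with

$$\boldsymbol\mu_1 = \begin{pmatrix}10\\ 15\\ 6\end{pmatrix} \quad\text{and}\quad \Sigma_{11} = \begin{pmatrix}4 & 2 & 4\\ 2 & 17 & 6\\ 4 & 6 & 14\end{pmatrix}$$

Find the conditional distribution of $\mathbf{x}_2 = \begin{pmatrix}x_3\\ x_4\end{pmatrix}$ given $\mathbf{x}_1 = \begin{pmatrix}x_1\\ x_2\end{pmatrix} = \begin{pmatrix}15\\ 5\end{pmatrix}$.

Now

$$\boldsymbol\mu_1 = \begin{pmatrix}10\\ 15\end{pmatrix}, \qquad \boldsymbol\mu_2 = \begin{pmatrix}6\\ 14\end{pmatrix}$$

and

$$\Sigma_{11} = \begin{pmatrix}4 & 2\\ 2 & 17\end{pmatrix}, \qquad \Sigma_{22} = \begin{pmatrix}14 & 6\\ 6 & 7\end{pmatrix}, \qquad \Sigma_{12} = \begin{pmatrix}4 & 2\\ 6 & 5\end{pmatrix}$$

$$\Sigma_{2\cdot1} = \Sigma_{22} - \Sigma_{12}'\Sigma_{11}^{-1}\Sigma_{12} = \begin{pmatrix}14 & 6\\ 6 & 7\end{pmatrix} - \begin{pmatrix}4 & 6\\ 2 & 5\end{pmatrix}\begin{pmatrix}4 & 2\\ 2 & 17\end{pmatrix}^{-1}\begin{pmatrix}4 & 2\\ 6 & 5\end{pmatrix} = \begin{pmatrix}9 & 3\\ 3 & 5\end{pmatrix}$$

The matrix of regression coefficients for predicting $x_3, x_4$ from $x_1, x_2$:

$$\Sigma_{12}'\Sigma_{11}^{-1} = \begin{pmatrix}4 & 6\\ 2 & 5\end{pmatrix}\begin{pmatrix}4 & 2\\ 2 & 17\end{pmatrix}^{-1} = \begin{pmatrix}0.875 & 0.250\\ 0.375 & 0.250\end{pmatrix}$$

$$\boldsymbol\mu_2 - \Sigma_{12}'\Sigma_{11}^{-1}\boldsymbol\mu_1 = \begin{pmatrix}6\\ 14\end{pmatrix} - \begin{pmatrix}0.875 & 0.250\\ 0.375 & 0.250\end{pmatrix}\begin{pmatrix}10\\ 15\end{pmatrix} = \begin{pmatrix}-6.5\\ 6.5\end{pmatrix}$$

$$\boldsymbol\mu_{2\cdot1} = \begin{pmatrix}0.875x_1 + 0.250x_2 - 6.5\\ 0.375x_1 + 0.250x_2 + 6.5\end{pmatrix} = \begin{pmatrix}0.875(15) + 0.250(5) - 6.5\\ 0.375(15) + 0.250(5) + 6.5\end{pmatrix} = \begin{pmatrix}7.875\\ 13.375\end{pmatrix}$$

Hence, given $x_1 = 15$ and $x_2 = 5$, $(x_3, x_4)'$ is bivariate normal with mean vector $(7.875, 13.375)'$ and covariance matrix $\Sigma_{2\cdot1}$.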
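The arithmetic of this example can be reproduced exactly with Python's `fractions` module. The sketch below (helper names are illustrative, not from the notes) computes $\Sigma_{12}'\Sigma_{11}^{-1}$, $\Sigma_{2\cdot1}$ and the conditional mean at $(x_1, x_2) = (15, 5)$.

```python
from fractions import Fraction as F

def inv2(M):
    """Inverse of a 2x2 matrix."""
    (a, b), (c, d) = M
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]

def matmul(A, B):
    return [[sum(A[i][k] * B[k][j] for k in range(len(B))) for j in range(len(B[0]))]
            for i in range(len(A))]

def transpose(A):
    return [list(r) for r in zip(*A)]

mu1, mu2 = [F(10), F(15)], [F(6), F(14)]
S11 = [[F(4), F(2)], [F(2), F(17)]]
S12 = [[F(4), F(2)], [F(6), F(5)]]     # rows: x1, x2; columns: x3, x4
S22 = [[F(14), F(6)], [F(6), F(7)]]

B = matmul(transpose(S12), inv2(S11))  # regression coefficients S12' S11^{-1}
BS12 = matmul(B, S12)
S22_1 = [[S22[i][j] - BS12[i][j] for j in range(2)] for i in range(2)]

def cond_mean(x1):
    """Conditional mean of (x3, x4) given (x1, x2) = x1."""
    return [mu2[i] + sum(B[i][k] * (x1[k] - mu1[k]) for k in range(2)) for i in range(2)]

m = cond_mean([F(15), F(5)])           # [63/8, 107/8] = [7.875, 13.375]
```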

The Chi-square distribution


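The Chi-square ($\chi^2$) distribution with $\nu$ d.f.

$$f(x) = \begin{cases}\dfrac{(1/2)^{\nu/2}}{\Gamma(\nu/2)}\,x^{\nu/2-1}e^{-x/2} = \dfrac{1}{2^{\nu/2}\Gamma(\nu/2)}\,x^{\nu/2-1}e^{-x/2} & x \ge 0\\[6pt] 0 & x < 0\end{cases}$$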

[Figure: The χ² density for ν = 4, 5 and 6; horizontal axis from 0 to 16, vertical axis from 0 to 0.2.]
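A minimal sketch of the $\chi^2$ density above, assuming nothing beyond the formula: a midpoint-rule sum over $[0, 100]$ should give total mass close to 1 and mean close to $\nu$ for the three curves in the graph ($\nu = 4, 5, 6$). The interval and step size are illustrative choices.

```python
import math

def chi2_pdf(x, nu):
    """Central chi-square density with nu degrees of freedom."""
    if x < 0:
        return 0.0
    if x == 0:
        # at the origin the density is infinite for nu < 2, equal to 1/2 for nu = 2, and 0 for nu > 2
        return math.inf if nu < 2 else (0.5 if nu == 2 else 0.0)
    return x ** (nu / 2 - 1) * math.exp(-x / 2) / (2 ** (nu / 2) * math.gamma(nu / 2))

def mass_and_mean(nu, upper=100.0, h=1e-3):
    """Midpoint-rule approximations of the integrals of f and of x f over [0, upper]."""
    total = mean = 0.0
    for i in range(int(upper / h)):
        x = (i + 0.5) * h
        fx = chi2_pdf(x, nu)
        total += fx * h
        mean += x * fx * h
    return total, mean

results = {nu: mass_and_mean(nu) for nu in (4, 5, 6)}
```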

Basic Properties of the Chi-Square distribution

1. If z has a Standard Normal distribution then z² has a χ² distribution with 1 degree of freedom.

2. If $z_1, z_2, \dots, z_n$ are independent random variables each having a Standard Normal distribution then

$$U = z_1^2 + z_2^2 + \dots + z_n^2$$

has a χ² distribution with n degrees of freedom.

3. Let X and Y be independent random variables having χ² distributions with $\nu_1$ and $\nu_2$ degrees of freedom respectively; then X + Y has a χ² distribution with $\nu_1 + \nu_2$ degrees of freedom.

4. Let $x_1, x_2, \dots, x_n$ be independent random variables having χ² distributions with $\nu_1, \nu_2, \dots, \nu_n$ degrees of freedom respectively; then $x_1 + x_2 + \dots + x_n$ has a χ² distribution with $\nu_1 + \dots + \nu_n$ degrees of freedom.

5. Suppose X and Y are independent random variables, with X and X + Y having χ² distributions with $\nu_1$ and $\nu$ $(\nu > \nu_1)$ degrees of freedom respectively; then Y has a χ² distribution with $\nu - \nu_1$ degrees of freedom.

The non-central Chi-squared distribution

If $z_1, z_2, \dots, z_n$ are independent random variables each having a Normal distribution with mean $\mu_i$ and variance $\sigma^2 = 1$, then

$$U = z_1^2 + z_2^2 + \dots + z_n^2$$

has a non-central χ² distribution with $\nu = n$ degrees of freedom and non-centrality parameter

$$\lambda = \frac12\sum_{i=1}^n\mu_i^2$$

Mean and Variance of the non-central χ² distribution

If U has a non-central χ² distribution with ν degrees of freedom and non-centrality parameter

$$\lambda = \frac12\sum_{i=1}^n\mu_i^2$$

then

$$E[U] = \nu + 2\lambda = \nu + \sum_{i=1}^n\mu_i^2$$

$$\operatorname{Var}[U] = 2\nu + 8\lambda$$

If U has a central χ² distribution with ν degrees of freedom, then λ is zero, thus

$$E[U] = \nu, \qquad \operatorname{Var}[U] = 2\nu$$
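The mean and variance formulas can be checked without simulation by using the standard representation of the non-central χ² (with $\lambda = \frac12\sum\mu_i^2$) as a Poisson($\lambda$) mixture of central χ² distributions with $\nu + 2J$ degrees of freedom. The sketch below sums that mixture numerically; the truncation at 200 terms and the example $\mu_i$ values are assumptions made for illustration.

```python
import math

def noncentral_chi2_moments(nu, lam, terms=200):
    """Mean and variance of a non-central chi-square with nu d.f. and lam = 0.5 * sum(mu_i^2),
    from the Poisson mixture: U | J ~ central chi-square(nu + 2J), J ~ Poisson(lam)."""
    mean = second = 0.0
    w = math.exp(-lam)                       # P(J = 0)
    for j in range(terms):
        df = nu + 2 * j
        mean += w * df                       # E[chi2_df] = df
        second += w * (2 * df + df ** 2)     # E[chi2_df^2] = Var + mean^2 = 2 df + df^2
        w *= lam / (j + 1)                   # P(J = j + 1) from P(J = j)
    return mean, second - mean ** 2

mus = [1.0, -2.0, 0.5, 0.0, 1.5]             # hypothetical means mu_i
nu = len(mus)
lam = 0.5 * sum(m ** 2 for m in mus)         # lambda = 3.75
mean, var = noncentral_chi2_moments(nu, lam) # approximately 12.5 and 40.0
```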

Distribution of Linear and Quadratic Forms

Suppose

$$\mathbf{y} \sim N(\boldsymbol\mu, \Sigma)$$

Consider the random variable

$$U = \mathbf{y}'A\mathbf{y} = a_{11}y_1^2 + a_{22}y_2^2 + \dots + a_{nn}y_n^2 + 2a_{12}y_1y_2 + \dots + 2a_{n-1,n}y_{n-1}y_n$$

Questions

1. What is the distribution of U? (Many statistics have this form.)

2. When is this distribution simple?

3. When we have two such statistics, when are they independent?

Simplest Case

$$\mathbf{y} \sim N(\mathbf{0}, I)$$

$$U = \mathbf{y}'I\mathbf{y} = y_1^2 + y_2^2 + \dots + y_n^2$$

Then the distribution of U is the central χ² distribution with ν = n degrees of freedom.

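Now consider the distribution of other quadratic forms.

Theorem: Suppose $\mathbf{y} \sim N(\boldsymbol\mu, \sigma^2 I)$; then

$$U = \frac{1}{\sigma^2}\mathbf{y}'I\mathbf{y} = \frac{1}{\sigma^2}\left(y_1^2 + y_2^2 + \dots + y_n^2\right) \sim \chi^2(\nu, \lambda)$$

where $\nu = n$ and

$$\lambda = \frac{1}{2\sigma^2}\sum_{i=1}^n\mu_i^2 = \frac{1}{2\sigma^2}\boldsymbol\mu'\boldsymbol\mu$$

Proof

Note that

$$\mathbf{z} = \frac{1}{\sigma}\mathbf{y} \sim N\left(\frac{1}{\sigma}\boldsymbol\mu, I\right)$$

then

$$U = \mathbf{z}'\mathbf{z} = \frac{1}{\sigma^2}\mathbf{y}'I\mathbf{y} = \frac{1}{\sigma^2}\left(y_1^2 + y_2^2 + \dots + y_n^2\right) \sim \chi^2(n, \lambda)$$

with

$$\lambda = \frac12\left(\frac{1}{\sigma}\boldsymbol\mu\right)'\left(\frac{1}{\sigma}\boldsymbol\mu\right) = \frac{1}{2\sigma^2}\sum_{i=1}^n\mu_i^2$$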

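Theorem: Suppose $\mathbf{y} \sim N(\boldsymbol\mu, \Sigma)$; then

$$U = \mathbf{y}'\Sigma^{-1}\mathbf{y} \sim \chi^2(n, \lambda) \quad\text{with}\quad \lambda = \frac12\boldsymbol\mu'\Sigma^{-1}\boldsymbol\mu$$

Proof

Let $\Sigma = AA'$ with A non-singular. Consider $\mathbf{z} = A^{-1}\mathbf{y}$. Then

$$\mathbf{z} \sim N\left(A^{-1}\boldsymbol\mu,\ A^{-1}\Sigma(A^{-1})'\right) = N\left(A^{-1}\boldsymbol\mu, I\right)$$

and $U = \mathbf{z}'\mathbf{z} \sim \chi^2(n, \lambda)$ with

$$\lambda = \frac12\left(A^{-1}\boldsymbol\mu\right)'\left(A^{-1}\boldsymbol\mu\right) = \frac12\boldsymbol\mu'(A^{-1})'A^{-1}\boldsymbol\mu = \frac12\boldsymbol\mu'(AA')^{-1}\boldsymbol\mu = \frac12\boldsymbol\mu'\Sigma^{-1}\boldsymbol\mu$$

Also

$$U = \mathbf{z}'\mathbf{z} = \left(A^{-1}\mathbf{y}\right)'\left(A^{-1}\mathbf{y}\right) = \mathbf{y}'(A^{-1})'A^{-1}\mathbf{y} = \mathbf{y}'(AA')^{-1}\mathbf{y} = \mathbf{y}'\Sigma^{-1}\mathbf{y}$$

Hence

$$U = \mathbf{y}'\Sigma^{-1}\mathbf{y} \sim \chi^2(n, \lambda) \quad\text{with}\quad \lambda = \frac12\boldsymbol\mu'\Sigma^{-1}\boldsymbol\mu$$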

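Theorem: Suppose $\mathbf{z} \sim N(\mathbf{0}, I)$; then $U = \mathbf{z}'A\mathbf{z}$ has a central χ² distribution with r d.f. if and only if

• A is symmetric idempotent of rank r.

Proof (of the "if" part): Since A is symmetric idempotent of rank r, there exists an orthogonal matrix P such that

$$A = PDP' \quad\text{or}\quad P'AP = D$$

Since A is idempotent, the eigenvalues of A are 0 or 1, and the number of 1's is r, the rank of A. Thus

$$D = \begin{pmatrix}I_r & 0\\ 0 & 0_{n-r}\end{pmatrix} \quad\text{and}\quad P'AP = \begin{pmatrix}I & 0\\ 0 & 0\end{pmatrix}$$

Let $P = (P_1, P_2)$. Then

$$P'AP = \begin{pmatrix}P_1'\\ P_2'\end{pmatrix}A\left(P_1, P_2\right) = \begin{pmatrix}P_1'AP_1 & P_1'AP_2\\ P_2'AP_1 & P_2'AP_2\end{pmatrix} = \begin{pmatrix}I & 0\\ 0 & 0\end{pmatrix}$$

Consider

$$\mathbf{y} = P'\mathbf{z} = \begin{pmatrix}P_1'\mathbf{z}\\ P_2'\mathbf{z}\end{pmatrix} = \begin{pmatrix}\mathbf{y}_1\\ \mathbf{y}_2\end{pmatrix}$$

Now

$$\mathbf{y} \sim N(P'\mathbf{0}, P'IP) = N(\mathbf{0}, P'P) = N(\mathbf{0}, I)$$

thus $\mathbf{y}_1 \sim N_r(\mathbf{0}, I)$ and $U = \mathbf{y}_1'\mathbf{y}_1$ has a χ² distribution with r d.f.

Now, since $\mathbf{z} = P\mathbf{y}$,

$$\mathbf{y}_1'\mathbf{y}_1 = \mathbf{y}'\begin{pmatrix}I & 0\\ 0 & 0\end{pmatrix}\mathbf{y} = \mathbf{y}'P'AP\mathbf{y} = \mathbf{z}'A\mathbf{z}$$

Thus $\mathbf{z}'A\mathbf{z}$ has a χ² distribution with r d.f.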

Theorem (a generalization of the previous theorem)

Suppose $\mathbf{z} \sim N(\boldsymbol\mu, I)$; then $U = \mathbf{z}'A\mathbf{z}$ has a non-central $\chi^2(r, \lambda)$ distribution with non-centrality parameter

$$\lambda = \frac12\boldsymbol\mu'A\boldsymbol\mu$$

if and only if

• A is symmetric idempotent of rank r.

Proof: Similar to the previous theorem.

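Theorem: Suppose $\mathbf{y} \sim N(\boldsymbol\mu, \Sigma)$; then $U = \mathbf{y}'A\mathbf{y}$ has a $\chi^2(r, \lambda)$ distribution with

$$\lambda = \frac12\boldsymbol\mu'A\boldsymbol\mu$$

if and only if the following two conditions are satisfied:

1. $A\Sigma$ is idempotent of rank r.

2. $\Sigma A$ is idempotent of rank r.

Proof: Let Q be such that $\Sigma = QQ'$. Then $\mathbf{z} = Q^{-1}\mathbf{y}$ has a

$$N\left(Q^{-1}\boldsymbol\mu,\ Q^{-1}\Sigma(Q^{-1})'\right) = N\left(Q^{-1}\boldsymbol\mu, I\right)$$

distribution.

Let $B = Q'AQ$, or $A = (Q')^{-1}BQ^{-1}$; then from the previous theorem

$$U = \mathbf{z}'B\mathbf{z} = \mathbf{y}'(Q^{-1})'BQ^{-1}\mathbf{y} = \mathbf{y}'A\mathbf{y}$$

has a $\chi^2(r, \lambda)$ distribution with $\lambda = \frac12\boldsymbol\mu'(Q^{-1})'BQ^{-1}\boldsymbol\mu = \frac12\boldsymbol\mu'A\boldsymbol\mu$ if and only if $B = Q'AQ$ is idempotent of rank r.

$B = Q'AQ$ is of rank r if and only if A is of rank r, since B and A are congruent.

Also $B = Q'AQ$ is idempotent $\iff BB = B$, i.e.

$$Q'AQQ'AQ = Q'AQ$$

or

$$(Q')^{-1}\left(Q'AQQ'AQ\right)Q' = (Q')^{-1}\left(Q'AQ\right)Q'$$

i.e.

$$AQQ'AQQ' = AQQ'$$

i.e. $A\Sigma A\Sigma = A\Sigma$.

Similarly it can be shown that $\Sigma A\Sigma A = \Sigma A$.

Thus B is idempotent $\iff$ $A\Sigma$ and $\Sigma A$ are idempotent.

Summarizing:

$U = \mathbf{y}'A\mathbf{y}$ has a $\chi^2(r, \lambda)$ distribution with $\lambda = \frac12\boldsymbol\mu'A\boldsymbol\mu$ if and only if the following two conditions are satisfied:

1. $A\Sigma$ is idempotent of rank r.

2. $\Sigma A$ is idempotent of rank r.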

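Application:

Let $y_1, y_2, \dots, y_n$ be a sample from the Normal distribution with mean μ and variance σ². Then

$$U = \frac{(n-1)s^2}{\sigma^2}$$

has a χ² distribution with ν = n − 1 d.f. and λ = 0 (central).

Proof

$$\mathbf{y} = \begin{pmatrix}y_1\\ y_2\\ \vdots\\ y_n\end{pmatrix} \sim N\left(\mu\mathbf{1}, \sigma^2 I\right) \quad\text{where}\quad \mathbf{1} = \begin{pmatrix}1\\ 1\\ \vdots\\ 1\end{pmatrix}$$

$$U = \frac{(n-1)s^2}{\sigma^2} = \frac{1}{\sigma^2}\sum_{i=1}^n\left(y_i - \bar y\right)^2 = \frac{1}{\sigma^2}\left[\sum_{i=1}^n y_i^2 - \frac1n\left(\sum_{i=1}^n y_i\right)^2\right]$$

$$= \frac{1}{\sigma^2}\left[\mathbf{y}'\mathbf{y} - \frac1n\mathbf{y}'\mathbf{1}\mathbf{1}'\mathbf{y}\right] = \mathbf{y}'\left[\frac{1}{\sigma^2}\left(I - \frac1n\mathbf{1}\mathbf{1}'\right)\right]\mathbf{y} = \mathbf{y}'A\mathbf{y}$$

where

$$A = \frac{1}{\sigma^2}\left(I - \frac1n\mathbf{1}\mathbf{1}'\right) = \frac{1}{\sigma^2}\begin{pmatrix}1 - \frac1n & -\frac1n & \cdots & -\frac1n\\ -\frac1n & 1 - \frac1n & \cdots & -\frac1n\\ \vdots & \vdots & \ddots & \vdots\\ -\frac1n & -\frac1n & \cdots & 1 - \frac1n\end{pmatrix}$$

Now

$$A\Sigma = \frac{1}{\sigma^2}\left(I - \frac1n\mathbf{1}\mathbf{1}'\right)\sigma^2 I = I - \frac1n\mathbf{1}\mathbf{1}'$$

Also

$$\Sigma A = I - \frac1n\mathbf{1}\mathbf{1}'$$

and

$$(A\Sigma)(A\Sigma) = \left(I - \frac1n\mathbf{1}\mathbf{1}'\right)\left(I - \frac1n\mathbf{1}\mathbf{1}'\right) = I - \frac2n\mathbf{1}\mathbf{1}' + \frac{1}{n^2}\mathbf{1}\mathbf{1}'\mathbf{1}\mathbf{1}'$$

$$= I - \frac2n\mathbf{1}\mathbf{1}' + \frac1n\mathbf{1}\mathbf{1}' \quad\text{since}\quad \mathbf{1}'\mathbf{1} = n$$

$$= I - \frac1n\mathbf{1}\mathbf{1}' = A\Sigma$$

Thus $A\Sigma = I - \frac1n\mathbf{1}\mathbf{1}'$ is idempotent of rank $r = n - 1$ (the rank of an idempotent matrix equals its trace, here $n - 1$).

Hence

$$U = \frac{(n-1)s^2}{\sigma^2} = \mathbf{y}'A\mathbf{y}$$

has a χ² distribution with ν = n − 1 d.f. and non-centrality parameter

$$\lambda = \frac12(\mu\mathbf{1})'A(\mu\mathbf{1}) = \frac{\mu^2}{2\sigma^2}\mathbf{1}'\left(I - \frac1n\mathbf{1}\mathbf{1}'\right)\mathbf{1} = \frac{\mu^2}{2\sigma^2}\left(\mathbf{1}'\mathbf{1} - \frac1n\mathbf{1}'\mathbf{1}\mathbf{1}'\mathbf{1}\right) = \frac{\mu^2}{2\sigma^2}(n - n) = 0$$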
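The key facts of this application (that $I - \frac1n\mathbf{1}\mathbf{1}'$ is idempotent with trace $n - 1$, that $\mathbf{1}'(I - \frac1n\mathbf{1}\mathbf{1}')\mathbf{1} = 0$, and that $(n-1)s^2 = \mathbf{y}'(I - \frac1n\mathbf{1}\mathbf{1}')\mathbf{y}$) can be checked exactly for a small case. The choice $n = 5$ and the sample values are hypothetical.

```python
from fractions import Fraction

def centering_matrix(n):
    """M = I - (1/n) 1 1', which equals A Sigma for the sample-variance quadratic form."""
    return [[Fraction(int(i == j)) - Fraction(1, n) for j in range(n)] for i in range(n)]

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

n = 5
M = centering_matrix(n)
idempotent = matmul(M, M) == M
rank = sum(M[i][i] for i in range(n))                          # rank of an idempotent matrix = trace
one_M_one = sum(M[i][j] for i in range(n) for j in range(n))   # 1' M 1, so lambda = mu^2/(2 sigma^2) * 1'M1

# (n - 1) s^2 computed directly and as the quadratic form y' M y, for a hypothetical sample
y = [Fraction(v) for v in (3, 1, 4, 1, 5)]
ybar = sum(y) / n
ss_direct = sum((v - ybar) ** 2 for v in y)
ss_quadratic = sum(y[i] * M[i][j] * y[j] for i in range(n) for j in range(n))
```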

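Independence of Linear and Quadratic Forms

Again let $\mathbf{y}$ have a $N(\boldsymbol\mu, \Sigma)$ distribution.

Consider the following random variables:

$$U_1 = \mathbf{y}'A\mathbf{y}, \qquad U_2 = \mathbf{y}'B\mathbf{y} \qquad\text{and}\qquad \mathbf{v} = C\mathbf{y}$$

When is $U_1 = \mathbf{y}'A\mathbf{y}$ independent of $U_2 = \mathbf{y}'B\mathbf{y}$?

When is $U_1 = \mathbf{y}'A\mathbf{y}$ independent of $\mathbf{v} = C\mathbf{y}$?

Theorem

Let $\mathbf{y}$ have a $N(\boldsymbol\mu, I)$ distribution. Then $U = \mathbf{y}'A\mathbf{y}$ is independent of $\mathbf{v} = C\mathbf{y}$ if $CA = 0$.

Proof: Since A is symmetric, there exists an orthogonal matrix P such that $P'AP = D$, where D is diagonal.

Note: since $CA = 0$ (with $C \ne 0$), rank(A) = r < n and some of the eigenvalues (diagonal elements of D) are zero. Ordering the eigenvalues so that the non-zero ones come first,

$$P'AP = \begin{pmatrix}D_{11} & 0\\ 0 & 0\end{pmatrix}$$

with $D_{11}$ non-singular. Then

$$CA = 0 \implies CPP'AP = 0$$

i.e.

$$\begin{pmatrix}B_{11} & B_{12}\\ B_{21} & B_{22}\end{pmatrix}\begin{pmatrix}D_{11} & 0\\ 0 & 0\end{pmatrix} = \begin{pmatrix}0 & 0\\ 0 & 0\end{pmatrix} \quad\text{where}\quad CP = \begin{pmatrix}B_{11} & B_{12}\\ B_{21} & B_{22}\end{pmatrix}$$

i.e. $B_{11}D_{11} = 0$, $B_{21}D_{11} = 0$, and since $D_{11}^{-1}$ exists, $B_{11} = 0$, $B_{21} = 0$. Thus

$$CP = \begin{pmatrix}0 & B_{12}\\ 0 & B_{22}\end{pmatrix} = \left(0,\ B_2\right)$$

Let $\mathbf{z} = P'\mathbf{y}$; then $\mathbf{z}$ has a $N(P'\boldsymbol\mu, P'P) = N(P'\boldsymbol\mu, I)$ distribution.

Now

$$\mathbf{y}'A\mathbf{y} = \mathbf{z}'P'AP\mathbf{z} = \left(\mathbf{z}_1', \mathbf{z}_2'\right)\begin{pmatrix}D_{11} & 0\\ 0 & 0\end{pmatrix}\begin{pmatrix}\mathbf{z}_1\\ \mathbf{z}_2\end{pmatrix} = \mathbf{z}_1'D_{11}\mathbf{z}_1$$

Also

$$C\mathbf{y} = CP\mathbf{z} = \left(0,\ B_2\right)\begin{pmatrix}\mathbf{z}_1\\ \mathbf{z}_2\end{pmatrix} = B_2\mathbf{z}_2$$

Since $\mathbf{z}_1$ is independent of $\mathbf{z}_2$,

$$\mathbf{y}'A\mathbf{y} = \mathbf{z}_1'D_{11}\mathbf{z}_1 \ \text{ is independent of }\ C\mathbf{y} = B_2\mathbf{z}_2$$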

Theorem

Let $\mathbf{y}$ have a $N(\boldsymbol\mu, \Sigma)$ distribution where Σ is of rank n. Then $C\Sigma A = 0$ implies that the quadratic form $U = \mathbf{y}'A\mathbf{y}$ is independent of the linear form $\mathbf{v} = C\mathbf{y}$.

Proof: Exercise. Similar to the previous theorem.

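Application:

Let $y_1, y_2, \dots, y_n$ be a sample from the Normal distribution with mean μ and variance σ². Then

$$\bar y \quad\text{and}\quad s^2 = \frac{1}{n-1}\sum_{i=1}^n\left(y_i - \bar y\right)^2 \quad\text{are independent.}$$

Proof

$$\bar y = \left(\frac1n, \frac1n, \dots, \frac1n\right)\begin{pmatrix}y_1\\ y_2\\ \vdots\\ y_n\end{pmatrix} = \frac1n\mathbf{1}'\mathbf{y} = C\mathbf{y}$$

$$s^2 = \frac{1}{n-1}\mathbf{y}'\left(I - \frac1n\mathbf{1}\mathbf{1}'\right)\mathbf{y} = \mathbf{y}'A\mathbf{y}$$

Now $\mathbf{y}$ has a $N(\boldsymbol\mu, \Sigma)$ distribution with

$$\boldsymbol\mu = \mu\mathbf{1} \quad\text{and}\quad \Sigma = \sigma^2 I$$

and

$$C\Sigma A = \frac1n\mathbf{1}'\,\sigma^2 I\,\frac{1}{n-1}\left(I - \frac1n\mathbf{1}\mathbf{1}'\right) = \frac{\sigma^2}{n(n-1)}\left(\mathbf{1}' - \frac1n\mathbf{1}'\mathbf{1}\mathbf{1}'\right) = \frac{\sigma^2}{n(n-1)}\left(\mathbf{1}' - \mathbf{1}'\right) = \mathbf{0}'$$

Hence, by the previous theorem, $\bar y$ and $s^2$ are independent.

Q.E.D.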
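The condition $C\Sigma A = 0$ used in this proof can be checked exactly for a small hypothetical case ($n = 4$, $\sigma^2 = 3$); the sketch also confirms that $\mathbf{y}'A\mathbf{y}$ reproduces the usual $s^2$ for a made-up sample.

```python
from fractions import Fraction as F

def matmul(X, Y):
    return [[sum(X[i][k] * Y[k][j] for k in range(len(Y))) for j in range(len(Y[0]))]
            for i in range(len(X))]

n, sigma2 = 4, F(3)                  # hypothetical sample size and variance
C = [[F(1, n)] * n]                  # ybar = C y
A = [[(F(int(i == j)) - F(1, n)) / (n - 1) for j in range(n)] for i in range(n)]   # s^2 = y'A y
Sigma = [[sigma2 * int(i == j) for j in range(n)] for i in range(n)]

CSA = matmul(matmul(C, Sigma), A)    # should be the 1 x n zero matrix

y = [F(2), F(7), F(1), F(8)]         # a hypothetical sample
ybar = matmul(C, [[v] for v in y])[0][0]
s2 = sum(y[i] * A[i][j] * y[j] for i in range(n) for j in range(n))
```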

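Theorem (Independence of quadratic forms)

Let $\mathbf{y}$ have a $N(\boldsymbol\mu, \Sigma)$ distribution where Σ is of rank n. Then $A\Sigma B = 0$ implies that the quadratic forms $U_1 = \mathbf{y}'A\mathbf{y}$ and $U_2 = \mathbf{y}'B\mathbf{y}$ are independent.

Proof: Let $\Sigma = \Gamma\Gamma'$ (Γ is non-singular). Then

$$A\Sigma B = A\Gamma\Gamma'B = 0 \iff \Gamma'A\Gamma\Gamma'B\Gamma = 0$$

or $WV = 0$ where $W = \Gamma'A\Gamma$, $V = \Gamma'B\Gamma$.

Note: both W and V are symmetric: $W' = W$ and $V' = V$.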

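Expected Value and Variance of quadratic forms

Theorem

Suppose $E[\mathbf{y}] = \boldsymbol\mu$, $\operatorname{Var}[\mathbf{y}] = \Sigma$ and $U = \mathbf{y}'A\mathbf{y}$; then

$$E[U] = \boldsymbol\mu'A\boldsymbol\mu + \operatorname{tr}(A\Sigma)$$

Proof: Let $\mathbf{e} = \mathbf{y} - \boldsymbol\mu$; then $\Sigma = E[\mathbf{e}\mathbf{e}']$ and $E[\mathbf{e}] = \mathbf{0}$.

$$E[U] = E\left[(\boldsymbol\mu + \mathbf{e})'A(\boldsymbol\mu + \mathbf{e})\right]$$

$$= \boldsymbol\mu'A\boldsymbol\mu + E[\mathbf{e}']A\boldsymbol\mu + \boldsymbol\mu'AE[\mathbf{e}] + E[\mathbf{e}'A\mathbf{e}]$$

$$= \boldsymbol\mu'A\boldsymbol\mu + E\left[\operatorname{tr}(\mathbf{e}'A\mathbf{e})\right] = \boldsymbol\mu'A\boldsymbol\mu + E\left[\operatorname{tr}(A\mathbf{e}\mathbf{e}')\right]$$

$$= \boldsymbol\mu'A\boldsymbol\mu + \operatorname{tr}\left(AE[\mathbf{e}\mathbf{e}']\right) = \boldsymbol\mu'A\boldsymbol\mu + \operatorname{tr}(A\Sigma)$$

(the two middle terms vanish because $E[\mathbf{e}] = \mathbf{0}$; the scalar $\mathbf{e}'A\mathbf{e}$ equals its own trace, and $\operatorname{tr}(\mathbf{e}'A\mathbf{e}) = \operatorname{tr}(A\mathbf{e}\mathbf{e}')$).

Summary

$$E[U] = E[\mathbf{y}'A\mathbf{y}] = \boldsymbol\mu'A\boldsymbol\mu + \operatorname{tr}(A\Sigma) = \boldsymbol\mu'A\boldsymbol\mu + E[\mathbf{e}'A\mathbf{e}] \quad\text{where}\quad \mathbf{e} = \mathbf{y} - \boldsymbol\mu$$

$$= \sum_{i=1}^n\sum_{j=1}^n a_{ij}\mu_i\mu_j + E\left[\sum_{i=1}^n\sum_{j=1}^n a_{ij}e_ie_j\right]$$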
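Since the theorem only uses the first two moments, it holds for any distribution of $\mathbf{y}$, which makes an exact check easy: take a hypothetical discrete distribution with equally likely support points, compute $E[\mathbf{y}'A\mathbf{y}]$ by enumeration, and compare with $\boldsymbol\mu'A\boldsymbol\mu + \operatorname{tr}(A\Sigma)$. The support points and the matrix A below are made up for illustration.

```python
from fractions import Fraction as F

def quad(x, A, y):
    """x' A y for vectors x, y and a square matrix A."""
    return sum(x[i] * A[i][j] * y[j] for i in range(len(x)) for j in range(len(y)))

# A hypothetical discrete distribution for y: equally likely support points
points = [[F(1), F(2)], [F(3), F(0)], [F(-1), F(1)], [F(2), F(5)]]
p = F(1, len(points))
A = [[F(2), F(1)], [F(1), F(3)]]

n = 2
mu = [sum(p * y[i] for y in points) for i in range(n)]
Sigma = [[sum(p * (y[i] - mu[i]) * (y[j] - mu[j]) for y in points) for j in range(n)]
         for i in range(n)]

direct = sum(p * quad(y, A, y) for y in points)                 # E[y'Ay] by enumeration
trace_AS = sum(A[i][k] * Sigma[k][i] for i in range(n) for k in range(n))
formula = quad(mu, A, mu) + trace_AS                            # mu'A mu + tr(A Sigma)
```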

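Example – One-way ANOVA

$y_{11}, y_{12}, y_{13}, \dots, y_{1n}$ a sample from $N(\mu_1, \sigma^2)$

$y_{21}, y_{22}, y_{23}, \dots, y_{2n}$ a sample from $N(\mu_2, \sigma^2)$

⋮

$y_{k1}, y_{k2}, y_{k3}, \dots, y_{kn}$ a sample from $N(\mu_k, \sigma^2)$

$$U_1 = \sum_{i=1}^k\sum_{j=1}^n\left(y_{ij} - \bar y_i\right)^2 = SS_{Error} \quad\text{where}\quad \bar y_i = \frac1n\sum_{j=1}^n y_{ij}$$

Now $\mu_{ij} = E[y_{ij}] = \mu_i$ and $E[\bar y_i] = \mu_i$.

Thus

$$E[U_1] = E\left[\sum_{i=1}^k\sum_{j=1}^n\left(y_{ij} - \bar y_i\right)^2\right] = \sum_{i=1}^k\sum_{j=1}^n\left(\mu_{ij} - \mu_i\right)^2 + E\left[\sum_{i=1}^k\sum_{j=1}^n\left(e_{ij} - \bar e_i\right)^2\right]$$

$$= 0 + E\left[\sum_{i=1}^k(n-1)s_{e_i}^2\right] = k(n-1)\sigma^2$$

where $e_{ij} = y_{ij} - \mu_i$, $\bar e_i = \frac1n\sum_{j=1}^n e_{ij}$,

$$s_{e_i}^2 = \frac{1}{n-1}\sum_{j=1}^n\left(e_{ij} - \bar e_i\right)^2 \quad\text{and}\quad E\left[s_{e_i}^2\right] = \sigma^2$$

Now let

$$U_2 = n\sum_{i=1}^k\left(\bar y_i - \bar y\right)^2 = SS_{Treatments} \quad\text{where}\quad \bar y = \frac1k\sum_{i=1}^k\bar y_i$$

Now $E[\bar y_i] = \mu_i$ and $E[\bar y] = \bar\mu = \frac1k\sum_{i=1}^k\mu_i$.

Thus

$$E[U_2] = E\left[n\sum_{i=1}^k\left(\bar y_i - \bar y\right)^2\right] = n\sum_{i=1}^k\left(\mu_i - \bar\mu\right)^2 + nE\left[\sum_{i=1}^k\left(\bar e_i - \bar e\right)^2\right]$$

$$= n\sum_{i=1}^k\left(\mu_i - \bar\mu\right)^2 + n(k-1)E\left[s_{\bar e}^2\right] = n\sum_{i=1}^k\left(\mu_i - \bar\mu\right)^2 + (k-1)\sigma^2$$

where $\bar e = \frac1k\sum_{i=1}^k\bar e_i$,

$$s_{\bar e}^2 = \frac{1}{k-1}\sum_{i=1}^k\left(\bar e_i - \bar e\right)^2$$

is the sample variance calculated from $\bar e_1, \bar e_2, \dots, \bar e_k$, and $E\left[s_{\bar e}^2\right] = \operatorname{Var}[\bar e_i] = \sigma^2/n$.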
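Both expectations can be cross-checked against the general result $E[\mathbf{y}'A\mathbf{y}] = \boldsymbol\mu'A\boldsymbol\mu + \operatorname{tr}(A\Sigma)$ with $\Sigma = \sigma^2 I$, by writing $SS_{Error}$ and $SS_{Treatments}$ as quadratic forms in the stacked vector of all $y_{ij}$. The sketch below does this with exact fractions; the group means, $n$ and $\sigma^2$ are hypothetical inputs.

```python
from fractions import Fraction as F

def anova_expectations(mus, n, sigma2):
    """E[SS_Error] and E[SS_Treatments] via E[y'Ay] = mu'A mu + tr(A Sigma), Sigma = sigma2 I."""
    k = len(mus)
    N = k * n
    group = [idx // n for idx in range(N)]           # group label of each y_ij (stacked by group)
    mean_vec = [F(mus[g]) for g in group]
    # SS_Error = y'A1 y with A1 = block-diagonal (I_n - J_n / n)
    A1 = [[(F(int(a == b)) - F(1, n)) if group[a] == group[b] else F(0) for b in range(N)]
          for a in range(N)]
    # SS_Treatments = y'A2 y with A2 = (block-diagonal J_n / n) - J_N / N
    A2 = [[(F(1, n) if group[a] == group[b] else F(0)) - F(1, N) for b in range(N)]
          for a in range(N)]

    def expect(A):
        quad = sum(mean_vec[a] * A[a][b] * mean_vec[b] for a in range(N) for b in range(N))
        return quad + sigma2 * sum(A[a][a] for a in range(N))   # tr(A sigma2 I)

    return expect(A1), expect(A2)

# k = 3 groups with means 1, 4, 7, n = 3 observations each, sigma^2 = 2
e1, e2 = anova_expectations([1, 4, 7], 3, F(2))
```

With these inputs $k(n-1)\sigma^2 = 12$ and $n\sum(\mu_i - \bar\mu)^2 + (k-1)\sigma^2 = 54 + 4 = 58$, matching the two formulas above.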

Statistical Inference

Making decisions from data

There are two main areas of Statistical Inference

• Estimation – deciding on the value of a parameter
  – Point estimation
  – Confidence Interval, Confidence region Estimation

• Hypothesis testing
  – Deciding if a statement (hypothesis) about a parameter is True or False

The general statistical model

Most data fit this situation.

Defn (The Classical Statistical Model)

The data vector

$$\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$$

The model

Let $f(\mathbf{x} \mid \boldsymbol\theta) = f(x_1, x_2, \dots, x_n \mid \theta_1, \theta_2, \dots, \theta_p)$ denote the joint density of the data vector $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ of observations, where the unknown parameter vector $\boldsymbol\theta \in \Omega$ (a subset of p-dimensional space).

An Example

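The data vector

$\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$ is a sample from the normal distribution with mean μ and variance σ².

The model

Then $f(\mathbf{x} \mid \mu, \sigma^2) = f(x_1, x_2, \dots, x_n \mid \mu, \sigma^2)$, the joint density of $\mathbf{x} = (x_1, x_2, x_3, \dots, x_n)$, takes the form:

$$f(\mathbf{x} \mid \mu, \sigma^2) = \prod_{i=1}^n\frac{1}{\sqrt{2\pi}\,\sigma}e^{-\frac{(x_i-\mu)^2}{2\sigma^2}} = \frac{1}{(2\pi)^{n/2}\sigma^n}e^{-\frac{\sum_{i=1}^n(x_i-\mu)^2}{2\sigma^2}}$$

where the unknown parameter vector $\boldsymbol\theta = (\mu, \sigma^2) \in \Omega = \{(x, y) \mid -\infty < x < \infty,\ 0 < y < \infty\}$.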

Defn (Sufficient Statistics)

Let $\mathbf{x}$ have joint density $f(\mathbf{x} \mid \boldsymbol\theta)$ where the unknown parameter vector $\boldsymbol\theta \in \Omega$.

Then $S = (S_1(\mathbf{x}), S_2(\mathbf{x}), S_3(\mathbf{x}), \dots, S_k(\mathbf{x}))$ is called a set of sufficient statistics for the parameter vector θ if the conditional distribution of $\mathbf{x}$ given $S = (S_1(\mathbf{x}), S_2(\mathbf{x}), S_3(\mathbf{x}), \dots, S_k(\mathbf{x}))$ is not functionally dependent on the parameter vector θ.

A set of sufficient statistics contains all of the information concerning the unknown parameter vector θ.

A Simple Example illustrating Sufficiency

Suppose that we observe a Success-Failure experiment n = 3 times. Let θ denote the probability of Success. Suppose that the data collected are x1, x2, x3, where xi takes on the value 1 if the ith trial is a Success and 0 if the ith trial is a Failure.

The following table gives the possible values of (x1, x2, x3).

(x1, x2, x3)   f(x1, x2, x3|θ)   S = Σxi   g(S|θ)        f(x1, x2, x3|S)
(0, 0, 0)      (1 − θ)³          0         (1 − θ)³      1
(1, 0, 0)      (1 − θ)²θ         1         3(1 − θ)²θ    1/3
(0, 1, 0)      (1 − θ)²θ         1         3(1 − θ)²θ    1/3
(0, 0, 1)      (1 − θ)²θ         1         3(1 − θ)²θ    1/3
(1, 1, 0)      (1 − θ)θ²         2         3(1 − θ)θ²    1/3
(1, 0, 1)      (1 − θ)θ²         2         3(1 − θ)θ²    1/3
(0, 1, 1)      (1 − θ)θ²         2         3(1 − θ)θ²    1/3
(1, 1, 1)      θ³                3         θ³            1

The data can be generated in two equivalent ways:

1. Generating (x1, x2, x3) directly from f(x1, x2, x3|θ), or

2. Generating S from g(S|θ), then generating (x1, x2, x3) from f(x1, x2, x3|S). Since the second step does not involve θ, no additional information about θ is obtained by knowing (x1, x2, x3) once S is determined.
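The two-step description can be checked by brute force. The sketch below (the function names are ours) enumerates all 2^n outcomes, builds g(S|θ) by summing f over outcomes sharing the same S, and divides; exact `Fraction` arithmetic shows that f(x1, x2, x3|S) is the same whichever θ is used.

```python
from fractions import Fraction
from itertools import product

def joint(x, theta):
    """f(x1, ..., xn | theta) for independent Success-Failure trials."""
    s = sum(x)
    return theta ** s * (1 - theta) ** (len(x) - s)

def conditional_given_S(n, theta):
    """Return {x: f(x | S = sum(x))}, computed as f(x | theta) / g(S | theta)."""
    outcomes = list(product((0, 1), repeat=n))
    g = {}  # g(S | theta): total probability of each value of S
    for x in outcomes:
        g[sum(x)] = g.get(sum(x), 0) + joint(x, theta)
    return {x: joint(x, theta) / g[sum(x)] for x in outcomes}

for theta in (Fraction(1, 5), Fraction(1, 2), Fraction(9, 10)):
    print(theta, conditional_given_S(3, theta)[(1, 0, 0)])   # 1/3 for every theta
```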

The Sufficiency Principle

Any decision regarding the parameter θ should be based on a set of Sufficient statistics S1(x), S2(x), ..., Sk(x) and not otherwise on the value of x.

A useful approach in developing a statistical procedure:

1. Find sufficient statistics.

2. Develop estimators, tests of hypotheses, etc. using only these statistics.

Defn (Minimal Sufficient Statistics)

Let x have joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Then S = (S1(x), S2(x), S3(x), ..., Sk(x)) is a set of Minimal Sufficient statistics for the parameter vector θ if S is a set of Sufficient statistics and can be calculated from any other set of Sufficient statistics.

Theorem (The Factorization Criterion)

Let x have joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Then S = (S1(x), S2(x), S3(x), ..., Sk(x)) is a set of Sufficient statistics for the parameter vector θ if

f(x| θ) = h(x)g(S, θ) = h(x)g(S1(x), S2(x), S3(x), ..., Sk(x), θ).

This is useful for finding Sufficient statistics, i.e. if you can factor out the θ-dependence with a set of statistics, then these statistics are a set of Sufficient statistics.
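As a worked instance of the criterion (our illustration), the Success-Failure model with n trials factors with h(x) = 1:

$$f(\mathbf{x}\mid\theta) = \prod_{i=1}^{n}\theta^{x_i}(1-\theta)^{1-x_i} = \underbrace{1}_{h(\mathbf{x})}\cdot\underbrace{\theta^{S}(1-\theta)^{n-S}}_{g(S,\,\theta)}, \qquad S = \sum_{i=1}^{n}x_i,$$

so S = Σxi is a sufficient statistic, in agreement with the table for n = 3.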

Defn (Completeness)

Let x have joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Then S = (S1(x), S2(x), S3(x), ..., Sk(x)) is a set of Complete Sufficient statistics for the parameter vector θ if S is a set of Sufficient statistics and, for any function φ, whenever

E[φ(S1(x), S2(x), S3(x), ..., Sk(x))] = 0 for all θ ∈ Ω

then

P[φ(S1(x), S2(x), S3(x), ..., Sk(x)) = 0] = 1 for all θ ∈ Ω.

Defn (The Exponential Family)

Let x have joint density f(x| θ) where the unknown parameter vector θ ∈ Ω. Then f(x| θ) is said to be a member of the exponential family of distributions if:

$$f(\mathbf{x}\mid\boldsymbol{\theta}) = \begin{cases} h(\mathbf{x})\,g(\boldsymbol{\theta})\exp\left[\sum_{i=1}^{k}S_i(\mathbf{x})\,p_i(\boldsymbol{\theta})\right] & a_i \le x_i \le b_i \\ 0 & \text{otherwise} \end{cases} \qquad \boldsymbol{\theta}\in\Omega,$$

where

1) −∞ ≤ ai < bi ≤ ∞ are not dependent on θ.

2) Ω contains a nondegenerate k-dimensional rectangle.

3) g(θ), ai, bi and pi(θ) are not dependent on x.

4) h(x), ai, bi and Si(x) are not dependent on θ.

If in addition:

5) The Si(x) are functionally independent for i = 1, 2, ..., k.

6) ∂[Si(x)]/∂xj exists and is continuous for all i = 1, 2, ..., k and j = 1, 2, ..., n.

7) pi(θ) is a continuous function of θ for all i = 1, 2, ..., k.

8) R = {[p1(θ), p2(θ), ..., pk(θ)] | θ ∈ Ω} contains a nondegenerate k-dimensional rectangle.

Then the set of statistics S1(x), S2(x), ..., Sk(x) forms a Minimal Complete set of Sufficient statistics.

Defn (The Likelihood function)

Let x have joint density f(x|θ) where the unknown parameter vector θ ∈ Ω. Then for a given value of the observation vector x, the Likelihood function, Lx(θ), is defined by:

Lx(θ) = f(x|θ) with θ ∈ Ω

The log Likelihood function lx(θ) is defined by:

lx(θ) = ln Lx(θ) = ln f(x|θ) with θ ∈ Ω

The Likelihood Principle

Any decision regarding the parameter θ should be based on the likelihood function Lx(θ) and not otherwise on the value of x.

If two data sets result in the same likelihood function, the decision regarding θ should be the same.

Some statisticians find it useful to plot the likelihood function Lx(θ) given the value of x. It summarizes the information contained in x regarding the parameter vector θ.

An Example

The data vector x = (x1, x2, x3, ..., xn) is a sample from the normal distribution with mean μ and variance σ².

The joint distribution of x

Then f(x| μ, σ²) = f(x1, x2, ..., xn | μ, σ²), the joint density of x = (x1, x2, x3, ..., xn), takes on the form:

$$f(\mathbf{x}\mid\mu,\sigma^2) = \prod_{i=1}^{n}\frac{1}{\sqrt{2\pi}\,\sigma}e^{-\frac{(x_i-\mu)^2}{2\sigma^2}} = \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(x_i-\mu)^2}$$

where the unknown parameter vector θ = (μ, σ²) ∈ Ω = {(x, y) | −∞ < x < ∞, 0 < y < ∞}.

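The Likelihood function

Assume the data vector x = (x1, x2, x3, ..., xn) is known. Then

$$\begin{aligned} L(\mu,\sigma) = f(\mathbf{x}\mid\mu,\sigma^2) &= \prod_{i=1}^{n}\frac{1}{\sqrt{2\pi}\,\sigma}e^{-\frac{(x_i-\mu)^2}{2\sigma^2}} = \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\sum_{i=1}^{n}(x_i-\mu)^2} \\ &= \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\sum_{i=1}^{n}\left(x_i^2-2\mu x_i+\mu^2\right)} \end{aligned}$$

or

$$L(\mu,\sigma) = \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\left(\sum_{i=1}^{n}x_i^2-2\mu\sum_{i=1}^{n}x_i+n\mu^2\right)} = \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\left[(n-1)s^2+n\bar{x}^2-2\mu n\bar{x}+n\mu^2\right]}$$

since

$$s^2 = \frac{\sum_{i=1}^{n}x_i^2-n\bar{x}^2}{n-1} \quad\text{or}\quad \sum_{i=1}^{n}x_i^2 = (n-1)s^2+n\bar{x}^2,$$

and since $\bar{x} = \frac{1}{n}\sum_{i=1}^{n}x_i$, then $\sum_{i=1}^{n}x_i = n\bar{x}$. Hence

$$L(\mu,\sigma) = \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\left[(n-1)s^2+n\bar{x}^2-2\mu n\bar{x}+n\mu^2\right]} = \frac{1}{(2\pi)^{n/2}\sigma^{n}}e^{-\frac{1}{2\sigma^2}\left[(n-1)s^2+n(\bar{x}-\mu)^2\right]}$$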

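Now consider the following data (n = 10):

57.1  72.3  75.0  57.8  50.3  48.0  53.1  58.5  53.7  49.6

mean = 57.54, s = 9.2185

$$L(\mu,\sigma) = \frac{1}{(6.2832)^{5}\sigma^{10}}e^{-\frac{1}{2\sigma^2}\left[9(9.2185)^2+10(57.54-\mu)^2\right]} \qquad (6.2832 = 2\pi)$$

[Figure: Likelihood n = 10, surface plot of L(μ, σ) against μ (about 50 to 70) and σ (0 to 20); vertical axis up to 3E-16]

[Figure: Contour Map of Likelihood for these data, over μ (about 50 to 70) and σ (0 to 20)]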
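To see that this likelihood depends on the data only through (n, x̄, s), the sketch below evaluates L(μ, σ) twice: once as the product of the ten normal densities and once from the final formula in terms of (n − 1)s² + n(x̄ − μ)². The evaluation points (μ, σ) and function names are arbitrary choices of ours; `statistics.stdev` uses the n − 1 divisor, matching s.

```python
import math
import statistics

data = [57.1, 72.3, 75.0, 57.8, 50.3, 48.0, 53.1, 58.5, 53.7, 49.6]   # the n = 10 sample

def likelihood_direct(x, mu, sigma):
    """L(mu, sigma) as the product of the n normal densities."""
    return math.prod(
        math.exp(-(xi - mu) ** 2 / (2 * sigma ** 2)) / (math.sqrt(2 * math.pi) * sigma) for xi in x
    )

def likelihood_sufficient(n, xbar, s, mu, sigma):
    """L(mu, sigma) from (n, xbar, s) only: exp{-[(n-1)s^2 + n(xbar-mu)^2]/(2 sigma^2)} / ((2 pi)^(n/2) sigma^n)."""
    q = (n - 1) * s ** 2 + n * (xbar - mu) ** 2
    return math.exp(-q / (2 * sigma ** 2)) / ((2 * math.pi) ** (n / 2) * sigma ** n)

n, xbar, s = len(data), statistics.mean(data), statistics.stdev(data)
print(round(xbar, 2), round(s, 4))   # 57.54 9.2185
for mu, sigma in [(57.54, 8.7), (55.0, 10.0), (65.0, 15.0)]:
    print(likelihood_direct(data, mu, sigma), likelihood_sufficient(n, xbar, s, mu, sigma))
```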

Now consider the following data (n = 100):

57.1  72.3  75.0  57.8  50.3  48.0  49.6  53.1  58.5  53.7
77.8  43.0  69.8  65.1  71.1  44.4  64.4  52.9  56.4  43.9
49.0  37.6  65.5  50.4  40.7  66.9  51.5  55.8  49.1  59.5
64.5  67.6  79.9  48.0  68.1  68.0  65.8  61.3  75.0  78.0
61.8  69.0  56.2  77.2  57.5  84.0  45.5  64.4  58.7  77.5
81.9  77.1  58.7  71.2  58.1  50.3  53.2  47.6  53.3  76.4
69.8  57.8  65.9  63.0  43.5  70.7  85.2  57.2  78.9  72.9
78.6  53.9  61.9  75.2  62.2  53.2  73.0  38.9  75.4  69.7
68.8  77.0  51.2  65.6  44.7  40.4  72.1  68.1  82.2  64.7
83.1  71.9  65.4  45.0  51.6  48.3  58.5  65.3  65.9  59.6

mean = 62.02, s = 11.8571

$$L(\mu,\sigma) = \frac{1}{(6.2832)^{50}\sigma^{100}}e^{-\frac{1}{2\sigma^2}\left[99(11.8571)^2+100(62.02-\mu)^2\right]}$$

[Figure: Likelihood n = 100, surface plot of L(μ, σ) against μ (about 50 to 70) and σ (0 to 20); vertical axis up to 1.6E-169]

[Figure: Contour Map of Likelihood n = 100, over μ (about 50 to 70) and σ (0 to 20)]

The Sufficiency Principle

Any decision regarding the parameter θ should be based on a set of Sufficient statistics S1(x), S2(x), ..., Sk(x) and not otherwise on the value of x.

If two data sets result in the same values for the set of Sufficient statistics, the decision regarding θ should be the same.

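Theorem (Birnbaum - Equivalency of the Likelihood Principle and Sufficiency Principle)

$$L_{\mathbf{x}_1}(\boldsymbol{\theta}) \propto L_{\mathbf{x}_2}(\boldsymbol{\theta}) \quad\text{if and only if}\quad S_1(\mathbf{x}_1) = S_1(\mathbf{x}_2),\ \ldots,\ \text{and } S_k(\mathbf{x}_1) = S_k(\mathbf{x}_2)$$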


The Likelihood function

[Figure: the likelihood function L(θ) = θ^S(1 − θ)^(3 − S) of an outcome (x1, x2, x3) in the Success-Failure example, plotted for θ from 0 to 1 in four panels: S = 0, S = 1, S = 2, S = 3]
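A brute-force check, in the spirit of the theorem above, that outcomes with the same S give identical likelihood curves in the Success-Failure example. The sketch (names ours) computes each likelihood as a product over the individual trials, so the grouping by S is a result rather than an input; exact `Fraction` arithmetic avoids floating-point ties. For a given S the curve peaks at θ = S/3; for S = 1 the peak height is 4/27 ≈ 0.148.

```python
import math
from fractions import Fraction
from itertools import product

def likelihood(x, theta):
    """L_x(theta): product over trials of theta (Success) or 1 - theta (Failure)."""
    return math.prod(theta if xi == 1 else 1 - theta for xi in x)

grid = [Fraction(i, 10) for i in range(11)]     # theta = 0, 1/10, ..., 1
curves = {}                                     # S -> set of distinct likelihood curves
for x in product((0, 1), repeat=3):
    curve = tuple(likelihood(x, t) for t in grid)
    curves.setdefault(sum(x), set()).add(curve)

for s in sorted(curves):
    print(s, len(curves[s]))   # one distinct curve for each value of S
```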

Estimation Theory

Point Estimation

Defn (Estimator)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω.

Then an estimator of the parameter φ(θ) = φ(θ1, θ2, ..., θk) is any function T(x) = T(x1, x2, x3, ..., xn) of the observation vector.

Defn (Mean Square Error)

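Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω.

Let T(x) be an estimator of the parameter φ(θ). Then the Mean Square Error of T(x) is defined to be:

$$\text{M.S.E.}_{T(\mathbf{x})}(\boldsymbol{\theta}) = E\left[(T(\mathbf{x})-\varphi(\boldsymbol{\theta}))^2\right] = \int (T(\mathbf{x})-\varphi(\boldsymbol{\theta}))^2 f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x}$$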

Defn (Uniformly Better)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω.

Let T(x) and T*(x) be estimators of the parameter φ(θ). Then T(x) is said to be uniformly better than T*(x) if:

$$\text{M.S.E.}_{T(\mathbf{x})}(\boldsymbol{\theta}) \le \text{M.S.E.}_{T^*(\mathbf{x})}(\boldsymbol{\theta}) \quad\text{whenever } \boldsymbol{\theta}\in\Omega$$
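A numerical sketch of these two definitions, using an example of our choosing: for a normal sample with φ(θ) = σ², compare s² (divisor n − 1) with the divisor-n estimator. The exact values M.S.E. = 2σ⁴/(n − 1) and (2n − 1)σ⁴/n² are standard normal-theory results; since (2n − 1)(n − 1) < 2n², the divisor-n estimator is uniformly better in M.S.E., even though s² is the unbiased one. The simulation settings and function name are hypothetical.

```python
import random

def mse_of_variance_estimators(n, sigma, reps, seed=2):
    """Monte Carlo M.S.E. of s^2 (divisor n - 1) and of the divisor-n estimator of sigma^2."""
    rng = random.Random(seed)
    err_s2 = err_n = 0.0
    for _ in range(reps):
        x = [rng.gauss(0.0, sigma) for _ in range(n)]
        xbar = sum(x) / n
        ss = sum((xi - xbar) ** 2 for xi in x)
        err_s2 += (ss / (n - 1) - sigma ** 2) ** 2
        err_n += (ss / n - sigma ** 2) ** 2
    return err_s2 / reps, err_n / reps

n, sigma = 5, 1.0
print(2 * sigma ** 4 / (n - 1), (2 * n - 1) * sigma ** 4 / n ** 2)   # exact: 0.5 0.36
print(mse_of_variance_estimators(n, sigma, 40000))                  # close to (0.5, 0.36)
```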

Defn (Unbiased)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω.

Let T(x) be an estimator of the parameter φ(θ). Then T(x) is said to be an unbiased estimator of the parameter φ(θ) if:

$$E[T(\mathbf{x})] = \int T(\mathbf{x})f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} = \varphi(\boldsymbol{\theta}) \quad\text{for all } \boldsymbol{\theta}\in\Omega$$

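Theorem (Cramér-Rao Lower Bound)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω. Suppose that:

i) $\frac{\partial f(\mathbf{x}\mid\boldsymbol{\theta})}{\partial\boldsymbol{\theta}}$ exists for all x and for all θ ∈ Ω.

ii) $\frac{\partial}{\partial\boldsymbol{\theta}}\int f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} = \int\frac{\partial f(\mathbf{x}\mid\boldsymbol{\theta})}{\partial\boldsymbol{\theta}}\,d\mathbf{x}$

iii) $\frac{\partial}{\partial\boldsymbol{\theta}}\int t(\mathbf{x})f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} = \int t(\mathbf{x})\frac{\partial f(\mathbf{x}\mid\boldsymbol{\theta})}{\partial\boldsymbol{\theta}}\,d\mathbf{x}$

iv) $0 < E\left[\left(\frac{\partial \ln f(\mathbf{x}\mid\boldsymbol{\theta})}{\partial\theta_i}\right)^2\right] < \infty$ for all θ ∈ Ω.

Let M denote the p × p matrix with ijth element

$$m_{ij} = -E\left[\frac{\partial^2 \ln f(\mathbf{x}\mid\boldsymbol{\theta})}{\partial\theta_i\,\partial\theta_j}\right], \qquad i, j = 1, 2, \ldots, p.$$

Then V = M⁻¹ is the lower bound for the covariance matrix of unbiased estimators of θ.

That is, var(c'θ̂) = c'var(θ̂)c ≥ c'M⁻¹c = c'Vc, where θ̂ is a vector of unbiased estimators of θ.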
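A one-parameter check (p = 1) of the bound, using the Success-Failure model as our example: with n trials, M = −E[∂² ln f/∂θ²] should equal n/(θ(1 − θ)), and the unbiased estimator x̄ = S/n has variance θ(1 − θ)/n, so it attains M⁻¹. The sketch computes both quantities exactly by enumerating all 2^n outcomes; n = 4 and θ = 3/10 are arbitrary.

```python
from fractions import Fraction
from itertools import product

def fisher_and_var_xbar(n, theta):
    """Exact -E[d^2 ln f / d theta^2] and Var(xbar) for n Success-Failure trials, by enumeration."""
    info = mean = second = Fraction(0)
    for x in product((0, 1), repeat=n):
        s = sum(x)
        p = theta ** s * (1 - theta) ** (n - s)              # f(x | theta)
        d2 = -s / theta ** 2 - (n - s) / (1 - theta) ** 2    # second derivative of ln f(x | theta)
        info += -d2 * p
        xbar = Fraction(s, n)
        mean += xbar * p
        second += xbar ** 2 * p
    return info, second - mean ** 2

info, var_xbar = fisher_and_var_xbar(4, Fraction(3, 10))
print(info, 1 / info, var_xbar)   # 400/21 21/400 21/400
```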

Defn (Uniformly Minimum Variance Unbiased Estimator)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω. Then T*(x) is said to be the UMVU (Uniformly Minimum Variance Unbiased) estimator of φ(θ) if:

1) E[T*(x)] = φ(θ) for all θ ∈ Ω.

2) Var[T*(x)] ≤ Var[T(x)] for all θ ∈ Ω whenever E[T(x)] = φ(θ).

Theorem (Rao-Blackwell)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω.

Let S1(x), S2(x), ..., Sk(x) denote a set of sufficient statistics.

Let T(x) be any unbiased estimator of φ(θ).

Then T*[S1(x), S2(x), ..., Sk(x)] = E[T(x)|S1(x), S2(x), ..., Sk(x)] is an unbiased estimator of φ(θ) such that:

Var[T*(S1(x), S2(x), ..., Sk(x))] ≤ Var[T(x)] for all θ ∈ Ω.
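An exact illustration of the theorem, with an example of our choosing: in the Success-Failure model with n trials, T(x) = x1 is unbiased for θ, and conditioning on the sufficient statistic S = Σxi gives T*(S) = E[x1 | S] = S/n. The sketch enumerates all outcomes (n = 4, θ = 3/10 are arbitrary) and compares means and variances exactly.

```python
from fractions import Fraction
from itertools import product

def rao_blackwell_demo(n, theta):
    """Exact comparison of T(x) = x1 with T*(S) = E[x1 | S] for n Success-Failure trials."""
    outcomes = list(product((0, 1), repeat=n))
    prob = {x: theta ** sum(x) * (1 - theta) ** (n - sum(x)) for x in outcomes}

    # T*(s) = E[x1 | S = s], computed from the conditional distribution of x given S
    t_star = {}
    for s in range(n + 1):
        group = [x for x in outcomes if sum(x) == s]
        t_star[s] = sum(x[0] * prob[x] for x in group) / sum(prob[x] for x in group)

    def mean_and_var(estimate):
        """Mean and variance of estimate(x) under f(x | theta)."""
        m = sum(estimate(x) * prob[x] for x in outcomes)
        return m, sum((estimate(x) - m) ** 2 * prob[x] for x in outcomes)

    return t_star, mean_and_var(lambda x: x[0]), mean_and_var(lambda x: t_star[sum(x)])

t_star, (m_t, v_t), (m_star, v_star) = rao_blackwell_demo(4, Fraction(3, 10))
print([str(t_star[s]) for s in range(5)])   # ['0', '1/4', '1/2', '3/4', '1']
print(m_t, v_t)                             # 3/10 21/100
print(m_star, v_star)                       # 3/10 21/400
```

Both estimators are unbiased, but conditioning on S divides the variance by n here.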

Theorem (Lehmann-Scheffé)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω.

Let S1(x), S2(x), ..., Sk(x) denote a set of complete sufficient statistics.

Let T*[S1(x), S2(x), ..., Sk(x)] be an unbiased estimator of φ(θ). Then T*(S1(x), S2(x), ..., Sk(x)) is the UMVU estimator of φ(θ).
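A short worked instance, assuming the Success-Failure model with n trials (our illustration): S = Σxi is a complete sufficient statistic for θ (a standard fact for this binomial setting), and

$$E\left[\frac{S}{n}\right] = \frac{1}{n}\sum_{i=1}^{n}E[x_i] = \frac{1}{n}\cdot n\theta = \theta,$$

so by the theorem S/n is the UMVU estimator of θ. Its variance θ(1 − θ)/n also equals the Cramér-Rao bound for this model.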

Defn (Consistency)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω. Let Tn(x) be an estimator of φ(θ). Then Tn(x) is called a consistent estimator of φ(θ) if for any ε > 0:

$$\lim_{n\to\infty} P\left[\,|T_n(\mathbf{x})-\varphi(\boldsymbol{\theta})| > \varepsilon\,\right] = 0 \quad\text{for all } \boldsymbol{\theta}\in\Omega$$

Defn (M. S. E. Consistency)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x|θ) where the unknown parameter vector θ ∈ Ω. Let Tn(x) be an estimator of φ(θ). Then Tn(x) is called an M. S. E. consistent estimator of φ(θ) if:

$$\lim_{n\to\infty}\text{M.S.E.}_{T_n}(\boldsymbol{\theta}) = \lim_{n\to\infty}E\left[(T_n(\mathbf{x})-\varphi(\boldsymbol{\theta}))^2\right] = 0 \quad\text{for all } \boldsymbol{\theta}\in\Omega$$
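A numerical sketch of consistency for an example of our choosing: Tn = S/n, the proportion of Successes in n Success-Failure trials. Its M.S.E. is θ(1 − θ)/n → 0, so it is M. S. E. consistent, and (by Chebyshev's inequality) therefore consistent. The code computes P[|S/n − θ| > ε] exactly from the binomial distribution for growing n; θ = 3/10 and ε = 1/10 are arbitrary, and the event is tested with `Fraction` to avoid rounding at the boundary.

```python
from fractions import Fraction
from math import comb

def prob_far(n, theta, eps):
    """P[|S/n - theta| > eps] for S ~ Binomial(n, theta); the event is tested exactly with Fractions."""
    p = float(theta)
    return sum(
        comb(n, s) * p ** s * (1 - p) ** (n - s)
        for s in range(n + 1)
        if abs(Fraction(s, n) - theta) > eps
    )

theta, eps = Fraction(3, 10), Fraction(1, 10)
probs = [prob_far(n, theta, eps) for n in (10, 100, 1000)]
print(probs)   # shrinks toward 0 as n grows
```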

Methods for Finding Estimators

1. The Method of Moments

2. Maximum Likelihood Estimation


Method of Moments

Let x1, …, xn denote a sample from the density function

f(x; θ1, …, θp) = f(x; θ)

The kth moment of the distribution being sampled is defined to be:

$$\mu_k(\theta_1,\ldots,\theta_p) = E[x^k] = \int x^k f(x;\theta_1,\ldots,\theta_p)\,dx$$

The kth sample moment is defined to be:

$$m_k = \frac{1}{n}\sum_{i=1}^{n}x_i^k$$

To find the method of moments estimators of θ1, …, θp we set up the equations:

$$\begin{aligned} \mu_1(\theta_1,\ldots,\theta_p) &= m_1 \\ \mu_2(\theta_1,\ldots,\theta_p) &= m_2 \\ &\;\;\vdots \\ \mu_p(\theta_1,\ldots,\theta_p) &= m_p \end{aligned}$$

We then solve these equations for θ1, …, θp.

The solutions $\tilde{\theta}_1, \ldots, \tilde{\theta}_p$ are called the method of moments estimators.
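A sketch of the recipe for the normal model (our example, reusing the n = 10 sample from the likelihood section). Here μ1(θ) = μ and μ2(θ) = σ² + μ², so solving μ1 = m1 and μ2 = m2 gives μ̃ = m1 and σ̃² = m2 − m1², the variance with divisor n. Function names are ours.

```python
def sample_moment(x, k):
    """k-th sample moment m_k = (1/n) * sum x_i^k."""
    return sum(xi ** k for xi in x) / len(x)

def normal_mom(x):
    """Solve mu = m_1 and sigma^2 + mu^2 = m_2 for (mu, sigma^2)."""
    m1, m2 = sample_moment(x, 1), sample_moment(x, 2)
    return m1, m2 - m1 ** 2

data = [57.1, 72.3, 75.0, 57.8, 50.3, 48.0, 53.1, 58.5, 53.7, 49.6]   # the n = 10 sample
mu_tilde, sigma2_tilde = normal_mom(data)
print(round(mu_tilde, 2), round(sigma2_tilde, 4))   # 57.54 76.4824
```

Note that σ̃² = 9s²/10 = 9(9.2185)²/10, not s².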

The Method of Maximum Likelihood

Suppose that the data x1, …, xn have joint density function

f(x1, …, xn; θ1, …, θp)

where θ = (θ1, …, θp) are unknown parameters assumed to lie in Ω (a subset of p-dimensional space).

We want to estimate the parameters θ1, …, θp.

Definition: Maximum Likelihood Estimation

Suppose that the data x1, …, xn have joint density function

f(x1, …, xn; θ1, …, θp)

Then the Likelihood function is defined to be

L(θ) = L(θ1, …, θp) = f(x1, …, xn; θ1, …, θp)

The Maximum Likelihood estimators of the parameters θ1, …, θp are the values $\hat{\theta}_1, \ldots, \hat{\theta}_p$ that maximize L(θ) = L(θ1, …, θp), i.e. such that

$$L(\hat{\theta}_1,\ldots,\hat{\theta}_p) = \max_{\theta_1,\ldots,\theta_p} L(\theta_1,\ldots,\theta_p)$$

Note: maximizing L(θ1, …, θp) is equivalent to maximizing the log-likelihood function

$$l(\theta_1,\ldots,\theta_p) = \ln L(\theta_1,\ldots,\theta_p)$$
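For the normal model the maximizers of the log-likelihood are the standard closed forms μ̂ = x̄ and σ̂² = (1/n)Σ(xi − x̄)². The sketch below (our example, reusing the n = 10 sample) evaluates l(μ, σ²) at these values and checks that a few arbitrary perturbations of either parameter lower it; this is a spot check, not a proof of maximality.

```python
import math

def normal_loglik(x, mu, sigma2):
    """l(mu, sigma^2) = -(n/2) ln(2 pi sigma^2) - sum (x_i - mu)^2 / (2 sigma^2)."""
    n = len(x)
    return -0.5 * n * math.log(2 * math.pi * sigma2) - sum((xi - mu) ** 2 for xi in x) / (2 * sigma2)

data = [57.1, 72.3, 75.0, 57.8, 50.3, 48.0, 53.1, 58.5, 53.7, 49.6]   # the n = 10 sample
n = len(data)
mu_hat = sum(data) / n                                     # MLE of mu: xbar
sigma2_hat = sum((xi - mu_hat) ** 2 for xi in data) / n    # MLE of sigma^2: divisor n
best = normal_loglik(data, mu_hat, sigma2_hat)

# Nudging either parameter away from the MLE should lower the log-likelihood
steps = [(0.5, 0.0), (-0.5, 0.0), (0.0, 2.0), (0.0, -2.0), (0.3, 1.0)]
for dmu, ds2 in steps:
    print(dmu, ds2, normal_loglik(data, mu_hat + dmu, sigma2_hat + ds2) < best)
```

Here σ̂² coincides with the method of moments estimator for the same model.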

Hypothesis Testing

Defn (Test of size α)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Let ω be any subset of Ω.

Consider testing the Null Hypothesis

H0: θ ∈ ω

against the alternative hypothesis

H1: θ ∉ ω.

Let A denote the acceptance region for the test (all values of x = (x1, x2, x3, ..., xn) such that the decision to accept H0 is made), and let C denote the critical region for the test (all values of x = (x1, x2, x3, ..., xn) such that the decision to reject H0 is made).

Then the test is said to be of size α if

$$P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} \le \alpha \quad\text{for all } \boldsymbol{\theta}\in\omega \text{ and}$$

$$P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta}_0)\,d\mathbf{x} = \alpha \quad\text{for at least one } \boldsymbol{\theta}_0\in\omega$$

Defn (Power)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Consider testing the Null Hypothesis

H0: θ ∈ ω

against the alternative hypothesis

H1: θ ∉ ω,

where ω is any subset of Ω. Then the Power of the test for θ ∉ ω is defined to be:

$$\pi_C(\boldsymbol{\theta}) = P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x}$$
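A sketch of size and power for a test of our choosing: x1, …, xn from N(μ, σ²) with σ known, H0: μ ≤ μ0 (so ω = (−∞, μ0]) against H1: μ > μ0, with critical region C = {x̄ ≥ μ0 + z_α σ/√n}. The probability P[x ∈ C] stays at or below α on ω, equals α at μ0, and grows with μ on the alternative. The values μ0 = 50, σ = 10, n = 25 are hypothetical; `statistics.NormalDist` supplies Φ and its inverse.

```python
from statistics import NormalDist

def power(mu, mu0, sigma, n, alpha=0.05):
    """P[x in C] for C = {xbar >= mu0 + z_alpha * sigma / sqrt(n)}, where xbar ~ N(mu, sigma^2 / n)."""
    z = NormalDist().inv_cdf(1 - alpha)
    cutoff = mu0 + z * sigma / n ** 0.5
    return 1 - NormalDist(mu, sigma / n ** 0.5).cdf(cutoff)

mu0, sigma, n = 50.0, 10.0, 25
for mu in (45.0, 50.0, 52.0, 55.0, 60.0):
    print(mu, round(power(mu, mu0, sigma, n), 4))   # 0.05 at mu = mu0, below 0.05 elsewhere on omega
```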

Defn (Uniformly Most Powerful (UMP) test of size α)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Consider testing the Null Hypothesis

H0: θ ∈ ω

against the alternative hypothesis

H1: θ ∉ ω,

where ω is any subset of Ω.

Let C denote the critical region for the test. Then the test is called the UMP test of size α if:

$$P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} \le \alpha \quad\text{for all } \boldsymbol{\theta}\in\omega \text{ and}$$

$$P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta}_0)\,d\mathbf{x} = \alpha \quad\text{for at least one } \boldsymbol{\theta}_0\in\omega$$

and for any other critical region C* such that:

$$P[\mathbf{x}\in C^*] = \int_{C^*} f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} \le \alpha \quad\text{for all } \boldsymbol{\theta}\in\omega \text{ and}$$

$$P[\mathbf{x}\in C^*] = \int_{C^*} f(\mathbf{x}\mid\boldsymbol{\theta}_0)\,d\mathbf{x} = \alpha \quad\text{for at least one } \boldsymbol{\theta}_0\in\omega$$

then

$$\int_C f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} \ge \int_{C^*} f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} \quad\text{for all } \boldsymbol{\theta}\notin\omega.$$

Theorem (Neyman-Pearson Lemma)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x| θ) where the unknown parameter vector θ ∈ Ω = {θ0, θ1}.

Consider testing the Null Hypothesis

H0: θ = θ0

against the alternative hypothesis

H1: θ = θ1.

Then the UMP test of size α has critical region:

$$C = \left\{\mathbf{x} : \frac{f(\mathbf{x}\mid\boldsymbol{\theta}_0)}{f(\mathbf{x}\mid\boldsymbol{\theta}_1)} \le K\right\}$$

where K is chosen so that

$$\int_C f(\mathbf{x}\mid\boldsymbol{\theta}_0)\,d\mathbf{x} = \alpha$$
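A sketch for a simple-versus-simple example of our choosing: an i.i.d. N(μ, σ²) sample with σ known, θ0 = μ0 and θ1 = μ1 > μ0. The normalizing constants cancel, and the ratio reduces to exp{n[(μ1² − μ0²) − 2x̄(μ1 − μ0)]/(2σ²)}, which decreases in x̄. So {ratio ≤ K} is a region of the form {x̄ ≥ c}, with c set by the size condition. The code checks the reduction and the monotonicity numerically; the sample and parameter values are arbitrary.

```python
import math

def density_ratio(x, mu0, mu1, sigma):
    """f(x | mu0) / f(x | mu1) for an i.i.d. N(mu, sigma^2) sample with sigma known."""
    # Normalizing constants cancel: exp{[sum (x - mu1)^2 - sum (x - mu0)^2] / (2 sigma^2)}
    d = sum((xi - mu1) ** 2 - (xi - mu0) ** 2 for xi in x)
    return math.exp(d / (2 * sigma ** 2))

def ratio_from_xbar(xbar, n, mu0, mu1, sigma):
    """The same ratio written through xbar alone."""
    return math.exp(n * ((mu1 ** 2 - mu0 ** 2) - 2 * xbar * (mu1 - mu0)) / (2 * sigma ** 2))

mu0, mu1, sigma = 50.0, 55.0, 10.0
x = [48.2, 61.5, 55.0, 49.9]
print(density_ratio(x, mu0, mu1, sigma), ratio_from_xbar(sum(x) / len(x), len(x), mu0, mu1, sigma))
# With mu1 > mu0 the ratio falls as xbar rises
print([ratio_from_xbar(xb, 4, mu0, mu1, sigma) for xb in (48.0, 52.5, 57.0)])
```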

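Defn (Likelihood Ratio Test of size α)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Consider testing the Null Hypothesis

H0: θ ∈ ω

against the alternative hypothesis

H1: θ ∉ ω,

where ω is any subset of Ω.

Then the Likelihood Ratio (LR) test of size α has critical region:

$$C = \left\{\mathbf{x} : \frac{\max_{\boldsymbol{\theta}\in\omega} f(\mathbf{x}\mid\boldsymbol{\theta})}{\max_{\boldsymbol{\theta}\in\Omega} f(\mathbf{x}\mid\boldsymbol{\theta})} \le K\right\}$$

where K is chosen so that

$$P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta})\,d\mathbf{x} \le \alpha \quad\text{for all } \boldsymbol{\theta}\in\omega \text{ and}$$

$$P[\mathbf{x}\in C] = \int_C f(\mathbf{x}\mid\boldsymbol{\theta}_0)\,d\mathbf{x} = \alpha \quad\text{for at least one } \boldsymbol{\theta}_0\in\omega$$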

Theorem (Asymptotic distribution of Likelihood ratio test criterion)

Let x = (x1, x2, x3, ..., xn) denote the vector of observations having joint density f(x| θ) where the unknown parameter vector θ ∈ Ω.

Consider testing the Null Hypothesis

H0: θ ∈ ω

against the alternative hypothesis

H1: θ ∉ ω,

where ω is any subset of Ω. Let

$$\lambda(\mathbf{x}) = \frac{\max_{\boldsymbol{\theta}\in\omega} f(\mathbf{x}\mid\boldsymbol{\theta})}{\max_{\boldsymbol{\theta}\in\Omega} f(\mathbf{x}\mid\boldsymbol{\theta})}$$

Then, under proper regularity conditions, U = −2 ln λ(x) possesses an asymptotic Chi-square distribution with degrees of freedom equal to the difference between the number of independent parameters in Ω and ω.
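A simulation sketch of the theorem for an example of our choosing: a N(μ, σ²) sample with σ² unknown and H0: μ = μ0. Ω has two free parameters and ω has one (σ²), so U should be approximately χ² with 1 degree of freedom. Maximizing over σ² in each case gives U = n ln(σ̂0²/σ̂²), where σ̂0² = (1/n)Σ(xi − μ0)² and σ̂² = (1/n)Σ(xi − x̄)². The code estimates how often U exceeds the χ²₁ 95% point (the square of the standard normal 97.5% point) under H0; n = 50, the replication count and the seed are arbitrary, and for finite n the rate is only approximately 0.05.

```python
import math
import random
from statistics import NormalDist

def lr_statistic(x, mu0):
    """U = -2 ln(lambda) for H0: mu = mu0 in a N(mu, sigma^2) sample with sigma^2 unknown."""
    n = len(x)
    xbar = sum(x) / n
    s2_omega = sum((xi - mu0) ** 2 for xi in x) / n   # MLE of sigma^2 under H0 (omega)
    s2_full = sum((xi - xbar) ** 2 for xi in x) / n   # MLE of sigma^2 under Omega
    return n * math.log(s2_omega / s2_full)

def rejection_rate(n, reps, mu0=0.0, sigma=1.0, seed=3):
    """Fraction of H0-generated samples whose U exceeds the chi-square(1) 0.95 quantile."""
    rng = random.Random(seed)
    crit = NormalDist().inv_cdf(0.975) ** 2            # chi-square(1) quantile, about 3.841
    hits = sum(lr_statistic([rng.gauss(mu0, sigma) for _ in range(n)], mu0) > crit for _ in range(reps))
    return hits / reps

print(rejection_rate(n=50, reps=10000))   # near the nominal 0.05
```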