Trigger and DAQ of the LHC experiments upgrade

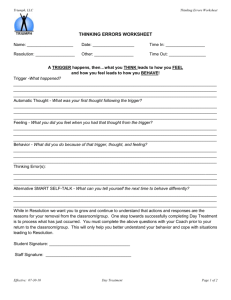

Upgrade of Trigger and Data Acquisition Systems for the LHC Experiments
Nicoletta Garelli, CERN
XXIII International Symposium on Nuclear Electronics and Computing, 12-19 September 2011, Varna, Bulgaria
9/13/2011, N. Garelli (CERN), NEC'2011

Acknowledgment & Disclaimer
• I would like to thank David Francis, Benedetto Gorini, Reiner Hauser, Frans Meijers, Andrea Negri, Niko Neufeld, Stefano Mersi, Stefan Stancu and all other colleagues for answering my questions and sharing ideas.
• My apologies for any mistakes, misinterpretations and misunderstandings.
• This presentation is far from being a complete review of all the trigger and data acquisition activities foreseen by the LHC experiments from 2013 to 2022.
• I will focus on the upgrade plans of ATLAS, CMS and LHCb only.

Outline
• Large Hadron Collider (LHC)
  – today, design, beyond design
• LHC experiments
  – design
  – trigger & data acquisition systems
  – upgrade challenges
• Upgrade plans
  – ATLAS
  – CMS
  – LHCb

LHC: a Discovery Machine
Goal: explore the TeV energy scale to find the Higgs boson & new physics beyond the Standard Model.
How: the Large Hadron Collider (LHC) at CERN, with the possibility of a steady increase of luminosity → large discovery range.

LHC Project in brief
• LEP tunnel: 27 km circumference, ~100 m underground
• pp collisions, centre-of-mass energy E = 14 TeV
• 4 interaction points → 4 big detectors (ALICE, ATLAS, CMS, LHCb)
• Particles travel in bunches at ~c
• Bunches of O(10^11) particles each
• Bunch crossing frequency: 40 MHz
• Superconducting magnets cooled to 1.9 K with 140 tons of liquid He (magnetic field strength ~8.4 T)
• Energy of one beam = 362 MJ (300x Tevatron)
[Figure: CERN accelerator chain (PS, SPS, LHC) with the four detectors]

LHC: Today, Design, Beyond Design

| Parameter | Current status | Design | Beyond design |
|---|---|---|---|
| beam energy (TeV) | 3.5 (½ design) | 7 (7x Tevatron) | - |
| bunch spacing (ns) | 50 (½ design) | 25 | - |
| colliding bunches nb | 1331 (~½ design) | 2808 | - |
| peak luminosity (cm^-2 s^-1) | 3.1 × 10^33 (~30% design) | 10^34 (30x Tevatron) | 5 × 10^34 (leveled) |
| bunch intensity, protons/bunch (10^11) | 1.25 (>design) | 1.15 | 1.7; 3.4 (with 50 ns) |
| β* (m) (1) | 1 (~½ design) | 0.55 | 0.15 |

(1) β* = beam envelope at the Interaction Point (IP), determined by magnet arrangement & powering. Smaller β* = higher luminosity.
• Interventions are needed to reach design conditions
• The LHC can go further → higher luminosity

LHC Schedule Model
Yearly schedule
• operating at unexplored conditions → long way to reach design performance → need for commissioning & testing periods
• one 2-month Technical Stop (TS). Best period for power saving: Dec-Jan
• after every ~2 months of physics, a shorter TS followed by a Machine Development (MD) period is necessary
• 1 month of heavy-ion run (different physics program)
[Figure: January-December calendar: TS, HWC, physics blocks separated by TS+MD periods, then TS & ion run, TS]
Every 3 years a shutdown of at least 1 year is needed for major component upgrades.
… and the experiments?
• profit from LHC TS & shutdown periods for improvements & replacements
• LHC drives the schedule → the experiments' schedule has to be flexible
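
The stored energy per beam quoted in the project overview can be cross-checked from the design column of the parameter table. The sketch below is only a back-of-the-envelope check, not part of the original talk; the function name is hypothetical and the electron-volt conversion constant is the standard SI value rather than a number from the slides.

```python
# Cross-check of the stored energy per beam from the quoted design parameters.
# ELEMENTARY_CHARGE is the SI joule-per-eV conversion (not taken from the slides).
ELEMENTARY_CHARGE = 1.602176634e-19


def stored_beam_energy_mj(n_bunches, protons_per_bunch, beam_energy_tev):
    """Total energy stored in one beam, in megajoules (hypothetical helper)."""
    energy_per_proton_j = beam_energy_tev * 1e12 * ELEMENTARY_CHARGE
    return n_bunches * protons_per_bunch * energy_per_proton_j / 1e6


# Design values from the table: 2808 bunches, 1.15e11 protons/bunch, 7 TeV/beam.
design_mj = stored_beam_energy_mj(2808, 1.15e11, 7.0)
# "Current status" values: 1331 bunches, 1.25e11 protons/bunch, 3.5 TeV/beam.
current_mj = stored_beam_energy_mj(1331, 1.25e11, 3.5)

print(f"design:  {design_mj:.0f} MJ")   # prints "design:  362 MJ"
print(f"current: {current_mj:.0f} MJ")
```

The design result reproduces the 362 MJ figure; the 2011 value comes out roughly a factor of four lower because both the beam energy and the number of bunches are about half of design.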

LHC: Towards Design Conditions
Don't forget that life is not always easy:
• Single Event Effects due to radiation
• Unidentified Falling Objects (UFO), fast beam losses
What the LHC can do as it is today:
• with 50 ns spacing: nb = 1380, bunch intensity = 1.7 × 10^11, β* = 1.0 m → L = 5 × 10^33 cm^-2 s^-1 at 3.5 TeV
• with 25 ns spacing: nb = 2808, bunch intensity = 1.2 × 10^11, β* = 1.0 m → L = 4 × 10^33 cm^-2 s^-1 at 3.5 TeV
Not possible to reach design performance today:
1) Beam energy: the joints between s/c magnets limit it to 3.5 TeV/beam
2) Beam intensity: collimation limits the luminosity to ~5 × 10^33 cm^-2 s^-1 with E = 3.5 TeV/beam

LHC Draft Schedule – Consolidation
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• fully repair joints between s/c magnets
• install magnet clamps
• An electrical fault in the bus between superconducting magnets caused the 19.9.2008 accident → E limited to 3.5 TeV
• Once the joints are repaired, 7 TeV will be reached after dipole training: O(100) quenches/sector → O(month) of hardware commissioning

LHC Upgrade Draft Schedule – Phase 1 & 2
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• fully repair joints between s/c magnets
• install magnet clamps
2017 PHASE 1: E = 7 TeV, L = 2 × 10^34 cm^-2 s^-1
• collimation upgrade: a new collimation system is necessary for protection from high losses at higher luminosity
• injector upgrade (Linac4)
2021 PHASE 2 (the Super-LHC): E = 7 TeV, L = 5 × 10^34 cm^-2 s^-1
• new bigger quadrupoles → smaller β*
• new RF crab cavities
3000 fb^-1 by the end of 2030 → x10^3 wrt today

LHC Experiments Design
• LHC environment (design)
  – σ(pp inelastic) ~ 70 mb → event rate = 7 × 10^8 Hz
  – Bunch crossing (BC) every 25 ns (40 MHz) → ~22 interactions every "active" BC
  – 1 interesting collision is rare & always hidden within ~22 minimum-bias collisions = pile-up
• Stringent requirements
  – fast electronics response to resolve individual bunch crossings
  – high granularity (= many electronics channels) to avoid that a pile-up event (1) goes in the same detector element as the interesting event (1)
  – radiation resistant
(1) Event = snapshot of the values of all front-end electronics elements containing particle signals from a single BC

LHC Upgrade: Effects on Experiments
[Timeline: 2013, 2014, 2017, 2018, 2021, 2022]
Challenge for experiments: LHC luminosity x10 higher than today after the second long shutdown (phase 1)
• Higher peak luminosity → higher pile-up
  – more complex trigger selection
  – higher detector granularity
  – radiation-hard electronics
• Higher accumulated luminosity → radiation damage: need to replace components
  – sensors: Inner Tracker in particular (~200 MCHF/experiment)
  – electronics? not guaranteed after 10 y of use

Interesting Physics at LHC
• σ_tot ≈ 100 mb: total (elastic, diffractive, inelastic) cross-section of proton-proton collisions
• σ_H (500 GeV) ≈ 1 pb: cross-section of SM Higgs boson production
• σ_tot / σ_H = 100 mb / 1 pb = 10^11
• DESIGN: Higgs → 4μ within ~22 MinBias: find a needle in the haystack!
• BEYOND DESIGN: ~100 MinBias → 5x bigger haystack
[Figure source: Fluegge, G. 1994, Future Research in High Energy Physics, Tech. rep.]

Trigger & Data Acquisition (DAQ) Systems
• @ LHC nominal conditions → O(10) TB/s of data produced
  – mostly useless data (min. bias events)
  – impossible to store them
• Trigger & DAQ: select & store interesting data for analysis at O(100) MB/s
  – TRIGGER: select interesting events (the Higgs boson in the haystack)
  – DAQ: convey data to local mass storage
  – Network: the backbone; large Ethernet networks with O(10^3) Gbit & 10-Gbit ports, O(10^2) switches
• Until now: high efficiency (>90%)
[Diagram: 40 MHz, O(10) TB/s → Trigger & DAQ → local storage → O(100) MB/s → CERN data storage]

Comparing LHC Experiments Today

| Experiment | Read-out channels | Trigger levels | Read-out links (type, out, #) | Level 0-1-2 rate (Hz) | Event size (B) | HLT out (MB/s) |
|---|---|---|---|---|---|---|
| ATLAS | ~90 × 10^6 | 3 | S-link, 160 Mb/s, ~1600 | L1 ~ 10^5, L2 ~ 3 × 10^3 | 1.5 × 10^6 | 300 |
| CMS | ~90 × 10^6 | 2 | S-link64, 400 Mb/s, ~500 | L1 ~ 10^5 | 10^6 | 600 |
| LHCb | ~1 × 10^6 | 2 | G-link, 200 Mb/s, ~400 | L0 ~ 10^6 | 5.5 × 10^4 | 70 |

• Similar read-out links
• ATLAS: partial & on-demand read-out @L2
• CMS & LHCb: read-out everything @L1

ATLAS Trigger & DAQ (today)
Trigger:
• Level 1 (Calo/Muon detectors): 40 MHz input, latency <2.5 μs, L1 Accept 75 (100) kHz; sends Regions Of Interest (RoI) to Level 2
• Level 2: RoI requests, RoI data (~2%), ~40 ms, L2 Accept ~3 kHz
• Event Filter: ~4 s, EF Accept ~200 Hz
• Level 2 + Event Filter = High Level Trigger
DAQ:
• ATLAS event: 1.5 MB every 25 ns at 40 MHz
• Detector read-out: FE → ROD → ReadOut System, 112 GB/s at 75 kHz
• Data Collection Network → Event Builder (SubFarmInput): ~4.5 GB/s
• Event Filter Network → SubFarmOutput: ~300 MB/s → CERN data storage

CMS Trigger & DAQ (today)
• LV1 trigger HW:
  – custom electronics
  – rate from 40 MHz to 100 kHz
• Event building
  – 1st stage based on Myrinet technology: FED-builder
  – 2nd stage based on TCP/IP over GbE: RU-builder
  – 8 independent identical DAQ slices
  – 100 GB/s throughput
• HLT: PC farm
  – event driven
  – rate from 100 kHz to O(100) Hz
[Diagram: detectors at 40 MHz → front-end pipelines, Level-1 trigger O(μs) → 100 kHz → read-out buffers → switching networks → processor farms, High Level Trigger O(s) → 100 Hz → mass storage]
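
Several of the headline numbers in the slides above follow from simple products: interaction rate = cross-section × luminosity, pile-up = interaction rate / rate of filled bunch crossings, and throughput = accept rate × event size. The sketch below re-derives them as a sanity check. It is an illustration, not the experiments' own calculation: the function names are hypothetical, and the LHC revolution frequency (about 11.245 kHz) is an assumed value that does not appear in the slides.

```python
# Back-of-the-envelope check of rates quoted in the slides (illustrative only).
MILLIBARN_CM2 = 1e-27   # 1 mb expressed in cm^2 (unit conversion)
F_REV_HZ = 11245.0      # ASSUMED LHC revolution frequency, not from the slides


def interaction_rate_hz(sigma_mb, luminosity):
    """Inelastic interaction rate for a cross-section in mb and L in cm^-2 s^-1."""
    return sigma_mb * MILLIBARN_CM2 * luminosity


def mean_pileup(sigma_mb, luminosity, n_bunches):
    """Average interactions per filled ("active") bunch crossing."""
    return interaction_rate_hz(sigma_mb, luminosity) / (n_bunches * F_REV_HZ)


def throughput_gb_per_s(accept_rate_hz, event_size_bytes):
    """Data rate out of a trigger level, in GB/s (1 GB = 1e9 bytes)."""
    return accept_rate_hz * event_size_bytes / 1e9


rate = interaction_rate_hz(70, 1e34)            # slides quote 7 x 10^8 Hz
pileup = mean_pileup(70, 1e34, 2808)            # slides quote ~22
atlas_l1 = throughput_gb_per_s(75e3, 1.5e6)     # slides quote 112 GB/s
atlas_l2 = throughput_gb_per_s(3e3, 1.5e6)      # slides quote ~4.5 GB/s
atlas_ef = throughput_gb_per_s(200, 1.5e6)      # slides quote ~300 MB/s
cms_l1 = throughput_gb_per_s(1e5, 1e6)          # slides quote 100 GB/s

print(f"interaction rate: {rate:.1e} Hz, pile-up: {pileup:.1f}")
print(f"ATLAS L1/L2/EF: {atlas_l1:.1f} / {atlas_l2:.1f} / {atlas_ef:.1f} GB/s")
print(f"CMS event builder input: {cms_l1:.0f} GB/s")
```

Under the same assumptions, scaling the luminosity to 5 × 10^34 cm^-2 s^-1 with the bunch pattern unchanged multiplies the pile-up by five, which is consistent with the "~100 MinBias" beyond-design figure.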

Experiments Challenges Beyond Design
• Beyond design → new working point to be established
• Higher pile-up → increased pattern recognition problems
• Impossible to change calorimeter detectors (budget, time, manpower)
• Necessary to change inner tracker
  – current one damaged by radiation
  – need for more granularity
• Level-1 @ higher pile-up → select all interesting physics
  – simple increase of pT thresholds not possible: a lot of physics would be lost
  – more sophisticated decision criteria needed
    • move software algorithms into electronics
    • muon chambers: better resolution required for trigger
    • add inner tracker information to Level-1
• Longer Level-1 decision time → longer latency
• More complex reconstruction in HLT
  – more computing power required

DAQ Challenges
• Problem:
  – which read-out?
  – at which bandwidth?
  – which electronics?
• Higher detector granularity → higher number of read-out channels → increased event size
• Longer latency for Level-1 decisions → possible changes in all sub-detector read-out systems
• Larger amount of data to be treated by network & DAQ
  – higher data rate → network upgrade to accommodate higher bandwidth needs
  – need for increased local data storage
• Possibly higher HLT output rate, if increased global data storage (Grid) allows

As of Today: Difficult Planning
• Hard to plan
  – while maintaining running experiments
  – with uncertain schedule
• Upgrade plans driven by
  – Trigger: guarantee good & flexible selection
  – DAQ: guarantee high data-taking efficiency
• New technologies might be needed
  – Trigger: new L1 trigger & more powerful HLT
  – DAQ: read-out links, electronics & network
• To be considered
  – replacing some components may damage others
  – new architecture must be compatible with existing components in case of partial upgrade

ATLAS
A Toroidal LHC ApparatuS

ATLAS Draft Schedule – Consolidation
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• TDAQ farms & networks consolidation
• Sub-detector read-out upgrades to enable a Level-1 output of 100 kHz
• The current innermost pixel layer will have significant radiation damage and largely reduced detector efficiency → replacement needed by 2015
• Insertable B-Layer (IBL): built around a new beam pipe & slipped inside the current detector

Evolution of TDAQ Farm
• Today: architecture with many farms & network domains:
  – CPU & network resource balancing on 3 different farms (L2, EB, EF) requires expertise
  – 2 trigger steering instances (L2, EF)
  – 2 separate networks (DC & EF)
  – huge configuration
• Proposal: merge L2, EB, EF within a single homogeneous system
  – each node can perform the whole HLT selection steps
    • L2 processing & data collection based on RoIs
    • event building
    • event filter processing on the full event
  – automatic system balance
  – a single HLT instance

TDAQ Network Proposal
• Current network architecture:
  – system working well
  – EF core router: single point of failure
  – new technologies
• 2013: replacement of cores mandatory (exceeded lifetime)
• Proposal: merge DC & EF networks
  – OK with new chassis
  – some cost reduction
  – perfect for TDAQ farms evolution
  – mixing functionalities reduces the scaling potential with the actual TDAQ farms configuration
[Diagram: ROS, DC network, SV, PU, XPU, SFI, EF network, EF, SFO nodes]

ATLAS Upgrade Draft Schedule – Phase 1
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• TDAQ farm & network consolidation
• L1 @ 100 kHz
• IBL
2017 PHASE 1: E = 7 TeV, L = 2 × 10^34 cm^-2 s^-1
Level-1 upgrade to cope with pile-up after phase 1:
• New muon detector Small Wheel (SW)
• Provide increased calorimeter granularity
• Level-1 topological trigger
• Fast Track Processor (FTK)

New Muon Small Wheel (SW)
• Muon precision chambers (CSC & MDT): performance deteriorated
  – need to replace with a better detector
• Exploit the new SW to also provide trigger information
  – today: 3 trigger stations in barrel (RPC) & end-caps (TGC)
  – new SW = 4th trigger station
    • reduce fakes
    • improve pT resolution
    • level-1 track segment with 1 mrad resolution
• Micromegas detector: new technology which could be used

L1 Topological Trigger
• Proposal: additional electronics to have a Level-1 trigger based on topology criteria, to keep it efficient at high luminosities: Δφ, Δη, angular distance, back-to-back, not back-to-back, mass
  – di-electron, low lepton pT in Z, ZZ/ZW, WW, H→WW/ZZ/ττ and multi-lepton SUSY modes
  – jet topology, muon isolation, …
• New topological trigger processor with input from calorimeter & muon detectors, connected to the new Central Trigger Processor
• Consequence: longer latency; develop common tools for reconstructing topology both in muon & calorimeter detectors

Fast Track Processor (FTK)
• Introduce a highly parallel processor:
  – for the full Si-Tracker
  – provides tracking for all L1-accepted events within O(25 μs)
• Reconstruct tracks >1 GeV
  – 90% efficiency compared to offline
  – track isolation for lepton selection
  – fast identification of b & τ jets
  – primary vertex identification
• Track reconstruction has 2 time-consuming stages:
  – pattern recognition → associative memory
  – track fitting → FPGA
• After L1, before L2
  – HLT selection software interface to FTK output (tracks available earlier)
[Figure: the pattern from reconstruction is compared with pre-stored patterns; a good match between pre-stored & recorded patterns is kept, other patterns are discarded]

ATLAS Upgrade Draft Schedule – Phase 2
2013 PHASE 0: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• Reduce heterogeneity in TDAQ farms & networks
2017 PHASE 1: E = 7 TeV, L = 2 × 10^34 cm^-2 s^-1
• FTK
• L1 topological trigger
2021 PHASE 2: E = 7 TeV, L = 5 × 10^34 cm^-2 s^-1
1. Full digital read-out of calorimeter (data & trigger)
  • faster data transmission
  • trigger access to full calorimeter resolution (provides finer cluster and better electron identification)
  • proposed solution: fast rad-tolerant 10 Gb/s links
2. Precision muon chambers used in trigger logic → dismount as little as possible
3. L1 Track Trigger

Improve L1 Muon Trigger – Phase 2
Current muon trigger:
• trigger logic assumes tracks come from the interaction point (IP)
• pT resolution limited by IP smearing (Phase 2: 50 mm → ~150 mm)
• MDT resolution 100 times better than trigger chambers (RPC)
Proposal: use precision chambers (MDT) in trigger logic
  – reduce rates in barrel
  – no need for vertex assumption
  – improve selectivity for high-pT muons
• Current limitation: MDT read-out is serial & asynchronous → Phase 2: improve MDT electronics performance (solve latency problem)
• Fast MDT read-out options:
  – seeded/tagged method: use information from trigger chambers to define the RoI & only consider the small number of MDT tubes which fall into the RoI. Longer latency
  – unseeded/untagged method: stand-alone track finding in MDT chambers. Larger bandwidth required to transfer the MDT hit pattern

Track Trigger – Phase 2
New Inner Detector
• only with silicon sensors
• better resolution, reduced occupancy
• more pixel layers for b-tagging
• Possible to introduce an L1 track trigger → keep L1 rate @ 100 kHz
  – combine with calorimeter to improve electron selection
  – correlate muon with track in ID & reduce fake tracks
  – possible L1 b-tagging
• L1 track trigger, self-seeded
  – use high-pT tracks as seed
  – need fast communication to form coincidences between layers
  – latency of ~3 μs
• L1 track trigger, RoI-seeded
  – need to introduce an L0 trigger to select RoI at L1
  – long ~10 μs L1 latency
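
A longer Level-1 latency matters for the read-out because every front-end channel has to buffer its data for each bunch crossing until the Level-1 decision arrives. A minimal sketch of that arithmetic follows. It assumes the quoted latencies are microseconds (the current ATLAS value of 2.5 μs and the ~3 μs and ~10 μs track-trigger options) and the design bunch spacing of 25 ns; the function name is hypothetical and real front-end designs include further margins not modelled here.

```python
# Illustrative estimate: how many bunch crossings a front-end pipeline must
# hold while waiting for the Level-1 decision. Latencies are ASSUMED to be
# in microseconds; the helper name is hypothetical.
BUNCH_SPACING_NS = 25  # design bunch spacing from the LHC parameter table


def pipeline_depth(latency_ns, bunch_spacing_ns=BUNCH_SPACING_NS):
    """Bunch crossings to buffer for a given latency (rounded up)."""
    return -(-latency_ns // bunch_spacing_ns)  # integer ceiling division


scenarios_ns = {
    "today (<2.5 us)": 2500,
    "self-seeded track trigger (~3 us)": 3000,
    "RoI-seeded track trigger (~10 us)": 10000,
}

depths = {name: pipeline_depth(ns) for name, ns in scenarios_ns.items()}
for name, depth in depths.items():
    print(f"{name}: {depth} bunch crossings")
```

With these inputs the RoI-seeded option needs a buffer four times deeper than today's, which illustrates why a latency change can force modifications in all sub-detector read-out systems.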

CMS
The Compact Muon Solenoid
[Figure: multi-jet event at 7 TeV]

CMS Consolidation Phase
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
Trigger & DAQ consolidation
• x3 increase of HLT farm processing power
• replace HW for Online DB
Muons
CMS design: space for a 4th layer of forward muon chambers (CSC & RPCs)
• better trigger robustness in 1.2 < |η| < 1.8
• preserve low pT threshold

CMS Upgrade Draft Schedule – Phase 1
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• Trigger & DAQ consolidation
• 4th layer muon detectors
2017 PHASE 1: E = 7 TeV, L = 2 × 10^34 cm^-2 s^-1
• New pixel detector
• Upgrade hadron calorimeter (HCAL): silicon photomultipliers, finer segmentation of read-out in depth
• New trigger system
• Event Builder & HLT farm upgrade
Phase-1 requirements & plans as for ATLAS:
• radiation damage → change the innermost silicon tracker
• maintain Level-1 < 100 kHz, low latency, good selection → tracking info @ L1 + more granularity in calorimeters
• DAQ evolution to cope with the new design

CMS New Pixel Detector – Phase 1
• New pixel detector (4 barrel layers, 3 end-caps)
• Need for replacement
  – radiation damage (innermost layer might be replaced before)
  – read-out chips just adequate for L = 10^34 cm^-2 s^-1, with 4% dynamic data loss due to read-out latency & buffer → to be improved
• Goal
  – better tracking performance
  – improved b-tagging capabilities
  – reduced material using a new cooling system: CO2 instead of C6F14

CMS New Trigger System – Phase 1
• Introduce regional calorimeter trigger
  – to use full granularity for internal processing
  – more sophisticated clustering & isolation algorithms to handle higher rates and complex events
• New infrastructure based on μTCA for increased bandwidth, maintenance, flexibility
• Muon trigger upgrade to handle additional channels & faster FPGA
• Moving from custom ASICs to powerful modern FPGAs with huge processing & I/O capability, to implement more sophisticated algorithms
• Advanced Telecommunications Computing Architecture (ATCA): dramatic increase in computing power & I/O

CMS Upgrade Draft Schedule – Phase 2
2013 CONSOLIDATION: E = 6.5-7 TeV, L = 10^34 cm^-2 s^-1
• Trigger & DAQ consolidation
• 4th layer muon detectors
2017 PHASE 1: E = 7 TeV, L = 2 × 10^34 cm^-2 s^-1
• New pixel detector
• Upgrade HCAL: silicon photomultipliers
• New trigger system
• Event Builder & HLT farm upgrade
2021 PHASE 2: E = 7 TeV, L = 5 × 10^34 cm^-2 s^-1
• Install new tracking system → track trigger
• Major consolidation of electronics systems
• Calorimeter end-caps
• DAQ system upgrade

New Tracker
• R&D projects for new sensors, new front-end, high-speed link (customized version of GBT), tracker geometry arrangement
  – >200M pixels, >100M strips
• Level-1 @ high luminosity → need for L1 tracking
• Delivering information for Level-1
  – impossible to use all channels for individual triggers
  – idea: exploit the strong 3.8 T magnetic field and design modules able to reject signals from low-pT particles
• Different discrimination proposals to reject hits from low-pT tracks → data transmission at 40 MHz feasible:
  1. within a single sensor, based on cluster width
  2. correlating signals from stacked sensor pairs
[Figure: pass/fail examples for methods 1 and 2; scale labels ~1 mm and ~100 μm]

LHCb
The Large Hadron Collider beauty experiment
[Figure: B0s meson, μ+ μ-]

LHCb Trigger & DAQ Today
Single-arm forward spectrometer (~300 mrad acceptance) for precision measurements of CP violation & rare B-meson decays
Trigger chain:
• 40 MHz → L0 (HW): L0 e/γ, L0 had, L0 μ → < 1 MHz
• HLT1 (SW): high-pT tracks with IP != 0 → 30 kHz
• Global reconstruction; HLT2 (SW): inclusive & exclusive selection → 3 kHz
• Event size ~35 kB
Notes:
• Designed to run with an average number of collisions per BX of ~0.5 & nb ~2600 → L ~ 2 × 10^32 cm^-2 s^-1; running with L = 3.3 × 10^32 cm^-2 s^-1
• Reads out 10 times more often than ATLAS/CMS to reconstruct secondary decay vertices → very high rate of small events (~55 kB today)
• L0 trigger: high efficiency on dimuon events, but removes half of the hadronic signals
• All trigger candidates are stored in raw data & compared with offline candidates
• HLT1: tight CPU constraint (12 ms), reconstruct particles in VELO, determine position of vertices
• HLT2: global track reconstruction, searches for secondary vertices

LHCb Upgrade – Phase 1
• 2011: L ~ O(150%) of design, O(35%) of bunches
• after 2017: higher rate → higher ET threshold → even fewer hadronic signals
Interesting physics with ~50 fb^-1 (design: 5 fb^-1):
• precision measurements (charm CPV, …)
• searches (~1 GeV Majorana neutrinos, …)
UPGRADE NEEDED
• increase read-out to 40 MHz & eliminate trigger limitations
• LLT will not simply reduce the rate as L0 does, but will enrich the selected sample
• new VELO detector
• no major changes for muon & calo
• upgrade electronics & DAQ
• data link from detector: components from GBT → read-out network made for ~24 Tb/s
• common back-end read-out board: TELL40. Parallel optical I/Os (12 x > 4.8 Gb/s), GBT compatible
[Diagram: 40 MHz → LLT (custom electronics; Calo, Muon; pT of had, μ, e/γ) → 1-40 MHz → HLT (CPU farm; all sub-detectors; tracking, vertexing, inclusive/exclusive selections) → 20 kHz]
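
The bandwidth figures for the LHCb upgrade can be related to each other with a few divisions. The sketch below is a rough consistency check under simplifying assumptions that are not stated in the slides: every link is taken to run at exactly 4.8 Gb/s (the slides say "> 4.8 Gb/s"), the full ~24 Tb/s is assumed to be usable payload, and all names are hypothetical.

```python
# Rough consistency check of the LHCb upgrade read-out numbers (illustrative).
# ASSUMPTIONS: exactly 4.8 Gb/s per link, no protocol overhead, full network
# capacity used at the 40 MHz read-out rate.
NETWORK_BPS = 24 * 10**12     # ~24 Tb/s read-out network
LINK_BPS = 48 * 10**8         # 4.8 Gb/s per optical link
READOUT_RATE_HZ = 40 * 10**6  # 40 MHz trigger-less read-out
LINKS_PER_GROUP = 12          # "12 x > 4.8 Gb/s" parallel optical I/O

# Number of 4.8 Gb/s links needed to fill the network (ceiling division).
links_needed = -(-NETWORK_BPS // LINK_BPS)

# Aggregate bandwidth of one 12-link optical I/O group, in Gb/s.
group_gbps = LINKS_PER_GROUP * LINK_BPS / 1e9

# Data budget per bunch crossing if the whole network ran at 40 MHz.
bytes_per_crossing = NETWORK_BPS // READOUT_RATE_HZ // 8

print(f"links needed: {links_needed}")
print(f"one 12-link group: {group_gbps:.1f} Gb/s")
print(f"budget per crossing: {bytes_per_crossing / 1000:.0f} kB")
```

The resulting per-crossing budget is larger than the event sizes quoted for today's detector, which would leave headroom for bigger events at higher luminosity; that reading is an inference, not a statement from the talk.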

Need for Bandwidth – Phase 2
Read-out from cavern to counting room
[Diagram labels: front-end board, VME board, S-link, read-out system PC, GBT, ATCA, Ethernet; link speeds ~40 Mb/s, ~200 Mb/s, 1 Gb/s, ~5 Gb/s, ~40 Gb/s]
• New front-end GigaBit Transceiver (GBT) chipset
  – point-to-point high-speed bi-directional link to send data from/to the counting room at ~5 Gb/s
  – simultaneous transmission of data for DAQ, Slow Control, Timing Trigger & Control (TTC) systems
  – robust error correction scheme to correct errors caused by SEUs
• Advanced Telecommunications Computing Architecture (ATCA)
  – point-to-point connections between crate modules
  – higher bandwidth in output
• Which electronics in 20 y? Will VME still be OK? Do we need ATCA functionality?

Conclusion
• Trigger & DAQ systems have worked extremely well until now
• After the long LHC shutdown of 2017: beyond design
  – increased luminosity
  – increased pile-up
• Experiments need to upgrade to work beyond design
  – New Inner Tracker: radiation damage & more pile-up
  – Level-1 trigger: more complex hardware selection & dealing with longer latency
  – New read-out links: higher bandwidth
  – Scale DAQ and network
• Difficult to define an upgrade strategy as of today
  – unstable schedule
  – maintaining current experiments
• One thing is sure: the LHC experiments upgrade will be exciting