Software Testing 2

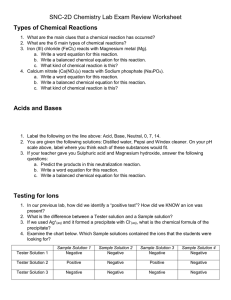

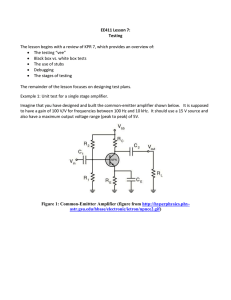

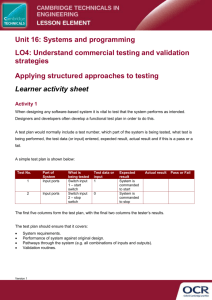

Damian Gordon

Edsger W. Dijkstra
Born May 11, 1930 in Rotterdam, Netherlands
Died August 6, 2002
A Dutch computer scientist who received the 1972 Turing Award for fundamental contributions to developing programming languages.

“Testing shows the presence, not the absence of bugs”

“Program testing can be used to show the presence of bugs, but never to show their absence!”

Let’s call that Principle #1: Testing shows the presence of defects, but if no defects are found that is no proof of correctness.

Principle #2: Exhaustive Testing is impossible. It is not possible to test all combinations of inputs and preconditions, so it is important to focus instead on risk analysis and priorities.

Principle #3: Early Testing is important. Test as soon as possible and focus on defined objectives.

Principle #4: Defect Clustering. A small section of code may contain most of the defects.

Principle #5: Pesticide Paradox. Using the same test cases over and over again will never lead to finding new defects – review them regularly.

Principle #6: Testing is Context Dependent. Safety-critical software is tested differently to an e-commerce site.

Principle #7: Absence-of-Errors fallacy. If the system does not fulfil the users' needs, it is not useful.

◦ Planning and Control
◦ Analysis and Design
◦ Implementation and Execution
◦ Evaluating Exit Criteria and Reporting
◦ Test Closure Activities

Planning and Control

Understanding the goals and objectives of the customers, stakeholders, and the project, and the risks that testing is intended to address. This gives us the mission of testing, or the test assignment.

To help achieve this mission, the test strategy and test policies are created:
◦ Test Strategy: An overall high-level approach, e.g. “system testing is carried out by independent software testers”
◦ Test Policies: Rules for testing, e.g. “we always review design documents”

From here we can define a test plan.

Test Plan:
◦ Determine the scope and risks and identify the objectives of testing: we consider what software, components, systems or other products are in scope for testing.
◦ Determine the test approach (techniques, test items, coverage, identifying and interfacing with the teams involved in testing, testware).
◦ Implement the test policy and/or the test strategy.
◦ Determine the required test resources (e.g. people, test environment, PCs): from the planning we have already done we can now go into detail; we decide on our team make-up and we also set up all the supporting hardware and software we require for the test environment.
◦ Schedule test analysis and design tasks, test implementation, execution and evaluation: we will need a schedule of all the tasks and activities, so that we can track them and make sure we can complete the testing on time.
◦ Determine the exit criteria: we need to set criteria such as coverage criteria (for example, the percentage of statements in the software that must be executed during testing) that will help us track whether we are completing the test activities correctly.

Analysis and Design

This phase focuses on moving from more general objectives to tangible test conditions and test designs.

We start by designing some Black Box tests based on the existing specification of the software. This process will result in the specifications themselves being updated, clarified, and disambiguated.

Next we identify and prioritise the tests, and select representative tests that relate to the parts of the software that carry risks or are of particular interest.

Next we identify the data that will be used to test this software. This will include specifically designed test data as well as “like-live” data.
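As a rough illustration of the two kinds of test data mentioned above, the sketch below builds a few hand-picked cases alongside a volume of synthetic “like-live” records. Everything in it is an assumption made for the example: the function name `make_like_live_customers`, the record fields, and the boundary values are hypothetical and do not come from any real system.

```python
import random
import string


def make_like_live_customers(count, seed=0):
    """Generate synthetic customer records shaped like production data.

    Hypothetical helper: field names and value ranges are illustrative only.
    """
    rng = random.Random(seed)  # seeded so a test run can be reproduced
    records = []
    for i in range(count):
        # Random letters instead of real names, so no real customer is exposed.
        name = "".join(rng.choice(string.ascii_lowercase) for _ in range(8)).capitalize()
        records.append({
            "customer_id": i + 1,
            "name": name,
            # example.com is a domain reserved for documentation and testing.
            "email": f"{name.lower()}@example.com",
            "balance": round(rng.uniform(0, 10_000), 2),
        })
    return records


# Specifically designed test data: hand-picked boundary cases (assumed values).
DESIGNED_CASES = [
    {"customer_id": 0, "name": "", "email": "", "balance": 0.0},
    {"customer_id": 999_999, "name": "X" * 255, "email": "x@example.com", "balance": -0.01},
]

if __name__ == "__main__":
    volume = make_like_live_customers(10_000)
    print(len(volume), "like-live records,", len(DESIGNED_CASES), "designed cases")
```

Seeding the generator is one possible design choice: it keeps a failing run repeatable, at the cost of always exercising the same synthetic records unless the seed is changed.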
It may be necessary to generate a large volume of “like-live” data to stress-test the system. It is important that the “like-live” data doesn’t include real customers' names, etc., if there would be any confidentiality issues.

Finally we design the test environment setup and identify any required infrastructure and tools, including support tools such as spreadsheets, word processors, project-planning tools, etc.

Implementation and Execution

We build the tests based on test cases. We may set up testware for automated testing. We need to plan for the set-up and configuration taking significant time.

Implementation
◦ Prioritise test cases.
◦ Group together similar test cases into a test suite; these usually share test data.
◦ Verify that the test environment has been set up correctly.

Execution
◦ Execute the test suites following the test procedures.
◦ Log the outcome of test execution and record all important information.
◦ Compare actual results with expected results, and report any discrepancies as incidents.
◦ Repeat test activities as a result of action taken for each discrepancy.

Evaluating Exit Criteria and Reporting

Compare test execution to the stated objectives of the development.

Exit criteria (cf. Fagan inspection) are requirements which must be met to complete a specific process (an example might be “testing team completes and files testing report”).
◦ Check test logs against the criteria: is all evidence present and fully documented?
◦ Assess if more tests are needed.
◦ Write a Test Summary Report for stakeholders.

Test closure activities include:
◦ Check all deliverables have been delivered.
◦ Finalise and archive all testware, such as scripts, infrastructure, etc., for future projects.
◦ Evaluate how testing went and analyse lessons learned for future projects.

◦ Independent Testing – who is a tester?
◦ Why do we sometimes not get on with the rest of the team?

Independent Testing – who is a tester?

A tester comes to the process from a critical perspective. Unlike a designer or developer, who works positively and optimistically towards the project, the tester must always focus on judging and appraising.

Suppose you were going to cook a meal to enter in a competition for chefs. You select the menu, collect the ingredients, cook the food, set the table, and serve the meal. If you want to win, you do each task as well as you can.

Suppose instead you are one of the judges evaluating the competition meals. You examine everything critically, including the menu, the ingredients, the methods used, keeping to time and budget allowances, choice of ingredients, the elegance of the table setting and the serving, and the look and taste of the meal. To differentiate between the competition chefs, you'll praise every good aspect of their performances but you'll also note every fault and error each chef made.

So it is with software testing: building the software requires a different mindset from testing the software.

This is not to say that a programmer cannot be a tester, or a tester cannot be a programmer; in fact, in many organisations people assume both roles at different times. The programmer is usually the first line of testing, since they will be testing elements of the programs as they are writing the code, and will be finding and eliminating errors before anyone else sees the code.

The big problem is that it’s hard to spot issues with your own work, so someone who is independent of the development process is better placed to review and judge.

Several levels of independence can be identified, listed here from the lowest level of independence to the highest:
◦ tests by the person who wrote the item under test
◦ tests by another person within the same team, such as another programmer
◦ tests by a person from a different organizational group, such as an independent test team
◦ tests designed by a person from a different organization or company, such as outsourced testing or certification by an external body

Why do we sometimes not get on with the rest of the team?

Some people on a team want to get the project done and delivered as quickly as possible, whereas others want to ensure that a bug-free system is delivered.

If people are unclear as to the amount of testing that has to be done to complete the test strategy, or if development takes longer than anticipated, there may be pressure to truncate the test process.

When a tester is checking code and detects errors, they perceive this as a good thing: we have found defects or failures in the system.

However, many developers take great pride in their work, and the software they write becomes personal to them. From their point of view, any flaws that are uncovered in the system could be taken as a personal attack on them.

So...

Communicate findings on the product in a neutral, fact-focused way without criticizing the person who created it. For example, write objective and factual incident reports and review findings.
◦ Don't gloat - you are not perfect either!
◦ Don't blame - any mistakes are probably by the group rather than an individual.
◦ Be constructively critical and discuss the defect and how you are going to log it.

Explain that by knowing about this now we can work round it or fix it so the delivered system is better for the customer.
◦ Say what you liked and what worked, as well as what didn't work.
◦ Show what the risk is honestly - not everything is high priority.
◦ Don't just see the pessimistic side - give praise as well as criticism.
◦ Show what risks have been uncovered and the benefits of the review or test.

Start with collaboration rather than battles. Remind everyone of the common goal of better quality systems.
◦ Be polite and helpful, collaborate with your colleagues.
◦ Try to understand how the other person feels and why they react as they do.
◦ Confirm that the other person has understood what you have said and vice versa.
◦ Explain how the test or review helps the author: what's in it for them.
◦ Offer your work to be reviewed, too.

Maybe we could use the Six Thinking Hats?