segmentation - Andrew T. Duchowski

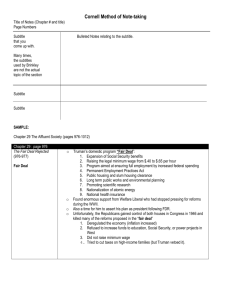


Evaluating Eye Movement Differences when Processing Subtitles

Feifan Zheng, Computer Science, Bard College (fz6131@bard.edu)
Andrew T. Duchowski, Computer Science, Clemson University (duchowski@acm.org)
Elisa Perego, Department of Psychology, University of Trieste

Abstract

Our experimental study enables us to obtain a richer picture of the visual processing of subtitled films. By analyzing eye movement data, we aim to determine whether different subtitle types influence gaze switching during subtitled film viewing. Our hypothesis is that different subtitle formats cause different tradeoffs between image processing and text processing. Specifically, we hypothesize that real-time subtitles have a disruptive effect on information processing and recognition performance. The eye tracking data, however, show no significant difference in gaze patterns among the four types of subtitles tested.

Introduction & Background

Previous research has shown that different types of text grouping in respoken subtitles elicit different viewing behaviors (Rajendran et al., 2011). This study examines segmented film (not live) subtitles. The particular type of segmentation tested in this study splits a noun phrase (NP) structure. We considered the following NP splits:
1. Noun + Adjective;
2. Noun + Prepositional Phrase;
3. Adjective + Noun;
4. Determiner + Noun.

Methodology

• The experiment was a between-subjects factorial design with 4 levels.
• Participants watched the same 10-minute video excerpt from a Hungarian drama (see Figure 1). The video was subtitled with one of four types of subtitles, modified in 28 places. Participants were instructed to watch the clip for content in both the subtitles and the video.
• Participants took recognition tests and filled out questionnaires a week after they watched the testing video in front of the eye tracking equipment.
• The four types of subtitles tested were (see Figure 2):
1. BENE (well-segmented subtitles: NP structures are kept on the same line);
2. MALE (ill-segmented subtitles: NP structures are broken across two lines);
3. PYRAMID (well-segmented subtitles: the upper line is always shorter than the lower line);
4. WFW (real-time subtitles: words appear one by one).

Figure 1. A participant watching the video.

Figure 2. Screenshots with heatmaps and scanpaths of 1 of the 28 modified places from the testing video. Subtitle types: BENE (top left), MALE (top right), PYRAMID (bottom left), and WFW (bottom right).

Results

• The results were analyzed between subjects (comparing data among the four types of subtitles) along four metrics: number of gazepoint crossovers (see Figure 3), number of fixation crossovers, percentage of gazepoint duration on subtitles, and percentage of fixation duration on subtitles.
• The p-values from ANOVA of the four metrics were 0.233, 0.5503, 0.7329 and 0.7613, respectively, showing no significant differences among subtitle types on any of the four metrics (see Figure 4).

Figure 3. Example of a gazepoint/saccadic crossover. A crossover occurs when the gazepoint/fixation moves from the video section to the subtitle section, or vice versa.

Figure 4. Graphs showing means and standard errors for between-subject gazepoint crossover count (top left), percentage of gazepoint duration on subtitles (top right), fixation crossover count (bottom left), and percentage of fixation duration on subtitles (bottom right).

Discussion

Subtitle types appear to have similar influences on eye movements during subtitled film viewing, failing to support our hypotheses. We consider several possible reasons for this contradiction:
• Quality of calibrations. The visualization of the eye movement recordings indicates errors in the calibration process. These errors are non-negligible and resulted in unreliable eye movement measurements (see Figure 5).
• Duration of the testing video. The test video in the previous study lasted less than one minute, whereas the test video in this study lasted 10 minutes. A reasonable hypothesis is that the effect of subtitle differences was concealed within the long duration of the current video.
• Lack of participants. Because this study uses a between-subjects design, it requires a large number of participants. The lack of statistical significance may be due to the small number of participants per group.

Although the result contradicts our initial hypotheses, our data agree with a previous study, which showed that different types of text grouping in film subtitles neither elicit diverse viewing behaviors nor generate different tradeoffs between image processing and text processing (Perego et al., 2010). A key factor may be the nature of live (respoken) subtitling.

Figure 5. The overall scanpaths of eye movements from four different subjects. The red rectangles indicate the subtitle sections. The first, third, and last subjects were badly calibrated.

Conclusion

Our study has shown that the type of text chunking has little effect on the visual behavior of our participants. This result contradicts our initial hypothesis and supports an earlier observation. However, the duration of the video, the quality of the calibrations, and the lack of participants introduced considerable error into our data. Without these sources of error, a replication of this study may produce stronger results.

References

Rajendran, D. J., Duchowski, A. T., Orero, P., Martinez, J., and Romero-Fresco, P., "Effects of Text Chunking on Subtitling: A Quantitative and Qualitative Examination", Perspectives: Studies in Translatology, Special Issue: When Modalities Merge, Arumi, M., Matamala, A., and Orero, P., Eds., 2011 (to appear).
Perego, E., Del Missier, F., Porta, M., and Mosconi, M., "The Cognitive Effectiveness of Subtitle Processing", Media Psychology, 13:243–272, 2010.

Acknowledgement

This research was supported, in part, by NSF Research Experience for Undergraduates (REU) Site Grant CNS-0850695.
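The four metrics named under Results, and the ANOVA used to compare them across subtitle types, can be illustrated with a short sketch. This is not the authors' analysis code. It rests on several assumptions. Each gaze sample or fixation is a hypothetical `(x, y, duration_ms)` record. The subtitle section is a single rectangle, `Rect`. Every point outside that rectangle counts as the video section. The function names `count_crossovers`, `percent_duration_on_subtitles`, and `one_way_anova_f` are illustrative only.

```python
from dataclasses import dataclass
from typing import Sequence, Tuple

# Hypothetical record format: (x, y, duration_ms) for one gaze sample or fixation.
Point = Tuple[float, float, float]


@dataclass
class Rect:
    """Axis-aligned screen region in pixels (assumed subtitle area)."""
    left: float
    top: float
    right: float
    bottom: float

    def contains(self, x: float, y: float) -> bool:
        return self.left <= x <= self.right and self.top <= y <= self.bottom


def count_crossovers(points: Sequence[Point], subtitle: Rect) -> int:
    """Count moves from the video section into the subtitle section, or vice versa.

    Assumption: any point outside the subtitle rectangle belongs to the video section.
    """
    crossovers = 0
    prev_inside = None
    for x, y, _ in points:
        inside = subtitle.contains(x, y)
        if prev_inside is not None and inside != prev_inside:
            crossovers += 1
        prev_inside = inside
    return crossovers


def percent_duration_on_subtitles(points: Sequence[Point], subtitle: Rect) -> float:
    """Percentage of total gaze (or fixation) duration that falls inside the subtitle area."""
    total = sum(d for _, _, d in points)
    if total == 0:
        return 0.0
    on_subtitles = sum(d for x, y, d in points if subtitle.contains(x, y))
    return 100.0 * on_subtitles / total


def one_way_anova_f(groups: Sequence[Sequence[float]]) -> Tuple[float, int, int]:
    """F statistic and degrees of freedom for a between-subjects one-way ANOVA."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    if k < 2 or n <= k or any(len(g) == 0 for g in groups):
        raise ValueError("need at least two non-empty groups and more observations than groups")
    grand_mean = sum(v for g in groups for v in g) / n
    means = [sum(g) / len(g) for g in groups]
    # Variation of group means around the grand mean, weighted by group size.
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Variation of individual values around their own group mean.
    ss_within = sum((v - m) ** 2 for g, m in zip(groups, means) for v in g)
    if ss_within == 0:
        raise ValueError("zero within-group variance; F is undefined")
    df_between, df_within = k - 1, n - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within
```

Run the two metric functions once on gazepoint samples and once on fixations. Together they give the four per-participant metrics. Then pass each metric's per-group values (BENE, MALE, PYRAMID, WFW) to `one_way_anova_f` to obtain F and its degrees of freedom. A p-value can then be taken from the F distribution, for example with SciPy's `scipy.stats.f.sf(F, df_between, df_within)`.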