Block 3 Discrete Models Lesson 9 – Discrete Probability

Block 3 Discrete Systems

Lesson 11 – Discrete Probability Models

The world is an uncertain

place

Random Process

Random – happens by chance, uncertain,

non-deterministic, stochastic

Random Process – a process having an

observable outcome which cannot be

predicted with certainty

one of several outcomes will occur at random

Uses of Probability

Measures uncertainty

Foundation of inferential statistics

Basis for decision models under uncertainty

A manager observing an uncertain outcome

Two Approaches to modeling

probability

Sample Space and Random Events

Uses sets and set theory

Random Variables and Probability

Distributions

Discrete case – algebraic

Continuous case - calculus

Sample Spaces and Random

Events

Let S = the set of all possible outcomes

(events) from a random process. Then S is

called the sample space.

Let E = a subset of S. Then E is called a

random event.

Basic problem: Given a random process and the sample space S, what is the probability P(E) of the event E occurring?

Example Sample Space &

Random Events

Let S = the set of all outcomes from observing the

number of demands on a given day for a particular

product. S = {0, 1, 2,…n}

Let E1 = the random event, there is one demand.

Then E1 = {1}

Let E2 = the random event of no more than 3

demands. Then E2 = {0, 1, 2, 3}

Let E3 = the random event, there are at least 4 demands. Then E3 = {4, 5, …, n}, or E3 = E2^c (the complement of E2)

S x S = the set of outcomes from observing two days

of demands = {(0,0), (1,0), (0,1), …}
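The events above map directly onto set operations. The following is a minimal sketch in Python; the cap n = 10 is an assumption made only for illustration, since the slide leaves n unspecified.

```python
from itertools import product

n = 10  # assumed maximum daily demand (hypothetical; the slide leaves n open)

S = set(range(n + 1))            # sample space {0, 1, ..., n}
E1 = {1}                         # exactly one demand
E2 = {x for x in S if x <= 3}    # no more than 3 demands
E3 = S - E2                      # complement of E2: at least 4 demands

# S x S: every (day 1, day 2) pair of demands
two_days = set(product(S, repeat=2))
```

Every event is a subset of S, and the complement is simply the set difference from S.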

What is a probability – P(E)?

Let P(E) = the probability of the event E occurring; then

0 ≤ P(E) ≤ 1

If P(E) = 0, then the event will not occur (an impossible event)

If P(E) = 1, then the event will occur (a certain event)

So the closer P(E) is to 1,

then the more likely it is

that the event E will

occur?

The Sample Space

The collection of all possible outcomes (events) relative

to a random process is called the sample space, S

where

S = {E1, E2 ... Ek} and P(S) = 1

sampling space

How are probabilities

determined?

Elementary or basic events

1. Empirical or relative frequency

2. A priori or equally-likely using counting methods

3. Subjectively – personal judgment or belief

Compound events formed from unions,

intersections, and complements of basic

events

Laws of probability

Example - Relative Frequency

(empirical)

A coin is tossed 2,000 times and heads appear 1,243 times.

P(H) = 1243/2000 = .6215

The company’s Web site has been down 5 days out of the last month (30 days).

P(site down) = 5/30 = .16667

2 out of every 20 units coming off the production line

must be sent back for rework.

P(rework) = 2/20 = .1
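The relative-frequency estimates in this example are all the same computation: occurrences divided by trials. A small sketch follows; the function name `relative_frequency` is chosen here for illustration.

```python
def relative_frequency(occurrences, trials):
    """Estimate P(E) as the fraction of trials in which E occurred."""
    if trials <= 0:
        raise ValueError("trials must be positive")
    return occurrences / trials

p_heads = relative_frequency(1243, 2000)   # coin tosses
p_site_down = relative_frequency(5, 30)    # days the Web site was down
p_rework = relative_frequency(2, 20)       # units sent back for rework
```

These are estimates from observed data, so they would change if more trials were observed.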

Will you die this year?

[Chart: probability of male death by age; x-axis: Age (0 to 70), y-axis: probability (0 to 0.024)]

Age   Male       Female
0     0.007644   0.006275
5     0.000202   0.000152
10    0.00011    0.000113
16    0.00081    0.000375
17    0.000964   0.000423
20    0.00129    0.000456
25    0.001379   0.000499
30    0.001389   0.000628
35    0.00177    0.000953
40    0.002589   0.001514
45    0.003891   0.002264
50    0.005643   0.003227
55    0.008106   0.004884
60    0.012405   0.007732
65    0.019102   0.012199
70    0.029824   0.019312
75    0.046499   0.030582
80    0.073269   0.050396
85    0.120186   0.086443
90    0.192615   0.147616

Example - A priori (equally-likely

outcomes)

A pair of fair dice is tossed.

S x S = {(1,1), (1,2), (1,3), (1,4), (1,5), (1,6),
         (2,1), (2,2), (2,3), (2,4), (2,5), (2,6),
         (3,1), (3,2), (3,3), (3,4), (3,5), (3,6),
         (4,1), (4,2), (4,3), (4,4), (4,5), (4,6),
         (5,1), (5,2), (5,3), (5,4), (5,5), (5,6),
         (6,1), (6,2), (6,3), (6,4), (6,5), (6,6)}

P(a seven) = 6/36 = .1667

A supply bin contains 144 bolts to be used by the

manufacturing cell in the assembly of an automotive

door panel. The supplier of the bolts has indicated

that the shipment contains 7 defective bolts.

Let E = the event, a defective bolt is selected

P(E) = 7/144 = .04861.
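Both a priori results can be checked by counting equally likely outcomes. This is a sketch using exact fractions; the variable names are illustrative.

```python
from fractions import Fraction
from itertools import product

# All 36 equally likely outcomes from tossing two fair dice
outcomes = list(product(range(1, 7), repeat=2))

# Outcomes whose faces sum to seven
sevens = [pair for pair in outcomes if sum(pair) == 7]
p_seven = Fraction(len(sevens), len(outcomes))

# Bolt example: 7 defective bolts among 144, each equally likely to be drawn
p_defective = Fraction(7, 144)
```

`Fraction` keeps the ratio exact; `float(p_seven)` gives the decimal form when needed.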

A priori (equally-likely outcomes)

versus Relative Frequency (empirical)

A priori (knowable independently of experience):

$P(A) = \frac{n(A)}{n(S)}$

n(A) = the number of ways in which event A can occur

n(S) = total number of outcomes from the random process

Relative frequency:

$P(E) = \lim_{n \to \infty} \frac{n(E)}{n}$

where

n(E) = number of times event E occurs in n trials

Examples – Subjective Probability

8 out of 10 “leading” economists believe that the gross

national product (GNP) will grow by at least 3% this year.

P(GNP growth ≥ .03) = 8/10 = .8

Bigg Bosse, the CEO for a major corporation, consults his

marketing staff. Together they make the assessment that

there is a 50-50 chance that sales will increase next year.

The House majority leader, after consulting with his staff, determines that there is only a 25 percent chance that an important tax bill will be passed.

Computing Probabilities for

Compound Events

Finding the probability of the union,

intersection, and complements of events

Mutually Exclusive Events

A

B

P(A^c) = 1 - P(A)

P(A ∪ B) = P(A) + P(B) if A and B are mutually exclusive

P(A ∩ B) = P(∅) = 0 if A and B are mutually exclusive

Note that A’ and B’ are not mutually exclusive.

More Mutually Exclusive

Events

Random process: draw a card at random from an

ordinary deck of 52 playing cards

Let A = the event, an ace is drawn

Let B = the event, a king is drawn

P(A) = 1/13 and P(B) = 1/13

Then P(A ∪ B) = P(A) + P(B) = 1/13 + 1/13 = 2/13

P(A ∩ B) = 0

It’s not me!

P(A’) = 1 – 1/13 = 12/13; P(B’) = 12/13

P(A B)’ = P( A’ B’) = ?

the event is not an ace or not king

The Addition Formula

[Venn diagram: overlapping events A and B; the overlap is A ∩ B]

P(A ∪ B) = P(A) + P(B) - P(A ∩ B)

More of the Addition Formula

Random process: draw a card at random from an ordinary

deck of 52 playing cards

Let A = the event, draw a spade

P(A) = 13/52 =1/4

let B = the event draw an ace

P(B) = 4/52 = 1/13

P(A ∩ B) = 1/52

P(A ∪ B) = P(A) + P(B) - P(A ∩ B)

= 1/4 + 1/13 – 1/52 = 13/52 + 4/52 – 1/52 = 16/52 = .3077

[Venn diagram counts: 12 spades other than the ace, 1 ace of spades, 3 other aces, 36 remaining cards]
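The spade-or-ace result can be verified by enumerating the deck and comparing a direct count of the union with the addition formula. This is a sketch; the rank and suit labels are arbitrary choices.

```python
from fractions import Fraction
from itertools import product

RANKS = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
SUITS = ["spades", "hearts", "diamonds", "clubs"]
deck = set(product(RANKS, SUITS))  # 52 equally likely cards

def prob(event):
    """A priori probability: favourable outcomes over total outcomes."""
    return Fraction(len(event), len(deck))

spade = {card for card in deck if card[1] == "spades"}  # event A
ace = {card for card in deck if card[0] == "A"}         # event B

direct = prob(spade | ace)                                # count the union directly
by_formula = prob(spade) + prob(ace) - prob(spade & ace)  # addition formula
```

Subtracting the intersection corrects for the ace of spades, which would otherwise be counted twice.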

The Multiplication rule

Independent Events

Two events A and B are independent if P(A) is not affected by event B having occurred (and vice versa).

If A and B are independent, then

P(A ∩ B) = P(A) P(B)

Note that A’ and B’ are independent if A and B are independent.

An Independence

event

Proof by Example

Let E1 = the event, a three or four is rolled on the toss of a

single fair die, P(E1) = 2/6

E2 = the event, a head is tossed from a fair coin, P(E2)

= 1/2

then D x C = {(1,H), (1,T), (2,H), (2,T), (3,H), (3,T), (4,H), (4,T), (5,H), (5,T), (6,H), (6,T)}; P(E1 ∩ E2) = 2/12

Mult. rule: P(E1 ∩ E2) = P(E1) P(E2) = (2/6) (1/2) = 2/12
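The die-and-coin example can be checked by enumerating the 12 joint outcomes and comparing the counted probability of the intersection with the product of the individual probabilities. A minimal sketch:

```python
from fractions import Fraction
from itertools import product

# Joint sample space D x C: one fair die and one fair coin
space = set(product(range(1, 7), "HT"))

def prob(event):
    return Fraction(len(event), len(space))

E1 = {(die, coin) for die, coin in space if die in (3, 4)}  # three or four rolled
E2 = {(die, coin) for die, coin in space if coin == "H"}    # head tossed

joint = prob(E1 & E2)             # counted directly from the sample space
multiplied = prob(E1) * prob(E2)  # multiplication rule
```

The two values agree here because the die and the coin do not influence each other.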

Another Example

Let A = the event, prototype A fails heat stress test

B = the event prototype B fails a vibration test

Given P(A) = .1 ; P(B) = .3

Find P(A ∩ B) = ? and P(A ∪ B) = ?

Assuming independence:

P(A ∩ B) = P(A) P(B) = (.1)(.3) = .03

P(A ∪ B) = P(A) + P(B) - P(A ∩ B) = .1 + .3 - .03 = .37

P((A ∪ B)’) = P(A’ ∩ B’) = P(A’)P(B’) = (.9)(.7) = .63
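The three results for the prototype example follow from two inputs once independence is assumed. A sketch with hypothetical helper names:

```python
def p_both(p_a, p_b):
    """P(A and B) for independent events."""
    return p_a * p_b

def p_either(p_a, p_b):
    """P(A or B) for independent events, via the addition formula."""
    return p_a + p_b - p_both(p_a, p_b)

def p_neither(p_a, p_b):
    """P(neither A nor B) for independent events."""
    return (1 - p_a) * (1 - p_b)

p_a, p_b = 0.1, 0.3  # heat stress failure, vibration failure
both = p_both(p_a, p_b)
either = p_either(p_a, p_b)
neither = p_neither(p_a, p_b)
```

`either` and `neither` are complements, so they sum to 1 (up to floating-point rounding).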

Glee Laundry Detergent

Each box of powdered laundry detergent coming off the final assembly line is weighed automatically to ensure that the weight of the contents falls within specification. Each box is then visually inspected by a quality assurance technician to ensure that it is properly sealed.

I have been

rejected.

Glee

More Glee

If three percent of the boxes fall outside the weight

specifications and five percent are not properly sealed,

what is the probability that a box will be rejected after final

assembly?

Let A = the event, a box does not meet the weight

specification; P(A) = .03

Let B = the event, a box is not properly sealed; P(B) = .05

P(A ∪ B) = P(A) + P(B) – P(A)P(B), assuming A and B are independent

= .03 + .05 - .0015 = .0785

A Reliability Problem

An assembly is composed of 3 components as shown

below. If A is the event, component A does not fail, B is

the event, component B does not fail, and C is the event,

component C does not fail, find the reliability of the

assembly where P(A) = .8, P(B) = .9, and P(C) = .8.

Assume independence among the components.

[Diagram: components A and B in series, in parallel with component C]

P(S) = P[(A ∩ B) ∪ C] = P(A ∩ B) + P(C) – P(A ∩ B ∩ C)

= P(A) P(B) + P(C) – P(A)P(B)P(C) = (.8)(.9) + .8 – (.8)(.9)(.8)

= .72 + .8 - .576 = .944
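The same reliability can be computed with two small helpers, one for series and one for parallel arrangements. This sketch assumes, as the formula (A and B) or C suggests, that A and B are in series and that pair is in parallel with C; the function names are illustrative.

```python
from math import prod

def series(*reliabilities):
    """All components must work: multiply the reliabilities."""
    return prod(reliabilities)

def parallel(*reliabilities):
    """At least one branch must work: 1 minus the chance that all fail."""
    return 1 - prod(1 - r for r in reliabilities)

# A and B in series, that pair in parallel with C (assumed layout)
system = parallel(series(0.8, 0.9), 0.8)
```

The parallel helper uses the complement form, 1 - (1 - .72)(1 - .8), which is algebraically the same as the addition formula used on the slide.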

Next – random variables and

their probability distributions!

Variables that are random; what

will they think of next?

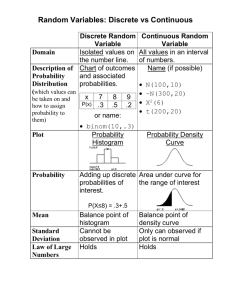

Discrete Random Variables

A random variable (RV) is a variable which takes on

numerical values in accordance with some probability

distribution.

Random variables may be either continuous (taking on

real numbers) or discrete (usually taking on non-negative

integer values).

The probability distribution, which assigns a probability to each value of a discrete random variable, can be described by a probability mass function (PMF), p(x).

Random Variables - Examples

Y = a discrete random variable, the number of

machines breaking down each shift

X = a discrete random variable, the monthly demand

for a replacement part

Z = a discrete random variable, the number of

hurricanes striking the Gulf Coast each year

Xi = a discrete random variable, the number of

products sold in month i

The PMF

The Probability Mass Function (PMF), p(x), is defined

as

p(x) = Pr{X = x}

and has two properties:

1. $p(x) \ge 0$

2. $\sum_{\text{all } x} p(x) = 1$

By convention, capital letters represent the random variable while the

corresponding small letters denote particular values the random

variable may assume.

A Probability Distribution

Let X = a RV, the number of customer

complaints received each day

The Probability Mass Function (PMF) is

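$$p(x) = \begin{cases} .1 & \text{if } x = 0 \\ .3 & \text{if } x = 1 \\ .5 & \text{if } x = 2 \\ .1 & \text{if } x = 3 \end{cases}$$

$$\sum_{x=0}^{3} p(x) = 1$$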

The CDF

The cumulative distribution function (CDF), F(x), is defined as

$$F(x) = \Pr(X \le x) = \sum_{i=0}^{x} p(i)$$

Example:

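$$p(x) = \begin{cases} .1 & \text{if } x = 0 \\ .3 & \text{if } x = 1 \\ .5 & \text{if } x = 2 \\ .1 & \text{if } x = 3 \end{cases}$$

$$F(x) = \begin{cases} .1 & \text{if } x = 0 \\ .4 & \text{if } x = 1 \\ .9 & \text{if } x = 2 \\ 1.0 & \text{if } x = 3 \end{cases}$$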
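The CDF in this example is a running total of the PMF. A minimal sketch using exact fractions to avoid floating-point drift:

```python
from fractions import Fraction
from itertools import accumulate

# PMF for the number of daily customer complaints
pmf = {
    0: Fraction(1, 10),
    1: Fraction(3, 10),
    2: Fraction(5, 10),
    3: Fraction(1, 10),
}

xs = sorted(pmf)
# F(x) = p(0) + p(1) + ... + p(x)
cdf = dict(zip(xs, accumulate(pmf[x] for x in xs)))
```

The last CDF value is always 1 for a valid PMF, which gives a quick check on the inputs.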

Rolling the dice

X = the outcome from rolling a pair of dice

Sum   Outcomes                                     Number of ways
2     (1,1)                                        1
3     (1,2), (2,1)                                 2
4     (1,3), (2,2), (3,1)                          3
5     (1,4), (2,3), (3,2), (4,1)                   4
6     (1,5), (2,4), (3,3), (4,2), (5,1)            5
7     (1,6), (2,5), (3,4), (4,3), (5,2), (6,1)     6
8     (2,6), (3,5), (4,4), (5,3), (6,2)            5
9     (3,6), (4,5), (5,4), (6,3)                   4
10    (4,6), (5,5), (6,4)                          3
11    (5,6), (6,5)                                 2
12    (6,6)                                        1
Total                                              36

An Example

Let X = a random variable, the sum resulting from

the toss of two fair dice;

X = 2, 3, …, 12

S =
(1,1) (1,2) (1,3) (1,4) (1,5) (1,6)
(2,1) (2,2) (2,3) (2,4) (2,5) (2,6)
(3,1) (3,2) (3,3) (3,4) (3,5) (3,6)
(4,1) (4,2) (4,3) (4,4) (4,5) (4,6)
(5,1) (5,2) (5,3) (5,4) (5,5) (5,6)
(6,1) (6,2) (6,3) (6,4) (6,5) (6,6)

x     p(x)    F(x)
2     1/36    1/36
3     2/36    3/36
4     3/36    6/36
5     4/36    10/36
6     5/36    15/36
7     6/36    21/36
8     5/36    26/36
9     4/36    30/36
10    3/36    33/36
11    2/36    35/36
12    1/36    36/36
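The p(x) and F(x) values for the sum of two fair dice can be generated by counting outcomes rather than tabulating them by hand. A sketch with illustrative variable names:

```python
from collections import Counter
from fractions import Fraction
from itertools import accumulate, product

# Count how many of the 36 outcomes produce each sum
ways = Counter(a + b for a, b in product(range(1, 7), repeat=2))

xs = sorted(ways)                                      # 2, 3, ..., 12
pmf = {x: Fraction(ways[x], 36) for x in xs}           # p(x)
cdf = dict(zip(xs, accumulate(pmf[x] for x in xs)))    # F(x)
```

Note that `Fraction` reduces to lowest terms, so `pmf[7]` displays as 1/6 rather than 6/36.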

Probability Histogram for the

Random Variable X

Expected Value or Mean

The expected value of a random variable (or

equivalently the mean of the probability distribution)

is defined as

$$E[X] = \sum_{x \ge 0} x\,p(x)$$

Don’t you get it? The expected value

is just a weighted average of the

values that the random variable

takes on where the probabilities are

the weights.

Example – Expected Value

I expected this to

have a little value!

$$p(x) = \begin{cases} .1 & \text{if } x = 0 \\ .3 & \text{if } x = 1 \\ .5 & \text{if } x = 2 \\ .1 & \text{if } x = 3 \end{cases}$$

E[X] = 0 (.1) + 1 (.3) + 2 (.5) + 3 (.1)

= 1.6
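The weighted-average calculation is short enough to express as a single function over a PMF stored as a dictionary. A sketch; the function name `expected_value` is chosen for illustration.

```python
from fractions import Fraction

def expected_value(pmf):
    """Weighted average of the values, with probabilities as weights."""
    return sum(x * p for x, p in pmf.items())

# PMF for the number of daily customer complaints
complaints = {
    0: Fraction(1, 10),
    1: Fraction(3, 10),
    2: Fraction(5, 10),
    3: Fraction(1, 10),
}

mean_complaints = expected_value(complaints)
```

The mean need not be a value the random variable can actually take; here it falls between 1 and 2 complaints.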

Example –Expected Value

Dice example:

$$E[X] = \sum_{x=2}^{12} x\,p(x)$$

= 2(1/36) + 3(2/36) + 4(3/36) + 5(4/36) + 6(5/36) + 7(6/36)

+ 8(5/36) + 9(4/36) + 10(3/36) + 11(2/36) + 12(1/36)

= (1/36) (2 + 6 + 12 + 20 + 30 + 42 + 40 + 36 + 30 + 22 + 12)

= (252/36) = 7

Yet Another Discrete

Distribution

Let X = a RV, the number of customers per day

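$$p(x) = \frac{x}{210}; \quad x = 1, 2, \ldots, 20$$

$$F(x) = \sum_{i=1}^{x} \frac{i}{210} = \frac{1}{210} \cdot \frac{x}{2}\,[2 + (x - 1)] = \frac{x(x+1)}{420}$$

F(20) = (20)(21) / 420 = 1

recall the arithmetic series:

$$S_n = \sum_{j=1}^{n} [a + (j-1)d] = \frac{n}{2}\,[2a + (n-1)d]$$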

More of yet another discrete

distribution

Let X = a RV, the number of customers per day

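$$p(x) = \frac{x}{210}; \quad x = 1, 2, \ldots, 20$$

$$F(x) = \frac{x}{2 \cdot 210}\,[2 + (x - 1)(1)] = \frac{x(x+1)}{420}$$

Pr{X = 15} = p(15) = 15/210 = .0714

Pr{X ≤ 15} = F(15) = (15)(16)/420 = .5714

Pr{10 < X ≤ 15} = F(15) – F(10)

= .5714 – (10)(11)/420 = .5714 - .2619 = .3095

$$E[X] = \sum_{x=1}^{20} x\,p(x) = \sum_{x=1}^{20} x \cdot \frac{x}{210} = \frac{1}{210} \sum_{x=1}^{20} x^2 = 13\tfrac{2}{3}$$

using $\sum_{i=1}^{n} i^2 = \frac{n(n+1)(2n+1)}{6}$: $\frac{20 \cdot 21 \cdot 41}{6} = 2870$; $2870 / 210 = 13.67$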
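The closed forms for this distribution can be checked numerically against direct summation of p(x) = x/210. A sketch using exact fractions; the function names `p` and `F` mirror the slide notation.

```python
from fractions import Fraction

SUPPORT = range(1, 21)  # x = 1, 2, ..., 20

def p(x):
    """PMF: p(x) = x/210 on the support, 0 elsewhere."""
    return Fraction(x, 210) if x in SUPPORT else Fraction(0)

def F(x):
    """CDF by direct summation: F(x) = p(1) + ... + p(x)."""
    return sum((p(i) for i in range(1, x + 1)), Fraction(0))

p_15 = p(15)                    # Pr{X = 15}
p_at_most_15 = F(15)            # Pr{X <= 15}
p_11_to_15 = F(15) - F(10)      # Pr{10 < X <= 15}
mean = sum(x * p(x) for x in SUPPORT)  # E[X]
```

Summing directly avoids relying on the arithmetic-series and sum-of-squares formulas, so agreement between the two is a useful cross-check.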

A most enjoyable

lesson.

So ends our discussion on

Discrete Probabilities

Coming soon to a classroom near

you – discrete optimization

models