
Entity/Event-Level Sentiment Detection and Inference

Lingjia Deng

Intelligent Systems Program

University of Pittsburgh

Dr. Janyce Wiebe, Intelligent Systems Program, University of Pittsburgh

Dr. Rebecca Hwa, Intelligent Systems Program, University of Pittsburgh

Dr. Yuru Lin, Intelligent Systems Program, University of Pittsburgh

Dr. William Cohen, Machine Learning Department, Carnegie Mellon University


A World Of Opinions

NEWS

EDITORIALS

BLOGS


Motivation

• ... people protest the country’s same-sex marriage ban ....

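[Figure: "protest" marks a negative sentiment from "people" toward "same-sex marriage ban"; a positive sentiment from "people" toward "same-sex marriage" is implied.]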

Explicit Opinions

• The explicit opinions are revealed by opinion expressions.

[Figure: the explicit negative sentiment from "people" toward "same-sex marriage ban" is expressed by "protest".]

Implicit Opinions

• The implicit opinions are not revealed by expressions, but are indicated in the text.

• The system needs to infer implicit opinions.

[Figure: the implicit positive sentiment from "people" toward "same-sex marriage" has to be inferred.]

Goal:

Explicit and Implicit Sentiments

• explicit: negative sentiment

• implicit: positive sentiment

Goal:

Entity/Event-Level Sentiments

• PositivePair (people, same-sex marriage)

• NegativePair (people, same-sex marriage ban)

Three Questions to Solve

• Is there any corpus annotated with both explicit and implicit sentiments?

• No. This proposal develops one.

• Are there any inference rules defining how to infer implicit sentiments?

• Yes. (Wiebe and Deng, arXiv, 2014.)

• How do we incorporate the inference rules into computational models?

• This proposal investigates this question.

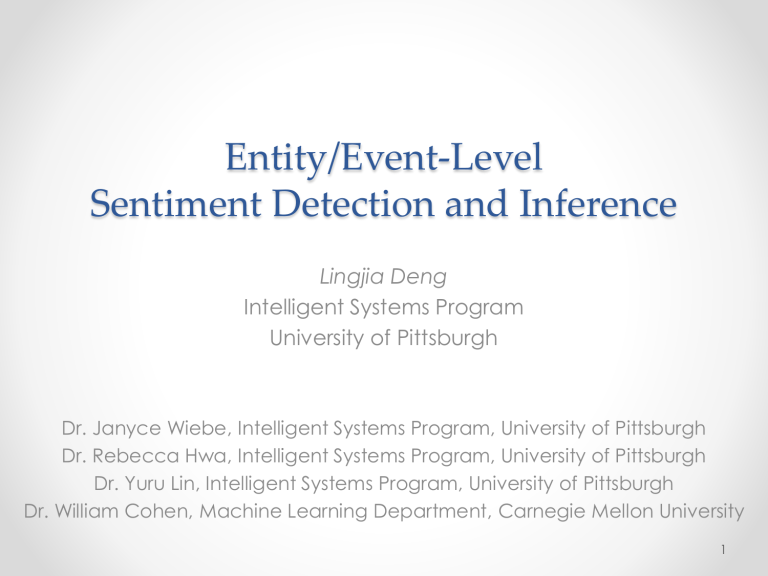

Completed and Proposed Work

Corpus: MPQA 3.0

• Expert Annotations on 70 documents (Deng and Wiebe, NAACL 2015)

• Non-expert Annotations on hundreds of documents

Sentiment Inference on +/-Effect Events & Entities

• A corpus of +/-effect event sentiments (Deng et al., ACL 2013)

• A model validating rules (Deng and Wiebe, EACL 2014)

• A model inferring sentiments (Deng et al., COLING 2014)

Sentiment Inference on General Events & Entities

• Joint Models

• A pilot study (Deng and Wiebe, EMNLP 2015)

• Extracting Nested Source and Entity/Event Target

• Blocking the rules

Background:

Sentiment Corpora

• Review Sentiment Corpus (Hu and Liu, 2004)

o Genre: product reviews

o Source: writer

o Target: the product, feature of the product

o Implicit opinions: ✗

• Sentiment Tree Bank (Socher et al., 2013)

o Genre: movie reviews

o Source: writer

o Target: the movie

o Implicit opinions: ✗

• MPQA 2.0 (Wiebe et al., 2005; Wilson, 2008)

o Genre: news, editorials, blogs, etc.

o Source: writer, and any entity

o Target: an arbitrary span

o Implicit opinions: ✗

• MPQA 3.0

o Genre: news, editorials, blogs, etc.

o Source: writer, and any entity

o Target: any entity/event, marked as an eTarget (head of noun phrase/verb phrase)

o Implicit opinions: ✔

Background:

MPQA Corpus

• Direct subjective

o nested source

o attitude: attitude type, target

• Expressive subjective element (ESE)

o nested source

o polarity

• Objective speech event

o nested source

o target

MPQA 2.0: An Example

When the Imam issued the fatwa against Salman Rushdie for insulting the Prophet …

• nested source: writer, Imam

• negative attitude

• target: an arbitrary span

Background:

Explicit and Implicit Sentiment

• Explicit sentiments

o Extracting explicit opinion expressions, sources and targets (Wiebe et al., 2005; Johansson and Moschitti, 2013a; Yang and Cardie, 2013; Moilanen and Pulman, 2007; Choi and Cardie, 2008; Moilanen et al., 2010).

• Implicit sentiments

o Investigating features directly indicating implicit sentiment (Zhang and Liu, 2011; Feng et al., 2013). No inference.

o A rule-based system requiring all oracle information (Wiebe and Deng, arXiv 2014).


From MPQA 2.0 To MPQA 3.0

When the Imam issued the fatwa against Salman Rushdie for insulting the Prophet …

• nested source: writer, Imam

• negative attitude

• target: an arbitrary span

• eTarget:

o “Imam” is negative toward “Rushdie”.

o “Imam” is negative toward “insulting”.

o “Imam” is NOT negative toward “Prophet”.

Expert Annotations

• Expert annotators include Dr. Janyce Wiebe and me.

• The expert annotators are asked to select which noun or verb is the eTarget of an attitude or an ESE.

• The expert annotators annotated 70 documents.

• The agreement score is 0.82 on average over four documents.


Non-Expert Annotations

• Previous work has asked non-expert annotators to annotate subjectivity and opinions (Akkaya et al., 2010; Socher et al., 2013).

• Reliable Annotations

o Non-expert annotators with high credits.

o Majority vote.

o Weighted vote and reliable annotators (Welinder and Perona, 2010).

• Validating Annotation Scheme

o 70 documents: Compare non-expert annotations with expert annotations.

o Then, collect non-expert annotations for the remaining corpus.
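The two aggregation strategies named above can be sketched as follows. This is only an illustration under stated assumptions: the annotator ids, labels and reliability weights are hypothetical, and the simple weighted sum below is a stand-in, not the model of Welinder and Perona (2010).

```python
from collections import Counter, defaultdict

def majority_vote(labels):
    """Return the most frequent label; ties go to the label seen first."""
    return Counter(labels).most_common(1)[0][0]

def weighted_vote(labels_by_annotator, reliability):
    """Sum each annotator's reliability weight behind the label they chose."""
    totals = defaultdict(float)
    for annotator, label in labels_by_annotator.items():
        totals[label] += reliability.get(annotator, 0.0)
    return max(totals, key=totals.get)

# Hypothetical votes on whether one word is the eTarget of an attitude.
votes = {"w1": "eTarget", "w2": "not_eTarget", "w3": "not_eTarget"}
# Hypothetical reliability estimates (e.g. agreement with the 70 expert documents).
weights = {"w1": 0.9, "w2": 0.3, "w3": 0.4}

majority = majority_vote(list(votes.values()))
weighted = weighted_vote(votes, weights)
```

With these made-up numbers the two strategies disagree: the majority picks "not_eTarget", while the single highly reliable annotator outweighs the other two in the weighted vote.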


Part 1 Summary

• An entity/event-level sentiment corpus, MPQA 3.0

• Completed expert annotations

o 70 documents (Deng and Wiebe, NAACL 2015).

• Proposed non-expert annotations

o Remaining hundreds of documents.

o Crowdsourcing tasks.

o Automatically acquiring reliable labels.


+/-Effect Event Definition

• A +effect event has a beneficial effect on the theme.

o help, increase, etc.

• A –effect event has a harmful effect on the theme.

o harm, decrease, etc.

• A +/-effect event is represented as a triple <agent, event, theme>.

• Example: He rejects the paper.

o -effect event: reject

o agent: He

o theme: paper

o triple: <He, reject, paper>

+/-Effect Event Representation

• +Effect(x): x is a +effect event

• -Effect(x): x is a –effect event

• Agent(x, a): a is the agent of +/-effect event x

• Theme(x, h): h is the theme of +/-effect event x
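As an illustration, the predicates above can be grounded from an <agent, event, theme> triple. This is a minimal sketch; the `EffectEvent` record and the `to_atoms` helper are hypothetical names introduced here, not part of the original work.

```python
from typing import NamedTuple

class EffectEvent(NamedTuple):
    """One <agent, event, theme> triple plus its polarity."""
    agent: str
    event: str
    theme: str
    polarity: str  # "+effect" or "-effect"

def to_atoms(e):
    """Ground +Effect/-Effect, Agent and Theme for a single event."""
    effect = "+Effect" if e.polarity == "+effect" else "-Effect"
    return {
        (effect, e.event),
        ("Agent", e.event, e.agent),
        ("Theme", e.event, e.theme),
    }

# "He rejects the paper." gives the triple <He, reject, paper>.
reject = EffectEvent(agent="He", event="reject", theme="paper", polarity="-effect")
atoms = to_atoms(reject)
```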


+/-Effect Event Corpus

• +/-Effect event information is annotated.

o The +/-effect events.

o The agents.

o The themes.

• The writer’s sentiments toward the agents and themes are annotated.

o positive, negative, neutral

• 134 political editorials.


Sentiment Inference Rules

• Example: people protest the country’s same-sex marriage ban.

• explicit sentiment

o NegativePair(people, ban)

• +/-effect event information

o -Effect(ban)

o Theme(ban, same-sex marriage)

• inference

NegativePair(people, ban) ^ -Effect(ban) ^ Theme(ban, same-sex marriage) ⇒ PositivePair(people, same-sex marriage)
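The inference above can be sketched as a small rule application. Only the rule shown on the slide (negative toward a -effect event implies positive toward its theme) comes from the source; the other three combinations handled by the hypothetical `infer_pairs` helper are assumed here by symmetry.

```python
def infer_pairs(positive_pairs, negative_pairs, plus_effect, minus_effect, theme):
    """Carry a sentiment toward an event over to the event's theme.

    A +effect event keeps the polarity, a -effect event flips it.
    `theme` maps an event to its theme.
    """
    inferred_pos, inferred_neg = set(), set()
    cases = (
        (positive_pairs, inferred_pos, inferred_neg),
        (negative_pairs, inferred_neg, inferred_pos),
    )
    for pairs, same, flipped in cases:
        for source, event in pairs:
            if event not in theme:
                continue
            if event in plus_effect:
                same.add((source, theme[event]))
            elif event in minus_effect:
                flipped.add((source, theme[event]))
    return inferred_pos, inferred_neg

# NegativePair(people, ban) ^ -Effect(ban) ^ Theme(ban, same-sex marriage)
pos, neg = infer_pairs(
    positive_pairs=set(),
    negative_pairs={("people", "ban")},
    plus_effect=set(),
    minus_effect={"ban"},
    theme={"ban": "same-sex marriage"},
)
```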


Sentiment Inference Rules

• +Effect Rule: if two entities participate in a +effect event, the writer’s sentiments toward the entities are the same.

• -Effect Rule: if two entities participate in a –effect event, the writer’s sentiments toward the entities are opposite.

Can rules infer sentiments correctly? (Deng and Wiebe, EACL 2014)

Building the graph from annotations

(Deng and Wiebe, EACL 2014)

[Figure: a graph whose nodes A, B, C, D, E are agents/themes.]

• node score: two sentiment scores, $f_A(\mathrm{pos}) + f_A(\mathrm{neg}) = 1$

Building the graph from annotations

[Figure: the same graph of nodes A, B, C, D, E; edges are +/-effect events.]

• edge score: four sentiment constraint scores

• $\Psi_{D,E}(\mathrm{pos}, \mathrm{pos})$: the score that the sentiment toward D is positive AND the sentiment toward E is positive

Building the graph from annotations

• edge score: inference rules

• if +effect: $\Psi_{D,E}(\mathrm{pos}, \mathrm{pos}) = 1$, $\Psi_{D,E}(\mathrm{neg}, \mathrm{neg}) = 1$

• if -effect: $\Psi_{D,E}(\mathrm{pos}, \mathrm{neg}) = 1$, $\Psi_{D,E}(\mathrm{neg}, \mathrm{pos}) = 1$
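The edge scores can be written down as a small lookup table. This is a sketch under one assumption: the slide only states which entries equal 1, so the value `eps` given to the remaining entries (0 by default) is a hypothetical choice, as is the `edge_potential` name.

```python
LABELS = ("pos", "neg")

def edge_potential(effect, eps=0.0):
    """Return {(label_d, label_e): score} for one +/-effect edge.

    +effect: matching labels score 1. -effect: opposite labels score 1.
    All other entries get `eps` (an assumption, not given on the slide).
    """
    table = {}
    for d in LABELS:
        for e in LABELS:
            same = d == e
            allowed = same if effect == "+effect" else not same
            table[(d, e)] = 1.0 if allowed else eps
    return table

plus_table = edge_potential("+effect")
minus_table = edge_potential("-effect")
```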


Loopy Belief Propagation

• Input: the gold standard sentiment of one node

• Model: Loopy Belief Propagation

• Output: the propagated sentiments of other nodes
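The propagation step can be sketched with a plain sum-product implementation. This is an illustration, not the authors' code: the three-node graph, the softened edge score `eps = 0.1`, the uniform priors on unlabeled nodes and the fixed iteration count are all assumptions.

```python
LABELS = ("pos", "neg")

def psi(effect, a, b, eps=0.1):
    """Edge score: 1 if the labels obey the +/-effect rule, eps otherwise."""
    return 1.0 if (a == b) == (effect == "+effect") else eps

def loopy_bp(nodes, edges, prior, iterations=20):
    """edges: {(u, v): "+effect" or "-effect"}; prior: {node: {label: score}}."""
    neighbors = {n: [] for n in nodes}
    for (u, v), effect in edges.items():
        neighbors[u].append((v, effect))
        neighbors[v].append((u, effect))
    # msg[(u, v)][label]: message from u to v about v's label.
    msg = {(u, v): {l: 1.0 for l in LABELS} for u in nodes for v, _ in neighbors[u]}
    for _ in range(iterations):
        new = {}
        for u in nodes:
            for v, effect in neighbors[u]:
                out = {}
                for lv in LABELS:
                    total = 0.0
                    for lu in LABELS:
                        incoming = 1.0
                        for w, _ in neighbors[u]:
                            if w != v:
                                incoming *= msg[(w, u)][lu]
                        total += prior[u][lu] * psi(effect, lu, lv) * incoming
                    out[lv] = total
                z = sum(out.values())
                new[(u, v)] = {l: out[l] / z for l in LABELS}
        msg = new
    beliefs = {}
    for n in nodes:
        b = {l: prior[n][l] for l in LABELS}
        for w, _ in neighbors[n]:
            for l in LABELS:
                b[l] *= msg[(w, n)][l]
        z = sum(b.values())
        beliefs[n] = {l: b[l] / z for l in LABELS}
    return beliefs

# Hypothetical graph: A -(-effect)- D -(+effect)- E, with A given as positive.
nodes = ["A", "D", "E"]
edges = {("A", "D"): "-effect", ("D", "E"): "+effect"}
prior = {
    "A": {"pos": 1.0, "neg": 0.0},   # gold standard sentiment of one node
    "D": {"pos": 0.5, "neg": 0.5},
    "E": {"pos": 0.5, "neg": 0.5},
}
beliefs = loopy_bp(nodes, edges, prior)
labels = {n: max(beliefs[n], key=beliefs[n].get) for n in nodes}
```

In this toy graph the positive label on A flips across the -effect edge, so D becomes negative, and the +effect edge then carries the negative label on to E.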

Propagating sentiments

• For node E, can it be propagated with the correct sentiment label?

Propagating sentiments: A → E

• Node A is assigned its gold-standard sentiment.

• Run the propagation.

• Record whether node E is propagated correctly or not.

Propagating sentiments: B → E

• Node B is assigned its gold-standard sentiment.

• Run the propagation.

• Record whether node E is propagated correctly or not.

Propagating sentiments: C → E

• Node C is assigned its gold-standard sentiment.

• Run the propagation.

• Record whether node E is propagated correctly or not.

Propagating sentiments: D → E

• Node D is assigned its gold-standard sentiment.

• Run the propagation.

• Record whether node E is propagated correctly or not.

Evaluating E being propagated correctly

• Node E is propagated with sentiment 4 times.

• correctness = (number of times node E is propagated correctly) / 4

• average correctness = 88.74%

Conclusion

• Defining the graph-based model with sentiment inference rules.

• Propagating sentiments correctly in 88.74% of cases.

• To validate the inference rules only, the graph-based propagation model is built from manual annotations.

Can we automatically infer sentiments? (Deng et al., COLING 2014)

Local Detectors

(Deng et al., COLING 2014)

• Given a +/-effect event span in a document,

• Run state-of-the-art systems assigning local scores.

(Q1) is it +effect or -effect?

(Q2) is the effect reversed?

(Q3) which spans are agents and themes?

(Q4) what are the writer’s sentiments?

[Figure: example local scores for the candidates Agent1, Agent2, Theme1, Theme2, reversed, +effect and -effect. reverser: 0.9; +effect: 0.8; -effect: 0.2; sentiment scores of the four agent/theme candidates, in extraction order: pos 0.7 / neg 0.5, pos 0.5 / neg 0.6, pos 0.5 / neg 0.5, pos 0.7 / neg 0.5.]

Local Detectors

(Deng et al., COLING 2014)

(Q1) word sense disambiguation

(Q2) negation detection

(Q3) semantic role labeling

(Q4) sentiment analysis

Global Optimization

• The global model selects an optimal set of candidates:

o one candidate from the four agent sentiment candidates (Agent1-pos, Agent1-neg, Agent2-pos, Agent2-neg),

o one/no candidate from the reversed candidate,

o one candidate from the +/-effect candidates,

o one candidate from the four theme sentiment candidates.

Objective Function

$$\min \left( -\sum_{i \in \mathrm{EffectEvent} \cup \mathrm{Entity}} \; \sum_{c \in L_i} p_{ic}\, u_{ic} \;+ \sum_{\langle i,k,j \rangle \in \mathrm{Triple}} \xi_{ikj} \;+ \sum_{\langle i,k,j \rangle \in \mathrm{Triple}} \delta_{ikj} \right)$$

• u: binary indicator of choosing a candidate

• p: candidate local score

• ξ, δ: slack variables of triple <i,k,j>, indicating that this triple is an exception to the +effect / –effect rule (exception: 1)

• The framework assigns values (0 or 1) to u, maximizing the scores given by the local detectors,

• and assigns values (0 or 1) to ξ, δ, minimizing the cases where +/-effect event sentiment rules are violated.

• Integer Linear Programming (ILP) is used.
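The trade-off in the objective can be illustrated on a single triple. This is a simplified sketch, not the authors' formulation: it enumerates all assignments by brute force instead of calling an ILP solver, omits the reversed candidate, uses one slack term for both rules, and the local scores are hypothetical.

```python
from itertools import product

def solve(scores):
    """Minimise -(sum of chosen local scores) + slack over one triple.

    slack is 1 when the chosen labels violate the +effect rule (same
    sentiments) or the -effect rule (opposite sentiments), else 0.
    """
    best = None
    for agent, theme, effect in product(("pos", "neg"), ("pos", "neg"),
                                        ("+effect", "-effect")):
        gain = scores["agent"][agent] + scores["theme"][theme] + scores["effect"][effect]
        same = agent == theme
        slack = 1 if (effect == "+effect") != same else 0
        objective = -gain + slack
        if best is None or objective < best[0]:
            best = (objective, {"agent": agent, "theme": theme,
                                "effect": effect, "slack": slack})
    return best

# Hypothetical local scores: taken alone, each detector prefers
# agent=pos, theme=pos and -effect, which together violate the -effect rule.
local_scores = {
    "agent": {"pos": 0.7, "neg": 0.5},
    "theme": {"pos": 0.6, "neg": 0.5},
    "effect": {"+effect": 0.2, "-effect": 0.8},
}
objective, choice = solve(local_scores)
```

Here the global choice overrides the weakest local decision (the theme sentiment) rather than paying the slack penalty, which is the behaviour the objective is designed to produce.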


+Effect Rule Constraints

• In a +effect event, sentiments are the same.

• Indicator values: +effect: 1, -effect: 0; exception: 1, not exception: 0.

$$\sum_{i,\langle i,k,j \rangle} u_{i,\mathrm{pos}} - \sum_{j,\langle i,k,j \rangle} u_{j,\mathrm{pos}} + u_{k,+\mathrm{effect}} - u_{k,\mathrm{reversed}} \le 1 + \xi_{ikj}$$

AND

$$\sum_{i,\langle i,k,j \rangle} u_{i,\mathrm{neg}} - \sum_{j,\langle i,k,j \rangle} u_{j,\mathrm{neg}} + u_{k,+\mathrm{effect}} - u_{k,\mathrm{reversed}} \le 1 + \xi_{ikj}$$

-Effect Rule Constraints

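• In a –effect event, sentiments are opposite.

$$\sum_{i,\langle i,k,j \rangle} u_{i,\mathrm{pos}} + \sum_{j,\langle i,k,j \rangle} u_{j,\mathrm{pos}} - 1 + u_{k,-\mathrm{effect}} - u_{k,\mathrm{reversed}} \le 1 + \delta_{ikj}$$

$$\sum_{i,\langle i,k,j \rangle} u_{i,\mathrm{neg}} + \sum_{j,\langle i,k,j \rangle} u_{j,\mathrm{neg}} - 1 + u_{k,-\mathrm{effect}} - u_{k,\mathrm{reversed}} \le 1 + \delta_{ikj}$$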

Performances

[Bar chart, y-axis from 0 to 1: accuracy of Q1, Q2 and Q3; precision, recall and F-measure of Q4. Light color: Local; dark color: ILP.]

(Q1) is it +effect or -effect?

(Q2) is the effect reversed?

(Q3) which spans are agents and themes?

(Q4) what are the writer’s sentiments?

Part 2 Summary

• Inferring sentiments toward entities participating in the +/-effect events.

• Developed an annotated corpus (Deng et al., ACL 2013).

• Developed a graph-based propagation model showing the inference ability of rules (Deng and Wiebe, EACL 2014).

• Developed an Integer Linear Programming model jointly resolving various ambiguities w.r.t. +/-effect events and sentiments (Deng et al., COLING 2014).


Joint Models

• In (Deng et al., COLING 2014), we use an Integer Linear Programming framework.

• Local systems are run.

• Joint models take local scores as input, and sentiment inference rules as constraints.

• In ILP, the rules are written as equations and inequalities.

$$\sum_{i,\langle i,k,j \rangle} u_{i,\mathrm{pos}} - \sum_{j,\langle i,k,j \rangle} u_{j,\mathrm{pos}} + u_{k,+\mathrm{effect}} - u_{k,\mathrm{reversed}} \le 1 + \xi_{ikj}$$

Joint Models:

General Inference Rules

• Great! Dr. Thompson likes the project. …

• Explicit sentiment:

o Positive(Great)

o Source(Great, speaker)

o ETarget(Great, likes)

o PositivePair(speaker, likes)

• Explicit sentiment:

o Positive(likes)

o Source(likes, Dr. Thompson)

o ETarget(likes, project)

o PositivePair(Dr. Thompson, project)

Joint Models:

General Inference Rules

• Great! Dr. Thompson likes the project. …

• Inference over the two explicit sentiments:

PositivePair(speaker, likes) ^ Positive(likes) ^ ETarget(likes, project) ⇒ PositivePair(speaker, project)

Joint Models

• More complex rules, in first-order logic.

• Markov Logic Network (Richardson and Domingos, 2006).

o a set of atoms to be grounded

o a set of weighted if-then rules

o rule: friend(a,b) ^ voteFor(a,c) ⇒ voteFor(b,c)

o atom: friend(a,b), voteFor(a,c)

o ground atom: friend(Mary, Tom)

• MLN selects a set of ground atoms that maximize the number of satisfied rules.
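The selection step can be illustrated on the friend/voteFor rule. This is a toy sketch, not a real Markov Logic Network engine: it enumerates truth assignments by brute force and scores each world by the weighted count of satisfied rule groundings. The candidate name "X", the evidence atoms and the helper names are hypothetical.

```python
from itertools import product

def satisfied(world, people, candidates):
    """Count groundings of friend(a,b) ^ voteFor(a,c) => voteFor(b,c) true in `world`."""
    count = 0
    for a, b, c in product(people, people, candidates):
        body = ("friend", a, b) in world and ("voteFor", a, c) in world
        if not body or ("voteFor", b, c) in world:
            count += 1
    return count

def best_world(evidence, query_atoms, people, candidates, weight=1.0):
    """Pick the truth assignment of the query atoms with the highest rule score."""
    best, best_score = None, float("-inf")
    for bits in product((False, True), repeat=len(query_atoms)):
        world = set(evidence) | {atom for atom, on in zip(query_atoms, bits) if on}
        score = weight * satisfied(world, people, candidates)
        if score > best_score:
            best, best_score = world, score
    return best, best_score

people = ["Mary", "Tom"]
candidates = ["X"]  # hypothetical candidate
evidence = {("friend", "Mary", "Tom"), ("voteFor", "Mary", "X")}
query = [("voteFor", "Tom", "X")]

world, score = best_world(evidence, query, people, candidates)
```

Setting voteFor(Tom, X) to true satisfies all four groundings of the rule, while leaving it false violates the grounding for (Mary, Tom, X), so the true assignment is selected.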


Joint Model:

Pilot Study Atoms

Predicted by joint models:

• PositivePair(s,t)

• NegativePair(s,t)

Assigned scores by local systems:

• Positive(y), Negative(y)

• Source(y,s), ETarget(y,t)

• +Effect(x), -Effect(x)

• Agent(x,a), Theme(x,h)

Joint Model:

Pilot Study Experiments

• 3 joint models:

• Joint-1 (without any inference)

• Joint-2 (added general sentiment inference rules)

• Joint-3 (added +/-effect event information and the rules)


Joint Model:

Pilot Study Performances

• Task: extracting PositivePair(s,t) and NegativePair(s,t).

• Baselines: for an opinion extracted by state-of-the-art systems

o source s: the head of the extracted source span

o eTarget t, ALL NP/VP baseline: all the nouns and verbs are eTargets

o eTarget t, Opinion/Target Span Heads baseline (state-of-the-art): head of extracted target span; head of opinion span

o PositivePair or NegativePair: the extracted polarity

Joint Model:

Pilot Study Performances

• Task: extracting PositivePairs and NegativePairs.

[Bar chart, y-axis from 0 to 0.5: ALL NP/VP, Opinion/Target Span Heads, PSL1, PSL2, PSL3.]

Joint Model:

Pilot Study Conclusions

• We cannot directly use the outputs (spans) of state-of-the-art sentiment analysis systems for the entity/event-level sentiment analysis task.

• The inference rules can find more entity/event-level sentiments.

• Even the most basic joint models in our pilot study improve accuracy.

Joint Model:

Proposed Extensions

• Variations of Markov Logic Networks.

• Integer Linear Programming.

• Improving each local component.

o Nested Sources

o ETarget

o Blocking the rules

Nested Sources

When the Imam issued the fatwa against Salman Rushdie for insulting the Prophet …

• nested source: writer, Imam, Rushdie

• negative attitude

• How do we know Rushdie is negative toward Prophet?

• Because Imam claims so, by issuing the fatwa against him.

• How do we know Imam has issued the fatwa?

• Because the writer tells us so.

Nested Sources

• Nested source reveals the embedded private states in MPQA.

• Attributing quotations (Pareti et al., 2013; de La Clergerie et al., 2011; Almeida et al., 2014).

• The overlapped opinions and opinion targets.

ETarget (Entity/Event Target)

• Extracting named entities and events as potential eTargets (Pan et al., 2015; Finkel et al., 2005; Nadeau and Sekine, 2007; Li et al., 2013; Chen et al., 2009; Chen and Ji, 2009).

• Entity co-reference resolution (Haghighi and Klein, 2009; Haghighi and Klein, 2010; Song et al., 2012).

• Event co-reference resolution (Li et al., 2013; Chen et al., 2009; Chen and Ji, 2009).

• Integrating external world knowledge

o Entity Linking to Wikipedia (Ji and Grishman, 2011; Milne and Witten, 2008; Dai et al., 2011; Rao et al., 2013)

Blocking Inference Rules

• That man killed the lovely squirrel on purpose.

o Positive toward the squirrel

o killing is a –effect event

o Negative toward that man

• That man accidentally hurt the lovely squirrel.

o Positive toward the squirrel

o hurting is a –effect event

o Negative toward that man? The harm is accidental, so this inference should be blocked.


Blocking Inference Rules

Collect Blocking Cases → Compare and Find Differences → Learn to Recognize

Part 3 Summary

• Joint models:

o A pilot study (Deng and Wiebe, EMNLP 2015)

o Improved joint models integrating improved components.

• Explicit Opinions:

o Opinion expressions and polarities (state-of-the-art)

o Opinion nested sources

o Opinion eTargets (entity/event-level targets)

• Implicit Opinions:

o General inference rules (Wiebe and Deng, arXiv 2014)

o When the rules are blocked


Research Statement

• Defining a new sentiment analysis task (the entity/event-level sentiment analysis task), this work develops annotated corpora as resources for the task and investigates joint prediction models that integrate explicit sentiments, entity or event information and inference rules to automatically recognize both explicit and implicit sentiments expressed among entities and events in the text.

Timeline

• Sep - Nov: Collecting Non-Expert Annotation. Deliverable: Completed MPQA 3.0 corpus.

• Nov - Jan: Extracting Nested Source and ETarget. Deliverable: NAACL 2016, A System Extracting Nested Source & Entity/Event-Level ETarget.

• Jan - Mar: Analyzing Blocked Rules. Deliverable: ACL/COLING/EMNLP 2016, Improved Graph-Based Model Performances.

• Mar - May: An Improved Joint Model Integrating Improved Components. Deliverable: Journals submitted.

• May - Aug: Thesis Writing. Deliverable: Thesis Ready for Defense.

• Aug - Dec: Thesis Revising. Deliverable: Completed Thesis.