CS 155 - Applied Crypto Group at Stanford University

CS 155

May 3, 2005

Secure Operating Systems

John Mitchell

Last Lecture

Access Control Concepts

• Matrix, ACL, Capabilities

• Multi-level security (MLS)

OS Mechanisms

• Multics

– Ring structure

• Amoeba

– Distributed, capabilities

• Unix

– File system, Setuid

• Windows

– File system, Tokens, EFS

• SE Linux

– Role-based, Domain type enforcement

This lecture

Secure OS

• Stronger mechanisms

• Some limitations

Assurance

• Orange Book, TCSEC

• Common Criteria

• Windows 2000 certification

Cryptographic File Systems

Embedded OS

• Some issues in Symbian security

Software Patches

What makes a “secure” OS?

Extra security features (compared to last lecture)

• Stronger authentication mechanisms

– Example: require token + password

• More security policy options

– Example: only let users read file f for purpose p

• Logging and other features

More secure implementation

• Apply secure design and coding principles

• Assurance and certification

– Code audit or formal verification

• Maintenance procedures

– Apply patches, etc.

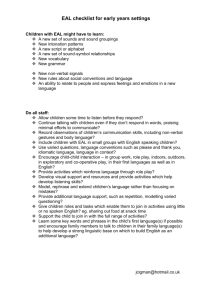

Sample Features of “Trusted OS”

Mandatory access control

• MAC not under user control, precedence over DAC

Object reuse protection

• Write over old data when file space is allocated

Complete mediation

• Prevent any access that circumvents monitor

Audit

• Log security-related events and check logs

Intrusion detection

• Anomaly detection

– Learn normal activity, Report abnormal actions

• Attack detection

– Recognize patterns associated with known attacks

DAC and MAC

Discretionary Access Control

• Restrict a subject's access to an object

– Generally: limit a user's access to a file

– Owner of file controls other users' accesses

Mandatory Access Control

• Needed when security policy dictates that:

– protection decisions must not be left to object owner

– system enforces a security policy over the wishes or intentions of the object owner

Jack, Kack, Lack, Mack, Nack, Ouack, Pack and Quack

DAC vs MAC

DAC

• Object owner has full power

• Complete trust in users

• Decisions are based only on user id and object ownerships

• Impossible to control information flow

MAC

• Object owner CAN have some power

• Only trust in administrators

• Objects and tasks themselves can have ids

• Makes information flow control possible

Information flow

[Diagram: a Process with High inputs and Low inputs, producing High outputs and Low outputs]

Controlling information flow

MAC policy

• Information from one object may only flow to an object at the same or at a higher security level

Conservative approach

• Information flow takes place when an object changes its state or when a new object is created

Implementation as access policy

• If a process reads a file at one security level, it cannot create or write a file at a lower level

• This is not a DAC policy, not an ACL policy
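The "implementation as access policy" rule above can be sketched in a few lines. This is a minimal illustration, not any particular system's implementation: the two-level lattice and the names `Process`, `read`, and `may_write` are assumptions made for the example, and clearance checks on reads are left out.

```python
from dataclasses import dataclass

# Hypothetical two-point lattice; real systems use richer label sets.
LEVELS = {"low": 0, "high": 1}


@dataclass
class Process:
    name: str
    # Highest level this process has read so far.
    level: str = "low"


def read(proc: Process, file_level: str) -> None:
    """Reading raises the process's level to at least the file's level."""
    if LEVELS[file_level] > LEVELS[proc.level]:
        proc.level = file_level


def may_write(proc: Process, file_level: str) -> bool:
    """Allow creating or writing only at the same or a higher level."""
    return LEVELS[file_level] >= LEVELS[proc.level]


p = Process("editor")
print(may_write(p, "low"))   # True: nothing high has been read yet
read(p, "high")
print(may_write(p, "low"))   # False: would let high data flow down
print(may_write(p, "high"))  # True
```

Note that the decision depends on what the process has read, not on who owns the file, which is why an ACL alone cannot express it.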

SELinux

Security-enhanced Linux system (NSA)

• Enforce separation of information based on confidentiality and integrity requirements

• Mandatory access control incorporated into the major subsystems of the kernel

– Limit tampering and bypassing of application security mechanisms

– Confine damage caused by malicious applications

Why Linux? Open source

• Already subject to public review

• NSA can review source, modify and extend

– Assurance methods later in lecture …

http://www.nsa.gov/selinux/

Problem: crypto module

[Diagram: Signing key and Input feed into the Signing algorithm, which produces Output]

Actual hope: low security output does not reveal high security input

Information flow analysis

First guess

• Mark expressions as high or low

– Some resemblance to Perl tainting

• Check assignment for high value in low location

But consider

if (x_high > 0) y_low = 0;

else y_low = 1;

State of the art

• Much research on type systems and program analysis to determine software information flow

• Still not ready for prime time
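To see why the "first guess" misses the `if` example above, here is a toy checker over a made-up tuple-based mini-language (the statement encoding and the function `find_leaks` are assumptions for illustration only). With `track_pc=False` it checks only the values assigned, as in the first guess. With `track_pc=True` it also labels each branch with the level of its condition, which is one common way type systems catch implicit flows.

```python
HIGH, LOW = "high", "low"


def join(*labels):
    """Least upper bound in the two-point lattice low < high."""
    return HIGH if HIGH in labels else LOW


def find_leaks(stmts, labels, pc=LOW, track_pc=True):
    """Return the low variables that are assigned under high influence.

    Statements are tuples (a made-up mini-language for this sketch):
      ("assign", target, [source variables])
      ("if", [condition variables], then_stmts, else_stmts)
    """
    leaks = []
    for stmt in stmts:
        if stmt[0] == "assign":
            _, target, sources = stmt
            value_label = join(pc, *(labels[v] for v in sources))
            if value_label == HIGH and labels[target] == LOW:
                leaks.append(target)
        elif stmt[0] == "if":
            _, cond_vars, then_stmts, else_stmts = stmt
            cond_label = join(*(labels[v] for v in cond_vars))
            branch_pc = join(pc, cond_label) if track_pc else pc
            leaks += find_leaks(then_stmts, labels, branch_pc, track_pc)
            leaks += find_leaks(else_stmts, labels, branch_pc, track_pc)
    return leaks


labels = {"x_high": HIGH, "y_low": LOW}
# Encodes: if (x_high > 0) y_low = 0; else y_low = 1;
program = [("if", ["x_high"],
            [("assign", "y_low", [])],
            [("assign", "y_low", [])])]

print(find_leaks(program, labels, track_pc=False))  # [] : first guess sees only constants
print(find_leaks(program, labels))                  # ['y_low', 'y_low']
```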

Covert Channels

Butler Lampson

• Difficulty achieving confinement (paper on web)

• Communicate by using CPU, locking/unlocking file, sending/delaying msg, …

Gustavus Simmons

• Cryptographic techniques make it impossible to detect presence of a covert channel

Example

The Two-Server Trojan Horse: [McLean]

• Device P can choose from two Key Servers

• P is expected to choose randomly, to balance load

• But reveals key one bit at a time

[Diagram: device P, holding the key, chooses between key servers S1 and S2]

Observations

• Information flow easily detected by noninterference analysis of the algorithm

• More subtle if choice based on random seed known to external attacker

Also: DNS lookup, SSL nonce, …
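The two-server example can be simulated directly. The sketch below is illustrative only: the function names and the use of Python's `random.Random` as the seeded generator are assumptions. The first pair of functions leaks one key bit per server choice. The second pair masks each bit with a pseudo-random bit, so the choices look random unless the observer knows the seed.

```python
import random


def choose_servers(key_bits):
    """Trojaned device P: the 'random' server choice encodes one key bit."""
    return ["S2" if bit else "S1" for bit in key_bits]


def observe(choices):
    """An outside observer recovers the key from which server was contacted."""
    return [1 if server == "S2" else 0 for server in choices]


def choose_servers_masked(key_bits, seed):
    """Subtler variant: XOR each key bit with a seeded pseudo-random bit."""
    rng = random.Random(seed)
    return ["S2" if bit ^ rng.getrandbits(1) else "S1" for bit in key_bits]


def observe_masked(choices, seed):
    """An attacker who knows the seed regenerates the mask and removes it."""
    rng = random.Random(seed)
    return [(1 if s == "S2" else 0) ^ rng.getrandbits(1) for s in choices]


key = [1, 0, 1, 1, 0, 0, 1, 0]
print(observe(choose_servers(key)) == key)                      # True
print(observe_masked(choose_servers_masked(key, 42), 42) == key)  # True
```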

Interesting risk: data lifetime

Recent work

• Shredding Your Garbage: Reducing Data Lifetime Through Secure Deallocation, by Jim Chow, Ben Pfaff, Tal Garfinkel, Mendel Rosenblum

Example

• User types password into web form

• Web server reads password

• Where does this go in memory?

– Many copies, on stack and heap

– Optimizing compilers may remove “dead” assignment/memcopy

– Presents interesting security risk
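As a rough illustration of limiting data lifetime at the application level, the sketch below keeps a password in a mutable buffer and overwrites it once it is no longer needed. The function name `check_password` is hypothetical, and this is not the mechanism from the paper cited above. A language runtime may still hold other copies, and in C the equivalent overwrite must be written so that the compiler cannot remove it as a dead store.

```python
import hashlib
import hmac


def check_password(password_buf: bytearray, expected_digest: bytes) -> bool:
    """Compare a password held in a mutable buffer, then scrub the buffer."""
    try:
        digest = hashlib.sha256(password_buf).digest()
        return hmac.compare_digest(digest, expected_digest)
    finally:
        # Overwrite in place so this copy does not outlive its use.
        for i in range(len(password_buf)):
            password_buf[i] = 0


stored = hashlib.sha256(b"hunter2").digest()
buf = bytearray(b"hunter2")
print(check_password(buf, stored))  # True
print(buf == bytearray(len(buf)))   # True: buffer now holds only zero bytes
```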

Sample Features of Trusted OS

Mandatory access control

• MAC not under user control, precedence over DAC

Object reuse protection

• Write over old data when file space is allocated

Complete mediation

• Prevent any access that circumvents monitor

Audit

• Log security-related events and check logs

Intrusion detection (cover in another lecture)

• Anomaly detection

– Learn normal activity, Report abnormal actions

• Attack detection

– Recognize patterns associated with known attacks

Kernelized Design

Trusted Computing Base

• Hardware and software for enforcing security rules

Reference monitor

• Part of TCB

• All system calls go through reference monitor for security checking

• Most OS not designed this way

[Diagram: User space (user process) above Kernel space (OS kernel, containing the TCB and the reference monitor)]
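A toy model of the kernelized design described above: every request enters through one `syscall` entry point, which consults the reference monitor before doing anything. The class names, the policy representation (a set of allowed triples), and the single `read` operation are assumptions made for this sketch.

```python
class ReferenceMonitor:
    """Decides every access and records the decision."""

    def __init__(self, policy):
        self.policy = policy      # set of (subject, operation, object) triples
        self.audit_log = []

    def check(self, subject, operation, obj):
        allowed = (subject, operation, obj) in self.policy
        self.audit_log.append((subject, operation, obj, allowed))
        return allowed


class Kernel:
    """Toy kernel whose only entry point is mediated by the monitor."""

    def __init__(self, monitor):
        self._monitor = monitor
        self._files = {"/etc/motd": "hello"}

    def syscall(self, subject, operation, obj):
        if not self._monitor.check(subject, operation, obj):
            raise PermissionError(f"{subject} may not {operation} {obj}")
        if operation == "read":
            return self._files[obj]
        raise NotImplementedError(operation)


monitor = ReferenceMonitor({("alice", "read", "/etc/motd")})
kernel = Kernel(monitor)
print(kernel.syscall("alice", "read", "/etc/motd"))  # hello
```

Complete mediation holds here only because no other code path reaches `_files`. In a real kernel that property is the hard part.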

Audit

Log security-related events

Protect audit log

• Write to write-once non-volatile medium

Audit logs can become huge

• Manage size by following policy

– Storage becomes more feasible

– Analysis more feasible since entries more meaningful

• Example policies

– Audit only first, last access by process to a file

– Do not record routine, expected events

• E.g., starting one process always loads …
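The example policy "audit only first, last access by process to a file" can be sketched as a log filter. The event format `(timestamp, pid, path)` and the function name are assumptions for illustration.

```python
def compact_audit_log(events):
    """Keep only the first and last access per (process, file) pair.

    `events` is an iterable of (timestamp, pid, path) tuples in time order.
    """
    first, last = {}, {}
    for event in events:
        key = (event[1], event[2])
        first.setdefault(key, event)  # remembered once, on first access
        last[key] = event             # overwritten on every access
    kept = set(first.values()) | set(last.values())
    return sorted(kept)


events = [
    (1, 100, "/etc/passwd"),
    (2, 100, "/etc/passwd"),
    (3, 200, "/etc/passwd"),
    (4, 100, "/etc/passwd"),
    (5, 100, "/tmp/x"),
]
for entry in compact_audit_log(events):
    print(entry)
```

Here the middle access by process 100 at time 2 is dropped, while single accesses are kept once.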

Assurance methods

Testing

• Can demonstrate existence of flaw, not absence

Formal verification

• Time-consuming, painstaking process

“Validation”

• Requirements checking

• Design and code reviews

– Sit around table, drink lots of coffee, …

• Module and system testing

Rainbow Series

DoD Trusted Computer Sys Evaluation Criteria (Orange Book)

Audit in Trusted Systems (Tan Book)

Configuration Management in Trusted Systems (Amber Book)

Trusted Distribution in Trusted Systems (Dark Lavender Book)

Security Modeling in Trusted Systems (Aqua Book)

Formal Verification Systems (Purple Book)

Covert Channel Analysis of Trusted Systems (Light Pink Book)

… many more

http://www.radium.ncsc.mil/tpep/library/rainbow/index.html

Orange Book Criteria (TCSEC)

Level D

• No security requirements

Level C

For environments with cooperating users

• C1 – protected mode OS, authenticated login, DAC, security testing and documentation (Unix)

• C2 – DAC to level of individual user, object initialization, auditing (Windows NT 4.0)

Level B, A

• All users and objects must be assigned a security label (classified, unclassified, etc.)

• System must enforce Bell-LaPadula model

Levels B, A (continued)

Level B

• B1 – classification and Bell-LaPadula

• B2 – system designed in top-down modular way, must be possible to verify, covert channels must be analyzed

• B3 – ACLs with users and groups, formal TCB must be presented, adequate security auditing, secure crash recovery

Level A1

• Formal proof of protection system, formal proof that model is correct, demonstration that impl conforms to model, formal covert channel analysis

Common Criteria

Three parts

• CC Documents

– Protection profiles: requirements for category of systems

• Functional requirements

• Assurance requirements

• CC Evaluation Methodology

• National Schemes (local ways of doing evaluation)

Replaces TCSEC, endorsed by 14 countries

• CC adopted 1998

• Last TCSEC evaluation completed 2000

http://www.commoncriteria.org/

Protection Profiles

Requirements for categories of systems

• Subject to review and certified

Example: Controlled Access PP (CAPP_V1.d)

• Security functional requirements

– Authentication, User Data Protection, Prevent Audit Loss

• Security assurance requirements

– Security testing, Admin guidance, Life-cycle support, …

• Assumes non-hostile and well-managed users

• Does not consider malicious system developers

Evaluation Assurance Levels 1 – 4

EAL 1: Functionally Tested

• Review of functional and interface specifications

• Some independent testing

EAL 2: Structurally Tested

• Analysis of security functions, incl high-level design

• Independent testing, review of developer testing

EAL 3: Methodically Tested and Checked

• Development environment controls; config mgmt

EAL 4: Methodically Designed, Tested, Reviewed

• Informal spec of security policy, Independent testing

Evaluation Assurance Levels 5 – 7

EAL 5: Semiformally Designed and Tested

• Formal model, modular design

• Vulnerability search, covert channel analysis

EAL 6: Semiformally Verified Design and Tested

• Structured development process

EAL 7: Formally Verified Design and Tested

• Formal presentation of functional specification

• Product or system design must be simple

• Independent confirmation of developer tests

Example: Windows 2000, EAL 4+

Evaluation performed by SAIC

Used “Controlled Access Protection Profile”

Level EAL 4 + Flaw Remediation

• “EAL 4 … represents the highest level at which products not built specifically to meet the requirements of EAL 5-7 ought to be evaluated.” (EAL 5-7 requires more stringent design and development procedures …)

• Flaw Remediation

Evaluation based on specific configurations

• Produced configuration guide that may be useful

Is Windows “Secure”?

Good things

• Design goals include security goals

• Independent review, configuration guidelines

But …

• “Secure” is a complex concept

– What properties protected against what attacks?

• Typical installation includes more than just OS

– Many problems arise from applications, device drivers

– Windows driver certification program

• Security depends on installation as well as system

Secure attention sequence (SAS)

CTRL+ALT+DEL

• “… can be read only by Windows, ensuring that the information in the ensuing logon dialog box can be read only by Windows. This can prevent rogue programs from gaining access to the computer.”

How does this work?

• Winlogon service responds to SAS

• DLL called GINA (for Graphical Identification 'n' Authentication) implemented in msgina.dll gathers and marshals information provided by the user and sends it to the Local Security Authority (LSA) for verification

• The SAS provides a level of protection against Trojan horse login prompts, but not against driver level attacks.

Encrypted File Systems (EFS, CFS)

Store files in encrypted form

• Key management: user’s key decrypts file

• Useful protection if someone steals disk

Windows – EFS

• User marks a file for encryption

• Unique file encryption key is created

• Key is encrypted, can be stored on smart card

Unix – CFS

[Matt Blaze]

• Transparent use

• Local NFS server running on "loopback" interface

• Key protected by passphrase

Q: Why use crypto file system?

General security questions

• What properties are provided?

• Against what form of attack?

Crypto file system

• What properties?

– Secrecy, integrity, authenticity, … ?

• Against what kinds of attack?

– Someone steals your laptop?

– Someone steals your removable disk?

– Someone has network access to shared file system?

Depends on how file system configured and used

Encrypted file systems

Several possible designs

• Block based systems

• Disk based systems

• Network loopback based systems

• Stackable file systems

• Application based encryption

Some references

• A cryptographic file system for unix

– Matt Blaze

• Cryptographic File Systems Performance

– Charles Wright, Jay Dave and Erez Zadok

• Cryptoloop HowTo

– Dennis Kaledin et al.

• Ncryptfs: A secure and convenient cryptographic file system

– Wright et al.

Block Based

Encrypt one disk block at a time

• Not dependent on underlying file system

• Can write to raw device or preallocated file
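To make "encrypt one disk block at a time" concrete, here is a toy sketch in which each block is encrypted independently, with the block number mixed into the keystream so that identical plaintext blocks at different positions encrypt differently. This is an illustration only and is not how Cryptoloop or any product listed here works: real systems use a block cipher in a suitable mode, while this sketch derives a keystream from HMAC-SHA256 purely so that it runs with the standard library. `BLOCK_SIZE`, `_keystream`, and `crypt_block` are made-up names.

```python
import hashlib
import hmac

BLOCK_SIZE = 512  # hypothetical disk block size in bytes


def _keystream(key: bytes, block_no: int, length: int) -> bytes:
    """Toy keystream: HMAC-SHA256(key, block number || counter), concatenated."""
    out = bytearray()
    counter = 0
    while len(out) < length:
        data = block_no.to_bytes(8, "big") + counter.to_bytes(4, "big")
        out += hmac.new(key, data, hashlib.sha256).digest()
        counter += 1
    return bytes(out[:length])


def crypt_block(key: bytes, block_no: int, block: bytes) -> bytes:
    """XOR a block with its per-block keystream; the same call decrypts."""
    stream = _keystream(key, block_no, len(block))
    return bytes(a ^ b for a, b in zip(block, stream))


key = b"k" * 32
plain = bytes(BLOCK_SIZE)            # an all-zero block
cipher7 = crypt_block(key, 7, plain)
print(crypt_block(key, 7, cipher7) == plain)   # True: round trip
print(crypt_block(key, 8, plain) != cipher7)   # True: position-dependent
```

Because each block is handled on its own, the layer needs no knowledge of files or directories, which is why this design does not depend on the underlying file system.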

Sample block-based implementation

Cryptoloop

• Uses Linux loopback device driver, CryptoAPI

– Linux kernel CryptoAPI exports an interface to encryption functions and hash functions

• Can write to a raw device or to a preallocated file

– Preallocated file effectively cuts buffer cache in half

Other block-based file systems

CGD (Cryptographic disk driver)

• For NetBSD

• raw device only

BestCrypt

• Commercial product for Linux and Windows

• preallocated file for storage

vncrypt

• For FreeBSD: uses the vn device driver

• preallocated file for storage

vnd

• For OpenBSD: uses the Vnode disk driver (vnd)

• preallocated file for storage

Disk Based

Encrypt data at the file system level

Disk Based

EFS (Encryption File System)

• Extension to NTFS based on NT kernel.

• Uses Windows access control and authentication libraries; though located in the kernel, it is tightly coupled with user-space DLLs to do encryption and user authentication.

• Encryption keys are stored on the disk, encrypted with user password

StegFS

• A file system that employs encryption and steganography

• Inspection of system will not reveal content or extent of hidden data

• uses modified ext2 kernel driver

• Very slow and hence impractical

Network Based

CFS

• User level crypto NFS server

– Performance hampered by many context switches and data copies between user and kernel space

• Data appears in user space in cleartext

Network Based

TCFS

• modified kernel mode nfs client

• works with normal nfs server

• keys are stored on the filesystem

Stackable Systems

Ncryptfs

• Can operate on top of any file system.

Application based encryption

Applications like PGP and SafeHouse allow users to encrypt/decrypt files

File may be in cleartext on the disk while the user is editing and saving it

Encrypted file system

Complete file system encryption is feasible in real time

• Crypto operations are not a big bottleneck

• Performance study: with single processor, I/O is limiting factor

• Caching plays a big role in performance of encrypted systems

Embedded operating systems

Symbian History

• Psion released EPOC32 in 1996

– based on 1989 EPOC OS

– EPOC32 was designed with OO in C++

• Symbian Ltd. formed in 1998

– Ericsson, Nokia, Motorola and Psion

– EPOC renamed to Symbian OS

– Currently ~30 phones with Symbian, 15 licensees

• Current ownership

Nokia 47.5%

Ericsson 15.6%

SonyEricsson 13.1%

Panasonic 10.5%

Siemens 8.4%

Samsung 4.5%

See: Symbian phone security, Job de Haas, BlackHat, Amsterdam 2005

Symbian UI

Two main versions

Series60

UIQ

Architecture

Multitasking, preemptive kernel

MMU protection of kernel and process spaces

Strong Client–Server architecture

Plug-in patterns

Filesystem in ROM, Flash, RAM and on SD-card

Symbian Development

Emulator on x86 runs most of native code base

• Compiled to x86 (so not running ARM cpu)

• Emulator is one windows process

Limited support for on-target debugging

• It does not work on all devices

• Uses a gdb stub

• Metrowerks provides MetroTRK

• Future: v9 will move to ARM Real View (RVCT) and the EABI standard

Mobile phone risks

Toll fraud:

• Auto dialers.

• High cost SMS/MMS.

• Phone Proxy

Loss or theft:

• Data loss.

• Data compromise.

• Loss of Identity (caller ID)

Availability:

• SPAM.

• Destruction of the device (flash)

• Destruction of data.

Risks induced by usage:

• Mobile banking.

• Confidential e-mail, documents.

• Device present at confidential meetings: snooping

Attack vectors

• Executables

• Bluetooth

• GPRS / GSM

• OTA

• IrDa

• Browser

• SMS / MMS

• SD card

• WAP

• E-mail

• Too many entry points to list all

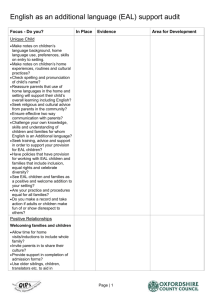

Symbian security features

Crypto:

• Algorithms

• Certificate framework

• Protocols: HTTPS, WTLS, …

Symbian signed:

• Public key signatures on applications

• Root CA’s in ROM

Separation

• Kernel vs. user space;

• process space

• Secured ‘wallet’ storage

Access controls

• SIM PIN, device security code

• Bluetooth pairing

Artificial Limitations / patches

• Prevent loading device drivers in the kernel (Nokia).

• Disallow overriding of ROM based plug-ins

Limitations

• No concept of roles or users.

• No access controls in the file system.

• No user confirmation needed for access by applications.

• User view on device is limited: partial filesystem, selected processes.

• Majority of interesting applications is unsigned.

Are attacks prevented?

• Fraud: user should not accept unsigned apps

• Loss/theft: In practice, little protection

• Availability: any application can render phone unusable (Skulls Trojan).

Symbian attacks

What goes wrong?

• All known attacks need user confirmation, often more than once.

• People lose a lot of devices

Skulls Trojan:

• Theme that replaces all icons and cannot be de-installed

Caribe:

• Installs itself as a ‘Recognizer’ to get activated at boot time and starts broadcasting itself over Bluetooth

Example vulnerability

February 23, 2005 notice on Nokia site:

Proposed workaround

User confirmation

This is what appears on the screen when an

untrusted application is loaded:

Installing patches

Many attacks occur after patch released

• Applies to OS and applications

Case study: patches for OpenSSL

• Recall: Secure Socket Layer (SSL) protocol provides authentication and confidentiality between two communicating applications

• OpenSSL is an open source implementation

– Remote buffer overflow in OpenSSL server

• Case study by Eric Rescorla, RTFM, Inc.

Server Side SSLv2 Vulnerability

During a handshake with the server, the client can send an overly long CLIENT-MASTER-KEY message for SSLv2, resulting in a heap overflow.

Client → Server: challenge, cipher_specs

Server → Client: connection-id, server_certificate, cipher_specs

Client → Server: {master_key}server_public_key

Client ↔ Server: verification and finalization

“No Session Identifier” Handshake for Remote Connections

Server Side SSLv2 Vulnerability

Structure of CLIENT-MASTER-KEY message

char MSG-CLIENT-MASTER-KEY

char CIPHER-KIND[3]

char CLEAR-KEY-LENGTH-MSB

char CLEAR-KEY-LENGTH-LSB

char ENCRYPTED-KEY-LENGTH-MSB

char ENCRYPTED-KEY-LENGTH-LSB

char KEY-ARG-LENGTH-MSB

char KEY-ARG-LENGTH-LSB

char CLEAR-KEY-DATA[MSB<<8|LSB]

char ENCRYPTED-KEY-DATA[MSB<<8|LSB]

char KEY-ARG-DATA[MSB<<8|LSB]

• All block ciphers used in OpenSSL's SSLv2 code use an 8-byte key argument

• OpenSSL allocates fixed 8-byte buffer for KEY-ARG-DATA

• Specifying a KEY-ARG-LENGTH larger than 8 makes KEY-ARG-DATA overflow the heap buffer

• OpenSSL uses a lot of function pointers that heap overflow can exploit
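The missing check can be illustrated with a small parser for the message layout above. This is a sketch of the kind of bounds check involved, not OpenSSL's actual code or patch: `parse_client_master_key` and `build` are hypothetical names, and the message-type and cipher-kind bytes used by `build` are placeholder values.

```python
import struct

KEY_ARG_MAX = 8                     # fixed key-argument buffer size from the slide
HEADER = struct.Struct(">B3sHHH")   # type, CIPHER-KIND[3], three 16-bit lengths


def parse_client_master_key(msg: bytes) -> dict:
    """Parse a CLIENT-MASTER-KEY message, rejecting oversized key arguments."""
    if len(msg) < HEADER.size:
        raise ValueError("truncated CLIENT-MASTER-KEY header")
    msg_type, cipher_kind, clear_len, enc_len, arg_len = HEADER.unpack_from(msg)
    # The check whose absence caused the overflow: never trust the length field.
    if arg_len > KEY_ARG_MAX:
        raise ValueError("KEY-ARG-LENGTH exceeds buffer size")
    if len(msg) != HEADER.size + clear_len + enc_len + arg_len:
        raise ValueError("length fields do not match message size")
    body = msg[HEADER.size:]
    return {
        "msg_type": msg_type,
        "cipher_kind": cipher_kind,
        "clear_key": body[:clear_len],
        "encrypted_key": body[clear_len:clear_len + enc_len],
        "key_arg": body[clear_len + enc_len:],
    }


def build(clear: bytes, enc: bytes, arg: bytes) -> bytes:
    """Helper for the example: assemble a message with honest length fields."""
    header = HEADER.pack(2, b"\x00\x00\x00", len(clear), len(enc), len(arg))
    return header + clear + enc + arg


print(parse_client_master_key(build(b"", b"E" * 16, b"I" * 8))["key_arg"])
```

A message built with a 9-byte key argument raises `ValueError` here, which corresponds to the patched behavior of closing the connection when the length is too big.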

Ethical Probing for Fixes

[Diagram: heap layout of struct CLIENT MASTER KEY with the adjacent MKL field]

Patched servers close connection if key length too big

Unpatched servers accept KEY-ARG-LENGTH > 8

• Rescorla set KEY-ARG-LENGTH = 9

• Overwrites next field in the heap, master_key_length (MKL)

• MKL not used anywhere else, so no real damage

Timeline

[Timeline figure: July 30, July 31, August 2, August 9, September 13]

30th July 2002

• Initial announcement of vulnerability

• OpenSSL 0.9.6e available for download (bug fixed)

• Patches for other versions released

• Major OS vendors (Debian, Trustix, Engarde, Gentoo) announce

• Posting of vulnerability to SlashDot

31st July 2002

• FreeBSD announces

2nd August 2002

• Apple announces. NetBSD announces

9th August 2002

• OpenSSL 0.9.6g released

13th September 2002

• Slapper worm released (More than 60% still vulnerable)

Patches and upgrades over 30 days

[Graph: Vulnerable Servers over a 30-Day Period. Axes: % vulnerable vs. days. Graph ends at 60% vulnerable.]

23% of the servers are fixed within first week of announcement

More than 60% of servers still vulnerable when the Slapper worm, which exploited the bug, was released 6 weeks later

Second wave after worm exploit

[Graph: Vulnerable Servers after Slapper was released. Axes: % vulnerable vs. days. Graph starts at 60% vulnerable.]

Some administrators only patch/upgrade when exploit shows up

Summary

1/3 upgrade when advisory released

1/3 upgrade when exploit released

1/3 do not bother

OS Security lectures

Access Control Concepts

• Matrix, ACL, Capabilities

• Multi-level security (MLS)

OS Mechanisms

• Multics

– Ring structure

• Amoeba

– Distributed, capabilities

• Unix

– File system, Setuid

• Windows

– File system, Tokens, EFS

• SE Linux

– Role-based, Domain type enforcement

Secure OS

• Stronger mechanisms

• Some limitations

Assurance

• Orange Book, TCSEC

• Common Criteria

• Windows 2000 certification

Cryptographic File Systems

Embedded OS

• Some issues in Symbian security

Software Patches