nm_bayes_misc - UCL Department of Geography

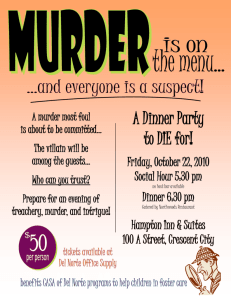


MSc Methods XX: YY

Dr. Mathias (Mat) Disney

UCL Geography

Office: 113, Pearson Building

Tel: 7670 0592

Email: mdisney@ucl.geog.ac.uk

www.geog.ucl.ac.uk/~mdisney

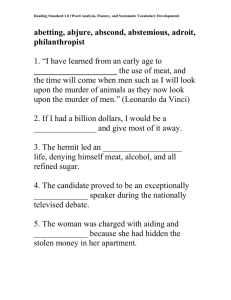

Induction and deduction

Gauch (2006): “Seven pillars of Science”

1. Realism: physical world is real;

2. Presuppositions: world is orderly and comprehensible;

3. Evidence: science demands evidence;

4. Logic: science uses standard, settled logic to connect evidence and assumptions with conclusions;

5. Limits: many matters cannot usefully be examined by science;

6. Universality: science is public and inclusive;

7. Worldview: science must contribute to a meaningful worldview.

What’s this got to do with methods?

• Fundamental laws of probability can be derived from statements of logic

• BUT there are different ways to apply them

• Two key ways

– Frequentist

– Bayesian – after Rev. Thomas Bayes (1702-1761)

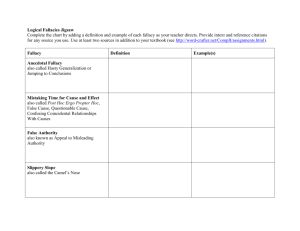

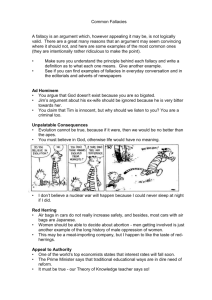

Aside: sound argument v fallacy

• Fallacies can be hard to spot in longer, more detailed arguments:

– Fallacies of composition; ambiguity; false dilemmas; circular reasoning; genetic fallacies (e.g. ad hominem)

• Gauch (2003) notes:

– For an argument to be accepted by any audience as proof, the audience MUST accept both its premises and its validity

– That is: part of the responsibility for rational dialogue falls to the audience

– If the audience lacks data and/or its logic is weak, then a valid argument may be incorrectly rejected (or an invalid one accepted)

Aside: sound argument v fallacy

• If plants lack nitrogen, they become yellowish

– The plants are yellowish, therefore they lack N (affirming the consequent ✗)

– The plants do not lack N, so they do not become yellowish (denying the antecedent ✗)

– The plants lack N, so they become yellowish (affirming the antecedent ✓)

– The plants are not yellowish, so they do not lack N (denying the consequent ✓)

• Affirming the antecedent (modus ponens): p → q; p; therefore q ✓

• Denying the consequent (modus tollens): p → q; ~q; therefore ~p ✓

• Affirming the consequent: p → q; q; therefore p ✗

• Denying the antecedent: p → q; ~p; therefore ~q ✗
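Validity of the four forms can be checked mechanically with a truth table. In this minimal sketch, p stands for "the plants lack N" and q for "the plants become yellowish"; a form is valid if no assignment of True/False makes all its premises true and its conclusion false.

```python
from itertools import product


def implies(p, q):
    """Material implication: p -> q is false only when p is true and q is false."""
    return (not p) or q


# Each form shares the premise p -> q and adds one more premise and a conclusion.
FORMS = {
    "affirming the antecedent": (lambda p, q: p, lambda p, q: q),
    "denying the consequent": (lambda p, q: not q, lambda p, q: not p),
    "affirming the consequent": (lambda p, q: q, lambda p, q: p),
    "denying the antecedent": (lambda p, q: not p, lambda p, q: not q),
}


def is_valid(premise, conclusion):
    """Valid iff no row of the truth table has all premises true and the conclusion false."""
    for p, q in product([True, False], repeat=2):
        if implies(p, q) and premise(p, q) and not conclusion(p, q):
            return False
    return True


validity = {name: is_valid(*form) for name, form in FORMS.items()}
for name, ok in validity.items():
    print(f"{name}: {'valid' if ok else 'invalid'}")
```

The two invalid forms fail on the same row: plants that are yellowish for some other reason (p false, q true).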

Bayesian reasoning

• The Bayesian view is directly related to how we do science

• The frequentist view of hypothesis testing is fundamentally flawed (see e.g. Jaynes, ch. 17):

– To test H, do it indirectly: invent a null hypothesis H0 that denies H, then argue against H0

– But in practice, H0 is not (usually) a direct denial of H

– H is usually a disjunction of many different hypotheses, whereas H0 denies all of them while assuming things (e.g. normally distributed errors) that H neither assumes nor denies

• Jeffreys (1939, p316): “…an hypothesis that may be true is rejected because it has failed to predict observable results that have not occurred. This seems remarkable…on the face of it, the evidence might more reasonably be taken as evidence for the hypothesis, not against it. The same applies to all the current significance tests based on P-values.”

Bayes: see Gauch (2003) ch 5

• Prior knowledge?

– What is known beyond the particular experiment at hand, which may be substantial or negligible

• We all have priors: assumptions, experience, other pieces of evidence

• The Bayesian approach explicitly requires you to assign a probability to your prior (somehow)

• Bayesian view: probability as a degree of belief rather than a frequency of occurrence (in the long run…)

A more complex example: mean of Gaussian

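• For N data,

$$\mathrm{prob}(x_k \mid \mu, \sigma) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left[-\frac{(x_k - \mu)^2}{2\sigma^2}\right]$$

• Given data {x_k}, what is the best estimate of μ, and its error?

• Likelihood?

$$\mathrm{prob}(\{x_k\} \mid \mu, \sigma, I) = \prod_{k=1}^{N} \mathrm{prob}(x_k \mid \mu, \sigma, I)$$

• Simple uniform prior?

$$\mathrm{prob}(\mu \mid \sigma, I) = \mathrm{prob}(\mu \mid I) = \begin{cases} A = \dfrac{1}{\mu_{\max} - \mu_{\min}} & \mu_{\min} \le \mu \le \mu_{\max} \\ 0 & \text{otherwise} \end{cases}$$

• Log(Posterior), L

$$L = \log_e\left[\mathrm{prob}(\mu \mid \{x_k\}, \sigma, I)\right] = \text{const} - \sum_{k=1}^{N} \frac{(x_k - \mu)^2}{2\sigma^2}$$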
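A minimal numerical sketch of this log posterior, assuming σ is known and using a hypothetical set of eight data points (not from the slides). It evaluates L on a fine grid over the prior range μmin = -2 to μmax = 15 quoted on the next slide, returns -inf outside that range (where the uniform prior is zero), and picks the peak, which should sit at the sample mean.

```python
import math


def log_posterior(mu, data, sigma, mu_min, mu_max):
    """Log posterior (up to a constant) for the mean of a Gaussian with known sigma
    and a uniform prior on [mu_min, mu_max]; -inf outside the prior range."""
    if not (mu_min <= mu <= mu_max):
        return -math.inf
    return -sum((x - mu) ** 2 for x in data) / (2 * sigma ** 2)


# Hypothetical data, for illustration only (not from the slides).
data = [4.1, 5.3, 6.0, 4.8, 5.5, 6.2, 4.9, 5.7]
sigma = 1.0
mu_min, mu_max = -2.0, 15.0

step = 0.001
n_steps = int(round((mu_max - mu_min) / step))
grid = [mu_min + i * step for i in range(n_steps + 1)]
L = [log_posterior(mu, data, sigma, mu_min, mu_max) for mu in grid]

# Grid point with the largest log posterior = best estimate of mu.
mu_best = grid[max(range(len(grid)), key=L.__getitem__)]
print(f"posterior peak at mu = {mu_best:.3f}; sample mean = {sum(data) / len(data):.4f}")
```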

A more complex example: mean of Gaussian

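• For best estimate μ0

$$\left.\frac{dL}{d\mu}\right|_{\mu_0} = \sum_{k=1}^{N} \frac{x_k - \mu_0}{\sigma^2} = 0$$

• So

$$\sum_{k=1}^{N} x_k = \sum_{k=1}^{N} \mu_0 = N\mu_0$$

and best estimate is simple mean i.e.

$$\mu_0 = \frac{1}{N}\sum_{k=1}^{N} x_k$$

• Confidence depends on σ i.e.

$$\left.\frac{d^2L}{d\mu^2}\right|_{\mu_0} = -\sum_{k=1}^{N} \frac{1}{\sigma^2} = -\frac{N}{\sigma^2}$$

• And so, since the error bar is $\left(-d^2L/d\mu^2\right)^{-1/2}$, μ = μ0 ± σ/√N

• Here μmin = -2, μmax = 15

• If we make larger?

• Weighting of error for each point?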
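The result can be checked numerically. This sketch reuses a hypothetical data set with σ = 1 assumed known, computes μ0 and σ/√N in closed form, and compares that error bar with one obtained from a central finite-difference estimate of d²L/dμ² at μ0.

```python
import math

# Hypothetical data and known sigma, for illustration only.
data = [4.1, 5.3, 6.0, 4.8, 5.5, 6.2, 4.9, 5.7]
sigma = 1.0
N = len(data)


def L(mu):
    """Log posterior up to a constant (uniform prior, mu inside the prior range)."""
    return -sum((x - mu) ** 2 for x in data) / (2 * sigma ** 2)


mu0 = sum(data) / N            # best estimate: the simple mean
err = sigma / math.sqrt(N)     # error bar: sigma / sqrt(N)

# Central finite difference for d2L/dmu2 at mu0; it should equal -N / sigma**2.
h = 1e-3
curvature = (L(mu0 + h) - 2 * L(mu0) + L(mu0 - h)) / h ** 2
err_from_curvature = 1 / math.sqrt(-curvature)
print(f"mu = {mu0:.4f} +/- {err:.4f} (curvature gives {err_from_curvature:.4f})")
```

Because L is exactly quadratic in μ here, the finite difference recovers -N/σ² up to rounding error.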

Common errors: ignored prior

• After Stirzaker (1994) and Gauch (2003):

• Blood test for a rare disease occurring by chance in 1 in 100,000 people. The test is quite accurate:

– It will tell you that you have the disease 95% of the time if you do, i.e. P(positive | disease) = 0.95

– BUT it also gives a false positive 0.5% of the time, i.e. P(positive | healthy) = 0.005

• Q: if the test says you have the disease, what is the probability that this diagnosis is correct?

– 80% of health experts questioned gave the wrong answer (Gauch, 2003: 211)

– Use the 2-hypothesis form of Bayes’ Theorem

Common errors: ignored prior

• Back to our disease test: H1 = have the disease, H2 = healthy, D = positive test

$$\frac{P(H_1 \mid D)}{P(H_2 \mid D)} = \frac{P(D \mid H_1)}{P(D \mid H_2)} \times \frac{P(H_1)}{P(H_2)}$$

$$P(H_1 \mid D) = \frac{P(D \mid H_1)\,P(H_1)}{P(D \mid H_1)\,P(H_1) + P(D \mid H_2)\,P(H_2)} = \frac{0.95 \times 0.00001}{(0.95 \times 0.00001) + (0.005 \times 0.99999)} \approx \frac{1}{500}$$

• Correct diagnosis only about 1 time in 500: 499 false +ve!

• For a disease as rare as this, the false positive rate (1:200) makes the test essentially useless
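The same calculation as a short sketch, with H1 (disease) and H2 (healthy) assumed exhaustive and mutually exclusive, and the numbers taken from the slide:

```python
def posterior_two_hypotheses(prior_h1, like_h1, like_h2):
    """P(H1 | D) when H1 and H2 are exhaustive and mutually exclusive."""
    prior_h2 = 1.0 - prior_h1
    evidence = like_h1 * prior_h1 + like_h2 * prior_h2   # P(D)
    return like_h1 * prior_h1 / evidence


# Prevalence 1:100,000; P(+ | disease) = 0.95; P(+ | healthy) = 0.005
p = posterior_two_hypotheses(prior_h1=1e-5, like_h1=0.95, like_h2=0.005)
print(f"P(disease | positive test) = {p:.5f}, i.e. about 1 in {1 / p:.0f}")
```

The exact figure is a little over 1 in 500 (roughly 1 in 530), consistent with the slide's rounding.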

What went wrong?

• Knowledge of general population gives prior odds diseased:healthy ≈ 1:100000

• Knowledge of +ve test gives likelihood odds 0.95:0.005, i.e. 190:1

• Mistake is to base conclusion on likelihood odds alone

• Prior odds completely dominate

– 0.005 × 0.99999 ≈ 0.005 >> 0.95 × 0.00001 ≈ 1×10⁻⁵
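The odds form makes the point explicit: posterior odds = prior odds × likelihood ratio. A minimal sketch using the slide's numbers (the likelihood ratio uses the 0.5% false-positive rate):

```python
prior_odds = 1e-5 / (1 - 1e-5)         # diseased : healthy, about 1 : 100,000
likelihood_ratio = 0.95 / 0.005        # P(+ | disease) / P(+ | healthy)
posterior_odds = prior_odds * likelihood_ratio

# Convert odds back to a probability: p = odds / (1 + odds).
p_disease = posterior_odds / (1 + posterior_odds)
print(f"likelihood ratio {likelihood_ratio:.0f}:1, "
      f"posterior odds about 1:{1 / posterior_odds:.0f}, P(disease | +) = {p_disease:.4f}")
```

A likelihood ratio of 190 sounds convincing, but it cannot overcome prior odds of 1:100,000.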

The tragic case of Sally Clark

• Two cot deaths (SIDS), 1 year apart, aged 11 weeks and 8 weeks. Mother Sally Clark charged with double murder, tried and convicted in 1999

– Statistical evidence was misunderstood, “expert” testimony was wrong, and a fundamental logical fallacy was introduced

• What happened?

• We can use Bayes’ Theorem to decide between 2 hypotheses

– H1 = Sally Clark committed double murder

– H2 = the two children DID die of SIDS

• http://betterexplained.com/articles/an-intuitive-and-short-explanation-of-bayestheorem/

• http://yudkowsky.net/rational/bayes


The tragic case of Sally Clark

$$\frac{P(H_1 \mid D)}{P(H_2 \mid D)} = \frac{P(D \mid H_1)}{P(D \mid H_2)} \times \frac{P(H_1)}{P(H_2)}$$

– Left-hand side: prob. of H1 or H2 given data D

– First factor: likelihoods, i.e. prob. of getting data D IF H1 is true, or if H2 is true

– Second factor: very important PRIOR probability, i.e. previous best guess

• Data? We observe there are 2 dead children

• We need to decide which of H1 or H2 is more plausible, given D (and prior expectations)

• i.e. we want the ratio P(H1|D) / P(H2|D), i.e. the odds of H1 being true compared to H2, GIVEN data and prior


The tragic case of Sally Clark

• ERROR 1: events NOT independent

• P(1 child dying of SIDS)? ~1:1300, but for an affluent, non-smoking family with mother over 26 yrs ~1:8500

• Prof. Sir Roy Meadow (expert witness)

– P(2 deaths)? 1:(8500 × 8500) ≈ 1:73 million

– This was KEY to her conviction & is demonstrably wrong

– ~650,000 births a year in UK, so at 1:73M a double cot death is a 1 in 100 year event. BUT 1 or 2 occur every year: how come?? No one checked …

– NOT independent: P(2nd death | 1st death) is 5-10 times higher, i.e. 1:100 to 1:200, so P(H2) is actually ~(1/1300) × (5/1300) ≈ 1:300,000
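A short sketch of the arithmetic on this slide. Like the slide, it treats each of ~650,000 births a year as an independent chance for a double cot death, which is a simplification.

```python
births_per_year = 650_000      # approximate UK births per year, as on the slide

# Meadow's figure: square the single-SIDS rate, i.e. assume the deaths are independent.
p_double_naive = (1 / 8500) ** 2
years_between = 1 / (births_per_year * p_double_naive)

# Allowing for dependence: general rate 1:1300, second death ~5x more likely after a first.
p_double_dependent = (1 / 1300) * (5 / 1300)

print(f"naive: 1 in {1 / p_double_naive:,.0f}, i.e. one family every {years_between:.0f} years")
print(f"with dependence: 1 in {1 / p_double_dependent:,.0f}")
```

The dependent estimate is about 1 in 340,000, which the slide rounds to 1:300,000.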


The tragic case of Sally Clark

• ERROR 2: “Prosecutor’s Fallacy”

– 1:300,000 is still VERY rare, so she’s unlikely to be innocent, right??

• Meadow’s “Law”: ‘one cot death is a tragedy, two cot deaths is suspicious and, until the contrary is proved, three cot deaths is murder’

– WRONG: it is a fallacy to mistake the chance of a rare event for the chance that the defendant is innocent

• In large samples, even rare events occur quite frequently: someone wins the lottery (1:14M) nearly every week

• 650,000 births a year, so expect 2-3 double cot deaths…

• AND we are ignoring the rarity of double murder (H1)
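How often should a 1:300,000 event turn up? A minimal sketch: the expected yearly count is births × probability, and, as an added assumption not made on the slides, the yearly count is treated as Poisson to get the chance of at least one case.

```python
import math

births_per_year = 650_000
p_double_sids = 1 / 300_000          # rounded figure from the previous slide
expected = births_per_year * p_double_sids

# Assumption: yearly number of double SIDS cases is Poisson with this mean.
p_at_least_one = 1 - math.exp(-expected)
print(f"expected double SIDS per year: {expected:.1f}; P(at least one) = {p_at_least_one:.2f}")
```

Under these assumptions a double cot death somewhere in the UK is more likely than not in any given year.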


The tragic case of Sally Clark

• ERROR 3: ignoring odds of alternative (also very rare)

– Single child murder is v. rare (~30 cases a year) BUT generally involves significant family/social problems, i.e. NOT like the Clarks

– P(1 murder) ~ 30:650,000, i.e. 1:21,700

– Double MUCH rarer, BUT a 2nd murder is ~200 x more likely given a first, so P(H1) ~ (1/21700) × (200/21700) ≈ 1:2.4M

• So, two very rare events, but double murder ~10 x rarer than double SIDS

• So P(H1|D) / P(H2|D)?

– P(murder) : P(cot death) ~ 1:10, i.e. 10 x more likely to be double SIDS

– Says nothing about guilt & innocence, just relative probability
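Putting ERROR 1 and ERROR 3 together in the odds form of Bayes’ Theorem. Either hypothesis fully explains two deaths, so P(D|H1) = P(D|H2) = 1 and the posterior odds equal the prior odds. A sketch with the slides’ inputs:

```python
# Priors from the slides; the likelihoods P(D | H1) = P(D | H2) = 1 cancel.
p_h1 = (1 / 21_700) * (200 / 21_700)   # double murder
p_h2 = (1 / 1_300) * (5 / 1_300)       # double SIDS
posterior_odds = p_h1 / p_h2           # P(H1 | D) / P(H2 | D)

print(f"P(H1) ~ 1 in {1 / p_h1:,.0f}; P(H2) ~ 1 in {1 / p_h2:,.0f}")
print(f"posterior odds murder : SIDS ~ 1 : {1 / posterior_odds:.1f}")
```

With these unrounded inputs the odds come out nearer 1:7, the same order of magnitude as the slide's ~1:10.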


The tragic case of Sally Clark

• Sally Clark acquitted in 2003 after 2nd appeal (but not on statistical fallacies) after 3 yrs in prison; died of alcohol poisoning in 2007

– Meadow’s “Law” redux: triple murder v triple SIDS?

• In fact, P(triple murder | 2 previous) : P(triple SIDS | 2 previous) ~ ((21700 × 123) × 10) / ((1300 × 228) × 50) = 1.8:1

• So P(triple murder) > P(SIDS) but not by much

• Meadow’s ‘Law’ should be:

– ‘when three sudden deaths have occurred in the same family, statistics give no strong indication one way or the other as to whether the deaths are more or less likely to be SIDS than homicides’

From: Hill, R. (2004) Multiple sudden infant deaths – coincidence or beyond coincidence, Paediatric and Perinatal Epidemiology, 18, 320-326 (http://www.cse.salford.ac.uk/staff/RHill/ppe_5601.pdf)


Common errors: reversed conditional

• After Stewart (1996) & Gauch (2003: 212):

– Boy? Girl? Assume P(B) = P(G) = 0.5, independently for each child

– For a family with 2 children, what is P that the other is a girl, given that one is a girl?

• 4 possible combinations, each with P = 0.25: BB, BG, GB, GG

• Can’t be BB, and only 1 of the 3 remaining is GG

• So P(other is B) : P(other is G) is now 2:1

– Using Bayes’ Theorem: X = at least 1 G, Y = GG

– P(X) = ¾ and so $P(Y \mid X) = \dfrac{P(X \cap Y)}{P(X)} = \dfrac{1/4}{3/4} = \dfrac{1}{3}$

Stewart, I. (1996) The Interrogator’s Fallacy, Sci. Am., 275(3), 172-175.
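The calculation can be done by brute-force enumeration. This sketch lists the four equally likely families and computes P(Y | X) = P(X ∩ Y) / P(X) exactly using fractions.

```python
from fractions import Fraction
from itertools import product

# All two-child families; each child is B or G with probability 1/2, independently.
families = ["".join(f) for f in product("BG", repeat=2)]   # BB, BG, GB, GG
p_each = Fraction(1, len(families))


def prob(event):
    """Probability of an event, given as a predicate on a family string."""
    return sum(p_each for fam in families if event(fam))


def at_least_one_girl(fam):   # X
    return "G" in fam


def two_girls(fam):           # Y
    return fam == "GG"


p_x = prob(at_least_one_girl)
p_x_and_y = prob(lambda fam: at_least_one_girl(fam) and two_girls(fam))
p_y_given_x = p_x_and_y / p_x
print(f"P(X) = {p_x}, P(Y | X) = {p_y_given_x}")
```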

Common errors: reversed conditional

• Easy to forget that order does matter with conditional Ps

– $P(X \cap Y) = P(Y \cap X)$ and $P(X \cup Y) = P(Y \cup X)$, but

– $P(X \mid Y) \ne P(Y \mid X)$ in general: conditioning has a direction, like cause & effect

– Gauch (2003) notes the use of “when” in incorrectly phrasing the Q: For a family with 2 children, what is P that the other is a girl, when one is a girl?

– P(X when Y) is not defined

– It is not P(X|Y), nor is it P(Y|X) or even P(X AND Y)
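The asymmetry can be seen on the same two-child sample space. In this sketch events are Python sets of equally likely outcomes (note that `&` is set intersection and `.union` is set union here, not conditioning): intersection and union are symmetric, but the two conditionals are not.

```python
from fractions import Fraction
from itertools import product

families = ["".join(f) for f in product("BG", repeat=2)]   # equally likely outcomes
X = {fam for fam in families if "G" in fam}                # at least one girl
Y = {"GG"}                                                 # two girls


def p(event):
    """Probability of a set of equally likely outcomes."""
    return Fraction(len(event), len(families))


def cond(a, b):
    """Conditional probability P(a | b) = P(a and b) / P(b)."""
    return p(a & b) / p(b)


print(p(X & Y) == p(Y & X), p(X.union(Y)) == p(Y.union(X)))
print(f"P(X|Y) = {cond(X, Y)}, P(Y|X) = {cond(Y, X)}")
```

Two girls guarantees at least one girl (P(X|Y) = 1), but at least one girl gives only a 1 in 3 chance of two.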


Common errors: reversed conditional

• Relates to the Prosecutor’s Fallacy again

– Stewart uses a DNA match example

– What is P(match), i.e. prob. the suspect’s DNA sample matches that from the crime scene, given they are innocent?

– But this is the wrong question; we SHOULD ask:

– What is P(innocent), i.e. prob. the suspect is innocent, given a DNA match?

• Note the Bayesian approach: we can’t calculate the probability of innocence directly, but we can estimate the likelihood of a DNA match and combine it with priors

• Evidence: DNA match of all markers, P(match|innocent) = 1:1,000,000

• BUT the question the jury must answer is P(innocent|match). Priors?

– Genetic history and structure of the population of possible perpetrators

– Typically means the evidence is about as strong as you would get from a match using half the genetic markers while ignoring population structure

– Evidence combines multiplicatively, so using half the markers gives roughly the square root of the full-match probability: ~1:1000 rather than 1:1,000,000
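Why half the markers means a square root: if markers are independent and equally informative (an assumption for illustration), each contributes the same factor q and the full match probability is q raised to the number of markers. The marker count below is hypothetical.

```python
import math

full_match = 1e-6          # P(match | innocent) using all markers, from the slide
n_markers = 10             # hypothetical number of markers, for illustration only

# Assumption: independent, equally informative markers, so full_match = q ** n_markers.
q = full_match ** (1 / n_markers)
half_match = q ** (n_markers / 2)
print(f"all markers: 1 in {1 / full_match:,.0f}; half the markers: 1 in {1 / half_match:,.0f}")
```

The result does not depend on the hypothetical marker count: half the markers always gives the square root of the full-match probability.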


Common errors: reversed conditional

• If P(match|innocent) ~ 1:1,000,000 ignoring population structure, then allowing for it P(match|innocent) ~ 1:1000

• Other priors? Strong local ethnic identity? Many common ancestors within 1-200 yrs (isolated rural areas maybe)?

• Then P(match|innocent) >> 1:1000, maybe 1:100

• Says nothing about innocence directly, but a jury must consider whether the DNA evidence establishes guilt beyond reasonable doubt
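To see how P(match|innocent) turns into the jury’s question P(innocent|match), a prior is needed. This sketch assumes a hypothetical pool of 10,000 equally likely possible perpetrators containing exactly one culprit, and P(match|guilty) = 1; the pool size is an illustrative assumption, not from the slides.

```python
def p_innocent_given_match(pool_size, p_match_innocent):
    """P(innocent | match), assuming exactly one culprit among `pool_size` equally
    likely suspects (hypothetical prior) and P(match | guilty) = 1."""
    prior_guilty = 1 / pool_size
    prior_innocent = 1 - prior_guilty
    numerator = p_match_innocent * prior_innocent
    return numerator / (numerator + 1.0 * prior_guilty)


pool = 10_000   # hypothetical number of possible perpetrators
for rate in (1e-6, 1e-3, 1e-2):
    print(f"P(match|innocent) = {rate:g}: "
          f"P(innocent|match) = {p_innocent_given_match(pool, rate):.3f}")
```

Under this prior, a 1:1,000,000 match leaves about a 1% chance of innocence, but a 1:1000 match leaves roughly 90%: the two conditionals can be very different numbers.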


Aside: the problem with P values

• Significance testing and P values are widespread

• BUT they tell you nothing about the effect you’re interested in (see e.g. Siegfried, 2010)

• All a P value of < 0.05 can say is:

– There is less than a 5% chance of obtaining the observed (or more extreme) result if no real effect exists, i.e. if the null hypothesis is correct

• Two possible conclusions remain:

– i) there is a real effect

– ii) the result is an improbable (1 in 20) fluke

• BUT the P value cannot tell you which is which

• If P > 0.05 there are also two possible conclusions:

– i) there is no real effect

– ii) the test is not capable of discriminating a weak effect

A P value is the probability of an observed (or more extreme) result arising only from chance.

Credit: S. Goodman, adapted by A. Nandy

http://www.sciencenews.org/view/access/id/57253/name/feat_statistics_pvalue_chart.jpg
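The “1 in 20 fluke” can be demonstrated by simulation. This sketch assumes a simple two-sided z-test with known σ = 1 and generates every experiment with the null hypothesis true (no real effect); about 5% of experiments still come out “significant” at P < 0.05.

```python
import math
import random


def two_sided_p(z):
    """Two-sided P value for a standard-normal test statistic z."""
    return math.erfc(abs(z) / math.sqrt(2))


random.seed(1)
n_experiments, n_per_experiment = 20_000, 25
false_alarms = 0
for _ in range(n_experiments):
    # Null hypothesis true: data ~ N(0, 1), so there is no real effect.
    sample = [random.gauss(0.0, 1.0) for _ in range(n_per_experiment)]
    z = (sum(sample) / n_per_experiment) * math.sqrt(n_per_experiment)   # known sigma = 1
    if two_sided_p(z) < 0.05:
        false_alarms += 1

rate = false_alarms / n_experiments
print(f"fraction of 'significant' results with no real effect: {rate:.3f}")
```

Nothing in any single P value distinguishes these false alarms from a real effect.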