Integrating Campus and Regional Grid Infrastructure

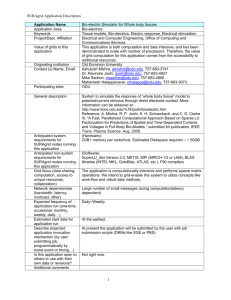

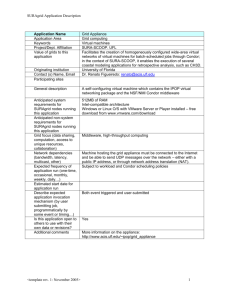

SURAgrid Update
SURA BoF @ Internet2 SMM, April 23, 2007
Mary Fran Yafchak, maryfran@sura.org
SURA IT Program Coordinator, SURAgrid project manager

SURAgrid Vision
[Diagram. Labels: SURAgrid; Heterogeneous Environment to Meet Diverse User Needs; Sample User Portals; Project-Specific View; “MySURAgrid” View; Project-specific tools; SURAgrid Resources; SURAgrid Resources and Applications.]
Resource types shown:
– Institutional Resources (e.g. Current participants)
– Gateways to National Cyberinfrastructure (e.g. Teragrid)
– VO or Project Resources (e.g., SCOOP)
– Industry Partner Coop Resources (e.g. IBM partnership)
– Other externally funded resources (e.g. group proposals)
SURA regional development:
• Develop & manage partnership relations
• Facilitate collaborative project development
• Orchestrate centralized services & support
• Foster and catalyze application development
• Develop training & education (user, admin)
• Other…(Community-driven, over time…)

SURAgrid Growth & “Mix”
(Key: SURA member; not SURA member)
As of April 2004 (25%):
– University of Alabama at Birmingham
– University of Alabama in Huntsville
– University of Florida
– Georgia State University
– University of Michigan
– University of Southern California
– Texas Advanced Computing Center
– University of Virginia
As of April 2005 (21%), the list above plus:
– Tulane University
– University of Arkansas
– George Mason University
– University of South Carolina
– Great Plains Network
– Louisiana State
– Mississippi Center for SuperComputing Research
– Texas Tech
– University of Louisiana at Lafayette
– North Carolina State University
– Texas A&M University
As of April 2006 (23%), the lists above plus:
– University of North Carolina, Charlotte
– University of Kentucky
– Old Dominion University
– Vanderbilt University
– Bowie State University
– Louisiana Tech University
– University of Maryland
As of April 2007 (27%), the lists above plus:
– Kennesaw State University
– Clemson University
– Wake Forest University
Potential with talks in-progress: 33%

SURAgrid Participants (As of March 2007)
[Map of participant sites. Labels: Bowie State, GMU, UMD, UMich, UKY, UVA, GPN, UArk, Vanderbilt, UAH, ODU, USC, Wake Forest, MCSR, SC, UNCC, TTU, Clemson, TACC, LATech, UFL, UAB, TAMU, NC State, LSU, ULL, Kennesaw State, GSU, Tulane. Legend: SURA Member; non-SURA Participant; Resources on the grid.]

And new SURAgrid staff too!
A warm welcome to…
– Linda Akli, Grid Application & Outreach Specialist (as of January 2007)
– Mary Trauner, Contractor in resource deployment & support (as of last week!)
– SURAgrid Infrastructure Specialist (coming soon; interviews in progress…)

SURAgrid Application Growth
Five teams showcased their applications at I2 FMM in Dec 06:
– GSU: Multiple Genome Alignment on the Grid
– UAB: Dynamic BLAST
– ODU: Bioelectric Simulator for Whole Body Tissues
– NCState: Simulation-Optimization for Threat Management in Urban Water Systems
– UNC: Storm Surge Modeling with ADCIRC
Documentation
– Application “glossies” (see handouts)
– Running Your Application on SURAgrid, v2
  • http://www.sura.org/programs/docs/RunAppsSURAgridV2.pdf
And more applications in the pipeline (see handout)

More on SCOOP & SURAgrid
Current collaboration
– SURA IT staff in SCOOP planning & communications
– Active deployment on SURAgrid resources
  • Supported individual model runs in 2006 hurricane season
  • Planning to support integrated model runs in 2007
Future collaboration?
– SURA IT & SCOOP management seeking to increase resources for SCOOP-SURAgrid integration
– Areas of potential co-development:
  • Event-driven resource scheduling
  • Data storage, discovery, redundancy, etc. (“data grid”)
  • Secure multi-level access (Integrated portals, tiered PKI?)
  • Access to computation & facilities for high quality visualization

SURAgrid Resource growth
Contributors about the same but nature is changing…
– Several test resources being replaced by larger resources, or those from production environments
– Several institutions now contributing multiple resources
New additions
– GSU’s IBM P575 (first from the SURAgrid-IBM partnership)
  • LSU & TAMU integrations pending…
– LSU’s SuperMike
  • See http://www.hpcwire.com/hpc/1320723.html
– Production cluster from UMich (also supports ATLAS)
  • See https://hep.pa.msu.edu/twiki/bin/view/AGLT2
Total combined capacity as of March 2007: 1991 CPUs for a total of approximately 10.5 teraflops, with 4.6 terabytes of storage.

Infrastructure Development
Updates to SURAgrid portal (maintained by TACC):
– Improvements in user documentation
– Enhanced system detail & resources “at a glance”
– Test scripts at the service level
– See Documentation & Resource Monitor at gridportal.sura.org
Access management (developed by UVA):
– Tiered-PKI being designed to provide multiple levels of assurance within SURAgrid
– Plans underway for most critical but affordable components while seeking funding for the rest
– SURAgrid CA (at current LoA), strong authentication for site administrators, foundational policy documentation

Infrastructure Development
New! SURAgrid Accounting Working Group to address the immediate need for grid-wide views of resource and application activity
– Participants: UAB, GMU, GSU, LSU, UMich, ODU, TAMU, TTU; SURA facilitating
New! SURAgrid “stack packaging” task force
– TTU leading, with participation from GSU, GMU, LSU, UAB
And with growth…we need things like:
– More formal governance process
– Increased need to find the means to expand & sustain the effort

Governance & Decision-Making
SURAgrid Project Planning Working Group
– Established at Sept 2006 In-Person Meeting to develop governance options for SURAgrid
– Participants:
  • Linda Akli, SURA
  • Gary Crane, SURA
  • Steve Johnson, Texas A&M University
  • Sandi Redman, University of Alabama in Huntsville
  • Don Riley, University of Maryland & SURA IT Fellow
  • Mike Sachon, Old Dominion University
  • Srikanth Sastry, Texas A&M University
  • Art Vandenberg, Georgia State University
  • Mary Fran Yafchak, SURA

Governance Overview
To date SURAgrid has used consensus-based decision making with SURA facilitating
Maturity & investment require more formal structure
– Ensure those investing have appropriate role in governance
– Support sustainable growth & active participation
Suggested initial classes of membership
– Contributing Member: HE or related org contributing significant resources to advance SURAgrid infrastructure
  • SURA is contributing member by definition
– Participating Member: HE or related org participating in SURAgrid activities other than Contributing Member
– Partnership Member: Entity (org, commercial, non-HE…) with strategic relationship with SURAgrid

Proposed Governance Structure
Formation of SURAgrid Governance Committee
– Primary governing body, elected by SURAgrid Contributing Members
– Will act on behalf of SURAgrid community
  • Guidance, Facilitation, Reporting
Initial SURAgrid Governance Committee will have 9 members:
– 8 elected by current SURAgrid Participating Organizations, 1 appointed by SURA
– Current SURAgrid Participating Organizations defined as all those participating as of December 31, 2006

Transitioning to New Governance
– SURAgrid Participating Organizations designate a SURAgrid lead representative – Done
– New governance process/structure approved by SURA IT Steering Group – Done
– New governance process/structure approved by vote of SURAgrid Participating leads – Done
– Call for nominations for SURAgrid Governance Committee candidates – Done
– Nominations will be accepted through midnight April 28 – In progress
– Election of SURAgrid Governance Committee members is expected to be completed by May 12

Sustaining the effort, Part 1
Continuing to seek external funding for SURAgrid through collaborative proposal development
– SURA-led NSF STCI (Strategic Technologies for Cyberinfrastructure) proposal (under review)
  • Expanded grid-wide operations & support, Portal maintenance & development, Development of two-tiered PKI (Public Key Infrastructure)
  • Submission included 9 letters of support from other SURAgrid sites as well as Teragrid, SCOOP & NCSA
– SURAgrid as grid service provider in NSF CCLI (Course, Curriculum & Laboratory Improvement) proposal led by UNC-C (Barry Wilkinson) & UArk (Amy Apon) (under review)
  • Other partners: LSU, GMU, Lewis & Clark University, UNC Wilmington, Southern University & Howard University

Sustaining the effort, Part 2
SURAgrid included as participant in LSU’s Nov 06 submission to NSF 06-599 “High-Performance Computing for Science and Engineering”
SURAgrid was also a partner in LSU’s Feb 07 proposal submission to the NSF 06-573 “Leadership-Class System Acquisition - Creating a Petascale Computing Environment for Science and Engineering” program.
SURA staff have also been working with John Connolly (Director of the University of Kentucky Center for Computational Sciences) and several IT leaders from SURA’s EPSCoR community to explore a SURA program that would leverage SURAgrid and SURAgrid’s Corporate Partners program to create a cyberinfrastructure program targeted at the SURA EPSCoR community.

Now to Gary (Whew!) for more detail on corporate partnerships…