ppt - Columbia University


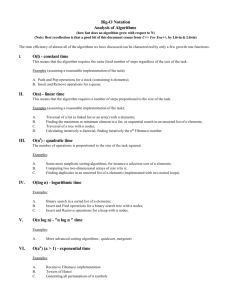

Algorithms and Complexity

Zeph Grunschlag

Copyright © Zeph Grunschlag, 2001-2002.

Announcements

HW 3 Due

First ½ of HW4 available.

Only the first 6 problems of HW4 appear on the Midterm.

Midterm 1, two weeks from today.

Late HW4s can't be accepted after Friday, 3/1 (solutions go up early).

L8


Agenda

Section 1.8: Growth of Functions

Big-O

Big-Ω (Omega)

Big-Θ (Theta)

Section 2.1: Algorithms

Defining Properties

Pseudocode

Section 2.2: Complexity of Algorithms


Section 1.8

Big-O, Big-Ω, Big-Θ

Big-O notation is a way of comparing functions. It is useful for computing algorithmic complexity, i.e. the amount of time that it takes for a computer program to run.

Notational Issues

The notation is unconventional. It looks as though an equation is being stated; however, this is not the intention.

EG: $3x^3 + 5x^2 - 9 = O(x^3)$

This doesn't mean that there's a function $O(x^3)$ and that it equals $3x^3 + 5x^2 - 9$. Rather, the example is read as:

“$3x^3 + 5x^2 - 9$ is big-Oh of $x^3$”

which actually means:

“$3x^3 + 5x^2 - 9$ is asymptotically dominated by $x^3$”

Intuitive Notion of Big-O

Asymptotic notation captures the behavior of functions for large values of x.

EG: The dominant term of $3x^3 + 5x^2 - 9$ is $x^3$.

For small x it is not clear why $x^3$ dominates more than $x^2$ or even x; however, as x becomes larger and larger, the other terms become insignificant, and only $x^3$ remains in the picture:

[Figures: $y = 3x^3 + 5x^2 - 9$, $y = x^3$, $y = x^2$ and $y = x$, plotted over the domains [0,2], [0,5], [0,10] and [0,100]]

In fact, $3x^3 + 5x^2 - 9$ is smaller than $5x^3$ for large enough values of x:

[Figure: $y = 5x^3$, $y = 3x^3 + 5x^2 - 9$, $y = x^2$ and $y = x$]

Big-O. Formal Definition

The intuition motivates the idea that a function f(x) is asymptotically dominated by g(x) if some constant multiple of g(x) is actually bigger than f(x) for all large x. Formally:

DEF: Let f and g be functions with domain $\mathbb{R}_{\ge 0}$ or $\mathbb{N}$ and codomain $\mathbb{R}$. If there are constants C and k such that

$\forall x > k,\ |f(x)| \le C\,|g(x)|$

i.e., past k, f is less than or equal to a multiple of g, then we write:

$f(x) = O(g(x))$

Common Misunderstanding

It's true that $3x^3 + 5x^2 - 9 = O(x^3)$, as we'll prove shortly. However, the following are also true:

$3x^3 + 5x^2 - 9 = O(x^4)$

$x^3 = O(3x^3 + 5x^2 - 9)$

$\sin(x) = O(x^4)$

NOTE: In CS, use of big-O typically involves mentioning only the most dominant term:

“The running time is $O(x^{2.5})$”

Mathematically, big-O is more subtle. It's a way of comparing arbitrary pairs of functions.

Big-O. Example

EG: Show that $3x^3 + 5x^2 - 9 = O(x^3)$.

From the previous graphs it makes sense to let C = 5. Let's find k so that $3x^3 + 5x^2 - 9 \le 5x^3$ for x > k:


1. Collect terms: $5x^2 \le 2x^3 + 9$

2. What k will make $5x^2 \le x^3$ past k?

3. k = 5!

4. So for x > 5, $5x^2 \le x^3 \le 2x^3 + 9$

5. Solution: C = 5, k = 5 (not unique!)
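A witness (C, k) can also be spot-checked numerically. The Java sketch below is illustrative only: the class name `BigOWitness`, the helper `holdsOnSamples`, the sampling range and the step size are hypothetical choices. Sampling finitely many points cannot prove the inequality for every x > k (the algebra above does that), but it quickly exposes a bad choice of C or k.

```java
import java.util.function.DoubleUnaryOperator;

class BigOWitness {
    // Spot-checks |f(x)| <= C * |g(x)| at x = k + step, k + 2*step, ..., up to xMax.
    // Passing is evidence for the witness (C, k), not a proof: only finitely many x are tried.
    static boolean holdsOnSamples(DoubleUnaryOperator f, DoubleUnaryOperator g,
                                  double C, double k, double xMax, double step) {
        for (double x = k + step; x <= xMax; x += step) {
            if (Math.abs(f.applyAsDouble(x)) > C * Math.abs(g.applyAsDouble(x))) {
                return false;   // found an x > k where the inequality fails
            }
        }
        return true;
    }

    public static void main(String[] args) {
        DoubleUnaryOperator f = x -> 3 * x * x * x + 5 * x * x - 9;
        DoubleUnaryOperator g = x -> x * x * x;
        System.out.println(holdsOnSamples(f, g, 5, 5, 1000, 0.5));  // prints true
        System.out.println(holdsOnSamples(f, g, 3, 5, 1000, 0.5));  // prints false: C = 3 is too small
    }
}
```

With C = 3 the check fails at the very first sample, since $3x^3 + 5x^2 - 9 > 3x^3$ whenever $5x^2 > 9$.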


Big-O. Negative Example

$x^4 \neq O(3x^3 + 5x^2 - 9)$:

Show that no C, k can exist such that past k, $C(3x^3 + 5x^2 - 9) \ge x^4$ is always true.

The easiest way is with limits (yes, Calculus is good to know):


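$$\lim_{x\to\infty} \frac{x^4}{C(3x^3 + 5x^2 - 9)} = \lim_{x\to\infty} \frac{x}{C(3 + 5/x - 9/x^3)} = \lim_{x\to\infty} \frac{x}{C(3 + 0 - 0)} = \frac{1}{3C}\lim_{x\to\infty} x = \infty$$

Thus no matter what C is, $x^4$ will always catch up to and eclipse $C(3x^3 + 5x^2 - 9)$. ∎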
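The limit argument suggests a mechanical check: whatever witnesses C and k someone proposes, scanning x = k+1, k+2, … must eventually reach a point where $x^4 > C(3x^3 + 5x^2 - 9)$. A minimal Java sketch follows; the class and method names are hypothetical, and double arithmetic is adequate for the moderate values in the example.

```java
class NegativeExample {
    // For proposed witnesses C > 0 and k, returns the first integer x > k at which
    // x^4 <= C * (3x^3 + 5x^2 - 9) fails. The quotient x^4 / (C(3x^3 + 5x^2 - 9))
    // grows without bound, so the loop always terminates.
    static long firstViolation(double C, long k) {
        for (long x = k + 1; ; x++) {
            double d = x;
            double lhs = d * d * d * d;
            double rhs = C * (3 * d * d * d + 5 * d * d - 9);
            if (lhs > rhs) {
                return x;
            }
        }
    }

    public static void main(String[] args) {
        System.out.println(firstViolation(1, 10));    // prints 11
        System.out.println(firstViolation(100, 10));  // prints 302
    }
}
```

For large C the violation shows up at roughly x = 3C, matching the factor $x/(3C)$ in the limit.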


Big-O and limits

Knowing how to use limits can help to prove big-O relationships:

LEMMA: If the limit as $x \to \infty$ of the quotient $|f(x)/g(x)|$ exists (i.e., is finite), then $f(x) = O(g(x))$.

EG: $3x^3 + 5x^2 - 9 = O(x^3)$. Compute:

$$\lim_{x\to\infty} \frac{3x^3 + 5x^2 - 9}{x^3} = \lim_{x\to\infty} \frac{3 + 5/x - 9/x^3}{1} = 3$$

…so the big-O relationship is proved.
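The lemma can be watched in action numerically. In this hypothetical Java snippet, `ratio` evaluates the quotient from the example at growing x; the values settle toward 3, the finite limit that makes the lemma apply (any C larger than 3 then works as a witness once k is large enough).

```java
class LimitRatio {
    // |f(x) / g(x)| for f(x) = 3x^3 + 5x^2 - 9 and g(x) = x^3
    static double ratio(double x) {
        return Math.abs((3 * x * x * x + 5 * x * x - 9) / (x * x * x));
    }

    public static void main(String[] args) {
        for (double x = 10; x <= 1e6; x *= 10) {
            System.out.println("x = " + x + "  ratio = " + ratio(x));
        }
    }
}
```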

Big-Ω and Big-Θ

Big-Ω is just the reverse of big-O, i.e.

$f(x) = \Omega(g(x)) \iff g(x) = O(f(x))$

So big-Ω says that asymptotically f(x) dominates g(x).

Big-Θ says that both functions dominate each other, so they are asymptotically equivalent, i.e.

$f(x) = \Theta(g(x)) \iff f(x) = O(g(x)) \wedge f(x) = \Omega(g(x))$

Synonym for $f = \Theta(g)$: “f is of order g”

Useful facts

Any polynomial is big-Θ of its largest term

EG: $x^4/100000 + 3x^3 + 5x^2 - 9 = \Theta(x^4)$

The sum of two functions is big-O of the bigger one

EG: $x^4 \ln(x) + x^5 = O(x^5)$

Non-zero constants are irrelevant:

EG: $17x^4 \ln(x) = O(x^4 \ln(x))$

Big-O, Big-Ω, Big-Θ. Examples

Q: Order the following from smallest to largest asymptotically. Group together all functions which are big-Θ of each other:

$x + \sin x,\ \ln x,\ x + \sqrt{x},\ \frac{1}{x},\ 13 + \frac{1}{x},\ 13 + x,\ e^x,\ x^e,\ x^x,$

$(x + \sin x)(x^{20} + 102),\ x \ln x,\ x(\ln x)^2,\ \log_2 x$

A:

1. $\frac{1}{x}$

2. $13 + \frac{1}{x}$

3. $\ln x$, $\log_2 x$ (change of base formula)

4. $x + \sin x$, $x + \sqrt{x}$, $13 + x$

5. $x \ln x$

6. $x(\ln x)^2$

7. $x^e$

8. $(x + \sin x)(x^{20} + 102)$

9. $e^x$

10. $x^x$

Incomparable Functions

Given two functions f(x) and g(x), it is not always the case that one dominates the other; when neither does, f and g are asymptotically incomparable.

E.G: $f(x) = |x^2 \sin(x)|$ vs. $g(x) = 5x^{1.5}$

[Figures: $y = x^2$, $y = |x^2 \sin(x)|$ and $y = 5x^{1.5}$, plotted for $0 \le x \le 50$ (y up to 2500) and for $0 \le x \le 200$ (y up to $4 \times 10^4$)]
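A quick numerical probe, sketched in Java with hypothetical names, makes the incomparability concrete. At the peaks $x = (2k + \tfrac{1}{2})\pi$, where $|\sin x| = 1$, the quotient f/g equals $\sqrt{x}/5$ and grows without bound, so f is not O(g). At the zeros $x = k\pi$ the quotient collapses toward 0, so g is not O(f). Because Math.PI only approximates π, sin(kπ) comes out tiny rather than exactly 0.

```java
class Incomparable {
    static double f(double x) { return Math.abs(x * x * Math.sin(x)); }  // |x^2 sin(x)|
    static double g(double x) { return 5 * Math.pow(x, 1.5); }          // 5x^1.5

    public static void main(String[] args) {
        for (int k = 10; k <= 100000; k *= 10) {
            double peak = (2 * k + 0.5) * Math.PI;  // |sin| = 1 here, so f/g = sqrt(x)/5
            double zero = k * Math.PI;              // sin is (numerically almost) 0 here
            System.out.printf("k=%d  f/g at peak = %.3f  f/g at zero = %.3e%n",
                    k, f(peak) / g(peak), f(zero) / g(zero));
        }
    }
}
```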

Example for Section 1.8

Link to an example proving big-Omega of a sum.

Section 2.1

Algorithms and Pseudocode

By now, most of you are well adept at understanding, analyzing and creating algorithms. This is what you did in your (prerequisite) one semester of programming!

DEF: An algorithm is a finite set of precise instructions for performing a computation or solving a problem.

Synonyms for an algorithm are: program, recipe, procedure, and many others.

Hallmarks of Algorithms

The textbook lays out 7 properties that an algorithm should have to satisfy the notion of “precise instructions” in such a way that no ambiguity arises:

1. Input. Spell out what the algorithm eats.

2. Output. Spell out what sort of stuff it spits out.

3. Determinism (or Definiteness). At each point in the computation, we should be able to tell exactly what happens next.

4. Correctness. The algorithm should do what it claims to be doing.

5. Finiteness. A finite number of steps for the computation, no matter what the input.

6. Effectiveness. Each step itself should be doable in a finite amount of time.

7. Generality. The algorithm should be valid on all possible inputs.

Q: Which of the conditions guarantee that an algorithm has no infinite loops?

A: Finiteness & Effectiveness

Pseudocode

Starting out, students often find it confusing to write pseudocode, but find it easy to read pseudocode. Sometimes students are so anti-pseudocode that they'd rather write compiling code in homework and exams. This is a major waste of time!

I strongly suggest you use pseudocode. You don't have to learn the pseudo-Pascal in Appendix 2 of the textbook.

A possible alternative: pseudo-Java…

Pseudo-Java

Start by representing an algorithm as a Java method. Clearly, Java-specific modifiers such as final, static, etc. are not worth worrying about. So consider the method:

    int f(int[] a){
        int x = a[0];
        for(int i=1; i<a.length; i++){
            if(x > a[i])
                x = a[i];
        }
        return x;
    }

Q: What does this algorithm do?


A: It finds the minimum of a sequence of numbers.

Q: Is there an input that causes Java to throw an exception?

A: Yes, arrays of length 0.

This illustrates one of the dangers of relying on real code: it forces you to worry about language-specific technicalities, exceptions, etc.

Q: Are there any statements that are too long-winded, or confusing to non-Java people?

A: Yes, several:

1. int f(int[] a)

Non-Java people may not realize that this says that the input should be an array of ints.

FIX (also helps with some problems below): spell out exactly how the array looks and use subscripts, which also tells us the size of the array:

“integer f( integer_array (a₁, a₂, …, aₙ) )”

The type declaration notation “integer f()” is a succinct way of specifying the output.

2. a[0]

Brackets are annoying in index evaluation. Why are we starting at 0?

FIX: Use subscripts, “aᵢ”, and start with index 1.

3. for(int i=1; i<a.length; i++)

The counter in the for-loop is obviously an int: change “int i=1” to “i=1”.

It's really annoying to constantly have to refer to the array length using the period operator. Subscripts fixed this: now “a.length” becomes “n”.

Can't we just directly iterate over the whole sequence and not worry about “i++”? (That way we don't have to remember peculiar for-loop syntax.) Change the whole statement to “for(i=1 to n)”.

Q: Were there any unintended limitations that using Java created?

A: Yes. We restricted the input/output to be of type int. We probably wanted the algorithm to work on arbitrary integers, not just those that can be encapsulated in 32 bits!

Java solution: use the class java.math.BigInteger

…NOT!

Way too gnarly! Just output “integer” instead of int.

Resulting pseudocode (also with the semicolons removed):

    integer f(integer_array (a₁, a₂, …, aₙ) ){
        x = a₁
        for(i = 2 to n){
            if(x > aᵢ)
                x = aᵢ
        }
        return x
    }
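For contrast, here is roughly what this pseudocode stands for in compiling Java once “integer” really means an arbitrary integer. It is a sketch assuming java.math.BigInteger values passed in a java.util.List; the class name `MinFinder` is hypothetical. The extra ceremony (0-based indexing, `compareTo` instead of `>`, an explicit guard for n = 0) is exactly what the pseudocode lets us skip.

```java
import java.math.BigInteger;
import java.util.List;

class MinFinder {
    // Returns the minimum of a1, ..., an (n >= 1), mirroring the pseudocode.
    static BigInteger f(List<BigInteger> a) {
        if (a.isEmpty()) {
            throw new IllegalArgumentException("need n >= 1");  // the length-0 case
        }
        BigInteger x = a.get(0);                  // x = a1 (Java lists are 0-indexed)
        for (int i = 1; i < a.size(); i++) {      // i = 2 to n in 1-indexed terms
            if (x.compareTo(a.get(i)) > 0) {      // if (x > ai)
                x = a.get(i);
            }
        }
        return x;
    }
}
```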

Ill-defined Algorithms.

Examples.

Q: What's wrong with each example below?

1. integer power(a, b) {…rest is okay…}

2. integer power(integer a, rational b) {…rest is okay…}

3.

    boolean f(real x){        // “real” = real number
        for(each digit d in x){
            if(d == 0)
                return true;
        }
        return false;
    }

A:

1. Input ill-defined (the types of a and b are not specified).

2. Must be incorrect, as the output of a fractional power such as $2^{1/2}$ is usually not an integer.

3. The number of steps is infinite on certain inputs. EG: 2.121212121212121212…

Q: What's wrong with:

    String f(integer a) {
        if(a == 0){
            return “Zero”
            OR return “The cardinality of {}”
        }
    }

A: Two problems:

Non-deterministic: what should we output when reading 0?

Non-general: what happens to the input 1?

Algorithm for Surjectivity

Give an algorithm that determines whether a function from one finite set to another is onto.

Hint: Assume the form of the sets is as simple as possible, e.g. sets of numbers starting from 1. The algorithm doesn't depend on the type of elements, since we could always use a “look-up table” to convert between one form and another. This assumption also creates cleaner, clearer pseudocode.

    boolean isOnto( function f: (1, 2,…, n) → (1, 2,…, m) ){
        if( m > n ) return false    // can't be onto
        soFarIsOnto = true
        for( j = 1 to m ){
            soFarIsOnto = false
            for(i = 1 to n ){
                if ( f(i ) == j )
                    soFarIsOnto = true
                if( !soFarIsOnto ) return false;
            }
        }
        return true;
    }
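A Java sketch of this j-by-j search follows. It assumes, hypothetically, that f is passed as an int array with `f[i - 1]` holding f(i) and every value in 1..m; the class name is also hypothetical. Here the “was j hit?” test runs once the scan over every i has finished. Running that test inside the inner loop would reject onto functions such as f(1) = 2, f(2) = 1, because j = 1 is not found at i = 1.

```java
class Surjectivity {
    // Hypothetical representation: f[i - 1] holds f(i) for i = 1..n, each value in 1..m.
    static boolean isOnto(int[] f, int m) {
        int n = f.length;
        if (m > n) return false;                 // can't be onto
        for (int j = 1; j <= m; j++) {
            boolean soFarIsOnto = false;         // has some i with f(i) == j turned up yet?
            for (int i = 1; i <= n; i++) {
                if (f[i - 1] == j) soFarIsOnto = true;
            }
            if (!soFarIsOnto) return false;      // j is never hit, so f is not onto
        }
        return true;                             // every j in 1..m was hit
    }
}
```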

Improved Algorithm for Surjectivity

    boolean isOntoB( function f: (1, 2,…, n) → (1, 2,…, m) ){
        if( m > n ) return false            // can't be onto
        for( j = 1 to m )
            beenHit[ j ] = false;            // does f ever output j ?
        for(i = 1 to n )
            beenHit[ f(i ) ] = true;
        for(j = 1 to m )
            if( !beenHit[ j ] )
                return false;
        return true;
    }
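The improved algorithm translates just as directly into Java. This sketch assumes, hypothetically, that f is passed as an int array with `f[i - 1]` holding f(i) and every value in 1..m; `beenHit` gets an unused slot 0 so that it can be indexed by j = 1..m as in the pseudocode.

```java
class SurjectivityB {
    // Hypothetical representation: f[i - 1] holds f(i) for i = 1..n, each value in 1..m.
    static boolean isOntoB(int[] f, int m) {
        int n = f.length;
        if (m > n) return false;                  // can't be onto
        boolean[] beenHit = new boolean[m + 1];   // slot 0 unused; beenHit[j]: does f ever output j?
        for (int j = 1; j <= m; j++) {
            beenHit[j] = false;                   // redundant in Java (arrays start out false); mirrors the pseudocode
        }
        for (int i = 1; i <= n; i++) {
            beenHit[f[i - 1]] = true;
        }
        for (int j = 1; j <= m; j++) {
            if (!beenHit[j]) return false;
        }
        return true;
    }
}
```

The three loops run one after another rather than nested, which is the source of the speed-up.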

Section 2.2

Algorithmic Complexity

Q: Why is the second algorithm better than the first?

A: Because the second algorithm runs faster. Even under the criterion of code length, algorithm 2 is better.

Let's see why:

Running time of 1st algorithm

    boolean isOnto( function f: (1, 2,…, n) → (1, 2,…, m) ){
        if( m > n ) return false            // 1 step OR:
        soFarIsOnto = true                  // 1 step (assignment)
        for( j = 1 to m ){                  // m loops: 1 increment plus
            soFarIsOnto = false             //   1 step (assignment)
            for(i = 1 to n ){               //   n loops: 1 increment plus
                if ( f(i ) == j )           //     1 step, possibly leads to:
                    soFarIsOnto = true      //       1 step (assignment)
                if( !soFarIsOnto )          //     1 step, possibly leads to:
                    return false            //       1 step (return)
            }
        }
        return true;                        // possibly 1 step
    }

WORST-CASE running time:

Number of steps = 1 (if $m > n$) OR $1 + 1 + m\cdot\big(1 + 1 + n\cdot(1 + 1 + 1 + 1 + 1) + 1\big) = 5mn + 3m + 2$

Running time of 2nd algorithm

    boolean isOntoB( function f: (1, 2,…, n) → (1, 2,…, m) ){
        if( m > n ) return false            // 1 step OR:
        for( j = 1 to m )                   // m loops: 1 increment plus
            beenHit[ j ] = false            //   1 step (assignment)
        for(i = 1 to n )                    // n loops: 1 increment plus
            beenHit[ f(i ) ] = true         //   1 step (assignment)
        for(j = 1 to m )                    // m loops: 1 increment plus
            if( !beenHit[ j ] )             //   1 step, possibly leads to:
                return false                //     1 step
        return true                         // possibly 1 step
    }

WORST-CASE running time:

Number of steps = 1 (if $m > n$) OR $1 + m\cdot(1 + 1) + n\cdot(1 + 1) + m\cdot(1 + 1 + 1) + 1 = 5m + 2n + 2$

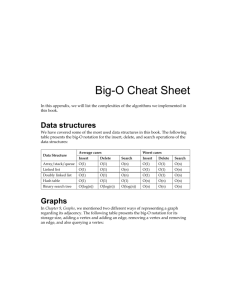

Comparing Running Times

The first algorithm requires at most $5mn+3m+2$ steps, while the second algorithm requires at most $5m+2n+2$ steps. In both cases, for worst-case times we can assume that $m \le n$, as this is the longer-running case; both counts are then largest when $m = n$. This reduces the respective running times to $5n^2+3n+2$ and $5n+2n+2 = 7n+2$.

To tell which algorithm is better, find the most important terms using big-Θ notation:

$5n^2+3n+2 = \Theta(n^2)$: quadratic time complexity

$7n+2 = \Theta(n)$: linear time complexity (WINNER)

Q: Any issues with this line of reasoning?

Comparing Running Times. Issues

1. It is inaccurate to summarize the running times $5n^2+3n+2$ and $7n+2$ only by their biggest terms. For example, for n = 1 the two algorithms take 10 and 9 steps respectively, almost the same.

2. It is inaccurate to count the number of “basic steps” without measuring how long each basic step takes. Maybe the basic steps of the second algorithm are much longer than those of the first algorithm, so that in actuality the first algorithm is faster.

3. Surely the running time depends on the platform on which it is executed. E.g., C code on a Pentium IV will execute much faster than Java on a Palm Pilot.

4. The running time calculations counted many operations that may not occur. In fact, a close look reveals that we can be certain the calculations were an over-estimate, since certain conditional statements were mutually exclusive. Perhaps we overestimated so much that algorithm 1 was actually a linear-time algorithm.

Comparing Running Times.

Responses

1. Big-Θ inaccurate: Quadratic time $Cn^2$ will always take longer than linear time $Dn$ for large enough input, no matter what the positive constants C and D are; furthermore, it is the large input sizes that give us the real problems, so they are of most concern.
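Plugging numbers into the worst-case counts makes this response concrete. The Java sketch below (class name hypothetical) evaluates $5mn + 3m + 2$ and $5m + 2n + 2$ from the step-counting slides at m = n, i.e. $5n^2 + 3n + 2$ and $7n + 2$: the two are nearly equal at n = 1, but at n = 100 the quadratic count is already about 70 times larger.

```java
class StepCounts {
    // Worst-case step counts from the slides, with m = n.
    static long first(long n)  { return 5 * n * n + 3 * n + 2; }  // 5mn + 3m + 2
    static long second(long n) { return 5 * n + 2 * n + 2; }      // 5m + 2n + 2 = 7n + 2

    public static void main(String[] args) {
        for (long n : new long[] {1, 10, 100, 1000}) {
            System.out.println("n = " + n + ": first = " + first(n) + ", second = " + second(n));
        }
    }
}
```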

2. “Basic steps” counting inaccurate: True, we have to define what a basic step is. EG: Does multiplying numbers constitute a basic step or not? Depending on the computing platform and the type of problem (e.g. multiplying ints vs. multiplying arbitrary integers), multiplication may take a fixed amount of time, or not. When this is ambiguous, you'll be told explicitly what a basic step is.

Q: What were the basic steps in the previous algorithms?

A: Basic steps:

Assignment

Increment

Comparison

Negation

Return

Random array access

Function output access

Each may in fact require a different number of bit operations, the actual operations that can be carried out in a single cycle on a processor. However, since each operation is itself $O(1)$, i.e. takes a constant amount of time, asymptotically it is as if each step were in fact 1 time-unit long!

3. Platform dependence: It turns out there is usually a constant multiple between the various basic operations in one platform and another. Thus, big-O erases this difference as well.

4. Running time is too pessimistic: It is definitely true that when m > n the estimates are overkill. Even when m = n there are cases which run much faster than the big-Θ estimate. However, since we can always find inputs which do achieve the big-Θ estimates (e.g. when f is onto), and the worst-case running time is defined in terms of the worst possible inputs, the estimates are valid.

Worst Case vs. Average Case

The time complexity described above is worst-case complexity. This kind of complexity is useful when one needs absolute guarantees for how long a program will run. The worst-case complexity for a given n is computed from the input of size n that takes the longest.

On the other hand, if a method needs to be run repeatedly many times, average-case complexity is most suitable. The average-case complexity is the average complexity over all possible inputs of a given size. Computing average-case complexity usually requires probability theory.

Q: Does one of the two surjectivity algorithms perform better on average than in the worst case?

A: Yes. The first algorithm performs better on average. This is because surjective functions are actually rather rare, and the algorithm terminates early when a non-hit element is found near the beginning.

With probability theory we will be able to show that when m = n, the first algorithm has $O(n)$ average complexity.
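One ingredient of this claim is easy to check: when m = n, a function from {1,…,n} to itself is onto exactly when it is a bijection, so n! of the $n^n$ possible functions are onto. The hypothetical Java helper below computes that fraction, $n!/n^n$, as the product of i/n for i = 1..n; it shrinks quickly, to under 0.04 at n = 5 and under 0.0004 at n = 10.

```java
class OntoFraction {
    // Fraction of the n^n functions {1..n} -> {1..n} that are onto: n! / n^n.
    static double fractionOnto(int n) {
        double p = 1.0;
        for (int i = 1; i <= n; i++) {
            p *= (double) i / n;   // multiply by i/n to keep intermediate values small
        }
        return p;
    }

    public static void main(String[] args) {
        for (int n = 1; n <= 10; n++) {
            System.out.printf("n = %d: fraction onto = %.6f%n", n, fractionOnto(n));
        }
    }
}
```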

Big-O

A Grain of Salt

Big-O notation gives a good first guess for deciding which algorithms are faster. In practice, the guess isn't always correct. Consider the time functions $n^6$ vs. $1000n^{5.9}$. Asymptotically, the second is better. One often catches such examples of purported advances in theoretical computer science publications. The following graph shows the relative performance of the two algorithms:

[Figure: running time in days vs. input size n for $T(n) = 1000n^{5.9}$ and $T(n) = n^6$, assuming each operation takes a nanosecond, i.e. the computer runs at 1 GHz]

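In fact, $1000n^{5.9}$ only becomes smaller than $n^6$ once $1000n^{5.9} = n^6$, i.e.:

$1000 = n^{0.1}$, i.e.:

$n = 1000^{10} = 10^{30}$

Even at a single operation per input item, that is $10^{30}$ operations $= 10^{30}/10^{9} = 10^{21}$ seconds

$\approx 10^{21}/(3\times10^{7}) \approx 3\times10^{13}$ years

$\approx 3\times10^{13}/(2\times10^{10})$

$\approx 1500$ universe lifetimes! (The actual running time there, $n^6 = 10^{180}$ operations, is vastly larger still.)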
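The arithmetic can be replayed in a short Java sketch (class and method names hypothetical). Working with base-10 logarithms avoids overflow when looking at the actual cost at the crossover, $n^6 = 10^{180}$ operations. The constants $3\times10^7$ seconds per year and $2\times10^{10}$ years per universe lifetime are the rough figures from the slide.

```java
class GrainOfSalt {
    // log10 of the crossover point: 1000 * n^5.9 = n^6  <=>  n^0.1 = 1000  <=>  n = 1000^10.
    static double log10Crossover() {
        return 10 * Math.log10(1000);
    }

    // Years needed for 10^log10Ops operations at 10^9 operations per second,
    // using the rough figure of 3 * 10^7 seconds per year.
    static double years(double log10Ops) {
        return Math.pow(10, log10Ops) / 1e9 / 3e7;
    }

    public static void main(String[] args) {
        double log10N = log10Crossover();
        double y = years(log10N);  // n operations: one per input item
        System.out.printf("n = 10^%.0f%n", log10N);
        System.out.printf("n operations: %.1e years, %.0f universe lifetimes%n", y, y / 2e10);
        // The actual cost there is n^6 operations; report its running time as a power of ten.
        System.out.printf("n^6 operations: about 10^%.0f seconds%n", 6 * log10N - 9);
    }
}
```

The sketch reports about 1667 lifetimes rather than 1500 only because it does not round $3.3\times10^{13}$ years down to $3\times10^{13}$ first.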