Introduction to AI

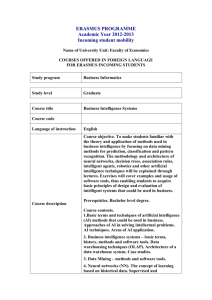

Artificial Intelligence

CPSC 327

Week 1

The Astonishing Hypothesis

(with apologies to Francis Crick)

1

Towards a Definition

• The name of the field is composed of two

words:

– Artificial

• Art

• Artifact

• Artifice

• Article

– Intelligence

What do these have in common?

2

So, AI is the construction of

intelligent systems

• “Artificial Intelligence (AI) may be defined as the branch of computer science that is concerned with the automation of intelligent behavior.” p. 1

• Key notion: behavior

• Classic def. of AI doesn’t care how it’s composed.

• Intelligent systems behave intelligently.

3

But what’s intelligence?

4

Some indicators

• Ability to do mathematics

• Ability to design a machine

• Ability to play chess

• Ability to speak

• Ability to write an essay

5

What do all of these have in

common?

6

AI has concentrated on those

things that we get rewarded for in

school.

7

A more precise set of criteria*

1. Intelligence must entail a set of skills to solve genuine problems valued across cultures

2. Potential isolation by brain damage: “to the extent that a particular faculty can be destroyed as a result of head trauma, its isolation from other faculties seems likely.”

*Howard Gardner, Frames of Mind, Basic Books, 1993, pp. 62-67

8

Two more criteria

3. Existence of prodigies. That is, the skills may be plotted along a standard normal distribution. Some people are way out on the right side.

4. Existence of one or more basic information processing operations that deal with specific inputs. “One might go so far as to define a human intelligence as a neural mechanism or computational system which is genetically programmed to be activated or ‘triggered’ by certain kinds of internally or externally presented information.” For example:

– Sensitivity to pitch relations (musicians)

– Ability to see patterns among symbols (mathematicians)

– Ability to imitate bodily movements (athletes)

– Ability to understand emotional and power relations among a group of people (politicians)

– Ability to speak a language (all humans)

9

Two More

5. Evolutionary history and evolutionary

plausibility. “A specific intelligence

becomes more plausible to the extent

that one can locate its evolutionary

antecedents.”

6. Distinctive developmental history—levels

of expertise through which every novice

passes

10

Yet another

7. Support from experimental psychology.

“To the extent that various specific

computational mechanisms…work

together smoothly, experimental

psychology can also help demonstrate

the ways in which modular … abilities

may interact in the execution of complex

tasks.” Psychometric findings are also

relevant.

11

Finally

8. Susceptibility to encoding in a symbol system. “Much

of human representation and communication … takes

place via symbol systems—culturally contrived systems

of meaning which capture important forms of

information. Language, picturing, mathematics are

but three of the symbol systems that have become

important the world over for human survival and

human productivity…Symbol systems may have

evolved in just those cases where there exists a

computational capacity ripe for harnessing….”

12

An Historical Aside

• Newell & Simon, two AI pioneers, formulated the Physical Symbol System Hypothesis in their 1975 Turing Award Lecture:

“A physical symbol system has the necessary and sufficient means for general intelligent action.” (about which, more later).

13

Gardner’s Seven Intelligences

These 8 criteria lead to seven intelligences

• Musical intelligence

• Logical-mathematical intelligence

• Linguistic intelligence

• Spatial intelligence (Kekulé and the benzene ring, artist)

• Bodily-kinesthetic (athlete, dancer, surgeon)

• Intrapersonal—access to one’s own emotional life

(novelist, shaman)

• Interpersonal—ability to read the emotional state of

others (politician, gambler, therapist)

14

The good and bad news

• AI has had lots of success with logical

intelligence

• Less success with linguistic intelligence

• Almost no success with what comes under

the heading of common sense

15

Yet another definition

• AI is the science of making machines do

the sort of things that are done by human

minds (Oxford Companion to Mind)

• Why? I mean, who cares?

16

Five applications

• Build various kinds of intelligent assistants

– Monitor email

– Perform hazardous tasks

– Monitor correct operations of a computer network

– Monitor/rewrite news

• Make computers and other appliances easier to use

• Machine translation

• Intelligent tutors

• Model human cognition

17

Model Human Cognition

• Another Def.

– AI is the study of mental faculties through the

use of computational models

18

Good Points of this definition

1. Stays away from purely human intelligence by talking of mental faculties

• Perceive the world

• Learn, remember, control action

• Create new ideas

• Communicate

• Create the experience of feelings, intentions, self-awareness

2. Introduces the notion of a computational model

19

Fundamental Assumption in AI

• Computational/Representational

Understanding of Mind

– Thinking can best be understood in terms of representational structures in the mind and computational procedures that act on them

– Implication is that the material in which these

are implemented is irrelevant

20

So

• Material of the brain

– Neural cells, signaling by electrical potentials across junctions called synapses

• Material of Computers

– Silicon, copper, electrical impulses organized

to implement the laws of symbolic logic

21

Central Feature of AI

• Materials are irrelevant

• Intelligence implemented in silicon is still

intelligence

• The Turing Test (1950) laid out the ground rules

22

Physical Symbol System Hypothesis

• Allen Newell & Herbert Simon

• “A physical symbol system has the

necessary and sufficient means for general

intelligent action.”

• What is a PSS?

– A program

– A Turing Machine

23

To Explain

• Symbol

– May designate anything

– If it designates something in the world, it has a

semantics

– May be manipulated according to rules and so has a

syntax

• Necessary

– Any system that exhibits general intelligence, will

prove, upon analysis, to be a physical symbol system

24

Further

• Sufficient

– Any physical symbol system of large enough

size can be organized to exhibit general

intelligent action

• General Intelligent Action

– Same scope as human behavior: in any real

situation, behavior appropriate to the ends of

the system and adaptive to the demands of

the environment can occur

25

Example: Language Generation

• Mary hit the ball.

– Letters are symbols for sounds

– Arranged according to the rules of spelling

– To form words

– But, words refer to

• Objects: Mary, John, ball

• Actions: hit

• Relationships: to

• These form the semantics of the sentence

26

• By arranging these words according to

linguistic rules, called syntax, we get

sentences

• But how do we know the rules?

• Language spoken by native speakers is

data. Linguists tease out the regularities.

• So, a grammar is descriptive, not

prescriptive

27

Simple Context Free Grammar

S → NP VP

VP → V NP (PP)

PP → P NP

NP → (det) N

det → {a, the}

N → {Mary, John, ball, bat}

P → {to, with}

V → {hit}

Try deriving the sentence:

Mary hit the ball to John with the bat.

Notice the recursive structure
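
The derivation exercise can also be checked mechanically. What follows is a minimal sketch of a backtracking recognizer, not a definitive parser. It assumes the rules are rewrite rules (S rewrites to NP VP, and so on), that parenthesized symbols are optional, and that V rewrites to "hit", the verb the example sentence uses. The names SLIDE_GRAMMAR, EXTENDED_GRAMMAR, ends, and accepts are hypothetical. Read literally, the rules allow only one PP per VP, so the sketch also tries a variant with one extra, assumed rule, NP → (det) N (PP), which lets a PP nest inside an NP and makes the grammar recursive.

```python
from typing import Dict, Iterator, List

Grammar = Dict[str, List[List[str]]]

# Rules as on the slide, with optional symbols written out as alternatives.
# Assumption: V rewrites to "hit", the verb used in the example sentence.
SLIDE_GRAMMAR: Grammar = {
    "S": [["NP", "VP"]],
    "VP": [["V", "NP", "PP"], ["V", "NP"]],
    "PP": [["P", "NP"]],
    "NP": [["det", "N"], ["N"]],
    "det": [["a"], ["the"]],
    "N": [["mary"], ["john"], ["ball"], ["bat"]],
    "P": [["to"], ["with"]],
    "V": [["hit"]],
}

# Assumed extension (not on the slide): an NP may end in a PP.
# Because PP contains an NP, this makes NP recursive.
EXTENDED_GRAMMAR: Grammar = dict(SLIDE_GRAMMAR)
EXTENDED_GRAMMAR["NP"] = [["det", "N", "PP"], ["N", "PP"], ["det", "N"], ["N"]]


def ends(grammar: Grammar, symbol: str, tokens: List[str], start: int) -> Iterator[int]:
    """Yield every position where `symbol` can end if it begins at `start`."""
    if symbol not in grammar:
        # Terminal: it must match the next token exactly.
        if start < len(tokens) and tokens[start] == symbol:
            yield start + 1
        return
    for alternative in grammar[symbol]:
        positions = [start]
        for part in alternative:
            # Advance every surviving position through the next symbol.
            positions = [e for p in positions for e in ends(grammar, part, tokens, p)]
        yield from positions


def accepts(grammar: Grammar, sentence: str) -> bool:
    """True if the whole sentence can be derived from S."""
    tokens = sentence.lower().rstrip(".").split()
    return any(end == len(tokens) for end in ends(grammar, "S", tokens, 0))


full = "Mary hit the ball to John with the bat."
print(accepts(SLIDE_GRAMMAR, "Mary hit the ball to John"))  # True
print(accepts(SLIDE_GRAMMAR, full))                         # False
print(accepts(EXTENDED_GRAMMAR, full))                      # True
```

Under these assumptions the rules as written derive "Mary hit the ball to John", while the full sentence with two prepositional phrases goes through only once a PP can nest inside an NP.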

28

So we have

• Symbols

• Syntax

• Semantics

If these were sufficiently complex, we would

have a PSS that generates all English

sentences.
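
To make the "generates sentences" idea concrete, here is a rough sketch that enumerates every sentence a small grammar of this kind produces. It assumes the rewrite-rule reading of the grammar from the earlier slide, with optional symbols written out as alternatives and V rewriting to "hit"; GRAMMAR and expand are hypothetical names. It is an illustration of symbol manipulation under those assumptions, not a model of English.

```python
from itertools import product
from typing import Dict, List

# Assumed reading of the slide grammar; optional symbols become alternatives.
GRAMMAR: Dict[str, List[List[str]]] = {
    "S": [["NP", "VP"]],
    "VP": [["V", "NP", "PP"], ["V", "NP"]],
    "PP": [["P", "NP"]],
    "NP": [["det", "N"], ["N"]],
    "det": [["a"], ["the"]],
    "N": [["mary"], ["john"], ["ball"], ["bat"]],
    "P": [["to"], ["with"]],
    "V": [["hit"]],  # assumption: the verb of the example sentence
}


def expand(symbol: str) -> List[List[str]]:
    """Return every word sequence that `symbol` can be rewritten into."""
    if symbol not in GRAMMAR:
        return [[symbol]]  # a terminal stands for itself
    results = []
    for alternative in GRAMMAR[symbol]:
        # Combine every expansion of each part, left to right.
        for combo in product(*(expand(part) for part in alternative)):
            results.append([word for piece in combo for word in piece])
    return results


sentences = [" ".join(words) for words in expand("S")]
print(len(sentences))                              # 3600
print("mary hit the ball to john" in sentences)    # True
print("a mary hit the john" in sentences)          # True
```

The last line shows why "sufficiently complex" matters: purely syntactic rules this small also generate strings no speaker would say, so the symbols, syntax, and semantics all need far more detail before the system covers English.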

29

The Astonishing Hypothesis

• Intelligence is, at bottom, symbol manipulation

• Convenient for computer scientists

• Hard to know which came first

– Claim then the computer

– Computer then the claim

• Western thought from Aristotle to Boole to Frege has paid special attention to logic

• Especially interesting to learn that logic is pattern matching, a claim that I’ll argue for when we study proofs by resolution refutation

30

Objections/Counter Objections

• Computers only do what they’re told

– Debugging programs: we often don’t know what we’ve told computers to do

– Rules given to AI program are like the axioms of an algebra. They allow the

inference of the theorems that were not anticipated

– PDP is not rule bound. Or at least, it’s difficult to specify the rules

• Can’t specify rules to govern all of behavior

– Machine learning

• Searle’s Chinese Room thought experiment

• AI systems are brittle and not scalable

– PDP

• Intelligence and logic are not the same thing

– PDP

– genetic algorithms

– Hidden Markov models
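
The reply to the first objection, that rules behave like axioms whose consequences nobody listed in advance, can be illustrated with a small forward-chaining sketch. Everything here is hypothetical: the facts, the rules, and the name forward_chain are made up for illustration, and real systems use far richer rule languages.

```python
from typing import Iterable, List, Set, Tuple

Rule = Tuple[Tuple[str, ...], str]  # (premises, conclusion)


def forward_chain(facts: Iterable[str], rules: List[Rule]) -> Set[str]:
    """Apply rules repeatedly until no new fact can be added."""
    known = set(facts)
    changed = True
    while changed:
        changed = False
        for premises, conclusion in rules:
            if conclusion not in known and all(p in known for p in premises):
                known.add(conclusion)
                changed = True
    return known


# Hypothetical rule base: nobody states "can_escape_predators" directly.
RULES: List[Rule] = [
    (("bird",), "has_wings"),
    (("has_wings", "healthy"), "can_fly"),
    (("can_fly",), "can_escape_predators"),
]

derived = forward_chain({"bird", "healthy"}, RULES)
print(sorted(derived - {"bird", "healthy"}))
# ['can_escape_predators', 'can_fly', 'has_wings']
```

The programmer wrote three rules and two facts; the conclusion that the bird can escape predators appears only because the rules chain together, which is the sense in which the system was never "told" it.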

31

AI Areas

1. Game playing

– Source of results in state space search, state space representation,

heuristic reasoning

2. Theorem Proving

– Early successes: Theorem 2.85 from Principia Mathematica

– Problem: prove large number of irrelevant theorems before stumbling

onto the goal

3. Expert systems

– Domain-specific knowledge

– Rigidly hand-crafted

– Don’t learn

Common threads to all three

– Well-defined set of rules

– No outside knowledge is required

32

4. NLP

• Success with parsing

• Success with speech synthesis and transcription

• Growing success with translation

• All successes are probabilistic

• Language is deceptively rule-bound

– He saw her duck

– “Janet needed some money. She got her piggy bank and shook it. Finally, some money came out.”

• Why did Janet get the piggy bank?

• Did Janet get the money?

• Why did Janet shake the piggy bank?

33

5. Cognitive Modeling

• Forces precision

• Existence proof

6. Robotics

7. Machine Learning (e.g., neural networks,

evolutionary computing, stochastic

models)

34

Two Strands in AI

1. Strand based on logic

– “The reliance on logic as a way of representing knowledge and on logical inference as the primary mechanism for intelligent reasoning are so dominant in Western philosophy that their ‘truth’ often seems unassailable. It is no surprise, then, that approaches based on these assumptions have dominated the science of artificial intelligence from its inception to the present day.” p. 16

– But various forms of philosophical relativism have questioned the “objective basis of language, science, and society” in the past half century.

– Examples come from philosophy of language (Wittgenstein, Grice, Austin, Searle), phenomenology (Husserl, Heidegger, Dreyfus), logic (Gödel: in any consistent formal system rich enough to express arithmetic, there must remain true propositions that can’t be proven from within the system), linguistics (Winograd, Lakoff, usage-based linguists), post-modern thought (Derrida: “There is no outside the text”).

– The cumulative effect has been to call the AI project, at least as classically conceived, into question.

35

2. Strand based on biological metaphors and

stochastic modeling

– Artificial life and genetic algorithms take their inspiration from the principles of biological evolution. Intelligence as emergent.

– Connectionism (PDP) takes its inspiration from a highly abstract view of neurons connected by synapses through a feedback mechanism

– Hidden Markov models: a machine learning

technique that makes Bayesian inferences for

chains of events

• Bayes’ Rule: P(X|Y) = (P(Y|X) * P(X)) / P(Y)

• In English: the probability that we have class today given

that today is Thursday equals the probability that today is

Thursday given that we have class times the probability

that we have class divided by the probability that today is

Thursday.
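
The class-on-Thursday reading of Bayes' Rule can be worked through with numbers. The figures below are assumptions chosen for illustration, not from the slides: the class meets every Tuesday and Thursday, and a day is picked uniformly from the seven days of the week. The function name bayes is hypothetical.

```python
from fractions import Fraction


def bayes(p_y_given_x: Fraction, p_x: Fraction, p_y: Fraction) -> Fraction:
    """Bayes' Rule: P(X|Y) = P(Y|X) * P(X) / P(Y)."""
    return p_y_given_x * p_x / p_y


# Assumed schedule: class meets on Tuesday and Thursday, days equally likely.
p_class = Fraction(2, 7)                 # P(we have class today)
p_thursday = Fraction(1, 7)              # P(today is Thursday)
p_thursday_given_class = Fraction(1, 2)  # P(Thursday | we have class)

p_class_given_thursday = bayes(p_thursday_given_class, p_class, p_thursday)
print(p_class_given_thursday)  # 1
```

Under this assumed schedule the result is exactly 1: if today is Thursday, there is certainly class, which matches intuition and serves as a sanity check on the formula.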

36