3 /56 - PhysBAM


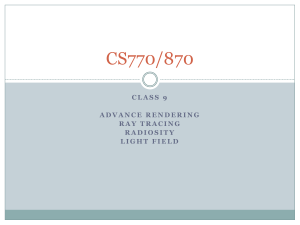

1/56

OpenGL vs. Real World

• Limits to OpenGL rendering

• Reflectance

– Draw the object twice

– Cannot easily handle complex reflections

2/56

OpenGL vs. Real World

• Limits to OpenGL rendering

• Global Illumination

– Ambient Lighting

– Not realistic

Note the soft shadow above and the hard shadow below

3/56

OpenGL vs. Real World

• Limits to OpenGL rendering

• Volumetrics

– Sprite rendering

– Not realistic

4/56

Why We Use Ray Tracing

• Much more realistic than scanline rendering.

• Capable of simulating a wide variety of optical effects.

– reflection, refraction, shadows, scattering, subsurface scattering, dispersion, caustics, and participating media.

5/56

What is Ray Tracing

• Generating an image by tracing the path of light through pixels in an image plane

• Simulating the effects of light’s encounters with virtual objects.

6/56

Ray Tracing History

7/56

Ray Tracing History

8/56

Ray Tracing History

Image courtesy Paul Heckbert 1983

9/56

Ray Tracing History

Kajiya

1986

10/56

Real-Time Ray Tracing

• Ray tracing is typically not used in games

– Too expensive, but possible

– GPUs are very good at tasks that are easily parallelizable

• NVIDIA OptiX system

• Typically consumes all available GPU power

11/56

Basic Ray Tracing Algorithm

Create a ‘virtual window’ into the scene

12/56

Defining the Rays

A ray with origin $A$ and direction $D$: $R(t) = A + tD$, with $t \in [0, +\infty)$

13/56

Basic Ray Tracing Algorithm

Shoot ray from eye through pixel, see what it hits

14/56

Basic Ray Tracing Algorithm

Shoot ray toward light to see if point is in shadow

15/56

Basic Ray Tracing Algorithm

Compute shading from light source

16/56

Basic Ray Tracing Algorithm

Record pixel color

17/56

Pseudocode

Image Raytrace(Eye eye, Scene scene, int width, int height)
{
    Image image = new Image(width, height);
    for (int i = 0; i < height; i++)
        for (int j = 0; j < width; j++)
        {
            Ray ray = RayThruPixel(eye, i, j);
            Intersection hit = Intersect(ray, scene);
            image[i][j] = FindColor(hit);
        }
    return image;
}

18/56

Defining the Camera

• Camera at eye, looking at lookAt, with the up direction given by up

– Recall gluLookAt for the OpenGL camera.

• $a = \mathit{lookAt} - \mathit{eye}$, $b = \mathit{up}$

[Figure: the eye looking toward lookAt along a, with the up vector b]

19/56

Defining the Camera

• a, b don’t have to be orthogonal or unit length

• Form an orthonormal basis u, v, and w:

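$\mathbf{w} = \dfrac{a}{\|a\|}, \qquad \mathbf{u} = \dfrac{b \times \mathbf{w}}{\|b \times \mathbf{w}\|}, \qquad \mathbf{v} = \mathbf{w} \times \mathbf{u}$

[Figure: the basis vectors u, v, w relative to a and b]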

20/56
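The basis construction above can be sketched in C++. This is a minimal illustration, not code from the course: `Vec3`, `CameraBasis`, and `makeBasis` are hypothetical names, and the sketch assumes `a` and `b` are not parallel (otherwise b × w is zero and no basis exists).

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

struct CameraBasis { Vec3 u, v, w; };

// a = lookAt - eye and b = up need not be orthogonal or unit length.
CameraBasis makeBasis(Vec3 eye, Vec3 lookAt, Vec3 up) {
    Vec3 w = normalize(lookAt - eye);   // w = a / |a|
    Vec3 u = normalize(cross(up, w));   // u = (b x w) / |b x w|
    Vec3 v = cross(w, u);               // already unit length: w and u are orthonormal
    return {u, v, w};
}
```

With up = (0, 1, 0) and a camera looking down −z, this cross-product order makes u point toward −x, i.e. to the viewer's left; that is consistent with the image-plane corner formulas, where LL = C + us·u − vs·v.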

Recall the OpenGL Frustum

Uses a pinhole camera.

The image plane is in front of the focal point, which means the image is right side up.

The frustum is the volume of our view (shown in blue below).

The image plane is the plane of the frustum nearest to the camera.

21/56

Defining the Image Plane

• The viewing system has the origin eye and is aligned to the uvw basis.

• The image plane is defined as a rectangle aligned to uv and orthogonal to w.

• A point P on the image plane can be represented by coordinates (u, v, w) with respect to the basis u, v, w and the origin eye: $P = \mathit{eye} + u\,\mathbf{u} + v\,\mathbf{v} + w\,\mathbf{w}$.

• Similar to the image plane in OpenGL.

22/56

Defining the Image Plane

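[Figure: image plane centered at C, at distance ws from eye along w, with corners UL, UR, LL, LR, half-width us along u, half-height vs along v, and vertical field of view fovy]

The size of the image plane is $(2u_s, 2v_s)$, with $\mathit{aspect} = u_s / v_s$:

$v_s = w_s \tan(\mathit{fovy}/2)$

$u_s = \mathit{aspect} \cdot v_s$

$C = \mathit{eye} + w_s\mathbf{w}$

$LL = C + u_s\mathbf{u} - v_s\mathbf{v}$

$UL = C + u_s\mathbf{u} + v_s\mathbf{v}$

$LR = C - u_s\mathbf{u} - v_s\mathbf{v}$

$UR = C - u_s\mathbf{u} + v_s\mathbf{v}$

(With $\mathbf{u} = b \times \mathbf{w}$ normalized, $\mathbf{u}$ points to the viewer's left, so $+u_s\mathbf{u}$ gives the left corners.)

Note that the size of the image plane $(2u_s, 2v_s)$ and its distance $w_s$ from the eye determine the field of view.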

23/56

Computing Rays from Pixels

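• Given a pixel (i, j) in the viewport (i0, j0, nw, nh), we can calculate the coordinates of the pixel in the (u, v, w) coordinate system:

$u = u_w\left(\dfrac{i - i_0}{n_w} - 0.5\right), \qquad v = v_w\left(\dfrac{j - j_0}{n_h} - 0.5\right), \qquad w = w_s$

where $u_w = 2u_s$ and $v_w = 2v_s$ are the width and height of the image plane.

• So the location of the pixel in world space is $P = \mathit{eye} + u\,\mathbf{u} + v\,\mathbf{v} + w\,\mathbf{w}$

• Then the direction of the ray is $D = \dfrac{P - \mathit{eye}}{\|P - \mathit{eye}\|}$

• The ray through pixel (i, j) is $R(t) = \mathit{eye} + tD$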

24/56
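The pixel-to-ray mapping can be sketched in C++ as follows. This is a minimal illustration under stated assumptions: `Vec3`, `Ray`, `Camera`, and `rayThruPixel` are hypothetical names, fovy is in radians, aspect = us/vs, and the image-plane width and height are taken as uw = 2us and vw = 2vs.

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

struct Ray { Vec3 origin, dir; };

struct Camera {
    Vec3 eye, u, v, w;  // orthonormal camera basis
    double fovy;        // vertical field of view, radians
    double aspect;      // image-plane width / height
    double ws;          // distance from eye to the image plane
};

// Ray through pixel (i, j) of the viewport (i0, j0, nw, nh).
Ray rayThruPixel(const Camera& cam, int i, int j, int i0, int j0, int nw, int nh) {
    double vs = cam.ws * std::tan(cam.fovy / 2.0);  // half-height of the image plane
    double us = cam.aspect * vs;                     // half-width
    double uCoord = 2.0 * us * (double(i - i0) / nw - 0.5);
    double vCoord = 2.0 * vs * (double(j - j0) / nh - 0.5);
    double wCoord = cam.ws;
    Vec3 P = cam.eye + uCoord * cam.u + vCoord * cam.v + wCoord * cam.w;
    return {cam.eye, normalize(P - cam.eye)};        // D = (P - eye) / |P - eye|
}
```

The sketch keeps the slide's formula, which aims each ray at a pixel corner; using (i − i0 + 0.5) and (j − j0 + 0.5) instead would aim at pixel centers.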

Calculating Ray-Object Intersections

• Given a ray $R(t) = A + tD$, find the first intersection with any object where $t_{min} \le t \le t_{max}$.

• The object can be a polygon mesh, an implicit surface, a parametric surface, etc.

25/56

Ray-Sphere Intersection

• Ray equation:

$R(t) = A + tD$

• Implicit equation for sphere:

$(X - C)^2 = r^2$

• Combine them together:

$(A + tD - C)^2 = r^2$

26/56

Ray-Sphere Intersection

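Quadratic equation in t (here $V^2$ means $V \cdot V$):

$(A + tD - C)^2 = r^2$

$D^2 t^2 + 2(A - C)\cdot D\,t + (A - C)^2 = r^2$

$t^2 + 2(A - C)\cdot D\,t + (A - C)^2 - r^2 = 0$ (since $D$ is a unit vector, $D^2 = 1$)

With discriminant:

$\Delta = 4\left[(A - C)\cdot D\right]^2 - 4\left[(A - C)^2 - r^2\right]$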

27/56

Ray-Sphere Intersection

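• $\Delta < 0$: no intersection

• $\Delta = 0$: one intersection (the ray is tangent to the sphere)

• $\Delta > 0$: two intersections

For the case with two solutions $t_1 < t_2$, choose $t_1$ for the first intersection (if $t_1 < t_{min}$, e.g. the ray starts inside the sphere, use $t_2$).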

28/56

Ray-Sphere Intersection

• Intersection point:

$P = A + t_1 D$

• Intersection normal:

$N = \dfrac{P - C}{\|P - C\|}$

29/56
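The ray-sphere slides translate into a short C++ routine. This is a sketch, not the course's code: `Vec3`, `Hit`, and `intersectSphere` are hypothetical names, and D is assumed to be unit length so the quadratic has leading coefficient 1, as derived above.

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

struct Hit { double t; Vec3 point, normal; };

// Solves t^2 + 2((A-C).D) t + (A-C)^2 - r^2 = 0 and keeps the nearest root in [tmin, tmax].
bool intersectSphere(Vec3 A, Vec3 D, Vec3 C, double r, double tmin, double tmax, Hit& hit) {
    Vec3 AC = A - C;
    double halfB = dot(AC, D);               // (A - C) . D
    double c = dot(AC, AC) - r * r;          // (A - C)^2 - r^2
    double quarterDisc = halfB * halfB - c;  // discriminant / 4
    if (quarterDisc < 0) return false;       // no real root: the ray misses
    double s = std::sqrt(quarterDisc);
    double t = -halfB - s;                   // t1, the nearer root
    if (t < tmin) t = -halfB + s;            // t1 out of range (e.g. origin inside): try t2
    if (t < tmin || t > tmax) return false;
    hit.t = t;
    hit.point = A + t * D;                   // P = A + t D
    hit.normal = normalize(hit.point - C);   // N = (P - C) / |P - C|
    return true;
}
```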

Ray-Plane Intersection

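• Ray equation:

$R(t) = A + tD$

• Implicit equation for plane:

$ax + by + cz + d = 0$

• Combine them together and solve for $t$ at the intersection point p:

$a(x_A + t x_D) + b(y_A + t y_D) + c(z_A + t z_D) + d = 0 \;\Rightarrow\; t = -\dfrac{a x_A + b y_A + c z_A + d}{a x_D + b y_D + c z_D}$

(There is no intersection if the denominator is 0, i.e. the ray is parallel to the plane.)

• For the ray-triangle intersection test, we can project the 3 vertices of the triangle and the intersection point p onto a 2D plane and run the point-inside-triangle test in 2D, as we did for rasterization in Lecture 3.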

30/56
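Solving for t on the ray-plane slide takes only a few lines. A minimal sketch, assuming the plane is passed as its coefficients n = (a, b, c) and d; `Vec3` and `intersectPlane` are hypothetical names.

```cpp
#include <cmath>

struct Vec3 { double x, y, z; };
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Plane ax + by + cz + d = 0 with n = (a, b, c). Substituting R(t) = A + tD
// gives n.(A + tD) + d = 0, so t = -(n.A + d) / (n.D).
bool intersectPlane(Vec3 A, Vec3 D, Vec3 n, double d, double tmin, double tmax, double& t) {
    double denom = dot(n, D);                    // a*xD + b*yD + c*zD
    if (std::fabs(denom) < 1e-12) return false;  // ray (nearly) parallel to the plane
    t = -(dot(n, A) + d) / denom;
    return t >= tmin && t <= tmax;
}
```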

Or you can avoid projection…

• For each edge e of the triangle:

– Compute the direction n orthogonal to e and pointing toward its opposite vertex, in the plane of the triangle:

$n = e_1 - \dfrac{e_2 \cdot e_1}{\|e_2\|^2}\, e_2$

where $e_2$ is the edge vector and $e_1$ runs from the same endpoint to the opposite vertex.

– Pick one of the two endpoints of e (assume it is $P_1$ here) and test whether $(P - P_1)\cdot n < 0$.

– If $(P - P_1)\cdot n < 0$, then P is outside the triangle.

• Otherwise (no edge rejects P), P is inside the triangle.

31/56
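The projection-free edge test above can be sketched in C++. This is an illustration under the assumption that P already lies in the triangle's plane (e.g. found with the ray-plane test); `outsideEdge` and `insideTriangle` are hypothetical names. The `&&` chain returns as soon as one edge rejects P.

```cpp
// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

// Edge (Pa, Pb) with opposite vertex Pc: e2 = Pb - Pa, e1 = Pc - Pa.
// n = e1 - (e2.e1 / |e2|^2) e2 removes the along-edge part of e1,
// leaving a vector orthogonal to the edge that points toward Pc.
bool outsideEdge(Vec3 P, Vec3 Pa, Vec3 Pb, Vec3 Pc) {
    Vec3 e2 = Pb - Pa;
    Vec3 e1 = Pc - Pa;
    Vec3 n = e1 - (dot(e2, e1) / dot(e2, e2)) * e2;
    return dot(P - Pa, n) < 0;  // P lies on the far side of this edge
}

bool insideTriangle(Vec3 P, Vec3 P1, Vec3 P2, Vec3 P3) {
    return !outsideEdge(P, P1, P2, P3) &&
           !outsideEdge(P, P2, P3, P1) &&
           !outsideEdge(P, P3, P1, P2);
}
```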

Recall: Point Inside Triangle Test in Rasterization

rasterize( vert v[3] )
{
    line l0, l1, l2;
    makeline(v[0], v[1], l2);
    makeline(v[1], v[2], l0);
    makeline(v[2], v[0], l1);
    for( y = 0; y < YRES; y++ ) {
        for( x = 0; x < XRES; x++ ) {
            e0 = l0.a * x + l0.b * y + l0.c;
            e1 = l1.a * x + l1.b * y + l1.c;
            e2 = l2.a * x + l2.b * y + l2.c;
            if( e0 <= 0 && e1 <= 0 && e2 <= 0 )
                fragment(x, y);
        }
    }
}

[Figure: triangle with vertices v0, v1, v2; edge line l0 is opposite v0, l1 is opposite v1, l2 is opposite v2]

32/56

We can also use Barycentric Coordinates…

33/56

Ray-Triangle Intersection

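• Ray equation:

$R(t) = A + tD$

• Parametric equation for triangle:

$X = P_1 + \alpha(P_2 - P_1) + \beta(P_3 - P_1)$

• Combine:

$A + tD = P_1 + \alpha(P_2 - P_1) + \beta(P_3 - P_1)$

[Figure: ray from A along D hitting the triangle P1, P2, P3]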

34/56

Ray-Triangle Intersection

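$A + tD = P_1 + \alpha(P_2 - P_1) + \beta(P_3 - P_1)$

is 3 equations with 3 unknowns ($\alpha$, $\beta$, $t$):

$x_A + t x_D = x_1 + \alpha(x_2 - x_1) + \beta(x_3 - x_1)$

$y_A + t y_D = y_1 + \alpha(y_2 - y_1) + \beta(y_3 - y_1)$

$z_A + t z_D = z_1 + \alpha(z_2 - z_1) + \beta(z_3 - z_1)$

in which $P_1 = (x_1, y_1, z_1)$, $P_2 = (x_2, y_2, z_2)$, $P_3 = (x_3, y_3, z_3)$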

35/56

Ray-Triangle Intersection

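• Rewriting it as a standard linear equation:

$\begin{bmatrix} x_2 - x_1 & x_3 - x_1 & -x_D \\ y_2 - y_1 & y_3 - y_1 & -y_D \\ z_2 - z_1 & z_3 - z_1 & -z_D \end{bmatrix} \begin{bmatrix} \alpha \\ \beta \\ t \end{bmatrix} = \begin{bmatrix} x_A - x_1 \\ y_A - y_1 \\ z_A - z_1 \end{bmatrix}$

Satisfying $t_{min} \le t \le t_{max}$, $0 \le \beta \le 1$, and $0 \le \alpha \le 1 - \beta$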

36/56

Ray-Triangle Intersection

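• Solving the linear equation using Cramer’s rule, we get the expressions for $\alpha$, $\beta$, $t$, where $|M|$ is the determinant of the 3x3 system matrix:

$\alpha = \dfrac{1}{|M|}\begin{vmatrix} x_A - x_1 & x_3 - x_1 & -x_D \\ y_A - y_1 & y_3 - y_1 & -y_D \\ z_A - z_1 & z_3 - z_1 & -z_D \end{vmatrix}, \qquad \beta = \dfrac{1}{|M|}\begin{vmatrix} x_2 - x_1 & x_A - x_1 & -x_D \\ y_2 - y_1 & y_A - y_1 & -y_D \\ z_2 - z_1 & z_A - z_1 & -z_D \end{vmatrix}$

$t = \dfrac{1}{|M|}\begin{vmatrix} x_2 - x_1 & x_3 - x_1 & x_A - x_1 \\ y_2 - y_1 & y_3 - y_1 & y_A - y_1 \\ z_2 - z_1 & z_3 - z_1 & z_A - z_1 \end{vmatrix}, \qquad |M| = \begin{vmatrix} x_2 - x_1 & x_3 - x_1 & -x_D \\ y_2 - y_1 & y_3 - y_1 & -y_D \\ z_2 - z_1 & z_3 - z_1 & -z_D \end{vmatrix}$

• Notice the 4 matrices have some common columns, which means we can reduce the number of operations by reusing the numbers when computing determinants.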

37/56

Pseudocode

bool RayTriangle(Ray R, Vec3 V1, Vec3 V2, Vec3 V3, float tmin, float tmax)
{
    compute t;
    if (t < tmin or t > tmax) return false;
    compute β;
    if (β < 0 or β > 1) return false;
    compute α;
    if (α < 0 or α > 1 - β) return false;
    return true;
}

// Notice the conditions for early termination.
// Reminder: for the projected 2D point-inside-triangle test, you can also return early when testing the sides of the 3 edges.

38/56
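The RayTriangle pseudocode can be made concrete with Cramer's rule. In this sketch (hypothetical names `det3` and `rayTriangle`), each 3x3 determinant is computed as a scalar triple product of its columns, with columns P2 − P1, P3 − P1, −D and right-hand side A − P1; the checks follow the pseudocode's early-termination order.

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}

// Determinant of the 3x3 matrix whose columns are c0, c1, c2.
double det3(Vec3 c0, Vec3 c1, Vec3 c2) { return dot(c0, cross(c1, c2)); }

// Solves alpha*(P2-P1) + beta*(P3-P1) - t*D = A - P1.
bool rayTriangle(Vec3 A, Vec3 D, Vec3 P1, Vec3 P2, Vec3 P3, double tmin, double tmax,
                 double& t, double& alpha, double& beta) {
    Vec3 c0 = P2 - P1, c1 = P3 - P1, c2 = -1.0 * D;  // system-matrix columns
    Vec3 rhs = A - P1;
    double detM = det3(c0, c1, c2);
    if (std::fabs(detM) < 1e-12) return false;       // ray parallel to the triangle
    t = det3(c0, c1, rhs) / detM;                    // rhs replaces the t column
    if (t < tmin || t > tmax) return false;
    beta = det3(c0, rhs, c2) / detM;                 // rhs replaces the beta column
    if (beta < 0 || beta > 1) return false;
    alpha = det3(rhs, c1, c2) / detM;                // rhs replaces the alpha column
    if (alpha < 0 || alpha > 1 - beta) return false;
    return true;
}
```

Computing t first lets the routine reject hits outside [tmin, tmax] before doing the remaining two determinants.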

Computing Normals for Intersection Points

• Barycentric interpolation again

– Interpolating from the 3 normals of the triangle vertices, then renormalizing:

$N = (1 - \alpha - \beta)N_1 + \alpha N_2 + \beta N_3$

• Adding extra details on the surface

– Bump mapping, normal mapping, and displacement mapping.

39/56
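Normal interpolation is a weighted sum followed by a renormalization, because a blend of unit vectors is generally shorter than unit length. A minimal sketch with a hypothetical `interpolateNormal`, using the weights (1 − α − β), α, β for N1, N2, N3:

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

Vec3 interpolateNormal(Vec3 N1, Vec3 N2, Vec3 N3, double alpha, double beta) {
    Vec3 N = (1.0 - alpha - beta) * N1 + alpha * N2 + beta * N3;
    return normalize(N);  // restore unit length before using N for lighting
}
```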

Texture, Bump, Normal, and Displacement Mapping

• Texture mapping

– Compute the texture coordinate (u, v) of the intersection point by barycentric interpolation of the texture coordinates of the 3 vertices of the triangle.

– Look up the texture color c by (u, v) in the texture.

– Take c as the local material color and multiply c by the computed lighting color to get the final point color.

• Bump mapping and normal mapping

– For each intersection point, obtain the perturbed normal by looking up the heightmap/normal texture using (u, v), similar to looking up the color in texture mapping.

– Combine the perturbation with the true surface normal and use the new normal to calculate the lighting at that point.

• Displacement mapping

– Notice that the location of the intersection point will be changed by the displacement.

– Requires adaptive tessellation to obtain micropolygons that represent the surface with enough resolution for accurate intersection tests.

40/56

Ray Tracing Transformed Objects

• Rendering duplicated objects in the scene.

• Keep one instance of the geometry data and transform it.

41/56

Ray Tracing Transformed Objects

• Triangle: Still a triangle after transformation

• Sphere: becomes ellipsoid

– Write another intersection routine?

– …or reuse ray-sphere intersection code?

42/56

Ray Tracing Transformed Objects

• Idea: intersect the untransformed object with the inverse-transformed ray

43/56


Ray Tracing Transformed Objects

• Transform the intersection (p, n) back to world coordinates

– Intersection point: $M p$

– Intersection normal: $(M^{-1})^T n$ (then renormalize)

44/56
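The transformed-object idea can be sketched for an ellipsoid stored as a unit sphere plus a transform. To keep M⁻¹ easy to write by hand, this sketch assumes M = Translate(T) · Scale(S) with all components of S nonzero; `Xform`, `mulc`, `divc`, and `intersectTransformedUnitSphere` are hypothetical names. The inverse-transformed direction is deliberately not renormalized, so the same t is valid in object and world space.

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }
Vec3 mulc(Vec3 a, Vec3 b) { return {a.x * b.x, a.y * b.y, a.z * b.z}; }  // componentwise
Vec3 divc(Vec3 a, Vec3 b) { return {a.x / b.x, a.y / b.y, a.z / b.z}; }

struct Xform { Vec3 T, S; };  // assumed M = Translate(T) * Scale(S)

bool intersectTransformedUnitSphere(Vec3 A, Vec3 D, const Xform& M, double tmin, double tmax,
                                    double& t, Vec3& P, Vec3& N) {
    // 1. Inverse-transform the ray: the origin is a point (undo T, then S),
    //    D is a direction (undo S only).
    Vec3 Ao = divc(A - M.T, M.S);
    Vec3 Do = divc(D, M.S);
    // 2. Untransformed unit sphere: (Do.Do) t^2 + 2 (Ao.Do) t + Ao.Ao - 1 = 0.
    double a = dot(Do, Do), b = dot(Ao, Do), c = dot(Ao, Ao) - 1.0;
    double disc = b * b - a * c;
    if (disc < 0) return false;
    double s = std::sqrt(disc);
    t = (-b - s) / a;
    if (t < tmin) t = (-b + s) / a;
    if (t < tmin || t > tmax) return false;
    Vec3 p = Ao + t * Do;  // object-space hit; for a unit sphere this is also its normal
    // 3. Back to world space: the point by M, the normal by (M^-1)^T = Scale(1/S) here.
    P = mulc(M.S, p) + M.T;
    N = normalize(divc(p, M.S));
    return true;
}
```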


Recall: Transforming Normals

We can’t just multiply the normal by the 3x3 submatrix M of the modelview matrix if M is non-orthogonal, e.g. contains a non-uniform scaling…

[Figure: a surface scaled in y; N' = MN is a WRONG normal in the transformed space]

Idea: preserve the dot product $N \cdot V$ for arbitrary $V$. Insert the identity matrix:

$N^T V = N^T I V = N^T M^{-1} M V = N'^T V'$

where $V' = MV$ and $N' = (M^{-1})^T N$

45/56
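The inverse-transpose rule can be checked numerically. This sketch (hypothetical names `Mat3`, `apply`, `inverseTranspose`, `transformNormal`) uses the identity (M⁻¹)ᵀ = cof(M) / det(M): for a matrix stored by rows r0, r1, r2, the cofactor rows are r1×r2, r2×r0, r0×r1. It assumes M is invertible.

```cpp
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 cross(Vec3 a, Vec3 b) {
    return {a.y * b.z - a.z * b.y, a.z * b.x - a.x * b.z, a.x * b.y - a.y * b.x};
}
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

struct Mat3 { Vec3 r0, r1, r2; };  // rows; M * v = (r0.v, r1.v, r2.v)

Vec3 apply(const Mat3& M, Vec3 v) { return {dot(M.r0, v), dot(M.r1, v), dot(M.r2, v)}; }

// (M^-1)^T equals the cofactor matrix divided by det(M).
Mat3 inverseTranspose(const Mat3& M) {
    double det = dot(M.r0, cross(M.r1, M.r2));
    double s = 1.0 / det;  // assumes det != 0
    return {s * cross(M.r1, M.r2), s * cross(M.r2, M.r0), s * cross(M.r0, M.r1)};
}

Vec3 transformNormal(const Mat3& M, Vec3 n) { return normalize(apply(inverseTranspose(M), n)); }
```

With M scaling y by 2, a tangent V = (1, 1, 0) and normal N = (1, −1, 0) stay perpendicular only when N is transformed by (M⁻¹)ᵀ; transforming N by M itself gives a dot product of −3.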

Lighting the Intersection Point

• The lighting at each intersection point is the sum of the contributions from all light sources.

– Cast rays from the intersection point to all light sources.

• Similar to the OpenGL lighting model.

• Different light types:

– Ambient light, point light, directional light, spot light, area light, volume light.

• Different material properties:

– Diffuse, specular, shininess, emission, etc.

• Different shading models:

– Diffuse shading, Phong shading.

46/56

Casting Shadow Rays

• Detect shadows by casting rays from the surface point S toward the light position L:

$R(t) = S + t(L - S), \quad t \in [\epsilon, 1)$

• Test for an occluder

– No occluder: shade normally (e.g. Phong model)

– Occluder found: skip this light (but don't skip ambient)

47/56
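A shadow-ray test over a scene of spheres can be sketched as follows (hypothetical names `Sphere`, `hitsSphere`, `inShadow`). Since L − S is not normalized, t = 1 lands exactly on the light, so any hit with t in [ε, 1) is an occluder between the point and the light; the loop stops at the first one found.

```cpp
#include <cmath>
#include <vector>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }

struct Sphere { Vec3 center; double radius; };

// Does S + t*dir hit the sphere for some t in [tmin, tmax)? dir need not be unit length.
bool hitsSphere(Vec3 S, Vec3 dir, const Sphere& sp, double tmin, double tmax) {
    Vec3 oc = S - sp.center;
    double a = dot(dir, dir), b = dot(oc, dir), c = dot(oc, oc) - sp.radius * sp.radius;
    double disc = b * b - a * c;
    if (disc < 0) return false;
    double s = std::sqrt(disc);
    double t1 = (-b - s) / a, t2 = (-b + s) / a;
    return (t1 >= tmin && t1 < tmax) || (t2 >= tmin && t2 < tmax);
}

// Shadow ray R(t) = S + t(L - S), t in [eps, 1).
bool inShadow(Vec3 S, Vec3 L, const std::vector<Sphere>& scene, double eps) {
    Vec3 dir = L - S;
    for (const Sphere& sp : scene)
        if (hitsSphere(S, dir, sp, eps, 1.0)) return true;  // one occluder is enough
    return false;
}
```

For a point lying on a sphere's own surface, the shadow ray meets that sphere at t = 0; with ε = 0 the test would wrongly report the surface shadowing itself, which the ε lower bound avoids.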

Spurious Self-Occlusion

• Once the intersection point is found, offset the shadow ray by ε along the ray (i.e. require t ≥ ε) to avoid a spurious intersection report between the shadow ray and the original surface.

• This often fails for grazing shadow rays near the object’s silhouette.

• It is better to offset P in the normal direction from the surface.

– The direction of the shadow ray shot from the perturbed point to the light source may be slightly different from the direction of the original shadow ray.

• We also need to detect intersections of the new P with other objects in the scene to avoid an incorrect offset.

[Figure: "Offset along the ray" vs. "Offset along the normal"; "The perturbed point may be still inside the object"]

48/56

Avoiding incorrect self-shadowing

• Self-shadowing

– Add a shadow bias (ε)

– Test the object ID

[Figure: incorrect self-shadowing vs. correct]

49/56


Example: Diffuse Shading

The intersection point and normal are calculated in the ray-object intersection test.

50/56

Example: Phong Shading

The cosine of the angle between the halfway vector H and the normal vector N is raised to a user-specified power phong_exp, which controls the sharpness of the highlight.

51/56
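The diffuse and Phong examples can be combined into one shading function. This is a hedged sketch, not the course's example code: it is single-channel for brevity, `shadeDiffusePhong`, `kd`, `ks`, and `I` are hypothetical names (only `phong_exp` comes from the slide), and the specular term uses the halfway vector H as described above, a form commonly called Blinn-Phong.

```cpp
#include <algorithm>
#include <cmath>

// Minimal 3D vector helpers (illustrative, not from the slides).
struct Vec3 { double x, y, z; };
Vec3 operator+(Vec3 a, Vec3 b) { return {a.x + b.x, a.y + b.y, a.z + b.z}; }
Vec3 operator-(Vec3 a, Vec3 b) { return {a.x - b.x, a.y - b.y, a.z - b.z}; }
Vec3 operator*(double s, Vec3 a) { return {s * a.x, s * a.y, s * a.z}; }
double dot(Vec3 a, Vec3 b) { return a.x * b.x + a.y * b.y + a.z * b.z; }
Vec3 normalize(Vec3 a) { return (1.0 / std::sqrt(dot(a, a))) * a; }

// Point P with unit normal N, seen from eye, lit by a point light of intensity I.
double shadeDiffusePhong(Vec3 P, Vec3 N, Vec3 eye, Vec3 lightPos, double I,
                         double kd, double ks, double phong_exp) {
    Vec3 L = normalize(lightPos - P);       // direction toward the light
    double NdotL = dot(N, L);
    if (NdotL <= 0.0) return 0.0;           // light is behind the surface
    double diffuse = kd * I * NdotL;        // diffuse term: kd * I * cos(theta)
    Vec3 V = normalize(eye - P);            // direction toward the viewer
    Vec3 H = normalize(L + V);              // halfway vector
    double specular = ks * I * std::pow(std::max(0.0, dot(N, H)), phong_exp);
    return diffuse + specular;
}
```

A larger phong_exp shrinks the region where (N·H)^phong_exp is close to 1, giving a smaller, sharper highlight.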

Example Code: Shadows

Cast a shadow ray from the intersection point and detect whether it hits any object.

If it does, the shadow ray returns 0 and no lighting from that light is computed for the point.

Otherwise, the point is lit normally.

52/56

Why can we sum the lights?

• The light reflected from a surface is proportional to the incident irradiance

• An increase in incident energy results in an increase of reflected energy

• Bidirectional Reflectance Distribution Function (BRDF)

$L_o^{\text{due to } \omega_i}(\omega_i, \omega_o) = \mathrm{BRDF}(\omega_i, \omega_o)\, L_i \cos\theta_i \, d\omega_i$

53/56
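To make the summation concrete, here is the reflection equation specialized to ideal point lights (an assumption: isotropic point lights with inverse-square falloff, no area or volume lights). Because the integral is linear in the incident radiance, each light's term can be evaluated separately and the terms added:

$$
L_o(\omega_o) = \int_{\Omega} \mathrm{BRDF}(\omega_i, \omega_o)\, L_i(\omega_i) \cos\theta_i \, d\omega_i
\quad\longrightarrow\quad
L_o(\omega_o) = \sum_{k} V_k \, \mathrm{BRDF}(\omega_k, \omega_o)\, \frac{I_k}{d_k^2} \cos\theta_k
$$

Here $I_k$ is the intensity of light $k$, $d_k$ its distance from the point, $\omega_k$ the direction toward it, $\theta_k$ the angle between the normal and $\omega_k$, and $V_k \in \{0, 1\}$ the result of the shadow ray (0 if an occluder is found).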

Light Types in Ray Tracing

Point Light

Directional Light

Spot Light

Area Light

Area Light from a light tube

Volume light

54/56

Area Lights

– Lights in the real world have an area

• Points on the surface can be partially occluded

• Shoot a number of rays from the intersection point to

different points on the light

• Take the average of the results

• Creates soft shadows

55/56

Parallel Ray Tracing

• Ray tracing is inherently parallel, since the rays for one pixel are independent of the others

– Take advantage of modern parallel CPUs/GPUs/clusters to significantly accelerate your ray tracer

• Use threading (e.g., Pthreads, OpenMP) to distribute rays among cores

• Use the Message Passing Interface (MPI) to distribute rays among processors (across machines)

• Use OptiX/CUDA to distribute rays on the GPU

– Distribute rays to different threads/processors in a memory-coherent way

• E.g., assign spatially neighboring rays (on the image plane) to the same core/processor

• These rays tend to intersect the same objects and traverse the same elements in the acceleration structure.

56/56
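The coherent work split described on the last slide can be sketched with C++11 `std::thread`, swapped in here for the Pthreads/OpenMP options the slide lists. Each thread renders one contiguous band of rows, so neighboring pixels stay on the same core. `renderParallel` and the `tracePixel` callback are hypothetical names; the callback is assumed to be safe to call concurrently. Older toolchains may need `-pthread` to link.

```cpp
#include <algorithm>
#include <cstddef>
#include <functional>
#include <thread>
#include <vector>

// Renders a width x height image; tracePixel(x, y) returns the pixel value.
std::vector<double> renderParallel(int width, int height, int numThreads,
                                   const std::function<double(int, int)>& tracePixel) {
    std::vector<double> image(static_cast<std::size_t>(width) * height);
    std::vector<std::thread> workers;
    int rowsPerThread = (height + numThreads - 1) / numThreads;  // ceiling division
    for (int k = 0; k < numThreads; ++k) {
        int y0 = k * rowsPerThread;
        int y1 = std::min(height, y0 + rowsPerThread);  // band [y0, y1) of rows
        workers.emplace_back([&image, &tracePixel, width, y0, y1] {
            for (int y = y0; y < y1; ++y)
                for (int x = 0; x < width; ++x)
                    // Each thread writes a disjoint range, so no locking is needed.
                    image[static_cast<std::size_t>(y) * width + x] = tracePixel(x, y);
        });
    }
    for (std::thread& w : workers) w.join();  // wait before returning the image
    return image;
}
```

Square tiles instead of row bands would keep rays even more spatially coherent; the band split is just the simplest partition to show.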