Evaluation Research

advertisement

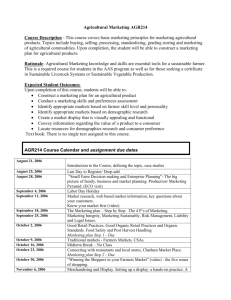

Kodi D. Havins
AED 615, Fall 2006
Dr. Franklin

Evaluation Research
• Definitions:
  – “Evaluation is the systematic assessment of the worth or merit of some object”
  – “Evaluation is the systematic acquisition and assessment of information to provide useful feedback about some object”
(Trochim, 2006)

Evaluation Research
• Is used to provide feedback on an event, organization, program, policy, technology, person, activity, etc.
  – Each of these is called an “object”
• Assesses information about the “object”
• Used heavily in the social sciences and in government agencies
• Outcomes usually influence the decision-making process

Research Designs
• 4 major types:
  1. Survey Research
  2. Case Study
  3. Field Experiment
  4. Secondary Data Analysis
(Garson, n.d.)

Procedures
• 4 strategies:
  1. Scientific-Experimental Models
  2. Management-Oriented Systems Models
  3. Qualitative/Anthropological Models
  4. Participant-Oriented Models
(Trochim, 2006)

1. Scientific-Experimental Models
• Take values and methods from the sciences (especially the social sciences)
• Prioritize impartiality, accuracy, objectivity, and the validity of the information generated
• Examples:
  – The tradition of experimental and quasi-experimental designs
  – Objectives-based research that comes from education
  – Econometrically oriented perspectives, including cost-effectiveness and cost-benefit analysis
  – The recent articulation of theory-driven evaluation
(Trochim, 2006)

2. Management-Oriented Systems Models
• 2 of the most common:
  – Program Evaluation and Review Technique (PERT)
  – Critical Path Method (CPM)
• Example:
  – Logical Framework (“Logframe”) Model, used widely by the U.S. Agency for International Development
(Trochim, 2006)

3. Qualitative/Anthropological Models
• Emphasize:
  – The importance of observation
  – The need to retain the phenomenological quality of the evaluation context
  – The value of subjective human interpretation in the evaluation process
• Examples:
  – The approaches known in evaluation as naturalistic or “Fourth Generation” evaluation
  – The various qualitative schools
  – Critical theory and art criticism approaches
  – The “grounded theory” approaches of Glaser and Strauss
(Trochim, 2006)

4. Participant-Oriented Models
• Emphasize the central importance of the evaluation participants
• Examples:
  – Client-Centered Research
  – Stakeholder Approaches
  – Consumer-Oriented Research
(Trochim, 2006)

So, How Do You Decide Which One to Use?
Things to keep in mind:
• Great evaluators use ideas from each of the 4 strategies as they need them
• The methodologies needed will, and should, vary
  – This should not be an easy process
• There is no simple answer!
(Trochim, 2006)

Types of Evaluations
• There are many different types of evaluations
• 2 important types:
  1. Formative Evaluation
  2. Summative Evaluation
(Trochim, 2006)

1. Formative Evaluation
• Purpose:
  – To strengthen or improve the object being evaluated (Trochim, 2006)
  – In other words, to provide useful feedback for the greater good of the “object”
• Includes several evaluation types:
  – Needs Assessment
  – Evaluability Assessment
  – Structured Conceptualization
  – Implementation Evaluation
  – Process Evaluation
• Questions generally asked:
  – What is the definition and scope of the problem or issue, or what’s the question?
  – Where is the problem and how big or serious is it?
  – How should the program or technology be delivered to address the problem?
  – How well is the program or technology delivered?
(Trochim, 2006)

2. Summative Evaluation
• Purpose:
  – To examine the effects or outcomes of some object (Trochim, 2006)
  – In other words, to review the results/conclusions of an “object”
• Can be subdivided into different categories:
  – Outcome Evaluation
  – Impact Evaluation
  – Cost-Effectiveness and Cost-Benefit Analysis
  – Secondary Analysis
  – Meta-Analysis
• Questions generally asked:
  – What type of evaluation is feasible?
  – What was the effectiveness of the program or technology?
  – What is the net impact of the program?
(Trochim, 2006)

Limitations
• Limitations of this type of research include the level of expertise and knowledge of the expert panel
  – The evaluation is only as good as the knowledge of the expert panel

Journal Article
A Review of Subject Matter Topics Researched in Agricultural and Extension Education
By: Rama B. Radhakrishna & Wenwei Xu
Retrieved from: Journal of Agricultural Education, http://pubs.aged.tamu.edu/jae/search/default.asp

Purpose of Study
• The purpose of the study was to “examine subject matter topics researched in agricultural education over a ten year period”

Objectives
• The objectives of the study were to:
  1. Identify subject matter topics researched in agricultural and extension education in the last decade (1986-1996)
  2. Categorize subject matter topics published in the Journal of Agricultural Education and the proceedings of the National Agricultural Education Research Meeting over a ten-year period (1986-1996)

How the Evaluation Method Was Used
• 2 sources were used to gather data:
  – Journal of Agricultural Education
  – Papers presented at the National Agricultural Education Research Meeting (NAERM) from 1986-1996
• This resulted in 402 journal articles and 451 papers
• Each paper was given a code number
• Each was reviewed and categorized into relevant subject matter categories
  – 3 criteria were used:
    • Title of study
    • Central theme and focus
    • Findings and conclusions
• 25 subject matter topics were identified
• The list of the 25 topics was given to a panel of experts for review and validation
• The panel of experts was asked to:
  1. Comment on the appropriateness of the categories
  2. Add or identify categories they thought were left out, or delete categories they felt did not fit
  3. Suggest whether some categories could be combined
• The revised list of subject matter categories contained 30 subject matter topics
• Data were then summarized using frequencies and percentages

Some of the Findings
Top 5 topics (number of studies):
• Secondary Agriculture Programs: 75
• Styles/Theory and Cognition: 70
• Professionalism: 43
• Extension: 42
• Agriculture Mechanics/Safety: 38

Conclusions of Study
• “Study provided information on subject matter topics investigated by agricultural and extension educators, which in turn provides perspectives about the research efforts of the agricultural and extension education profession.”

Summary of Evaluation Research Method
• The evaluation method was used in this study to assess which topics were researched in the agricultural education field during a 10-year period
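The study's last methodological step, summarizing coded items with frequencies and percentages, can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' procedure: the function name `frequency_table`, the optional `merge` mapping (standing in for the expert panel's suggestion that some categories be combined), and the coded items are all hypothetical and are not data from the study.

```python
from collections import Counter


def frequency_table(categories, merge=None):
    """Tally coded items into (category, count, percent) rows.

    `merge` is a hypothetical mapping {old_category: new_category} used to
    combine categories before counting. Percentages are computed against
    the total number of coded items and rounded to one decimal place.
    """
    merge = merge or {}
    # Apply any category merges, then count how often each category occurs.
    counts = Counter(merge.get(c, c) for c in categories)
    total = sum(counts.values())
    rows = []
    # most_common() orders categories from most to least frequent.
    for category, count in counts.most_common():
        rows.append((category, count, round(100.0 * count / total, 1)))
    return rows


# Hypothetical coded items (code number -> assigned category); NOT study data.
coded_items = {
    1: "Extension",
    2: "Professionalism",
    3: "Extension",
    4: "Secondary Agriculture Programs",
}

rows = frequency_table(coded_items.values())
for category, count, percent in rows:
    print(f"{category:<35} {count:>3} {percent:>5.1f}%")

# Hypothetical merge, as a panel might suggest, before re-tallying.
merged_rows = frequency_table(coded_items.values(), merge={"Professionalism": "Extension"})
```

Keeping the merge as a separate mapping means the original coding stays untouched, and a revised category list can be re-tallied without recoding each item.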
Summary of Evaluation Research Method
• The outcomes of this study will provide useful feedback to those in the profession

References
• Radhakrishna, R. B., & Xu, W. (1997). A review of subject matter topics researched in agricultural and extension education. Journal of Agricultural Education, 38(3), 59-69.
• Trochim, W. M. (2006). Research methods knowledge base. Drake University. Retrieved October 26, 2006, from http://www.socialresearchmethods.net/kb/intreval.htm

Any Questions?
Thank You