Assessment Strategies for Inquiry Units

Delwyn L. Harnisch

University of Nebraska, Lincoln

Teachers College

National Center for Information Technology in Education (NCITE)

harnisch@unl.edu

National Center for Information Technology in Education

. . . bringing together researchers and practitioners to appropriately and effectively apply technology to learning.

• Initial partners: UNL Teachers College, NET, and CSE

• Planning participants: UNL, UNO, UNK, NDE, Aim Institute

• Where, why, and how does educational technology apply to learning?

• Basic research, applied research, technology transfer

NCITE Major Themes

• R&D: The role of technology in teaching and learning

– Supporting learners with technology

– Supporting teaching with technology

– Technology-supported assessment/evaluation

• R&D: Interrelationship of technology, education, and society

– Social and cultural issues and technologies

• Moving research into practice: people and tools

– Creation/development of educational technology leaders

– Develop, adapt, and evaluate educational technology tools

Initial Goals

• Create initial organization, governance, and staffing

• “Put NCITE on the map”

– Take steps to ensure respected, sustainable role in educational technology R&D and transition to practice

– Establish educational, commercial, and government collaborations and contacts

Build Upon Existing Expertise

• Centers and Institutes

– Center for Innovative Instruction

– Buros Institute of Mental Measurements

– Nebraska Educational Telecommunications

– Office of Internet Studies (UNO)

• Current Research

– Affinity Learning

– Tools Promoting Deeper Online Learning

– Cognitive Diagnostic Assessment

– Measuring Technology Competencies

Build New Opportunities

• Seed Grants

– $75K - $100K per year set aside

– Grants will most likely be $15K to $30K

– Selected based on probability of outside funding and relevance to NCITE

• Potential areas

– Funded Proposal Writing/Review Teams

– Research into the role of human teachers/coaches in computer-based learning

• At the current state of technology, having humans “in the loop” is vital

– Programmatic, educational, and technical look at Blackboard and WebCT

– Assessment and effectiveness of visualization tools

– Intelligent Agents for “leveling the peer playing field”

– Literacy/Writing Assessment

– Measurement of educator technical competency

Create Collaborations

• Extend partnerships

– Faculty/student affiliates

– Nebraska Department of Education, K-12

– National linkages (e.g., Higher Education, ADL Initiative, NCSA)

• Nebraska Symposium on Information Technology in Education (2002 and beyond)

http://www.ncite.org/

NCSA and NCITE

• Current initiatives

– Joint proposals and projects

– Faculty affiliation

– Seminars with discipline leaders

– Research linkages and support

– Research resources and infrastructure

– Publication and dissemination

Evaluation of Inquiry Units Requires

• Reasoning Strategies

• Problem-Based Approach

• Real-Life Connections

Reasoning in areas of academic work

• Where students interpret texts and data

• Where students pose questions or identify issues and, through research, search for answers

• Where students search for ways to reach goals and overcome obstacles

• Where students use imagination and discipline to design novel and expressive products

What is Evaluation?

• Evaluation addresses a range of questions about quality and effectiveness, covering both implementation and outcome.

• Evaluation uses a range of methods, both qualitative and quantitative, to gather and analyze data.

• Evaluation explicitly uses a range of criteria by which to judge program success, e.g., student interest and motivation, parent satisfaction, teacher enthusiasm, standards of the profession, and student learning as measured in multiple ways.

• Handbook for Mixed Method Evaluations

Types of Evaluations: Planning

• Assesses understanding of project goals, objectives, timelines and strategies

• Key questions

– Who are the people involved?

– Who are the students?

– What are the activities that will involve the students?

– What is the cost?

– What are the measurable outcomes?

– How will the data be collected?

– How long is the program?

Types of Evaluations: Formative

• Assesses ongoing project activities

– Implementation evaluation

– Progress evaluation

Formative: Implementation Evaluation

• Assesses whether project is being conducted as planned.

• Key questions:

– Were appropriate participants selected?

– Do activities and strategies match those in the plan?

– Were appropriate staff members hired and trained and working according to the plan?

– Were activities conducted according to plan?

– Was management plan followed?

Formative: Progress Evaluation

• Assesses the progress made by the participants in meeting goals

• Key questions:

– Are participants moving toward the anticipated project goals?

– Which activities and strategies are aiding the participants toward the goal?

• Mid-year GK12 Evaluation Form

Summative Evaluation

• Assesses the project’s success in meeting goals

• Key questions

– Was the project successful?

– Did the project meet overall goals?

– Did participants benefit from the project?

– What components were most effective?

– Were the results worth the project’s cost?

– Is the project replicable and transportable?

Stages in Conducting an Evaluation

• Develop evaluation questions

• Match questions with appropriate data collection methods

• Collect data

• Analyze data

• Report information to interested audiences

Developing Evaluation Questions

• Clarify goals of the evaluation

• Identify and involve key stakeholders

• Describe activity or intervention to be evaluated

• Formulate potential relevant evaluation questions

• Determine available resources

• Prioritize and eliminate questions if necessary

Match Questions with Data Collection Methods

• Select appropriate methodological approach

– Quantitative

– Qualitative

– Mixed Method

• Determine data sources

• Select an appropriate data collection method and build the database
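
One way to keep this matching step explicit is to record, for each evaluation question, the approach, data source, and collection method chosen for it. The sketch below is illustrative only: the `EvaluationQuestion` class, its field names, and the pairings shown for the two sample questions are assumptions made for the example, not part of the original material.

```python
from dataclasses import dataclass

# Hypothetical record type; the field names are illustrative assumptions.
@dataclass
class EvaluationQuestion:
    text: str
    approach: str      # "quantitative", "qualitative", or "mixed"
    data_source: str
    method: str

VALID_APPROACHES = {"quantitative", "qualitative", "mixed"}

def build_plan(questions):
    """Group questions by methodological approach, rejecting unknown approaches."""
    plan = {approach: [] for approach in sorted(VALID_APPROACHES)}
    for q in questions:
        if q.approach not in VALID_APPROACHES:
            raise ValueError(f"Unknown approach: {q.approach!r}")
        plan[q.approach].append(q)
    return plan

# Sample pairings are assumptions chosen only to show the structure.
questions = [
    EvaluationQuestion("Did participants benefit from the project?",
                       "mixed", "students", "survey plus interviews"),
    EvaluationQuestion("Were activities conducted according to plan?",
                       "qualitative", "project staff", "observation"),
]

plan = build_plan(questions)
for approach, items in plan.items():
    for q in items:
        print(f"{approach}: {q.text} -> {q.method} ({q.data_source})")
```

Grouping by approach makes it easy to see at a glance whether the plan leans entirely on one kind of method or genuinely mixes them.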

Collecting Data

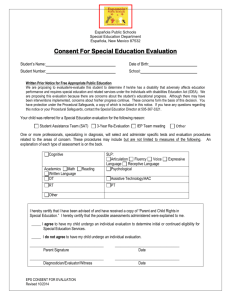

• Obtain necessary clearances

• Consider needs and sensitivities of respondents

• Train data collectors

• Avoid causing disruption to data collection site

Analyze Data

• Inspect raw data and clean if necessary

• Conduct initial analysis based on evaluation plan

• Conduct additional analyses based on initial results

• Integrate and synthesize findings
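
The first two steps above, cleaning and an initial pass over the data, might look like the following minimal sketch. Everything here is assumed for illustration: the survey items (`interest`, `understanding`), the 1 to 5 scale, and the sample responses are hypothetical and do not come from any actual evaluation.

```python
from statistics import mean

# Hypothetical raw survey responses on a 1-5 scale. The None and the
# out-of-range 9 stand in for problems that data cleaning should catch.
raw = [
    {"student": "A", "interest": 4, "understanding": 5},
    {"student": "B", "interest": None, "understanding": 3},
    {"student": "C", "interest": 9, "understanding": 4},
    {"student": "D", "interest": 2, "understanding": 2},
]
ITEMS = ("interest", "understanding")

def clean(rows, low=1, high=5):
    """Keep only rows where every item is an in-range integer."""
    return [
        r for r in rows
        if all(isinstance(r[i], int) and low <= r[i] <= high for i in ITEMS)
    ]

def summarize(rows):
    """Initial analysis: mean score per item."""
    return {i: mean(r[i] for r in rows) for i in ITEMS}

cleaned = clean(raw)
summary = summarize(cleaned)
print(len(cleaned), "of", len(raw), "responses kept")
print(summary)
```

Dropping whole rows is only one possible cleaning rule; an evaluator might instead keep partial responses and analyze each item separately, depending on the evaluation plan.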

Reporting Information

• Report information to targeted audiences

• Deliver reports in a timely manner

• Customize reports and presentations

Sample Assessment Strategies for Inquiry Units

Adapted from Teaching, Learning, & Assessment Together by Arthur K. Ellis

Assessment Possibilities – Jigsaw

• Groups of students given different parts of an event or problem

• Groups research their part

• Groups come together and “teach” others about their part

• Entire group draws conclusions

• Promotes collaborative learning

Question Authoring

• Students write down questions on content or concepts they don’t know or areas of interest at the beginning of a lesson

• Students compare questions in dyads

• Teacher posts questions

• Teacher suggests readings or activities that help students answer their questions

Problem Solving: Talk about it

• Students engage in self-talk or talk with a friend while solving a problem

• Goals

– Self-feedback mechanism

– Testing ideas in public

– Make thought process deliberate

• Students share strategies with the class

• Teacher comments on strategies

Creative Work: Learning illustrated

• Ask students to visualize the concept

– Concept map

– Picture

– Flow chart

– Diagram

– Map

• Promotes visualization

Examples

• Science Review

• Math Concept Map

Analytic Work: Key Idea

• At the end of a lesson or unit, ask students what the “key idea” is

• Ask students to explain why this is a “key idea”

• Can be done in groups

• Goal

– Students see learning as composed of ideas

– Teacher sees what students have valued

Analytic Work: I Can Teach

• Students write, diagram, or map concepts that they could teach to other students

• Shows how well students know the idea

• Helps students who have not grasped concepts the first time

• Allows teacher to evaluate their teaching methods

Real Life Connections: Authentic Applications

• Have students see how an idea is applied in real life

• Students visualize this idea in posters, projects, or diagrams

• Students reflect on the concept and how it is applied in their visual presentation

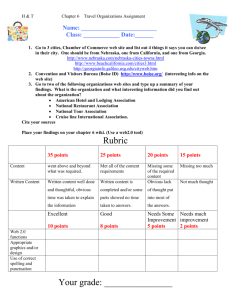

• Example evaluation form

Example: Roller coasters

Real Life Connections: Getting a Job

• Role play activity

• Students imagine they hold a particular occupation

• Students see how the work they are doing in school is related to the occupation

• Could be used as a problem-based exercise

Summary Activities: I Learned Statements

• Statements of personal learning at end of lesson or unit

• Can be done in writing or orally

• Teachers see what was really important to students

• Example evaluation form

Summary Activities: Clear and Unclear Windows

• Students sort out what is clear or unclear to them on paper

• Students who have clear understanding can help those who don’t

• Example evaluation form

Need for Careful Planning

• Need to have evaluation plan guided by a theory that takes into account

– the type of information needed to make decisions

– the suitability of different kinds of methods for learning about different kinds of phenomena

– the technical limitations and biases of various methods

– the importance of the evaluation’s purpose in mixed-method evaluation

– that different purposes invoke different designed mixes of methods

Conclusion

• Mixed method framework for evaluation invites the "uncertainty and openness needed for evaluators to use their findings for action and change"

• The ability to examine multiple questions about a program offers "exciting and potentially meaningful opportunities for connectedness and solidarity" for evaluators, program managers, and program participants

• The "practical possibilities" of mixing inquiry methodologies contribute to, and reflect, the pluralistic nature of modern society
