LilyDu-Computer Timeline

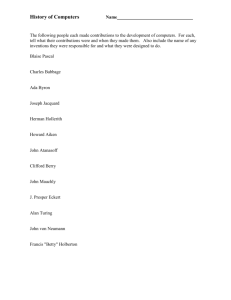


Timeline of Computer History
By: Lily Du

The Calculator

Blaise Pascal's machine, La Pascaline, could add and subtract. It had a set of eight gears that worked together. La Pascaline never became popular: it broke down often, and Pascal was the only one who could fix it. It was also very slow, and clerks were afraid of it because they feared it would replace them at their jobs. Pascal later became famous for his work in mathematics and philosophy, and the Pascal programming language was named after him in honor of his role in computer history.

In the 1670s, Gottfried Wilhelm von Leibniz built the Leibniz Calculator. It could add, subtract, multiply, divide, and figure square roots. It was never manufactured, but almost every mechanical calculator built over the next 150 years worked in a similar way.

[Images: La Pascaline; the Leibniz Calculator]

The Weaving Machine

In 1801, Joseph Marie Jacquard built a "programmed" machine for the weaving industry that greatly cut the time and effort needed to weave intricate designs. Jacquard's loom "read" a set of heavy cardboard punched cards. All of the cards for a design were linked together like a belt, and as they passed over a set of rods on the loom, they guided the parts of the loom to make the design.

[Image: Jacquard's "programmed" loom]

The Story of Charles Babbage

[Image: Charles Babbage's Difference Engine]

Charles Babbage was a professor at Cambridge University in England. In his work he often used tables of numbers that had already been worked out, such as tables of square roots. These tables were full of mistakes, made both by the clerks who calculated the numbers and by those who copied them. This motivated Babbage to design a machine that could compute and print the tables exactly. By 1822 he had built a model of his first idea, the Difference Engine. However, he was unable to build a full-sized machine, because the parts it needed were almost impossible to make in Babbage's time.
Even the smallest flaws in the brass and pewter parts kept the machine from working properly.

Charles Babbage and the Analytical Engine

Babbage's idea for this machine was amazingly like a modern computer. It would read a program from punched cards, calculate and store the answers to problems, and print the answers on paper. Unfortunately, Babbage died before the machine was completed, but his son made a model from Babbage's notes and drawings. Thanks to Babbage's ideas, the model worked, and Babbage is now known as the Father of Computers.

[Image: A model of Babbage's Analytical Engine, put together by his son]

Lady Lovelace

Augusta Ada Byron, also known as Lady Lovelace, was a mathematical genius. Intrigued by Babbage's work, she translated an article about the Analytical Engine from French into English and added her own important notes about how the machine should work. Lady Lovelace also helped Babbage with "programs" for the Analytical Engine, and many of her ideas are similar to those used in today's computer programs. Unfortunately, she too did not live to see her ideas put to use, but her work has long outlived her. She is called "the first programmer," and a programming language used chiefly by the U.S. government, Ada, was named in her honor.

Herman Hollerith

[Image: Hollerith's Tabulating Machine]

In 1887, the U.S. Census Bureau finally finished counting the 1880 census. By then the information was so out of date that it had little meaning, so what would happen with the 1890 census, when the population was even larger? Using the Bureau's slow methods, it would be time to take the 1900 census before the 1890 census was counted. The Census Bureau held a contest to find a faster way to count and record the data, and Herman Hollerith won it with a Tabulating Machine that read and sorted data from punched cards. The holes punched in each card matched one person's answers to the census questions.
The tabulator read the punched cards as they passed over tiny brushes. Each time a brush found a hole, it completed an electric circuit, which caused a special counting dial to advance the count for that answer.

International Business Machines Corporation

Thanks to Hollerith's Tabulating Machine, the 1890 census was counted in less than three years, even though there were 13 million more people than in 1880. Encouraged by his success, Hollerith formed the Tabulating Machine Company in 1896. The company made machines for census takers in western Europe and Canada. In 1911 Hollerith sold his company, and it later became the International Business Machines Corporation (IBM).

The Mark I

Howard Aiken of Harvard University began working on a multi-purpose machine that could be programmed to do many computing jobs. In 1944 he finally finished the Mark I, one of the first automatic calculators. It was similar to the Analytical Engine that Charles Babbage had planned many years before. It was not a true computer, because it worked with electromechanical parts rather than electronic ones. Unfortunately, the Mark I was already outdated by the time it was finished.

[Image: The Mark I, one of the first automatic calculators]

The ABC

The ABC was built by John Atanasoff and his helper, Clifford Berry, and was named the Atanasoff-Berry Computer after them. It is often called the first electronic computer. It was unusual for two reasons. First, it used vacuum tubes to do its calculations. Second, all data fed into the ABC were coded in binary notation, in which any number or letter can be written as a series of zeros and ones. The two digits stood for the two states of electricity: on or off, flowing or not flowing. The vacuum tubes were fast, and the binary digits kept the data simple. Though the machine was remarkable, it could only do one special kind of work.

[Image: The Atanasoff-Berry Computer (ABC)]

The ENIAC

John Mauchly and J. Presper Eckert created the ENIAC (Electronic Numerical Integrator and Computer).
It was huge, and about 18,000 vacuum tubes did its work. In twenty seconds, the ENIAC could do a math problem that would have taken one person forty hours to finish. Still, it was not as perfect as it seemed. Each time it ran a new program, operators had to rewire it and reset its switches by hand, which could take several hours and often led to mistakes. It took several people to operate the ENIAC.

Computers Through the Ages

The First Generation (1951-1958)

[Image: The UNIVersal Automatic Computer (UNIVAC)]

The first generation of computers began in 1951, when the first commercial computer in the United States was sold to the U.S. Census Bureau. Another one like it was installed at General Electric's Appliance Park in Louisville, Kentucky. They were UNIVAC I computers, short for UNIVersal Automatic Computer, developed by Mauchly and Eckert (who built the ENIAC).

First-generation computers were huge, slow, expensive, and often very unreliable. They used thousands of vacuum tubes, which took up a lot of space and gave off a great deal of heat, so they had to be kept in rooms with special air conditioning to prevent overheating. When a tube burned out, a lot of time was wasted hunting for it. Writing a program was difficult, and errors were very common. That began to change in 1952, when Grace Murray Hopper, who later became a rear admiral in the U.S. Navy, and her staff wrote a set of language translator programs. These programs translated words and symbols into binary numbers. As a result, programmers could write programs in codes that used words and symbols rather than ones and zeros, which meant fewer errors and less confusion.

Second Generation (1959-1964)

In the late 1950s, transistors began to replace vacuum tubes in computers. Like vacuum tubes, transistors control the flow of electricity in a computer's circuits. Transistors were made of different materials, and they switched electricity faster and more reliably than vacuum tubes. They were also much smaller and gave off less heat.
Second-generation computers had better primary memory than earlier machines, and they could send data to storage outside the main computer unit, such as magnetic tapes and disks. Together these developments led to real-time computing, in which events could be input and processed as they happened. As a result, computers could do many more kinds of jobs.

[Image: Transistors clustered together on a circuit card]

Third Generation (1965-1970)

[Image: A third-generation minicomputer]

Jack Kilby of Texas Instruments was working on integrated circuits. He found a way to put several circuits on one tiny chip, which is where the name "integrated circuit" comes from. Progress was slow at first, but soon a single silicon chip less than one-eighth of an inch square could hold sixty-four complete circuits. Third-generation computers used less power, cost less, and were smaller and much more dependable than earlier machines. Even as they became smaller, their primary memory grew, because memory could now be put on chips.

During the 1960s, minicomputers were improved and came into common use for a variety of small jobs. They were smaller, had less storage space, and cost less than large computers, yet they could do many of the same things. Remote terminals, devices placed some distance away from a central computer and linked to it through cables such as telephone lines, also became very common as the industry grew.

Fourth Generation (1971-Today)

In the fourth generation, engineers used large-scale integration to fit more and more circuits onto a single chip, making computers even faster. Ted Hoff, an engineer at Intel Corporation, thought he could make one chip that could be programmed to do many jobs. This idea resulted in the microprocessor, or "computer on a chip," which did all the computing and logic work of a computer. The microprocessor was originally designed for calculators, but it soon led to the creation of personal computers, or microcomputers.
In 1977, for the first time, a person could walk into a store and buy a low-priced personal computer, offered by Radio Shack. The success of microcomputers led to a flood of software: the early programs were games, followed by educational programs. Today, a single microprocessor built with very-large-scale integration is more powerful than any computer of the 1950s. Technology is now developing so quickly that no one knows what will come next.

[Image: Ted Hoff, an engineer at Intel Corporation]