ICT131 editedgroupA

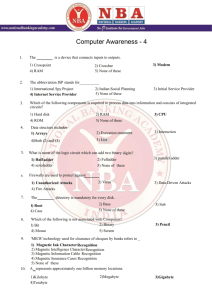

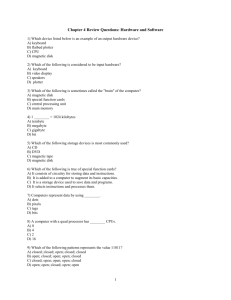

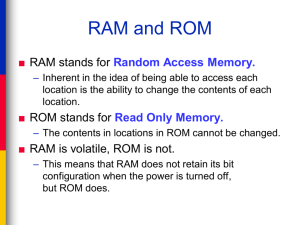

Lecturer: Mr Chembe Christopher

Email: cchembe@mu.ac.zm

Student’s Email Group: ict131-2012@googlegroups.com

Course Content

Introduction to computer science; Input and Output Devices; Memory; Software; and Hardware and Networking.

Lectures and Assessments

- 3 hrs per week
- 40% Continuous Assessment: 10% Quiz, 10% Assignments, 20% Tests one and two
- 60% Final Exam

Recommended Reading

- Data Processing, Second Edition, by H. M. Libati
- ICT 131 Module
- Lecture Resources
- Other Materials

Unit outline

- Introduction
- History of Computers
- Types of Computers
- Components of Computers

Introduction

A computer is a device that accepts data in one form and processes it to produce data in another form. The forms in which data is accepted or produced vary enormously, from simple words or numbers to signals sent from or received by other items of technology.

When the computer processes data, it actually performs a number of separate functions:

- Input: The computer accepts data from outside for processing within.
- Storage: The computer holds data internally before, during and after processing.
- Processing: The computer performs operations on the data it holds within.
- Output: The computer produces data from within for external use.

[Diagram: data processing, showing Input, Process, Output and Storage]

History of Computing

The first electronic computers were produced in the 1940s. Since then, a series of radical breakthroughs in electronics has occurred. With each major breakthrough, computers based upon the older form of electronics have been replaced by a new “generation” of computers based upon the newer form. These “generations” are classified as follows:

- First generation
- Second generation
- Third generation
- Fourth generation
- Fifth generation

First Generation (1946-1956)

First generation computers used vacuum tubes to store and process information. Vacuum tubes consumed large amounts of power, generated heat, and were short-lived. These computers had limited memory and processing capability. Examples of first generation computers are EDSAC, EDVAC, LEO and UNIVAC I.
[Figure: EDSAC]

Second Generation (1957-1963)

Second generation computers used transistors for storing and processing information. Transistors consumed less power than vacuum tubes, produced less heat, and were cheaper, more stable and more reliable. Second generation computers, with increased processing and storage capabilities, began to be more widely used for scientific and business purposes. Examples of second generation computers are the LEO Mark III, ATLAS and the IBM 7000 series.

Third Generation (1964-1979)

Third generation computers used integrated circuits (ICs) for storing and processing information. Integrated circuits are made by printing numerous small transistors on silicon chips. These devices are called semiconductors. Third generation computers employed software that could be used by non-technical people, thus enlarging the computer’s role in business. Examples of third generation computers are the ICL 1900 series and the IBM 360 series.

Fourth Generation (1980-present)

Fourth generation computers use very large-scale integrated (VLSI) circuits to store and process information. The VLSI technique allows the installation of hundreds of thousands of circuits (transistors and other components) on a small chip. With ultra large-scale integration, 10 million transistors could be placed on a chip. These computers are inexpensive and widely used in business and everyday life.

Fifth Generation

The first four generations of computer hardware are based on the Von Neumann architecture, which processes information sequentially, one instruction at a time. The fifth generation of computers uses massively parallel processing to process multiple instructions simultaneously. Massively parallel computers use flexibly connected networks linking thousands of inexpensive, commonly used chips to address large computing problems, attaining supercomputer speeds. With enough chips networked together, massively parallel machines can perform more than a trillion floating point operations per second (a teraflop).
A floating point operation (flop) is a basic computer arithmetic operation, such as addition or subtraction, on numbers that include a decimal point.

Types of Computers

Computers are distinguished on the basis of their processing capabilities. Computers with the most processing power are also the largest and most expensive.

- Super Computers
- Mainframes
- Minicomputers
- Personal Computers
- Network Computers

Super Computers

Super computers are the computers with the most processing power. They are especially valuable for large simulation models of real-world phenomena. Super computers are used to model the weather for better weather prediction, to test weapons non-destructively, to design aircraft for more efficient and less costly production, and to make sequences in motion pictures.

Super computers generally operate 4 to 10 times faster than the next most powerful computer class, the mainframe. Super computers use the technology of parallel processing. However, in contrast with neural computing, which uses massively parallel processing, super computers use non-interconnected CPUs.

Mainframes

Mainframes are not as powerful and generally not as expensive as super computers. They are typically used by large corporations, where data processing is centralized. Applications that run on a mainframe can be large and complex, allowing data and information to be shared throughout the organization. Online secondary storage may use high-capacity magnetic and optical storage media with capacities in the gigabyte to terabyte range. Several hundred or even thousands of online computers can be linked to a mainframe.

Minicomputers

Minicomputers, also called midrange computers, are smaller and less expensive than mainframe computers. Minicomputers are usually designed to accomplish specific tasks such as process control, scientific research, and engineering applications.
Larger companies gain greater corporate flexibility by distributing data processing with minicomputers in organizational units instead of centralizing computing at one location. These minicomputers are connected to each other and often to a mainframe through telecommunication links.

Personal Computers

Personal computers are the smallest and least expensive category of general purpose computers. In general, modern personal computers have between 2 and 4 gigabytes of primary storage, a CD-ROM drive, and 250 to 350 gigabytes or more of secondary storage. They may be subdivided into four classifications based on size:

- Desktops
- Laptops
- Notebooks
- Palmtops

Network Computers

The computers described so far are considered “smart” computers. However, mainframe and midrange computers use “dumb” terminals, which are basically input/output devices. A network computer (NC) is a desktop terminal that does not store software programs or data permanently. Similar to a “dumb” terminal, the NC is simpler and cheaper than a PC and easy to maintain. Users can download the software or data they need from a server or a mainframe over an intranet or the internet. There is no need for hard disks, floppy disks, CD-ROMs and their drives. The central computer can save any material for the user. NCs provide security as well. However, users are limited in what they can do with the terminals.

Components of a Computer

Computer hardware is composed of the following components:

- The input devices accept data and instructions and convert them to a form that the computer can understand.
- The output devices present data in a form people can understand.
- The CPU manipulates the data and controls the tasks done by the other components.
- The primary storage (internal storage) temporarily stores data and program instructions during processing. It also stores intermediate results of the processing.
- The secondary storage (external storage) stores data and programs for future use.
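The components listed above line up with the four functions named in the Introduction (input, storage, processing, output). The short Python sketch below walks one small job through those four steps. It is only an illustration: the function name `run_job`, the `memory` dictionary and the choice of an average as the "processing" step are assumptions made for this example and do not come from the course material.

```python
def run_job(raw_input):
    """Walk one small job through input, storage, processing and output."""
    # Input: accept data from outside and convert it to a form
    # the program can work with (text tokens become numbers).
    numbers = [float(token) for token in raw_input.split()]

    # Storage: hold the data, and later the result, while the job runs.
    memory = {"data": numbers, "result": None}

    # Processing: perform an operation on the stored data.
    memory["result"] = sum(memory["data"]) / len(memory["data"])

    # Output: present the result in a form people can understand.
    return f"Average of {len(numbers)} values: {memory['result']:.2f}"


print(run_job("4 8 15 16 23 42"))  # Average of 6 values: 18.00
```

In a real computer the "storage" step is handled by primary and secondary storage and the "processing" step by the CPU; here a dictionary and one line of arithmetic stand in for them.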
Input/Output Devices

The input/output (I/O) devices of a computer are not part of the CPU, but are channels for communicating between the external environment and the CPU. Data and instructions are entered into the computer through input devices, and processing results are provided through output devices. Widely used I/O devices are the visual display unit (VDU), storage, printers, keyboards, mice and image-scanning devices.

I/O devices are controlled directly by the CPU or indirectly through special processors dedicated to input and output processing. Generally speaking, I/O devices are subclassified into secondary storage devices (primarily disk and tape drives) and peripheral devices (any input/output device that is attached to the computer).

Central Processing Unit (CPU)

The central processing unit (CPU) is also referred to as a microprocessor because of its small size. The CPU is the center of all computer-processing activities, where all processing is controlled, data are manipulated, arithmetic computations are performed, and logical comparisons are made. The CPU consists of the control unit, the arithmetic-logic unit (ALU), and the primary storage (or main memory).

Arithmetic and Logic Unit (ALU)

The arithmetic-logic unit performs the required arithmetic and comparison, or logic, operations. The ALU adds, subtracts, multiplies, divides, compares, and determines whether a number is positive, negative, or zero. All computer applications are achieved through these six operations. The ALU operations are performed sequentially, based on instructions from the control unit.

Primary Storage

Primary storage, or main memory, stores data and program statements for the CPU. It has four basic purposes:

1. To store data that have been input until they are transferred to the ALU for processing
2. To store data and results during intermediate stages of processing
3. To hold data after processing until they are transferred to an output device
4. To hold program statements or instructions received from input devices and from secondary storage

Control Unit

The control unit reads instructions and directs the other components of the computer system to perform the functions required by the program. It interprets and carries out instructions contained in computer programs, selecting program statements from the primary storage, moving them to the instruction registers in the control unit, and then carrying them out. The control unit does not actually change or create data; it merely directs the data flow within the CPU. The control unit can process only one instruction at a time, but it can execute instructions so quickly (millions per second) that it can appear to do many different things simultaneously.

The series of operations required to process a single machine instruction is called a machine cycle. Each machine cycle consists of the instruction cycle, which sets up circuitry to perform a required operation, and the execution cycle, during which the operation is actually carried out.

Buses

Instructions and data move between computer subsystems and the processor via communications channels called buses. A bus is a channel (or shared data path) through which data are passed in electronic form. Three types of buses link the CPU, primary storage, and the other devices in the computer system:

- The data bus moves data to and from primary storage.
- The address bus transmits signals for locating a given address in primary storage.
- The control bus transmits signals specifying whether to “read” or “write” data to or from a given primary storage address, input device, or output device.

The capacity of a bus, called bus width, is defined by the number of bits it carries at one time. Bus speeds are also important, currently averaging about 133 megahertz (MHz).

Processor Speed

The speed of a chip depends on four things: the clock speed, the word length, the data bus width, and the design of the chip.
The clock, located within the control unit, is the component that provides the timing for all processor operations. The beat frequency of the clock (measured in megahertz [MHz], or millions of cycles per second) determines how many times per second the processor performs operations.

Word length is the number of bits that can be processed at one time by a chip (bits are covered in detail in Unit 5). Chips are commonly labelled as 8-bit, 16-bit, 32-bit, 64-bit, and 128-bit devices. The larger the word length, the faster the chip.

The width of the buses determines how much data can be moved at one time. The wider the data bus (e.g., 64 bits), the faster the chip.

Unit outline

- Input Devices
- Output Devices

Input Devices

Users can command the computer and communicate with it by using one or more input devices. Each input device accepts a specific form of data. For example, keyboards transmit typed characters, and handwriting recognizers “read” handwritten characters. Users want communication with computers to be simple, fast, and error free. Therefore, a variety of input devices fits the needs of different individuals and applications.

Categories and examples:

- Keying devices: punched card reader, keyboard, point-of-sale terminal
- Pointing devices (devices that point to objects on the computer screen): mouse, touch screen, touchpad (or track pad), light pen, joysticks
- Optical character recognition (devices that scan characters): bar code scanner, optical character reader, optical mark reader, wand reader, cordless reader
- Handwriting recognizers: pen
- Voice recognizers (data are entered by voice): microphone
- Other devices: MICR, ATM, digitizers

Keyboard: The most common input device is the keyboard. The keyboard is designed like a typewriter but with many additional special keys.

Mouse: The computer mouse is a handheld device used to point a cursor at a desired place on the screen, such as an icon, a cell in a table, an item in a menu, or any other object.
Once the arrow is placed on an object, the user clicks a button on the mouse, instructing the computer to take some action.

Punched card reader: A card reader such as the IBM 3505 is an electromechanical input device used to read Hollerith cards. Sometimes combined with card punches, as in the IBM 2540 card reader-punch, such devices were almost always attached to a computer but in earlier days could be found as stand-alone duplication or serialization devices.

Joystick: Joysticks are used primarily at workstations that can display dynamic graphics. They are also used in playing video games. The joystick moves and positions the cursor at the desired object on the screen.

Automated Teller Machine: Automated teller machines (ATMs) are interactive input/output devices that enable people to obtain cash, make deposits, transfer funds, and update their bank accounts instantly from many locations. ATMs can handle a variety of banking transactions, including the transfer of funds to specified accounts. One drawback of ATMs is their vulnerability to computer crimes and to attacks made on customers as they use outdoor ATMs.

Point-of-Sale Terminals: Many retail organizations use point-of-sale (POS) terminals. The POS terminal has a specialized keyboard. POS devices increase the speed of data entry and reduce the chance of errors. POS terminals may include many features such as a scanner, printer, voice synthesis and accounting software.

Bar Code Scanner: Bar code scanners scan the black-and-white bars written in the Universal Product Code (UPC). This code specifies the name of the product and its manufacturer (product ID). A computer then finds in the database the price that corresponds to the product’s ID. Bar codes are especially valuable in high-volume processing where keyboard entry is too slow and/or inaccurate.
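The price lookup described for the bar code scanner can be sketched in a few lines of Python. This is a minimal illustration, not how any particular POS system works: the `PRODUCTS` table, the product codes, names and prices, and the `checkout` function are all made up for the example. Prices are held as whole numbers (the smallest currency unit) so the totals stay exact.

```python
# Hypothetical product database keyed by the scanned product ID.
# Codes, names and prices are invented for illustration only.
PRODUCTS = {
    "036000291452": {"name": "Tissues", "price": 249},
    "012345678905": {"name": "Notebook", "price": 120},
}


def checkout(scanned_codes):
    """Look up each scanned code and return (total price, unknown codes)."""
    total = 0
    unknown = []
    for code in scanned_codes:
        product = PRODUCTS.get(code)  # database lookup by product ID
        if product is None:
            unknown.append(code)      # would need manual keying at the till
        else:
            total += product["price"]
    return total, unknown


total, unknown = checkout(["036000291452", "012345678905", "036000291452"])
print(total, unknown)  # 618 []
```

The scanner only supplies the product ID; the price lives in the database, which is why a shop can change a price without reprinting the bar code.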
Optical Character Reader: With an optical character reader (OCR), source documents such as reports, typed manuscripts, and books can be entered directly into a computer without the need for keying. An OCR converts text and images on paper into digital form and stores the data on disk or other storage media. OCRs are available in different sizes and for different types of applications.

Magnetic Ink Character Reader: Magnetic ink character readers (MICRs) read information printed on checks in magnetic ink. This information identifies the bank and the account number. On a canceled check, the amount is also readable after it is added in magnetic ink.

Output Devices

The output generated by a computer can be transmitted to the user via several devices and media. The presentation of information is extremely important in encouraging users to embrace computers.

Monitors: The data entered into a computer can be made visible on the computer monitor, which is basically a video screen that displays both input and output. Monitors come in different sizes, ranging from inches to several feet, and in different colors. The major benefit is the interactive nature of the device.

Impact Printers: Like typewriters, impact printers use some form of striking action to press a carbon or fabric ribbon against paper to create a character. The most common impact printers are the dot matrix, daisy wheel, and line printers. Line printers print one line at a time; therefore, they are faster than printers that print one character at a time. Impact printers are slow and noisy, cannot do high-resolution graphics, and are often subject to mechanical breakdowns.

Nonimpact Printers: Nonimpact printers overcome the deficiencies of impact printers. Laser printers are higher-speed, high-quality devices that use laser beams to write information on photosensitive drums, whole pages at a time; the paper then passes over the drum and picks up the image with toner.
Thermal printers create whole characters on specially treated paper that responds to patterns of heat produced by the printer. Ink-jet printers shoot tiny dots of ink onto paper. Sometimes called bubble jet, they are relatively inexpensive and are especially suited for low-volume graphical applications when different colors of ink are required.

Plotters: Plotters are printing devices that use computer-driven pens to create high-quality black-and-white or color graphic images such as charts, graphs, and drawings. They are used in complex, low-volume situations such as engineering and architectural drawing, and they come in different types and sizes.

Unit outline

- Main Memory
- Secondary Storage

Memory

In computing and telecommunications, a bit is a basic unit of information storage and communication; it is the maximum amount of information that can be stored by a device or other physical system that can normally exist in only two distinct states. These states are often interpreted (especially in the storage of numerical data) as the binary digits 0 and 1. They may also be interpreted as logical values, either "true" or "false", or as two settings of a flag or switch, either "on" or "off".

Byte

A byte is an ordered collection of bits, with each bit denoting a single binary value of 1 or 0. The byte most often consists of 8 bits in modern systems; however, the size of a byte can vary and is generally determined by the underlying computer operating system or hardware.

Historically, byte size was determined by the number of bits required to represent a single character from a Western character set. Its size was generally determined by the number of possible characters in the supported character set and was chosen to be a divisor of the computer's word size.

Word

A group of bytes is called a word. Word size depends on the architecture platform (e.g., 32-bit machine, 64-bit machine). A 32-bit word contains 4 bytes; a 64-bit word contains 8 bytes.
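The relationships between bits, bytes and words can be checked with a little arithmetic. The sketch below assumes the common 8-bit byte mentioned above; the helper names `distinct_values`, `bytes_per_word` and `as_bits` are invented for this example, and the character code for "A" is the one Python reports through `ord` (65, the same value as in ASCII).

```python
def distinct_values(bits):
    """Number of different patterns that a group of `bits` bits can hold."""
    return 2 ** bits


def bytes_per_word(word_bits, byte_bits=8):
    """How many bytes make up one word, assuming 8-bit bytes by default."""
    return word_bits // byte_bits


def as_bits(value, width=8):
    """Show a non-negative integer as a fixed-width string of binary digits."""
    return format(value, f"0{width}b")


print(distinct_values(1))   # 2   (one bit: 0 or 1)
print(distinct_values(8))   # 256 (one 8-bit byte)
print(bytes_per_word(32))   # 4
print(bytes_per_word(64))   # 8
print(as_bits(ord("A")))    # 01000001 (the character "A" stored in one byte)
```

Each extra bit doubles the number of patterns, which is why one 8-bit byte is enough to give every character of a small Western character set its own code.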
In a 32-bit machine, everything will be 32 bits wide (all registers, buses, numbers). The wider the word, the higher the processing power.

Memory

There are two categories of memory: the register, which is part of the CPU and is very fast, and the internal memory chips, which reside outside the CPU and are slower. A register is circuitry in the CPU that allows for the fast storage and retrieval of data and instructions during processing. The internal memory comprises two types of storage space, RAM and ROM, and is used to store data just before they are processed by the CPU.

Random Access Memory (RAM)

RAM is the place in which the CPU stores the instructions and data it is processing. The larger the memory area, the larger the programs that can be stored and executed. With newer operating system software, more than one program may be operating at a time, each occupying a portion of RAM. Most personal computers as of 2010 needed 3 to 4 gigabytes of RAM to process “multimedia” applications, which combine sound, graphics, animation, and video and thus require more memory.

The advantage of RAM is that it is very fast in storing and retrieving any type of data, whether textual, graphical, sound, or animation-based. Its disadvantages are that it is relatively expensive and volatile. This volatility means that all data and programs stored in RAM are lost when the power is turned off. To lessen this potential loss of data, many of the newer application programs perform periodic automatic “saves” of the data.

Many software programs are larger than the internal, primary storage (RAM) available to store them. To get around this limitation, some programs are divided into smaller blocks, with each block loaded into RAM only when necessary. However, depending on the program, continuously loading and unloading blocks can slow down performance considerably, especially since secondary storage is so much slower than RAM.
As a compromise, some architectures use high-speed cache memory as temporary storage for the most frequently used blocks.

Types of RAM

Dynamic random access memories (DRAM) are the most widely used RAM chips. They are called dynamic because they need to be recharged and refreshed hundreds of times per second in order to retain the information stored in them; like all RAM, they are volatile.

Synchronous DRAM (SDRAM) is a relatively new and different kind of RAM. SDRAM is rapidly becoming the new memory standard for modern PCs. The reason is that its synchronized design permits support for the much higher bus speeds that have started to enter the market.

Static random access memory (SRAM) is random-access memory in which each bit of storage is a bistable circuit, commonly consisting of cross-coupled inverters. It is called "static" because it will retain a value as long as power is supplied, unlike DRAM, which must be regularly refreshed. It is, however, still volatile, i.e. it will lose its contents when the power is switched off, in contrast to ROM. SRAM is usually faster than DRAM, but since each bit requires several transistors (about six), you can fit fewer bits of SRAM in the same area. It usually costs more per bit than DRAM and so is used for the most speed-critical parts of a computer (e.g. cache memory) or other circuit.

Read Only Memory (ROM)

ROM is that portion of primary storage that cannot be changed or erased. ROM is nonvolatile; that is, the program instructions are continually retained within the ROM, whether power is supplied to the computer or not. ROM is necessary to users who need to be able to restore a program or data after the computer has been turned off or, as a safeguard, to prevent a program or data from being changed. For example, the instructions needed to start, or “boot,” a computer must not be lost when it is turned off.

Types of ROM

Programmable read-only memory (PROM) is a memory chip on which a program can be stored.
But once the PROM has been used, you cannot wipe it clean and use it to store something else. Like ROMs, PROMs are nonvolatile.

Erasable programmable read-only memory (EPROM) is a special type of PROM that can be erased by exposing it to ultraviolet light.

Stored Program Concept

A stored-program digital computer is one that keeps its programmed instructions, as well as its data, in read-write, random-access memory (RAM). Stored-program computers were an advancement over the program-controlled computers of the 1940s, such as the Colossus and the ENIAC, which were programmed by setting switches and inserting patch leads to route data and control signals between various functional units. In the vast majority of modern computers, the same memory is used for both data and program instructions.

Secondary Storage

Secondary storage is separate from primary storage and the CPU, but directly connected to it. An example would be the 3.5-inch disk you place in your PC’s A drive. It stores data in a format that is compatible with data stored in primary storage, but secondary storage provides the computer with vastly increased space for storing and processing large quantities of software and data.

Primary storage is volatile, contained in memory chips, and very fast in storing and retrieving data. In contrast, secondary storage is nonvolatile, uses many different forms of media that are less expensive than primary storage, and is relatively slower than primary storage. Secondary storage media include magnetic tape, magnetic disk, magnetic diskette, optical storage, and digital videodisk.

Magnetic Tape

Magnetic tape is kept on a large reel or in a small cartridge or cassette. Today, cartridges and cassettes are replacing reels because they are easier to use and access. The principal advantages of magnetic tape are that it is inexpensive, relatively stable, and long lasting, and that it can store very large volumes of data.
Magnetic tape is excellent for backup or archival storage of data and can be reused. The main disadvantage of magnetic tape is that it must be searched from the beginning to find the desired data. The tape itself is fragile and must be handled with care. Magnetic tape is also labour intensive to mount and dismount in a mainframe computer.

Magnetic Drums

Drum memory is a magnetic data storage device and was an early form of computer memory, widely used in the 1950s and into the 1960s. For many machines, a drum formed the main working memory, with data and programs being loaded on to or off the drum using media such as paper tape or punch cards. Drums were so commonly used for the main working memory that these computers were often referred to as drum machines.

Drums were later replaced as the main working memory by core memory and a variety of other systems, which were faster because they had no moving parts and which lasted until semiconductor memory entered the scene.

A drum is a large metal cylinder that is coated on the outside surface with a ferromagnetic recording material. A row of read-write heads runs along the long axis of the drum, one for each track.

Magnetic Disk

Magnetic disks, also called hard disks, alleviate some of the problems associated with magnetic tape by assigning specific address locations for data, so that users can go directly to the address without having to go through intervening locations looking for the right data to retrieve. This process is called direct access.

Most computers today rely on hard disks for retrieving and storing large amounts of instructions and data in a non-volatile and rapid manner. The hard drives of 2010 microcomputers provide 250 to 350 gigabytes of data storage. The speed of access to data on hard-disk drives is a function of the rotational speed of the disk and the speed of the read/write heads.
The read/write heads must position themselves, and the disk pack must rotate until the proper information is located. Advanced disk drives have access speeds of 8 to 12 milliseconds.

Magnetic disks provide storage for large amounts of data and instructions that can be rapidly accessed. Another advantage of disks over tape reels is that a robot can change them. This can drastically reduce the expenses of a data center. The disadvantages of disks are that they are more expensive than magnetic tape and that they are susceptible to “disk crashes.”

In contrast to large, fixed disk drives, one current approach is to combine a large number of small disk drives, each with 10- to 50-gigabyte capacity, developed originally for microcomputers. These devices are called redundant arrays of inexpensive disks (RAID). Because data are stored redundantly across many drives, the overall impact on system performance is lessened when one drive malfunctions. Also, multiple drives provide multiple data paths, improving performance. Finally, because of the manufacturing efficiencies of small drives, the cost of RAID devices is significantly lower than the cost of large disk drives of the same capacity.

Magnetic Diskette

Hard disks are not practical for transporting data or instructions from one personal computer to another. To accomplish this task effectively, developers created the magnetic diskette. (These diskettes are also called “floppy disks,” a name first given to the very flexible 5.25-inch disks used in the 1980s and early 1990s.)

The magnetic diskette used today is a 3.5-inch, removable, somewhat flexible magnetic platter encased in a plastic housing. Unlike the hard disk drive, the read/write head of the diskette drive actually touches the surface of the disk. As a result, the speed of the drive is much slower, with an accompanying reduction in data transfer rate.
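The difference between the sequential access of magnetic tape (searched from the beginning) and the direct access of magnetic disk (go straight to an address) can be illustrated with a small Python sketch. It is a simplified model, not a description of real drive hardware: the tape is modelled as a list that must be scanned in order, the disk as a dictionary that maps an address-like key to its record, and the record names are invented for the example.

```python
# 1000 made-up records, stored in order as (key, data) pairs.
records = [(f"rec{i:03d}", f"payload {i}") for i in range(1000)]


def tape_read(tape, key):
    """Sequential access: scan from the start, counting records passed."""
    for steps, (record_key, data) in enumerate(tape, start=1):
        if record_key == key:
            return data, steps
    return None, len(tape)


def disk_read(index, key):
    """Direct access: jump straight to the record in a single step."""
    return index.get(key), 1


disk_index = dict(records)  # address table: key -> data

print(tape_read(records, "rec900"))     # ('payload 900', 901)
print(disk_read(disk_index, "rec900"))  # ('payload 900', 1)
```

In this model the tape needs 901 steps to reach a record near the end, while the disk needs one step wherever the record is. This is the reason tape suits backup and archival work, where data are read in order, and disks suit work that jumps between records.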
However, the diskettes themselves are very inexpensive, thin enough to be mailed, and able to store relatively large amounts of data. A standard high-density disk holds 1.44 megabytes. Zip disks are larger than conventional floppy disks, and about twice as thick. Disks formatted for zip drives hold 250 megabytes.

Optical Storage

Optical storage devices have extremely high storage density. Typically, much more information can be stored on a standard 5.25-inch optical disk than on a comparably sized floppy (about 400 times more). Since a highly focused laser beam is used to read and write information encoded on an optical disk, the information can be highly condensed. Another advantage of optical storage is that the medium itself is less susceptible to contamination or deterioration.

Digital Video Disk (DVD)

DVD is a new storage disk that offers higher quality and denser storage capabilities. As of 2001, the disk’s maximum storage capacity was 17 gigabytes, which is sufficient for storing about five movies. It includes superb audio (six-track vs. two-track stereo). Like CDs, DVDs come as DVD-ROM (read-only) and DVD-RAM. Rewritable DVD-RAM systems are already on the market, offering a capacity of 4.7 GB.

Flash Memory

Flash memory is a non-volatile computer memory that can be electrically erased and reprogrammed. It is a technology that is primarily used in memory cards and USB flash drives for general storage and transfer of data between computers and other digital products. It is a specific type of EEPROM (Electrically Erasable Programmable Read-Only Memory) that is erased and programmed in large blocks; in early flash the entire chip had to be erased at once.

Unit outline

- Software
- Operating Systems
- Overview of Programming Languages
- System Development Methodology

Software

Software is a term used (in contrast to hardware) to describe all programs that are used in a particular computer installation.
The term is often used to mean not only the programs themselves but also their associated documentation.

Computer hardware cannot perform a single act without instructions. These instructions are known as software or computer programs. Software is at the heart of all computer applications. There are two major types of software: application software and system software.

System Software

Systems software acts primarily as an intermediary between computer hardware and application programs, and knowledgeable users may also directly manipulate it. Systems software provides important self-regulatory functions for computer systems, such as loading itself when the computer is first turned on, as in Windows Professional; managing hardware resources such as secondary storage for all applications; and providing commonly used sets of instructions for all applications to use.

System software is subdivided into:

- Operating systems and control programs
- Translators
- Utilities and service programs
- Database management systems

Operating Systems and Control Programs

Computers are required to give efficient and reliable service without the need for continual intervention by the user. On smaller computer systems, such as microcomputers, the control program may also accept commands typed in by the user. Such a control program is often called a monitor.

On larger computer systems it is common for only part of the monitor to remain in main storage. Other parts of the monitor are brought into memory when required, i.e., there are “resident” and “transient” parts of the monitor. The resident part of the monitor on large systems is often called the kernel, executive or supervisor program.

An operating system is a suite of programs that takes over the operation of the computer to the extent of being able to allow a number of programs to be run on the computer without human intervention by an operator.
Translators
The earliest computer programs were written in the actual language of the computer. Nowadays, however, programmers write their programs in programming languages which are relatively easy to learn. Each program is translated into the language of the machine before being used for operational purposes. This translation is done by computer programs called translators.

Utilities and service programs
Utilities, also called service programs, are system programs that provide a useful service to the user of the computer by providing facilities for performing tasks of a routine nature. Common types of utility programs are:
Sort
Editor
File Copying
Dump
File Maintenance
Tracing and Debugging

Database Management System
The database management system (DBMS) is a complex software system that constructs, expands and maintains the database. It also provides the controlled interface between the user and the data in the database. The DBMS allocates storage to data. It maintains indices so that any required data can be retrieved, and so that separate items of data in the database can be cross-referenced. The structure of a database is dynamic and can be changed as needed. The DBMS provides an interface with user programs. These may be written in a number of different programming languages.

Operating Systems
An operating system, or OS, is a software program that enables the computer hardware to communicate and operate with the computer software. An OS is responsible for the management and coordination of activities and the sharing of the resources of the computer. The operating system acts as a host for computing applications run on the machine. As a host, one of the purposes of an operating system is to handle the details of the operation of the hardware.

Functions of the OS
Processor management - that is, assignment of the processor to the different tasks being performed by the computer system.
Memory management - that is, allocation of main memory and other storage areas to the system programs as well as to user programs and data.
Input/output management - that is, co-ordination and assignment of the different input and output devices while one or more programs are being executed.
File management - that is, the storage of files on various storage devices and their transfer from one storage device to another. It also allows all files to be easily changed and modified through the use of text editors or other file manipulation routines.
Establishment and enforcement of a priority system - that is, it determines and maintains the order in which jobs are to be executed in the computer system.
Automatic transition from job to job as directed by special control statements.
Interpretation of commands and instructions.
Coordination and assignment of compilers, assemblers, utility programs, and other software to the various users of the computer system.
Facilitation of easy communication between the computer system and the computer operator (human). It also establishes data security and integrity.

Types of Operating Systems
Single-program systems
Simple batch systems
Multi-access and time-sharing systems
Real-time systems

Application Software
Application software is a set of computer instructions, written in a programming language. The instructions direct computer hardware to perform specific data or information processing activities that provide functionality to the user. This functionality may be broad, such as general word processing, or narrow, such as an organization’s payroll program. An application program applies a computer to a need, such as increasing the productivity of accountants or improving decisions regarding inventory levels. Application programming is either the creation or the modification and improvement of application software.

Programming Languages
Programming languages are the basic building blocks for all systems and application software.
Programming languages are basically a set of symbols and rules used to write program code. Each language uses a different set of rules and a syntax that dictates how the symbols are arranged so that they have meaning. The characteristics of the languages depend on their purpose. Languages also differ according to when they were developed; today’s languages are more sophisticated than those written in the 1950s and the 1960s.

Levels of Programming Languages
We can choose any language for writing a program according to the need. But a computer executes programs only after they are represented internally in binary form (sequences of 1's and 0's). Programs written in any other language must be translated to the binary representation of the instructions before they can be executed by the computer. Programs written for a computer may be in one of the following categories of languages.

Machine Language
This is a sequence of instructions written in the form of binary numbers, consisting of 1's and 0's, to which the computer responds directly. Machine language was initially referred to as code, although now the term code is used more broadly to refer to any program text. An instruction prepared in any machine language will have at least two parts. The first part is the command or operation, which tells the computer what function is to be performed. Every computer has an operation code for each of its functions. The second part of the instruction is the operand, which tells the computer where to find or store the data that has to be manipulated.

Assembly Language
When we employ symbols (letters, digits or special characters) for the operation part, the address part and other parts of the instruction code, this representation is called an assembly language program. This is considered to be the second generation language. Machine and assembly languages are referred to as low-level languages since the coding for a problem is at the individual instruction level.
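As a rough illustration of the two-part instruction format and of symbolic notation described above, the following Python sketch assumes a made-up 8-bit machine in which the first 4 bits hold the operation code and the last 4 bits hold the operand address. The mnemonics (LOAD, ADD, STORE, HALT), their binary codes and the function names are invented for this example and do not belong to any real processor.

```python
# Hypothetical 8-bit instruction: 4-bit operation code + 4-bit operand address.
# The mnemonics and their codes are assumptions made up for illustration only.
OPCODES = {"LOAD": 0b0001, "ADD": 0b0010, "STORE": 0b0011, "HALT": 0b1111}
MNEMONICS = {code: name for name, code in OPCODES.items()}


def assemble_line(line):
    """Translate one symbolic instruction, e.g. 'ADD 5', into an 8-bit string."""
    parts = line.split()
    opcode = OPCODES[parts[0].upper()]
    # Instructions without an operand (such as HALT) use address 0.
    operand = int(parts[1]) if len(parts) > 1 else 0
    if not 0 <= operand <= 15:
        raise ValueError("operand must fit in 4 bits (0-15)")
    return format((opcode << 4) | operand, "08b")


def decode(word):
    """Split an 8-bit machine instruction into (operation, operand address)."""
    value = int(word, 2)
    return MNEMONICS[value >> 4], value & 0b1111


program = ["LOAD 4", "ADD 5", "STORE 6", "HALT"]
machine_code = [assemble_line(line) for line in program]
for symbolic, binary in zip(program, machine_code):
    print(f"{symbolic:<8} -> {binary}")
```

Here `ADD 5` becomes `00100101`: the left half `0010` is the operation code and the right half `0101` is the address 5. Calling `decode("00100101")` recovers the pair `("ADD", 5)`, which shows that the symbolic and binary forms carry the same information.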
Each machine has its own assembly language, which is dependent upon the internal architecture of the processor. An assembler is a translator which takes its input in the form of an assembly language program and produces machine language code as its output.

High-level Language
The time and cost of writing programs in machine and assembly languages were quite high, and this was the prime motivation for the development of high-level languages. A high-level source program must first be translated into a form the machine can understand. This is done by software called a compiler, which takes the source code as input and produces as output the machine language code of the machine on which it is to be executed. During the process of translation, the compiler reads the source program statement by statement and checks for syntax errors. If there are any errors, the computer generates a print-out of the errors it has detected. This action is known as diagnostics. There is another type of software which also does the translation. This is called an interpreter. The compiler and the interpreter have different approaches to translation: a compiler translates the whole source program before it is run, whereas an interpreter translates and executes the program one statement at a time. The following table lists the differences between a Compiler and an Interpreter.

System Development Methodology
New computer systems frequently replace existing manual systems, and the new systems may themselves be replaced after some time. The process of replacing the old system by the new is often organized into a series of stages. The whole process is called the “system life cycle”. The system life cycle may be regarded as a “framework” upon which the work can be organized and methods applied. The system life cycle is the traditional framework for developing new systems.

Preliminary survey or study
The purpose of this survey is to establish whether there is a need for a new system and, if so, to specify the objectives of the system.
Feasibility study
The purpose of the feasibility study is to investigate the project in sufficient depth to be able to provide information that either justifies the development of the new system or shows why the project should not continue.

Investigation and fact recording
At this stage in the life cycle a detailed study is conducted. The purpose of this study is to fully understand the existing system and to identify the basic information requirements. This requires a contribution from the users of both the existing system and the proposed system.

Analysis
Analysis of the full description of the existing system and of the objectives of the proposed system should lead to the full specification of users’ requirements.

Design
The analysis may lead to a number of possible alternative designs. For example, different combinations of manual and computerized elements may be considered. Once one alternative has been selected, the purpose of the design stage is to work from the requirements specification to produce a system specification.

Implementation
Implementation involves following the details set out in the system specification. Three particularly important tasks are hardware provision, programming and staff training. It is worth observing in passing that the programming task has its own “life cycle” in the form of the various stages in programming, such that analysis and design occur at many different levels.

Maintenance and review
Once a system is implemented and in full operation, it is examined to see if it has met the objectives set out in the original specification.

Hardware and Networking