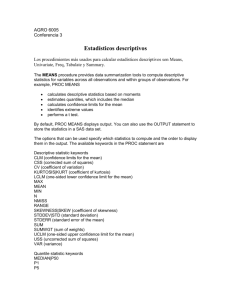

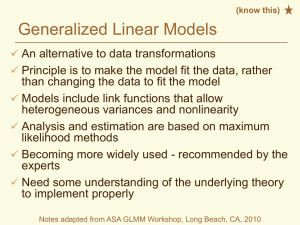

EPIB698D Lecture 3
Raul Cruz-Cano
Spring 2013

Proc Univariate
• The UNIVARIATE procedure provides data summarization on the distribution of numeric variables.

    PROC UNIVARIATE <option(s)>;
        VAR variable-1 ... variable-n;
    RUN;

Options:
• PLOTS: create low-resolution stem-and-leaf, box, and normal probability plots
• NORMAL: request tests for normality

    data blood;
        infile 'C:\blood.txt';
        input subjectID $ gender $ bloodtype $ age_group $ RBC WBC cholesterol;
    run;

    proc univariate data=blood;
        var cholesterol;
    run;

OUTPUT (1)

    The UNIVARIATE Procedure
    Variable: cholesterol

                               Moments
    N                         795    Sum Weights               795
    Mean                201.43522    Sum Observations       160141
    Std Deviation      49.8867157    Variance            2488.6844
    Skewness           -0.0014449    Kurtosis           -0.0706044
    Uncorrected SS       34234053    Corrected SS       1976015.41
    Coeff Variation    24.7656371    Std Error Mean     1.76929947

Moments - Moments are statistical summaries of a distribution.

N - This is the number of valid observations for the variable. The total number of observations is the sum of N and the number of missing values.

Sum Weights - A numeric variable can be specified as a weight variable to weight the values of the analysis variable. The default weight variable is defined to be 1 for each observation. This field is the sum of observation values for the weight variable.

Sum Observations - This is the sum of observation values. If a weight variable is specified, this field will be the weighted sum.
The mean for the variable is the sum of observations divided by the sum of weights.

Std Deviation - Standard deviation is the square root of the variance. It measures the spread of a set of observations. The larger the standard deviation is, the more spread out the observations are.

Variance - The variance is a measure of variability. It is the sum of the squared distances of the data values from the mean, divided by N-1. We don't generally use variance as an index of spread because it is in squared units. Instead, we use standard deviation.

Skewness - Skewness measures the degree and direction of asymmetry. A symmetric distribution such as a normal distribution has a skewness of 0. A distribution that is skewed to the left, which typically happens when the mean is less than the median, has a negative skewness.

Kurtosis -
(1) Kurtosis is a measure of the heaviness of the tails of a distribution. In SAS, a normal distribution has kurtosis 0.
(2) Extremely nonnormal distributions may have high positive or negative kurtosis values, while nearly normal distributions will have kurtosis values close to 0.
(3) Kurtosis is positive if the tails are "heavier" than for a normal distribution and negative if the tails are "lighter" than for a normal distribution.

Uncorrected SS - This is the sum of squared data values (not squared distances from the mean).

Corrected SS - This is the sum of squared distances of the data values from the mean. This number divided by the number of observations minus one gives the variance.

Coeff Variation -
(1) The coefficient of variation is another way of measuring variability.
(2) It is a unitless measure.
(3) It is defined as the ratio of the standard deviation to the mean and is generally expressed as a percentage.
(4) It is useful for comparing variation between different variables.

Std Error Mean -
(1) This is the estimated standard deviation of the sample mean.
(2) It is estimated as the standard deviation of the sample divided by the square root of the sample size.
(3) This provides a measure of the variability of the sample mean.
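The definitions above can be checked against the Moments table with a few lines of arithmetic. The DATA step below is only a sketch: the three input numbers are copied from OUTPUT (1), the variable names (mean_x, var_x, and so on) are made up for illustration, and the results should agree with the printed table only up to rounding.

```sas
/* Sketch: rebuild the derived moments from N, Sum Observations,   */
/* and Corrected SS as printed in OUTPUT (1). Names are arbitrary. */
data _null_;
    n       = 795;          /* N                */
    sum_obs = 160141;       /* Sum Observations */
    css     = 1976015.41;   /* Corrected SS     */

    mean_x  = sum_obs / n;            /* sum of observations / sum of weights */
    var_x   = css / (n - 1);          /* Corrected SS / (N - 1)               */
    std_x   = sqrt(var_x);            /* square root of the variance          */
    cv_x    = 100 * std_x / mean_x;   /* std deviation / mean, in percent     */
    se_x    = std_x / sqrt(n);        /* std deviation / sqrt(sample size)    */
    uss     = css + n * mean_x**2;    /* sum of squared data values           */

    /* Write the results to the SAS log */
    put mean_x= var_x= std_x= cv_x= se_x= uss=;
run;
```

The last assignment uses the identity Uncorrected SS = Corrected SS + N x mean squared, which is why the two sums of squares differ so much when the mean is far from zero.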
OUTPUT (2)

            Location                    Variability
    Mean     201.4352     Std Deviation            49.88672
    Median   202.0000     Variance                     2489
    Mode     208.0000     Range                   314.00000
                          Interquartile Range      71.00000

    NOTE: The mode displayed is the smallest of 2 modes with a count of 12.

Median - The median is a measure of central tendency. It is the middle number when the values are arranged in ascending (or descending) order. It is less sensitive than the mean to extreme observations.

Mode - The mode is another measure of central tendency. It is the value that occurs most frequently in the variable.

Range - The range is a measure of the spread of a variable. It is equal to the difference between the largest and the smallest observations. It is easy to compute and easy to understand.

Interquartile Range - The interquartile range is the difference between the upper quartile (Q3, the 75th percentile) and the lower quartile (Q1, the 25th percentile). It measures the spread of a data set. It is robust to extreme observations.

OUTPUT (3)

    Tests for Location: Mu0=0

    Test           -Statistic-    -----p Value------
    Student's t    t  113.8503    Pr > |t|    <.0001
    Sign           M     397.5    Pr >= |M|   <.0001
    Signed Rank    S    158205    Pr >= |S|   <.0001

Sign -
(1) The sign test is a simple nonparametric procedure to test the null hypothesis regarding the population median.
(2) It is used when we have a small sample from a nonnormal distribution.
(3) The statistic M is defined to be M = (N+ - N-)/2, where N+ is the number of values that are greater than Mu0 and N- is the number of values that are less than Mu0. Values equal to Mu0 are discarded.
(4) Under the hypothesis that the population median is equal to Mu0, the sign test calculates the p-value for M using a binomial distribution.
(5) The interpretation of the p-value is the same as for the t-test. In our example the M-statistic is 397.5 and the p-value is less than 0.0001. We conclude that the median of the variable is significantly different from zero.

Signed Rank -
(1) The signed rank test is also known as the Wilcoxon test. It is used to test the null hypothesis that the population median equals Mu0.
(2) It assumes that the distribution of the population is symmetric.
(3) The Wilcoxon signed rank test statistic is computed based on the rank sum and the numbers of observations that are either above or below Mu0 (the hypothesized median).
(4) The interpretation of the p-value is the same as for the t-test. In our example, the S-statistic is 158205 and the p-value is less than 0.0001. We therefore conclude that the median of the variable is significantly different from zero.

OUTPUT (4)

    Quantiles (Definition 5)

    Quantile      Estimate
    100% Max           331
    99%                318
    95%                282
    90%                267
    75% Q3             236
    50% Median         202
    25% Q1             165
    10%                138
    5%                 123
    1%                  94
    0% Min              17

95% - Ninety-five percent of all values of the variable are equal to or less than this value.

OUTPUT (5)

           Extreme Observations

    ----Lowest----      ----Highest---
    Value      Obs      Value      Obs
       17      829        323      828
       36      492        328      203
       56      133        328      375
       65      841        328      541
       69       79        331      191

                 Missing Values

                             -----Percent Of-----
    Missing                               Missing
      Value      Count      All Obs           Obs
          .        205        20.50        100.00

Extreme Observations - This is a list of the five lowest and five highest values of the variable.

PROC FREQ
• PROC FREQ and PROC MEANS have literally been part of SAS for over 30 years.
• They are probably THE most used of the SAS analytical procedures.
• They provide useful information directly and indirectly and are easy to use, so people run them daily without thinking about them.
• These procedures can facilitate the construction of many useful statistics and data views that are not readily evident from the documentation.
Proc Freq
• PROC FREQ can be used to count frequencies of both character and numeric variables.
• When you have counts for one variable, they are called one-way frequencies.
• When you have two or more variables, the counts are called two-way, three-way, and so on up to n-way frequencies, or simply cross-tabulations.
• Syntax:

    PROC FREQ;
        TABLES variable-combinations;

• To produce one-way frequencies, just put the variable name after "TABLES". To produce cross-tabulations, put an asterisk (*) between the variables.

Proc Freq
• The blood.txt data contain information on 1000 subjects. The variables include: subject ID, gender, blood type, age group, red blood cell count, white blood cell count, and cholesterol.
• Here are the data for the first few subjects:

    1 Female AB Young 7710 7.4  258
    2 Male   AB Old   6560 4.7  .
    3 Male   A  Young 5690 7.53 184
    4 Male   B  Old   6680 6.85 .
    5 Male   A  Young .    7.72 187

• We want to derive frequencies of gender, age group, and blood type.

PROC FREQ options
• NOCOL: suppress the column percentage for each cell
• NOROW: suppress the row percentage for each cell
• NOPERCENT: suppress the percentages in crosstabulation tables, or percentages and cumulative percentages in one-way frequency tables and in list format

Proc Freq

    data blood;
        infile "c:\blood.txt";
        input ID Gender $ Blood_Type $ Age_Group $ RBC WBC cholesterol;
    run;

    proc freq data=blood;
        tables Gender Blood_Type;
        tables Gender * Age_Group * Blood_Type / nocol norow nopercent;
    run;

Continuous Values
• Tabulating a continuous variable (tuition, math SAT score, salary, age, etc.) directly would produce literally hundreds of categories, one for each distinct value.
• You need to transform the numeric variables into categorical variables.
    /* Raw cross-tabulation: one cell per pair of distinct values */
    proc freq data=blood;
        tables RBC*WBC / norow nocol nopercent;
    run;

    /* Look at the quartiles to choose the cut points */
    proc univariate data=blood;
        var RBC WBC;
    run;

    data blood;
        length rbc_group $ 12 wbc_group $ 12;
        set blood;
        if 0 <= RBC < 6375 then rbc_group = "Low";
        else if 6375 <= RBC < 7040 then rbc_group = "Medium Low";
        else if 7040 <= RBC < 7710 then rbc_group = "Medium High";
        /* explicit test so that missing RBC stays blank instead of "High" */
        else if RBC >= 7710 then rbc_group = "High";
        if 0 <= WBC < 4.84 then wbc_group = "Low";
        else if 4.84 <= WBC < 5.52 then wbc_group = "Medium Low";
        else if 5.52 <= WBC < 6.11 then wbc_group = "Medium High";
        /* same guard for missing WBC */
        else if WBC >= 6.11 then wbc_group = "High";
    run;

    proc freq data=blood;
        tables rbc_group*wbc_group;
    run;

PROC FREQ options
• MISSPRINT: display missing value frequencies
• MISSING: treat missing values as nonmissing

    data one;
        input A Freq;
        datalines;
    1 2
    2 2
    . 2
    ;
    run;

    proc freq data=one;
        tables A;
        title 'Default';
    run;

    proc freq data=one;
        tables A / missprint;
        title 'MISSPRINT Option';
    run;

    proc freq data=one;
        tables A / missing;
        title 'MISSING Option';
    run;

PROC FREQ options
• The ORDER= option orders the values of the frequency and crosstabulation table variables according to the specified order, where:
– DATA: orders values according to their order in the input data set
– FORMATTED: orders values by their formatted values
– FREQ: orders values by descending frequency count
– INTERNAL: orders values by their unformatted values

PROC FREQ output
Creates an output data set with frequencies, percentages, and expected cell frequencies.
• OUT=: specify an output data set to contain variable values and frequency counts

    data blood;
        infile "c:\blood.txt";
        input ID Gender $ Blood_Type $ Age_Group $ RBC WBC cholesterol;
    run;

    proc freq data=blood order=freq;
        tables Blood_Type / out=proccount;
    run;

    proc print data=proccount;
    run;

Note: OUT= goes on the TABLES statement, not on the PROC FREQ statement.

PROC FREQ options
• Some of the statistics available through the FREQ procedure include:
– CHISQ provides chi-square tests of independence of each stratum and computes measures of association.
– A significant chi-square (p<.001, for example) is strong evidence that the variables are not independent. The p-value by itself does not measure how strong the dependence is.

    proc freq data=blood;
        tables rbc_group*wbc_group / norow nocol nopercent chisq;
    run;

Proc corr
The CORR procedure is a statistical procedure for numeric random variables that computes correlation statistics. The default correlation analysis includes descriptive statistics, Pearson correlation statistics, and probabilities for each analysis variable.

    PROC CORR options;
        VAR variables;
        WITH variables;
        BY variables;

    proc corr data=blood;
        var RBC WBC cholesterol;
    run;

Proc Corr Output

                                 Simple Statistics
    Variable         N         Mean      Std Dev        Sum      Minimum      Maximum
    RBC            908         7043         1003    6395020         4070        10550
    WBC            916      5.48353      0.98412       5023      1.71000      8.75000
    cholesterol    795    201.43522     49.88672     160141     17.00000    331.00000

N - This is the number of valid (i.e., non-missing) cases used in the correlation. By default, proc corr uses pairwise deletion for missing observations, meaning that a pair of observations (one from each variable in the pair being correlated) is included if both values are non-missing. If you use the nomiss option on the proc corr statement, proc corr uses listwise deletion and omits all observations with missing data on any of the named variables.

Proc Corr

    Pearson Correlation Coefficients
    Prob > |r| under H0: Rho=0
    Number of Observations

                        RBC         WBC    cholesterol
    RBC             1.00000    -0.00203        0.06583
                                 0.9534         0.0765
                        908         833            725

    WBC            -0.00203     1.00000        0.02496
                     0.9534                     0.5014
                        833         916            728

    cholesterol     0.06583     0.02496        1.00000
                     0.0765      0.5014
                        725         728            795

In each cell, the first number is the correlation coefficient, the second is the p-value for H0: Corr=0, and the third is the number of observations.

Pearson Correlation Coefficients - These measure the strength and direction of the linear relationship between two variables. The correlation coefficient can range from -1 to +1, with -1 indicating a perfect negative correlation, +1 indicating a perfect positive correlation, and 0 indicating no linear correlation at all.
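The difference between pairwise and listwise deletion described under N can be seen by running the same analysis twice. This is a minimal sketch that assumes the blood data set created earlier; it uses only the NOMISS option already mentioned in the text.

```sas
/* Pairwise deletion (default): each correlation uses its own N, */
/* so the number of observations differs from cell to cell.      */
proc corr data=blood;
    var RBC WBC cholesterol;
run;

/* Listwise deletion: NOMISS drops every observation that has a  */
/* missing value in RBC, WBC, or cholesterol, so all             */
/* correlations are computed on the same set of subjects.        */
proc corr data=blood nomiss;
    var RBC WBC cholesterol;
run;
```

With NOMISS the Simple Statistics table should report a single common N for all three variables, which makes it easy to see how many subjects are lost to listwise deletion.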
SAS ODS (Output Delivery System)
• ODS is a powerful tool that can enhance the efficiency of statistical reporting and meet the needs of the investigator.
• It creates output objects that can be sent to destinations such as HTML, PDF, RTF (rich text format), or SAS data sets.
• It eliminates the need for the macros that used to convert standard SAS output to a Microsoft Word or HTML document.

Fish Measurement Data
The data set contains 35 fish from the species Bream caught in Finland's lake Laengelmavesi with the following measurements:
• Weight (in grams)
• Length3 (length from the nose to the end of its tail, in cm)
• HtPct (max height, as percentage of Length3)
• WidthPct (max width, as percentage of Length3)

    data Fish1 (drop=HtPct WidthPct);
        input Weight Length3 HtPct WidthPct @@;
        Weight3 = Weight**(1/3);
        Height  = HtPct*Length3/100;
        Width   = WidthPct*Length3/100;
        datalines;
    242.0 30.0 38.4 13.4   290.0 31.2 40.0 13.8   340.0 31.1 39.8 15.1
    363.0 33.5 38.0 13.3   430.0 34.0 36.6 15.1   450.0 34.7 39.2 14.2
    500.0 34.5 41.1 15.3   390.0 35.0 36.2 13.4   450.0 35.1 39.9 13.8
    500.0 36.2 39.3 13.7   475.0 36.2 39.4 14.1   500.0 36.2 39.7 13.3
    500.0 36.4 37.8 12.0     .   37.3 37.3 13.6   600.0 37.2 40.2 13.9
    600.0 37.2 41.5 15.0   700.0 38.3 38.8 13.8   700.0 38.5 38.8 13.5
    610.0 38.6 40.5 13.3   650.0 38.7 37.4 14.8   575.0 39.5 38.3 14.1
    685.0 39.2 40.8 13.7   620.0 39.7 39.1 13.3   680.0 40.6 38.1 15.1
    700.0 40.5 40.1 13.8   725.0 40.9 40.0 14.8   720.0 40.6 40.3 15.0
    714.0 41.5 39.8 14.1   850.0 41.6 40.6 14.9  1000.0 42.6 44.5 15.5
    920.0 44.1 40.9 14.3   955.0 44.0 41.1 14.3   925.0 45.3 41.4 14.9
    975.0 45.9 40.6 14.7   950.0 46.5 37.9 13.7
    ;
    run;

    ods graphics on;
    title 'Fish Measurement Data';
    proc corr data=fish1 nomiss plots=matrix(histogram);
        var Height Width Length3 Weight3;
    run;
    ods graphics off;

Weighted Data
Problem:
[Figure: two charts comparing the shares of minority voters and white voters in (a) the voting population and (b) the people who have a phone.]
Solution: Give more "weight" to the minority people with a telephone.

Weighted Data
Not limited to 2 categories
[Figure: the same two charts (Pct. of Voting Population, Pct. of People who have a phone), each split into Minority/Dem., Minority/Rep., White/Dem., and White/Rep.]
How many categories? As many as there are significant

Proportion
Suppose minority voters are 1/3 of the voting population but only 1/6 of the people with a phone.

    Minority weight:  1/3 = (1/6) x ?   =>   ? = 2
    White weight:     2/3 = (5/6) x ?   =>   ? = 4/5 = 0.8

Needless to say, in reality this is a much more complex issue.

Which weights do we need to use?
• Oversimplified example (don't take seriously)
[Figure: three charts of minority voters vs. white voters: Pct. of People who have a phone, Pct. of Voting Population in 2008, and Pct. of Voting Population in 2010.]

Proportion
Suppose minority voters are 1/3 of the voting population but only 1/6 of the people with a phone.
1. 100 minority + 500 white answer the phone survey.
2. 75 minority will vote for candidate X.
3. 250 white will vote for candidate X.
4. Non-weighted conclusion: 325/600 = 54.17% of the voters will vote for candidate X.
5. Weighted conclusion:
   a) 75 minority = 75% of minority with phone => (.75)*(1/6) = 12.5% of people with phone; * 2 (weight) = 25% of the voting population
   b) 250 white = 50% of white people with phone => (.5)*(5/6) = 41.67% of people with phone; * .8 (weight) = 33.33% of the voting population
   c) 25% + 33.33% = 58.33%

SAS Weighted Mean

    proc means data=sashelp.class;
        var height;
    run;

    proc means data=sashelp.class;
        weight weight;
        var height;
    run;

Both PROC FREQ and PROC CORR also accept weights through the WEIGHT statement.

Another (better?) approach for weighted data
• Experimental design data have all the properties that we learned about in statistics classes.
– The data are independent
– Identically-distributed observations with some known error distribution
– There is an underlying assumption that the data come to us as a finite number of observations from a conceptually infinite population
– Simple random sampling without replacement for the sample data
• Sample survey data:
– The sample survey data do not have independent errors.
– The sample survey data may cover many small sub-populations, so we do not expect that the errors are identically distributed.
– The sample survey data do not come from a conceptually infinite population.

PROC MEANS vs PROC SURVEYMEANS
1. We have a target population of 647 receipt amounts, classified by the company region. If we need to perform an audit, and it is too expensive to perform the full audit on every one of the receipts in the company database, then we need to take a sample.
2. We want to sample the larger receipt amounts more frequently.
3. Sample 'proportional to size'. That means that we choose a multiplier variable, and we make our choices based on the size of that multiplier. If the multiplier for receipt A is five times the multiplier for receipt B, then receipt A will be five times more likely to be selected than receipt B.
We will use the receipt amount as the multiplier.

    data AuditFrame (drop=seed);
        seed=18354982;
        /* 600 ordinary receipts: amounts between 10 and 10,000 */
        do i=1 to 600;
            if i<101 then region='H';
            else if i<201 then region='S';
            else if i<401 then region='R';
            else region='G';
            Amount = round(9990*ranuni(seed)+10, 0.01);
            output;
        end;
        /* 17 large receipts: amounts between 10,000 and 20,000 */
        do i=601 to 617;
            if i<603 then region='H';
            else if i<606 then region='S';
            else if i<612 then region='R';
            else region='G';
            Amount = round(10000*ranuni(seed)+10000, 0.01);
            output;
        end;
        /* 30 small receipts: amounts between 1 and 10 */
        do i=618 to 647;
            if i<628 then region='H';
            else if i<638 then region='S';
            else if i<642 then region='R';
            else region='G';
            Amount = round(9*ranuni(seed)+1, 0.01);
            output;
        end;
    run;

PROC MEANS vs PROC SURVEYMEANS
We can perform this sample selection using PROC SURVEYSELECT:

    proc surveyselect data=AuditFrame out=AuditSample3
                      method=PPS seed=39563462 sampsize=100;
        size Amount;
    run;

This gives us a weighted random sample of size 100. Now we'll just (artificially) create the data set of the audit results. We will have a validated receipt amount for each receipt. Ideally, the validated amount would be exactly equal to the listed amount in every case.

    data AuditCheck3;
        set AuditSample3;
        ValidatedAmt = Amount;
        if region='S' and mod(i,3)=0 then
            ValidatedAmt = round(Amount*(.8+.2*ranuni(1234)), 0.01);
        if region='H' then do;
            if floor(Amount/100)=13 then ValidatedAmt=1037.50;
            if floor(Amount/100)=60 then ValidatedAmt=6035.30;
            if floor(Amount/100)=85 then ValidatedAmt=8565.97;
            if floor(Amount/100)=87 then ValidatedAmt=8872.92;
            if floor(Amount/100)=95 then ValidatedAmt=9750.05;
        end;
        diff = ValidatedAmt - Amount;
    run;

PROC MEANS vs PROC SURVEYMEANS
• The WEIGHT statement in PROC MEANS allows a user to give some data points more emphasis.
• But that isn't the right way to address the weights we have here.
• We built a sample using a specific sample design, and we have sampling weights which have a real, physical meaning.
A sampling weight for a given data point is the number of receipts in the target population which that sample point represents.

The primary difference between the PROC MEANS and PROC SURVEYMEANS calls is the inclusion of the TOTAL= option. PROC SURVEYMEANS allows us to compute a Finite Population Correction Factor and adjust the error estimates accordingly. This factor adjusts for the fact that we already know the answers for some percentage of the finite population, and so we really only need to make error estimates for the remainder of that finite population.

    proc means data=AuditCheck3 mean stderr clm;
        var ValidatedAmt diff;
    run;

    proc means data=AuditCheck3 mean stderr clm;
        var ValidatedAmt diff;
        weight SamplingWeight;
    run;

    proc surveymeans data=AuditCheck3 mean stderr clm total=647;
        var ValidatedAmt diff;
        weight SamplingWeight;
    run;

Are the point estimates different? What about the confidence intervals?

Household Component of the Medical Expenditure Panel Survey (MEPS HC)
• The MEPS HC is a nationally representative survey of the U.S. civilian noninstitutionalized population.
• It collects medical expenditure data as well as information on demographic characteristics, access to health care, health insurance coverage, income, and employment.
• MEPS is cosponsored by the Agency for Healthcare Research and Quality (AHRQ) and the National Center for Health Statistics (NCHS).
• For the comparisons reported here we used the MEPS 2005 Full Year Consolidated Data File (HC-097).
• This is a public use file available for download from the MEPS web site (http://www.meps.ahrq.gov).

• The MEPS is not a simple random sample. Its design includes:
– Stratification
– Clustering
– Multiple stages of selection
– Disproportionate sampling
• The MEPS public use files (such as HC-097) include variables for generating weighted national estimates and for use of the Taylor method for variance estimation. These variables are:
– person-level weight (PERWT05F on HC-097)
– stratum (VARSTR on HC-097)
– cluster/PSU (VARPSU on HC-097)
The stratum and cluster variables are needed for even better estimates of the CI.

Transforming from SAS transport (SSP) format to SAS Dataset (SAS7BDAT)

    LIBNAME PUFLIB 'C:\';
    FILENAME IN1 'C:\H97.SSP';
    PROC XCOPY IN=IN1 OUT=PUFLIB IMPORT;
    RUN;

H97.SAS7BDAT occupies 408MB vs. 257MB for H97.SSP vs. 14MB for H97.ZIP.

PROC SURVEYFREQ
Simple Example

    PROC SURVEYFREQ DATA=PUFLIB.H97;
        TABLES HISPANX*INSCOV05 / ROW;
        WEIGHT PERWT05F;
    RUN;
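The simple example uses only the person-level weight. A version that also declares the stratum and cluster variables listed above might look like the sketch below. It assumes the same PUFLIB.H97 data set and uses the STRATA and CLUSTER statements of PROC SURVEYFREQ; the slides do not show this version, so treat it as an illustration rather than the lecture's own code.

```sas
/* Sketch: same table as the simple example, with the design     */
/* variables declared so that variance estimation can account    */
/* for stratification and clustering.                            */
PROC SURVEYFREQ DATA=PUFLIB.H97;
    STRATA  VARSTR;                  /* stratum on HC-097            */
    CLUSTER VARPSU;                  /* cluster/PSU on HC-097        */
    TABLES  HISPANX*INSCOV05 / ROW;
    WEIGHT  PERWT05F;                /* person-level weight          */
RUN;
```

The weighted percentages should match the simple example, since they depend only on the WEIGHT statement. What is expected to change is the standard errors, and with them the confidence intervals.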