Participants - Texas A&M Supercomputing Facility


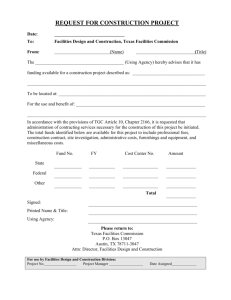

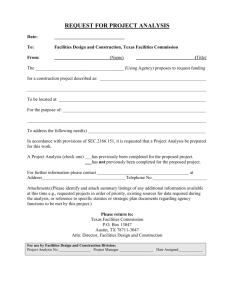

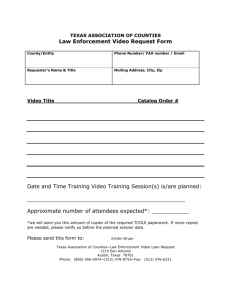

HiPCAT
High Performance Computing Across Texas
(A Consortium of Texas Universities)

Tony Elam
Computer and Information Technology Institute
Rice University
http://citi.rice.edu
elam@rice.edu

Agenda
• Grids
• Opportunity
• Players
• Objective
• Stages and Plans
• Industry
• Conclusion

HiPCAT - TIGRE
• HiPCAT - High Performance Computing Across Texas
  - Share knowledge and high performance computing (HPC) resources in the state of Texas
  - Pursue the development of a competitive Texas Grid
  - Promotion of research, education and competitiveness of Texas industries and universities
• TIGRE - Texas Internet Grid for Research and Education
  - Computation, information, and collaboration
• Participants:
  - Rice University
  - Texas A&M University
  - Texas Tech University
  - University of Houston
  - University of Texas

Grids are “Hot”
DISCOM, SinRG, APGrid, IPG, TeraGrid
Data - Computation - Information - Access - Knowledge

But What Are They?
• Collection of heterogeneous computing resources
  - Computers, data repositories, networks, and tools
  - Varying in power, architectures, and purpose
• Distributed
  - Across the room, campus, city, state, nation, globe
• Complex (Today)
  - Hard to program
  - Hard to manage
  - Interconnected by networks
    - Links may vary in bandwidth
    - Load may vary dynamically
    - Reliability and security concerns
• BUT - Future of computational science!
  - Exciting computational, informational, and collaborative tools

Opportunity/Competition
• Over $100 million per year in U.S. and international investment in Grids for science and engineering applications
  - NSF: Distributed Terascale Facility (DTF)
  - NASA: Information Power Grid (IPG)
  - DOD: Global Information Grid (GIG)
  - European Data Grid
  - NEESGrid – National Earthquake Engineering Simulation Grid
  - GriPhyn – Grid Physics, many others….
• University players (all with major State support)
  - University of Illinois - NCSA
  - University of California San Diego - SDSC
  - Caltech - CACR
  - Many others….
    - Cornell Theory Center (CTC), Ohio Supercomputing Center (OSC), Pittsburgh Supercomputing Center (PSC), North Carolina Supercomputing Center (NCSC), Alabama Supercomputing Authority (ASA)
• Texas is NOT positioned today to compete nationally

National Opportunities Abound
• Funding Opportunities in I/T

| Agency | FY2001 Funding |
|---|---|
| DOC/NIST | $44M |
| DOD | $350M |
| DOE | $667M |
| EPA | $4M |
| HHS/NIH | $233M |
| NASA | $230M |
| NSF | $740M |
| Total | $2.268B |

• One Application Area - Nano

| Agency | FY2001 Funding |
|---|---|
| DOC/NIST | $18M |
| DOD | $110M |
| DOE | $96M |
| EPA | $0M |
| HHS/NIH | $36M |
| NASA | $20M |
| NSF | $217M |
| Total | $497M |

Many application areas of interest to Texas require significant computing, communications and collaborative technologies:
  - biotechnology, environmental, aerospace, petrochemical…

User Poses the Problem

Model or Simulation is Evoked
[Diagram labels: Supercomputer]

Critical Information is Accessed
[Diagram labels: Supercomputer, Database]

Additional Resource is Needed
[Diagram labels: Supercomputer, Database, Supercomputer]

Latest Sensor Data Incorporated
[Diagram labels: Sensor, Sensor, Supercomputer, Database, Supercomputer]

Collaboration on Results
[Diagram labels: Sensor, Sensor, Supercomputer, Database, Supercomputer]

Future Texas Grid

Rice University
• Participants:
  - Jordan Konisky, Vice-provost for Research and Graduate Studies
  - Charles Henry, Vice-provost, Librarian, CIO
  - Tony Elam, Associate Dean of Engineering
  - Moshe Vardi, Director, CITI, Professor CS
  - Ken Kennedy, Director, HiPerSoft and LACSI, Professor CS
  - Robert Fowler, Senior Research Scientist, HiPerSoft and LACSI Assoc. Director
  - Phil Bedient, Professor CEE, Severe Weather
  - Kathy Ensor, Professor Statistics, Computational Finance & Econ
  - Don Johnson, Professor ECE, NeuroSciences
  - Alan Levander, Professor Earth Sciences, Geophysics
  - Bill Symes, Professor CAAM, Seismic Processing - Inversion Modeling
  - Paul Padley, Asst. Professor Physics & Astronomy - High Energy Physics
• Computational systems
  - Compaq Alpha Cluster (48 processors)
  - Future Intel IA-64 Cluster
• Network connectivity - Internet2 access
• Visualization systems - ImmersaDesks

Texas A&M University
• Participants:
  - Richard Ewing, Vice-provost Research
  - Pierce Cantrell, Assoc. Provost for Information Technology
  - Spiros Vellas, Assoc. Director for Supercomputing
  - Richard Panetta, Professor Meteorology
  - Michael Hall, Assoc. Director Institute for Scientific Computation, Professor Chemistry
  - Lawrence Rauchwerger, Professor CS
• Computational systems
  - 32 CPU/16GB SGI Origin2000
  - 48 CPU/48GB SGI Origin3000
• Network connectivity - Internet2 access
• Visualization systems - SGI workstations

Texas Tech University
• Participants:
  - Philip Smith, Director HPC Center
  - James Abbott, Assoc. Dir HPCC and Chemical Engineering
  - David Chaffin, TTU HPCC
  - Dan Cooke, Chair CS
  - Chia-Bo Chang, Geosciences
  - Greg Gellene, Chemistry
• Computational systems
  - SGI 56 CPU Origin2000
  - 2 32+ IA 32 Beowulf Clusters
• Network Connectivity – Internet2 access
• Visualization systems – 35 seat Reality Center
  - 8x20 foot screen w/stereo capability
  - 2 Onyx2 graphics pipes

University of Houston
• Participants:
  - Art Vailas, Vice-provost Research
  - Lennart Johnsson, Professor CS
  - Barbara Chapman, Professor CS
  - Kim Andrews, Director High Performance Computing
• Computational systems
  - IBM 64 CPU SP2
  - Linux Clusters (40 dual processors) w/multiple high speed interconnects
  - Sun Center of Excellence – 3 SunFire 6800s (48 processors), 12 SunFire V880
• Network Connectivity – Internet2 access
• Visualization systems
  - Multimedia Theatre/Cave
  - ImmersaDesk

University of Texas
• Participants:
  - Juan Sanchez, Vice President for Research
  - Jay Boisseau, Director of Texas Advanced Computing Center
  - Chris Hempel, TACC Assoc. Director for Advanced Systems
  - Kelly Gaither, TACC Assoc. Director for Scientific Visualization
  - Mary Thomas, TACC Grid Technologies Group Manager
  - Rich Toscano, TACC Grid Technologies Developer
  - Shyamal Mitra, TACC Computational Scientist
  - Peter Hoeflich, Astronomy Department Research Scientist
• Network Connectivity - Internet2 access at OC-3
• Computational systems
  - IBM Regatta-HPC eServers: 4 systems with 16 POWER4 processors each
  - Cray T3E with 272 processors, Cray SV1 with 16 processors
  - Intel Itanium cluster (40 800MHz CPU) & Pentium III cluster (64 1GHz CPU)
• Visualization systems
  - SGI Onyx2 with 24 processors and 6 Infinite Reality 2 graphics pipes
  - 3x1 cylindrically-symmetric power wall, 5x2 large-panel power wall

Our Objectives
• To build a nationally recognized infrastructure in advanced computing and networking that will enhance collaboration among Texas research institutions of higher education and industry, thereby allowing them to compete nationally and internationally in science and engineering
• To enable Texas scientists and engineers in academia and industry to pursue leading edge research in biomedicine, energy and the environment, aerospace, materials science, agriculture, and information technology
• To educate a highly competitive workforce that will allow Texas to assume a leadership role in the national and global economy

Stage 1: Years 1-2
• Establish a baseline capability, prototype system and policies
  - Create a minimum Texas Grid system between participants
    - Computers, storage, network, visualization
  - Port a significant application to this environment, measure and tune the performance of the application
  - Establish policies and procedures for management and use of the Texas Grid
  - Adopt software, tools, and protocols for general submission, monitoring, and benchmarking of jobs submitted to the Texas Grid
  - Adopt collaborative research tools to enhance cooperative research among the Texas universities
  - Provide computational access to machines, data, and tools from any center within the Texas Grid
  - Establish an industrial partnership program for the Texas Grid
  - Provide a statewide forum for training in HPCC
• $5 million
• OC3 to OC12 communications

Stage 2: Years 3-4
• Aggressive pursuit of national funding opportunities
• Grow the Texas Grid community of users
• OC12 to OC48 communications

- Enhance the baseline capability (computers, storage, networks)
- Enable several significant applications on the Texas Grid that demonstrate the power, capability, and usefulness
  - Biomedical, Nanoscience, Environmental, Economic, ...
- Initiate coordinated research program in Grids (s/w and h/w)
  - Telescoping languages, adaptive systems, collaborative environments, and innovative development tools
- Offer coordinated HPCC education across the state (co-development)
- Add additional universities
- Add industrial partners
- Explore regional partnerships

• $5 million for cost share only - core institutions
• $1 million infrastructure for new partners/users

Stage 3: Years 5-6
• Establish the regional Grid
  - NASA Johnson Space Center (JSC)
  - DOE Los Alamos National Laboratory (LANL)
  - NOAA National Severe Storm Laboratory (NSSL)
• Continue to grow the Texas Grid community of users
• Texas Grid in full “production”
  - Web accessibility
  - Educational Grid for computational science and engineering
  - Virtual community and laboratory for scientists and engineers
• Win “the” major national computational center competition
• $1-4 million infrastructure enhancement for new and existing partners/users
• $5 million for cost share only

Industrial Buy-In
• Computing Industry
  - IBM
  - HP/Compaq
  - Intel
  - Microsoft
  - Sun
  - SGI
  - Dell
• Applications
  - Boeing
  - Lockheed-Martin
  - Schlumberger
• Communications
  - Quest, SWBell

Conclusion
• Texas is a “high technology” state
  - The Texas Grid is needed for the state to remain competitive in the future
    - Computational science and engineering
    - Microelectronics and advanced materials (nanotechnology)
    - Medicine, bioengineering and bioinformatics
    - Energy and environment
    - Defense, aerospace and space
• Five major Texas universities in agreement and collaboration!
  - Meeting w/representatives of each institution
  - Written proposal submitted for consideration
• Request the TIF Board consider the project
• Help position the HiPCAT consortium for national competitions