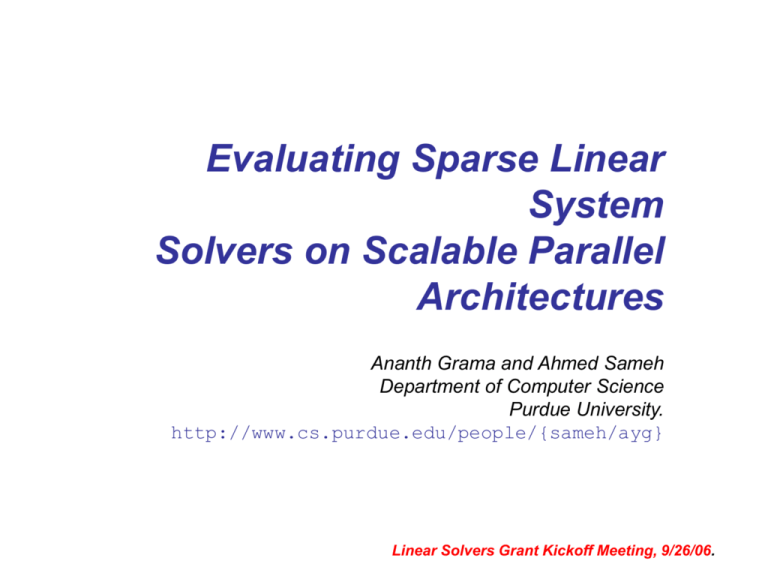

Evaluating Sparse Linear System Solvers on

advertisement

Evaluating Sparse Linear

System

Solvers on Scalable Parallel

Architectures

Ananth Grama and Ahmed Sameh

Department of Computer Science

Purdue University.

http://www.cs.purdue.edu/people/{sameh/ayg}

Linear Solvers Grant Kickoff Meeting, 9/26/06.

Evaluating Sparse Linear System Solvers on Scalable

Parallel Architectures

Project Overview

• Identify sweet spots in algorithmarchitecture-programming model space for

efficient sparse linear system solvers.

• Design a new class of highly scalable

sparse solvers suited to petascale HPC

systems.

• Develop analytical frameworks for

performance prediction and projection.

Methodology

• Design generalized sparse solvers (direct,

iterative, and hybrid) and evaluate their

scaling/communication characteristics.

• Evaluate architectural features and their

impact on scalable solver performance.

• Evaluate performance and productivity

aspects of programming models -- PGAs

(CAF, UPC) and MPI.

Challenges and Impact

Milestones / Schedule

• Generalizing the space of parallel sparse linear

• Final deliverable: Comprehensive evaluation of

system solvers.

scaling properties of existing (and novel sparse

solvers).

• Analysis and implementation on parallel platforms

• Performance projection to the petascale

•Guidance for architecture and programming

model design / performance envelope.

• Benchmarks and libraries for HPCS.

• Six month target: Comparative performance of

solvers on multicore SMPs and clusters.

• 12-month target: Evaluation on these solvers on

Cray X1, BG, JS20/21, for CAF/UPC/MPI

implementations.

Introduction

• A critical aspect of High-Productivity is the

identification of points/regions in the algorithm/

architecture/ programming model space that are

amenable for implementation on petascale

systems.

• This project aims at identifying such points for

commonly used sparse linear system solvers,

and at developing more robust novel solvers.

• These novel solvers emphasize reduction in

memory/remote accesses at the expense of

(possibly) higher FLOP counts – yielding much

better actual performance.

Project Rationale

• Sparse system solvers govern the overall

performance of many CSE applications on HPC

systems.

• Design of HPC architectures and programming

models should be influenced by their suitability

for such solvers and related kernels.

• Extreme need for concurrency on novel

architectural models require fundamental reexamination of conventional sparse solvers.

Typical Computational Kernels for PDE’s

Integration

Newton Iteration

Linear system solvers

k

k

t

Fluid Structure Interaction

NESSIE – Nanoelectronics

Simulation Environment

Numerical Parallel

Algorithms:

Linear solver (SPIKE),

Eigenpairs solver (TraceMin),

preconditioning strategies,…

3D CNTs-3D Molec. Devices

Transport/Electrostatics

Multi-scale, Multi-physics

Multi-method

3D Si Nanowires

3D III/V Devices

Mathematical

Methodologies:

Finite Element Method,

mode decomposition,

multi-scale, non-linear

numerical schemes,…

2D MOSFETs

Top Gate

ε2=16

18nm

tox=20nm

S

ε1=3.9

CNT channel

D

tc

Gate insulator

Gate

Lc=30nm

E. Polizzi (1998-2005)

Simulation, Model Reduction and

Real-Time Control of Structures

Fluid-Solid Interaction

Project Goals

• Develop generalizations of direct and iterative

solvers – e.g. the Spike polyalgorithm.

• Implement such generalizations on various

architectures (multicore, multicore SMPs,

multicore SMP aggregates) and programming

models (PGAs, Messaging APIs)

• Analytically quantify performance and project to

petascale platforms.

• Compare relative performance, identify

architecture/programming model features, and

guide algorithm/ architecture/ programming

model co-design.

Background

• Personnel:

– Ahmed Sameh, Samuel Conte Professor of Computer

Science, has worked on development of parallel

numerical algorithms for four decades.

– Ananth Grama, Professor and University Scholar, has

worked both on numerical aspects of sparse solvers,

as well as analytical frameworks for parallel systems.

– (To be named – Postdoctoral Researcher)* will be

primarily responsible for implementation and

benchmarking.

*We have identified three candidates for this position and will shortly be hiring one

of them.

Background…

• Technical

– We have built extensive infrastructure for

parallel sparse solvers – including the Spike

parallel toolkit, augmented-spectral ordering

techniques, and multipole-based

preconditioners

– We have diverse hardware infrastructure,

including Intel/AMP multicore SMP clusters,

JS20/21 Blade servers, BlueGene/L, Cray X1.

Background…

• Technical…

– We have initiated installation of Co-Array

Fortran and Unified Parallel C on our

machines and porting our toolkits to these

PGAs.

– We have extensive experience in analysis of

performance and scalability of parallel

algorithms, including development of the

isoefficiency metric for scalability.

Technical Highlights

The SPIKE Toolkit

SPIKE: Introduction

after RCM reordering

• Engineering problems usually produce large sparse linear systems

• Banded (or banded with low-rank perturbations) structure is often

obtained after reordering

• SPIKE partitions the banded matrix into a block tridiagonal form

• Each partition is associated with one CPU, or one node

multilevel parallelism

SPIKE: Introduction…

SPIKE: Introduction …

AX=F SX=diag(A1-1,…,Ap-1) F

Reduced system ((p-1) x 2m)

V1

W2

V2

V3

W3

W4

Retrieve solution

A(n x n)

Bj, Cj (m x m), m<<n

SPIKE: A Hybrid Algorithm

Different choices depending on the properties of the matrix and/or platform

architecture

• The spikes can be

computed:

– Explicitly (fully or partially)

– On the Fly

– Approximately

• The diagonal blocks can be

solved:

– Directly (dense LU, Cholesky,

or sparse counterparts)

– Iteratively (with a

preconditioning strategy)

• The reduced system can be

solved:

– Directly (Recursive SPIKE)

– Iteratively (with a

preconditioning scheme)

– Approximately (Truncated

SPIKE)

The SPIKE algorithm

Hierarchy of Computational Modules

(systems dense within the band)

Level Description

3

SPIKE

2

LAPACK blocked algorithms

1

0

Primitives for banded matrices

(our own)

BLAS3 (matrix-matrix primitives)

SPIKE versions

Algorithm

E

R

T

F

Factorization

Explicit

Recursive

Truncated

on the Fly

P

LU w/

pivoting

Explicit generation of

spikes- reduced system

is solved iteratively with

a preconditioner.

Explicit generation of

spikes- reduced system

is solved directly using

recursive SPIKE

L

LU w/o

pivoting

Explicit generation of

spikes- reduced system

is solved iteratively with

a preconditioner.

Explicit generation of

spikes- reduce system is

solved directly using

recursive SPIKE

U

LU and UL

w/o pivot.

A

alternate

LU / UL

Explicit generation of

spikes using new

partitioning- reduced

system is solved

iteratively with a

preconditioner.

Implicit generation of

reduced system which

is solved on-the-fly

using an iterative

method.

Truncated generation

of spike tips: Vb is

exact, Wt is approx.reduced system is

solved directly

Implicit generation of

reduced system which

is solved on-the-fly

using an iterative

method.

Truncated generation

of spike tips: Vb, Wt are

exact- reduced system

is solved directly

Implicit generation of

reduced system which

is solved on-the-fly

using an iterative

method with precond.

Truncated generation

of spikes using new

partitioning- reduced

system is solved

directly

SPIKE Hybrids

1.

SPIKE versions

R = recursive

F = on-the-fly

2.

E = explicit

T = truncated

Factorization

No pivoting:

L = LU

U = LU & UL

A = alternate LU & UL

Pivoting:

P = LU

3.

Solution improvement:

–

–

–

0

2

3

direct solver only

iterative refinement

outer Bicgstab iterations

SPIKE “on-the-fly”

• Does not require generating the spikes explicitly

Ideally suited for banded systems with large sparse

bands

• The reduced system is solved iteratively

with/without a preconditioning strategy

I E1 0

F

I

0

G

2

2

H2 0 I E2 0

S

0

0

F

I

G

3

3

H3 0 I E3

0 F4 I

0

Ei 0, I Ai1 Bi

I

0

Gi I ,0Ai1 Bi

I

I

Fi I ,0Ai1 Ci

0

I

H i 0, I Ai1 Ci

0

Numerical Experiments

(dense within the band)

– Computing platforms

• 4 nodes Linux Xeon cluster

• 512 processor IBM-SP

– Performance comparison w/

LAPACK, and ScaLAPACK

SPIKE: Scalability

b=401; RHS=1;

IBM-SP

Spike (RL0)

Speed improvement Spike vs Scalapack

# procs.

N=0.5 M

8

16

32

64

128

256

512

7.2

8.2

7.9

7.0

6.0

5.0

4.1

8.4

8.2

7.7

6.9

5.7

4.8

8.3

8.0

7.6

6.0

5.4

8.2

8.0

7.4

6.4

N=1M

N=2M

N=4M

Tscal/Tspike

9

8

0.5 M

1M

2M

3M

7

6

5

4

8

16

32

64

128

256

# processors

512

SPIKE partitioning

Partitioning -- 1

A1

Processors

B1

C2

A2 B

C3

2

A3 B

C4

3

A4

Partitioning -- 2

A1

B1

C2

A2

C3

B2

A3

1

2

3

4

Processors

1

2,3

4

- 4 processor example

Factorizations (w/o pivoting)

LU

LU and UL

LU and UL

UL

Factorizations (w/o pivoting)

LU

UL (p=2); LU (p=3)

UL

SPIKE: Small number of processors

4-node Xeon Intel Linux

cluster with infiniband

interconnection - Two 3.2

Ghz processors per node; 4

GB of memory/ node; 1 MB

cache; 64-bit arithmetic.

Intel fortran, Intel MPI

Intel MKL libraries for

LAPACK, SCALAPACK

Speed-up / LAPACK

• ScaLAPACK needs at least 4-8 processors to perform

as well as LAPACK.

• SPIKE benefits from the LU-UL strategy and realizes

speed improvement over LAPACK on 2 or more

5

processors. 4.5

4

3.5

3

2.5

2

1.5

1

0.5

0

n=960,000

b=201

LAPACK

ScaLAPACK

SPIKE Truncated

SPIKE-new

System is diag.

dominant

1 CPU 2 CPU 4 CPU 8 CPU

General sparse systems

• Science and engineering

problems often produce large

sparse linear systems

• Banded (or banded with lowrank perturbations) structure is

often obtained after reordering

After RCM reordering

• While most ILU preconditioners depend on reordering strategies

that minimize the fill-in in the factorization stage, we propose to:

– extract a narrow banded matrix, via a reordering-dropping strategy,

to be used as a preconditioner.

– make use of an improved SPIKE “on-the-fly” scheme that is

ideally suited for banded systems that are sparse within the band.

Sparse parallel direct solvers and

banded systems

N=432,000, b= 177, nnz= 7, 955, 116, fill-in of the band: 10.4%

PARDISO

Reordering

Factorization

Solve

1 CPU- (1 Node)

19.0

4.13

0.83

2 CPU- (1 Node)

18.3

3.11

0.83

SuperLU

Reordering

Factorization

Solve

1 CPU - (1 Node)

10.3

17*

8.4*

2 CPU- (2 Nodes)

8.2

37*

12.5*

4 CPU- (4 Nodes)

8.2

41*

16.7*

8 CPU- (4 Nodes)

7.6

178*

15.9*

MUMPS

Reordering

Factorization

Solve

1 CPU - (1 Node)

16.2

6.3

0.8

2 CPU- (2 Nodes)

17.2

4.2

0.6

4 CPU- (4 Nodes)

17.8

3

0.55

8 CPU- (4 Nodes)

17.7

20*

1.9*

* Memory swap

–too much fill-in

Multilevel Parallelism: SPIKE calling MKLPARDISO for banded systems that are sparse

within the band

SPIKE

A1

B1

C2

Node 1

A2

Node 3

Node 4

B2

C3

A3

B3

C4

Node 2

Pardiso Pardiso Pardiso Pardiso

A4

This SPIKE hybrid scheme exhibits better performance

than other parallel direct sparse solvers used alone.

SPIKE-MKL ”on-the-fly”

for systems that are sparse within the band

N=432,000, b= 177, nnz= 7, 955, 116, sparsity of the band: 10.4%

MUMPS: time (2-nodes) = 21.35 s; time (4-nodes) = 39.6 s

For narrow banded systems, SPIKE will consider the matrix dense within the band.

Reordering schemes for minimizing the bandwidth can be used if necessary.

N=471,800, b= 1455, nnz= 9, 499, 744, sparsity of the band: 1.4%

Good scalability using “on-the-fly” SPIKE scheme

A Preconditioning Approach

1. Reorder the matrix to bring most of the

elements within a band via

•

•

HSL’s MC64 (to maximize sum or product of the

diagonal elements)

RCM (Reverse Cuthill-McKee) or MD (Minimum Degree)

2. Extract a band from the reordered matrix to

use as preconditioner.

3. Use an iterative method (e.g. Bicgstab) to

solve the linear system (outer solver).

4. Use SPIKE to solve the system involving the

preconditioner (inner solver).

Matrix DW8192, order N = 8192, NNZ = 41746,

Condest (A) = 1.39e+07,

Square Dielectric Waveguide

Sparsity = 0.0622% , (A+A’) indefinite

GMRES + ILU preconditioner

vs. Bicgstab with banded

preconditioner

• Gmres w/o precond:

– Gmres + ILU (no fill-in):

– Gmres + ILU (1 e-3):

– Gmres + ILU (1 e-4):

• NNZ(L) = 612,889

• NNZ(U) = 414,661

>5000 iterations

Fails

Fails

16 iters., ||r|| ~ 10-5

• Spike - Bicgstab:

– preconditioner of bandwidth = 70

– 16 iters., ||r|| ~ 10-7

• NNZ = 573,440

Comparisons on a 4-node Xeon

Linux Cluster (2 CPUs/node)

• SuperLU:

– 1 node -3.56 s

– 2 nodes -1.87 s

– 4 nodes -3.02 s (memory limitation)

• MUMPS:

– 1 node -0.26 s

– 2 nodes -0.36 s

Matrix DW8192

– 4 nodes -0.34 s

• SPIKE – RL3 (bandwidth = 129):

Matrix DW8192

– 1 node -0.28 s

– 2 nodes -0.27 s

– 4 nodes -0.20 s

Sparse Matvec kernel

– Bicgstab required 6 iterations only

– Norm of rel. res. ~ 10-6

Sparse

Matvec

kernel

needs

fine-tuning

needs fine-tuning

Matrix: BMW7st_1

(stiffness matrix)

Order N =141,347, NNZ = 7,318,399

after RCM reordering

Comparisons on a 4-node Xeon

Linux Cluster (2 CPUs/node)

• SuperLU:

– 1 node

– 2 nodes

– 4 nodes

• MUMPS:

– 1 node

– 2 nodes

– 4 nodes

----

26.56 s

21.17 s

45.03 s

----

09.71 s

08.03 s

08.05 s

• SPIKE – TL3 (bandwidth = 101):

–

–

–

–

–

1 node

-02.38 s

2 nodes

-01.62 s

4 nodes

-01.33 s

Bicgstab required 5 iterations only

Norm of rel. res. ~ 10-6

Matrix:

BMW7st_1

is diag. dominant

Sparse Matvec kernel

needs fine-tuning

Reservoir Modeling

Bicgstab + ILU (‘0’, fill-in)

NNZ = 50,720

Matrix after RCM reordering

Bicgtab + banded preconditioner

bandwidth = 61; NNZ = 12,464

Driven Cavity Example

• Square domain: 1 x, y 1

B.CS.:

ux = uy = 0

on x, y = 1 ; x = 1

ux = 1, uy = 0

on y = 1

• Picard’s iterations; Q2/Q1 elements: Linearized

equations (Oseen problem)

• G0

• E = As = (A + AT)/2

• viscosity: 0.1, 0.02, 0.01,0.002

Linear system for 128 x 128 grid

after spectral reordering

MA48 as a preconditioner for

GMRES on a uniprocessor

Droptol

# of iter.

T(factor.)

T(GMRES iters.)

nnz(L+U)

final residual

v

0

2

142.7

0.3 7,195,157

2.07E-13 1/50

1.00E-04

24

115.5

1.4 2,177,181

2.83E-09 1/50

1.00E-03

248

109.6

17.3 1,611,030

5.11E-08 1/50

** for drop tolerance = .0001, total time ~ 117 sec.

** No convergence for a drop tolerance of 10-2

Spike – Pardiso for solving

systems involving the banded

preconditioner

• On 4 nodes (8 CPU’s) of a Xeon-Intel cluster:

–

–

–

–

bandwidth of extracted preconditioner = 1401

total time

= 16 sec.

# of Gmres iters.

= 131

2-norm of residual = 10-7

• Speed improvement over sequential

procedure with drop tolerance = .0001:

– 117/16 ~ 7.3

Technical Highlights…

• Analysis of Scaling Properties

– In early work, we developed the Isoefficiency

metric for scalability.

– With the likely scenario of utilizing up to 100K

processing cores, this work becomes critical.

– Isoefficiency quantifies the performance of a

parallel system (a parallel program and the

underlying architecture) as the number of

processors is increased.

Technical Highlights…

• Isoefficiency Analysis

– The efficiency of any parallel program for a

given problem instance goes down with

increasing number of processors.

– For a family of parallel programs (formally

referred to as scalable programs), increasing

the problem size results in an increase in

efficiency.

Technical Highlights…

• Isoefficiency is the rate at which problem

size must be increased w.r.t. number of

processors, to maintain constant

efficiency.

• This rate is critical, since it is ultimately

limited by total memory size.

• Isoefficiency is a key indicator of a

program’s ability to scale to very large

machine configurations.

• Isoefficiency analysis will be used

extensively for performance projection and

scaling properties.

Architecture

• We target the following currently available architectures

–

–

–

–

IBM JS20/21 and BlueGene/L platforms

Cray X1/XT3

AMD Opteron multicore SMP and SMP clusters

Intel Xeon multicore SMP and SMP clusters

• These platforms represent a wide range of currently

available architectural extremes.

_______________________________________________

Intel, AMD, and IBM JS21 platforms are available locally at Purdue. We intend to get access to the

BlueGene at LLNL and the Cray XT3 at ORNL.

Implementation

• Current implementations are MPI based.

• The Spike tooklit will be ported to

– POSIX and OpenMP

– UPC and CAF

– Titanium and X10 (if releases are available)

• These implementations will be

comprehensively benchmarked across

platforms.

Benchmarks/Metrics

• We aim to formally specify a number of

benchmark problems (sparse systems arising in

nanoelectronics, structural mechanics, and fluidstructure interaction)

• We will abstract architecture characteristics –

processor speed, memory bandwidth, link

bandwidth, bisection bandwidth (some of these

abstractions can be derived from HPCC

benchmarks).

• We will quantify solvers on the basis of wallclock time, FLOP count, parallel efficiency,

scalability, and projected performance to

petascale systems.

Benchmarks/Metrics

•

•

•

Other popular benchmarks such as HPCC do

not address sparse linear solvers in

meaningful ways.

HPCC comprises of seven benchmark tests –

HPL, DGEMM, STREAM, PTRANS,

RandomAccess, FFT, and Communication

bandwidth and latency.

They only peripherally address underlying

performance issues in sparse system solvers.

Progress/Accomplishments

• Implementation of the parallel Spike

polyalgorithm toolkit.

• Incorporation of a number of parallel direct

and preconditioned iterative solvers (e.g.

SuperLU, MUMPS, and Pardiso) into the

Spike toolkit.

• Evaluation of Spike on the IBM/SP, SGI

Altix, Intel Xeon clusters, and Intel

multicore platforms.

Milestones

• Final deliverable: Comprehensive

evaluation of scaling properties of existing

(and new solvers).

• Six month target: Comparative

performance of solvers on multicore SMPs

and clusters.

• Twelve-month target: Comprehensive

evaluation on Cray X1, BG, JS20/21, of

CAF/UPC/MPI implementations.

Financials

• The total cost of this project is

approximately $150K for its one-year

duration.

• The budget primarily accounts for a postdoctoral researcher’s salary/benefits and

minor summer-time for the PIs.

• Together, these three project personnel

are responsible for accomplishing project

milestones and reporting.

Concluding Remarks

• This project takes a comprehensive view of

parallel sparse linear system solvers and their

suitability for petascale HPC systems.

• Its results directly influence ongoing and future

development of HPC systems.

• A number of major challenges are likely to

emerge, both as a result of this project, and from

impending architectural innovations.

Concluding Remarks…

• Architectural innovations on the horizon

– Scalable multicore platforms: 64 to 128 cores on the

horizon

– Heterogeneous multicore: It is likely that cores will be

heterogeneous – some with floating point units,

others with vector units, yet others with

programmable hardware (indeed such chips are

commonly used in cell phones)

– Significantly higher pressure on the memory

subsystem

Concluding Remarks…

• Challenges for programming models

and runtime systems:

– Affinity scheduling is important for

performance – need to specify tasks that must

be co-scheduled (suitable programming

abstractions needed).

– Programming constructs for utilizing

heterogeneity.

Concluding Remarks…

• Challenges for algorithm and application

development

– FLOPS are cheap, memory references are

expensive – explore new families of algorithms that

optimize for (minimize) the latter

– Algorithmic techniques and programming constructs

for specifying algorithmic asynchrony (used to mask

system latency)

– Many of the optimizations are likely to be beyond the

technical reach of applications programmers – need

for scalable library support

– Increased emphasis on scalability analysis