Turing Machines for Dummies

Why representations do matter

Peter van Emde Boas

ILLC-FNWI-Univ. Of Amsterdam

Bronstee.com Software & Services B.V.

SOFSEM 2012 – Jan 25 2012

Špindlerův Mlýn

Czech Republic

Turing Machine

Tape, read/write head, finite control

K: states

Σ: tape symbols

Finite program: P ⊆ (K × Σ) × (K × Σ × {L,0,R})

(q,s,q’,s’,m) ∈ P denotes the instruction:

When reading s in state q, print s’, perform move m and proceed to state q’.

Nondeterminism! (P is a relation: the applicable instruction need not be unique.)

Transitions

Configuration c: finite string in Σ*(K × Σ)Σ*

$ A B A A C <q,A> C B B A $

Transition c ⊢ c’ obtained by performing an instruction in P

E.g., the instruction <q,A,r,B,R>:

$ A B A A C <q,A> C B B A $ ⊢ $ A B A A C B <r,C> B B A $

Computation: sequence of transitions
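A minimal Python sketch of one transition on the intrinsic representation, assuming a configuration is a list framed by "$" endmarkers whose cells are plain symbols, except for one composite (state, symbol) pair. The names `successors`, `Cell` and `Instr` are illustrative, not taken from the slides. Since P is a relation, every successor is returned.

```python
from typing import List, Tuple, Union

Cell = Union[str, Tuple[str, str]]        # plain tape symbol or composite <state, symbol>
Instr = Tuple[str, str, str, str, str]    # (q, s, q', s', m) with m in {"L", "0", "R"}
MOVES = {"L": -1, "0": 0, "R": 1}


def successors(config: List[Cell], program: List[Instr]) -> List[List[Cell]]:
    """All c' with c |- c'; more than one result means a nondeterministic choice."""
    # The head is the unique composite cell.
    i = next(k for k, c in enumerate(config) if isinstance(c, tuple))
    state, symbol = config[i]
    result = []
    for q, s, q2, s2, m in program:
        if (q, s) != (state, symbol):
            continue
        new = list(config)
        new[i] = s2                       # print s'
        j = i + MOVES[m]                  # perform move m
        if new[j] == "$":                 # sketch: the tape is not extended past "$"
            continue
        new[j] = (q2, new[j])             # glue state q' to the newly scanned symbol
        result.append(new)
    return result
```

For the instruction <q,A,r,B,R> above, `successors(list("$ABAAC") + [("q", "A")] + list("CBBA$"), [("q", "A", "r", "B", "R")])` returns the single configuration $ A B A A C B <r,C> B B A $. Only the scanned cell and one neighbour change.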

Configurations

Three ingredients are required for describing a Configuration:

The Machine State : q

The contents of the tape (preferably with endmarkers) :

$ ABAAC B C B BA$

The position of the reading head : i

Available options

Mathematical Representation:

< q , xj xj+1 …. xi …. xl-1 xl , i

>

Intrinsic Representation:

$ xj xj+1 …. q xi …. xl-1 xl $ or $ xj xj+1 …. <q xi > …. xl-1 xl $

↓

Semi Intrinsic:

< q , $ xj xj+1 …. xi …. xl-1 xl $ >

5

6

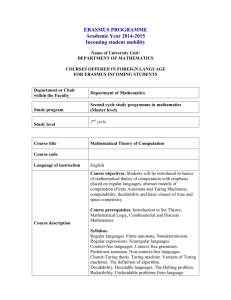
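The Mathematical and Intrinsic representations can be converted into each other mechanically. A sketch under assumptions: the head index is 0-based and counted without the endmarkers, and the composite cell is a Python tuple; `to_intrinsic` and `to_mathematical` are hypothetical helper names.

```python
from typing import List, Tuple, Union

Cell = Union[str, Tuple[str, str]]    # plain tape symbol or composite <state, symbol>


def to_intrinsic(q: str, tape: List[str], i: int) -> List[Cell]:
    """Mathematical <q, tape, i> -> intrinsic $ ... <q, x_i> ... $ (i is 0-based)."""
    cells: List[Cell] = list(tape)
    cells[i] = (q, tape[i])               # the state is written into the scanned cell
    return ["$"] + cells + ["$"]


def to_mathematical(config: List[Cell]) -> Tuple[str, List[str], int]:
    """Intrinsic -> mathematical: strip the endmarkers, then recover state, tape and head."""
    body = config[1:-1]
    i = next(k for k, c in enumerate(body) if isinstance(c, tuple))
    q = body[i][0]
    tape = [c[1] if isinstance(c, tuple) else c for c in body]
    return q, tape, i
```

The intrinsic form is a single flat string over Σ ∪ (K × Σ) ∪ {$}; the mathematical form needs the separate integer i.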

Theme of this presentation

• The Convenience of the Intrinsic Representation

– Its History: who invented it, who saw its usefulness?

– Applications which are hard, if not impossible, when the Mathematical representation is used

• Chomsky Hierarchy and Automata models

• Master reductions for NP: Cook-Levin and Tilings

• Stockmeyer on Regular Expressions

• Parallel Computation Thesis: the Second Machine Class

– Is there a real problem?

HISTORY

Turing Machines and their Use

The teachings of our Master

Our textbooks present Turing Machine programs in the format of quintuples or quadruples.

What format did Turing use himself?

Some fragments of the 1936 paper

Looks like quintuples….

Configuration means state in our terminology

For Turing, composite transitions are allowed

This is an example of the Intrinsic Representation

Complete Configuration means Configuration in our terminology

A Macro language for Turing Machine programs

This Macro Language supports Recursion!

The format of TM programs which today is conventional arises as a simplification introduced for the purpose of constructing the Universal Turing Machine.

Turing operates as an Engineer (Programmer) rather than a Mathematician / Logician.

Nondeterminism

• Our concept of Nondeterminism (the applicable instruction is not necessarily unique) is for Turing a serious programming error

• Nondeterminism became accepted in the late 1950s as a consequence of the needs of Automata Theory

Using the model

• Initial configuration on some input

• Final configuration

– No available instruction

– By final state (accept, reject)

– Evaporation of the state

• Complete Computation

– From initial to final configuration (or running forever)

• Result of computation

– Language recognition (always halting, condition on final configuration)

– Language acceptance (accept by termination)

– Function evaluation (partial function/relation, requires termination)

• Non Terminating Computations

– Stream Computing

– Interactive computation

Example Turing Machine

K = {q,r,_}

Σ = {0,1,B}

P = { (q,0,q,0,R),

(q,1,q,1,R),

(q,B,r,B,L),

(r,0,_,1,0),

(r,1,r,0,L),

(r,B,_,1,0) }

q0 1 0 1 1 B

0 q1 0 1 1 B

0 1 q0 1 1 B

0 1 0 q1 1 B

0 1 0 1 q1 B

0 1 0 1 1 qB

0 1 0 1 r1 B

0 1 0 r1 0 B

0 1 r0 0 0 B

0 1 1 0 0 B

Successor Machine: increments a number in binary.

_ represents the empty halting state: it evaporates and leaves no trace in the final configuration. 11 + 1 = 12
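A small Python simulation of the successor machine, assuming a dict encoding of the six quintuples and a tape that is never extended (the sketch raises an error instead). It produces rows in the same intrinsic style as the diagram above, with the halting state _ left out; `PROGRAM` and `run` are illustrative names.

```python
# Successor machine from the slide, as (q, s) -> (q', s', m); "_" is the halting state.
PROGRAM = {
    ("q", "0"): ("q", "0", "R"),
    ("q", "1"): ("q", "1", "R"),
    ("q", "B"): ("r", "B", "L"),
    ("r", "0"): ("_", "1", "0"),
    ("r", "1"): ("r", "0", "L"),
    ("r", "B"): ("_", "1", "0"),
}
MOVES = {"L": -1, "0": 0, "R": 1}


def run(tape: str, state: str = "q", head: int = 0) -> list:
    """Rows of the time-space diagram, the state written in front of the scanned symbol."""
    cells = list(tape)
    rows = []
    while True:
        rows.append(" ".join(state + c if k == head and state != "_" else c
                             for k, c in enumerate(cells)))
        if (state, cells[head]) not in PROGRAM:      # no applicable instruction: halt
            return rows
        state, cells[head], move = PROGRAM[(state, cells[head])]
        head += MOVES[move]
        if not 0 <= head < len(cells):
            raise IndexError("this sketch does not extend the tape")
```

`run("01011B")` yields the ten rows shown above, ending in 0 1 1 0 0 B.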

Variants

Semi Infinite Tape

[Figure: a two-way infinite tape folded onto a semi-infinite tape with two tracks; head in state q]

Tape folding; remember which track you are on….
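A sketch of the folding bookkeeping, assuming track 0 carries positions 0, 1, 2, … and track 1 carries -1, -2, … folded back onto the same cells; `fold`, `unfold` and `move` are illustrative names. The track bit is what the simulating machine keeps in its finite control.

```python
def fold(i: int) -> tuple:
    """Position i of a two-way infinite tape -> (cell, track) on a semi-infinite tape.
    Assumed layout: track 0 holds 0, 1, 2, ...; track 1 holds -1, -2, -3, ... folded back."""
    return (i, 0) if i >= 0 else (-i - 1, 1)


def unfold(cell: int, track: int) -> int:
    """Inverse of fold."""
    return cell if track == 0 else -cell - 1


def move(cell: int, track: int, direction: int) -> tuple:
    """Simulate a head move (-1 = L, +1 = R) of the two-way machine on the folded tape.
    On track 1 the directions are mirrored, and stepping left of position 0 switches
    tracks: this is why the finite control must remember the current track."""
    return fold(unfold(cell, track) + direction)
```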

Multiple Tapes

[Figure: several tapes, each with its own head, under a single finite control in state q]

Multiple Tapes

[Figure: the same tapes stacked as tracks on a single tape]

Tapes become tracks on a single tape

Markers used for maintaining head positions on the tracks
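A sketch of the data layout only (not the step-by-step simulation, which sweeps the tape to collect the marked symbols), assuming each cell of the single tape is a tuple holding, per track, a symbol and a boolean head marker; `to_tracks` and `from_tracks` are illustrative names.

```python
from typing import List, Tuple

TrackCell = Tuple[Tuple[str, bool], ...]   # per track: (symbol, head marker)


def to_tracks(tapes: List[str], heads: List[int], blank: str = "B") -> List[TrackCell]:
    """Stack k tapes as k tracks of a single tape; a boolean marker records each head."""
    width = max(len(t) for t in tapes)
    padded = [t.ljust(width, blank) for t in tapes]
    return [tuple((padded[k][j], heads[k] == j) for k in range(len(tapes)))
            for j in range(width)]


def from_tracks(tape: List[TrackCell]) -> Tuple[List[str], List[int]]:
    """Recover the k (padded) tapes and their head positions from the track encoding."""
    k = len(tape[0])
    tapes = ["".join(cell[t][0] for cell in tape) for t in range(k)]
    heads = [next(j for j, cell in enumerate(tape) if cell[t][1]) for t in range(k)]
    return tapes, heads
```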

Invariance Thesis

• Other variants

– Multi dimensional tapes

– Multi heads on a single tape

– Jumps to other head positions

• All models of Turing Machines are equivalent

– up to polynomial overhead in time and constant factor overhead in space

• First Machine Class

– Includes RAM / RASP model as well

How Turing Machines are used

• Marvelous TM algorithms do exist in the literature

– Hennie & Stearns: oblivious simulation of k tapes on two tapes

– Slisenko: real-time palindrome recognition and string matching

– Vitányi: real-time oblivious multi-counter simulation

• The main use of TMs is for proving negative results (using reductions)

– Undecidability

– NP-hardness

– Other hardness results

• Requires direct encoding of TM computations in target formalisms: Master Reductions

Time-Space Diagram

q0 1 0 1 1 B

0 q1 0 1 1 B

0 1 q0 1 1 B

0 1 0 q1 1 B

0 1 0 1 q1 B

0 1 0 1 1 qB

0 1 0 1 r1 B

0 1 0 r1 0 B

0 1 r0 0 0 B

0 1 1 0 0 B

Master Reductions use this Time-Space Diagram as representation of the computation subject to the Reduction

The Intrinsic Representation is far more useful, if not required, for constructing these Master Reductions

WHY??

Because validity of the Diagram can be checked Locally

Time for a new hero

© Peter van Emde Boas ; 19781016

Larry Stockmeyer

Thesis MIT 1974

Rep. MAC-TR-133

FOCS 1978, Ann Arbor

Stockmeyer on representations

This is a Mathematical Representation

Stockmeyer on representations

For the Single Tape model the Intrinsic Representation is used

Stockmeyer’s Lemma

Validity of a transition becomes a local check on a 2 by 3 window in the Time-Space Diagram

NB: for Stockmeyer, functions are partial and multi-valued, i.e., relations

Is this the first time the convenience of the Intrinsic Representation is mentioned explicitly?
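A sketch of the local check, under the assumption that the program is deterministic, so every cell of the next row is a function of the three cells above it (each test then lives inside one 2 by 3 window). Cells are plain symbols or (state, symbol) tuples, and the halting state _ evaporates; `expected` and `valid` are illustrative names. For a nondeterministic program the whole 2 by 3 window has to be compared against a table of legal windows instead of a per-cell function.

```python
from typing import Dict, List, Optional, Tuple, Union

Cell = Union[str, Tuple[str, str]]                         # symbol or <state, symbol>
Program = Dict[Tuple[str, str], Tuple[str, str, str]]      # (q, s) -> (q', s', m)
HALT = "_"                                                 # halting state evaporates


def expected(left: Optional[Cell], mid: Cell, right: Optional[Cell], prog: Program) -> Cell:
    """Middle cell of the next row, computed from the three cells above it."""
    def step(cell: Optional[Cell]):
        return prog.get(cell) if isinstance(cell, tuple) else None

    if isinstance(mid, tuple):                             # the head is here
        st = step(mid)
        if st is None:
            return mid                                     # no instruction applies
        q2, s2, m = st
        return (q2, s2) if m == "0" and q2 != HALT else s2
    for neighbour, arriving in ((left, "R"), (right, "L")):
        st = step(neighbour)
        if st is not None and st[2] == arriving:           # the head moves onto this cell
            return mid if st[0] == HALT else (st[0], mid)
    return mid                                             # away from the head: unchanged


def valid(rows: List[List[Cell]], prog: Program) -> bool:
    """Check every 2 by 3 window of the time-space diagram."""
    for top, bottom in zip(rows, rows[1:]):
        padded: List[Optional[Cell]] = [None] + top + [None]
        for j in range(len(top)):
            if expected(padded[j], padded[j + 1], padded[j + 2], prog) != bottom[j]:
                return False
    return True
```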

Applications

• Automata Theory

• Master reductions for NP

– Cook/Levin reduction to SAT

– Bounded Tiling

• Stockmeyer on Regular Expressions

• Parallel Computation Thesis


Automata Theory

• The Machine based characterization of the Chomsky Hierarchy

– Regular grammars ↔ Finite Automata

– Context Free grammars ↔ Push Down Automata

– Context Sensitive grammars ↔ Linear Bounded Automata

– Unrestricted grammars ↔ Turing Machines

A Side Remark

Traditional Textbooks present Automata Theory in the order:

REG , CF, CSL, Type 0 resp. FA, PDA, LBA, TM

Alternative: start with Turing Machines, treating the alternative models as restricted models

Advantage: the concepts involving Configurations and Computations don’t need a separate presentation for each model

The desired characterizations are obtained by correlating production steps in the grammar world and computation segments in the Machine world, observing the required restrictions on both sides

A trivial Observation

• The production process in the Grammar world can be simulated by a single Tape Turing Machine

• Turing Machines are after all perfect symbol manipulators

• It remains to show that restricted grammar classes can be simulated by restricted Machine models

The Converse Direction

• Using the Intrinsic Representation, the transitions of a Turing Machine are described by Context Sensitive Rules (X ranges over the tape symbols):

<q,a> → <p,b,R> corresponds to qaX → bpX

<q,a> → <p,b,0> corresponds to qa → pb

<q,a> → <p,b,L> corresponds to Xqa → pXb

etcetera
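Generating these rules is mechanical. A sketch, assuming quintuples (q, a, p, b, m) and a word given as a list of tokens in which the state symbol stands just left of the scanned symbol; `rules_for` and `rewrite_once` are illustrative names. Moves off either end of the word have no rule here, which is where endmarkers come in.

```python
from typing import List, Tuple

Rule = Tuple[Tuple[str, ...], Tuple[str, ...]]


def rules_for(program: List[Tuple[str, str, str, str, str]], tape_symbols: List[str]) -> List[Rule]:
    """Quintuples (q, a, p, b, m) -> length-preserving rewriting rules on the intrinsic
    representation, where the state symbol stands just left of the scanned symbol."""
    rules: List[Rule] = []
    for q, a, p, b, m in program:
        if m == "R":
            rules += [((q, a, x), (b, p, x)) for x in tape_symbols]   # qaX -> bpX
        elif m == "0":
            rules.append(((q, a), (p, b)))                             # qa -> pb
        else:
            rules += [((x, q, a), (p, x, b)) for x in tape_symbols]   # Xqa -> pXb
    return rules


def rewrite_once(word: List[str], rules: List[Rule]) -> List[List[str]]:
    """All words reachable by one rule application, i.e. by one TM transition."""
    out = []
    for lhs, rhs in rules:
        n = len(lhs)
        for i in range(len(word) - n + 1):
            if tuple(word[i:i + n]) == lhs:
                out.append(word[:i] + list(rhs) + word[i + n:])
    return out
```

With the successor machine, the word 0 1 0 1 1 q B rewrites in one step to 0 1 0 1 r 1 B, matching the diagram row 0 1 0 1 r1 B.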

The context

• In the grammar world a production starts with the start symbol S, and terminates in a string of terminals

• In the machine world a computation starts with an initial ID with the terminal string on the input tape, and ends in an accepting configuration

• Hence: Mutual Simulations require some adaptations……

TM simulation of a type 0 grammar

In the initial Configuration the TM writes the start symbol S in a second track of the tape.

The productions in the grammar are stepwise simulated in this second track (shifting the symbols left/right of the rewritten ones over the required distance)

When the production is completed the TM checks whether the two tracks contain the same string, and accepts accordingly

Hence: the language generated by a type 0 grammar can be recognized by a Turing Machine

A type 0 grammar simulates a TM

From S generate the Initial Configuration in two tracks (this can be done using Regular productions only)

Simulate the TM computation using the CS rules in the first track, leaving the symbols in the second track invariant

If the machine accepts, erase the entire first track (this may require length reducing rules, hence type 0….)

Hence: The language accepted by a Turing Machine can be produced by a Type 0 grammar.

CS grammars and LBA

• The same proof idea works

• LBA simulates CS grammar: no intermediate string exceeds the given input in length

• CS grammar simulates LBA: in the previous proof erasing rules are only needed to remove extra workspace on the tape, and the LBA doesn’t use extra workspace

• Beware of the endmarkers: better have them printed as markers on the first and last input symbol….

CF grammars and PDA

• Snag: the PDA is a two tape device

• Solution: code configurations as <processed input segment><state><reversed stack>

• Yields correspondence between leftmost derivation and PDA computations

Syntax tree

[Figure: syntax tree of xzyz]

Left Derivation:

*S

*AB

*ACB

x*CB

xz*B

xz*BA

xzy*A

xzy*C

xzyz*

PDA Instructions:

*,λ,S → *,AB

*,λ,A → *,AC

*,λ,B → *,BA

*,λ,A → *,C

*,x,A → *,λ

*,y,B → *,λ

*,z,C → *,λ

CF Rules:

S → AB, A → AC, B → BA, A → C,

A → x, B → y, C → z

The Left derivation is equal to the time-space diagram of the PDA computation

Hence: a single state PDA can accept what the CF grammar produces
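A sketch of the single-state PDA for the rules above, assuming every right-hand side is either one terminal or a string of nonterminals (true for this grammar); `RULES` and `accept` are illustrative names. The search returns the configurations in the `<processed input>*<stack>` form used in the left derivation. Pruning on stack height, valid because there are no λ-rules, keeps the left-recursive rules A → AC and B → BA from looping.

```python
from typing import List, Optional, Set, Tuple

# CF rules of the slide: each right-hand side is one terminal (lower case)
# or a string of nonterminals (upper case); the sketch relies on this.
RULES = [("S", "AB"), ("A", "AC"), ("B", "BA"), ("A", "C"), ("A", "x"), ("B", "y"), ("C", "z")]


def accept(word: str, rules=RULES) -> Optional[List[str]]:
    """Single-state PDA accepting by empty stack. Returns the computation as configurations
    '<processed input>*<stack, top first>', or None if the word is rejected."""
    seen: Set[Tuple[int, str]] = set()

    def search(pos: int, stack: str) -> Optional[List[str]]:
        here = word[:pos] + "*" + stack
        if not stack:
            return [here] if pos == len(word) else None
        # no λ-rules: every stacked nonterminal still has to produce a terminal
        if len(stack) > len(word) - pos or (pos, stack) in seen:
            return None
        seen.add((pos, stack))
        top, rest = stack[0], stack[1:]
        for lhs, rhs in rules:
            if lhs != top:
                continue
            if rhs.islower():                       # *,a,A -> *,λ : read a, pop A
                if pos < len(word) and word[pos] == rhs:
                    tail = search(pos + 1, rest)
                    if tail is not None:
                        return [here] + tail
            else:                                   # *,λ,A -> *,rhs : replace A by rhs
                tail = search(pos, rhs + rest)
                if tail is not None:
                    return [here] + tail
        return None

    return search(0, "S")
```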

PDA and CFG

• A single state PDA can simulate a CFG

• The PDA accepts by empty stack

• If the PDA has several states the CFG rules must encode these states

PDA Instructions:

q,λ,S → r,AB

r,λ,A → s,AC

q,λ,A → s,C

r,x,A → s,λ

CF Rules:

[qSα] → [rAβ][βBα]

[rAα] → [sAβ][βCα]

[qAα] → [sCα]

[rAs] → x

α, β range over the state symbols

[rAs] means “in state r, with A on top of the stack, a computation starts after which in state s the symbol below A is exposed”
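The triple rules can be generated mechanically. A sketch, assuming instructions are written as (state, input or "" for λ, top of stack, new state, pushed string with the top first); `triple_rules` is an illustrative name. For a push of n symbols it guesses the n intermediate states, which is why α and β range over all states.

```python
from itertools import product
from typing import List, Tuple

# (state, input symbol or "" for λ, top of stack, new state, pushed string with top first)
Instr = Tuple[str, str, str, str, str]


def triple_rules(instrs: List[Instr], states: List[str]) -> List[Tuple[str, str]]:
    """Triple construction: [p A t] derives exactly the input consumed while the PDA goes
    from state p with A on top to state t with the symbol below A exposed."""
    rules = []
    for p, a, top, r, push in instrs:
        if not push:                                    # pop: [p top r] -> a
            rules.append((f"[{p}{top}{r}]", a or "λ"))
            continue
        for mids in product(states, repeat=len(push)):  # guess the intermediate states
            chain, current = [], r
            for symbol, nxt in zip(push, mids):
                chain.append(f"[{current}{symbol}{nxt}]")
                current = nxt
            rules.append((f"[{p}{top}{mids[-1]}]", a + "".join(chain)))
    return rules
```

With the four instructions of the slide and states q, r, s this yields 9 + 9 + 3 + 1 rules, among them [qSq] → [rAr][rBq] and [rAs] → x.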

Regular Grammars and Finite Automata

The standard translation: instruction q,a → r ⟷ production rule q → ar

Machine configuration and partial derivation:

abba q acbac (configuration)

abba q (partial derivation)

For the computation the input already processed is irrelevant; all information resides in the state, and the unread input determines the computation.

In the grammar the past symbols are produced and the future symbols are invisible.

A matter of perspective: past vs. future
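A sketch of the translation and of the past/future view, using a hypothetical two-state automaton over {a, b} (q: even number of a's so far, r: odd) with single-letter state names; `DELTA`, `productions` and `run` are illustrative names. Each sentential form of the derivation is the processed input followed by the current state.

```python
from typing import Dict, List, Tuple

# Hypothetical example automaton: q = even number of a's so far, r = odd; q is final.
DELTA: Dict[Tuple[str, str], str] = {("q", "a"): "r", ("q", "b"): "q",
                                     ("r", "a"): "q", ("r", "b"): "r"}


def productions(delta: Dict[Tuple[str, str], str], finals: List[str]) -> List[Tuple[str, str]]:
    """Instruction q,a -> r becomes production q -> a r; a final state q also gets q -> λ."""
    return [(q, a + r) for (q, a), r in delta.items()] + [(q, "") for q in finals]


def run(delta: Dict[Tuple[str, str], str], start: str, word: str) -> List[Tuple[str, str, str]]:
    """Configurations (processed input, state, unread input) of the automaton on word."""
    state, configs = start, []
    for i, a in enumerate(word):
        configs.append((word[:i], state, word[i:]))
        state = delta[(state, a)]
    configs.append((word, state, ""))
    return configs
```

On "abba" the sentential forms are q, ar, abr, abbr, abbaq: the grammar sees only the past, the automaton only the future.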

Master reductions for NP

• Cook-Levin reduction to SAT

– Based on Mathematical representation

– Based on Intrinsic representation

• What is the difference ?

• Tiling based reduction

– Does it require an Intrinsic representation?

Time-Space Diagram

q0 1 0 1 1 B

0 q1 0 1 1 B

0 1 q0 1 1 B

0 1 0 q1 1 B

0 1 0 1 q1 B

0 1 0 1 1 qB

0 1 0 1 r1 B

0 1 0 r1 0 B

0 1 r0 0 0 B

0 1 1 0 0 B

Intrinsic (above) and Mathematical (below):

state | tape | head

q | 0 1 0 1 1 B | 0

q | 0 1 0 1 1 B | 1

q | 0 1 0 1 1 B | 2

q | 0 1 0 1 1 B | 3

q | 0 1 0 1 1 B | 4

q | 0 1 0 1 1 B | 5

r | 0 1 0 1 1 B | 4

r | 0 1 0 1 0 B | 3

r | 0 1 0 0 0 B | 2

- | 0 1 1 0 0 B | 2

Reduction to SAT (intrinsic)

The key idea is to introduce a family of propositional variables:

P[i,j,a] expressing that at row i (time i) on position j (space j) the symbol a is written in the diagram

Conditions:

I: at every position some symbol is written

II: at no position more than one symbol is written

III: the diagram starts with the initial configuration on the input

IV: the diagram terminates with an accepting configuration

V: the transitions follow the Turing Machine program

Va: expressed using implications (beware of Nondeterminism)

Vb: expressed by exclusion of illegal transitions
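To make the size estimates concrete, a sketch that generates the clauses for conditions I and II, assuming DIMACS-style integer variables for P[i,j,a]; `variables` and `conditions_I_II` are illustrative names. With T rows, T columns and K symbols it produces T² clauses of length K for I and T²·K(K-1)/2 binary clauses for II.

```python
from itertools import combinations
from typing import Dict, List, Tuple


def variables(rows: int, cols: int, symbols: List[str]) -> Dict[Tuple[int, int, str], int]:
    """Number the variables P[i,j,a] as 1, 2, 3, ... (DIMACS-style integers)."""
    cells = [(i, j, a) for i in range(rows) for j in range(cols) for a in symbols]
    return {key: n for n, key in enumerate(cells, start=1)}


def conditions_I_II(rows: int, cols: int, symbols: List[str]) -> List[List[int]]:
    """Clauses for I (some symbol at every position) and II (at most one symbol)."""
    P = variables(rows, cols, symbols)
    clauses: List[List[int]] = []
    for i in range(rows):
        for j in range(cols):
            clauses.append([P[(i, j, a)] for a in symbols])            # I
            clauses += [[-P[(i, j, a)], -P[(i, j, b)]]                 # II
                        for a, b in combinations(symbols, 2)]
    return clauses
```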

Reduction to SAT (mathematical)

The key idea is to introduce a family of propositional variables:

P[i,j,a] expressing that at row i (time i) on position j (space j) the symbol a is written in the diagram

Q[i,q] expressing that at time i the machine is in state q

M[i,j] expressing that at time i the head is in position j

Extra Conditions:

VI: at every time the machine is in some state

VII: at no time the machine is in more than one state

VIII: at every time the head is in some position

IX: at no time the head is in more than one position

The correctness conditions III, IV and V are rephrased, somewhat easier to understand….

What’s the difference ??

Assume that a computation of T steps is described, hence the height (but also the width) of the diagram is O(T)

Assume that the number of symbols used is K

K = O(#states × #tape symbols)

Investigate the size of the required propositional formulas.

Investigate whether these formulas are expressed as clauses.

Expressing the Conditions

Conditions, with the size of the formula expressing them:

I: at every position some symbol is written: O(T²K)

II: at no position more than one symbol is written: O(T²K²)

III: the diagram starts with the initial configuration on the input: O(T)

IV: the diagram terminates with an accepting configuration: O(1)

V: the transitions follow the Turing Machine program

Va: expressed using implications: O(T²K²)

Vb: expressed by exclusion of illegal transitions: O(T²K⁶)

VI: at every time the machine is in some state: O(TK)

VII: at no time the machine is in more than one state: O(TK²)

VIII: at every time the head is in some position: O(T²)

IX: at no time the head is in more than one position: O(T³)

All conditions (except Va) are easily expressed by clauses

Conclusion

• The standard proof (e.g., Garey & Johnson) uses the Mathematical representation, yielding a cubic formula size blow-up

• However, a quadratic formula size blow-up is achievable when using the Intrinsic representation

• Same overhead is obtained when taking the detour by the tiling based reduction (next)

Tiling based Reduction

Tile Type: square divided in 4 coloured triangles.

Infinite stock available

No rotations or reflections allowed

Tiling: Covering of a region of the plane such that adjacent tiles have matching colours

Boundary condition: colours given along (part of) the edge of the region, or some given tile at some given position.
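A sketch of the matching condition, assuming a tile is a tuple of the colours of its north, east, south and west triangles; `is_tiling` is an illustrative name, and boundary conditions are left out.

```python
from typing import List, Tuple

Tile = Tuple[str, str, str, str]   # colours of the north, east, south and west triangles


def is_tiling(grid: List[List[Tile]]) -> bool:
    """Check that adjacent tiles have matching colours on their shared edge.
    Tiles are used as given (no rotations or reflections)."""
    for i, row in enumerate(grid):
        for j, tile in enumerate(row):
            _, east, south, _ = tile
            if j + 1 < len(row) and row[j + 1][3] != east:         # east meets west
                return False
            if i + 1 < len(grid) and grid[i + 1][j][0] != south:   # south meets north
                return False
    return True
```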

Turing Machines and Tilings

Idea: tile a region and let successive color sequences along rows correspond to successive configurations.....

[Figure: tile types of the reduction: symbol passing tiles, state accepting tiles, and instruction step tiles for (q,s,q’,s’,0), (q,s,q’,s’,R) and (q,s,q’,s’,L), with colours s, qs, q’s’, s’ and states q, q’ on their edges]

SNAG: Pairs of phantom heads appearing out of nowhere...

Solution: Right and Left Moving States....

Example Turing Machine

K = {q,r,_}

Σ = {0,1,B}

P = { (q,0,q,0,R),

(q,1,q,1,R),

(q,B,r,B,L),

(r,0,_,1,0),

(r,1,r,0,L),

(r,B,_,1,0) }

Successor Machine;

adds 1 to a binary integer.

_ denotes empty halt state.

q0 1 0 1 1 B

0 q1 0 1 1 B

0 1 q0 1 1 B

0 1 0 q1 1 B

0 1 0 1 q1 B

0 1 0 1 1 qB

0 1 0 1 r1 B

0 1 0 r1 0 B

0 1 r0 0 0 B

0 1 1 0 0 B

11 + 1 = 12


Reduction to Tilings

q0 1 0 1 1 B

0 q1 0 1 1 B

0 1 q0 1 1 B

0 1 0 q1 1 B

0 1 0 1 q1 B

0 1 0 1 1 qB

0 1 0 1 r1 B

0 1 0 r1 0 B

0 1 r0 0 0 B

0 1 1 0 0 B

© Peter van Emde Boas ; 19921029


Implementation in Hardware

© Peter van Emde Boas ; 19950310

© Peter van Emde Boas ; 19950310

© Peter van Emde Boas ; 19921031

http://www.squaringthecircles.com/turingtiles

Tiling reductions

Program: Tile Types

Input: Boundary condition

Space: Width of the region

Time: Height of the region

[Figure: the tiled rectangle, with the initial configuration along one edge, blank borders along the sides, and the accepting configuration (by construction unique) along the opposite edge]

Tiling Problems

Square Tiling: Tiling a given square with boundary condition: Complete for NP.

Corridor Tiling: Tiling a rectangle with boundary conditions on entrance and exit (length is undetermined): Complete for PSPACE.

Origin Constrained Tiling: Tiling the entire plane with a given Tile at the Origin. Complete for co-RE, hence Undecidable

Tiling: Tiling the entire plane without constraints. Still Complete for co-RE (Wang/Berger’s Theorem). Hard to Prove!

Detour to SAT

A reduction from Bounded Tiling to SAT requires propositional variables t[i,j,s] expressing that at position (i,j) a tile of type s is placed

Conditions, with formula size:

I: Everywhere some tile is placed: O(T²K)

II: Nowhere more than one tile is placed: O(T²K²)

III: Boundary conditions are observed: O(TK)

IV: Adjacency conditions are observed: O(T²K²)

T: height and width of the tiled region

K: number of tile types

All conditions are expressed as clauses
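Condition IV is where the K² factor comes from: for every pair of neighbouring positions, one binary clause per mismatching pair of tile types. A sketch, assuming the (north, east, south, west) tile encoding and the variable numbering stated in the code; `adjacency_clauses` is an illustrative name.

```python
from itertools import product
from typing import List, Tuple

Tile = Tuple[str, str, str, str]   # (north, east, south, west) colours


def adjacency_clauses(n: int, tiles: List[Tile]) -> List[List[int]]:
    """Condition IV for an n-by-n region: a binary clause forbidding each mismatching
    pair of tile types on each pair of neighbouring positions.
    Assumed numbering: t[i,j,s] is variable (i*n + j)*K + s + 1."""
    K = len(tiles)

    def t(i: int, j: int, s: int) -> int:
        return (i * n + j) * K + s + 1

    clauses = []
    for i, j in product(range(n), repeat=2):
        for s, u in product(range(K), repeat=2):
            if j + 1 < n and tiles[s][1] != tiles[u][3]:   # east of s vs west of u
                clauses.append([-t(i, j, s), -t(i, j + 1, u)])
            if i + 1 < n and tiles[s][2] != tiles[u][0]:   # south of s vs north of u
                clauses.append([-t(i, j, s), -t(i + 1, j, u)])
    return clauses
```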

Is the intrinsic representation needed ?

• A tiling reduction is possible for the semi-intrinsic representation (state information can be transmitted through rows…)

• Translating numeric information into geometric info (without introducing a semi-intrinsic representation) seems hard if not impossible…

Stockmeyer on Regular Expressions

Thesis MIT 1974

Rep. MAC-TR-133

Regular Expressions

S finite alphabet (in our applications S = Σ ∪ (K × Σ) ∪ {$})

REG(S):

0 ∈ REG(S), M(0) = ∅ (empty language)

1 ∈ REG(S), M(1) = {λ} (singleton empty word)

a ∈ REG(S) for a ∈ S, M(a) = {a} (singleton one-letter word)

If f, g ∈ REG(S) then

f + g ∈ REG(S), M(f + g) = M(f) ∪ M(g) (union)

f.g ∈ REG(S), M(f.g) = M(f).M(g) (concatenation)

f* ∈ REG(S), M(f*) = M(f)* (Kleene star)

f* = 1 + f + f.f + f.f.f + ….

Extra operations:

f² = f.f (squaring)

f ∩ g, M(f ∩ g) = M(f) ∩ M(g) (intersection)

~f, M(~f) = S* \ M(f) (complementation)

Regular Expressions

• Describe the Regular languages over S

• Transformation expression → Finite automaton is easy (construction where the parentheses in the expression become the states in the Finite Automaton)

• Converse transformation more difficult but standard textbook material (induction over number of states)

• Other interpretations exist and are useful: Regular Algebras, e.g., in programming logics (PDL)

• Complete axiomatizations exist

• No direct algebraic expressions for intersection and complementation

• Regular languages being closed under intersection and complementation implies that these operations are expressible in all individual instances

• Extra operators yield succinctness

Stockmeyer’s Decision Problems

NEC(f,S): is M(f) a proper subset of S*?

EQ(f,g): is M(f) = M(g)?

INEQ(f,g): is M(f) ≠ M(g)?

NEC(f,S) is equivalent to INEQ(f,S*)

EQ and INEQ are complementary problems

Stockmeyer (1974) characterizes the complexity of these problems, depending on the set of available operators

Considering complementary problems was meaningful: the Immerman-Szelepcsényi result was discovered only 13 years later….

Stockmeyer’s Master Reduction

Given a TM program P and some input string ω, there doesn’t exist a regular expression denoting the (linearizations of) accepting time-space diagrams.

But violations of the rules for representing such a diagram can be described by regular expressions

Syllabus Errorum approach: construct a regular expression which enumerates all possible violations, and test whether there remains a string not covered by this expression (NEC problem)

Syllabus Errorum

A correct time-space diagram consists of configurations, all of equal length, separated by $ symbols

The first configuration must be the initial configuration on the given input

The last configuration must be the (unique) accepting configuration

The diagram may contain no illegal transitions

This condition is captured by the absence of forbidden 2 by 3 windows in the diagram, as expressed by Stockmeyer’s lemma;

For this method to work the Intrinsic representation seems essential.

Yardstick expressions

Alphabet S used: Σ ∪ (K × Σ) ∪ {$};

The width of the time space diagram (space consumed by the computation) is denoted M.

Let V = Σ ∪ (K × Σ), W = Σ

Given alphabet Z and number N we construct a regular expression Ya(Z,N) representing strings of length N of symbols from Z

Note that Ya(1+Z,N) now represents strings of length ≤ N

Using these yardstick expressions the various sources of errors can be described
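A sketch of how squaring makes the yardstick short, assuming expressions are nested tuples over single-character letters; `yardstick`, `size`, `to_regex` and `matches` are illustrative names, and N ≥ 1 is assumed. Python's `re` has no squaring operator, so `to_regex` writes every square out, doubling the text each time; this shows the O(N) versus O(log N) gap.

```python
import re

# Expressions as tuples: ("sym", chars) for Z, ("opt", chars) for 1+Z,
# ("cat", e1, e2) for concatenation and ("sq", e) for squaring.


def yardstick(chars: str, n: int, optional: bool = False):
    """Ya(Z, N) built with squaring, O(log N) nodes; optional=True gives Ya(1+Z, N)."""
    leaf = ("opt" if optional else "sym", chars)
    if n == 1:
        return leaf
    half = ("sq", yardstick(chars, n // 2, optional))    # Z^(2 * floor(n/2))
    return half if n % 2 == 0 else ("cat", leaf, half)


def size(e) -> int:
    """Number of operators and letters in the expression tree."""
    return 1 if e[0] in ("sym", "opt") else 1 + sum(size(x) for x in e[1:])


def to_regex(e) -> str:
    """Expand into a Python regex; each square is written out twice."""
    if e[0] == "sym":
        return "[" + e[1] + "]"
    if e[0] == "opt":
        return "[" + e[1] + "]?"
    if e[0] == "cat":
        return to_regex(e[1]) + to_regex(e[2])
    inner = "(?:" + to_regex(e[1]) + ")"
    return inner + inner


def matches(e, word: str) -> bool:
    return re.fullmatch(to_regex(e), word) is not None
```

For example, `size(yardstick("ab", 1024))` is 11, while the expanded regex contains 1024 copies of the letter class.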

Error Descriptions

There is a substring in between two $ symbols which is too short:

S*.$.Ya(1+V,M-1).$.S*

There is a substring in between two $ symbols which is too long:

S*.$.Ya(V,M+1).S*.$.S*

There is an incorrect transition in the diagram:

S*.xyz.Ya($+V,M-2).uvw.S*

where xyz above uvw is a forbidden 2 by 3 window in the diagram

Similar (even simpler) expressions for the properties “starts wrong” and “ends wrong”
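These error types can be tried out with Python's `re`, assuming each cell of the linearized diagram is encoded as a single character; `error_patterns` and `has_error` are illustrative names, and the "starts wrong" / "ends wrong" cases are omitted. The gap of M-2 symbols between xyz and uvw makes them vertically adjacent, because each row occupies M+1 characters including its $.

```python
import re


def error_patterns(m: int, forbidden=()):
    """Regexes for the error types over a linearized diagram $row$row$...$ with row
    width m; `forbidden` lists pairs (xyz, uvw) of forbidden 2 by 3 windows."""
    pats = [
        r".*\$[^$]{0,%d}\$.*" % (m - 1),      # a row that is too short
        r".*\$[^$]{%d}.*\$.*" % (m + 1),      # a row that is too long
    ]
    for top, bottom in forbidden:              # xyz, then m-2 arbitrary symbols, then uvw
        pats.append(".*" + re.escape(top) + ".{%d}" % (m - 2) + re.escape(bottom) + ".*")
    return pats


def has_error(diagram: str, m: int, forbidden=()) -> bool:
    """True if the diagram is covered by the sum of the error expressions."""
    return any(re.fullmatch(p, diagram) for p in error_patterns(m, forbidden))
```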

The reduction

• The regular expression which is the sum of all these error types represents the exact complement of the set of time space diagrams of accepting computations by P on input ω in space M

• Denote this expression by ER(P, ω, M)

• Input is accepted iff NEC(ER(P, ω, M), S)

• It remains to estimate the length of this expression

• Remember that we consider Nondeterministic space bounded computations

The size of the yardstick expressions

• Without extra operators Ya(Z,N) is of size O(N), yielding NPSPACE hardness for NEC

• With squaring, Ya(Z,N) is of size O(log(N)), yielding NEXPSPACE hardness for NEC

Expressions without *

• The same method also works without using *, but now the height of the diagram (time) must be restricted

• Yields reductions showing NP hardness and NEXPTIME hardness for the INEQ problem (without or with squaring)

• Nonelementary hardness if complementation is added

• Matching upper bounds are also obtained

Parallel Computation Thesis

// PTIME = // NPTIME = PSPACE

True for Computational Models which combine Exponential Growth potential with Uniform Behavior.

The Second Machine Class

Representative examples

Sequential devices operating on huge objects

Vector machine: Pratt & Stockmeyer 74,76

MRAM: Hartmanis & Simon 74

MRAM without bit-logic: Bertoni, Mauri, Sabadini 81

EDITRAM: Stegwee, Torenvliet, VEB 85

ASMM: Tromp, VEB 90,93

Alternating TM (RAM): Chandra, Stockmeyer & Kozen 81

Parallel Devices

Parallel TM: Savitch 77

PRAM: Savitch & Stimson 76,79

SIMDAG: Goldschlager 78,82

Aggregate: Goldschlager 78,82

Array Proc. Machine: v Leeuwen & Wiedermann 85

How to prove it

• Inclusion //NPTIME ⊆ PSPACE:

– Guess computation trace

– Verify that it accepts by means of recursive procedure

– Validate that the parameters are polynomially bounded in size

– Uniformity of behavior is essential

How to prove it

• Inclusion PSPACE ⊆ //PTIME:

– Today’s authors show that QBF ∈ //PTIME

– Original proofs give direct simulations of PSPACE computations, based on techniques originating from the proof of Savitch’s Theorem PSPACE = NPSPACE

Walter Savitch

© Peter van Emde Boas

© Peter van Emde Boas

Amsterdam; CWI, Aug 1976

© Peter van Emde Boas

San Diego, Oct 1983

Proved in 1970 PSPACE = NPSPACE


Understanding PSPACE

Acceptance = Reachability in Computation Graph

Solitaire Problem: finding an Accepting path in an Exponentially large, but highly Regular Graph

Matrix Powering Algorithm: Parallelism

Recursive Procedure: Savitch Theorem

Logic: QBF, Alternation, Games
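The recursive procedure behind Savitch's Theorem, as a Python sketch over an explicit graph, assuming hashable nodes and a hypothetical adjacency predicate `edge`; in the real proof the nodes are configurations and `edge` is the local transition check. `reach` is an illustrative name.

```python
from typing import Callable, Hashable, Sequence


def reach(u: Hashable, v: Hashable, k: int, nodes: Sequence[Hashable],
          edge: Callable[[Hashable, Hashable], bool]) -> bool:
    """Is there a path from u to v of length at most 2**k?
    Guess a midpoint w and solve both halves with k - 1. The recursion depth is k and each
    frame holds only u, v and w, which gives the O(S^2) space bound when the nodes are
    configurations of size S and k = O(S). The running time is exponential."""
    if k == 0:
        return u == v or edge(u, v)
    return any(reach(u, w, k - 1, nodes, edge) and reach(w, v, k - 1, nodes, edge)
               for w in nodes)
```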


Polynomial Space Configuration Graph

• Configurations & Transitions:

– (finite) State, Focus of Interaction & Memory Contents

– Transitions are Local (involving State and Memory locations in Focus only; Focus may shift). Only a Finite number of Transitions in a Configuration

– Input Space doesn’t count for the Space Measure

Polynomial Space Configuration Graph

• Exponential Size Configuration Graph:

– input length: |x| = k; Space bound: S(k)

– Number of States: q (constant)

– Number of Focus Locations: k · S(k)^t (where t denotes the number of “heads”)

– Number of Memory Contents: C^{S(k)}

– Together: q · k · S(k)^t · C^{S(k)} = 2^{O(S(k))} (assuming S(k) = Ω(log(k)))
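The count can be checked by taking logarithms; the assumption S(k) = Ω(log(k)) is exactly what makes the log k term O(S(k)), and t·log S(k) ≤ t·S(k):

```latex
\log_2\!\bigl(q \cdot k \cdot S(k)^t \cdot C^{S(k)}\bigr)
  = \underbrace{\log_2 q}_{O(1)}
  + \underbrace{\log_2 k}_{O(S(k))}
  + \underbrace{t \log_2 S(k)}_{O(S(k))}
  + \underbrace{S(k) \log_2 C}_{O(S(k))}
  = O(S(k))
\quad\Longrightarrow\quad
q \cdot k \cdot S(k)^t \cdot C^{S(k)} = 2^{O(S(k))}
```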

Polynomial Space Configuration Graph

• Uniqueness of Initial & Final Accepting Configuration:

– Before Accepting Erase Everything

– Return Focus to Starting Positions

– Halt in Unique Accepting State

[Figure: configuration graph with a unique Start node and a unique Goal node]

Path Finding in Configuration Graph

Cycles in accepting path are irrelevant

Trash Nodes: Unreachable or Useless

Unreasonable Algorithm

• Step 1: generate this Exponentially large structure

• Step 2: Perform Exponentially long heavy computation on this structure

• Step 3: Extract a single bit of information from the result; the rest of the work is wasted.

• Akoue Pantwn, Eklege de ‘a sumfereis (Greek: listen to everything, choose what is useful)

• Which is just what the Parallel Models do.....

Unreasonable Algorithm

Transitive Closure of Adjacency Matrix by Iterated squaring ==> // Models

Recursive approaches ==> // Models, Savitch’s Theorem & Hardness of QBF and Games

Adjacency Matrix

[Figure: a graph on nodes 1 to 5 and its adjacency matrix M]

Matrix describes Presence of Edges in Graph;

1 on diagonal: length zero paths

Adjacency Matrix

[Figure: the matrices M² and M⁴ for the same graph]

In Boolean Matrix Algebra

M²: paths up to length 2

M⁴: paths up to length 4

Matrix Squaring

$M[i,j] := \bigvee_{k} \bigl( M[i,k] \wedge M[k,j] \bigr)$

On an N node graph, a single squaring requires O(N³) operations

log(N) squarings are required to compute the N-th power of the matrix

Remember that N = 2^{O(S)}
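The iterated squaring, done sequentially, as a Python sketch over lists of booleans; `boolean_square` and `reachability` are illustrative names. The diagonal is set to 1 first, so after s squarings all paths of length up to 2^s are present, and ceil(log2(N)) squarings suffice.

```python
from typing import List

Matrix = List[List[bool]]


def boolean_square(m: Matrix) -> Matrix:
    """M[i,j] := OR over k of (M[i,k] AND M[k,j]); O(N^3) operations for N nodes."""
    n = len(m)
    return [[any(m[i][k] and m[k][j] for k in range(n)) for j in range(n)] for i in range(n)]


def reachability(adj: Matrix) -> Matrix:
    """Transitive closure by iterated squaring, starting with 1s on the diagonal."""
    n = len(adj)
    m = [[adj[i][j] or i == j for j in range(n)] for i in range(n)]  # length-zero paths
    steps = 1
    while steps < n:          # after s squarings, paths of length <= 2**s are covered
        m = boolean_square(m)
        steps *= 2
    return m
```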

Think Parallel

• O(N³) processors can compute these squarings in time

– O(log(N)) if unbounded fan-in is allowed

– O(log(N)²) if fan-in is bounded

• This is the basis for recognizing PSPACE in polynomial time on PRAM models

• More in the Second Machine Class paper and/or the chapter in the Handbook of TCS (both publications from the 1980s)

How to obtain this Matrix ?

The row/column index is a binary number which codes a configuration.

This code must be efficient, so that it is easy to recognize whether two configurations are connected by a transition

"Locality" of the transitions is key: a configuration only changes at the focus; everywhere else it remains the same.

How to obtain this Matrix ?

The intrinsic representation for Turing Machines has all desired properties.

We need some routine to extract from a binary bitstring a group of bits coding a single symbol.

On a RAM model your values are numbers, not bitstrings. Extracting these symbol codes requires number-to-binary conversion, which presupposes the availability of some "multiplicative" instruction which is lacking in the standard model (but which is always granted).
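A sketch of the bit extraction, assuming a configuration is packed into one integer with a fixed number of bits per cell and cell 0 in the least significant position; `cell`, `pack` and `differs_only_near` are illustrative names. The shifts stand for the "multiplicative" operations (multiplication or division by powers of 2), and the last function is the locality test between two configurations.

```python
def cell(config: int, j: int, bits: int) -> int:
    """Code of cell j in a configuration packed into one integer (cell 0 least significant)."""
    return (config >> (j * bits)) & ((1 << bits) - 1)


def pack(codes, bits: int) -> int:
    """Pack a list of cell codes, each smaller than 2**bits, into one integer."""
    value = 0
    for j, c in enumerate(codes):
        value |= c << (j * bits)
    return value


def differs_only_near(c1: int, c2: int, focus: int, bits: int, width: int) -> bool:
    """Locality test: the two configurations agree on every cell except focus-1 .. focus+1."""
    return all(cell(c1, j, bits) == cell(c2, j, bits)
               for j in range(width) if abs(j - focus) > 1)
```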

The Problem ??!

Is there a dragon out there ??

© Games Workshop


What do actual authors Use ?

• Whenever TM computations are used in master reductions, the author will almost always choose either an intrinsic or a semi intrinsic representation

• This holds even if the formal definition for configurations is based on the mathematical representation

Representative authors

[Table: texts classified by the representation they use (Mathematical, Intrinsic, Semi-Intrinsic). Texts: Reidel, Ency of Math; RI Soare, RE sets and degrees (87); SC Kleene, Intro to Metamath (52); Boolos & Jeffrey, Computability & Logic (74); Börger, Computability (89); Cohen, Computability & Logic (87); M Davis, Computability & Unsolv. (58)]

Representative authors

[Table: texts classified by the representation they use (Mathematical, Intrinsic, Semi-Intrinsic). Texts: Hopcroft & Ullman, Formal Lang & Autom (69); Hopcroft & Ullman, Formal Lang & Autom (79); F Hennie, Intro to Comput (77); Harrison, Intro Formal Languages (78); Sudkamp, Intro TCS (06); Lewis & Papadimitriou, Elts th of comp (81); Mehlhorn, EATCS mon 2 (84)]

Representative authors

[Table: texts classified by the representation they use (Mathematical, Intrinsic, Semi-Intrinsic). Texts: Rudich & Wigderson, Comp Compl Theory (04); J Savage, Models of Computation (98); Balcazar, Diaz & Gabarro, Structural Complexity (88); H Rogers, Th Recursive Functions (67); Odifreddi, Classical Rec Theory (89); WJ Savitch, Abstr Mach & Grammars (82); J Martin, Intr Lang & th of comp (97)]

We are Safe

• In practice, all authors, on the basis of their intuition, use the intrinsic representation

• Why then does it seem that Stockmeyer is the only author who makes the advantages explicit??