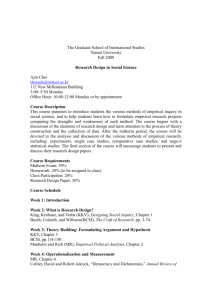

Yonsei University

Chapter 2 Computer Evolution and Performance

Contents
• A Brief History of Computers
• Designing for Performance
• Pentium and PowerPC Evolution
• Performance Evaluation

A Brief History of Computers

ENIAC
• Electronic Numerical Integrator And Computer
• John Mauchly and John Presper Eckert
• Trajectory tables for weapons
• Started 1943 / Finished 1946
  – Too late for war effort
• Used until 1955
• Decimal (not binary)
• 20 accumulators of 10 digits
• Programmed manually by switches
• 18,000 vacuum tubes
• 30 tons
• 1,500 square feet
• 140 kW power consumption
• 5,000 additions per second

von Neumann/Turing
• Stored program concept
• Main memory storing programs and data
• ALU operating on binary data
• Control unit interpreting instructions from memory and executing them
• Input and output equipment operated by control unit
• Princeton Institute for Advanced Study (IAS)
• Completed 1952

von Neumann Machine
[Figure: structure of the von Neumann machine: main memory, arithmetic and logic unit, program control unit, input/output equipment]
• If a program could be represented in a form suitable for storing in memory, the programming process could be facilitated
• A computer could get its instructions from memory, and a program could be set or altered by setting the values of a portion of memory

IAS Memory Formats
[Figure: (a) number word: sign bit in bit 0, value in bits 1-39; (b) instruction word: left instruction with opcode in bits 0-7 and address in bits 8-19, right instruction with opcode in bits 20-27 and address in bits 28-39]
• 1,000 words of 40 bits each
  – Each word holds either a binary number or two 20-bit instructions
  – Each instruction consists of an 8-bit opcode and a 12-bit address designating one of the words in memory

IAS Registers
• Memory Buffer Register (MBR)
  – Contains a word to be stored in memory, or is used to receive a word from memory
• Memory Address Register (MAR)
  – Specifies the
address in memory of the word to be written from or read into the MBR
• Instruction Register (IR)
  – Contains the 8-bit opcode of the instruction being executed
• Instruction Buffer Register (IBR)
  – Temporarily holds the right-hand instruction from a word in memory
• Program Counter (PC)
  – Contains the address of the next instruction pair to be fetched from memory
• Accumulator (AC) and Multiplier Quotient (MQ)
  – Temporarily hold operands and results of ALU operations

Structure of IAS
[Figure: structure of the IAS computer. The central processing unit contains the arithmetic and logic unit (AC, MQ, arithmetic and logic circuits, MBR) and the program control unit (IBR, PC, IR, MAR, control circuits). Instructions and data flow between the MBR, main memory, and input/output equipment; the MAR supplies addresses to main memory.]

Partial Flowchart of IAS
[Figure: partial flowchart of IAS operation. Fetch cycle: if the next instruction is already in the IBR, no memory access is required and IR ← IBR(0:7), MAR ← IBR(8:19). Otherwise MAR ← PC and MBR ← M(MAR); if the left instruction is required, IBR ← MBR(20:39), IR ← MBR(0:7), MAR ← MBR(8:19); if not, IR ← MBR(20:27), MAR ← MBR(28:39). The fetch cycle also performs PC ← PC + 1. Execution cycle: decode the instruction in IR, then for example AC ← M(X) (MBR ← M(MAR), AC ← MBR), Go to M(X, 0:19) (PC ← MAR), If AC ≥ 0 then go to M(X, 0:19), or AC ← AC + M(X).]

The IAS Instruction Set
[Table: the IAS instruction set]
• Data transfer
  – Move data between memory and ALU registers or between two ALU registers
• Unconditional branch
  – The normal sequence of instruction execution can be changed by a branch instruction
• Conditional branch
  – The branch can be made dependent on a condition, thus allowing decision points
• Arithmetic
  – Operations performed by the ALU
• Address modify
  – Permits addresses to be computed in the ALU and then inserted into instructions stored in memory
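
The field boundaries used by the fetch-cycle transfers (IR ← MBR(0:7), MAR ← MBR(8:19), IR ← MBR(20:27), MAR ← MBR(28:39)) can be illustrated with a small sketch. It assumes bit 0 is the leftmost (most significant) bit of the 40-bit word, as in the memory format figure; the function name and the sample word are hypothetical and chosen only for illustration.

```python
def decode_ias_word(word: int) -> dict:
    """Split a 40-bit IAS instruction word into its four fields.

    Assumption: bit 0 is the leftmost (most significant) bit.
    """
    if not 0 <= word < (1 << 40):
        raise ValueError("IAS words are 40 bits wide")

    def bits(lo: int, hi: int) -> int:
        # Extract bits lo..hi inclusive, numbered from the left starting at 0.
        width = hi - lo + 1
        shift = 39 - hi
        return (word >> shift) & ((1 << width) - 1)

    return {
        "left_opcode": bits(0, 7),      # IR  <- MBR(0:7)
        "left_address": bits(8, 19),    # MAR <- MBR(8:19)
        "right_opcode": bits(20, 27),   # IR  <- MBR(20:27)
        "right_address": bits(28, 39),  # MAR <- MBR(28:39)
    }


# Hypothetical word: opcode and address values are arbitrary illustration values.
sample_word = (0x01 << 32) | (500 << 20) | (0x05 << 12) | 501
fields = decode_ias_word(sample_word)
print(fields)
```

With a 12-bit address field, each instruction can name any of up to 4,096 locations, which comfortably covers the 1,000 words of IAS memory.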

Commercial Computers
• 1947 - Eckert-Mauchly Computer Corporation
• UNIVAC I (Universal Automatic Computer)
• US Bureau of Census 1950 calculations
• Became part of Sperry-Rand Corporation
• Late 1950s - UNIVAC II
  – Faster
  – More memory

IBM
• Had helped build the Mark I
• Punched-card processing equipment
• 1953 - the 701
  – IBM's first stored program computer
  – Scientific calculations
• 1955 - the 702
  – Business applications
• Led to the 700/7000 series

Computer Generations

| Generation | Approximate dates | Technology | Typical speed (operations per second) |
|---|---|---|---|
| 1 | 1946-1957 | Vacuum tube | 40,000 |
| 2 | 1958-1964 | Transistor | 200,000 |
| 3 | 1965-1971 | Small- and medium-scale integration | 1,000,000 |
| 4 | 1972-1977 | Large-scale integration | 10,000,000 |
| 5 | 1978- | Very-large-scale integration | 100,000,000 |

Transistors
• Replaced vacuum tubes
• Smaller
• Cheaper
• Less heat dissipation
• Solid state device
• Made from silicon (sand)
• Invented 1947 at Bell Labs
• William Shockley et al.

Transistor Based Computers
• Second generation machines
• NCR & RCA produced small transistor machines
• IBM 7000
• Digital Equipment Corporation (DEC) - 1957
  – Produced PDP-1

IBM 700/7000 Series

| Model number | First delivery | CPU technology | Memory technology | Cycle time (µs) | Memory size (K) |
|---|---|---|---|---|---|
| 701 | 1952 | Vacuum tubes | Electrostatic tubes | 30 | 2-4 |
| 704 | 1955 | Vacuum tubes | Core | 12 | 4-32 |
| 709 | 1958 | Vacuum tubes | Core | 12 | 32 |
| 7090 | 1960 | Transistor | Core | 2.18 | 32 |
| 7094 I | 1962 | Transistor | Core | 2 | 32 |
| 7094 II | 1964 | Transistor | Core | 1.4 | 32 |

| Model number | Number of opcodes | Number of index registers | Hardwired floating point | I/O overlap (channels) | Instruction fetch overlap | Speed (relative to 701) |
|---|---|---|---|---|---|---|
| 701 | 24 | 0 | No | No | No | 1 |
| 704 | 80 | 3 | Yes | No | No | 2.5 |
| 709 | 140 | 3 | Yes | Yes | No | 4 |
| 7090 | 169 | 3 | Yes | Yes | No | 25 |
| 7094 I | 185 | 7 | Yes (double precision) | Yes | Yes | 30 |
| 7094 II | 185 | 7 | Yes (double precision) | Yes | Yes | 50 |

An IBM 7094 Configuration
[Figure: an IBM 7094 configuration. The CPU and memory connect through a multiplexor to data channels serving magnetic tape units, card punch, line printer, card reader, drum, disk, Hypertapes, and teleprocessing equipment.]

The IBM 7094
• The most important point is the use of data channels. A data channel is an independent I/O module with its own processor and its own instruction set.
• Another new feature is the multiplexor, which is the central termination point for data channels, the CPU, and memory.

Microelectronics
• Literally - "small electronics"
• A computer is made up of gates, memory cells and interconnections
• These can be manufactured on a semiconductor
• e.g.
silicon wafer

Microelectronics (continued)
• Data storage
  – Provided by memory cells
• Data processing
  – Provided by gates
• Data movement
  – The paths between components are used to move data from memory to memory and from memory through gates to memory
• Control
  – The paths between components can carry control signals. The memory cell will store the bit on its input lead when the WRITE control signal is ON and will place that bit on its output lead when the READ control signal is ON.

Wafer, Chip, and Gate
[Figure: relationship between wafer, chip, gate, and packaged chip]
• Small-scale integration (SSI)

Generations of Computer
• Vacuum tube - 1946-1957
• Transistor - 1958-1964
• Small scale integration - 1965 on
  – Up to 100 devices on a chip
• Medium scale integration - to 1971
  – 100-3,000 devices on a chip
• Large scale integration - 1971-1977
  – 3,000-100,000 devices on a chip
• Very large scale integration - 1978 to date
  – 100,000-100,000,000 devices on a chip
• Ultra large scale integration
  – Over 100,000,000 devices on a chip

Moore's Law
• Increased density of components on chip
• Gordon Moore - cofounder of Intel
• Number of transistors on a chip will double every year
• Since the 1970s development has slowed a little
  – Number of transistors doubles every 18 months
• Cost of a chip has remained almost unchanged
• Higher packing density means shorter electrical paths, giving higher performance
• Smaller size gives increased flexibility
• Reduced power and cooling requirements
• Fewer interconnections increase reliability

Growth in CPU Transistor Count
[Figure: growth in CPU transistor count]

IBM 360 series
• 1964
• Replaced (& not compatible with) 7000 series
• First planned "family" of computers
  – Similar or identical instruction
sets
  – Similar or identical O/S
  – Increasing speed
  – Increasing number of I/O ports (i.e. more terminals)
  – Increased memory size
  – Increased cost
• Multiplexed switch structure

Key Characteristics of 360 Family
• Many of its features have become standard on other large computers

| Characteristic | Model 30 | Model 40 | Model 50 | Model 65 | Model 75 |
|---|---|---|---|---|---|
| Maximum memory size (bytes) | 64K | 256K | 256K | 512K | 512K |
| Data rate from memory (Mbytes/s) | 0.5 | 0.8 | 2.0 | 8.0 | 16.0 |
| Processor cycle time (µs) | 1.0 | 0.625 | 0.5 | 0.25 | 0.2 |
| Relative speed | 1 | 3.5 | 10 | 21 | 50 |
| Maximum number of data channels | 3 | 3 | 4 | 6 | 6 |
| Maximum data rate on one channel (Kbytes/s) | 250 | 400 | 800 | 1250 | 1250 |

DEC PDP-8
• 1964
• First minicomputer (after miniskirt!)
• Did not need air conditioned room
• Small enough to sit on a lab bench
• $16,000
  – $100k+ for IBM 360
• Embedded applications & OEM
• Later models of the PDP-8 used a bus structure that is now virtually universal for minicomputers and microcomputers

PDP-8/E Block Diagram
• Highly flexible architecture allowing modules to be plugged into the bus to create various configurations

Semiconductor Memory
• The first application of integrated circuit technology to computers
  – Construction of the processor
  – Also used to construct memories
• 1970
• Fairchild
• Size of a single core
  – i.e.
1 bit of magnetic core storage
• Holds 256 bits
• Non-destructive read
• Much faster than core
• Capacity approximately doubles each year

Evolution of Intel Microprocessors
[Tables: evolution of Intel microprocessors]

Design for Performance

Microprocessor Speed
• In memory chips, the relentless pursuit of speed has quadrupled the capacity of DRAM every three years
• Pipelining
• On board cache
• On board L1 & L2 cache
• Branch prediction
• Data flow analysis
• Speculative execution

Evolution of DRAM / Processor Characteristics
[Table: evolution of DRAM and processor characteristics]

Performance Mismatch
• Processor speed increased
• Memory capacity increased
• Memory speed lags behind processor speed

Performance Balance
• The interface between processor and main memory is the most crucial pathway in the entire computer, because it is responsible for carrying a constant flow of program instructions and data between memory chips and the processor

Trends in DRAM use
[Figure: trends in DRAM use]

Performance Balance
• On average, the number of DRAMs per system is going down.
• The solid black lines in the figure show that, for a fixed-sized memory, the number of DRAMs needed is declining
• The shaded bands show that for a particular type of system, main memory size has slowly increased while the number of DRAMs has declined

Solutions
• Increase number of bits retrieved at one time
  – Make DRAM "wider" rather than "deeper"
• Change DRAM interface
  – Cache
• Reduce frequency of memory access
  – More complex cache and cache on chip
• Increase interconnection bandwidth
  – High speed buses
  – Hierarchy of buses

Performance Balance
• Two constantly evolving factors to be coped with
  – The rate at which performance is changing in the various technology areas differs greatly from one type of element to another
  – New applications and new peripheral devices constantly change the nature of the demand on the system in terms of typical instruction profile and data access patterns

Pentium and PowerPC Evolution

Intel
• Pentium - results of design effort on CISCs
• 1971 - 4004
  – First microprocessor
  – All CPU components on a single chip
  – 4 bit
• Followed in 1972 by 8008
  – 8 bit
  – Both designed for specific applications
• 1974 - 8080
  – Intel's first general purpose microprocessor
• 8086
  – 16 bit, instruction cache, or queue
• 80286
  – Addressing a 16-Mbyte memory
• 80386
  – 32 bit, multitasking
• 80486
  – Built-in math coprocessor
• Pentium
  – Superscalar techniques
• Pentium Pro
• Pentium II
  – Intel MMX technology
• Pentium III
  – Additional floating-point instructions
• Merced
  – 64-bit organization

PowerPC
• RISC systems
• PowerPC processor summary
[Table: PowerPC processor summary]

Performance Evaluation

Two Notions of Performance

| Plane | DC to Paris | Speed | Passengers | Throughput (pmph) |
|---|---|---|---|---|
| Boeing 747 | 6.5 hours | 610 mph | 470 | 286,700 |
| BAC/Sud Concorde | 3 hours | 1350 mph | 132 | 178,200 |

• Which has higher performance?
  – Time to do the task (Execution Time): execution time, response time, latency
  – Tasks per day, hour, week, sec, ns, ... (Performance): throughput, bandwidth
  – Response time and throughput often are in opposition

To Assess Performance
• Response Time
  – Time to complete a task
• Throughput
  – Total amount of work done per unit time
• Execution Time (CPU Time)
  – User CPU time: time spent in the program
  – System CPU time: time spent in the OS
• Elapsed Time
  – Execution time + time of I/O and time sharing

Criteria of Performance
• Execution time seems to measure the power of the CPU
• Elapsed time measures the performance of the whole system including OS and I/O
• The user is interested in elapsed time
• Sales people are interested in the highest performance number that can be quoted
• The performance analyst is interested in both execution time and elapsed time

Definitions
• Performance is in units of things-per-second
  – Bigger is better
• If we are primarily concerned with response time

$$\text{performance}(x) = \frac{1}{\text{execution\_time}(x)}$$

• "X is n times faster than Y" means

$$n = \frac{\text{Performance}(X)}{\text{Performance}(Y)} = \frac{\text{execution\_time}(Y)}{\text{execution\_time}(X)}$$

Example
• Time of Concorde vs. Boeing 747?
  – Bigger is better
  – Concorde is 1350 mph / 610 mph = 2.2 times faster (= 6.5 hours / 3 hours)
• Throughput of Concorde vs. Boeing 747?
  – Concorde is 178,200 pmph / 286,700 pmph = 0.62 times as fast
  – Boeing is 286,700 pmph / 178,200 pmph = 1.6 times faster
• Boeing is 1.6 times (60%) faster in terms of throughput
• Concorde is 2.2 times (120%) faster in terms of flying time
• We will focus primarily on execution time for a single job

Basis of Evaluation

| Workload | Pros | Cons |
|---|---|---|
| Actual target workload | Representative | Very specific; non-portable; difficult to run or measure; hard to identify cause |
| Full application benchmarks | Portable; widely used; improvements useful in reality | Less representative |
| Small kernel benchmarks | Easy to run, early in design cycle | Easy to fool |
| Microbenchmarks | Identify peak capability and potential bottlenecks | Peak may be a long way from application performance |

MIPS
• Millions of Instructions (Executed) Per Second
• Often used measure of performance
• Native MIPS:

$$
\begin{aligned}
\text{Native MIPS} &= \frac{\text{instruction count}}{\text{execution time} \times 10^6}
 = \frac{\text{instruction count}}{\text{CPU clocks} \times \text{cycle time} \times 10^6} \\
 &= \frac{\text{instruction count} \times \text{clock rate}}{\text{CPU clocks} \times 10^6}
 = \frac{\text{instruction count} \times \text{clock rate}}{\text{instruction count} \times \text{CPI} \times 10^6}
 = \frac{\text{clock rate}}{\text{CPI} \times 10^6}
\end{aligned}
$$

MIPS
• Meaningless information
  – Run a program and time it
  – Count the number of executed instructions to get the MIPS rating
• Problems
  – Cannot compare different computers with different instruction sets
  – Varies between programs executed on the same computer
• Peak MIPS
  – This is what many manufacturers provide
  – The word "peak" is usually omitted

Relative MIPS
• Call the VAX 11/780 a 1 MIPS machine (not true)
• Relative MIPS of machine A, where the last step uses the 1 MIPS rating of the VAX 11/780:

$$\text{Relative MIPS} = \frac{\text{CPU time of VAX 11/780}}{\text{CPU time of machine A}} \times \text{MIPS of VAX 11/780} = \frac{\text{CPU time of VAX 11/780}}{\text{CPU time of machine A}}$$

• Makes MIPS rating more independent of benchmark programs
• Advantage of relative MIPS is small

FLOPS
• Million Floating Point Instructions Per Second
• Used for engineering and scientific applications where floating point operations account for a high fraction of all executed instructions
• Problems
  – Program dependent
  – Many programs do not use floating point operations
  – Machine dependent
  – Depends on relative mixture of integer and floating point operations
  – Depends on relative mixture of cheap (+, -) and expensive (×) floating point operations
• Normalized FLOPS (relative FLOPS)
• Peak FLOPS

SPEC Marks
• System Performance Evaluation Cooperative
• Non-profit group initially founded by APOLLO, HP, MIPSCO, and SUN
• Now includes many more like IBM, DEC, AT&T, MOTOROLA, etc.
• Measures the ratio of the execution time on a VAX 11/780 to that on the target machine
• Summarizes performance by taking the geometric mean of the ratios

SPEC95
• Eighteen application benchmarks (with inputs) reflecting a technical computing workload
• Eight integer
  – go, m88ksim, gcc, compress, li, ijpeg, perl, vortex
• Ten floating-point intensive
  – tomcatv, swim, su2cor, hydro2d, mgrid, applu, turb3d, apsi, fpppp, wave5
• Must run with standard compiler flags
  – Eliminates special undocumented incantations that may not even generate working code for real programs

Metrics of performance
[Figure: metrics of performance at each level of the system]
• Application: answers per month, useful operations per second
• Programming language, compiler, ISA: (millions of) instructions per second (MIPS); (millions of) F.P.
operations per second (MFLOP/s)
• Datapath, control: megabytes per second
• Function units, transistors, wires, pins: cycles per second (clock rate)
• Each metric has a place and a purpose, and each can be misused

Aspects of CPU Performance

$$\text{CPU time} = \frac{\text{Seconds}}{\text{Program}} = \frac{\text{Instructions}}{\text{Program}} \times \frac{\text{Cycles}}{\text{Instruction}} \times \frac{\text{Seconds}}{\text{Cycle}}$$

• The three factors correspond to instruction count, CPI, and clock rate
• They are influenced by the program, compiler, instruction set architecture, organization, and technology

Criteria of Performance
• CPU Time

$$\text{CPU time} = \text{instruction count} \times \text{CPI} \times \text{clock cycle} = \text{number of instructions} \times \frac{\text{cycles}}{\text{instruction}} \times \frac{\text{seconds}}{\text{cycle}}$$

• Clock Rate
  – Cycles per second
  – Depends on technology and organization
• CPI
  – Cycles Per Instruction
  – Depends on organization and instruction set
• Instruction Count
  – Depends on compiler and instruction set

Criteria of Performance
• If CPI is not uniform across all instructions

$$\text{CPU cycles} = \sum_{i=1}^{n} \left(\text{CPI}_i \times I_i\right)$$

  – $n$: number of instructions in the instruction set
  – $\text{CPI}_i$: CPI for instruction $i$
  – $I_i$: number of times instruction $i$ occurs in a program

$$\text{CPU time} = \sum_{i=1}^{n} \left(\text{CPI}_i \times I_i \times \text{clock cycle}\right)$$

$$\text{CPI} = \frac{\sum_{i=1}^{n} \left(\text{CPI}_i \times I_i\right)}{\text{number of executed instructions}}$$

• It assumes that a given instruction always takes the same number of cycles to execute

Aspects of CPU Performance

| | Instr. count | CPI | Clock rate |
|---|---|---|---|
| Program | X | | |
| Compiler | X | X | |
| Instr. set | X | X | |
| Organization | | X | X |
| Technology | | | X |

CPI
• Average cycles per instruction

$$\text{CPI} = \frac{\text{CPU time} \times \text{clock rate}}{\text{instruction count}} = \frac{\text{clock cycles}}{\text{instruction count}}$$

$$\text{CPU time} = \text{clock cycle time} \times \sum_{i=1}^{n} \left(\text{CPI}_i \times I_i\right)$$

$$\text{CPI} = \sum_{i=1}^{n} \text{CPI}_i \times F_i \quad \text{where } F_i = \frac{I_i}{\text{instruction count}} \text{ is the instruction frequency}$$

• Invest resources where time is spent!
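
The CPI and CPU time formulas can be checked numerically with a short sketch. The instruction classes, counts, per-class cycle costs, and the 100 MHz clock rate below are hypothetical values chosen only to keep the arithmetic easy to follow; the function names are likewise illustrative.

```python
def cpi_and_cpu_time(counts, cycles_per_class, clock_rate_hz):
    """Return (CPI, CPU time in seconds) from per-class instruction counts."""
    instruction_count = sum(counts.values())
    # CPU cycles = sum over classes of CPI_i * I_i
    cpu_cycles = sum(cycles_per_class[name] * counts[name] for name in counts)
    cpi = cpu_cycles / instruction_count
    cpu_time = cpu_cycles / clock_rate_hz  # cycles * seconds per cycle
    return cpi, cpu_time


def cpi_from_frequencies(counts, cycles_per_class):
    """Return CPI as sum of CPI_i * F_i, with F_i = I_i / instruction count."""
    instruction_count = sum(counts.values())
    return sum(
        cycles_per_class[name] * (counts[name] / instruction_count)
        for name in counts
    )


# Hypothetical program profile (not taken from the slides).
counts = {"alu": 6_000_000, "load": 3_000_000, "branch": 1_000_000}
cycles_per_class = {"alu": 1, "load": 4, "branch": 2}

cpi, seconds = cpi_and_cpu_time(counts, cycles_per_class, clock_rate_hz=100e6)
print(cpi, seconds, cpi_from_frequencies(counts, cycles_per_class))
```

For this profile the total is 20 million cycles over 10 million instructions, so the CPI is 2.0 and the CPU time at 100 MHz is 0.2 seconds; the frequency-weighted form gives the same CPI up to floating-point rounding.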

Example of RISC
Base machine (Reg / Reg), typical mix

| Op | Freq | Cycles | CPI(i) | % Time |
|---|---|---|---|---|
| ALU | 50% | 1 | 0.5 | 23% |
| Load | 20% | 5 | 1.0 | 45% |
| Store | 10% | 3 | 0.3 | 14% |
| Branch | 20% | 2 | 0.4 | 18% |
| Total | | | 2.2 | |

• How much faster would the machine be if a better data cache reduced the average load time to 2 cycles?
• How does this compare with using branch prediction to shave a cycle off the branch time?
• What if two ALU instructions could be executed at once?

Amdahl's Law
• Speedup due to enhancement E:

$$\text{Speedup}(E) = \frac{\text{ExTime without } E}{\text{ExTime with } E} = \frac{\text{Performance with } E}{\text{Performance without } E}$$

• Suppose that enhancement E accelerates a fraction $F$ of the task by a factor $S$ and the remainder of the task is unaffected. Then

$$\text{ExTime}(\text{with } E) = \left((1 - F) + \frac{F}{S}\right) \times \text{ExTime}(\text{without } E)$$

$$\text{Speedup}(\text{with } E) = \frac{1}{(1 - F) + \dfrac{F}{S}}$$

Cost
• Traditionally ignored by textbooks because of rapid change
• Driven by learning curve: manufacturing costs decrease with time
• Understanding learning curve effects on yield is key to cost projection
• Yield
  – Fraction of manufactured items that survive the testing procedure
• Testing and Packaging
  – Big factors in lowering costs

Cost
• Cost of Chips

$$\text{Cost} = \frac{\text{manufacture} + \text{testing} + \text{packaging}}{\text{final yield}}$$

$$\text{Cost of die} = \frac{\text{cost of wafer}}{\text{dies per wafer} \times \text{die yield}}$$

  – Wafer yield = dies / wafer
• Cost vs. Price
  – Component cost: 15~33%
  – Direct cost: 6~8%
  – Gross margin: 34~39%
  – Average discount: 25~40%
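
The first two questions in the RISC example can be worked through with the instruction mix from the table, and the load case cross-checked against Amdahl's Law. This is a sketch under the assumption that instruction count and clock rate stay fixed, so speedup equals the ratio of old CPI to new CPI; the function and variable names are illustrative.

```python
# Instruction mix from the RISC example: class -> (frequency, cycles).
BASE_MIX = {
    "ALU": (0.50, 1),
    "Load": (0.20, 5),
    "Store": (0.10, 3),
    "Branch": (0.20, 2),
}


def average_cpi(mix):
    """CPI = sum of CPI_i * F_i over all instruction classes."""
    return sum(freq * cycles for freq, cycles in mix.values())


def amdahl_speedup(fraction, factor):
    """Speedup = 1 / ((1 - F) + F / S)."""
    return 1.0 / ((1.0 - fraction) + fraction / factor)


base_cpi = average_cpi(BASE_MIX)

# Question 1: a better data cache brings loads down from 5 cycles to 2.
faster_loads = dict(BASE_MIX, Load=(0.20, 2))
load_speedup = base_cpi / average_cpi(faster_loads)

# Question 2: branch prediction shaves one cycle off branches (2 -> 1).
faster_branches = dict(BASE_MIX, Branch=(0.20, 1))
branch_speedup = base_cpi / average_cpi(faster_branches)

# Cross-check question 1 with Amdahl's Law: loads account for
# F = (0.20 * 5) / base_cpi of the time and are sped up by S = 5 / 2.
load_fraction = (0.20 * 5) / base_cpi
load_speedup_amdahl = amdahl_speedup(load_fraction, 5 / 2)

print(round(base_cpi, 3), round(load_speedup, 3),
      round(branch_speedup, 3), round(load_speedup_amdahl, 3))
```

Under these assumptions the base CPI is 2.2, the faster loads give a CPI of 1.6 (speedup about 1.375), and the faster branches give a CPI of 2.0 (speedup about 1.1), so the cache improvement helps more. The third question depends on how often two ALU instructions can actually be paired, which the example does not state, so it is left out of the sketch.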