Ch7 Synthesis - Department of Computer Engineering

advertisement

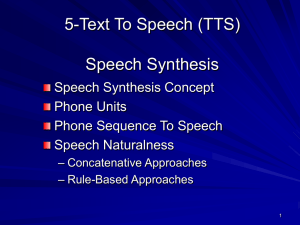

6-Text To Speech (TTS)

Speech Synthesis

Speech Synthesis Concept

Speech Naturalness

Phone Sequence To Speech

– Articulatory Approaches

– Concatenative Approaches

– HMM-based Approaches

– Rule-Based Approaches


Speech Synthesis Concept

Text → [Text to Phone Sequence] (Natural Language Processing, NLP) → Phone sequence → [Phone Sequence to Speech] (Speech Processing) → Speech waveform

Speech Naturalness

Removal of undesirable noise, distortion, and discontinuities from the speech

Prosody generation

– Speech energy

– Duration

– Pitch

– Intonation

– Stress

Speech Naturalness (Cont’d)

Intonation and stress contribute strongly to speech naturalness

Intonation: variation of the pitch frequency over the course of an utterance

Stress: raising the pitch frequency at a specific point in time

Which word receives the accent?

It depends on the context.

– The ‘new’ information in the answer to a question is often accented, while the ‘old’ information is usually not.

– Q1: What types of foods are a good source of vitamins?

– A1: LEGUMES are a good source of vitamins.

– Q2: Are legumes a source of vitamins?

– A2: Legumes are a GOOD source of vitamins.

– Q3: I’ve heard that legumes are healthy, but what are they a good source of?

– A3: Legumes are a good source of VITAMINS.

Slide from Jennifer Vendit

Same ‘tune’, different alignment

[Figure: three pitch contours on a vertical axis running from 50 to 400, one per answer: “LEGUMES are a good source of vitamins”, “Legumes are a GOOD source of vitamins”, “Legumes are a good source of VITAMINS”.]

The main rise-fall accent (= “I assert this”) shifts locations.

Types of Waveform Synthesis

Articulatory Synthesis:

– Model movements of articulators and acoustics of vocal tract

Concatenative Synthesis:

– Use databases of stored speech to assemble new utterances

– Two variants: diphone and unit selection

Statistical (HMM) Synthesis:

– Trains parameters on databases of speech

Rule-Based (Formant) Synthesis:

– Start with acoustics, create rules/filters to create waveform

Articulatory Synthesis

Simulation of the physical processes of human articulation

Wolfgang von Kempelen (1734-1804) and others used bellows, reeds and tubes to construct mechanical speaking machines

Modern versions “simulate” electronically the effect of articulator positions, vocal tract shape, etc. on air flow.

Concatenative approaches

Two main approaches:

1- Concatenating Phone Units

– Example: concatenating samples of recorded diphones or syllables

2- Unit selection

– Uses several samples for each phone unit and selects the most appropriate one when synthesizing

Phone Units

Paragraph

Sentence

Word (depends on the language; usually more than 100,000)

Syllable

Diphone & Triphone

Phoneme (between 10 and 100)

Phone Units (Cont’d)

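Diphone: models the transition between two phonemes

[Figure: a phoneme sequence p1 p2 p3 p4 p5 . . . with two kinds of unit marked on it: a phoneme, and a diphone spanning the transition between two adjacent phonemes.]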

Phone Units (Cont’d)

Farsi phonemes: 30

Farsi diphones: 30*30 = 900

Phoneme /zho/ is missing (?)

Farsi triphones: 30*30*30 = 27000 in theory

Not all of the triphones are used
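The counts on this slide are upper bounds from simple combinatorics: N phonemes give at most N² ordered pairs and N³ ordered triples. A minimal sketch of that arithmetic follows; the function name `max_unit_counts` is illustrative, and 30 is the phoneme count quoted on the slide.

```python
def max_unit_counts(n_phonemes):
    """Upper bounds on the number of distinct diphones and triphones."""
    return {
        "phonemes": n_phonemes,
        "diphones": n_phonemes ** 2,   # every ordered pair of phonemes
        "triphones": n_phonemes ** 3,  # every ordered triple of phonemes
    }


farsi = max_unit_counts(30)
print(farsi)
```

In practice the usable inventory is smaller, because many of these combinations never occur in the language.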

Phone Units (Cont’d)

Syllable = Onset (consonant) + Rhyme

A syllable is a group of phonemes that contains exactly one vowel

Syllable patterns in Farsi: CV, CVC, CVCC

Farsi has about 4000 syllables

Syllable patterns in English: V, CV, CVC, CCVC, CCVCC, CCCVC, CCCVCC, . . .

The number of syllables in English is very large

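Phone Sequence To Speech (Cont’d)

Text → [Text to Phone Sequence] (NLP) → Phone sequence → [Phone Sequence to primitive utterance] → Primitive utterance → [Primitive utterance to Natural Speech] → Speech

The stages after the phone sequence are labeled Speech Processing.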

Concatenative Approaches

In these approaches we store units of natural speech and use them to reconstruct the desired speech

The appropriate phone unit is selected for synthesis

Compressed parameters can be stored instead of the original (main) waveform

Concatenative Approaches

(Cont’d)

Benefits of storing compressed parameters instead of the main waveform

– Less memory use

– A general representation rather than one specific stored utterance

– Prosody is easier to generate

Concatenative Approaches

(Cont’d)

Phone Unit     Type of Storing
Paragraph      Main waveform
Sentence       Main waveform
Word           Main waveform
Syllable       Coded or main waveform
Diphone        Coded waveform
Phoneme        Coded waveform

Concatenative Approaches

(Cont’d)

Pitch-Synchronous Overlap-Add (PSOLA) is a well-known method for smoothing the transitions between phonemes

Overlap-add is a standard DSP method

PSOLA is a basic operation in voice conversion.

In the analysis stage of this method, frames are selected synchronously with the pitch marks.
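The overlap-add idea behind PSOLA can be sketched in a few lines. This is a simplified illustration under stated assumptions, not a full PSOLA implementation: the pitch period is constant and known, the pitch marks are given rather than estimated, and the names `hann` and `overlap_add` are hypothetical. Each frame is two periods long, is Hann-windowed around an analysis mark, and is added into the output around the matching synthesis mark.

```python
import math


def hann(n):
    """Periodic Hann window of length n."""
    return [0.5 - 0.5 * math.cos(2 * math.pi * i / n) for i in range(n)]


def overlap_add(signal, marks_in, marks_out, half):
    """Window a frame of length 2*half around each analysis mark and
    add it into the output around the matching synthesis mark."""
    win = hann(2 * half)
    out = [0.0] * (max(marks_out) + half)
    for m_in, m_out in zip(marks_in, marks_out):
        for k in range(-half, half):
            src, dst = m_in + k, m_out + k
            if 0 <= src < len(signal) and 0 <= dst < len(out):
                out[dst] += win[k + half] * signal[src]
    return out


# Toy "voiced" signal with a constant pitch period of 8 samples (assumed known).
period = 8
signal = [math.sin(2 * math.pi * n / period) for n in range(64)]
marks = list(range(period, 57, period))  # one pitch mark per period

# Identical analysis and synthesis marks: the interior of the signal is rebuilt.
same = overlap_add(signal, marks, marks, half=period)

# Synthesis marks placed closer together: shorter local period, i.e. higher pitch
# (and, because no frames are repeated here, a shorter output).
closer = [period + 6 * i for i in range(len(marks))]
higher = overlap_add(signal, marks, closer, half=period)
```

With identical marks the windows of neighboring frames sum to one, so the method is transparent. Prosody changes come only from moving, repeating, or dropping frames.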

Diphone Architecture Example

Training:

– Choose units (kinds of diphones)

– Record 1 speaker saying 1 example of each diphone

– Mark the boundaries of each diphone, cut each diphone out and create a diphone database

Synthesizing an utterance:

– Grab the relevant sequence of diphones from the database

– Concatenate the diphones, doing slight signal processing at the boundaries

– Use signal processing to change the prosody (F0, energy, duration) of the selected sequence of diphones
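The synthesis steps above can be sketched as a lookup and concatenation. This is a minimal illustration: the `sil` padding symbol, the `a-b` naming of diphones, and the miniature database are all assumptions made for the example, and boundary smoothing and prosody modification are left out.

```python
def to_diphones(phones, silence="sil"):
    """Turn a phone sequence into the diphone names needed to synthesize it."""
    padded = [silence] + list(phones) + [silence]
    return [f"{a}-{b}" for a, b in zip(padded, padded[1:])]


def synthesize(phones, database):
    """Concatenate stored diphone waveforms (lists of samples)."""
    samples = []
    for name in to_diphones(phones):
        # Boundary smoothing and prosody changes are omitted in this sketch.
        samples.extend(database[name])
    return samples


# Hypothetical miniature database: each "waveform" is just a short list of numbers.
database = {"sil-k": [0, 1], "k-a": [2, 3], "a-t": [4, 5], "t-sil": [6, 0]}
units = to_diphones(["k", "a", "t"])
wave = synthesize(["k", "a", "t"], database)
print(units)
```

A sequence of n phones needs n + 1 diphones once silence is added at both ends.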

Unit Selection

Same idea as concatenative diphone synthesis, but the database contains a bigger variety of “phone units”, from diphones to sentences

Multiple examples of each phone unit (under different prosodic conditions) are recorded

Selecting the appropriate unit therefore becomes more complex, as the database contains competing candidates for selection

Unit Selection

Unlike diphone concatenation, little or no signal processing is applied to each unit

Natural data solves problems with diphones

– Diphone databases are carefully designed, but:

  Speaker makes errors

  Speaker doesn’t speak the intended dialect

  The database design is required to be right

– If it’s automatic:

  Labeled with what the speaker actually said

  Coarticulation, schwas, flaps are natural

“There’s no data like more data”

– Lots of copies of each unit mean you can choose just the right one for the context

– Larger units mean you can capture wider effects

Unit Selection Issues

Given a big database

For each segment (diphone) that we want to synthesize

– Find the unit in the database that is the best to synthesize this target segment

What does “best” mean?

– “Target cost”: closest match to the target description, in terms of

  Phonetic context

  F0, stress, phrase position

– “Join cost”: best join with neighboring units

  Matching formants + other spectral characteristics

  Matching energy

  Matching F0

$$C(t_1^n, u_1^n) = \sum_{i=1}^{n} C^{target}(t_i, u_i) + \sum_{i=2}^{n} C^{join}(u_{i-1}, u_i)$$

Unit Selection Search
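One common way to carry out this search is a Viterbi-style dynamic program over the candidate units, minimizing the sum of target costs plus the sum of join costs. The sketch below is an assumption about how the search might be done, not the slide's own algorithm. `select_units`, the toy units (described only by an F0 value), and both cost functions are hypothetical.

```python
def select_units(targets, candidates, target_cost, join_cost):
    """Return the cheapest unit sequence and its total cost.

    candidates[i] is the list of database units that could realize targets[i].
    """
    # cost[i][j]: cheapest total cost of any path that ends in candidates[i][j]
    cost = [[target_cost(targets[0], u) for u in candidates[0]]]
    back = [[None] * len(candidates[0])]
    for i in range(1, len(targets)):
        row_cost, row_back = [], []
        for u in candidates[i]:
            prev = [cost[i - 1][k] + join_cost(p, u)
                    for k, p in enumerate(candidates[i - 1])]
            k_best = min(range(len(prev)), key=prev.__getitem__)
            row_cost.append(prev[k_best] + target_cost(targets[i], u))
            row_back.append(k_best)
        cost.append(row_cost)
        back.append(row_back)

    # Trace back from the cheapest final unit.
    j = min(range(len(cost[-1])), key=cost[-1].__getitem__)
    total = cost[-1][j]
    path = []
    for i in range(len(targets) - 1, -1, -1):
        path.append(candidates[i][j])
        j = back[i][j]
    path.reverse()
    return path, total


# Toy example: targets and units are described only by an F0 value.
targets = [100, 120, 110]
candidates = [[90, 105], [100, 125], [112, 140]]
path, total = select_units(
    targets,
    candidates,
    target_cost=lambda t, u: abs(t - u),        # distance from the desired F0
    join_cost=lambda p, u: 0.1 * abs(p - u),    # F0 jump at the join
)
print(path, total)
```

The running time grows with the number of targets times the square of the number of candidates per target, which is one reason unit selection is computationally expensive.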


Joining Units

Unit selection, just like diphone synthesis, needs to join the units

– Pitch-synchronously

For diphone synthesis, F0 and duration need to be modified

– For unit selection, in principle F0 and duration of the selected units also need to be modified

– But in practice, if the unit-selection database is big enough (commercial systems), no prosodic modifications are made (the selected units may already be close to the desired prosody)

Unit Selection Summary

Advantages

– Quality is far superior to diphones

– Natural prosody selection sounds better

Disadvantages:

– Quality can be very bad in some places

HCI problem: mix of very good and very bad is quite

annoying

– Synthesis is computationally expensive

– Needs more memory than diphone synthesis


Rule-Based Approach Stages

Determine the speech model and the model parameters

Determine the type of phone units

Determine the parameter values for each phone unit

Replace the sequence of phone units with its equivalent parameter sequence

Feed the parameter sequence into the speech model
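The stages above can be illustrated with a very small formant model: a phone is replaced by a list of (formant frequency, bandwidth) pairs, and an impulse train is passed through one second-order digital resonator per formant. The resonator difference equation is the one commonly given for Klatt-style synthesizers. Everything else is an assumption for illustration: the `PHONE_PARAMS` table holds rough made-up values, not measured formants, and the function names are hypothetical.

```python
import math


def resonator_coeffs(freq_hz, bw_hz, fs_hz):
    """Coefficients of y[n] = a*x[n] + b*y[n-1] + c*y[n-2]."""
    t = 1.0 / fs_hz
    c = -math.exp(-2 * math.pi * bw_hz * t)
    b = 2 * math.exp(-math.pi * bw_hz * t) * math.cos(2 * math.pi * freq_hz * t)
    a = 1.0 - b - c  # gives unity gain at 0 Hz
    return a, b, c


def resonate(x, freq_hz, bw_hz, fs_hz):
    """Filter the sample list x through one formant resonator."""
    a, b, c = resonator_coeffs(freq_hz, bw_hz, fs_hz)
    y1 = y2 = 0.0
    out = []
    for sample in x:
        y = a * sample + b * y1 + c * y2
        out.append(y)
        y2, y1 = y1, y
    return out


# Hypothetical parameter table: phone -> [(formant frequency Hz, bandwidth Hz), ...]
PHONE_PARAMS = {
    "a": [(700, 60), (1200, 90)],
    "i": [(300, 60), (2300, 90)],
}


def synthesize_phone(phone, fs_hz=10000, n_samples=400, f0_hz=100):
    """Impulse-train source passed through a cascade of formant resonators."""
    period = int(fs_hz / f0_hz)
    x = [1.0 if n % period == 0 else 0.0 for n in range(n_samples)]
    for freq, bw in PHONE_PARAMS[phone]:
        x = resonate(x, freq, bw, fs_hz)
    return x


wave = synthesize_phone("a")
step = resonate([1.0] * 3000, 500, 60, 10000)
```

A real rule-based system also interpolates the parameters between phones and updates them frame by frame. This sketch keeps them fixed for one phone.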

KLATT 80 Model


KLATT 88 Model


THE KLSYN88 CASCADE/PARALLEL FORMANT SYNTHESIZER

[Figure: block diagram of the synthesizer. Recoverable blocks:

– Glottal sound sources: filtered impulse train, KLGLOTT88 model (default), modified LF model, followed by a spectral tilt low-pass resonator

– Noise sources: aspiration noise generator, frication noise generator

– Cascade vocal tract model, laryngeal sound sources: nasal pole-zero pair, tracheal pole-zero pair, first to fifth formant resonators

– Parallel vocal tract model, frication sound sources: second to sixth formant resonators and a bypass path

– Parallel vocal tract model, laryngeal sound sources (normally not used): first-difference preemphasis, nasal formant resonator, first to fourth formant resonators, tracheal formant resonator]

Three Voicing Source Models in KLATT 88

The old KLSYN impulsive source

The KLGLOTT88 model

The modified LF model

HMM-Based Synthesis

Corpus-based, statistical parametric synthesis

– Proposed in the mid-1990s, popular since the mid-2000s

– Large data + automatic training => automatic voice building

– Source-filter model + statistical acoustic model: flexible to change its voice characteristics

– HMM as its statistical acoustic model

– We focus on HMM-based speech synthesis

First, parametric representations of speech, including spectral and excitation parameters, are extracted from a speech database

They are then modeled with a set of generative models (e.g., HMMs)

[Figure: system overview with two parts, Training and Synthesis.]

Speech Parameter Modeling Based on HMM

Spectral parameter modeling

Excitation parameter modeling

State duration modeling

Spectral parameter modeling

Mel-cepstral analysis is used for spectral estimation

A continuous density HMM is used for vocal tract modeling, in the same way as in speech recognition systems.

The continuous density Markov model is a finite state machine that makes one state transition at each time unit (i.e. frame). First, a decision is made as to which state to move to next (possibly the same state). Then an output vector is generated according to the probability density function (pdf) of the current state
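The two-step generative process described above (choose the next state, then emit an output from that state's pdf) can be sketched as follows. To stay short, the sketch emits a scalar from a one-dimensional Gaussian instead of a mel-cepstral vector. The transition matrix, means, and standard deviations are made-up values, and `generate` is a hypothetical name.

```python
import random


def generate(trans, means, stds, n_frames, rng, start_state=0):
    """Sample a state sequence and an output sequence from a Gaussian HMM."""
    state = start_state
    states, outputs = [], []
    for _ in range(n_frames):
        # 1) Decide which state to move to (staying in place is allowed).
        state = rng.choices(range(len(trans)), weights=trans[state])[0]
        # 2) Emit an output according to the pdf of the current state.
        outputs.append(rng.gauss(means[state], stds[state]))
        states.append(state)
    return states, outputs


# Left-to-right model with three states (illustrative numbers only).
trans = [
    [0.8, 0.2, 0.0],
    [0.0, 0.7, 0.3],
    [0.0, 0.0, 1.0],
]
means = [1.0, 3.0, 2.0]
stds = [0.1, 0.1, 0.1]

states, outputs = generate(trans, means, stds, 20, random.Random(0))
print(states)
```

Because the transition matrix is left-to-right, the sampled state index never decreases, which mirrors how a phone model is traversed in time.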


F0 parameter modeling

The observed F0 has a continuous value in voiced regions, but no value exists in unvoiced regions. This kind of observation sequence can be modeled by assuming that an observed F0 value is drawn from a one-dimensional space and the “unvoiced” symbol from a zero-dimensional space.
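A minimal sketch of the likelihood of one F0 observation under such a two-space model: the voiced space carries a weight and a one-dimensional Gaussian, and the unvoiced space carries only a weight. The names `msd_likelihood` and `UNVOICED` and all numeric values are assumptions made for this example.

```python
import math

UNVOICED = None  # marker for a frame with no F0 value


def msd_likelihood(obs, w_voiced, mean, std):
    """Likelihood of one F0 observation in a single HMM state.

    w_voiced is the weight of the one-dimensional (voiced) space;
    the zero-dimensional (unvoiced) space gets the remaining weight.
    """
    if obs is UNVOICED:
        return 1.0 - w_voiced
    z = (obs - mean) / std
    density = math.exp(-0.5 * z * z) / (std * math.sqrt(2 * math.pi))
    return w_voiced * density


frames = [UNVOICED, 118.0, 121.0, UNVOICED]
likes = [msd_likelihood(f, w_voiced=0.7, mean=120.0, std=5.0) for f in frames]
print(likes)
```

The same state can therefore score both voiced and unvoiced frames, so no separate voicing classifier is needed before training.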

Calculation of dynamic features:

As mentioned, mel-cepstral coefficients are used as the spectral parameters. Their dynamic features Δc and Δ²c are calculated from the static coefficients of neighboring frames.

Dynamic features for F0: in unvoiced regions, p_t, Δp_t and Δ²p_t are defined as a discrete symbol.

When the dynamic features at a boundary between voiced and unvoiced regions cannot be calculated, they are also defined as a discrete symbol.
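The slide's own formula for Δc and Δ²c did not survive, so the sketch below uses one common choice of windows as an assumption: Δc_t = 0.5 (c_{t+1} − c_{t−1}) and Δ²c_t = c_{t−1} − 2 c_t + c_{t+1}. Repeating the edge frames at the sequence boundaries is a further assumption, and `apply_window` is a hypothetical name.

```python
def apply_window(seq, window):
    """Weighted sum over neighboring frames; edge frames are repeated."""
    half = len(window) // 2
    out = []
    for t in range(len(seq)):
        acc = 0.0
        for k, w in enumerate(window):
            idx = min(max(t + k - half, 0), len(seq) - 1)
            acc += w * seq[idx]
        out.append(acc)
    return out


DELTA_WIN = (-0.5, 0.0, 0.5)    # assumed first-order window
DELTA2_WIN = (1.0, -2.0, 1.0)   # assumed second-order window

c = [0.0, 1.0, 2.0, 3.0, 4.0]   # one coefficient track over five frames
delta = apply_window(c, DELTA_WIN)
delta2 = apply_window(c, DELTA2_WIN)
print(delta, delta2)
```

For a straight-line track the interior Δ values equal the slope and the interior Δ² values are zero, which is a quick sanity check on any choice of windows.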

Effect of dynamic features

By using dynamic features, the generated speech parameter vectors reflect not only the means of the static and dynamic feature vectors but also their covariances

The estimated trajectory becomes smoother

This has both good and bad effects

Multi-Stream HMM structure:

The sequence of mel-cepstral coefficient vectors is modeled by a continuous density HMM, and the F0 pattern by a multi-space probability distribution HMM

Putting all of this together in one model has some advantages

Synthesis part

An arbitrary text to be synthesized is converted to a context-dependent label sequence by a text analyzer.

For the TTS system, the text analyzer should be able to extract contextual information. However, no text analyzer is able to extract accentual phrases and to decide the accent type of each accentual phrase.

Some contextual factors

When we have an HMM for each phoneme:

{preceding, current, succeeding} phoneme

position of breath group in sentence

{preceding, current, succeeding} part-of-speech

position of current accentual phrase in current breath group

position of current phoneme in current accentual phrase
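A sketch of how a few of these contextual factors might be packed into one label per phoneme. The label layout (`left-current+right@position_length`) is invented for this example and is not the format of any particular toolkit. The whole input is treated as a single accentual phrase, and part-of-speech and breath-group factors are left out.

```python
def context_labels(phones, silence="sil"):
    """Attach neighboring-phoneme and position context to each phoneme."""
    padded = [silence] + list(phones) + [silence]
    labels = []
    for i in range(1, len(padded) - 1):
        labels.append({
            "preceding": padded[i - 1],
            "current": padded[i],
            "succeeding": padded[i + 1],
            "position_in_phrase": i,        # 1-based position of the phoneme
            "phrase_length": len(phones),
        })
    return labels


def to_string(label):
    """Render one label in the hypothetical text format used in this sketch."""
    return ("{preceding}-{current}+{succeeding}"
            "@{position_in_phrase}_{phrase_length}").format(**label)


strings = [to_string(lab) for lab in context_labels(["a", "b", "c"])]
print(strings)
```

Each distinct label can then select its own context-dependent HMM, which is why the number of models grows quickly as more factors are added.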

According to the obtained state durations, a sequence of mel-cepstral coefficients and F0 values, including voiced/unvoiced decisions, is generated from the sentence HMM by the speech parameter generation algorithm

Finally, speech is synthesized directly from the generated mel-cepstral coefficients and F0 values by the MLSA filter

Spectral representation & corresponding filter

cepstrum: LMA filter

generalized cepstrum: GLSA filter

mel-cepstrum: MLSA (Mel Log Spectrum Approximation) filter

mel-generalized cepstrum: MGLSA filter

LSP: LSP filter

PARCOR: all-pole lattice filter

LPC: all-pole filter

Advantages

Most of the advantages of statistical parametric synthesis over unit-selection synthesis are related to the flexibility that comes from the statistical modeling process.

– Voice characteristics, speaking styles, and emotions can be transformed.

– Although combining unit selection with voice conversion (VC) techniques can alleviate the inflexibility of unit selection, high-quality voice conversion is still problematic.

Adaptation (mimicking voices): adaptation techniques were originally developed in speech recognition to adjust a general acoustic model. They have also been applied to HMM-based speech synthesis to obtain speaker-specific synthesis systems from a small amount of speech data

Interpolation (mixing voices): interpolate parameters among representative HMM sets

- Can obtain new voices even when no adaptation data is available

- Gradually change speakers and speaking styles
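Interpolation between voices can be illustrated on the simplest parameter, the mean vector of one state's output distribution. The sketch below takes a weighted sum of the means from several HMM sets. It is only an assumption about one simple form of interpolation; how variances and other parameters are combined is not covered, and `interpolate_means` and the numbers are hypothetical.

```python
def interpolate_means(mean_sets, weights):
    """Weighted combination of corresponding mean vectors from several voices."""
    if abs(sum(weights) - 1.0) > 1e-9:
        raise ValueError("weights must sum to 1")
    dim = len(mean_sets[0])
    return [sum(w * m[d] for w, m in zip(weights, mean_sets)) for d in range(dim)]


# Mean vectors of the same state in two hypothetical speaker models.
speaker_a = [1.0, 2.0]
speaker_b = [3.0, 6.0]

halfway = interpolate_means([speaker_a, speaker_b], [0.5, 0.5])

# Sweeping the weight moves gradually from speaker A to speaker B.
morph = [interpolate_means([speaker_a, speaker_b], [1 - a, a])
         for a in (0.0, 0.25, 0.5, 0.75, 1.0)]
print(halfway)
```

No adaptation data is involved: the new voice is obtained purely from the parameters of the existing models.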

Some rule-based applications

KLATT C implementation:

http://www.cs.cmu.edu/afs/cs/project/airepository/ai/areas/speech/systems/klatt/0.html

A web page that generates a speech waveform online from given KLATT parameters:

http://www.asel.udel.edu/speech/tutorials/synthesis/Klatt.html

Formant synthesis demo using Fant’s formant model:

http://www.speech.kth.se/wavesurfer/formant/

TTS Online Demos

AT&T:

– http://www2.research.att.com/~ttsweb/tts/demo.php

Festival

– http://www.cstr.ed.ac.uk/projects/festival/morevoices.html

Cepstral

– http://www.cepstral.com/cgi-bin/demos/general

IBM

– http://www.research.ibm.com/tts/coredemo.shtml

Festival

Open source speech synthesis system

Designed for development and runtime use

– Used in many commercial and academic systems

– Distributed with RedHat 9.x, etc.

– Hundreds of thousands of users

Multilingual

– No built-in language

– Designed to allow addition of new languages

Additional tools for rapid voice development

– Statistical learning tools

– Scripts for building models

1/5/07

Text from Richard Sproat

Festival as software

http://festvox.org/festival/

General system for multi-lingual TTS

C/C++ code with Scheme scripting language

General replaceable modules:

– Lexicons, LTS, duration, intonation, phrasing, POS tagging, tokenizing, diphone/unit selection, signal processing

General tools

– Intonation analysis (f0, Tilt), signal processing, CART building, N-gram, SCFG, WFST


No fixed theories

New languages without new C++ code

Multiplatform (Unix/Windows)

Full sources in distribution

Free software


CMU FestVox project

Festival is an engine; how do you make voices?

Festvox: building synthetic voices:

– Tools, scripts, documentation

– Discussion and examples for building voices

– Example voice databases

– Step by step walkthroughs of processes

Support for English and other languages

Support for different waveform synthesis methods

– Diphone

– Unit selection

– Limited domain

Future Trends

Speaker adaptation

Language adaptation
