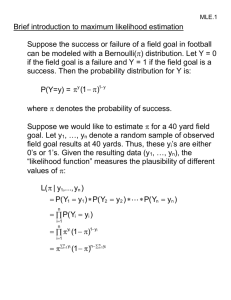

mle

Learning with Maximum Likelihood

Andrew W. Moore
Professor, School of Computer Science
Carnegie Mellon University
www.cs.cmu.edu/~awm
awm@cs.cmu.edu
412-268-7599

Note to other teachers and users of these slides. Andrew would be delighted if you found this source material useful in giving your own lectures. Feel free to use these slides verbatim, or to modify them to fit your own needs. PowerPoint originals are available. If you make use of a significant portion of these slides in your own lecture, please include this message, or the following link to the source repository of Andrew’s tutorials: http://www.cs.cmu.edu/~awm/tutorials. Comments and corrections gratefully received.

Copyright © 2001, 2004, Andrew W. Moore. Sep 6th, 2001

Maximum Likelihood learning of Gaussians for Data Mining
• Why we should care
• Learning Univariate Gaussians
• Learning Multivariate Gaussians
• What’s a biased estimator?
• Bayesian Learning of Gaussians

Why we should care
• Maximum Likelihood Estimation is a very very very very fundamental part of data analysis.
• “MLE for Gaussians” is training wheels for our future techniques
• Learning Gaussians is more useful than you might guess…

Learning Gaussians from Data
• Suppose you have $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$
• But you don’t know $\mu$ (you do know $\sigma^2$)
[Sneer]
MLE: For which $\mu$ is $x_1, x_2, \ldots, x_R$ most likely?
MAP: Which $\mu$ maximizes $p(\mu \mid x_1, x_2, \ldots, x_R, \sigma^2)$?
Despite this, we’ll spend 95% of our time on MLE. Why? Wait and see…

MLE for univariate Gaussian
• Suppose you have $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$
• But you don’t know $\mu$ (you do know $\sigma^2$)
• MLE: For which $\mu$ is $x_1, x_2, \ldots, x_R$ most likely?

$$\mu^{mle} = \arg\max_{\mu}\; p(x_1, x_2, \ldots, x_R \mid \mu, \sigma^2)$$

Algebra Euphoria

$$
\begin{aligned}
\mu^{mle} &= \arg\max_{\mu}\; p(x_1, x_2, \ldots, x_R \mid \mu, \sigma^2) \\
&= \arg\max_{\mu}\; \prod_{i=1}^{R} p(x_i \mid \mu, \sigma^2) && \text{(by i.i.d)} \\
&= \arg\max_{\mu}\; \sum_{i=1}^{R} \log p(x_i \mid \mu, \sigma^2) && \text{(monotonicity of log)} \\
&= \arg\max_{\mu}\; \sum_{i=1}^{R} \left( -\tfrac{1}{2}\log(2\pi\sigma^2) - \frac{(x_i - \mu)^2}{2\sigma^2} \right) && \text{(plug in formula for Gaussian)} \\
&= \arg\min_{\mu}\; \sum_{i=1}^{R} (x_i - \mu)^2 && \text{(after simplification)}
\end{aligned}
$$

Intermission: A General Scalar MLE strategy
Task: Find MLE $\theta$ assuming known form for $p(\text{Data} \mid \theta, \text{stuff})$
1. Write $LL = \log P(\text{Data} \mid \theta, \text{stuff})$
2. Work out $\partial LL / \partial\theta$ using high-school calculus
3. Set $\partial LL / \partial\theta = 0$ for a maximum, creating an equation in terms of $\theta$
4. Solve it*
5. Check that you’ve found a maximum rather than a minimum or saddle-point, and be careful if $\theta$ is constrained
*This is a perfect example of something that works perfectly in all textbook examples and usually involves surprising pain if you need it for something new.
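The five-step scalar recipe can be tried numerically on the Gaussian-mean problem. The following is a minimal sketch, not part of the original slides: the function names, the synthetic data, and the use of bisection for step 4 are all illustrative assumptions. It relies on the fact that, for known $\sigma^2$, $\partial LL/\partial\mu = \frac{1}{\sigma^2}\sum_i (x_i - \mu)$, which decreases as $\mu$ grows.

```python
import math
import random

def log_likelihood(mu, xs, sigma2):
    """Step 1: LL = log p(x_1..x_R | mu, sigma2) for i.i.d. Gaussian data."""
    r = len(xs)
    return (-0.5 * r * math.log(2 * math.pi * sigma2)
            - sum((x - mu) ** 2 for x in xs) / (2 * sigma2))

def d_log_likelihood(mu, xs, sigma2):
    """Step 2: dLL/dmu = (1 / sigma2) * sum(x_i - mu)."""
    return sum(x - mu for x in xs) / sigma2

def solve_for_zero(f, lo, hi, tol=1e-10):
    """Steps 3-4: bisection for the root of a decreasing function f on [lo, hi]."""
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if f(mid) > 0:
            lo = mid   # derivative still positive: the root lies to the right
        else:
            hi = mid   # derivative non-positive: the root lies to the left
    return 0.5 * (lo + hi)

# Synthetic data (an assumption for illustration): known variance, unknown mean.
rng = random.Random(0)
sigma2 = 4.0
xs = [rng.gauss(3.0, math.sqrt(sigma2)) for _ in range(200)]

mu_mle = solve_for_zero(lambda mu: d_log_likelihood(mu, xs, sigma2), min(xs), max(xs))

# Step 5: check that LL is lower a little to either side of the solution.
ll_at_solution = log_likelihood(mu_mle, xs, sigma2)
is_max = (ll_at_solution > log_likelihood(mu_mle - 0.1, xs, sigma2)
          and ll_at_solution > log_likelihood(mu_mle + 0.1, xs, sigma2))

sample_mean = sum(xs) / len(xs)
print(mu_mle, sample_mean, is_max)
```

The numerical root should agree with the sample mean to within the bisection tolerance, which is the closed-form answer the algebra leads to.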
The MLE

$$\mu^{mle} = \arg\max_{\mu}\; p(x_1, x_2, \ldots, x_R \mid \mu, \sigma^2) = \arg\min_{\mu}\; \sum_{i=1}^{R} (x_i - \mu)^2$$

This is the $\mu$ such that $\partial LL / \partial\mu = 0$. Dropping the constant factor, that means

$$0 = \frac{\partial}{\partial\mu} \sum_{i=1}^{R} (x_i - \mu)^2 = -\sum_{i=1}^{R} 2(x_i - \mu)$$

Thus

$$\mu = \frac{1}{R} \sum_{i=1}^{R} x_i$$

Lawks-a-lawdy!

$$\mu^{mle} = \frac{1}{R} \sum_{i=1}^{R} x_i$$

• The best estimate of the mean of a distribution is the mean of the sample!
At first sight: This kind of pedantic, algebra-filled and ultimately unsurprising fact is exactly the reason people throw down their “Statistics” book and pick up their “Agent Based Evolutionary Data Mining Using The Neuro-Fuzz Transform” book.

A General MLE strategy
Suppose $\theta = (\theta_1, \theta_2, \ldots, \theta_n)^T$ is a vector of parameters.
Task: Find MLE $\theta$ assuming known form for $p(\text{Data} \mid \theta, \text{stuff})$
1. Write $LL = \log P(\text{Data} \mid \theta, \text{stuff})$
2. Work out $\partial LL / \partial\theta$ using high-school calculus:

$$\frac{\partial LL}{\partial \theta} = \left( \frac{\partial LL}{\partial \theta_1},\; \frac{\partial LL}{\partial \theta_2},\; \ldots,\; \frac{\partial LL}{\partial \theta_n} \right)^T$$

3. Solve the set of simultaneous equations

$$\frac{\partial LL}{\partial \theta_1} = 0, \quad \frac{\partial LL}{\partial \theta_2} = 0, \quad \ldots, \quad \frac{\partial LL}{\partial \theta_n} = 0$$

4. Check that you’re at a maximum
If you can’t solve them, what should you do?
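The slide leaves that last question open. One common answer, offered here as an assumption rather than as the lecture's own, is to climb the log-likelihood numerically. The sketch below runs plain gradient ascent on a Gaussian with both parameters unknown; it optimizes $s = \log\sigma^2$ instead of $\sigma^2$ so the variance stays positive (the "be careful if $\theta$ is constrained" caveat). The function names, step size, and iteration count are hypothetical choices, not tuned values.

```python
import math
import random

def gradient(mu, s, xs):
    """Gradient of the average log-likelihood w.r.t. (mu, s), with s = log(sigma^2)."""
    r = len(xs)
    inv_var = math.exp(-s)
    mean_sq_dev = sum((x - mu) ** 2 for x in xs) / r
    g_mu = inv_var * (sum(xs) / r - mu)
    g_s = -0.5 + 0.5 * inv_var * mean_sq_dev
    return g_mu, g_s

def fit_by_gradient_ascent(xs, steps=5000, lr=0.1):
    """Follow the gradient uphill from (mu, s) = (0, 0); returns (mu, sigma^2)."""
    mu, s = 0.0, 0.0
    for _ in range(steps):
        g_mu, g_s = gradient(mu, s, xs)
        mu += lr * g_mu
        s += lr * g_s
    return mu, math.exp(s)

# Synthetic data, assumed purely for illustration.
rng = random.Random(1)
xs = [rng.gauss(1.0, 2.0) for _ in range(300)]

mu_hat, var_hat = fit_by_gradient_ascent(xs)

# Closed-form values for comparison: the sample mean and the 1/R variance.
closed_mu = sum(xs) / len(xs)
closed_var = sum((x - closed_mu) ** 2 for x in xs) / len(xs)
print(mu_hat, closed_mu)
print(var_hat, closed_var)
```

For this model the simultaneous equations do have a closed-form solution, so the comparison is only a sanity check; the numerical route matters when no such solution exists.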
MLE for univariate Gaussian
• Suppose you have $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$
• But you don’t know $\mu$ or $\sigma^2$
• MLE: For which $\theta = (\mu, \sigma^2)$ is $x_1, x_2, \ldots, x_R$ most likely?

$$\log p(x_1, x_2, \ldots, x_R \mid \mu, \sigma^2) = -R\left(\tfrac{1}{2}\log 2\pi + \tfrac{1}{2}\log\sigma^2\right) - \frac{1}{2\sigma^2}\sum_{i=1}^{R}(x_i - \mu)^2$$

$$\frac{\partial LL}{\partial \mu} = \frac{1}{\sigma^2}\sum_{i=1}^{R}(x_i - \mu)$$

$$\frac{\partial LL}{\partial \sigma^2} = -\frac{R}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_{i=1}^{R}(x_i - \mu)^2$$

Setting both derivatives to zero:

$$0 = \frac{1}{\sigma^2}\sum_{i=1}^{R}(x_i - \mu) \;\Rightarrow\; \mu = \frac{1}{R}\sum_{i=1}^{R} x_i$$

$$0 = -\frac{R}{2\sigma^2} + \frac{1}{2\sigma^4}\sum_{i=1}^{R}(x_i - \mu)^2 \;\Rightarrow\; \sigma^2 = \frac{1}{R}\sum_{i=1}^{R}(x_i - \mu)^2$$

So

$$\mu^{mle} = \frac{1}{R}\sum_{i=1}^{R} x_i, \qquad \sigma^2_{mle} = \frac{1}{R}\sum_{i=1}^{R}(x_i - \mu^{mle})^2$$

Unbiased Estimators
• An estimator of a parameter is unbiased if the expected value of the estimate is the same as the true value of the parameter.
• If $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$ then

$$E[\mu^{mle}] = E\left[\frac{1}{R}\sum_{i=1}^{R} x_i\right] = \mu$$

$\mu^{mle}$ is unbiased

Biased Estimators
• An estimator of a parameter is biased if the expected value of the estimate is different from the true value of the parameter.
• If $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$ then

$$E[\sigma^2_{mle}] = E\left[\frac{1}{R}\sum_{i=1}^{R}(x_i - \mu^{mle})^2\right] = E\left[\frac{1}{R}\sum_{i=1}^{R}\left(x_i - \frac{1}{R}\sum_{j=1}^{R} x_j\right)^2\right] \neq \sigma^2$$

$\sigma^2_{mle}$ is biased
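The bias of $\sigma^2_{mle}$ can be seen in a small simulation. This is an illustrative sketch with hypothetical function names, not code from the lecture: it draws many tiny samples from $N(0, 1)$, averages the $1/R$ variance estimate over them, and rescales by $R/(R-1)$ for comparison. Because $E[\sigma^2_{mle}] = (1 - 1/R)\,\sigma^2$, the first average should land near 0.8 for $R = 5$ and the rescaled one near 1.0, up to simulation noise.

```python
import random

def mle_estimates(xs):
    """Return (mu_mle, sigma2_mle): the sample mean and the 1/R variance estimate."""
    r = len(xs)
    mu = sum(xs) / r
    var = sum((x - mu) ** 2 for x in xs) / r
    return mu, var

def average_variance_estimates(r, trials, seed=0):
    """Average sigma2_mle over many size-r samples drawn from N(0, 1)."""
    rng = random.Random(seed)
    total_mle = 0.0
    for _ in range(trials):
        xs = [rng.gauss(0.0, 1.0) for _ in range(r)]
        _, var = mle_estimates(xs)
        total_mle += var
    avg_mle = total_mle / trials
    # Rescaling by R / (R - 1) gives the average of the unbiased estimator.
    avg_unbiased = avg_mle * r / (r - 1)
    return avg_mle, avg_unbiased

avg_mle, avg_unbiased = average_variance_estimates(r=5, trials=20000)
print(avg_mle, avg_unbiased)
```

With the true variance fixed at 1, any systematic gap between the first number and 1 is the bias itself rather than sampling noise.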
MLE Variance Bias
• If $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$ then

$$E[\sigma^2_{mle}] = E\left[\frac{1}{R}\sum_{i=1}^{R}\left(x_i - \frac{1}{R}\sum_{j=1}^{R} x_j\right)^2\right] = \left(1 - \frac{1}{R}\right)\sigma^2 \neq \sigma^2$$

Intuition check: consider the case of R=1
Why should our guts expect that $\sigma^2_{mle}$ would be an underestimate of true $\sigma^2$?
How could you prove that?

Unbiased estimate of Variance
• If $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$ then $E[\sigma^2_{mle}] = \left(1 - \frac{1}{R}\right)\sigma^2 \neq \sigma^2$

So define

$$\sigma^2_{unbiased} = \frac{\sigma^2_{mle}}{1 - \frac{1}{R}} \qquad \text{So } E[\sigma^2_{unbiased}] = \sigma^2$$

$$\sigma^2_{unbiased} = \frac{1}{R - 1}\sum_{i=1}^{R}(x_i - \mu^{mle})^2$$

Unbiaseditude discussion
• Which is best?

$$\sigma^2_{mle} = \frac{1}{R}\sum_{i=1}^{R}(x_i - \mu^{mle})^2 \qquad \sigma^2_{unbiased} = \frac{1}{R - 1}\sum_{i=1}^{R}(x_i - \mu^{mle})^2$$

Answer:
• It depends on the task
• And doesn’t make much difference once R → large

Don’t get too excited about being unbiased
• Assume $x_1, x_2, \ldots, x_R \sim$ (i.i.d) $N(\mu, \sigma^2)$
• Suppose we had these estimators for the mean

$$\mu^{suboptimal} = \frac{1}{R + 7\sqrt{R}}\sum_{i=1}^{R} x_i \qquad \mu^{crap} = x_1$$

Are either of these unbiased?
Will either of them asymptote to the correct value as R gets large?
Which is more useful?

MLE for m-dimensional Gaussian
• Suppose you have $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R \sim$ (i.i.d) $N(\boldsymbol{\mu}, \Sigma)$
• But you don’t know $\boldsymbol{\mu}$ or $\Sigma$
• MLE: For which $\theta = (\boldsymbol{\mu}, \Sigma)$ is $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R$ most likely?

$$\boldsymbol{\mu}^{mle} = \frac{1}{R}\sum_{k=1}^{R}\mathbf{x}_k \qquad \Sigma^{mle} = \frac{1}{R}\sum_{k=1}^{R}(\mathbf{x}_k - \boldsymbol{\mu}^{mle})(\mathbf{x}_k - \boldsymbol{\mu}^{mle})^T$$
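The m-dimensional estimates are the sample mean vector and the $1/R$ sample covariance matrix. The sketch below computes both with plain Python lists so it has no dependencies; the function names and the four-record toy dataset are assumptions made for illustration only.

```python
def mle_mean(xs):
    """mu_mle: the average of the R records, taken attribute by attribute."""
    r, m = len(xs), len(xs[0])
    return [sum(x[i] for x in xs) / r for i in range(m)]

def mle_covariance(xs):
    """Sigma_mle: (1/R) * sum over records of (x - mu)(x - mu)^T, as an m x m list."""
    r, m = len(xs), len(xs[0])
    mu = mle_mean(xs)
    return [[sum((x[i] - mu[i]) * (x[j] - mu[j]) for x in xs) / r
             for j in range(m)]
            for i in range(m)]

# Toy dataset: the four corners of a square with side 2.
records = [(0.0, 0.0), (2.0, 0.0), (0.0, 2.0), (2.0, 2.0)]
mu_hat = mle_mean(records)
sigma_hat = mle_covariance(records)
print(mu_hat)     # [1.0, 1.0]
print(sigma_hat)  # [[1.0, 0.0], [0.0, 1.0]]
```

The result is symmetric by construction, because entry (i, j) and entry (j, i) sum the same products.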
In terms of individual components, for $1 \le i \le m$ and $1 \le j \le m$:

$$\mu_i^{mle} = \frac{1}{R}\sum_{k=1}^{R} x_{ki} \qquad \sigma_{ij}^{mle} = \frac{1}{R}\sum_{k=1}^{R}(x_{ki} - \mu_i^{mle})(x_{kj} - \mu_j^{mle})$$

where $x_{ki}$ is the value of the ith component of $\mathbf{x}_k$ (the ith attribute of the kth record), $\mu_i^{mle}$ is the ith component of $\boldsymbol{\mu}^{mle}$, and $\sigma_{ij}^{mle}$ is the (i,j)th component of $\Sigma^{mle}$.

Q: How would you prove this?
A: Just plug through the MLE recipe.
Note how $\Sigma^{mle}$ is forced to be symmetric non-negative definite.
Note the unbiased case:

$$\Sigma^{unbiased} = \frac{\Sigma^{mle}}{1 - \frac{1}{R}} = \frac{1}{R - 1}\sum_{k=1}^{R}(\mathbf{x}_k - \boldsymbol{\mu}^{mle})(\mathbf{x}_k - \boldsymbol{\mu}^{mle})^T$$

How many datapoints would you need before the Gaussian has a chance of being non-degenerate?

Confidence intervals
We need to talk
We need to discuss how accurate we expect $\boldsymbol{\mu}^{mle}$ and $\Sigma^{mle}$ to be as a function of R
And we need to consider how to estimate these accuracies from data…
• Analytically *
• Non-parametrically (using randomization and bootstrapping) *
But we won’t. Not yet.
*Will be discussed in future Andrew lectures…just before we need this technology.

Structural error
Actually, we need to talk about something else too..
What if we do all this analysis when the true distribution is in fact not Gaussian?
How can we tell? *
How can we survive? *
*Will be discussed in future Andrew lectures…just before we need this technology.

Gaussian MLE in action
Using R=392 cars from the “MPG” UCI dataset supplied by Ross Quinlan

Data-starved Gaussian MLE
Using three subsets of MPG. Each subset has 6 randomly-chosen cars.

Bivariate MLE in action

Multivariate MLE
Covariance matrices are not exciting to look at

Being Bayesian: MAP estimates for Gaussians
• Suppose you have $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R \sim$ (i.i.d) $N(\boldsymbol{\mu}, \Sigma)$
• But you don’t know $\boldsymbol{\mu}$ or $\Sigma$
• MAP: Which $(\boldsymbol{\mu}, \Sigma)$ maximizes $p(\boldsymbol{\mu}, \Sigma \mid \mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R)$?
Step 1: Put a prior on $(\boldsymbol{\mu}, \Sigma)$
Step 1a: Put a prior on $\Sigma$

$$(\nu_0 - m - 1)\,\Sigma \sim IW(\nu_0,\; (\nu_0 - m - 1)\,\Sigma_0)$$

This thing is called the Inverse-Wishart distribution. A PDF over SPD matrices!
• $\Sigma_0$: (Roughly) my best guess of $\Sigma$, with $E[\Sigma] = \Sigma_0$
• $\nu_0$ small: “I am not sure about my guess of $\Sigma_0$”
• $\nu_0$ large: “I’m pretty sure about my guess of $\Sigma_0$”
Step 1b: Put a prior on $\boldsymbol{\mu} \mid \Sigma$

$$\boldsymbol{\mu} \mid \Sigma \sim N(\boldsymbol{\mu}_0,\; \Sigma / \kappa_0)$$

Together, “$\Sigma$” and “$\boldsymbol{\mu} \mid \Sigma$” define a joint distribution on $(\boldsymbol{\mu}, \Sigma)$
• $\boldsymbol{\mu}_0$: My best guess of $\boldsymbol{\mu}$, with $E[\boldsymbol{\mu}] = \boldsymbol{\mu}_0$
• $\kappa_0$ small: “I am not sure about my guess of $\boldsymbol{\mu}_0$”
• $\kappa_0$ large: “I’m pretty sure about my guess of $\boldsymbol{\mu}_0$”
Notice how we are forced to express our ignorance of $\boldsymbol{\mu}$ proportionally to $\Sigma$

Why do we use this form of prior?
Actually, we don’t have to.
But it is computationally and algebraically convenient…
…it’s a conjugate prior.
Being Bayesian: MAP estimates for Gaussians
• Suppose you have $\mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R \sim$ (i.i.d) $N(\boldsymbol{\mu}, \Sigma)$
• MAP: Which $(\boldsymbol{\mu}, \Sigma)$ maximizes $p(\boldsymbol{\mu}, \Sigma \mid \mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R)$?
Step 1: Prior: $(\nu_0 - m - 1)\,\Sigma \sim IW(\nu_0,\; (\nu_0 - m - 1)\,\Sigma_0)$, $\boldsymbol{\mu} \mid \Sigma \sim N(\boldsymbol{\mu}_0,\; \Sigma / \kappa_0)$
Step 2:

$$\bar{\mathbf{x}} = \frac{1}{R}\sum_{k=1}^{R}\mathbf{x}_k \qquad \boldsymbol{\mu}_R = \frac{\kappa_0 \boldsymbol{\mu}_0 + R\,\bar{\mathbf{x}}}{\kappa_0 + R} \qquad \nu_R = \nu_0 + R \qquad \kappa_R = \kappa_0 + R$$

$$(\nu_R - m - 1)\,\Sigma_R = (\nu_0 - m - 1)\,\Sigma_0 + \sum_{k=1}^{R}(\mathbf{x}_k - \bar{\mathbf{x}})(\mathbf{x}_k - \bar{\mathbf{x}})^T + \frac{(\bar{\mathbf{x}} - \boldsymbol{\mu}_0)(\bar{\mathbf{x}} - \boldsymbol{\mu}_0)^T}{1/\kappa_0 + 1/R}$$

Step 3: Posterior: $(\nu_R - m - 1)\,\Sigma \sim IW(\nu_R,\; (\nu_R - m - 1)\,\Sigma_R)$, $\boldsymbol{\mu} \mid \Sigma \sim N(\boldsymbol{\mu}_R,\; \Sigma / \kappa_R)$
Result: $\boldsymbol{\mu}^{map} = \boldsymbol{\mu}_R$, $E[\Sigma \mid \mathbf{x}_1, \mathbf{x}_2, \ldots, \mathbf{x}_R] = \Sigma_R$

• Look carefully at what these formulae are doing. It’s all very sensible.
• Conjugate priors mean prior form and posterior form are same and characterized by “sufficient statistics” of the data.
• The marginal distribution on $\boldsymbol{\mu}$ is a student-t
• One point of view: it’s pretty academic if R > 30

Where we’re at
• Classifier (Inputs → Predict category): Joint BC, Naïve BC (categorical inputs only); Dec Tree (mixed real / cat okay)
• Density Estimator (Inputs → Probability): Joint DE, Naïve DE (categorical inputs only); Gauss DE (real-valued inputs only)
• Regressor (Inputs → Predict real no.)

What you should know
• The Recipe for MLE
• Why do we sometimes prefer MLE to MAP?
• Understand MLE estimation of Gaussian parameters
• Understand “biased estimator” versus “unbiased estimator”
• Appreciate the outline behind Bayesian estimation of Gaussian parameters
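Looking back at Step 2 of the Bayesian recipe, the update is easiest to follow in one dimension. The sketch below is a scalar ($m = 1$) illustration under stated assumptions, not the lecture's code: the function name and the example numbers are hypothetical, and it assumes the inverse-Wishart convention in which $E[\Sigma] = \Psi / (\nu - m - 1)$ for $\Sigma \sim IW(\nu, \Psi)$, so that the returned `sigma2_r` plays the role of the posterior mean of the variance.

```python
def posterior_update(xs, mu0, kappa0, sigma2_0, nu0, m=1):
    """Scalar version of the Step 2 update; returns (mu_R, kappa_R, nu_R, sigma2_R)."""
    r = len(xs)
    xbar = sum(xs) / r
    scatter = sum((x - xbar) ** 2 for x in xs)   # sum of squared deviations from xbar
    kappa_r = kappa0 + r
    nu_r = nu0 + r
    # The posterior mean is a weighted blend of the prior guess and the sample mean.
    mu_r = (kappa0 * mu0 + r * xbar) / kappa_r
    # Extra spread contributed by disagreement between the prior guess and the data.
    disagreement = (xbar - mu0) ** 2 / (1.0 / kappa0 + 1.0 / r)
    sigma2_r = ((nu0 - m - 1) * sigma2_0 + scatter + disagreement) / (nu_r - m - 1)
    return mu_r, kappa_r, nu_r, sigma2_r

# Two observations and a prior centred on 0 that is worth kappa0 = 2 observations.
mu_r, kappa_r, nu_r, sigma2_r = posterior_update(
    [4.0, 6.0], mu0=0.0, kappa0=2.0, sigma2_0=1.0, nu0=4.0)
print(mu_r, kappa_r, nu_r, sigma2_r)  # 2.5 4.0 6.0 7.25
```

With `kappa0` equal to the number of records, the MAP mean sits exactly halfway between the prior guess and the sample mean; as R grows it moves toward the sample mean, which fits the remark that the distinction becomes academic for large R.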
Useful exercise
• We’d already done some MLE in this class without even telling you!
• Suppose categorical arity-n inputs $x_1, x_2, \ldots, x_R \sim$ (i.i.d.) from a multinomial $M(p_1, p_2, \ldots, p_n)$ where $P(x_k = j \mid \mathbf{p}) = p_j$
• What is the MLE $\mathbf{p} = (p_1, p_2, \ldots, p_n)$?
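For readers who want to check their answer to the exercise: the standard result, which the slide does not state, is that the MLE for each $p_j$ is the fraction of records equal to $j$. A minimal sketch follows, with a hypothetical function name and made-up data, and with categories assumed to be labelled 1..n.

```python
from collections import Counter

def multinomial_mle(xs, n):
    """Return [p_1, ..., p_n] with p_j = (number of records equal to j) / R."""
    counts = Counter(xs)
    r = len(xs)
    return [counts.get(j, 0) / r for j in range(1, n + 1)]

xs = [1, 3, 3, 2, 3, 1, 3, 3]
p_hat = multinomial_mle(xs, n=4)
print(p_hat)  # [0.25, 0.125, 0.625, 0.0]
```

A category that never appears gets probability exactly zero under MLE, which is one practical reason to consider a prior.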