ANS-1

File organization refers to the relationship of the key of the record to the physical location of that record in the computer file.

A file may be either a physical file or a logical file. A physical file is a physical unit, such as a magnetic tape or a disk.

A logical file on the other hand is a complete set of records for a specific application or purpose.

A logical file may occupy a part of physical file or may extend over more than one physical file.

The objectives of computer-based file organization are:

Ease of file creation and maintenance

Efficient means of storing and retrieving information.

The various file organization methods are:

Sequential access.

Direct or random access.

Index sequential access.

The selection of a particular method depends on:

Type of application.

Method of processing.

Size of the file.

File inquiry capabilities.

File volatility.

The response time.

1. Sequential access method: Here the records are arranged in ascending, descending, or chronological order of a key field, which may be numeric, alphabetic, or both. Since the records are ordered by a key field, no storage location identification is needed. It is used in applications like payroll management, where the file is processed in its entirety, i.e. each record is processed. To access a particular record, each preceding record must be examined until the desired record is found.

Sequential files are normally created and stored on magnetic tape using batch processing method.
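The search described above can be sketched as follows. This is only an illustration: the payroll records and the function name sequential_find are assumptions, and the file is modelled as an in-memory list sorted on the key field.

```python
# Hypothetical payroll records, kept in ascending order of the key field (emp_no).
PAYROLL = [(101, "Asha"), (104, "Ravi"), (109, "Meena"), (115, "Vijay")]

def sequential_find(records, key):
    """Examine each record in turn; return (record, records_examined)."""
    examined = 0
    for record in records:
        examined += 1
        if record[0] == key:
            return record, examined
        if record[0] > key:
            # The file is sorted, so the key cannot appear later.
            break
    return None, examined

record, examined = sequential_find(PAYROLL, 109)
print(record, examined)
```

Finding key 109 requires reading the two records stored before it, which is why this method suits runs that process most of the file rather than single enquiries.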

Advantages:

Simple to understand.

Easy to maintain and organize

Loading a record requires only the record key.

Relatively inexpensive I/O media and devices can be used.

Easy to reconstruct the files.

Efficient when the proportion of file records to be processed is high.

Disadvantages:

Entire file must be processed, to get specific information.

Inefficient when the activity rate is very low, i.e. when only a few records are processed in a run.

Transactions must be sorted and placed in sequence prior to processing.

Data redundancy is high, as the same data can be stored in different places with different keys.

Impossible to handle random enquiries.

2. Direct access file organization (random or relative organization): Files of this type are stored on direct access storage devices such as magnetic disks, using an identifying key. The identifying key relates to the record's actual storage position in the file. The computer can directly locate the desired record by its key without having to search through any other record first. Here the records are stored randomly, hence the name random file. It is used in online systems where response and updating must be fast.
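One common way to relate a key to a storage position is to hash the key to a slot number. The sketch below assumes division-remainder hashing with linear probing for collisions; the slot count, the sample records, and the function names are all hypothetical, and a real direct access file would map the slot number to a disk address.

```python
SLOTS = 7  # hypothetical file size, in record slots

def address(key):
    """Map a record key to a relative slot number (division-remainder hashing)."""
    return key % SLOTS

def store(file, key, value):
    """Place the record in its home slot, or the next free slot on a collision."""
    slot = address(key)
    for step in range(SLOTS):
        probe = (slot + step) % SLOTS
        if file[probe] is None or file[probe][0] == key:
            file[probe] = (key, value)
            return probe
    raise RuntimeError("file is full")

def fetch(file, key):
    """Go straight to the home slot; probe onward only if it holds another key."""
    slot = address(key)
    for step in range(SLOTS):
        probe = (slot + step) % SLOTS
        if file[probe] is None:
            return None
        if file[probe][0] == key:
            return file[probe][1]
    return None

disk_file = [None] * SLOTS
for emp_no, name in [(101, "Asha"), (104, "Ravi"), (108, "Meena")]:
    store(disk_file, emp_no, name)
print(fetch(disk_file, 108))
```

Keys 101 and 108 hash to the same slot here, so the second one is placed in the next free slot. Unused slots like the remaining ones in this file are the reason direct access tends to use storage space less efficiently.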

Advantages:

Records can be accessed immediately for updating.

Several files can be simultaneously updated during transaction processing.

Transactions need not be sorted.

Existing records can be amended or modified.

Very easy to handle random enquiries.

Most suitable for interactive online applications.

Disadvantages:

Data may be accidentally erased or over written unless special precautions are taken.

Risk of loss of accuracy and breach of security. Special backup and reconstruction procedures must be

established.

Less efficient use of storage space.

Expensive hardware and software are required.

High complexity in programming.

Updating the file is more difficult than with the sequential method.

3. Indexed sequential access organization: Here the records are stored sequentially on a direct access device, i.e. a magnetic disk, and the data is accessible both randomly and sequentially. It combines the positive aspects of both sequential and direct access files.

This type of file organization is suitable for both batch processing and online processing.

Here, the records are organized in sequence for efficient processing of large batch jobs, but an index is also used to speed up access to the records.

Indexing permits access to selected records without searching the entire file.
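A minimal sketch of this idea, assuming the file is divided into fixed-size blocks and the index stores the highest key in each block. The block contents and function names are hypothetical.

```python
from bisect import bisect_left

# Hypothetical file: records sorted by key and grouped into fixed-size blocks.
BLOCKS = [
    [(101, "Asha"), (104, "Ravi")],
    [(109, "Meena"), (115, "Vijay")],
    [(120, "Kiran"), (131, "Leela")],
]
# The index holds the highest key stored in each block.
INDEX = [block[-1][0] for block in BLOCKS]

def indexed_find(key):
    """Use the index to pick one block, then scan only that block."""
    block_no = bisect_left(INDEX, key)
    if block_no == len(BLOCKS):
        return None  # key is larger than every key in the file
    for record in BLOCKS[block_no]:
        if record[0] == key:
            return record
    return None

def sequential_scan():
    """Batch processing still reads every record in key order."""
    return [record for block in BLOCKS for record in block]

print(indexed_find(115), len(sequential_scan()))
```

A single enquiry touches the index and one block, while a batch run can still read the whole file in key order.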

Advantages:

Permits efficient and economic use of sequential processing technique when the activity rate is high.

Permits quick access to records, in a relatively efficient way when this activity is a fraction of the work

load.

Disadvantages:

Slower retrieval than direct access, since the index must be searched before the record is read.

Does not use the storage space efficiently.

Hardware and software used are relatively expensive.

ANS-2

ROLE OF DBA

One of the main reasons for using DBMS is to have a central control of both data and the programs accessing those

data. A person who has such control over the system is called a Database Administrator (DBA). The following are the

functions of a Database Administrator:

1. Schema Definition

2. Storage structure and access method definition

3. Schema and physical organization modification

4. Granting authorization for data access

5. Routine Maintenance

Schema Definition

The Database Administrator creates the database schema by executing DDL statements. The schema includes the logical

structure of database tables (relations), such as the data types of attributes, the lengths of attributes, integrity constraints, etc.

Storage structure and access method definition

The DBA defines how database tables and indexes are stored, for example as flat files, heaps, or B+ trees.

Schema and physical organization modification

The DBA carries out changes to the existing schema and physical organization.

Granting authorization for data access

The DBA provides different access rights to the users according to their level. Ordinary users might have highly

restricted access to data, while users higher in the hierarchy, up to the administrator, get more access rights.

Routine Maintenance

Some of the routine maintenance activities of a DBA are given below:

Taking backups of the database periodically.

Ensuring enough disk space is available at all times.

Monitoring jobs running on the database.

Ensuring that performance is not degraded by expensive tasks submitted by users.

Performance tuning.

USE OF DATA DICTIONARY BY DBA

A data dictionary, or metadata repository, is a "centralized repository of information about data such as

meaning, relationships to other data, origin, usage, and format." The term may have one of several closely related

meanings pertaining to databases and database management systems (DBMS):

a document describing a database or collection of databases

an integral component of a DBMS that is required to determine its structure

a piece of middleware that extends or supplants the native data dictionary of a DBMS

The terms Data Dictionary and Data Repository are used to indicate a more general software utility than a catalogue.

A Catalogue is closely coupled with the DBMS Software; it provides the information stored in it to user and the DBA,

but it is mainly accessed by the various software modules of the DBMS itself, such as DDL and DML compilers, the

query optimiser, the transaction processor, report generators, and the constraint enforcer. On the other hand, a Data

Dictionary is a data structure that stores meta-data, i.e., data about data. The software package for a stand-alone Data

Dictionary or Data Repository may interact with the software modules of the DBMS, but it is mainly used by the

Designers, Users and Administrators of a computer system for information resource management. These systems are

used to maintain information on system hardware and software configuration, documentation, application and users as

well as other information relevant to system administration.

If a data dictionary system is used only by the designers, users, and administrators and not by the DBMS software, it

is called a Passive Data Dictionary; otherwise, it is called an Active Data Dictionary or Data Directory. An Active

Data Dictionary is automatically updated as changes occur in the database. A Passive Data Dictionary must be

manually updated.

The Data Dictionary consists of record types (tables) created in the database by system-generated command files,

tailored for each supported back-end DBMS. Command files contain SQL Statements for CREATE TABLE, CREATE

UNIQUE INDEX, ALTER TABLE (for referential integrity), etc., using the specific statement required by that type of

database.

Database users and application developers can benefit from an authoritative data dictionary document that catalogs

the organization, contents, and conventions of one or more databases.[2] This typically includes the names and

descriptions of various tables and fields in each database, plus additional details, like the type and length of each data

element. There is no universal standard as to the level of detail in such a document.
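As a small illustration of a catalogue maintained by the DBMS itself, the sketch below uses SQLite through Python's sqlite3 module. The customer table is hypothetical; sqlite_master and PRAGMA table_info are SQLite's own catalogue facilities, and other DBMSs expose their catalogues under different names.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT NOT NULL)"
)

# sqlite_master is SQLite's built-in catalogue: one row per table, index, view, or trigger.
catalog = conn.execute("SELECT type, name FROM sqlite_master").fetchall()

# PRAGMA table_info lists each column as (cid, name, type, notnull, dflt_value, pk).
columns = [(row[1], row[2]) for row in conn.execute("PRAGMA table_info(customer)")]

print(catalog)
print(columns)
```

The catalogue entry appears as soon as the CREATE TABLE statement runs, with no manual step, which is the behaviour the notes attribute to an active data dictionary.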

ANS-3

(I)

INVERTED FILE ORGANISATION

REFER TO PAGE-76 BLOCK-1 TOPIC-3.6.3 & FIGURE-27

(II)

REFERENTIAL INTEGRITY

Referential integrity is a property of data which, when satisfied, requires every value of one attribute (column) of

a relation (table) to exist as a value of another attribute in a different (or the same) relation (table).

For referential integrity to hold in a relational database, any field in a table that is declared a foreign key can

contain only values from a parent table's primary key or a candidate key. For instance, deleting a record that

contains a value referred to by a foreign key in another table would break referential integrity. Some relational

database management systems (RDBMS) can enforce referential integrity, normally either by deleting the foreign

key rows as well to maintain integrity, or by returning an error and not performing the delete. Which method is

used may be determined by a referential integrity constraint defined in a data dictionary.
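The two enforcement methods mentioned above (rejecting the delete, or deleting the referencing rows as well) can be tried with SQLite through Python's sqlite3 module. This is only a sketch: the dept, emp_restrict, and emp_cascade tables are hypothetical, and SQLite checks foreign keys only after PRAGMA foreign_keys = ON.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE dept (dept_no INTEGER PRIMARY KEY);
CREATE TABLE emp_restrict (emp_no INTEGER PRIMARY KEY,
    dept_no INTEGER REFERENCES dept(dept_no) ON DELETE RESTRICT);
CREATE TABLE emp_cascade (emp_no INTEGER PRIMARY KEY,
    dept_no INTEGER REFERENCES dept(dept_no) ON DELETE CASCADE);
INSERT INTO dept VALUES (10), (20);
INSERT INTO emp_restrict VALUES (1, 10);
INSERT INTO emp_cascade VALUES (2, 20);
""")

# Method 1: the delete is refused because emp_restrict still refers to dept 10.
try:
    conn.execute("DELETE FROM dept WHERE dept_no = 10")
    rejected = False
except sqlite3.IntegrityError:
    rejected = True

# Method 2: deleting dept 20 also deletes the emp_cascade row that refers to it.
conn.execute("DELETE FROM dept WHERE dept_no = 20")
remaining = conn.execute("SELECT COUNT(*) FROM emp_cascade").fetchone()[0]
depts_left = conn.execute("SELECT dept_no FROM dept").fetchall()

print(rejected, remaining, depts_left)
```

Which behaviour applies is chosen per constraint by the ON DELETE clause, so it is part of the schema rather than of each application program.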

Benefits of Referential Integrity

Improved data quality

An obvious benefit is the boost to the quality of data that is stored in a database. There can still be errors, but at least

data references are genuine and intact.

Faster development

Referential integrity is declared. This is much more productive (one or two orders of magnitude) than writing custom

programming code.

Fewer bugs

The declarations of referential integrity are more concise than the equivalent programming code. In essence, such

declarations reuse the tried and tested general-purpose code in a database engine, rather than redeveloping the same

logic on a case-by-case basis.

Consistency across applications

Referential integrity ensures the quality of data references across the multiple application programs that may access a

database. You will note that the definitions from the Web are expressed in terms of relational databases. However, the

principle of referential integrity applies more broadly. Referential integrity applies to both relational and OO databases,

as well as programming languages and modeling.

(III)

FOREIGN KEY

A foreign key is a field in a relational table that matches a candidate key of another table. The foreign key can be

used to cross-reference tables.

For example, say we have two tables, a CUSTOMER table that includes all customer data, and an ORDERS table

that includes all customer orders. The intention here is that all orders must be associated with a customer that is

already in the CUSTOMER table. To do this, we will place a foreign key in the ORDERS table and have it relate to the

primary key of the CUSTOMER table.

The foreign key identifies a column or set of columns in one (referencing) table that refers to a column or set of

columns in another (referenced) table. The columns in the referencing table must reference the columns of the primary

key or other superkey in the referenced table. The values in one row of the referencing columns must occur in a single

row in the referenced table. Thus, a row in the referencing table cannot contain values that don't exist in the

referenced table (except potentially NULL). This way references can be made to link information together and it is an

essential part of database normalization. Multiple rows in the referencing table may refer to the same row in the

referenced table. Most of the time, it reflects the one (parent table or referenced table) to many (child table, or

referencing table) relationship.

The referencing and referenced table may be the same table, i.e. the foreign key refers back to the same table. Such a

foreign key is known in SQL:2003 as a self-referencing or recursive foreign key.

A table may have multiple foreign keys, and each foreign key can have a different referenced table. Each foreign key

is enforced independently by the database system. Therefore, cascading relationships between tables can be

established using foreign keys.

Improper foreign key/primary key relationships or not enforcing those relationships are often the source of many

database and data modeling problems.

Foreign keys are defined in the ANSI SQL Standard, through a FOREIGN KEY constraint. The syntax to add such a

constraint to an existing table is defined in SQL:2003 as shown below. Omitting the column list in the REFERENCES

clause implies that the foreign key shall reference the primary key of the referenced table.

ALTER TABLE <TABLE identifier>

ADD [ CONSTRAINT <CONSTRAINT identifier> ]

FOREIGN KEY ( <COLUMN expression> {, <COLUMN expression>}... )

REFERENCES <TABLE identifier> [ ( <COLUMN expression> {, <COLUMN expression>}... ) ]

[ ON UPDATE <referential action> ]

[ ON DELETE <referential action> ]
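The CUSTOMER and ORDERS example from earlier in this answer can be sketched with SQLite through Python's sqlite3 module. The column names are assumptions. SQLite does not support ALTER TABLE ... ADD CONSTRAINT, so the sketch declares the foreign key inside CREATE TABLE instead of using the ALTER TABLE form shown above.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces foreign keys only when enabled
conn.executescript("""
CREATE TABLE customer (customer_id INTEGER PRIMARY KEY, name TEXT);
CREATE TABLE orders (
    order_id    INTEGER PRIMARY KEY,
    customer_id INTEGER,
    FOREIGN KEY (customer_id) REFERENCES customer (customer_id)
);
INSERT INTO customer VALUES (1, 'Asha');
INSERT INTO orders VALUES (100, 1);     -- refers to an existing customer
INSERT INTO orders VALUES (101, 1);     -- many orders may refer to one customer
INSERT INTO orders VALUES (102, NULL);  -- NULL is not checked against the parent
""")

# An order for a customer that does not exist is refused.
try:
    conn.execute("INSERT INTO orders VALUES (103, 99)")
    orphan_rejected = False
except sqlite3.IntegrityError:
    orphan_rejected = True

order_count = conn.execute("SELECT COUNT(*) FROM orders").fetchone()[0]
print(orphan_rejected, order_count)
```

If every order must belong to a customer, as the example intends, the customer_id column in orders would also be declared NOT NULL.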

(IV)

TRANSACTION

A transaction comprises a unit of work performed within a database management system (or similar system) against

a database, and treated in a coherent and reliable way independent of other transactions. Transactions in a database

environment have two main purposes:

1. To provide reliable units of work that allow correct recovery from failures and keep a database consistent even

in cases of system failure, when execution stops (completely or partially) and many operations upon a

database remain uncompleted, with unclear status.

2. To provide isolation between programs accessing a database concurrently. If this isolation is not provided, the

programs' outcomes may be erroneous.

A database transaction, by definition, must be atomic, consistent, isolated and durable. Database practitioners often

refer to these properties of database transactions using the acronym ACID.

Transactions provide an "all-or-nothing" proposition, stating that each work-unit performed in a database must either

complete in its entirety or have no effect whatsoever. Further, the system must isolate each transaction from other

transactions, results must conform to existing constraints in the database, and transactions that complete successfully

must get written to durable storage.

The properties of database transactions are summed up with the acronym ACID:

Atomicity - all or nothing

All of the tasks (usually SQL requests) of a database transaction must be completed;

If incomplete due to any possible reasons, the database transaction must be aborted.

Consistency - serializability and integrity

The database must be in a consistent or legal state before and after the database transaction. It means that a

database transaction must not break the database integrity constraints.

Isolation

Data used during the execution of a database transaction must not be used by another database transaction until the

execution is completed. Therefore, the partial results of an incomplete transaction must not be usable for other

transactions until the transaction is successfully committed. It also means that the execution of a transaction is not

affected by the database operations of other concurrent transactions.

Durability

All the database modifications of a transaction will be made permanent even if a system failure occurs after the

transaction has been completed.

Theoretically, a database management system (DBMS) guarantees all the ACID properties for each database transaction. In reality,

these ACID properties are frequently more or less reduced to improve performance.
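The all-or-nothing behaviour can be seen in a small sketch using SQLite through Python's sqlite3 module. The account table, the CHECK constraint, and the transfer function are hypothetical; the example demonstrates atomicity and consistency only, not isolation between concurrent users.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE account (acc_no INTEGER PRIMARY KEY,
                      balance INTEGER NOT NULL CHECK (balance >= 0));
INSERT INTO account VALUES (1, 100), (2, 50);
""")

def transfer(conn, src, dst, amount):
    """Move amount between accounts; commit both updates or neither."""
    try:
        with conn:  # commits on success, rolls back if an exception escapes
            conn.execute(
                "UPDATE account SET balance = balance + ? WHERE acc_no = ?",
                (amount, dst),
            )
            conn.execute(
                "UPDATE account SET balance = balance - ? WHERE acc_no = ?",
                (amount, src),
            )
        return True
    except sqlite3.IntegrityError:
        return False

first_ok = transfer(conn, 1, 2, 30)    # both updates are committed
second_ok = transfer(conn, 1, 2, 500)  # debit violates the CHECK; the credit is undone too
balances = conn.execute(
    "SELECT acc_no, balance FROM account ORDER BY acc_no"
).fetchall()
print(first_ok, second_ok, balances)
```

In the second transfer the credit to account 2 had already been applied when the debit failed, yet the final balances show no trace of it.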

(V)

CANDIDATE KEY

In the relational model of databases, a candidate key of a relation is a minimal superkey for that relation; that is,

a set of attributes such that

1. the relation does not have two distinct tuples (i.e. rows or records in common database language) with the

same values for these attributes (which means that the set of attributes is a superkey)

2. there is no proper subset of these attributes for which (1) holds (which means that the set is minimal).

The constituent attributes are called prime attributes. Conversely, an attribute that does not occur in ANY candidate

key is called a non-prime attribute.

Since a relation contains no duplicate tuples, the set of all its attributes is a superkey if NULL values are not used. It

follows that every relation will have at least one candidate key.

The candidate keys of a relation tell us all the possible ways we can identify its tuples. As such they are an important

concept for the design of a database schema.

For practical reasons RDBMSs usually require that for each relation one of its candidate keys is declared as

the primary key, which means that it is considered as the preferred way to identify individual tuples. Foreign keys, for

example, are usually required to reference such a primary key and not any of the other candidate keys.

A table may have more than one key; each such key is called a candidate key.

E.g. (1) A table CUSTOMER consists of the columns Customer_Id, Name, Address, etc.

Customer_Id is the only key (unique), and is thus a candidate key.

E.g. (2) Consider a table CAR with two keys, namely license_no and serial_no (each of which should be unique).

Both license_no and serial_no are candidate keys.
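The two conditions in the definition (uniqueness and minimality) can be checked mechanically against sample data. The sketch below is an assumption-laden illustration: the CAR rows and the model column are invented, and a key is really a property of the schema, so sample rows can only rule candidates out, never prove them.

```python
from itertools import combinations

def candidate_keys(rows, attributes):
    """Return the minimal attribute sets whose values are unique in rows."""
    keys = []
    for size in range(1, len(attributes) + 1):
        for combo in combinations(attributes, size):
            # Skip supersets of a key already found: they are not minimal.
            if any(set(key) <= set(combo) for key in keys):
                continue
            projected = [tuple(row[a] for a in combo) for row in rows]
            if len(set(projected)) == len(projected):
                keys.append(combo)
    return keys

# Hypothetical CAR rows; two cars share a model, so model alone is not a key.
CARS = [
    {"license_no": "KA01", "serial_no": "S1", "model": "Swift"},
    {"license_no": "KA02", "serial_no": "S2", "model": "Swift"},
    {"license_no": "KA03", "serial_no": "S3", "model": "Alto"},
]
print(candidate_keys(CARS, ["license_no", "serial_no", "model"]))
```

The pair (license_no, serial_no) is also unique, but it is a superkey rather than a candidate key because each of its attributes is already unique on its own.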

ANS-4

(I)

PRIMARY & SECONDARY INDEXES

PAGE NO-47, PAGE NO-52 BLOCK-1 CH-3

(II)

DISTRIBUTED VS CENTRALIZED DATABASE

Centralized database is a database in which data is stored and maintained in a single location. This is the traditional

approach for storing data in large enterprises. Distributed database is a database in which data is stored in storage

devices that are not located in the same physical location but the database is controlled using a central Database

Management System (DBMS).

What is Centralized Database?

In a centralized database, all the data of an organization is stored in a single place such as a mainframe computer or a

server. Users in remote locations access the data through the Wide Area Network (WAN) using the

application programs provided to access the data. The centralized database (the mainframe or the server) should be

able to satisfy all the requests coming to the system, therefore could easily become a bottleneck. But since all the data

reside in a single place, it is easier to maintain and back up data. Furthermore, it is easier to maintain data integrity,

because once data is stored in a centralized database, outdated data is no longer available in other places.

What is Distributed Database?

In a distributed database, the data is stored in storage devices that are located in different physical locations. They are

not attached to a common CPU but the database is controlled by a central DBMS. Users access the data in a

distributed database by accessing the WAN. To keep a distributed database up to date, it uses the replication and

duplication processes. The replication process identifies changes in the distributed database and applies those

changes to make sure that all the distributed databases look the same. Depending on the number of distributed

databases, this process could become very complex and time consuming. The duplication process identifies one

database as a master database and duplicates that database. This process is not as complicated as the replication

process but makes sure that all the distributed databases have the same data.

What is the difference between Distributed Database and Centralized Database?

While a centralized database keeps its data in storage devices that are in a single location connected to a single CPU,

a distributed database system keeps its data in storage devices that are possibly located in different geographical

locations and managed using a central DBMS. A centralized database is easier to maintain and keep updated since all

the data are stored in a single location. Furthermore, it is easier to maintain data integrity and avoid the requirement

for data duplication. But, all the requests coming to access data are processed by a single entity such as a single

mainframe, and therefore it could easily become a bottleneck. But with distributed databases, this bottleneck can be

avoided since the databases are parallelized making the load balanced between several servers. But keeping the data

up to date in distributed database system requires additional work, therefore increases the cost of maintenance and

complexity and also requires additional software for this purpose. Furthermore, designing databases for a distributed

database is more complex than the same for a centralized database.

(III)

BTREE & B+ TREE

PAGE 64 BTREE- DEFINITION & 5 POINTS

PAGE 69 BTREE- 7 FEATURES, 3 VARIATIONS OF BTREE, 6 ADVANTAGES, 4 DISADVANTAGES

(IV)

DATA REPLICATION & DATA FRAGMENTATION

PAGE-56 BLOCK-2 DESIGN OF DISTRIBUTED DATABASES

(V)

PROCEDURAL & NON PROCEDURAL DML

Non-Procedural DML:

A high-level or non-procedural DML allows the user to specify what data is required without specifying how it

is to be obtained. Many DBMSs allow high-level DML statements either to be entered interactively from a

terminal or to be embedded in a general-purpose programming language.

The end-users use a high-level query language to specify their requests to DBMS to retrieve data. Usually a

single statement is given to the DBMS to retrieve or update multiple records. The DBMS translates a DML

statement into a procedure that manipulates the set of records. The examples of non-procedural DMLs are

SQL and QBE (Query-By-Example) that are used by relational database systems. These languages are

easier to learn and use. The part of a non-procedural DML, which is related to data retrieval from database,

is known as query language.

Procedural DML:

A low-level or procedural DML allows the user, i.e. programmer to specify what data is needed and how to

obtain it. This type of DML typically retrieves individual records from the database and processes each

separately. In this language, the looping, branching etc. statements are used to retrieve and process each

record from a set of records. The programmers use the low-level DML
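The contrast between the two styles can be sketched with SQLite through Python's sqlite3 module. The employee table and salary figures are hypothetical, and the loop only imitates record-at-a-time processing, since SQL is still used to open the cursor.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee (emp_no INTEGER PRIMARY KEY, e_name TEXT, salary INTEGER);
INSERT INTO employee VALUES (1, 'Asha', 3000), (2, 'Ravi', 5000), (3, 'Vijay', 7000);
""")

# Non-procedural: one statement says WHAT is wanted; the DBMS decides how to find it.
declarative = conn.execute(
    "SELECT e_name FROM employee WHERE salary > 4000 ORDER BY emp_no"
).fetchall()

# Procedural style: fetch records one at a time and apply the test in a loop.
procedural = []
cursor = conn.execute("SELECT emp_no, e_name, salary FROM employee ORDER BY emp_no")
while True:
    row = cursor.fetchone()
    if row is None:
        break
    if row[2] > 4000:
        procedural.append((row[1],))

print(declarative, procedural)
```

Both approaches return the same rows; the difference is who decides the access path, the DBMS or the programmer.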

ANS-5

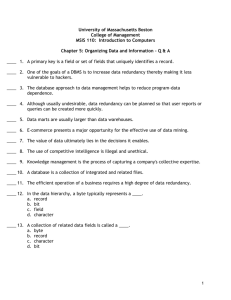

NORMALISATION

Normalisation is the process of taking data from a problem and reducing it to a set of relations while

ensuring data integrity and eliminating data redundancy

Data integrity - all of the data in the database are consistent, and satisfy all integrity constraints.

Data redundancy – if data in the database can be found in two different locations (direct redundancy) or

if data can be calculated from other data items (indirect redundancy) then the data is said to contain

redundancy.

Data should be stored only once, and data that can be calculated from other data already

held in the database should not be stored. During the process of normalisation redundancy must be removed, but not at the

expense of breaking data integrity rules.

If redundancy exists in the database then problems can arise when the database is in normal operation:

When data is inserted the data must be duplicated correctly in all places where there is redundancy. For

instance, if two tables exist in a database, and both tables contain the employee name, then creating a

new employee entry requires that both tables be updated with the employee name.

When data is modified in the database, if the data being changed has redundancy, then all versions of the

redundant data must be updated simultaneously. So in the employee example a change to the employee

name must happen in both tables simultaneously.

The removal of redundancy helps to prevent insertion, deletion, and update errors, since the data is only

available in one attribute of one table in the database.

2NF

A relation is in 2NF if, and only if, it is in 1NF and every non-key attribute is fully

functionally dependent on the whole key.

Thus the relation is in 1NF with no repeating groups, and all non-key attributes must

depend on the whole key, not just some part of it. Another way of saying this is that

there must be no partial key dependencies (PKDs).

The problems arise when there is a compound key, e.g. the key of the Record relation, (matric_no, subject). In this case it is possible for non-key attributes to depend on only

part of the key - i.e. on only one of the two key attributes. This is what 2NF tries to

prevent.

Consider again the Student relation from the flattened Student #2 table:

Student(matric_no, name, date_of_birth, subject, grade )

There are no repeating groups

The relation is already in 1NF

However, we have a compound primary key - so we must check all of the non-key

attributes against each part of the key to ensure they are functionally dependent

on it.

matric_no determines name and date_of_birth, but not grade.

subject together with matric_no determines grade, but not name or date_of_birth.

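So name and date_of_birth depend on only part of the key (a partial key dependency), the relation is not in 2NF, and there is a problem with potential redundancies.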
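The dependency checks above can be run against sample data. In the sketch below the rows are invented for illustration and fd_holds is a hypothetical helper; the split into Student and Record follows the usual 2NF decomposition and reuses the Record relation named earlier.

```python
def fd_holds(rows, lhs, rhs):
    """True if equal values on lhs always imply equal values on rhs."""
    seen = {}
    for row in rows:
        left = tuple(row[a] for a in lhs)
        right = tuple(row[a] for a in rhs)
        if seen.setdefault(left, right) != right:
            return False
    return True

# Hypothetical rows of Student(matric_no, name, date_of_birth, subject, grade).
STUDENT = [
    {"matric_no": 1, "name": "Asha", "date_of_birth": "2001-04-02", "subject": "DBMS", "grade": "A"},
    {"matric_no": 1, "name": "Asha", "date_of_birth": "2001-04-02", "subject": "OS", "grade": "B"},
    {"matric_no": 2, "name": "Ravi", "date_of_birth": "2000-11-19", "subject": "DBMS", "grade": "C"},
]

# name and date_of_birth depend on matric_no alone: a partial key dependency.
partial = fd_holds(STUDENT, ["matric_no"], ["name", "date_of_birth"])
# grade needs the whole key (matric_no, subject).
grade_on_part = fd_holds(STUDENT, ["matric_no"], ["grade"])
grade_on_whole = fd_holds(STUDENT, ["matric_no", "subject"], ["grade"])

# 2NF decomposition: each student's details are now stored once.
student = {(r["matric_no"], r["name"], r["date_of_birth"]) for r in STUDENT}
record = {(r["matric_no"], r["subject"], r["grade"]) for r in STUDENT}

print(partial, grade_on_part, grade_on_whole, len(student), len(record))
```

In the flattened table the name and date of birth of student 1 appear twice; after the split they appear once in Student, and Record keeps one row per subject taken.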

3NF

3NF is an even stricter normal form and removes virtually all the redundant data:

A relation is in 3NF if, and only if, it is in 2NF and there are no transitive functional

dependencies

Transitive functional dependencies arise:

when one non-key attribute is functionally dependent on another non-key

attribute:

FD: non-key attribute -> non-key attribute

and when there is redundancy in the database

By definition transitive functional dependency can only occur if there is more than one

non-key field, so we can say that a relation in 2NF with zero or one non-key field must

automatically be in 3NF.
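To make the transitive dependency concrete, consider a hypothetical relation Employee(emp_no, dept_no, dept_name) with key emp_no. This relation is an assumption for illustration and does not come from the notes above.

```latex
\begin{align*}
\text{emp\_no} &\rightarrow \text{dept\_no} && \text{(key determines a non-key attribute)}\\
\text{dept\_no} &\rightarrow \text{dept\_name} && \text{(non-key determines non-key)}\\
\therefore\ \text{emp\_no} &\rightarrow \text{dept\_name} && \text{(transitive, via dept\_no)}
\end{align*}
```

Decomposing into Employee(emp_no, dept_no) and Department(dept_no, dept_name) removes the transitive dependency, so each department name is stored only once.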

What is the difference between 1NF and 2NF and 3NF?

1NF, 2NF and 3NF are normal forms that are used in relational databases to minimize redundancies in tables. 3NF is

considered a stronger normal form than 2NF, which in turn is considered a stronger normal form than 1NF.

Therefore in general, obtaining a table that complies with the 3NF form will require decomposing a table that is in the

2NF. Similarly, obtaining a table that complies with the 2NF will require decomposing a table that is in the 1NF.

However, if a table that complies with 1NF contains candidate keys that are only made up of a single attribute (i.e.

non-composite candidate keys), such a table would automatically comply with 2NF. Decomposition of tables will result

in additional join operations (or Cartesian products) when executing queries. This will increase the computational time.

On the other hand, the tables that comply with stronger normal forms would have fewer redundancies than tables that

only comply with weaker normal forms.

ANS-6 THIS WILL BE GIVEN BY RADIANT ON PAPER

ANS 7- BLOCK-1, CHAPTER-3

PAGE 70 TO PAGE -72 TOPIC 3.5 FULLY

ANS 8

i.

ii.

select d.day, d.shift from dutyallocation d, employee e where e.e_name='vijay'

and e.emp_no=d.emp_no;

select shift, count(emp_no) from dutyallocation group by shift;
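The two queries can be tried on a small test database using SQLite through Python's sqlite3 module. The table definitions and rows below are assumptions inferred from the column names in the queries, since the question text is not reproduced here. The shift column appears in the second select list so that each count is labelled, and ORDER BY is added only to make the output order predictable.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE employee (emp_no INTEGER PRIMARY KEY, e_name TEXT);
CREATE TABLE dutyallocation (
    emp_no INTEGER REFERENCES employee(emp_no),
    day    TEXT,
    shift  TEXT
);
INSERT INTO employee VALUES (1, 'vijay'), (2, 'asha');
INSERT INTO dutyallocation VALUES
    (1, 'Mon', 'morning'), (1, 'Tue', 'night'), (2, 'Mon', 'night');
""")

# Days and shifts allocated to the employee named vijay.
vijay_duties = conn.execute(
    "SELECT d.day, d.shift FROM dutyallocation d, employee e "
    "WHERE e.e_name = 'vijay' AND e.emp_no = d.emp_no ORDER BY d.day"
).fetchall()

# Number of duty allocations in each shift.
per_shift = conn.execute(
    "SELECT shift, COUNT(emp_no) FROM dutyallocation GROUP BY shift ORDER BY shift"
).fetchall()

print(vijay_duties)
print(per_shift)
```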