PSY 402

Theories of Learning

Chapter 7 – Behavior & Its Consequences

Instrumental & Operant Learning

Two Early Approaches

Reinforcement Theory

Thorndike’s “Law of Effect,” based on cats escaping from puzzle boxes.

Skinner boxes – rats pressing bars

Contiguity Theory

Guthrie – association is enough

Estes – Stimulus Sampling Theory

Problems with Contiguity Theory

Guthrie proposed that no reinforcement was needed – just contiguity (closeness) in time and place.

If learning is immediate and one-trial, why are learning curves gradual?

Estes’s answer: only a few stimulus elements are sampled and associated on each trial, but the number of conditioned elements builds up across trials.

Guthrie’s view was wrong but influential; Estes formalized it as stimulus sampling theory.
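The stimulus sampling idea can be sketched as a small simulation. This is a minimal illustration, not Estes’s own formulation: it assumes a fixed pool of stimulus elements (`n_elements`), that each element is sampled on a trial with probability `theta`, and that every sampled element is conditioned all-or-none on that trial. The function names and parameter values are hypothetical. Even though each element is learned in a single trial, the proportion of conditioned elements rises gradually, tracking the expected curve 1 - (1 - theta)^n.

```python
import random


def stimulus_sampling_curve(n_elements=100, theta=0.1, n_trials=30, seed=0):
    """Simulate the proportion of conditioned stimulus elements across trials.

    Hypothetical parameters: each element is sampled on a trial with
    probability `theta`; a sampled element becomes conditioned at once
    (one-trial, all-or-none learning) and stays conditioned.
    """
    rng = random.Random(seed)
    conditioned = [False] * n_elements
    curve = []
    for _ in range(n_trials):
        for i in range(n_elements):
            if rng.random() < theta:
                conditioned[i] = True  # one-trial association
        curve.append(sum(conditioned) / n_elements)
    return curve


def expected_curve(theta=0.1, n_trials=30):
    """Expected proportion conditioned after n trials: 1 - (1 - theta)^n."""
    return [1 - (1 - theta) ** n for n in range(1, n_trials + 1)]


if __name__ == "__main__":
    sim, exp = stimulus_sampling_curve(), expected_curve()
    for n in (1, 5, 10, 30):
        print(f"trial {n:2d}: simulated {sim[n - 1]:.2f}, expected {exp[n - 1]:.2f}")
```

The gradual learning curve comes from averaging over many elements, not from any single association being gradual.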

Guthrie & Reinforcement

The reinforcer is salient, so it changes the stimulus (environmental situation).

Reward keeps competing responses from being associated with the initial stimulus.

Competing responses are instead associated with the presence of the reward.

The stereotyped flank-rubbing of cats in Guthrie’s puzzle box seemed to support his view, but it was later shown to be a greeting response triggered by the presence of the experimenter.
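Guthrie’s account of how reward protects an association can be sketched with a dictionary that keeps only the most recent response made in each stimulus situation. This is a deliberately simplified, hypothetical model: the situation labels and responses are invented, and a situation is treated as a single label, whereas Guthrie thought of it as many stimulus elements.

```python
def recency_associations(episode):
    """Associate each stimulus situation with the last response made in it.

    `episode` is a time-ordered list of (situation, response) pairs.
    Toy version of Guthrie's recency idea: a later response in the same
    situation replaces the earlier association.
    """
    associations = {}
    for situation, response in episode:
        associations[situation] = response
    return associations


# Hypothetical puzzle-box trial. Food appearing changes the situation, so the
# responses that follow it attach to "food present", leaving "in box" paired
# with the escape response.
with_reward = [
    ("in box", "scratch at bars"),
    ("in box", "push pole"),  # escape response; food appears next
    ("food present", "eat"),
    ("food present", "groom"),
]

# Same behavior, but nothing changes the situation after the pole push,
# so the later (competing) responses overwrite the "in box" association.
without_reward = [("in box", response) for _, response in with_reward]

print(recency_associations(with_reward)["in box"])     # push pole
print(recency_associations(without_reward)["in box"])  # groom
```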

Tolman’s Operational Behaviorism

His theories relied on “intervening variables,” not mechanistic S-R associations.

Behavior is motivated by goals.

Behavior is flexible, a means to an end.

Rats in mazes form cognitive maps of their environment.

Animals learn about stimuli, not just behavior.

Evidence of Cognitive Maps

When the maze layout was changed, rats still ran toward the location of the original “goal.”

However, a light near the goal could have served as a cue in both layouts.

Using a “plus maze,” some rats were trained to always turn in the same direction (response learning), while others were trained to go to the same place (place learning).

The consistent place was easier to learn.
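The difference between learning a place (a map) and learning a fixed response sequence can be illustrated with a graph search. In this hypothetical sketch the maze is an adjacency dictionary, the “map learner” plans a route with breadth-first search, and the “response learner” can only replay the route it was trained on. The maze, the function names, and the use of breadth-first search are assumptions for illustration; nothing here claims that rats literally run this algorithm.

```python
from collections import deque


def shortest_path(maze, start, goal):
    """Breadth-first search over a learned map; returns a list of places or None."""
    frontier = deque([[start]])
    visited = {start}
    while frontier:
        path = frontier.popleft()
        if path[-1] == goal:
            return path
        for nxt in maze.get(path[-1], []):
            if nxt not in visited:
                visited.add(nxt)
                frontier.append(path + [nxt])
    return None


def route_still_works(maze, route):
    """A response learner succeeds only if every trained step is still open."""
    return all(b in maze.get(a, []) for a, b in zip(route, route[1:]))


def block_corridor(maze, a, b):
    """Return a copy of the maze with the corridor between a and b removed."""
    return {place: [n for n in nbrs if {place, n} != {a, b}]
            for place, nbrs in maze.items()}


# Hypothetical maze: places are nodes, corridors are undirected edges.
maze = {
    "start": ["A", "B"],
    "A": ["start", "goal"],
    "B": ["start", "C"],
    "C": ["B", "goal"],
    "goal": ["A", "C"],
}

trained_route = shortest_path(maze, "start", "goal")  # ['start', 'A', 'goal']
changed = block_corridor(maze, "A", "goal")

print(route_still_works(changed, trained_route))  # False: the fixed habit fails
print(shortest_path(changed, "start", "goal"))    # ['start', 'B', 'C', 'goal']
```

The map learner recovers because it stored where places are relative to each other, not only which moves were made.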

Latent Learning

Rats were given experience in a complex maze, without reward.

Later they were rewarded for finding the goal box.

Once reward was introduced, the previously unrewarded rats made far fewer errors almost immediately, quickly matching rats that had been rewarded all along.

Learning had occurred without reward; reward motivates performance, not learning.

Skinner’s Contribution

Skinner was uninterested in theory – he wanted to see how learning works in practice.

Operant chambers let the animal repeat a response as often as it likes (free operant responding), so behavior is more voluntary than in discrete-trial mazes or puzzle boxes.

Superstitious behavior – pigeons were given food at regular intervals regardless of what they were doing.

Whatever response happened to precede food was strengthened, so the birds ended up repeating odd, idiosyncratic behaviors (e.g., turning in circles).
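Adventitious (accidental) reinforcement can be sketched as a toy simulation. In this hypothetical model the bird emits one response per time step, chosen with probability proportional to its current strength; food arrives every `food_every` steps no matter what the bird does, and whichever response was just emitted is strengthened. The response names, the interval, and the strengthening rule are all assumptions for illustration. Because a response that is already frequent is more likely to be the one that happens to precede food, early accidental pairings tend to snowball, which is one way to see how an arbitrary ritual could emerge.

```python
import random


def superstition_sim(responses=("turn", "peck floor", "head toss", "wing flap"),
                     food_every=15, n_steps=600, boost=1.0, seed=1):
    """Fixed-time food delivery with accidental response strengthening.

    Hypothetical toy model: response choice is proportional to strength, and
    food (delivered regardless of behavior) adds `boost` to whichever
    response was emitted on that step.
    """
    rng = random.Random(seed)
    strength = {r: 1.0 for r in responses}
    counts = {r: 0 for r in responses}
    for t in range(1, n_steps + 1):
        names = list(strength)
        r = rng.choices(names, weights=[strength[n] for n in names])[0]
        counts[r] += 1
        if t % food_every == 0:  # food arrives on schedule, not contingent on r
            strength[r] += boost
    return strength, counts


if __name__ == "__main__":
    strength, counts = superstition_sim()
    for name in sorted(counts, key=counts.get, reverse=True):
        print(f"{name:10s} emitted {counts[name]:3d} times, strength {strength[name]:.0f}")
```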

Stimulus Control

Skinner discovered that stimuli (cues) provide information about the opportunity for reinforcement (reward).

The stimulus sets the occasion for the behavior.

Fading – gradually transferring stimulus control from a simple stimulus to a more complex one.

Operant behavior is controlled by both stimuli and reinforcers.
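A fading procedure can be sketched as a schedule that blends two cues, with the old, easy cue fading out while the new cue fades in. The linear steps, the `next_level` rule (step forward after a correct response, step back after an error), and all names are hypothetical choices for illustration, not a standard protocol.

```python
def fading_schedule(n_levels):
    """Intensity of the old and new cue at each fading level (linear steps).

    Level 0 is the old, easy cue at full strength; the last level is the new
    cue alone. Linear spacing is an assumption; real procedures vary.
    """
    if n_levels < 2:
        raise ValueError("need at least two levels")
    return [(1 - i / (n_levels - 1), i / (n_levels - 1)) for i in range(n_levels)]


def next_level(level, correct, n_levels):
    """Hypothetical rule: advance after a correct response, back up after an error."""
    if correct:
        return min(level + 1, n_levels - 1)
    return max(level - 1, 0)


if __name__ == "__main__":
    schedule = fading_schedule(5)
    level = 0
    for correct in [True, True, False, True, True, True]:
        old, new = schedule[level]
        print(f"level {level}: old cue {old:.2f}, new cue {new:.2f}, correct={correct}")
        level = next_level(level, correct, len(schedule))
```

Stepping back after an error keeps the discrimination easy enough that errors stay rare while control shifts to the new cue.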

Discriminative Stimuli

Discriminative stimuli work much like “occasion setters” in classical conditioning (see Chap 5).

The stimulus that signals the opportunity for responding and gaining a reward is the S^D (“S-dee”).

The stimulus that signals the absence of that opportunity is the S^Δ (“S-delta”).

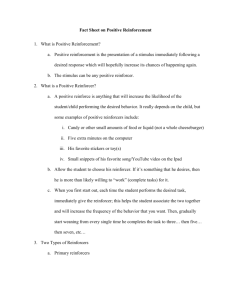
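How responding comes under the control of S^D and S^Δ can be sketched with a simple linear-operator update. The update rule, the learning rate `alpha`, the starting strength, and the assumption that the animal responds on every trial are simplifications chosen for illustration; the dictionary keys `S_D` and `S_delta` stand for S^D and S^Δ.

```python
def train_discrimination(n_trials=50, alpha=0.1, start=0.5):
    """Linear-operator sketch of S^D / S^delta discrimination training.

    Assumptions (hypothetical): the animal responds on every trial,
    responding is reinforced only in the presence of S^D, and response
    strength moves a fraction `alpha` toward 1 after reinforcement and
    toward 0 after non-reinforcement (extinction).
    """
    strength = {"S_D": start, "S_delta": start}
    for _ in range(n_trials):
        for stimulus in ("S_D", "S_delta"):
            target = 1.0 if stimulus == "S_D" else 0.0  # reward only in S^D
            strength[stimulus] += alpha * (target - strength[stimulus])
    return strength


if __name__ == "__main__":
    for n in (0, 5, 20, 50):
        s = train_discrimination(n_trials=n)
        print(f"after {n:2d} trials: S^D {s['S_D']:.2f}, S^delta {s['S_delta']:.2f}")
```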

Types of Reinforcers

Primary reinforcer – stimuli or events that reinforce because of their intrinsic properties:

Food, water, sex

Secondary reinforcer – stimuli or events that reinforce because of their association with a primary reinforcer:

Money, praise, grades, sounds (clicks)

Also called conditioned reinforcers.

Behavior Chains

Secondary (conditioned) reinforcers reward intermediate steps in a chain of behavior leading to a primary reinforcer.

Secondary reinforcers can also be discriminative stimuli that set the occasion for more responding.

Classical conditioning is the glue that holds together chains of behavior leading to a goal.
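The double role of secondary reinforcers in a chain can be sketched as a list of links, where the stimulus each response produces must be the S^D for the next link. The `Link` class, `describe_chain`, and the two-link rat example are hypothetical; the sketch also assumes the final link produces the primary reinforcer.

```python
from dataclasses import dataclass


@dataclass
class Link:
    sd: str        # discriminative stimulus that sets the occasion for the response
    response: str  # operant response made in the presence of sd
    produces: str  # stimulus the response produces


def describe_chain(chain):
    """List the double role of each produced stimulus in a behavior chain.

    Raises ValueError if a link's outcome is not the next link's S^D.
    Assumes (hypothetically) that the last link yields the primary reinforcer.
    """
    lines = []
    for i, link in enumerate(chain):
        if i + 1 < len(chain):
            nxt = chain[i + 1]
            if link.produces != nxt.sd:
                raise ValueError(f"'{link.produces}' does not set the occasion for '{nxt.response}'")
            role = (f"conditioned reinforcer for '{link.response}' "
                    f"and S^D for '{nxt.response}'")
        else:
            role = f"primary reinforcer for '{link.response}'"
        lines.append(f"{link.sd} -> {link.response} -> {link.produces} ({role})")
    return lines


# Hypothetical two-link chain for a rat in an operant chamber.
chain = [
    Link(sd="light on", response="pull chain", produces="lever inserted"),
    Link(sd="lever inserted", response="press lever", produces="food"),
]

for line in describe_chain(chain):
    print(line)
```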