Artificial Neural Networks

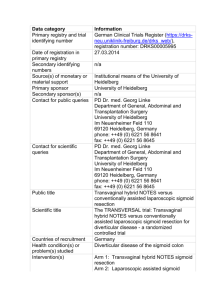

CSCI3230 Introduction to Neural Network II
Liu Pengfei
Week 13, Winter 2015

Outline

1. Backward Propagation Algorithm
   1. Optimization Model & Gradient Descent
   2. Multilayer Networks of Sigmoid Units & Backward Propagation
2. Practical Issues
   1. Over-fitting
   2. Local Minima

Backward Propagation Algorithm

How to update weights to minimize the error between the target and output?

Optimization Model (Error minimization)

• Given observed data, find a network which gives the same output as the observed target variable(s).
• Given the topology of the network (number of layers, number of neurons, their connections), find a set of weights to minimize the error function:

$$E(W) = \frac{1}{2} \sum_{d \in D} (t_d - o_d)^2$$

where $D$ is the set of training examples, $t_d$ is the target and $o_d$ is the output.

Gradient Descent

• The activation function is smooth, so $E(W)$ is a smooth function of the weights $W$: we can use calculus!
• But it is difficult to solve analytically, so use an iterative approach: gradient descent.

Gradient Descent

To find a local minimum of a function using gradient descent, one takes steps proportional to the negative of the gradient (or of the approximate gradient) of the function at the current point.

For a smooth function $f(x)$, $\frac{\partial f}{\partial x}$ is the direction in which $f$ increases most rapidly. So

$$x_{t+1} = x_t - \mu \left. \frac{\partial f}{\partial x} \right|_{x = x_t}$$

until $x$ converges, where $\mu$ is the step size (learning rate).

Gradient interpretation

[Figure: gradient interpretation]

Gradient Descent

• Gradient: $\nabla E(W) = \left[ \frac{\partial E}{\partial w_1}, \ldots, \frac{\partial E}{\partial w_n} \right]$
• Training rule: $\Delta W = -\mu \nabla E(W)$, i.e. $\Delta w_i = -\mu \frac{\partial E}{\partial w_i}$
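As a minimal sketch of taking steps proportional to the negative of the gradient, the loop below minimizes a one-variable function. The function `gradient_descent`, the step size name `mu`, the tolerance `tol` and the example $f(x) = (x - 3)^2$ are illustrative assumptions, not part of the original slides.

```python
def gradient_descent(grad, x0, mu=0.1, tol=1e-8, max_steps=10_000):
    """Repeat x <- x - mu * grad(x) until the step is smaller than tol."""
    x = x0
    for _ in range(max_steps):
        step = mu * grad(x)   # step proportional to the gradient
        x = x - step          # move against the gradient
        if abs(step) < tol:   # x has (approximately) converged
            break
    return x

# Hypothetical example: f(x) = (x - 3)^2 has gradient 2 * (x - 3)
# and a single minimum at x = 3.
x_min = gradient_descent(lambda x: 2.0 * (x - 3.0), x0=0.0)
print(x_min)
```

With a much larger `mu` the iterates can overshoot and diverge; with a much smaller one they converge slowly, which is why the step size usually needs tuning.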
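• Update: $w_i \leftarrow w_i - \mu \frac{\partial E}{\partial w_i}$

Partial derivatives are the key.

Gradient Descent: Example

[Slide content not recoverable]

Gradient Descent: Derivation

How to compute $\frac{\partial E}{\partial w_i}$? We know: [equations not recovered]

Sigmoid Units

Chain Rule

• Input: $x$
• Net input: $net = \sum_i w_i x_i$
• Sigmoid output: $sig = \frac{1}{1 + e^{-net}}$
• Error: $Err = \frac{1}{2}(t - sig)^2$

$$\frac{\partial Err}{\partial w_i} = \frac{\partial Err}{\partial sig} \cdot \frac{\partial sig}{\partial net} \cdot \frac{\partial net}{\partial w_i}$$

First factor, $\frac{\partial Err}{\partial sig}$:

$$Err = \frac{1}{2}(t - sig)^2 \quad\Rightarrow\quad \frac{\partial Err}{\partial sig} = -(t - sig)$$

Second factor, $\frac{\partial sig}{\partial net}$:

$$sig = \frac{1}{1 + e^{-net}} \quad\Rightarrow\quad \frac{\partial sig}{\partial net} = \frac{e^{-net}}{(1 + e^{-net})^2} = sig\,(1 - sig)$$

Third factor, $\frac{\partial net}{\partial w_i}$:

$$net = \sum_i w_i x_i \quad\Rightarrow\quad \frac{\partial net}{\partial w_i} = x_i$$

Combining the three factors:

$$\frac{\partial Err}{\partial w_i} = -(t - sig)\, sig\,(1 - sig)\, x_i$$

After knowing how to update the weights $w_i$ of a single sigmoid unit, we can move on to multilayer networks.

Multilayer Networks of Sigmoid Units

• For an output neuron: same as a single sigmoid neuron, $Err = \frac{1}{2}(t - sig)^2$.
• For a hidden neuron: the error is the weighted sum of the errors of the connected output neurons,

$$Err_j = \sum_{i \text{ connected with } j} w_{ij}\, Err_i$$

[Figure: hidden neuron, output of hidden, net of output, error of output]

Multilayer Networks of Sigmoid Units: Back-propagation Algorithm

• BP learns the weights for a multilayer network, given a network with a fixed set of units and interconnections.
• BP employs gradient descent in an attempt to minimize the squared error between the network output values and the target values for these outputs.
• Two-stage learning:
   • Forward stage: calculate outputs given input pattern $x$.
   • Backward stage: update weights by calculating delta.

Error function for BP: $E$ is defined as a sum of the squared errors over all the output units $k$ for all the training examples $d$:

$$E(W) = \frac{1}{2} \sum_{d \in D} \sum_{k \in outputs} (t_{kd} - o_{kd})^2$$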
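The two-stage procedure (forward stage, then backward stage) can be sketched for a tiny network with one hidden layer and one output unit. This is an illustrative sketch under stated assumptions, not the slides' own listing: the names `forward`, `backward`, `train`, `total_error`, the learning rate `mu`, the network size, the OR training data and the constant first input used as a bias are all hypothetical choices.

```python
import math
import random

def sigmoid(net):
    return 1.0 / (1.0 + math.exp(-net))

def forward(x, w_hidden, w_out):
    """Forward stage: compute the hidden outputs and the network output."""
    h = [sigmoid(sum(w * xi for w, xi in zip(ws, x))) for ws in w_hidden]
    o = sigmoid(sum(w * hj for w, hj in zip(w_out, h)))
    return h, o

def backward(x, t, h, o, w_hidden, w_out, mu):
    """Backward stage: compute the deltas, then update all weights in place."""
    # Output unit: -dErr/dnet = (t - o) * o * (1 - o) for Err = 1/2 (t - o)^2.
    delta_o = (t - o) * o * (1.0 - o)
    # Hidden unit j: output delta weighted by w_out[j], times sig'(net_j).
    # Computed before any weight is changed.
    delta_h = [w_out[j] * delta_o * h[j] * (1.0 - h[j]) for j in range(len(h))]
    for j in range(len(h)):
        w_out[j] += mu * delta_o * h[j]
        for i in range(len(x)):
            w_hidden[j][i] += mu * delta_h[j] * x[i]

def train(examples, n_hidden=2, mu=0.5, epochs=2000, seed=0):
    """Start from small random weights and repeat forward/backward passes."""
    rng = random.Random(seed)
    n_in = len(examples[0][0])
    w_hidden = [[rng.uniform(-0.5, 0.5) for _ in range(n_in)]
                for _ in range(n_hidden)]
    w_out = [rng.uniform(-0.5, 0.5) for _ in range(n_hidden)]
    for _ in range(epochs):
        for x, t in examples:
            h, o = forward(x, w_hidden, w_out)
            backward(x, t, h, o, w_hidden, w_out, mu)
    return w_hidden, w_out

def total_error(examples, w_hidden, w_out):
    """Sum of 1/2 (t - o)^2 over all training examples."""
    return sum(0.5 * (t - forward(x, w_hidden, w_out)[1]) ** 2
               for x, t in examples)

# Hypothetical training set: logical OR, with a constant 1 as a bias input.
or_data = [([1, 0, 0], 0), ([1, 0, 1], 1), ([1, 1, 0], 1), ([1, 1, 1], 1)]
before = total_error(or_data, *train(or_data, epochs=0))
after = total_error(or_data, *train(or_data))
print(before, after)
```

Calling `train` with `epochs=0` returns the untrained initial weights for the same seed, so `before` and `after` compare the error before and after training.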
The error surface can have multiple local minima:

• Gradient descent is guaranteed to move toward some local minimum.
• There is no guarantee of reaching the global minimum.

Multilayer Networks of Sigmoid Units: Back-propagation Algorithm

Process: [Slide content not recoverable]

Multilayer Networks of Sigmoid Units: Derivation of the BP Rule

[Figure: network diagram showing input $x_i$ and unit $j$]

• Case 1: Rule for output unit weights
• Case 2: Rule for hidden unit weights (uses the multivariate chain rule)

Multilayer Networks of Sigmoid Units: Back-propagation Algorithm

Process (revisited): [Slide content not recoverable]

Practical Issues

Over-fitting and Local Minima

Outline

1. Over-fitting
   1. Splitting
   2. Early stopping
   3. Cross-validation
2. Local Minima
   1. Randomize initial weights & train multiple times
   2. Tune the learning rate appropriately

Over-fitting

Why not choose a method with the best fit to the data?

Preventing Over-fitting

Goal: to train a neural network having the BEST generalization power.

1. When to stop the algorithm?
2. How to evaluate the neural network fairly?
3. How to select a neural network with the best generalization power?

Over-fitting

Motivation of learning:

• Build a model to learn the underlying knowledge
• Apply the model to predict the unseen data
• "Generalization"

What is over-fitting?

• Perform well on the training data but perform poorly on the unseen data
• Memorize the training data
• "Specialization"

[Figure: normal fit vs. over-fitted]

Splitting Method (Cross validation)

Splitting method:

1. Random shuffle.
2. Divide the data into two sets:
   • Training set: 70% of data
   • Validation set: 30% of data
3. Train (update weights) the network using the training set.
4. Evaluate (do not update weights) the network using the validation set.
5. If a stopping criterion is met, quit; else repeat steps 3-4.

Splitting Method (n-fold validation)

However, there is a problem: as only part of the data is used as testing data, there may be errors in the training methodology that, by coincidence, the validation data does not reveal.

Analogy: a child knows only addition but not subtraction. However, since the maths quiz asked no question related to subtraction, he still got 100% marks.

Early Stopping

• Any large enough neural network may over-fit if over-trained.
• If we know when to stop training, we can prevent over-fitting.

[Figure: early stopping]

Criteria for Early Stopping

1. Validation error increases.
2. Weights do not update much.
3. Training epoch (maximum number of iterations) is larger than a constant defined by you.

One epoch means all the data (including both training and validation) has been used once.

[Figure: early stopping]

Cross-validation

[Figure: the data set is randomly mixed and divided into k folds; in each learning round one fold is the test set and the rest form the learning set, giving Error 1, Error 2, …]

Mean error = low-bias error estimator.

Cross-validation

• Cross-validation: 10-fold
• Divide the input data into 10 parts: p1, p2, …, p10
• for i = 1 to 10 do
   • Use parts other than pi for training
   • Use pi for validation and get performance_i
• End for
• Take the average of performance_1 to performance_10

| Metric    | Fold 1 | Fold 2 | … | Fold 10 | Mean |
|-----------|--------|--------|---|---------|------|
| Accuracy  | 0.99   | 0.97   | … | 0.87    | 0.90 |
| Precision | 0.90   | 0.92   | … | 0.95    | 0.92 |
| Recall    | 0.85   | 0.84   | … | 0.90    | 0.91 |
| F-measure | 0.86   | 0.84   | … | 0.86    | 0.87 |

How to choose the best model?

Keep a part of the training data as testing data and evaluate your model using the secretly kept dataset.
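The k-fold loop (train on every part except one, validate on the held-out part, then average) can be sketched as follows. The helper names `k_fold_indices` and `cross_validate`, the majority-label stand-in model and the toy data are assumptions made for illustration; in practice `train_fn` would train a neural network.

```python
import random

def k_fold_indices(n, k, seed=0):
    """Shuffle the indices 0..n-1 and split them into k nearly equal parts."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    return [idx[i::k] for i in range(k)]

def cross_validate(data, k, train_fn, score_fn, seed=0):
    """Return the per-fold scores and their mean."""
    folds = k_fold_indices(len(data), k, seed)
    scores = []
    for i in range(k):
        held_out = set(folds[i])
        training = [d for j, d in enumerate(data) if j not in held_out]
        validation = [data[j] for j in folds[i]]
        model = train_fn(training)                  # parts other than p_i
        scores.append(score_fn(model, validation))  # p_i gives performance_i
    return scores, sum(scores) / k

# Hypothetical stand-in model: always predict the majority training label.
def train_majority(training):
    labels = [y for _, y in training]
    return max(set(labels), key=labels.count)

def accuracy(model, validation):
    return sum(1 for _, y in validation if y == model) / len(validation)

# Toy data: 40 examples, 30 labelled 1 and 10 labelled 0.
data = [(x, 1 if x % 4 else 0) for x in range(40)]
scores, mean_score = cross_validate(data, 10, train_majority, accuracy)
print(scores, mean_score)
```

Because every example lands in exactly one validation fold, the mean score uses all of the data for evaluation while never scoring a model on examples it was trained on.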
F-measure on the testing data:

| Model           | F-measure |
|-----------------|-----------|
| Fold 1's model  | 0.90      |
| Fold 2's model  | 0.92      |
| …               | 0.91      |
| Fold 10's model | 0.99      |

Best model: Fold 10's model.

[Figure: data split into Training, Validation (together: Cross Validation) and Testing]

Local Minima

$$w_{i,j} = w_{i,j} + \left( -\mu \frac{\partial E}{\partial w_{i,j}} \right)$$

• Gradient descent is not guaranteed to reach a global minimum.
• We may get stuck in a local minimum!
• Can you think of some ways to solve the problem?

[Figure: error curve with the global minimum and a local minimum marked]

Local Minima

$$w_{i,j} = w_{i,j} + \left( -\mu \frac{\partial E}{\partial w_{i,j}} \right)$$

1) Randomize initial weights & train multiple times
2) Tune the learning rate appropriately
3) Any more?

[Figure: error curve with the global minimum and a local minimum marked]
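To illustrate why randomizing the initial weights and training multiple times can help, here is a minimal sketch on a one-weight error curve with two minima. The error function $E(w) = w^4 - 3w^2 + w$, the names `descend` and `best_of_restarts`, the learning rate `mu = 0.01` and the restart range are all assumptions chosen for illustration.

```python
import random

def error(w):
    """Hypothetical error curve with a local and a global minimum."""
    return w**4 - 3 * w**2 + w

def error_gradient(w):
    return 4 * w**3 - 6 * w + 1

def descend(w, mu=0.01, steps=5000):
    """Apply w = w + (-mu * dE/dw) for a fixed number of steps."""
    for _ in range(steps):
        w = w + (-mu * error_gradient(w))
    return w

def best_of_restarts(n_restarts=20, seed=0):
    """Run gradient descent from random starts; keep the lowest-error result."""
    rng = random.Random(seed)
    candidates = [descend(rng.uniform(-2.0, 2.0)) for _ in range(n_restarts)]
    return min(candidates, key=error)

stuck = descend(1.5)       # a single run started on the right-hand side
best = best_of_restarts()  # several runs from random starting points
print(stuck, error(stuck))
print(best, error(best))
```

A single run started at `w = 1.5` settles in the shallower minimum on the right, while the restarts also explore the left-hand basin, where the error is lower. Restarts raise the chance of finding the global minimum but do not guarantee it.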