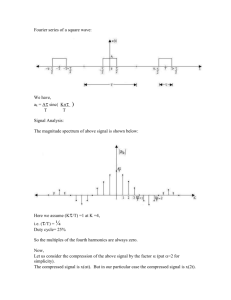

ECE 4371, Fall 2015
Introduction to Telecommunication Engineering/Telecommunication Laboratory
Zhu Han, Department of Electrical and Computer Engineering
Class 11, Sep. 28th, 2015

Outline
– Review of Analog and Exam next class
– Gray Code
– Line Coding Spectrum
– Scrambler
– Multimedia Transmission

Gray Code
– The reflected binary code, also known as Gray code: two successive values differ in only one digit.
– http://en.wikipedia.org/wiki/Gray_code
– If you check a chip, you may find its bus numbers ordered in Gray code.

Spectrum of Line Coding: Basic Steps for Spectrum Analysis
– Basic pulse function $p(t)$ and its spectrum $P(\omega)$. For example, a rect function in time is a sinc function in frequency.
– Input $x$ is a train of pulse functions with different amplitudes $a_k$, which carry the information in their sign and amplitude.
– The spectrum $S_x(\omega)$ is the Fourier transform of the autocorrelation $R_n$ of the amplitudes:

$$R_n = \lim_{T \to \infty} \frac{T_b}{T} \sum_k a_k a_{k+n}$$

$$S_x(\omega) = \frac{1}{T_b} \sum_{n=-\infty}^{\infty} R_n e^{-jn\omega T_b} = \frac{1}{T_b}\left(R_0 + 2\sum_{n=1}^{\infty} R_n \cos(n\omega T_b)\right)$$

– Overall spectrum:

$$S_y(\omega) = |P(\omega)|^2 S_x(\omega)$$

NRZ
– $R_0 = 1$, $R_n = 0$ for $n > 0$
– Rectangular pulse of width $T_b$ (extending $T_b/2$ on each side of its center): $P(\omega) = T_b\,\mathrm{sinc}(\omega T_b/2)$, with $\mathrm{sinc}(x) = \sin(x)/x$
– Bandwidth $R_b$ for pulse width $T_b$

RZ scheme

DC Nulling
– Split phase:

$$S_y(\omega) = T_b\,\mathrm{sinc}^2\!\left(\frac{\omega T_b}{4}\right)\sin^2\!\left(\frac{\omega T_b}{4}\right)$$

Polar biphase: Manchester and differential Manchester schemes
– In Manchester and differential Manchester encoding, the transition at the middle of the bit is used for synchronization.
– The minimum bandwidth of Manchester and differential Manchester is 2 times that of NRZ.
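To make the Manchester-family bullets concrete, here is a minimal Python sketch of the three encoders. The level conventions are assumptions, since the slides do not state them: the IEEE 802.3 convention for Manchester (1 is low-to-high, 0 is high-to-low), and "extra transition at the bit boundary for a 0" for differential Manchester. The function names are illustrative only.

```python
def nrz(bits):
    """Polar NRZ: one level per bit (+1 for a 1, -1 for a 0)."""
    return [1 if b else -1 for b in bits]


def manchester(bits):
    """Manchester: two half-bit levels per bit.

    Assumed convention (IEEE 802.3 style): 1 -> low-to-high, 0 -> high-to-low.
    """
    out = []
    for b in bits:
        out.extend([-1, 1] if b else [1, -1])
    return out


def differential_manchester(bits, start_level=1):
    """Differential Manchester: always a mid-bit transition.

    Assumed convention: a 0 adds a transition at the start of the bit,
    a 1 does not. start_level is the line level just before the first bit.
    """
    level = start_level
    out = []
    for b in bits:
        if not b:
            level = -level      # extra transition at the bit boundary for a 0
        out.append(level)       # first half of the bit
        level = -level          # mandatory mid-bit transition
        out.append(level)       # second half of the bit
    return out


bits = [1, 0, 0, 1, 1]
nrz_wave = nrz(bits)
man_wave = manchester(bits)
dman_wave = differential_manchester(bits)
```

Both biphase encoders emit two half-bit symbols per data bit, and every bit contains a mid-bit transition that a receiver can use for clock recovery. The doubled symbol rate is why their minimum bandwidth is twice that of NRZ.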
Related standards: IEEE 802.3 (Ethernet) and IEEE 802.4 (token bus)

Bipolar schemes: AMI and pseudoternary
– $R_0 = 1/2$, $R_1 = -1/4$, $R_n = 0$ for $n > 1$
– Spectrum (the last equality assumes a half-width rectangular pulse):

$$S_y(\omega) = \frac{|P(\omega)|^2}{2T_b}\left(1 - \cos \omega T_b\right) = \frac{|P(\omega)|^2}{T_b}\sin^2\!\left(\frac{\omega T_b}{2}\right) = \frac{T_b}{4}\,\mathrm{sinc}^2\!\left(\frac{\omega T_b}{4}\right)\sin^2\!\left(\frac{\omega T_b}{2}\right)$$

– Reason: the phase changes more slowly.

Multilevel: 2B1Q scheme
– NRZ with amplitude representing more bits

Pulse Shaping
– $S_y(\omega) = |P(\omega)|^2 S_x(\omega)$
  – $S_x(\omega)$ is improved by the different line codes.
  – $p(t)$ is assumed to be square.
– How about improving $p(t)$ and $P(\omega)$?
  – Reduce the bandwidth
  – Reduce interference to other bands
  – Remove inter-symbol interference (ISI)
– In wireless communication, pulse shaping is used to further save bandwidth.
– Pulse shaping is discussed later.

Small questions in exam 2
– Draw the spectra of three different line codes and describe why the spectra have such shapes.

Scrambling
– Make the data more random by removing long strings of 1's or 0's.
– Improve timing.
– The simplest form of scrambling is to add a long pseudo-noise (PN) sequence to the data sequence and subtract it at the receiver (both via modulo-2 addition); a PN sequence is produced by a linear feedback shift register (LFSR).
– In the receiver, descrambling uses the same PN sequence.
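The additive scrambler described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the 6-stage register follows the recurrence a[n+6] = a[n] XOR a[n+1] (characteristic polynomial x^6 + x + 1, a primitive polynomial chosen here for the example, not taken from the slides), and the initial register contents are arbitrary but nonzero. The function names are hypothetical.

```python
def pn_sequence(length, state=(1, 0, 0, 0, 0, 0)):
    """Generate `length` PN bits from a 6-stage LFSR.

    Assumed recurrence: a[n+6] = a[n] XOR a[n+1] (polynomial x^6 + x + 1).
    `state` holds a[n]..a[n+5] and must not be all zeros.
    """
    reg = list(state)
    out = []
    for _ in range(length):
        out.append(reg[0])              # output the oldest bit
        feedback = reg[0] ^ reg[1]      # modulo-2 sum of the tapped stages
        reg = reg[1:] + [feedback]      # shift and insert the feedback bit
    return out


def scramble(data_bits, state=(1, 0, 0, 0, 0, 0)):
    """Modulo-2 add the PN sequence to the data.

    Applying the same function again with the same state descrambles,
    because XOR-ing twice with the same PN bit restores the data bit.
    """
    pn = pn_sequence(len(data_bits), state)
    return [d ^ p for d, p in zip(data_bits, pn)]


data = [int(c) for c in "100000000000"]
scrambled = scramble(data)
recovered = scramble(scrambled)         # descrambling uses the same PN
```

Because the register has m = 6 stages and the assumed polynomial is primitive, the PN sequence repeats only every 2^6 - 1 = 63 bits, and the long run of zeros in the data no longer appears as a long run in the scrambled output.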
– Security: descrambling requires knowing the PN sequence and the initial scrambled data.
– PN sequence length: $2^m - 1 = 2^6 - 1 = 63$

Scrambling Exercise: 100000000000

Scrambling Example
– Scrambler
– Descrambler

Video Standard
– Two camps
  – H261, H263, H264
  – MPEG1 (VCD), MPEG2 (DVD), MPEG4
– Spatial redundancy: JPEG
  – Intraframe compression
  – DCT compression + Huffman coding
– Temporal redundancy
  – Interframe compression
  – Motion estimation

Discrete Cosine Transform (DCT)
– Example 8×8 block of pixel values (0 = black, 255 = white): 120 108 127 134 137 131 90 75 69 73 82 115 97 81 75 122 105 89 83 125 107 92 86 119 101 86 80 117 105 100 88 89 77 79 87 90 83 89 88 95 96 103 99 106 93 100 87 72 65 69 78 70 55 49 53 62 59 44 38 42 51 85 69 58

DCT and Huffman Coding
– 8×8 block of DCT coefficients:

$$\begin{bmatrix}
700 & 90 & 100 & 0 & 0 & 0 & 0 & 0 \\
90 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
-89 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0 \\
0 & 0 & 0 & 0 & 0 & 0 & 0 & 0
\end{bmatrix}$$

Basis vectors

Using DCT in JPEG
– DCT on 8×8 blocks

Comparison of DFT and DCT

Quantization and Coding
– Zonal coding: coefficients outside the zone mask are zeroed.
  – The coefficients outside the zone may contain significant energy.
  – Local variations are not reconstructed properly.

30:1 compression and 12:1 compression

Motion Compensation
– I-Frame: independently reconstructed
– P-Frame: forward predicted from the last I-Frame or P-Frame
– B-Frame: forward predicted and backward predicted from the last/next I-Frame or P-Frame
– Transmitted as: I P B B B P B B B

Motion Prediction

Motion Compensation Approach (cont.)
– Motion vectors
  – A static background is a very special case; in general we should consider the displacement of the block.
  – The motion vector is used to inform the decoder exactly where in the previous image to get the data.
  – The motion vector would be zero for a static background.
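One common way to find such a motion vector is exhaustive block matching, sketched below with NumPy. The slides do not say which search method or matching cost is used, so the full search, the sum of absolute differences (SAD) cost, the block size, the search range, and the function name are all assumptions made for illustration.

```python
import numpy as np


def find_motion_vector(prev, cur, top, left, block=8, search=4):
    """Full-search block matching with a SAD cost (illustrative choice).

    Returns ((dy, dx), sad): the block of `cur` at (top, left) is best
    matched by the block of `prev` at (top + dy, left + dx).
    """
    target = cur[top:top + block, left:left + block].astype(np.int64)
    best_mv, best_sad = (0, 0), None
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            # Skip candidates that fall outside the previous frame.
            if y < 0 or x < 0 or y + block > prev.shape[0] or x + block > prev.shape[1]:
                continue
            cand = prev[y:y + block, x:x + block].astype(np.int64)
            sad = int(np.abs(target - cand).sum())
            if best_sad is None or sad < best_sad:
                best_mv, best_sad = (dy, dx), sad
    return best_mv, best_sad


rng = np.random.default_rng(0)
prev_frame = rng.integers(0, 256, size=(32, 32), dtype=np.uint8)

# Static background: the current frame equals the previous one.
static_mv, static_sad = find_motion_vector(prev_frame, prev_frame.copy(), 12, 12)

# Moving content: shift the frame 2 rows down and 3 columns left.
moved = np.roll(prev_frame, shift=(2, -3), axis=(0, 1))
moved_mv, moved_sad = find_motion_vector(prev_frame, moved, 12, 12)
```

For the static frame the best match is at zero displacement, matching the last bullet above. For the shifted frame the search returns the offset into the previous image from which the decoder should copy the block.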
Motion estimation for different frames
– Figure labels: X, Y, Z
– Available from earlier frame (X)
– Available from later frame (Z)

– A typical group of pictures in coding order (numbers give the display position): I(1) P(5) B(2) B(3) B(4) P(9) B(6) B(7) B(8) P(13) B(10) B(11) B(12)
– A typical group of pictures in display order: I B B B P B B B P B B B P

Coding of Macroblock
– Y: blocks 0, 1, 2, 3
– CB: block 4
– CR: block 5

Spatial sampling relationship for MPEG-1
– Figure legend: luminance sample, color difference sample

A Simplified MPEG encoder
– Figure block labels: IN, Frame recorder, DCT, Quantize, Variable-length coder, Transmit buffer, OUT, Rate controller, Scale factor, Buffer fullness, Dequantize, Inverse DCT, Reference frame, Motion predictor, Prediction, Motion vectors, Prediction encoder, DC

MPEG Standards
– MPEG stands for the Moving Picture Experts Group.
– MPEG is an ISO/IEC working group, established in 1988 to develop standards for digital audio and video formats.
– There are five MPEG standards being used or in development. Each compression standard was designed with a specific application and bit rate in mind, although MPEG compression scales well with increased bit rates.
– They include:
  – MPEG1
  – MPEG2
  – MPEG4
  – MPEG7
  – MPEG21
  – MP3

MPEG Standards
MPEG-1
– Designed for up to 1.5 Mbit/sec.
– Standard for the compression of moving pictures and audio. It was based on CD-ROM video applications and is a popular standard for video on the Internet, transmitted as .mpg files. In addition, Layer 3 of MPEG-1 audio is the most popular standard for digital compression of audio, known as MP3. MPEG-1 is the standard of compression for VideoCD, the most popular video distribution format throughout much of Asia.

MPEG-2
– Designed for between 1.5 and 15 Mbit/sec.
– Standard on which digital television set-top boxes and DVD compression are based. It is based on MPEG-1, but designed for the compression and transmission of digital broadcast television. The most significant enhancement over MPEG-1 is its ability to efficiently compress interlaced video. MPEG-2 scales well to HDTV resolution and bit rates, obviating the need for an MPEG-3.
MPEG-4
– Standard for multimedia and Web compression. MPEG-4 is based on object-based compression, similar in nature to the Virtual Reality Modeling Language. Individual objects within a scene are tracked separately and compressed together to create an MPEG4 file. This results in very efficient compression that is very scalable, from low bit rates to very high. It also allows developers to control objects independently in a scene, and therefore introduce interactivity.

MPEG-7
– This standard, currently under development, is also called the Multimedia Content Description Interface. When released, the group hopes the standard will provide a framework for multimedia content that will include information on content manipulation, filtering and personalization, as well as the integrity and security of the content. Contrary to the previous MPEG standards, which described actual content, MPEG-7 will represent information about the content.

MPEG-21
– Work on this standard, also called the Multimedia Framework, has just begun. MPEG-21 will attempt to describe the elements needed to build an infrastructure for the delivery and consumption of multimedia content, and how they will relate to each other.

JPEG
– JPEG stands for Joint Photographic Experts Group. It is also an ISO/IEC working group, but works to build standards for continuous-tone image coding. JPEG is a lossy compression technique for full-color or gray-scale images that exploits the fact that the human eye will not notice small color changes. JPEG 2000 is an initiative that will provide an image coding system using compression techniques based on wavelet technology.

DV
– DV is a high-resolution digital video format used with video cameras and camcorders. The standard uses DCT to compress the pixel data and is a form of lossy compression. The resulting video stream is transferred from the recording device via FireWire (IEEE 1394), a high-speed serial bus capable of transferring data at up to 50 MB/sec.
– H.261 is an ITU standard designed for two-way communication over ISDN lines (video conferencing) and supports data rates that are multiples of 64 kbit/s. The algorithm is based on DCT, can be implemented in hardware or software, and uses intraframe and interframe compression. H.261 supports CIF and QCIF resolutions.
– H.263 is based on H.261 with enhancements that improve video quality over modems. It supports CIF, QCIF, SQCIF, 4CIF and 16CIF resolutions.
– H.264: HDTV at 4-7 Mbps; 4KTV at 25-27 Mbps