Logic of Prediction (PPT, University of Baltimore)

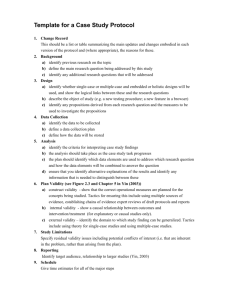

Validation, Meta-analyses, and the Scientific Status of Selection

Neal Schmitt

Invited Address at the IOOB Student Conference, Alliant University, San Diego, CA

Outline

• Discuss the nature of validity evidence

• Review the bases for validity claims in employee selection research

• Discuss limitations of the data base on which these claims are made

• Propose a large-scale, multinational collection and sharing of data on individual difference-performance relationships

Validity and Validation

• Validity is the degree to which inferences we draw from test data about future job performance are accurate.

• The Standards and the Principles both emphasize construct validity, supported by different forms of evidence:

– Content

– Criterion-related

– Construct

– Consequential

Construct Validity involves several inferences (Binning & Barrett, 1989)

Figure (built up over several slides): three examples, each linking an observed predictor measure and an observed criterion measure to the constructs they are meant to represent.

• Predictor measures: 1. # of tattoos; 2. GRE test score; 3. MMPI scores

• Predictor constructs: 1. Identification with blue-collar social class; 2. GMA; 3. Mental dysfunction

• Criterion measures: 1. # of truck driving accidents; 2. Grades in grad school; 3. Prison guard performance ratings

• Criterion constructs: 1. Truck driving ability; 2. Grad school performance; 3. Prison guard performance

The successive builds of the figure highlight these inferences:

• Predictor measure is an accurate depiction of the intended predictor construct

• Criterion measure is an accurate depiction of the intended criterion construct (i.e., the performance domain)

• Predictor measure is related to the criterion measure: the uncorrected validity coefficient in the usual criterion-related study

• Predictor measure is related to the criterion construct: typically a validity coefficient corrected for unreliability in the performance measure. This is of primary interest in the selection context.

• Predictor construct is related to the criterion construct: the primary theoretical/scientific question

What about the predictor construct domain and the criterion domain?

Three ways to link the observed predictor score to the criterion construct

• Content validity: A sampling strategy in which the predictor measure is a direct sample of the criterion (example: a clerical applicant is asked to perform a word processing task). There must be psychological fidelity in representing critical aspects of the criterion construct domain.

Second way to support the inference that the predictor score relates to the criterion construct.

Criterion-related validity: Must provide evidence for two links (5 and 8 in the figure).

1. The observed predictor score is correlated with the observed criterion.

2. The criterion measure is an adequate representation of the criterion construct (usually a judgment that criterion deficiency and contamination are not problems). Evidence comes from job analysis, confirmatory factor analyses (CFA), etc.

Third way to provide evidence that the observed predictor is related to the criterion construct: construct validation. This requires support for two links.

1. Observed predictor scores are representative of the predictor construct (test items map onto the specification of the hypothesized construct).

2. The predictor construct domain is linked to the criterion construct domain (a logical and judgmental link, supported by empirical research).

Construct Validity Includes

• Concerns about content

• Concerns about construct

– Relationships with measures of theoretically similar and dissimilar constructs

– Freedom from the usual “biasing” factors: e.g., social desirability, faking

– Process variables

Examples of construct validation

• Mumford et al. (PPsych, 1996): Biodata

– Job-oriented variables (biodata items related to actual job tasks)

– Worker-oriented variables (biodata items related to constructs thought to underlie job performance: Linkage 6 in the figure)

– Biodata scales were developed to predict performance in laboratory tasks (Linkage 6)

– Biodata scales were developed to predict performance of foreign service officers (Linkage 7)

Physical Ability

• Arvey et al. (JAP, 1992) and Arnold et al. (JAP, 1980)

– Job analyses (use of force reports, SME descriptions, survey)

– Literature review led to hypothesized importance of endurance and strength

– Collection of data on physical tasks (some face-valid and some not, such as handgrip and sit-ups; Linkage 6)

– Collection of supervisory ratings on many aspects of physical performance

– Tested and confirmed a model that hypothesized the same set of endurance and strength factors plus a rating factor; the model fit well (Linkage 7)

Criterion-Related Validity Example

Project A (more later)

1. Multiple sources of evidence that observed predictor scores were related to observed criterion.

2. A great deal of effort was devoted to collecting performance data from multiple informants and in job samples, including estimates of possible contaminants and of reliability.

The data base on the validity of existing measures rests primarily on criterion-related validity studies, which typically involve:

• Job analysis

• Specification of the performance domain

• Hypotheses about the relevant predictor constructs (KASOs)

• Selection or construction of predictor variables

• Collection of data on predictor and criterion (concurrent and predictive validity)

• Analysis of relationships between predictor and criterion measures

• Conclusions and implementation

Meta-analysis: Summary of Criterion-related research and basis for Validity Generalization Claims

• Before validity generalization (VG) work, there was a general belief that the validity of a test was unique to each situation in which it was used: situational specificity

• Schmidt & Hunter (1977) showed that much of the variability in observed validity coefficients could be explained by artifacts of the study in which validity was estimated

• S & H computed sample-size-weighted averages of existing validity estimates for the cognitive ability-performance relationship

• Bare-bones analysis, which corrected only for sampling error (a function of sample size), found that much of the variability in observed validity coefficients was explained
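A bare-bones meta-analysis can be sketched in a few lines. This is a minimal illustration, not the computation used in any of the studies cited: the validity coefficients and sample sizes are hypothetical, and the expected sampling-error variance uses the common approximation (1 - r̄²)² / (N̄ - 1), where N̄ is the average sample size.

```python
from dataclasses import dataclass


@dataclass
class BareBonesResult:
    mean_r: float              # sample-size-weighted mean observed validity
    observed_var: float        # sample-size-weighted variance of observed rs
    sampling_error_var: float  # variance expected from sampling error alone
    residual_var: float        # observed variance left after removing sampling error
    pct_explained: float       # % of observed variance attributable to sampling error


def bare_bones(rs, ns):
    """Bare-bones meta-analysis: corrects only for sampling error."""
    if len(rs) != len(ns) or not rs:
        raise ValueError("rs and ns must be non-empty and the same length")
    total_n = sum(ns)
    mean_r = sum(n * r for r, n in zip(rs, ns)) / total_n
    observed_var = sum(n * (r - mean_r) ** 2 for r, n in zip(rs, ns)) / total_n
    mean_n = total_n / len(ns)
    if mean_n <= 1:
        raise ValueError("average sample size must exceed 1")
    sampling_error_var = (1 - mean_r ** 2) ** 2 / (mean_n - 1)
    residual_var = max(observed_var - sampling_error_var, 0.0)
    if observed_var == 0:
        pct = 100.0
    else:
        pct = min(100.0, 100 * sampling_error_var / observed_var)
    return BareBonesResult(mean_r, observed_var, sampling_error_var, residual_var, pct)


# Hypothetical validity coefficients from five small studies
rs = [0.10, 0.35, 0.22, 0.05, 0.30]
ns = [40, 65, 80, 50, 120]
result = bare_bones(rs, ns)
print(f"mean r = {result.mean_r:.3f}; "
      f"sampling error explains {result.pct_explained:.0f}% of observed variance")
```

With small samples like these, sampling error alone can account for most or all of the spread in observed coefficients, which is the core of the argument against situational specificity.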

Validity Generalization

• In addition, computations of credibility intervals around the averages of validity coefficients revealed that for some KASO-performance relationships, one could expect to find non-zero relationships most of the time. That is, if we are examining a situation in which the performance construct is similar to that in the validity data base, we should be able to use the predictor (or a measure of the same construct) with confidence that the validity will generalize.
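A credibility interval describes the spread of true validities rather than the uncertainty in the mean. The sketch below assumes the true validities are normally distributed with a given mean and standard deviation (after artifact corrections); the values .45 and .10 are hypothetical. Some meta-analyses instead report a one-sided "90% credibility value" (the 10th percentile of the distribution), which corresponds to the lower bound with `level=0.80` here.

```python
from statistics import NormalDist


def credibility_interval(mean_rho, sd_rho, level=0.90):
    """Two-sided credibility interval for the distribution of true validities.

    Assumes true validities are normally distributed with mean `mean_rho`
    and standard deviation `sd_rho`.
    """
    if not 0 < level < 1:
        raise ValueError("level must be between 0 and 1")
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.645 for a 90% interval
    return mean_rho - z * sd_rho, mean_rho + z * sd_rho


# Hypothetical values: mean true validity .45, SD of true validities .10
low, high = credibility_interval(0.45, 0.10)
print(f"90% credibility interval: {low:.2f} to {high:.2f}")
print("Validity generalizes (lower bound above zero):", low > 0)
```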

Results of Meta-analyses: Cognitive Ability

• Hunter and Schmidt efforts were largely restricted to reanalysis of GATB data collected by the US Department of Labor

• Hunter (1983)

• Schmidt & Hunter (1977) used data from Ghiselli (1966)

• Hunter & Hunter (1984)

• Schmidt & Hunter (1998)

Other Sources of Information about Cognitive Ability

• Schmitt et al. (1984) used all published validity studies between 1964 and 1982

• Salgado and colleagues (Salgado et al., 2003; Bertua, Anderson, & Salgado, 2005) provided European data

Results

• Average observed validity coefficients are almost all in the .20s. Most estimates of ρ are in the .40s and .50s.

• The lower bound of the 90% credibility interval is always substantial, ranging from .10 to the .50s, meaning it is unlikely that one would find a nonsignificant validity coefficient when using cognitive ability tests to select employees.

• Very similar results are reported for specific cognitive abilities such as memory, numerical ability, perceptual ability, psychomotor ability, spatial ability

Personality Sources: Performance Outcomes

• Barrick and Mount (1991): Review of published and unpublished sources from the 1950s through the 1980s

• Tett, Jackson, & Rothstein (1991): Reanalysis of existing data exploring the role of hypothesis testing and directionality as moderators of validity

• Hurtz and Donovan (2000): Published and unpublished research on Big Five only

• Barrick, Mount, & Judge (2001) summarized 15 prior meta-analytic studies

• Salgado (1997) European community

Results: Performance

• Observed validity: .04 to .22 across the Big Five

• Corrected validity: .04 to .33 across the Big Five (highest validity in Tett et al., 1991)

• 90% credibility interval did not include zero for Conscientiousness and occasionally Emotional Stability

Results: Other Criteria

• Most frequent alternate criterion is OCB

• Much smaller sample sizes (usually fewer than 2,000)

• Observed validity ranges from .04 to .24

• Corrected validity ranges from .04 to .27

• Other personality measures (customer service orientation, core self-esteem, self-efficacy) and integrity tests: validities ranged from .14 to .50.

Summary

• We have an extensive data base that supports the use of tests of major constructs, certainly cognitive ability and conscientiousness across most occupations

• Validities are such that significant utility can result from the use of measures of these constructs

• The validity of these constructs is generalizable

The problems lie in the nature of the primary data base: consider Landy’s (2007) comments

• Without empirical studies, VG dies of its own weight; or, meta-analysis can make a silk purse out of a sow’s ear, but you need the ears to begin with. So it is wrong to abandon criterion-related validity studies

• We are burdened with the empirical studies of the 1950s, 1960s, and earlier, which viewed the world through g and O (overall performance) lenses

Performance Criteria

Performance:

1. Cognitive, motor, psychomotor, or interpersonal behavior

2. Controllable by the individual

3. Relevant to organizational goals

4. Scalable in terms of proficiency

Campbell, J.P., McCloy, R.A., Oppler, S.H., & Sager, C.E. (1993). A theory of performance. In N. Schmitt & W.C. Borman (Eds.), Personnel selection. San Francisco: Jossey-Bass.

• Quantity: The number of units produced, processed or sold (sales volume)

• Quality: The quality of work performed (error rates, inspection of product)

• Timeliness: How fast work is performed (units produced per hour)

• Creativity: In many white-collar jobs, keep track of examples of creative work and attempt to quantify them

• Adherence to Policy

• OCB: Extra-role or contextual behaviors (enhancing the environment)

• Self-Appraisal

• Peer Appraisal

• Team Appraisal

• Assessment Center

• Accidents

• Quitting

• Intentions to quit

• Completion of training

• Letters of commendation

• Performance appraisals

• Reprimands

• Tardiness

• Absences ???

Performance Criteria

Results of a structural equation analysis of survey research involving 20,000 respondents.

The construct of Personal Satisfaction Motivation is measured by 3 indicators, and the constructs of Organizational Performance and Individual Performance are measured by 2 indicators each.

Performance Criteria

Results of a structural equation analysis of survey research involving 20,000 respondents.

The construct of Awards and Bonuses Motivation is measured by 3 indicators, and the constructs of Organizational Performance and Individual Performance are measured by 2 indicators each.

Job Performance As A Multidimensional Concept

• A Multidimensional Model of Job Performance:

– The eight performance dimensions are:

• Job specific task proficiency

• Non-job-specific task proficiency

• Written and oral communication task proficiency

• Demonstrating effort

• Maintaining personal discipline

• Facilitating peer and team performance

• Supervision/leadership

• Management/administration


CRITERIA OF GOOD PERFORMANCE

1. Job-specific task proficiency (substantive or technical task)

2. Non-job-specific task proficiency (task done by almost everyone on the job)

3. Written and oral communication task proficiency

4. Demonstrating effort (sustained goal-directed exertion)

5. Maintaining personal discipline (avoiding negative behavior)

6. Facilitating peer and team performance (supporting and morale boosting)

7. Supervision / leadership (OPM: Persons who accomplish work through the direction of other people. First-level supervisors personally direct subordinates without the use of other, subordinate supervisors. A second-level supervisor directs work through one layer of subordinate supervisors.)

8. Management / administration (OPM: Persons who direct the work of an organizational unit, are held accountable for the success of specific line or staff functions, monitor and evaluate the progress of the organization toward meeting goals, and make adjustments in objectives, work plans, schedules, and commitment of resources.)


DETERMINANTS OF WORK PERFORMANCE (from Blumberg & Pringle, 1982)

Figure: Capacity, Willingness, and Opportunity shown as determinants of Performance.

First Recorded US Army Efficiency Report

• Lt. Col. Alex Denniston: A good natured man

• Major Crolines: A good man, but no officer

• Capts. Martel, Crane, Wood: All good officers

• Capt. Shotwell: A knave despised by all

• Capt. Reynolds: Imprudent and of most violent passions

• Capt. Porter: Stranger, little known

• Lt. Kerr: Merely good, nothing promising

• Lts. Perrin, Scott, Ryan, Elworth: Low vulgar men, Irish and from the meanest walks of life

• Ensign Mehan: Very dregs of the earth, unfit for anything under heaven.

What’s wrong with the empirical studies that underlie our meta-analyses?

• Small or nonexistent sample sizes (Schmidt & Hunter, 1977)

• Lack of information on the two major artifacts, range restriction and criterion unreliability, which necessitates the use of hypothetical artifact distributions

• Mostly concurrent criterion-related studies with various design flaws: Sussman & Robertson (JAP, 1989)

Flaws continued

• Lack of sample descriptions limiting the use of the data base in studying the role of demographic characteristics

• Lack of information on organizational characteristics: Concern about organizational characteristics does not imply a return to situational specificity notions

• Conceptualization of performance: Use of a single-item overall performance index

• Age of the data base: At least in the cognitive area, few studies are less than 30-40 years old; most range from 30 to 60 or more years old

Are the studies “old”? Cognitive ability example

• <1930: 18

• 1931-1950: 42

• 1951-1960: 39

• 1961-1970: 42

• 1971-1980: 30

• 1981-1990: 34

• >1991: 32

• Bertua et al.

• Salgado et al.

• Vinchur et al. (clerical performance)

• Schmitt et al. (1964-1982)

• Schmidt & Hunter (1977): < 1966

• Hunter (GATB): < 1980 most likely
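Assuming the counts above are numbers of cognitive ability validity studies per period (the slide does not label them explicitly), a quick tally shows how much of the base predates 1981. This is simple arithmetic on the listed figures, not a reanalysis of the underlying studies.

```python
# Counts by period as listed on the slide (assumed to be numbers of studies)
studies_by_period = {
    "<1930": 18,
    "1931-1950": 42,
    "1951-1960": 39,
    "1961-1970": 42,
    "1971-1980": 30,
    "1981-1990": 34,
    ">1991": 32,
}

pre_1981 = ["<1930", "1931-1950", "1951-1960", "1961-1970", "1971-1980"]
total = sum(studies_by_period.values())
older = sum(studies_by_period[p] for p in pre_1981)
print(f"{older} of {total} studies ({older / total:.0%}) predate 1981")
```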

Why is age a problem?

• Changes in work have implications

• Teamwork: we must measure team performance on the criterion end and attend to interpersonal KSAOs

• Virtual work: we must attend to the context in which work takes place in measuring KASOs

• Contingent and temporary work: is there a need for a different system of selection than that used for regular workers?

• Cognitive requirements of jobs have likely both increased and decreased

• Technical and nontechnical work

• Constantly changing work

• Global work

The single most important validation study has been Project A

• Goal was to generate criterion variables, predictor measures, analytic methods, and validation data that would result in an enhanced selection and classification system for the military services in the U.S.

• 276 entry level occupations

• In 1990, an 800,000-person force

• Screened 300,000 to 400,000 annually to select 120,000 to 140,000

• ASVAB consisting of 10 subtests and a number of composites was, and is, the primary selection tool

Performance Domain (performance is behavior, not the result of behavior): Binning & Barrett, Linkage 8

• Task analyses and critical incident analyses

• Generating the critical performance dimensions

• Constructing measures of each dimension

– Rating scales

– Job knowledge tests

– Hands-on job samples

– Archival records

Data Analyses

• Content analyses of performance measures

• Principal components analyses

• Development of a target model of performance for all jobs

• Confirmatory factor analyses

– Core technical proficiency

– General soldiering proficiency

– Effort and leadership

– Personal discipline

– Physical fitness and military bearing

Predictor Domain (Binning & Barrett, Linkage 6)

• Extensive literature review (non-cognitive, cognitive, and psychomotor areas)

• Expert judges in the field were consulted as to predictor-criterion relationships for a variety of criterion content categories

• These judgments were factor analyzed to come up with a preliminary list of predictors thought to be relevant to various performance domains

• Practical time constraints were considered in arriving at a list of paper-and-pencil cognitive ability tests, computer-administered psychomotor tests, and paper-and-pencil noncognitive measures

Validity Estimates

• Both concurrent and predictive samples

• Observed validity (Binning & Barrett, Linkage 5)

• Validity corrected for restriction of range and criterion unreliability (Binning & Barrett, Linkage 9) using actual empirical values
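The two corrections can be sketched as below. This is a minimal illustration using the classical disattenuation formula for criterion unreliability and Thorndike's Case II formula for direct range restriction on the predictor; it is not necessarily the procedure Project A used. It applies the reliability correction first (assuming the reliability was estimated in the incumbent sample), and other orderings or indirect range-restriction methods can give different answers. All input values are hypothetical.

```python
import math


def correct_for_criterion_unreliability(r_xy, r_yy):
    """Disattenuate an observed validity for unreliability in the criterion only."""
    if not 0 < r_yy <= 1:
        raise ValueError("criterion reliability must be in (0, 1]")
    return r_xy / math.sqrt(r_yy)


def correct_for_direct_range_restriction(r, u):
    """Thorndike Case II correction for direct range restriction on the predictor.

    u is the ratio of the unrestricted (applicant) predictor SD to the
    restricted (incumbent) predictor SD, so u > 1 when range is restricted.
    """
    if u <= 0:
        raise ValueError("u must be positive")
    return (u * r) / math.sqrt(1 + (u ** 2 - 1) * r ** 2)


# Hypothetical inputs: observed validity .25, criterion reliability .52,
# applicant predictor SD 1.5 times the incumbent predictor SD
r_obs = 0.25
r_step1 = correct_for_criterion_unreliability(r_obs, r_yy=0.52)
r_corrected = correct_for_direct_range_restriction(r_step1, u=1.5)
print(f"observed {r_obs:.2f} -> corrected {r_corrected:.2f}")
```

The gap between the observed and corrected values shows why missing artifact information in older studies matters: the corrected estimate depends heavily on the reliability and SD ratio assumed.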

Project A was initiated 25 years ago: What’s new?

• Interest in multi-level issues

• Changes in work have escalated

• Interest in the persons evaluated: Reactions

• Sustainability is an increasingly important issue

• Technology change

Proposal for Major New Exploration of KASO-Performance Relationships

• Large Sample:

– Multiple organizations

– Multiple jobs

– Multiple countries

– Collection of demographic data: Gender, ethnic status, first language, age, disability status, etc.

Careful conceptualization and measurement of Performance Constructs

• Task Performance

• OCBs

• Adaptive performance with focus on willingness and ability to learn

• Job or organizationally specific outcomes (e.g., vigilance or safety)

• Retention

• Counterproductivity

Predictor Measures

• Careful specification of the predictor domain and hypothesized linkages to performance constructs

• Construct studies and related literature surveys of predictor measures

• Assessment of “biasing” factors and mode of stimulus presentation issues

Design

• Predictive

• Data streaming

• Follow-up of those not selected

• Data should be collected and archived to allow for assessment and correction for artifacts and relationship changes over time

Collection of context or macro-level variables

Organizational characteristics: Job, industry type, customer service requirements, size, age, hierarchy, private vs. public, etc.

Type of work schedule and workplace: Virtual work, temporary work, etc.

Economy

Country and culture

Allowance for ancillary studies

• Mode of data collection: Web-based versus computer-supervised versus paper and pencil

• How do we manage faking?

• New predictor or outcome measures

• Time and ability-outcome relationships

Examination of Multi-level issues

• Team performance: Is the whole different from the sum of its parts? How do individual-difference measures relate to team performance measures? How does team composition affect performance? Does culture or political system have any influence on these relationships?

• Do relationships hold across individuals, teams, units, organizations?

• Are there cross-level effects?

• E.g., what is the effect of culture on selection practices? If people within a culture are more similar on some trait than those from different cultures, validity findings will not generalize.

Placement and Classification Issues

• We know from large scale military studies and simulations that a classification model can improve the utility of selection procedures rather dramatically even when validity differences in composites are relatively tiny. Key here are the intercorrelations between predictors as well as the validity. Utility increases come from decreases in selection ratios for individual jobs.

• We have few applications in the civilian arena
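The single-job part of this argument, that lower selection ratios raise utility, can be illustrated with Brogden-Cronbach-Gleser logic: under strict top-down selection with normally distributed predictor scores, the expected standardized criterion score of those hired equals the validity times the mean standardized predictor score of the selectees, and that mean rises as the selection ratio falls. A minimal sketch, assuming normality, a linear predictor-criterion relationship, and a hypothetical validity of .30; it does not model classification across several jobs or predictor intercorrelations.

```python
from statistics import NormalDist


def mean_z_of_selectees(selection_ratio):
    """Average standardized predictor score of those hired under top-down selection.

    Assumes normally distributed predictor scores, so the mean of the
    selected upper tail is pdf(cut) / selection_ratio.
    """
    if not 0 < selection_ratio < 1:
        raise ValueError("selection ratio must be between 0 and 1")
    std_normal = NormalDist()
    cut = std_normal.inv_cdf(1 - selection_ratio)
    return std_normal.pdf(cut) / selection_ratio


def expected_criterion_gain(validity, selection_ratio):
    """Expected mean criterion score of hires, in criterion SD units."""
    return validity * mean_z_of_selectees(selection_ratio)


for sr in (0.8, 0.5, 0.2, 0.1):
    print(f"SR = {sr:.1f}: expected gain = {expected_criterion_gain(0.30, sr):.2f} SD")
```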

Reactions to selection procedures

• Fairness

• Relevance

• Feedback

• Interpersonal treatment

• Consistency

• Reconsideration opportunity

• We know there are cultural differences in the use of and reaction to selection procedures, but have no studies of the impact on validity

Sustainability (Kehoe et al., In press)

• Continued benefit must be recognized

• Organizational fit (is the selection system consistent with the organizational culture)

• Tradeoffs: Quality versus speed and administrative simplicity

• Cost

• Luck associated with who is in charge

• Perceived fairness

• Perceived defensibility

Provision for data-sharing

• Must address individual and organizational confidentiality issues

• Division of labor and maintenance of data base

• Has been done for decades using survey research: “No name” group

Summary and Conclusions

• We have an extensive data base that supports our inferences about the use of many of our selection procedures

• That data base is “old” and deficient in a variety of ways

• Modern theory and research can improve the data base in important practical and scientific ways

• There is a need for a macro cooperative study that is global, cross-cultural, multiorganizational, and continuous

Thank you very much!

Contamination and deficiency

• Contamination

– Pulling in irrelevant information

• Examples

– Asking salespeople about the area football team

– Subjective judgments about the “right” way to dress

• Deficiency

– Missing relevant information

• Examples

– Failing to assess a security guard’s aggressive disposition

– Failing to measure whether someone who persists at tasks has the ability to “let go” when the task is over

Unit 2, Lecture 3: Introduction to Selection

Content validity: Are you measuring correctly?

Figure: diagram of the Construct and its Measurement, with regions labeled Contamination, Valid, and Deficiency.

Contamination and deficiency can be traded off with scope

Figure: Construct and Measurement diagram, with regions labeled Contamination, Valid, and Deficiency.

Content Validity

• Developing measures

– Look at the job analysis you’ve already done

– Compare your current and proposed methods of assessment to the KSAO or competency matrix for the job

– Try to develop new measures of assessment that are especially relevant for each of the components of the job

– Reject all measures that are not demonstrably related to documented KSAOs or competencies


Content Validity

• Example for a dentist, who must know or have skill with:

– Techniques needed to diagnose and treat dental injuries and diseases

– Chemical composition, structure, and properties of substances and of the chemical processes and transformations that they undergo.

– Principles and processes for providing customer and personal services.

– Business and management principles.

– Principles and methods for curriculum and training design, teaching and instruction.

– Principles and methods for promoting and selling products or services.

• What methods could you use to assess each of these?


Face Validity

• Face validity

– Do the elements of the selection system look like they’re getting at something important?

– Can also incorporate how applicants react to a measure

– This isn’t really a recognized form of validity

• No conventional way to test for face validity

• No specific standard for a test’s minimum level of face validity

– Why can face validity steer you wrong?

• Facially valid (to some) but useless measures

• Facially invalid (to some) but useful measures


Face validity

• Methods for assessment and improvement

– Review items that have been used by other organizations

– Survey current workforce or a general audience about your proposed method to assess whether the measures look relevant

– Potentially have a legal expert look over items

• Remember: lawyers don’t always use statistics to build their arguments, so face validity could matter in court


The Logic of Prediction and Criterion Validity

Figure: Predictor Construct, Criterion Construct, Predictor Measure X, and Criterion Measure Y. The path from Predictor Measure X to Criterion Measure Y, which can be estimated statistically, is criterion-related validity.

Two Designs for Criterion Validity

• Predictive validation

– Collect predictor data at hire

– Collect criterion data after a span of time

• Advantages?

• Problems?

• Concurrent validation

– Collect predictor data and criterion data using current employees

• Advantages?

• Problems?


Returning to our design strategy

• Imagine we have a number of job applicants

– We have a measure of “job readiness”

• Examples?

– We also have job performance measures

• Examples?

Joe S.

Nate D.

Fran G.

Al C.

Marie S.

Nancy G.

Ramon F.

Lou M.

Wei S.

Job test Performance

25 30

15

30

20

25

19

12

8

22

4

29

21

14

15

33

12

29


Criterion-related Validity: A Graphical Illustration

• The line cuts through the middle of the data to create a best guess of the relationship between test scores and performance.

• The expected performance of a person with score a is lower than the expected performance of a person with score b.

Figure: scatterplot of performance against test score, with a fitted line and two test scores, a and b (a < b), marked on the horizontal axis.
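The "best guess" line through the data is usually the ordinary least-squares regression line. A minimal sketch with hypothetical test scores and performance ratings (none of these values come from the slides) fits the line and compares expected performance at two scores a < b:

```python
def fit_line(xs, ys):
    """Ordinary least-squares line predicting performance (y) from test score (x)."""
    n = len(xs)
    if n != len(ys) or n < 2:
        raise ValueError("need at least two paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("test scores must vary")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical test scores and performance ratings
test_scores = [10, 12, 15, 18, 20, 22, 25]
performance = [20, 24, 23, 30, 28, 33, 35]
slope, intercept = fit_line(test_scores, performance)

a, b = 12, 22  # two test scores, with a < b
print(f"expected performance at a: {intercept + slope * a:.1f}")
print(f"expected performance at b: {intercept + slope * b:.1f}")
```

Because the fitted slope is positive here, the expected performance at b exceeds that at a, matching the second bullet above.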

A Sample Correlation

Figure: scatterplot with predictor scores from -3 to 3 on the horizontal axis.

• R = 0.50

• This is close to the intelligence-performance relationship

• Also close to the job knowledge-performance relationship

• Also close to the composite correlation for all personality dimensions

A Sample Correlation

Figure: scatterplot with predictor scores from -3 to 3 on the horizontal axis.

• R = 0.30

• This is close to the personality-performance relationship for the most valid personality traits

A Sample Correlation

Figure: scatterplot with predictor scores from -3 to 3 on the horizontal axis.

• R = 0.00

• This is close to the relationship between handwriting samples and job performance
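To see what correlations like these look like in data, one can simulate standardized predictor and criterion scores with a chosen population correlation and compute the sample Pearson r. A minimal sketch: the sample size of 200 and the seed are arbitrary choices, and the sample r will wander around the population value, which is the same sampling error that meta-analysis tries to account for.

```python
import math
import random


def simulate_scores(rho, n, seed=0):
    """Draw standardized predictor/criterion pairs with population correlation rho."""
    rng = random.Random(seed)
    pairs = []
    for _ in range(n):
        x = rng.gauss(0, 1)
        noise = rng.gauss(0, 1)
        y = rho * x + math.sqrt(1 - rho ** 2) * noise  # keeps var(y) = 1
        pairs.append((x, y))
    return pairs


def pearson_r(pairs):
    """Sample Pearson correlation of (x, y) pairs."""
    n = len(pairs)
    mx = sum(x for x, _ in pairs) / n
    my = sum(y for _, y in pairs) / n
    sxy = sum((x - mx) * (y - my) for x, y in pairs)
    sxx = sum((x - mx) ** 2 for x, _ in pairs)
    syy = sum((y - my) ** 2 for _, y in pairs)
    return sxy / math.sqrt(sxx * syy)


for rho in (0.50, 0.30, 0.00):
    r = pearson_r(simulate_scores(rho, n=200, seed=42))
    print(f"population rho = {rho:.2f}, sample r (n = 200) = {r:.2f}")
```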

An Alternative Way to Think about This Relationship

Figure: scatterplot of job performance against test score. A horizontal line separates satisfactory from unsatisfactory performance, and a vertical cut score separates applicants who are hired from those who are not, producing four regions: true positives (hired, satisfactory), false positives (hired, unsatisfactory), true negatives (not hired, unsatisfactory), and false negatives (not hired, satisfactory).

Accuracy of Prediction


Standards for Selection

• Cut scores

– The minimum level of performance on the selection measure that an applicant must reach to be considered minimally qualified

• Methods of selecting from applicants

– Minimum qualification

• Hire sequentially: the first applicants who score above the cut score for the job are hired

– Top down hiring

• Make job offers starting with the highest-scoring applicant and working down, making sure all hires score above the cut score

– When is each method used? What are the advantages of each method?
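The two hiring methods can be contrasted in a short sketch. The applicant names and scores are hypothetical, and the code treats a score equal to the cut score as qualifying (an assumption; the slide says "above").

```python
def minimum_qualification_hires(applicants, cut_score, openings):
    """Fill openings with the first applicants (in arrival order) at or above the cut."""
    if openings <= 0:
        return []
    hires = []
    for name, score in applicants:
        if score >= cut_score:
            hires.append(name)
            if len(hires) == openings:
                break
    return hires


def top_down_hires(applicants, cut_score, openings):
    """Fill openings from the highest scorer down, never going below the cut."""
    qualified = [(name, score) for name, score in applicants if score >= cut_score]
    qualified.sort(key=lambda pair: pair[1], reverse=True)
    return [name for name, _ in qualified[:max(openings, 0)]]


# Hypothetical applicants listed in the order they applied
applicants = [("A", 72), ("B", 90), ("C", 65), ("D", 81), ("E", 95), ("F", 70)]
print(minimum_qualification_hires(applicants, cut_score=70, openings=3))
print(top_down_hires(applicants, cut_score=70, openings=3))
```

With three openings, minimum qualification hires A, B, and D (the first three qualified arrivals), while top-down hiring waits for E, B, and D, the three highest scorers.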


Baseline Cut Score

Figure: the hiring quadrant plot (true positive, false positive, true negative, false negative) with the cut score at its baseline position.

Increase Cut Score

Figure: the same quadrant plot with the cut score moved higher, so fewer applicants are hired: false positives drop and false negatives rise.

Reduce Cut Score

Figure: the same quadrant plot with the cut score moved lower, so more applicants are hired: false negatives drop and false positives rise.
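The effect of moving the cut score can be counted directly. A minimal sketch with hypothetical (test score, performance) pairs and a hypothetical satisfactory-performance threshold of 55; applicants at or above the cut are treated as hired.

```python
from collections import Counter


def classify(test_score, performance, cut_score, satisfactory_level):
    """Place one applicant in a quadrant of the hiring decision plot."""
    hired = test_score >= cut_score
    satisfactory = performance >= satisfactory_level
    if hired and satisfactory:
        return "true positive"
    if hired:
        return "false positive"
    if satisfactory:
        return "false negative"
    return "true negative"


def outcome_counts(data, cut_score, satisfactory_level):
    """Count applicants in each quadrant for a given cut score."""
    return Counter(classify(x, y, cut_score, satisfactory_level) for x, y in data)


# Hypothetical (test score, performance) pairs
data = [(45, 52), (50, 40), (55, 61), (60, 48), (62, 66),
        (68, 58), (70, 45), (75, 72), (80, 63), (85, 70)]
for cut in (55, 65, 75):
    counts = outcome_counts(data, cut_score=cut, satisfactory_level=55)
    print(cut, dict(counts))
```

Raising the cut from 55 to 75 in this toy data takes false positives from 2 to 0 but false negatives from 0 to 3.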

Of Course, It’s Best to Improve Validity

Figure: the quadrant plot drawn for a low-validity predictor and for a high-validity predictor; with high validity the points cluster more tightly, so fewer applicants are misclassified as false positives or false negatives.
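A small simulation makes the same point. It assumes standardized, bivariate normal test and performance scores with correlation equal to the validity, and places both the cut score and the satisfactory-performance threshold at the mean (z = 0); these settings and the validities .20 and .50 are illustrative choices, not values from the slides.

```python
import math
import random


def misclassification_rate(validity, cut_z=0.0, satisfactory_z=0.0, n=10000, seed=0):
    """Share of simulated applicants who are false positives or false negatives.

    Test and performance scores are standardized and bivariate normal with
    correlation `validity`; applicants at or above `cut_z` are hired and
    performance at or above `satisfactory_z` counts as satisfactory.
    """
    rng = random.Random(seed)
    errors = 0
    for _ in range(n):
        test = rng.gauss(0, 1)
        perf = validity * test + math.sqrt(1 - validity ** 2) * rng.gauss(0, 1)
        hired = test >= cut_z
        satisfactory = perf >= satisfactory_z
        if hired != satisfactory:  # false positive or false negative
            errors += 1
    return errors / n


for validity in (0.20, 0.50):
    rate = misclassification_rate(validity, seed=1)
    print(f"validity {validity:.2f}: {rate:.0%} of decisions are false positives or false negatives")
```

With both thresholds at the mean, the expected error rate is 1/2 - arcsin(validity)/π, roughly 44% at a validity of .20 and 33% at .50, so the simulated rates should land near those values.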