Time Synchronization - West Virginia University

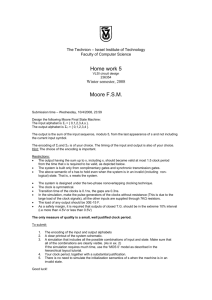

Time Synchronization
Vinod Kulathumani
West Virginia University

Need for TimeSync
• Typical use
    - Collect sensor data, correlate with location, time
• Coordinated actuation
• Reaction to sensed data in real time

Needed clock properties
• Different applications have different needs
    - Seconds to microseconds
• Other parameters
    - Logical time or real time?
    - Synchronized with GPS?
    - Monotonic or backward correction allowed?
    - Delta-synchronization only with neighbors?

Special requirements
• Efficiency
    - Processing
    - Memory
    - Energy
• Scalability
• Robustness
    - Failures
    - Additions
    - Mobility

Debate
• Claim: GPS is the solution to all problems of keeping time and synchronizing clocks
    - Doubtful for many wireless sensor networks, for several reasons
• Claim: Synchronizing the clocks of nodes in sensor networks is not needed for applications that only collect data
    - Actually true for some specific cases
    - Nodes can track the delays incurred at each hop
    - Base station can punch a timestamp for each received message

GPS based
• Relatively high power
• Needs special GPS / antenna hardware
• Needs a “clear view” to transmissions
• Precision of transmitted message is in seconds (not milliseconds, microseconds, etc.)
• “Pulse-per-Second” (PPS) can be highly precise (1/4 microsecond), but is not easy to use
• Cannot be used indoors [e.g. control applications]

Clock hardware in sensors
• Typical sensor CPU has counters that increment on each cycle, generating an interrupt upon overflow
    - We can keep track of time, but managing interrupts is error-prone
• External oscillator (with hardware counter) can increment and generate interrupts even when the CPU is “off” to save power

Uncertainties in clock hardware
• Cheap, off-the-shelf oscillators can deviate from the ideal oscillator rate on the order of one part in $10^5$ (for a microsecond counter, accuracy could diverge by up to 40 microseconds each second)
• Oscillator rates vary depending on power supply, temperature

Uncertainties in radio
• Send time: nondeterministic, ~100 ms
    - Delay for assembling the message and passing it to the MAC layer
• Access time: nondeterministic, ~1 ms to ~1 sec
    - Delay for accessing a clear transmission channel
• Transmission time: ~25 ms
    - Time for transmitting (depends on message size, radio clock speed)
• Propagation time: < 1 microsecond
    - Time a bit spends on the air (depends on distance between nodes)
• Reception time: overlaps with transmission time
• Receive time: nondeterministic, ~100 ms
    - Delay for processing the incoming message and notifying the application

Close up
• Interrupt handling time: ~1 microsec to ~?? microsec
    - Delay between the radio chip raising an interrupt and the CPU responding
    - Abuses of interrupt disabling may cause problems
• Encoding time: ~100 microsec
    - Delay for putting the wave on air
• Decoding time: ~100 microsec
    - Delay for decoding the wave to binary data
• Byte alignment time
    - Delay due to different byte alignment of sender and receiver

Close up… (figure only)

Delays in message transmission (figure only)

Protocols
• RBS (receiver-receiver based)
• TPSN (sender-receiver based)
• Multi-hop strategies
    - RBS uses zones
    - TPSN uses hierarchies
    - Uniform convergence time sync

RBS idea
• Use broadcast as a relative time reference
    - Broadcast packet does NOT include a timestamp
    - Any broadcast, e.g., RTS/CTS, can be used
• A set of nodes synchronize with each other (not with the sender)
    - Significantly reduces non-determinism

Reference broadcast
• Used when the operating system cannot record the instant of message transmission (access delay unknown), but can record the instant of reception
• m1 is received simultaneously by multiple receivers: each records its local clock value at the instant m1 arrives

RBS: Minimizing the critical path
(All figures from Elson et al.)
• RBS removes send and access time errors
    - Broadcast is used as a relative time reference
    - Each receiver synchronizes to a reference packet
    - The reference packet was injected into the channel at the same instant for all receivers
    - Message doesn’t contain a timestamp
    - Almost any broadcast packet can be used, e.g. ARP, RTS/CTS, route discovery packets, etc.

Reference broadcast…
• After getting m1, all receivers share their local timestamps at the instant of reception
• Now receivers come to consensus on a value for synchronized time: for example, each adjusts its local clock/counter to agree with the average of the local timestamps

RBS: Phase Offset Estimation
Analysis of expected group dispersion (i.e., maximum pair-wise error) after reference-broadcast synchronization. Each point represents the average of 1,000 simulated trials. The underlying receiver is assumed to report packet arrivals with error conforming to the previous figure. Mean group dispersion, and standard deviation from the mean, for a 2-receiver (bottom) and 20-receiver (top) group.

RBS: Phase Offset with Skew
Each point represents the phase offset between two nodes as implied by the value of their clocks after receiving a reference broadcast. A node can compute a least-squared error fit to these observations (diagonal line), and convert time values between its own clock and that of its peer.
Figure: synchronization of the Mote’s internal clock.

RBS: Multi-hop Time Synchronization
Example: we can compare the time of E1(R1) with E10(R10) by transforming E1(R1) ► E1(R4) ► E1(R8) ► E1(R10).
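The chain of conversions in this example can be sketched as a shortest-path search over pairwise clock fits. This is only an illustration: the `links` table, its skew/offset values, the extra node `R2`, and the function names are hypothetical, and each link is assumed to carry a linear fit of the form `t_dst = skew * t_src + offset`.

```python
from collections import deque

def shortest_path(links, src, dst):
    """Breadth-first search over directed links; returns a node list or None."""
    parents = {src: None}
    queue = deque([src])
    while queue:
        node = queue.popleft()
        if node == dst:
            path = []
            while node is not None:
                path.append(node)
                node = parents[node]
            return path[::-1]
        for (a, b) in links:
            if a == node and b not in parents:
                parents[b] = node
                queue.append(b)
    return None

def convert(links, t, src, dst):
    """Convert timestamp t from src's timebase to dst's along the fewest hops."""
    path = shortest_path(links, src, dst)
    if path is None:
        raise ValueError(f"no conversion path from {src} to {dst}")
    for a, b in zip(path, path[1:]):
        skew, offset = links[(a, b)]
        t = skew * t + offset  # apply the pairwise fit for this hop
    return t

# Hypothetical pairwise fits (skew, offset); values are made up for illustration.
links = {
    ("R1", "R4"): (1.0, 120.0),
    ("R4", "R8"): (1.0, -45.0),
    ("R8", "R10"): (1.0, 300.0),
    ("R1", "R2"): (1.0, 10.0),
}

path = shortest_path(links, "R1", "R10")
e1_at_r10 = convert(links, 1000.0, "R1", "R10")
print(path, e1_at_r10)
```

Each hop adds its own fitting error, which is why the number of hops on the chosen path matters for the accumulated error.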
Conversions can be made automatically by using a shortest-path algorithm.

Multi-Hop RBS Performance
• Average path error is approximately proportional to √n for an n-hop path
• The key point is that the growth is not linear

Basic idea: sender/receiver
• Sender sends a message with local timestamp
• Receiver timestamps message upon arrival
• Forms basis for synchronization
• Could be accurate if
    - Sender could generate the timestamp at the instant the bit was generated
    - Receiver could timestamp the instant when the bit arrived
    - Concurrent view obtained; propagation delay insignificant

TPSN: Synchronization phase
• Synchronization using a handshake between a pair of nodes (sender-initiated)
    - A sends a sync pulse at T1 containing: level #, value of T1
    - B receives it at T2 and replies at T3 with an acknowledgment containing: level #, T1, T2, T3
    - A receives the acknowledgment at T4
• Assuming the clock drift and propagation delay do not change during the exchange
    - Clock drift: $D = [(T_2 - T_1) - (T_4 - T_3)]/2$
    - Delay: $d = [(T_2 - T_1) + (T_4 - T_3)]/2$
• A can now synchronize with B

Error Analysis: TPSN & RBS
• Analyze sources of error for the algorithms
• Compare TPSN and RBS
• Trade-offs
• Notation: S = send time, P = propagation time, R = receive time, D = clock drift; the superscript UC marks the uncertainty (mismatch between the two directions)
• $T_2 = T_1 + S_A + P_{A \to B} + R_B + D_{A \to B}$
• $T_4 = T_3 + S_B + P_{B \to A} + R_A + D_{B \to A} = T_3 + S_B + P_{B \to A} + R_A - D_{A \to B}$
• $D_{A \to B} = [(T_2 - T_1) - (T_4 - T_3)]/2 + [S^{UC} + P^{UC} + R^{UC}]/2$
• If ($S_A = S_B$) and (delays both ways are the same) and ($R_A = R_B$), then our clock drift expression is correct
• If there are uncertainties, the error in synchronization is $[S^{UC} + P^{UC} + R^{UC}]/2$
• For RBS, the error was $[P^{UC} + R^{UC}]$

Performance (TPSN vs. RBS) (figure only)

TPSN (Multi-hop)
• Clock sync algorithm involves 2 steps
    - Level discovery phase
    - Synchronization phase
• TPSN makes the following assumptions
    - Sensor nodes have unique identifiers
    - Each node is aware of its neighbors
    - Links are bi-directional
• Creating the hierarchical tree is not exclusive to TPSN

TPSN: Level Discovery Algorithm
• Root node is assigned level i = 0 and broadcasts a “level discovery pkt.”
• Neighbors get the packet and assign level i+1 to themselves
    - Ignore discovery pkts. once level has been assigned
• Broadcast “level discovery pkt.” with own level
• STOP when all nodes have been assigned a level
• Optimization
    - Use minimum spanning trees instead of flooding

TPSN: Synchronization Algorithm
• Root node initiates the protocol
    - Broadcasts “time sync pkt.”
• Nodes at level = 1
    - Wait for a random time, then initiate time-sync with root
    - On receiving ACK, synchronize with root
• Nodes at level = 2 overhear sync from nodes at level 1
    - Do a random back-off, then initiate time-sync with a level 1 node
• A node sends an ACK only if it has been synchronized
• If a node does not receive an ACK, it resends the time-sync pulse after a timeout

Techniques for multi-hop - 1
• Regional time zones and conversion
    - E.g. RBS
    - We can compare the time of E1(R1) with E10(R10) by transforming E1(R1) ► E1(R4) ► E1(R8) ► E1(R10)
    - Average path error is approximately proportional to √n for an n-hop path

Techniques for multi-hop - 2
• Leader based
    - E.g. TPSN, FTSP
    - Leader clock at root
    - Others follow the leader through the tree structure
• Robustness
    - Leader failure: tolerated by new leader election
    - Node or link failure: tolerated by finding an alternate path
    - Like routing table recovery
• Excellent synchronization
    - Claim is that error is constant over multi-hop [+ve, -ve neutralize]
    - But theoretically error grows linearly
• Rapid setup for on-demand synchronization ?
• Suitable for low link failure, stable nodes
• Unsuitable in mobile settings / dynamic settings

Techniques for multi-hop - 3
• Uniform convergence
• Basic idea: instead of a leader node, have all nodes follow a “leader value”
    - Leader clock could be the one with the largest value
    - Leader clock could be the one with the smallest value
    - Leader value could be mean, median, etc.
• Local convergence -> global convergence
• Send periodic timesync messages, use an easy algorithm to adjust offset
    - if (received_time > local_clock) local_clock = received_time

Uniform convergence - advantages
• Fault tolerance is automatic
• Each node takes input from all neighbors
• Mobility of sensor nodes is no problem
• Extremely simple implementation
• Self-stabilizing from all possible states and system configurations, partitions & rejoins
• Was implemented for the “Line in the Sand” demonstration

Uniform convergence - challenges
• Even one failure can contaminate the entire network (when the failure introduces a new, larger clock value)
• More difficult to correct skew than for a tree
• How to integrate GPS or another time source?
• What does “largest clock” mean when the clock reaches its maximum value and rolls over?
    - Rare occurrence, but it happens someday
    - Transient failures could cause rollover sooner

Preventing contamination
• Build picture of neighborhood
• Node collects timesync messages from all neighbors
• Are they all reasonably close?
    - Yes: adjust local clock to maximum value
    - No: cases to consider:
        - More than one outlier: no consensus, adjust to maximum value
        - Only one outlier from the “consensus clock range”
            - If p is the outlier, then p “reboots” its clock
            - If another neighbor is the outlier, ignore that neighbor
• Handles single-fault cases only

Special case: restarting / new node
• Again, build picture of neighborhood
• Node joining the network or rebooting its clock
• Look for “normal” neighbors to trust
    - Normal neighbors: copy maximum of normal neighbors
    - No normal neighbors: adjust local clock to maximum value from any neighbor (including restarting ones)
    - After adjusting to maximum, node becomes “normal”

Clock rollover
• Node p’s clock advances from $2^{32} - 1$ back to zero
• q (neighbor of p) has clock value $2^{32} - 35$
• Question: what should q think of p’s clock?
    - Proposal: use (<, max) cyclic ordering around the domain of values $[0, 2^{32} - 1]$

Bad case for cyclic ordering
• Network is in “ring” topology
• Values (w, x, y, z) are about ¼ of $2^{32}$ apart in the domain of clock values -> in ordering cycle
• Maybe each node follows the larger value of its neighbor in parallel
    - Never synchronizing!
• A solution to this problem
    - Reset to zero when neighbor clocks are too far apart; use a special rule after reset

Open questions
• Energy conservation
• Special needs
    - Coordinated actuation
    - Long term sleeping
    - Low duty cycles
• Tuning time-sync to application requirements

References
• Slides on time synchronization by Prof. Ted Herman, University of Iowa
• Ganeriwal S, Kumar R, Srivastava M. Timing-sync Protocol for Sensor Networks (TPSN) [SenSys 2003]
• Elson J, Girod L, Estrin D. Fine-Grained Network Time Synchronization using Reference Broadcasts [OSDI 2002]
• Maroti M, Kusy B. The Flooding Time Synchronization Protocol [SenSys 2004]
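As a worked example for the TPSN handshake and its error analysis, the following sketch computes the clock drift from the four timestamps T1..T4. The drift expression is the one from the error-analysis slide; the delay expression is the usual half-round-trip estimate and is stated here as an assumption. The simulated clock offset, delays, and helper names are made up for illustration.

```python
def tpsn_estimate(t1, t2, t3, t4):
    """Estimate (drift, delay) from one two-way exchange.

    t1, t4 are read on A's clock; t2, t3 are read on B's clock.
    """
    drift = ((t2 - t1) - (t4 - t3)) / 2
    delay = ((t2 - t1) + (t4 - t3)) / 2
    return drift, delay

def simulate_handshake(offset, delay_ab, delay_ba, t1=1000, turnaround=10):
    """Hypothetical exchange where B's clock is `offset` ahead of A's."""
    t2 = t1 + delay_ab + offset      # B's clock when the sync pulse arrives
    t3 = t2 + turnaround             # B's clock when it sends the ACK
    t4 = t3 - offset + delay_ba      # A's clock when the ACK arrives
    return t1, t2, t3, t4

# Symmetric delays: the drift estimate is exact.
drift_sym, delay_sym = tpsn_estimate(*simulate_handshake(50, 10, 10))

# Asymmetric delays (10 one way, 14 the other): the estimate is off by
# half of the mismatch, matching the [S^UC + P^UC + R^UC]/2 error term.
drift_asym, _ = tpsn_estimate(*simulate_handshake(50, 10, 14))

print(drift_sym, delay_sym, drift_asym)
```

With symmetric delays node A would add the estimated drift to its clock to agree with B; in the asymmetric run the residual error is 2 time units, half of the 4-unit difference between the two directions.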
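The least-squares fit mentioned on the "RBS: Phase Offset with Skew" slide can be illustrated with a plain linear regression over pairs of reception timestamps. This is a minimal sketch, not the RBS implementation: the function names, the sample timestamps, and the peer clock running 40 parts per million fast with an offset of 250 are all assumed values.

```python
def fit_line(xs, ys):
    """Ordinary least-squares fit ys ~ slope * xs + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return slope, intercept

# Local clock readings of node A at four reference broadcasts (made up).
a_times = [0.0, 1000.0, 2000.0, 3000.0]
# Readings of node B for the same broadcasts: 40 ppm fast, offset 250 (assumed).
b_times = [1.00004 * t + 250.0 for t in a_times]

slope, intercept = fit_line(a_times, b_times)

def a_to_b(t_a):
    """Convert a time on A's clock to B's timebase using the fitted line."""
    return slope * t_a + intercept

print(slope, intercept, a_to_b(5000.0))
```

Fitting a line rather than averaging the offsets lets a node keep converting timestamps between broadcasts, since the slope captures the relative skew of the two oscillators.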
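The "follow the largest clock" rule and the cyclic-ordering proposal from the rollover slides can be sketched as follows. The slides only name a (<, max) cyclic ordering; treating a value as "ahead" when it lies within half the range in the forward direction is an assumption made here, as are the function names.

```python
MOD = 2 ** 32        # clock values live in [0, 2^32 - 1]
HALF = MOD // 2      # assumed window: "ahead" means less than half a cycle forward

def cyclic_ahead(a, b):
    """True if a is ahead of b in the assumed cyclic order."""
    diff = (a - b) % MOD
    return 0 < diff < HALF

def on_timesync(local_clock, received_time):
    """Uniform-convergence update: follow the neighbor only if it is ahead."""
    if cyclic_ahead(received_time, local_clock):
        return received_time
    return local_clock

# q holds 2^32 - 35 and hears p, whose clock has just rolled over to 5.
q_after = on_timesync(2 ** 32 - 35, 5)

# Ring with values a quarter of the range apart: every node sees its
# successor as "ahead", so the rule alone never settles.
w, x, y, z = 0, 2 ** 30, 2 ** 31, 3 * 2 ** 30
ring_is_cyclic = all(
    cyclic_ahead(nxt, cur) for cur, nxt in [(w, x), (x, y), (y, z), (z, w)]
)

print(q_after, ring_is_cyclic)
```

A plain `received_time > local_clock` comparison would make q ignore p after the rollover, while the cyclic comparison lets q follow p. The ring case shows why the slides add a reset rule for clocks that are too far apart.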
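The decision rule from the "Preventing contamination" slide can be sketched as below. The slides do not define "reasonably close" or the "consensus clock range", so this sketch assumes a value is an outlier when it differs from the median by more than a `tolerance`; the reboot step copies the maximum of the other neighbors, following the restarting-node slide. All names and sample values are hypothetical.

```python
from statistics import median

def adjust_clock(local, neighbors, tolerance):
    """Return (new_clock, action) for node p given its neighbors' clock values."""
    values = [local] + list(neighbors)   # index 0 is p itself
    center = median(values)
    outliers = [i for i, v in enumerate(values) if abs(v - center) > tolerance]

    if len(outliers) != 1:
        # Either everyone is close, or there is no consensus: follow the maximum.
        return max(values), "max"
    if outliers[0] == 0:
        # p itself is the single outlier: reboot and copy the neighbors' maximum.
        return max(neighbors), "reboot"
    # A single neighbor is the outlier: ignore it and follow the rest.
    trusted = [v for i, v in enumerate(values) if i != outliers[0]]
    return max(trusted), "ignore-neighbor"

all_close = adjust_clock(100, [101, 103, 102], tolerance=10)
bad_neighbor = adjust_clock(100, [101, 9000, 102], tolerance=10)
bad_self = adjust_clock(9000, [100, 101, 102], tolerance=10)
two_outliers = adjust_clock(100, [101, 9000, 8000, 102, 99], tolerance=10)

print(all_close, bad_neighbor, bad_self, two_outliers)
```

As the slide notes, this only protects against a single fault: with two outliers the node falls back to the maximum and the contaminated value spreads.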