An intervention study to help undergraduate students


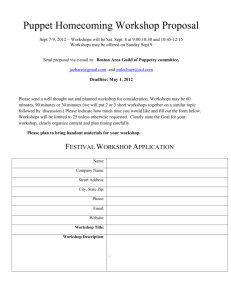

Aligning student and staff expectations around assessment: an intervention study to help undergraduate students understand assessment criteria for examination essays

Kathy Harrington, Mercedes Freedman, Savita Bakhshi, Peter O’Neill
Write Now Centre for Excellence in Teaching and Learning, London Metropolitan University
European Congress of Psychology, Oslo, July 2009

Study aims

- Improve students’ understanding of and ability to demonstrate assessment criteria applied to their timed, unseen examinations
  - Leading to improved module performance
- In addition, we wanted to…
  - Build on previous research on use of assessment criteria to improve student learning
  - Develop the intervention through collaboration between Psychology academics and writing specialists (Writing-in-the-Disciplines model)
  - Incorporate peer mentoring in academic writing

Context of study

- 2nd-year cognitive psychology module
  - Assessment 100% by examination
  - Traditionally lower than average pass rate
  - Identified by university as a “killer module”
  - Plan of response required
- Write Now CETL works with discipline-based academics to improve student learning through curriculum and teaching development
- Write Now CETL runs university Writing Centre staffed by trained student peer mentors in academic writing
  - Collaborative, non-directive, supportive
  - Enable students to take responsibility for own work

Pedagogical rationale

- Students and tutors often interpret meanings of assessment criteria differently (Harrington et al., 2006; Lea & Street, 1998; Merry et al., 1998)
- Providing clear and explicit criteria is a first step in helping students understand what tutors are looking for in written work
- However, research has also shown that facilitating students' active engagement with the criteria is necessary if learning and performance are to be demonstrably enhanced (Price et al., 2001)
- Structured interventions focussed on understanding and demonstrating assessment criteria have been shown to lead to improvements in student
learning and performance (Norton et al., 2005; Rust et al., 2003)
- Other research has found that students feel talking to peer tutors about their writing leads to better writing, and that psychology students prefer working with peer tutors from their own discipline (Bakhshi et al., 2009)

The intervention

- Four hour-long compulsory workshops embedded as part of module teaching across the autumn semester 2008-09
  - Immediately following two-hour lectures
  - Delivered in alternate weeks, with workshops run by subject lecturers in between (focussed more explicitly on lecture content)
- Designed by a team of academic writing specialists, a psychology lecturer and a psychology PhD student with experience of pedagogical research in the area of student writing and assessment
- Delivered by academic writing specialists and peer writing mentors studying psychology (3rd-year and PhD students)

The intervention (continued)

- Exam answers posted in VLE prior to workshops for students to read and give a grade
- Set of accompanying materials developed using extracts from authentic examination answers annotated with comments in relation to assessment criteria
  - Specific focus on cognitive psychology
  - Use of departmental assessment criteria
- In workshops, materials used to facilitate discussion about demonstrating assessment criteria at different levels of performance
- Students guided in small groups to adopt role of examiner and apply criteria to whole past examination answers
- Final class discussion drew out students’ insights and summarised main points

Data collection and analysis

- Attendance registers taken at workshops 2, 3 and 4 (not at first workshop)
- Questionnaire distributed at last workshop (n=63)
  - Likert scale: students’ perceptions of helpfulness of workshops in relation to
    - Examination writing
    - Meeting assessment criteria
    - Understanding subject matter of cognitive psychology
- Examination grades
- Analysis using SPSS to produce descriptive and inferential statistics

Study sample

- N=205 students enrolled on
the module who took the examination
- 40 students enrolled on module did not take the examination

| Programme | Study population | Total students enrolled on module |
| --- | --- | --- |
| Conversion Diploma | 64 (31.2%) | 72 (29.4%) |
| Single Honours | 93 (45.4%) | 113 (46.1%) |
| Other/unidentified | 5 (2.4%) | 11 (4.5%) |
| Joint Honours | 43 (21%) | 49 (20%) |
| Total | 205 (100%) | 245 (100%) |

Findings: pass rate and mean grade

| Year | Number of students | Pass rate | Fail rate | Mean final grade |
| --- | --- | --- | --- | --- |
| 2007-08 | 191 | 71% | 29% | 47% |
| 2008-09 | 205 | 62% | 38% | 43.6% |

Percentage who attended workshops

| Workshops attended | Frequency | Percent | Valid Percent | Cumulative Percent |
| --- | --- | --- | --- | --- |
| 0 | 100 | 48.8 | 48.8 | 48.8 |
| 1 | 50 | 24.4 | 24.4 | 73.2 |
| 2 | 29 | 14.1 | 14.1 | 87.3 |
| 3 | 26 | 12.7 | 12.7 | 100.0 |
| Total | 205 | 100.0 | 100.0 | |

- 100 (48.8%) students did not attend any workshops
- 105 (51.2%) students attended at least one workshop

Attendance and pass/fail in examination

| Test | Value | df | Asymp. Sig. (2-sided) |
| --- | --- | --- | --- |
| Continuity Correction | 9.047 | 1 | .003** |

| Attendance at at least one workshop | Pass | Fail | Total |
| --- | --- | --- | --- |
| No | 51 (51.0%) | 49 (49.0%) | 100 (100.0%) |
| Yes | 76 (72.4%) | 29 (27.6%) | 105 (100.0%) |
| Total | 127 (62.0%) | 78 (38.0%) | 205 (100.0%) |

Attendance and final grade category

Figure 1: Percentage of students who attended workshops by grade category (x-axis: number of workshops attended, 0 to 3; y-axis: %; series: 1st, 2.1, 2.2, 3rd, Fail)

Workshop attendance and grades: 1

Figure 2: Correlation between number of workshops attended and final grade

- Significant positive relationship (r=.314, n=63, p<0.05)
- The more workshops attended, the higher the grade

Workshop attendance and grades: 2

Figure 3: Total number of workshops attended and mean final grade

- Mean final grade overall: 43.61%
- Conversion Diploma: 60.34%
- Single Honours: 38.8%
- Other: 58.2%
- Joint Honours: 27.44%

Similar findings in other research

- Lusher (2007)
  - Small-group workshops focused on assessment criteria with 3rd-year health psychology students
  - Significant correlation between attendance and mean examination scores (r=0.254, N=111, p<0.01)
  - Multiple regression showed that performance
did not independently predict attendance, so not just a matter of more able students attending workshops

Students’ perceptions of workshops

- 7-point Likert scale: strongly disagree to strongly agree
- Mean scores for all items were positive
  - Understanding what assessment criteria are
  - Understanding how to demonstrate them
  - Understanding what makes a good examination essay in Cognitive Psychology
  - Understanding subject matter of cognitive psychology
  - Achieving a better grade
  - Producing better writing

Conclusions

- Module pass rate lower this year at 62%, compared to 71% in 2007-08
  - However, a number of minor changes were made to content and delivery, so comparison across years is problematic
- In 2008-09, attendance at the workshops was significantly correlated with higher examination grades
  - Confounding factor is that more able students are more likely to be attending in the first place
  - More tests needed, cf. Lusher (2007)
- Students who attended perceived the workshops to be helpful for
  - Understanding what assessment criteria are
  - Understanding how to demonstrate the criteria in their own writing
  - Understanding the subject matter of cognitive psychology
  - Achieving a better grade
  - Producing better writing
- Difficulty of addressing needs of weaker students, even with “embedded” teaching

Conclusions (continued)

- Substantial improvement in student learning and performance may require changing the method of assessment
- However, given that this may not be possible (due to institutional constraints), other modifications will be considered based on
  - Student feedback on questionnaires
  - Importance of embedding “writing to learn” activities within modules, rather than treating writing as an add-on skill separate from learning subject matter
  - Benefits to students of collaborative learning environments
- Planned changes to module for next year
  - Less distinction between lectures and workshops by identifying 3-hour “teaching blocks” instead, with varied mix of lecture and workshop activities, to try to
encourage higher attendance
  - Time to practise writing in teaching sessions
  - Peer review of own writing, facilitated by peer mentors

References

- Bakhshi, S., Harrington, K. & O'Neill, P. (2009). Psychology students’ experiences of academic peer mentoring at the London Metropolitan University Writing Centre, Psychology Teaching and Learning, 8(1), pp. 6-13.
- Harrington, K., Elander, J., Norton, L., Reddy, P., Aiyegbayo, O. & Pitt, E. (2006). A qualitative analysis of staff-student differences in understandings of assessment criteria, in C. Rust (Ed.), Improving Student Learning through Assessment. Oxford: Oxford Centre for Staff and Learning Development.
- Lea, M. R. & Street, B. (1998). Student writing in higher education: an academic literacies approach, Studies in Higher Education, 23, pp. 157-72.
- Lusher, J. (2007). How study groups can help examination performance, Health Psychology Update, 16(1 & 2).
- Merry, S., Orsmond, P. & Reiling, K. (1998). Biology students’ and tutors’ understanding of a ‘good essay’, in C. Rust (Ed.), Improving Student Learning: Improving Students as Learners. Oxford: Oxford Centre for Staff and Learning Development.
- Norton, L., Harrington, K., Elander, J., Sinfield, S., Lusher, J., Reddy, P., Aiyegbayo, O. & Pitt, E. (2005). Supporting students to improve their essay writing through assessment criteria focused workshops, in C. Rust (Ed.), Improving Student Learning: Inclusivity and Diversity. Oxford: Oxford Centre for Staff and Learning Development.
- Price, M., O'Donovan, B. & Rust, C. (2001). Strategies to develop students' understanding of assessment criteria and processes, in C. Rust (Ed.), Improving Student Learning - 8: Improving Student Learning Strategically. Oxford: Oxford Centre for Staff and Learning Development.
- Rust, C., Price, M. & O’Donovan, B. (2003). Improving students’ learning by developing their understanding of assessment criteria and processes, Assessment and Evaluation in Higher Education, 35, pp. 453-472.

http://www.writenow.ac.uk
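
Worked check of the attendance and pass/fail test. The slides report a continuity-corrected chi-square of 9.047 (df = 1, p = .003) for the 2x2 table of workshop attendance against pass/fail. The sketch below is not the authors' SPSS procedure; it is a minimal reproduction, assuming the standard Yates correction for a 2x2 table and using only the four cell counts given in the slides. The function names `yates_chi_square` and `p_value_df1` are illustrative.

```python
import math


def yates_chi_square(a, b, c, d):
    """Continuity-corrected (Yates) chi-square for the 2x2 table [[a, b], [c, d]]."""
    n = a + b + c + d
    # Shrink |ad - bc| by n/2, never below zero, before squaring.
    corrected = max(0.0, abs(a * d - b * c) - n / 2)
    row_col_product = (a + b) * (c + d) * (a + c) * (b + d)
    return n * corrected ** 2 / row_col_product


def p_value_df1(chi2):
    """Upper-tail p-value of a chi-square statistic with 1 degree of freedom."""
    return math.erfc(math.sqrt(chi2 / 2))


# Cell counts from the slides:
# no workshops attended: 51 pass, 49 fail; at least one attended: 76 pass, 29 fail.
chi2 = yates_chi_square(51, 49, 76, 29)
p = p_value_df1(chi2)
print(f"chi-square = {chi2:.3f}, p = {p:.3f}")  # chi-square = 9.047, p = 0.003
```

Both values agree with the reported test, which suggests the slide figures come from the Yates-corrected statistic rather than the uncorrected Pearson chi-square.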