learning to rank

Search and Information Retrieval on the Internet

Zhi-Ming Ma (马志明)

May 16, 2008

Email: mazm@amt.ac.cn http://www.amt.ac.cn/member/mazhiming/index.html

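About 626,000 results match the query 中国科学院数学与系统科学研究院 (Academy of Mathematics and Systems Science, Chinese Academy of Sciences); results 1-100 are shown below. (Search took 0.45 seconds)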

How can Google make a ranking of 626,000 pages in 0.45 seconds?

A main task of Internet (Web) Information Retrieval = Design and Analysis of Search Engine (SE) Algorithms, involving plenty of Mathematics

HITS: 1998, Jon Kleinberg, Cornell University

PageRank: 1998, Sergey Brin and Larry Page, Stanford University

Nevanlinna Prize (2006): Jon Kleinberg

• One of Kleinberg's most important research achievements focuses on the network structure of the World Wide Web.

• Prior to Kleinberg's work, search engines focused only on the content of web pages, not on the link structure.

• Kleinberg introduced the idea of "authorities" and "hubs": an authority is a web page that contains information on a particular topic, and a hub is a page that contains links to many authorities.
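The hub/authority idea can be illustrated with a small iteration. The following is a minimal sketch, not Kleinberg's original code: the function name `hits`, the toy link matrix, and the fixed iteration count are assumptions made for illustration.

```python
import numpy as np

def hits(adjacency, iterations=50):
    """Return (hub, authority) scores for a 0/1 link matrix.

    adjacency[i, j] == 1 means page i links to page j.
    """
    A = np.asarray(adjacency, dtype=float)
    n = A.shape[0]
    hubs = np.ones(n)
    auths = np.ones(n)
    for _ in range(iterations):
        # A page is a good authority if good hubs link to it.
        auths = A.T @ hubs
        auths /= np.linalg.norm(auths)
        # A page is a good hub if it links to good authorities.
        hubs = A @ auths
        hubs /= np.linalg.norm(hubs)
    return hubs, auths

# Hypothetical toy graph: pages 0 and 1 link to pages 2 and 3;
# page 1 additionally links to page 4.
toy_links = np.array([
    [0, 0, 1, 1, 0],
    [0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
    [0, 0, 0, 0, 0],
])
hub_scores, authority_scores = hits(toy_links)
```

In this toy graph, page 1 comes out as the strongest hub, and pages 2 and 3 as the strongest authorities, because both hubs point to them.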

Zhuzihu thesis.pdf

PageRank, the ranking system used by the Google search engine.

• Query independent

• Content independent

• Using only the web graph structure
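A minimal sketch of how a PageRank vector can be computed from the link graph alone, using power iteration. The damping factor 0.85, the uniform jump from pages without out-links, and the toy graph are assumptions for illustration, not details taken from the slides.

```python
import numpy as np

def pagerank(adjacency, alpha=0.85, tol=1e-12, max_iter=1000):
    """PageRank by power iteration; adjacency[i, j] == 1 means i links to j."""
    A = np.asarray(adjacency, dtype=float)
    n = A.shape[0]
    out_degree = A.sum(axis=1)
    # Row-normalise the links; pages without out-links jump uniformly
    # (an assumption, one common convention).
    P = np.where(out_degree[:, None] > 0,
                 A / np.maximum(out_degree, 1)[:, None],
                 1.0 / n)
    pi = np.full(n, 1.0 / n)
    for _ in range(max_iter):
        # Follow a link with probability alpha, otherwise jump uniformly.
        new_pi = alpha * (pi @ P) + (1 - alpha) / n
        if np.abs(new_pi - pi).sum() < tol:
            return new_pi
        pi = new_pi
    return pi

# Hypothetical toy graph: 0->1, 0->2, 1->2, 2->0, 3->2.
toy_links = np.array([
    [0, 1, 1, 0],
    [0, 0, 1, 0],
    [1, 0, 0, 0],
    [0, 0, 1, 0],
])
ranks = pagerank(toy_links)
```

The scores depend only on the graph: no query and no page content enter the computation. Page 3 has no in-links, so it receives only the uniform jump mass `(1 - alpha) / n`.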


WWW 2005 paper

PageRank as a Function of the Damping Factor

Paolo Boldi Massimo Santini Sebastiano Vigna

DSI, Università degli Studi di Milano

3 General Behaviour

3.1 Choosing the damping factor

3.2 Getting close to 1

$$r^{*} = \lim_{\alpha \to 1} r(\alpha)$$

* Can we somehow characterise the properties of $r^{*}$?

* What makes $r^{*}$ different from the other (infinitely many, if $P$ is reducible) limit distributions of $P$?

Conjecture 1: $r^{*}$ is the limit distribution of $P$ when the starting distribution is uniform, that is,

$$\lim_{\alpha \to 1} r(\alpha) = \lim_{n \to \infty} \frac{1}{N} \mathbf{1}^{T} P^{n}.$$

Websites provide plenty of information: pages in the same website may share the same IP, run on the same web server and database server, and be authored or maintained by the same person or organization.

There might be high correlations between pages in the same website, in terms of content, page layout and hyperlinks.

Websites contain a higher density of hyperlinks inside them (about 75%) and a lower density of edges in between.

HostGraph loses much transition information.

Can a surfer jump from page 5 of site 1 to a page in site 2?

From: s06-pc-chairs-email@u.washington.edu

[mailto:s06-pc-chairs-

Sent: April 4, 2006 8:36

To: Tie-Yan Liu; wangying@amss.ac.cn; fengg03@mails.thu.edu.cn; ybao@amss.ac.cn; mazm@amt.ac.cn

Subject: [SIGIR2006] Your Paper #191

Title: AggregateRank: Bring Order to Web Sites

Congratulations!!

29th Annual International Conference on Research & Development on Information Retrieval (SIGIR'06, August 6–11, 2006, Seattle, Washington, USA).

Ranking Websites, a Probabilistic View

Internet Mathematics, Volume 3 (2007), Issue 3

Ying Bao, Gang Feng, Tie-Yan Liu, Zhi-Ming Ma, and Ying Wang

--- We suggest evaluating the importance of a website with the mean frequency of visiting the website for the Markov chain on the Internet graph describing random surfing.

--- We show that this mean frequency is equal to the sum of the PageRanks of all the webpages in that website (hence it is referred to as PageRankSum).

--- We propose a novel algorithm (the AggregateRank Algorithm), based on the theory of stochastic complement, to calculate the rank of a website.

--- The AggregateRank Algorithm can approximate the PageRankSum accurately, while the corresponding computational complexity is much lower than that of PageRankSum.

--- By constructing return-time Markov chains restricted to each website, we also describe the probabilistic relation between PageRank and AggregateRank.

--- The complexity and the error bound of the AggregateRank Algorithm, with experiments on real data, are discussed at the end of the paper.

• n web pages in N sites, $n = n_1 + n_2 + \cdots + n_N$

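The stationary distribution, known as the PageRank vector, is given by

$$\pi(\alpha) P(\alpha) = \pi(\alpha) \quad \text{with} \quad \pi(\alpha) e = 1,$$

where $P(\alpha)$ denotes the $n \times n$ transition matrix of the random surfer with damping factor $\alpha$, and $e$ is the column vector of all ones.

We may rewrite the stationary distribution as $\pi(\alpha) = (\pi_1(\alpha), \pi_2(\alpha), \ldots, \pi_N(\alpha))$, with $\pi_i(\alpha)$ a row vector of length $n_i$.

We define the one-step transition probability from the website $S_i$ to the website $S_j$ by

$$c_{ij}(\alpha) = \frac{\pi_i(\alpha)}{\|\pi_i(\alpha)\|_1} P_{ij}(\alpha) e_{n_j},$$

where $P_{ij}(\alpha)$ is the block of $P(\alpha)$ with rows in $S_i$ and columns in $S_j$, and $e_{n_j}$ is the $n_j$-dimensional column vector of all ones.

The $N \times N$ matrix $C(\alpha) = (c_{ij}(\alpha))$ is referred to as the coupling matrix, whose elements represent the transition probabilities between websites. It can be proved that $C(\alpha)$ is an irreducible stochastic matrix, so that it possesses a unique stationary probability vector. We use $\xi(\alpha)$ to denote this stationary probability vector, which can be obtained from

$$\xi(\alpha) C(\alpha) = \xi(\alpha) \quad \text{with} \quad \xi(\alpha) e = 1.$$

Since

$$\sum_{i=1}^{N} \|\pi_i(\alpha)\|_1 \, c_{ij}(\alpha) = \sum_{i=1}^{N} \pi_i(\alpha) P_{ij}(\alpha) e_{n_j} = \pi_j(\alpha) e_{n_j} = \|\pi_j(\alpha)\|_1,$$

one can easily check that $\xi(\alpha) = (\|\pi_1(\alpha)\|_1, \|\pi_2(\alpha)\|_1, \ldots, \|\pi_N(\alpha)\|_1)$ is the unique solution to

$$\xi(\alpha) C(\alpha) = \xi(\alpha) \quad \text{with} \quad \xi(\alpha) e = 1.$$

We shall refer to $\xi(\alpha)$ as the AggregateRank.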

That is, the probability of visiting a website is equal to the sum of PageRanks of all the pages in that website. This conclusion is consistent with our intuition.

The transition probability from $S_i$ to $S_j$ actually summarizes all the cases in which the random surfer jumps from any page in $S_i$ to any page in $S_j$ within a one-step transition. Therefore, the transition in this new HostGraph is in accordance with the real behavior of Web surfers. In this regard, the rank so calculated from the coupling matrix $C(\alpha)$ will be more reasonable than those of previous works.
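The claim that the stationary distribution of the coupling matrix equals the per-site sum of PageRanks can be checked numerically on a small example. This is a sketch under stated assumptions: the coupling entry from site $S_i$ to site $S_j$ is taken to be the PageRank-weighted average, over the pages of $S_i$, of the probability of stepping into $S_j$; the 5-page graph, the damping factor 0.85 and the helper `stationary` are hypothetical.

```python
import numpy as np

def stationary(P, iterations=500):
    """Stationary row vector of a primitive stochastic matrix (power iteration)."""
    pi = np.full(P.shape[0], 1.0 / P.shape[0])
    for _ in range(iterations):
        pi = pi @ P
    return pi / pi.sum()

# Hypothetical toy web: pages 0-2 belong to site 0, pages 3-4 to site 1.
site_of_page = np.array([0, 0, 0, 1, 1])
links = np.array([
    [0, 1, 1, 0, 0],
    [1, 0, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 0, 1],
    [1, 0, 0, 1, 0],
], dtype=float)
alpha = 0.85
n = len(links)
# Random-surfer transition matrix with uniform teleportation.
P_alpha = alpha * links / links.sum(axis=1, keepdims=True) + (1 - alpha) / n

pagerank = stationary(P_alpha)
members = [np.flatnonzero(site_of_page == s) for s in (0, 1)]
page_rank_sum = np.array([pagerank[rows].sum() for rows in members])

# Coupling matrix: weight each page of site i by its normalised PageRank,
# then add up the probability of landing anywhere in site j.
C = np.zeros((2, 2))
for i, rows in enumerate(members):
    weights = pagerank[rows] / pagerank[rows].sum()
    for j, cols in enumerate(members):
        C[i, j] = weights @ P_alpha[np.ix_(rows, cols)].sum(axis=1)

xi = stationary(C)  # site-level stationary distribution
```

On this toy graph `xi` and `page_rank_sum` agree up to numerical precision, which is the identity stated above.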

Let denote the number of visiting the website during the n times

, that is

We have

Assume a starting state in website A , i.e.

We define and inductively

It is clear that all the variables are stopping times for X .

Let denote the transition matrix of the return-time Markov chain for site

Similarly, we have

Suppose that AggregateRank, i.e.

the stationary distribution of is

Since

Therefore

• Based on the above discussions, the direct approach to computing the AggregateRank ξ(α) is to accumulate PageRank values (denoted by PageRankSum).

• However, this approach is infeasible because the computation of PageRank is not a trivial task when the number of web pages is as large as several billion. Therefore, efficient computation becomes a significant problem.

AggregateRank

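1. Divide the $n \times n$ matrix $P(\alpha)$ (the transition matrix of the random surfer) into $N \times N$ blocks $P_{ij}(\alpha)$ according to the $N$ sites.

2. Construct the stochastic matrix $P^{*}_{ii}(\alpha)$ for $P_{ii}(\alpha)$ by changing the diagonal elements of $P_{ii}(\alpha)$ to make each row sum up to 1.

3. Determine $u_i(\alpha)$ from $u_i(\alpha) P^{*}_{ii}(\alpha) = u_i(\alpha)$ with $u_i(\alpha) e = 1$.

4. Form an approximation $C^{*}(\alpha)$ to the coupling matrix $C(\alpha)$, by evaluating $(C^{*}(\alpha))_{ij} = u_i(\alpha) P_{ij}(\alpha) e_{n_j}$.

5. Determine the stationary distribution of $C^{*}(\alpha)$ and denote it $\xi^{*}(\alpha)$, i.e., $\xi^{*}(\alpha) C^{*}(\alpha) = \xi^{*}(\alpha)$ with $\xi^{*}(\alpha) e = 1$.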
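The five steps above can be sketched as follows. This is an illustrative reading of the steps, not the authors' implementation: the function names, the toy graph, the damping factor 0.85 and the use of plain power iteration are assumptions. The point of the method is that only the small diagonal blocks and an N × N matrix need a stationary distribution; the full PageRank is computed here only to compare against PageRankSum.

```python
import numpy as np

def stationary(P, iterations=500):
    """Stationary row vector of a primitive stochastic matrix (power iteration)."""
    pi = np.full(P.shape[0], 1.0 / P.shape[0])
    for _ in range(iterations):
        pi = pi @ P
    return pi / pi.sum()

def aggregate_rank(P_alpha, site_of_page):
    """Approximate site ranks from the page-level transition matrix."""
    sites = np.unique(site_of_page)
    # Step 1: page indices of each site define the block structure.
    members = [np.flatnonzero(site_of_page == s) for s in sites]
    C_star = np.zeros((len(sites), len(sites)))
    for i, rows in enumerate(members):
        # Step 2: adjust the diagonal so each row of the block sums to 1.
        block = P_alpha[np.ix_(rows, rows)].copy()
        block[np.diag_indices_from(block)] += 1.0 - block.sum(axis=1)
        # Step 3: stationary distribution of the adjusted block.
        u_i = stationary(block)
        # Step 4: row i of the approximate coupling matrix.
        for j, cols in enumerate(members):
            C_star[i, j] = u_i @ P_alpha[np.ix_(rows, cols)].sum(axis=1)
    # Step 5: stationary distribution of the approximate coupling matrix.
    return stationary(C_star), C_star

# Hypothetical toy web: pages 0-2 belong to site 0, pages 3-4 to site 1.
site_of_page = np.array([0, 0, 0, 1, 1])
links = np.array([
    [0, 1, 1, 0, 0],
    [1, 0, 1, 0, 0],
    [1, 1, 0, 1, 0],
    [0, 0, 0, 0, 1],
    [1, 0, 0, 1, 0],
], dtype=float)
alpha = 0.85
P_alpha = alpha * links / links.sum(axis=1, keepdims=True) + (1 - alpha) / len(links)

xi_star, C_star = aggregate_rank(P_alpha, site_of_page)

# Reference value: sum the page-level PageRank inside each site.
pagerank = stationary(P_alpha)
page_rank_sum = np.array([pagerank[site_of_page == s].sum() for s in (0, 1)])
```

On this small example the approximation `xi_star` stays close to `page_rank_sum` and ranks the two sites in the same order.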

Experiments

• In our experiments, the data corpus is the benchmark data for the Web track of TREC 2003 and 2004, which was crawled from the .gov domain in 2002.

• It contains 1,247,753 webpages in total.

• We get 731 sites in the .gov dataset. The largest website contains 137,103 web pages while the smallest one contains only 1 page.

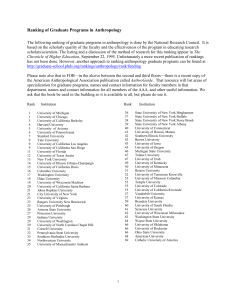

Performance Evaluation of Ranking Algorithms based on Kendall's distance

Similarity between PageRankSum and the other three ranking results.
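For readers unfamiliar with Kendall's distance, a minimal sketch follows. It counts the fraction of item pairs that two rankings order differently; the normalisation to [0, 1] and the function name are assumptions, and the exact variant used in the experiments is not stated on the slide.

```python
from itertools import combinations

def kendall_distance(ranking_a, ranking_b):
    """Fraction of item pairs ordered differently by two rankings of the same items.

    0.0 means identical order, 1.0 means exactly reversed order.
    """
    pos_a = {item: rank for rank, item in enumerate(ranking_a)}
    pos_b = {item: rank for rank, item in enumerate(ranking_b)}
    pairs = list(combinations(ranking_a, 2))
    discordant = sum(
        1 for x, y in pairs
        if (pos_a[x] - pos_a[y]) * (pos_b[x] - pos_b[y]) < 0
    )
    return discordant / len(pairs)

same = kendall_distance(["a", "b", "c"], ["a", "b", "c"])
reversed_order = kendall_distance(["a", "b", "c"], ["c", "b", "a"])
one_swap = kendall_distance(["a", "b", "c"], ["b", "a", "c"])
```

A small distance between a site ranking and PageRankSum means the two rankings disagree on few pairs of sites.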

From: pcchairs@sigir2008.confmaster.net

Sent: Thursday, April 03, 2008 9:48 AM

Dear Yuting Liu, Bin Gao, Tie-Yan Liu, Ying Zhang, Zhiming Ma, Shuyuan He, Hang Li

We are pleased to inform you that your paper

Title: BrowseRank: Letting Web Users Vote for Page Importance

has been accepted for oral presentation as a full paper and for publication as an eight-page paper in the proceedings of the 31st Annual International ACM SIGIR Conference on Research & Development on Information Retrieval.

Congratulations!!

Building model

• Properties of Q process:

– Stationary distribution:

•

– Jumping probability:

•

– Embedded Markov chain:

• is a Markov chain with the transition probability matrix

Main conclusion 1

•

– is the mean of the staying time on page i.

The more important a page is, the longer the staying time on it.

– is the mean of the first re-visit time at page i.

The more important a page is, the smaller the re-visit time is, and the larger the visit frequency is.

Main conclusion 2

•

– is the stationary distribution of

– The stationary distribution of discrete model is easy to compute

• Power method for

• Log data for
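The two-part computation (power method for the embedded chain, log data for the staying times) can be sketched as below. It relies on the standard fact that the stationary distribution of a continuous-time Markov process is proportional to the embedded chain's stationary probability times the mean staying time in each state. The transition matrix, the staying times and the function name are hypothetical values chosen for illustration.

```python
import numpy as np

def continuous_time_stationary(embedded_P, mean_stay, iterations=1000):
    """Stationary distribution of a continuous-time chain.

    embedded_P: transition matrix of the embedded (jump) Markov chain.
    mean_stay:  mean staying time on each page, e.g. estimated from logs.
    """
    P = np.asarray(embedded_P, dtype=float)
    stay = np.asarray(mean_stay, dtype=float)
    # Power method for the embedded chain.
    pi_tilde = np.full(P.shape[0], 1.0 / P.shape[0])
    for _ in range(iterations):
        pi_tilde = pi_tilde @ P
    # Weight each page by how long users stay on it, then normalise.
    weighted = pi_tilde * stay
    return weighted / weighted.sum()

# Hypothetical 3-page example: 0 -> 1, 1 -> 0 or 2 (equally likely), 2 -> 0.
embedded_P = np.array([
    [0.0, 1.0, 0.0],
    [0.5, 0.0, 0.5],
    [1.0, 0.0, 0.0],
])
mean_stay = np.array([10.0, 1.0, 5.0])  # assumed seconds per visit
importance = continuous_time_stationary(embedded_P, mean_stay)
```

Pages 0 and 1 are visited equally often by the embedded chain, yet page 0 ends up far more important because users stay on it ten times longer.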

Further questions

• How about an inhomogeneous process?

– Statistical results show that different periods of time have different visiting frequencies.

– Poisson processes with different intensities.

• Marked point process

– Hyperlink is not reliable.

– Users’ real behavior should be considered.

– Term distribution (anchor, URL, title, body, proximity, ….)

– Recommendation & citation (PageRank, clickthrough data, …)

– Statistics or knowledge extracted from web data

• Questions

– What is the optimal ranking function to combine different features (or evidences)?

– How to measure relevance?

Learning to Rank

• What are the optimal weightings for combining the various features?

– Use machine learning methods to learn the ranking function

– Human relevance system (HRS)

– Relevance verification tests (RVT)

Wei-Ying Ma, Microsoft Research Asia

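[Figure: Learning to Rank. The training data consist of queries $q^{(1)}, \ldots, q^{(m)}$, each with its documents $d_1^{(i)}, d_2^{(i)}, \ldots, d_{n^{(i)}}^{(i)}$. The Learning System produces a Model; the Ranking System applies it to a new query $q^{(m+1)}$ to rank its documents $d_1^{(m+1)}, d_2^{(m+1)}, \ldots, d_{n^{(m+1)}}^{(m+1)}$.]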


Learning to Rank (Cont)

[Figure: the documents $d_1^{(i)}, d_2^{(i)}, \ldots, d_{n^{(i)}}^{(i)}$ of query $q^{(i)}$ are broken down into document pairs $(d_j^{(i)}, d_k^{(i)})$, $1 \le j, k \le n^{(i)}$.]

• State-of-the-art algorithms for learning to rank take the pairwise approach

– Ranking SVM

– RankBoost

– RankNet (employed at Live Search)


learning to rank

• The goal of learning to rank is to construct a real-valued function that can generate a ranking of the documents associated with the given query. The state-of-the-art methods transform the learning problem into one of classification and then perform the learning task:

• For each query, it is assumed that there are two categories of documents: positive and negative (representing relevant and irrelevant with respect to the query). Then document pairs are constructed between positive documents and negative documents.

In the training process, the query information is actually ignored.
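The pairwise transformation described above can be sketched as follows. This is a simplified illustration in the spirit of Ranking SVM, not any of the cited systems: a linear scoring function is trained with a hinge loss on difference vectors by sub-gradient descent, and the feature values, learning rate and regularisation weight are made up. Note how the pairs from both queries are pooled into one array, so the learner no longer knows which query a pair came from.

```python
import numpy as np

def make_pairs(features, labels):
    """Difference vectors x_pos - x_neg for every (relevant, irrelevant) pair of one query."""
    X = np.asarray(features, dtype=float)
    y = np.asarray(labels)
    pos, neg = X[y == 1], X[y == 0]
    return (pos[:, None, :] - neg[None, :, :]).reshape(-1, X.shape[1])

def train_pairwise(pair_diffs, epochs=200, lr=0.1, reg=0.01):
    """Learn w so that w . (x_pos - x_neg) >= 1 for as many pairs as possible."""
    w = np.zeros(pair_diffs.shape[1])
    for _ in range(epochs):
        margins = pair_diffs @ w
        violated = pair_diffs[margins < 1]
        # Sub-gradient of the regularised mean hinge loss.
        grad = reg * w - violated.sum(axis=0) / len(pair_diffs)
        w -= lr * grad
    return w

# Hypothetical data: two queries, two features per document, 1 = relevant.
query_1 = ([[1.0, 0.2], [0.9, 0.8], [0.2, 0.5], [0.1, 0.1]], [1, 1, 0, 0])
query_2 = ([[0.8, 0.3], [0.3, 0.9], [0.2, 0.4]], [1, 0, 0])

# Pairs are built within each query, then pooled for training.
pair_diffs = np.vstack([make_pairs(*query_1), make_pairs(*query_2)])
w = train_pairwise(pair_diffs)
scores_q1 = np.asarray(query_1[0]) @ w  # ranking function applied to query 1
```

Sorting the documents of a query by their score gives the ranking; on this toy data every relevant document ends up above every irrelevant one.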

[5] Y. Cao, J. Xu, T.-Y. Liu, H. Li, Y. Huang, and H.-W. Hon. Adapting ranking SVM to document retrieval. In Proc. of SIGIR'06, pages 186–193, 2006.

[11] T. Qin, T.-Y. Liu, M.-F. Tsai, X.-D. Zhang, and H. Li. Learning to search web pages with query-level loss functions. Technical Report MSR-TR-2006-156, 2006.


• We re-represent the learning to rank problem by introducing the concepts of 'query' and 'distribution given query' into its mathematical formulation.

• More precisely, we assume that queries are drawn independently from a query space Q according to an (unknown) probability distribution

It should be noted that if , then the bound makes sense. This condition can be satisfied in many practical cases.

As case studies, we investigate Ranking SVM and RankBoost.

We show that after introducing query-level normalization to its objective function, Ranking SVM will have query-level stability.

For RankBoost, the query-level stability can be achieved if we introduce both query-level normalization and regularization to its objective function.

These analyses agree largely with our experiments and the experiments in [5] and [11].
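To make the effect of query-level normalization concrete, the sketch below compares a pairwise hinge loss averaged over all pooled pairs with the same loss averaged per query first. The interpretation of "query-level normalization" as dividing each query's loss by its number of pairs is an assumption for illustration, and the margins are invented numbers.

```python
import numpy as np

def pairwise_hinge(margins):
    """Hinge loss max(0, 1 - margin) for each document pair."""
    return np.maximum(0.0, 1.0 - np.asarray(margins, dtype=float))

def pooled_loss(margins_by_query):
    """Average over all pairs: queries with many pairs dominate."""
    all_margins = np.concatenate(
        [np.asarray(m, dtype=float) for m in margins_by_query]
    )
    return float(pairwise_hinge(all_margins).mean())

def query_normalized_loss(margins_by_query):
    """Average the per-query mean loss: every query counts equally."""
    return float(np.mean([pairwise_hinge(m).mean() for m in margins_by_query]))

# Hypothetical margins: query A has 100 well-ordered pairs,
# query B has only 2 pairs, both badly ordered.
margins_a = [2.0] * 100
margins_b = [0.0, 0.0]

pooled = pooled_loss([margins_a, margins_b])
normalized = query_normalized_loss([margins_a, margins_b])
```

The pooled loss is about 0.02, so the failure on query B is almost invisible, while the query-normalized loss is 0.5 because each query contributes equally.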

Rank aggregation

• Rank aggregation combines the ranking results of entities from multiple ranking functions in order to generate a better ranking.

The individual ranking functions are referred to as base rankers, or simply rankers.

Score-based aggregation

• Rank aggregation can be classified into two categories [2]. In the first category, the entities in individual ranking lists are assigned scores and the rank aggregation function is assumed to use the scores (denoted as score-based aggregation) [11][18][28].

order-based aggregation

• In the second category, only the orders of the entities in individual ranking lists are used by the aggregation function (denoted as order-based aggregation). Order-based aggregation is employed at meta-search, for example, in which only order (rank) information from individual search engines is available.

• Previously, order-based aggregation was mainly addressed with the unsupervised learning approach, in the sense that no training data is utilized; methods such as the following were proposed:

• Borda Count [2][7][27],

• median rank aggregation [9],

• genetic algorithm [4],

• fuzzy logic based rank aggregation [1],

• Markov Chain based rank aggregation [7], and so on.
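As a concrete instance of unsupervised order-based aggregation, here is a minimal Borda Count sketch. The tie-breaking rule (alphabetical) and the example rankings are assumptions for illustration.

```python
from collections import defaultdict

def borda_count(rankings):
    """Aggregate several rankings of the same items by Borda Count.

    In a ranking of n items, the item at position p (0-based) earns n - 1 - p points.
    Items are returned by decreasing total score; ties are broken alphabetically.
    """
    scores = defaultdict(int)
    for ranking in rankings:
        n = len(ranking)
        for position, item in enumerate(ranking):
            scores[item] += n - 1 - position
    return sorted(scores, key=lambda item: (-scores[item], item))

# Three hypothetical base rankers ordering the same four entities.
base_rankings = [
    ["a", "b", "c", "d"],
    ["b", "a", "d", "c"],
    ["a", "c", "b", "d"],
]
aggregated = borda_count(base_rankings)
```

Only the order of the entities in each list is used, never a score from the base rankers, which is why this kind of method suits meta-search.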


It turns out that the optimization problems for the Markov Chain based methods are hard, because they are not convex optimization problems.

We are able to develop a method for the optimization of one Markov Chain based method, called Supervised MC2.

We prove that we can transform the optimization problem into that of Semidefinite Programming. As a result, we can efficiently solve the issue.

Next Generation Web Search? (Web Search 2.0 --> 3.0)

• Directions for new innovations

– Process-centric vs. data-centric

– Infrastructure for Web-scale data mining

– Intelligence & knowledge discovery


Web Search – Past, Present, and Future

Wei-Ying Ma

Web Search and Mining Group

Microsoft Research Asia

next generation.ppt

Web Search - Past Present and Future - public.ppt