TC2014_BRK407_Galle


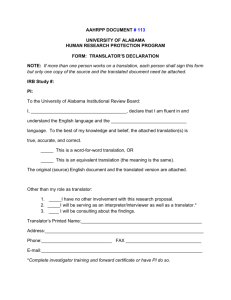

Dynamics ERP Translation Solutions

• Language Quality Game: a Microsoft crowdsourcing solution for validating the user-interface terminology of Dynamics products in context.
• Access to existing Microsoft ERP linguistic assets through the Microsoft Translator Hub (MT Hub), and a translation editor through the Multilingual App Toolkit (MAT).

Language Quality Game
• 40,000+ forms
• 118,500 individual reviews
• 82% of forms reviewed; 76% of reviewed forms OK
• 10 languages
• Players: 89 from 33 partners, 83 from Microsoft ERP
• 1,640 bugs opened
• All valid bugs (84%) fixed by RTM

Player site: https://lqg.partners.extranet.microsoft.com/v23/game/
Moderator site: https://lqg.partners.extranet.microsoft.com/v23/moderator/

Microsoft Translator Hub and Multilingual App Toolkit
• Microsoft Translator Hub for PLLP
• CAT list
• Multilingual App Toolkit for PLLP

• Microsoft MBSi (project setup/training): create an MT Hub project (a partner-specific instance); train the MT Hub system with existing ERP/CRM linguistic assets (translation memories, TMs).
• MT Hub: machine translation.
• Translation partners (translation management): recruit translators; select a translation editor (MAT for UI, other CAT tools, etc.); convert source to and from XLIFF (if MAT is used); manage the translation effort.
• Translators (translation process): run machine translation; review and post-edit the machine-translated content.
• Reviewers: review and update the translation.
• Customers: localized Dynamics AX 2012.

www.microsoft.com/dynamics/axtechconference
Microsoft Confidential

The Language Quality Game ran during August and was live for three weeks.

SCOPE
• 10 languages: German, French, Danish, Russian, Chinese, Japanese, Brazilian Portuguese, Polish, Czech, Lithuanian
• An average of 4,000 forms per language
• 40,000 individual screen captures

COVERAGE
• 6 of the 10 languages achieved 100% coverage
• 82% of all screens across the 10 languages were reviewed by one to three players

PARTICIPATION
Microsoft internal ERP specialists:
• 83 internal players
• 72,362 individual screen reviews (an average of 870 screens per player)
Partners:
• 89 partner players onboarded (33 companies across 10 markets)
• 34 partner players actively played
• 46,130 individual screen reviews (an average of 1,350 screens per active player)
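The participation figures can be cross-checked with a few lines of arithmetic. This is a minimal sketch that uses only the numbers quoted on the slides; the constant and function names are illustrative. It confirms that internal plus partner reviews give the roughly 118,500 total, and it shows that the partner average of about 1,350 screens only works out when divided by the 34 active players, not by all 89 onboarded players.

```python
"""Cross-check of the Language Quality Game participation figures.

All inputs are copied from the slides; nothing here queries the game itself.
"""

INTERNAL_PLAYERS = 83
INTERNAL_REVIEWS = 72_362
PARTNER_PLAYERS_ONBOARDED = 89
PARTNER_PLAYERS_ACTIVE = 34
PARTNER_REVIEWS = 46_130


def average(reviews: int, players: int) -> float:
    """Average number of screens reviewed per player."""
    return reviews / players


total_reviews = INTERNAL_REVIEWS + PARTNER_REVIEWS
internal_avg = average(INTERNAL_REVIEWS, INTERNAL_PLAYERS)
partner_avg_active = average(PARTNER_REVIEWS, PARTNER_PLAYERS_ACTIVE)
partner_avg_onboarded = average(PARTNER_REVIEWS, PARTNER_PLAYERS_ONBOARDED)

if __name__ == "__main__":
    print(f"Total reviews: {total_reviews:,}")                         # 118,492 (slides: 118,500)
    print(f"Internal average: {internal_avg:.0f}")                     # 872 (slides: 870)
    print(f"Partner average, active: {partner_avg_active:.0f}")        # 1357 (slides: 1,350)
    print(f"Partner average, onboarded: {partner_avg_onboarded:.0f}")  # 518
```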
Coverage by Language [chart. Legend: Reviewed - OK, Reviewed - Feedback Logged, Not Reviewed. Data labels: 965; 1,377; 1,242; 1,846; 2,279; 3,221; 3,545; 2,248; 4,133; 3,823]

Resolution of Translation Bugs
• Fixed: 1,383 (84%)
• Duplicate: 215 (13%)
• Won't Fix: 39 (3%)
• Postponed: 6 (0%)

Results
• 40,000 screens included:
  - 62% (24,679) reviewed as OK
  - 20% (7,833) with feedback logged
  - 18% (7,138) not reviewed
• 1,643 translation bugs opened
• Typical issues found: context-sensitive translation, domain terminology, untranslated text
• 97% addressed (fixed or duplicate) before the R2 release

Observations
• Scope: 4,000 screens at once is a large batch and makes 100% coverage for every language hard to achieve.
• Coverage: partner participation in some markets was low.
• Timing: running the game only once, close to "EndGame", leaves very little time to address feedback ...and don't run it in August.

Future Planning
Smaller, more frequent games:
• Starting earlier in the development cycle
• Focusing on new functionality
• Alignment with Technical Preview releases
• Broader partner participation
• Additional language coverage

Microsoft Translator Data

Multilingual App Toolkit workflow
1. Recruit translators and select a translation editor.
2. Convert the resource format to XLIFF with the Microsoft conversion tool. A localizable XLIFF file is generated with the resource strings.
3. Translate the XLIFF in the MAT Multilingual Editor, or send it to the localization vendor for professional translation with CAT tools.
4. Convert the translated XLIFF back to the original resource format with the Microsoft conversion tool.

Microsoft Translator Hub workflow
Training material: Microsoft TMs and materials from previous releases.
1. Create a language-specific project in MT Hub.
2. Create a new system in the project and train/tune it with the existing material.
3. Deployment: deploy the system.
Partners:
4. Translation: machine-translate the source material.
5. Review: have the translation reviewed by the assigned reviewer.
6. Finalize: the moderator accepts or rejects the review results and finalizes the translation.
7. Additional training/tuning necessary?
   - No: the result is localized material.
   - Yes: create a new system by cloning the current system, train the new system using accepted reviewed text as tuning data (optionally adding known-good parallel data from previous releases), and deploy it.
Legend: source material, reviewer input, localized material, future implementation.
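The results and bug-resolution numbers reconcile in the same way. This sketch again uses only figures from the slides (names are illustrative). It shows that the three screen categories add up to 39,650, which the slides round to 40,000; that the 76% "OK" figure on the overview slide is a share of reviewed forms rather than of all forms; that fixed plus duplicate bugs give the 97% "addressed" figure out of 1,643 (the overview quotes 1,640); and that the ten data labels on the Coverage by Language chart sum exactly to 24,679, the Reviewed - OK total.

```python
"""Cross-check of the Language Quality Game results and bug-resolution figures.

Inputs are copied from the slides.
"""

SCREENS = {"reviewed_ok": 24_679, "feedback_logged": 7_833, "not_reviewed": 7_138}
BUGS = {"fixed": 1_383, "duplicate": 215, "wont_fix": 39, "postponed": 6}
# Data labels from the "Coverage by Language" chart, in extraction order.
CHART_LABELS = [965, 1_377, 1_242, 1_846, 2_279, 3_221, 3_545, 2_248, 4_133, 3_823]


def pct(part: int, whole: int) -> float:
    """Percentage of `part` in `whole`."""
    return 100 * part / whole


total_screens = sum(SCREENS.values())
reviewed = SCREENS["reviewed_ok"] + SCREENS["feedback_logged"]
total_bugs = sum(BUGS.values())
addressed = BUGS["fixed"] + BUGS["duplicate"]

if __name__ == "__main__":
    print(f"Screens: {total_screens:,}")                                       # 39,650
    print(f"Reviewed: {pct(reviewed, total_screens):.0f}%")                    # 82%
    print(f"OK among reviewed: {pct(SCREENS['reviewed_ok'], reviewed):.0f}%")  # 76%
    print(f"Bugs opened: {total_bugs:,}")                                      # 1,643
    print(f"Fixed or duplicate: {pct(addressed, total_bugs):.0f}%")            # 97%
    print(f"Chart labels sum: {sum(CHART_LABELS):,}")                          # 24,679
```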
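The MAT workflow converts resources to XLIFF, translates the XLIFF, and converts it back. The Microsoft conversion tool is not described in these slides, so the sketch below stands in for it with hypothetical helpers (`resources_to_xliff`, `fill_targets`, `xliff_to_resources`) built on Python's standard `xml.etree.ElementTree`. It assumes resources are a flat key-to-string mapping and writes XLIFF 1.2; real Dynamics AX label files and the actual tool's output will differ.

```python
"""Hypothetical sketch of the resource -> XLIFF -> resource round trip.

Stands in for the Microsoft conversion tool; the flat key/string resource
format and all helper names are assumptions for illustration.
"""
import xml.etree.ElementTree as ET

XLIFF_NS = "urn:oasis:names:tc:xliff:document:1.2"
ET.register_namespace("", XLIFF_NS)  # serialize XLIFF elements without a prefix


def q(tag: str) -> str:
    """Qualify a tag name with the XLIFF 1.2 namespace."""
    return f"{{{XLIFF_NS}}}{tag}"


def resources_to_xliff(resources, source_lang, target_lang, original):
    """Convert: build a localizable XLIFF document, one trans-unit per resource string."""
    root = ET.Element(q("xliff"), {"version": "1.2"})
    file_el = ET.SubElement(root, q("file"), {
        "original": original,
        "source-language": source_lang,
        "target-language": target_lang,
        "datatype": "plaintext",
    })
    body = ET.SubElement(file_el, q("body"))
    for key, text in resources.items():
        unit = ET.SubElement(body, q("trans-unit"), {"id": key})
        ET.SubElement(unit, q("source")).text = text
        ET.SubElement(unit, q("target"), {"state": "new"})  # empty, awaiting translation
    return ET.tostring(root, encoding="unicode")


def fill_targets(xliff, translations):
    """Translate: stand-in for the editor; write translated text into matching targets."""
    root = ET.fromstring(xliff)
    for unit in root.iter(q("trans-unit")):
        key = unit.get("id")
        if key not in translations:
            continue
        target = unit.find(q("target"))
        if target is None:
            target = ET.SubElement(unit, q("target"))
        target.text = translations[key]
        target.set("state", "translated")
    return ET.tostring(root, encoding="unicode")


def xliff_to_resources(xliff):
    """Convert back: return translated strings and the ids that are still untranslated."""
    root = ET.fromstring(xliff)
    translated, missing = {}, []
    for unit in root.iter(q("trans-unit")):
        target = unit.find(q("target"))
        if target is not None and target.text:
            translated[unit.get("id")] = target.text
        else:
            missing.append(unit.get("id"))
    return translated, missing


if __name__ == "__main__":
    labels = {"CustomerLabel": "Customer", "SalesOrderLabel": "Sales order"}  # hypothetical ids
    xlf = resources_to_xliff(labels, "en-US", "de-DE", "labels.txt")
    xlf = fill_targets(xlf, {"CustomerLabel": "Kunde"})
    print(xliff_to_resources(xlf))  # ({'CustomerLabel': 'Kunde'}, ['SalesOrderLabel'])
```

Returning the untranslated ids instead of silently falling back to the source text is a deliberate choice in this sketch: untranslated text was one of the typical issues the Language Quality Game found, so a round trip that surfaces gaps is easier to review.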