Stepanek_COST_daily

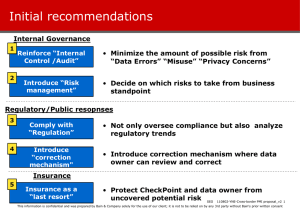

Correction of daily values for inhomogeneities

P. Štěpánek
Czech Hydrometeorological Institute, Regional Office Brno, Czech Republic
E-mail: petr.stepanek@chmi.cz
COST-ES0601 meeting, Tarragona, 9-11 March 2009

Using daily data for inhomogeneity detection: is it meaningful?

Homogenization of daily values: precipitation series
- Work with the values of each month separately (to get rid of the annual cycle).
- The data still have to be adapted to approximate a normal distribution.
- One possibility: consider only values above 0.1 mm.
- Additionally transform the series of ratios (e.g. with a square root).

[Figure: Homogenization of precipitation, daily values. Frequencies of the ratios (tested/reference series), original values: far from a normal distribution.]
[Figure: As above, limit value 0.1 mm.]
[Figure: As above, limit value 0.1 mm and square-root transformation of the ratios.]

[Figure: Problem of independence. Autocorrelations of precipitation above 1 mm, August.]
[Figure: Problem of independence. Autocorrelations of temperature, August.]
[Figure: Problem of independence. Autocorrelations of temperature differences (reference minus candidate), August.]

Homogenization
- Detection: preferably on monthly, seasonal and annual values
- Correction: for daily values

WP1 SURVEY (Enric Aguilar)
Daily data - Correction (WP4)
[Figure: Survey responses (counts, 0 to 12) by approach: trust metadata only; use a technique to detect breaks; detect on lower resolution; linear adjustments; transfer functions; CDF; overlapping records & LM; references + modelling of hom.; interpolate monthly; empirical values; discard data; changes NLR; apply monthly factors.]
Very few approaches actually calculate special corrections for daily data. Most approaches either:
- do nothing (discard data),
- apply monthly factors, or
- interpolate monthly factors.
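The two simplest options from the survey list, applying monthly factors and interpolating monthly factors, can be sketched as follows. This is a minimal illustration assuming additive (e.g. temperature) adjustments, a 365-day year without leap days, and mid-month anchor points for the interpolation; the function names and the example adjustments are hypothetical and not taken from any of the surveyed tools.

```python
import numpy as np

# Assumption: non-leap year, leap days ignored.
DAYS_IN_MONTH = np.array([31, 28, 31, 30, 31, 30, 31, 31, 30, 31, 30, 31])


def step_daily_factors(monthly_adj):
    """'Apply monthly factors': every day receives its own month's adjustment."""
    return np.repeat(np.asarray(monthly_adj, dtype=float), DAYS_IN_MONTH)


def interpolated_daily_factors(monthly_adj):
    """'Interpolate monthly factors': linear interpolation between mid-month
    anchor points, treated as periodic so that December joins January."""
    monthly_adj = np.asarray(monthly_adj, dtype=float)
    month_start = np.concatenate(([0], np.cumsum(DAYS_IN_MONTH)[:-1]))
    mid_month = month_start + (DAYS_IN_MONTH - 1) / 2.0  # 0-based day of year
    days = np.arange(365)
    return np.interp(days, mid_month, monthly_adj, period=365)


# Hypothetical monthly temperature adjustments (degC), January..December.
monthly = np.array([-0.6, -0.5, -0.3, -0.1, 0.0, 0.1, 0.1, 0.0, -0.1, -0.3, -0.5, -0.6])
step = step_daily_factors(monthly)
smooth = interpolated_daily_factors(monthly)
```

The step version reproduces each monthly adjustment exactly as the monthly mean but jumps at month boundaries; the interpolated version is continuous, but its monthly means generally differ from the monthly adjustments.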
The survey points out several other alternatives that WG5 needs to investigate.

Daily data correction methods
- "Delta" methods
- Variable correction methods: one element
- Variable correction methods: several elements

Daily data correction methods
- Interpolation of monthly factors: MASH; Vincent et al. (2002), cubic spline interpolation
- Nearest neighbour resampling models, by Brandsma and Können (2006)
- Higher Order Moments (HOM), by Della-Marta and Wanner (2006)
- Two-phase non-linear regression (O. Mestre)
- Modified percentiles approach, by Štěpánek
- Using weather type classifications (HOWCLASS), by I. Garcia-Borés, E. Aguilar, ...

Adjusting daily values for inhomogeneities: monthly versus daily adjustments ("delta" method)

Adjusting from monthly data
- Monthly adjustments are smoothed with a Gaussian low-pass filter (weights approximately 1:2:1).
- The smoothed monthly adjustments are then evenly distributed among the individual days.
[Figure: B2BPIS01_T_21:00, adjustments (°C) from January to December; series UnSmoothed, ADJ_ORIG, ADJ_C_INC.]

Adjusting straight from daily data
- An adjustment is estimated for each individual calendar day (series of 1st Jan, 2nd Jan, etc.).
- The daily adjustments are smoothed with a Gaussian low-pass filter for 90 days (the annual cycle is repeated three times to handle the margin values).
[Figure: B2BPIS01_P_14:00, adjustments (hPa) from January to December; series ADJ_ORIG, ADJ_SMOOTH.]

Adjustments (delta method)
The same final adjustments may be obtained either from monthly averages or directly from daily data. For the daily-values-based approach, it seems reasonable to smooth with a low-pass filter for 60 days.
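The daily-data variant can be sketched as below: raw adjustments are computed for each calendar day, the 365-value cycle is repeated three times so that the filter has neighbours on both sides of 1 January and 31 December, and only the middle copy of the filtered series is kept. Assumptions not stated in the slides: additive adjustments derived from candidate-minus-reference difference series stored as (years x 365) arrays without leap days, the sign convention (after minus before), and the mapping from the filter length in days to a Gaussian standard deviation (sigma = length / 6). All function names are hypothetical.

```python
import numpy as np


def calendar_day_adjustments(diff_before, diff_after):
    """Raw adjustment for each calendar day (1 Jan, 2 Jan, ...).

    diff_before, diff_after: arrays of shape (years, 365) holding
    candidate-minus-reference differences before and after the break.
    Assumed sign convention: adding the result to pre-break candidate
    values aligns them with the post-break period.
    """
    return np.mean(diff_after, axis=0) - np.mean(diff_before, axis=0)


def gaussian_weights(length_days):
    """Normalised Gaussian weights; assumption: sigma = length_days / 6."""
    half = length_days // 2
    x = np.arange(-half, half + 1)
    sigma = length_days / 6.0
    w = np.exp(-0.5 * (x / sigma) ** 2)
    return w / w.sum()


def smooth_daily_adjustments(adj, length_days=60):
    """Gaussian low-pass filter over the annual cycle of daily adjustments.

    The cycle is repeated three times to solve the margin problem and the
    middle copy of the filtered series is returned (length_days < 365).
    """
    adj = np.asarray(adj, dtype=float)
    n = adj.size
    tripled = np.concatenate((adj, adj, adj))
    filtered = np.convolve(tripled, gaussian_weights(length_days), mode="same")
    return filtered[n:2 * n]


# Hypothetical example: 20 years of noisy differences with a 0.4 degC shift.
rng = np.random.default_rng(1)
before = rng.normal(0.0, 0.8, size=(20, 365))
after = rng.normal(0.4, 0.8, size=(20, 365))
raw = calendar_day_adjustments(before, after)
smoothed = smooth_daily_adjustments(raw, length_days=60)
```

Running it with length_days set to 30, 60 and 90 gives the kind of comparison the slides make; about 60 days is suggested there.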
The same results may be derived by using a low-pass filter for two months (weights approximately 1:2:1) and subsequently distributing the smoothed monthly adjustments into daily values.
[Figure: Adjustments (°C) from January to December. a) Monthly-based approach (1: raw adjustments, 2: smoothed adjustments, 3: smoothed adjustments distributed into individual days); b) daily-based approach (4: individual calendar-day adjustments, 5: daily adjustments smoothed by a low-pass filter for 30 days, 6: for 60 days, 7: for 90 days).]

Spline through monthly temperature adjustments ("delta" method)
- Easy to implement
- No assumptions about changes in variance
- Integrated daily adjustments = monthly adjustments
But is it natural?

Variable correction
f(C(d)|R): a function built with the reference dataset R (d: daily data). The cdf, and thus the pdf, of the adjusted candidate series C*(d) is exactly the same as the cdf or pdf of the original candidate series C(d).

Variable correction: Trewin & Trevitt (1996) method
- Uses simultaneous observations under the old and new conditions
[Figure: Variable correction, Trewin & Trevitt (1996).]

The HOM method concept: fitting a model
- Locally weighted regression (LOESS) (Cleveland & Devlin, 1988)
[Figure: LOESS fit; homogeneous sub-periods HSP1 and HSP2.]

The HOM method concept: calculating the binned difference series
[Figure: Difference series binned by deciles, from decile 1 (k=1) to decile 10 (k=10).]

The HOM method concept: the binned differences
[Figure after Della-Marta and Wanner, Journal of Climate 19 (2006) 4179-4197.]

SPLIDHOM (SPLIne Daily HOMogenization), Olivier Mestre
- Direct non-linear spline regression approach (x rather than a correction based on quantiles)
- Cubic smoothing splines for estimating regression functions

Variable correction, q-q function
Michel Déqué, Global and Planetary Change 57 (2007) 16–26
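The q-q function idea can be illustrated with a generic empirical quantile mapping: a value v is assigned its non-exceedance probability in sample X and replaced by the value with the same probability in sample Y. This is a minimal sketch, not Déqué's implementation and not any of the named homogenization methods; which samples play the roles of X and Y (for example, candidate values from the sub-periods before and after a break, possibly combined with a reference series R) is an assumption left to the user, and the function name qq_transfer is hypothetical.

```python
import numpy as np


def qq_transfer(x_sample, y_sample, values):
    """Empirical q-q transfer function f(v) = F_Y^-1(F_X(v)).

    F_X is estimated with the plotting position (rank - 0.5) / n and
    F_Y^-1 with numpy's linearly interpolated quantiles.
    """
    x_sorted = np.sort(np.asarray(x_sample, dtype=float))
    values = np.asarray(values, dtype=float)
    rank = np.searchsorted(x_sorted, values, side="right")  # count of x <= v
    prob = np.clip((rank - 0.5) / x_sorted.size, 0.0, 1.0)
    return np.quantile(np.asarray(y_sample, dtype=float), prob)


# Hypothetical example: the 'after' sample is warmer and wider than 'before'.
rng = np.random.default_rng(2)
before = rng.normal(15.0, 3.0, 3000)
after = rng.normal(15.5, 3.6, 3000)
adjusted = qq_transfer(before, after, before)
```

Unlike a constant delta shift, the correction here depends on the value itself, so both the mean and the spread of `adjusted` move towards the `after` sample.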
Our modified percentiles-based approach
Our percentiles-based approach
[Figure: Percentiles of the candidate and reference series before (Q_CAND_BE, Q_REF_BE) and after (Q_CAND_AF, Q_REF_AF) the break, their differences (Q_DIFF_BE, Q_DIFF_AF, Q_DIFF) and smoothed differences (Q_DIFF_SM), and the resulting adjustment curve PERC sm25 for values from 0 to 30.]

Variable correction methods: complex approach (several elements)
Not yet available …

Comparison of the methods, ProClimDB software

Correction methods comparison
[Figure: Adjustment curves for PERC sm50, PERC sm75, EMPIR, HOM and SPLIDHOM, for values from 0 to 30.]
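The curve labels in the percentile figures (Q_CAND_BE, Q_REF_BE, Q_DIFF_BE, Q_DIFF_AF, Q_DIFF_SM) suggest the general shape of a percentile-based correction. The sketch below is a hypothetical reconstruction under stated assumptions, not the ProClimDB implementation: additive corrections, percentiles 1 to 99, the adjustment at each percentile taken as Q_DIFF = Q_DIFF_AF - Q_DIFF_BE, a centred moving average standing in for the unspecified smoothing (the meaning of the sm25/sm50/sm75 settings is not given in the slides), and each pre-break candidate value corrected according to its own percentile rank.

```python
import numpy as np

PERCENTILES = np.arange(1, 100)  # 1st .. 99th percentile


def moving_average(x, window):
    """Centred moving average with edge padding; `window` should be odd."""
    half = window // 2
    padded = np.pad(np.asarray(x, dtype=float), half, mode="edge")
    return np.convolve(padded, np.ones(window) / window, mode="valid")


def percentile_adjustments(cand_be, ref_be, cand_af, ref_af, window=9):
    """Smoothed adjustment per percentile (hypothetical Q_DIFF_SM)."""
    q_diff_be = np.percentile(cand_be, PERCENTILES) - np.percentile(ref_be, PERCENTILES)
    q_diff_af = np.percentile(cand_af, PERCENTILES) - np.percentile(ref_af, PERCENTILES)
    q_diff = q_diff_af - q_diff_be
    return moving_average(q_diff, window)


def adjust_before(cand_be, q_diff_sm):
    """Add to each pre-break value the adjustment at its percentile rank."""
    cand_be = np.asarray(cand_be, dtype=float)
    ranks = np.searchsorted(np.sort(cand_be), cand_be, side="right")
    pct = 100.0 * (ranks - 0.5) / cand_be.size
    # np.interp holds the end values constant below P1 and above P99.
    return cand_be + np.interp(pct, PERCENTILES, q_diff_sm)


# Hypothetical example: after the break the candidate reads 0.5 higher.
rng = np.random.default_rng(3)
ref_be = rng.normal(10.0, 3.0, 2000)
cand_be = ref_be.copy()
ref_af = rng.normal(10.0, 3.0, 2000)
cand_af = ref_af + 0.5
q_diff_sm = percentile_adjustments(cand_be, ref_be, cand_af, ref_af)
homogenized = adjust_before(cand_be, q_diff_sm)
```

The window length controls how strongly sampling noise in the tails is damped before the percentile-wise adjustments are applied.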
Correction methods comparison, different parameter settings
[Figure: Adjustment curves for PERC sm50, PERC sm75, EMPIR, HOM and SPLIDHOM, for values from 0 to 30.]

Correction methods comparison, different parameter settings
[Figure: Adjustment curves for PERC, EMPIR, HOM and SPLIDHOM, for values from 0 to 30.]

Correction of daily values
- We have some methods … but we have to validate them -> benchmark dataset of daily data
- Do we know how inhomogeneities in daily data behave? We should analyse real data.
- Who and when? What method for data comparison?