International Networks and the US-CERN Link

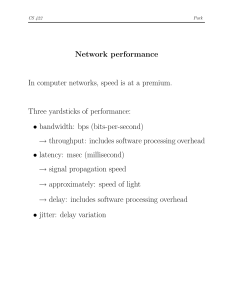

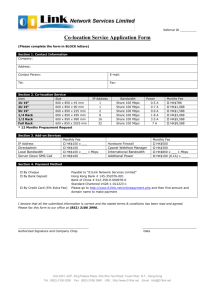


Networks for HENP and ICFA SCIC
Harvey B. Newman, California Institute of Technology
CHEP2003, San Diego, March 24, 2003

Global Networks for HENP Circa 2003

National and international networks, with sufficient (and rapidly increasing) capacity and capability, are essential for:
- Data analysis, and the daily conduct of collaborative work in both experiment and theory, involving physicists from all world regions
- Detector development and construction on a global scale
- The formation of worldwide collaborations
- The conception, design and implementation of next-generation facilities as "global networks"

"Collaborations on this scale would never have been attempted if they could not rely on excellent networks." (L. Price)

Next Generation Networks for Experiments: Goals and Needs

- Large data samples explored and analyzed by thousands of globally dispersed scientists, in hundreds of teams
- Providing rapid access to event samples and analyzed physics results drawn from massive data stores: from petabytes by 2002, to ~100 petabytes by 2007, to ~1 exabyte by ~2012
- Providing analyzed results with rapid turnaround, by coordinating and managing large but LIMITED computing, data handling and NETWORK resources effectively
- Enabling rapid access to the data and the collaboration, across an ensemble of networks of varying capability
- Advanced integrated applications, such as Data Grids, rely on seamless operation of our LANs and WANs, with reliable, monitored, quantifiable high performance

ICFA and International Networking

ICFA Statement on Communications in Int'l HEP Collaborations of October 17, 1996. See http://www.fnal.gov/directorate/icfa/icfa_communicaes.html

"ICFA urges that all countries and institutions wishing to participate even more effectively and fully in international HEP Collaborations should:
- Review their operating methods to ensure they are fully adapted to remote participation
- Strive to provide the necessary communications facilities and adequate international bandwidth"

ICFA Network Task Force (NTF): 1998 Bandwidth Requirements Projection (Mbps)

| | 1998 | 2000 | 2005 |
|---|---|---|---|
| BW utilized per physicist (and peak BW used) | 0.05-0.25 (0.5-2) | 0.2-2 (2-10) | 0.8-10 (10-100) |
| BW utilized by a university group | 0.25-10 | 1.5-45 | 34-622 |
| BW to a home laboratory or regional center | 1.5-45 | 34-155 | 622-5000 |
| BW to a central laboratory housing major experiments | 34-155 | 155-622 | 2500-10000 |
| BW on a transoceanic link | 1.5-20 | 34-155 | 622-5000 |

A 100-1000X bandwidth increase was foreseen for 1998-2005. See the ICFA-NTF Requirements Report: http://l3www.cern.ch/~newman/icfareq98.html

ICFA Standing Committee on Interregional Connectivity (SCIC)

Created by ICFA in July 1998 in Vancouver, following the ICFA-NTF.

CHARGE: Make recommendations to ICFA concerning the connectivity between the Americas, Asia and Europe (and the network requirements of HENP). As part of the process of developing these recommendations, the committee should:
- Monitor traffic
- Keep track of technology developments
- Periodically review forecasts of future bandwidth needs
- Provide early warning of potential problems
- Create subcommittees when necessary to meet the charge

Representatives: major labs, ECFA, ACFA, North American users, South America.

The chair of the committee should report to ICFA once per year, at its joint meeting with laboratory directors (Feb. 2003).

Bandwidth Growth of Global HENP Networks

Rate of progress >> Moore's Law (US-CERN example):

| Period | US-CERN bandwidth | Growth vs. 1985 |
|---|---|---|
| 1985 | 9.6 kbps analog | 1 |
| 1989-1994 | 64-256 kbps digital | X 7-27 |
| 1990-93 (IBM) | 1.5 Mbps shared | X 160 |
| 1996-1998 | 2-4 Mbps | X 200-400 |
| 1999-2000 | 12-20 Mbps | X 1.2k-2k |
| 2001-02 | 155-310 Mbps | X 16k-32k |
| 2002-03 | 622 Mbps | X 65k |
| 2003-04 | 2.5 Gbps | X 250k |
| 2005 | 10 Gbps | X 1M |

This is a factor of ~1M over 1985-2005 (a factor of ~5k during 1995-2005). HENP has become a leading applications driver, and also a co-developer, of global networks.

LHC Data Grid Hierarchy (figure): Experiment to Online System at ~PByte/sec; Online System to the Tier 0+1 CERN Center (PBs of disk; tape robot) at ~100-1500 MBytes/sec; Tier 1 centers (IN2P3, INFN, RAL, FNAL), Tier 2 centers, Tier 3 institutes (physics data cache) and Tier 4 workstations, connected by links ranging from ~2.5-10 Gbps down to 0.1-10 Gbps. CERN/outside resource ratio ~1:2; Tier0/(Σ Tier1)/(Σ Tier2) ~1:1:1. Tens of petabytes by 2007-8; an exabyte ~5-7 years later. Emerging vision: a richly structured, global dynamic system.

2001 Transatlantic Net WG Bandwidth Requirements (Mbps) [*]

| | 2001 | 2002 | 2003 | 2004 | 2005 | 2006 |
|---|---|---|---|---|---|---|
| CMS | 100 | 200 | 300 | 600 | 800 | 2500 |
| ATLAS | 50 | 100 | 300 | 600 | 800 | 2500 |
| BaBar | 300 | 600 | 1100 | 1600 | 2300 | 3000 |
| CDF | 100 | 300 | 400 | 2000 | 3000 | 6000 |
| D0 | 400 | 1600 | 2400 | 3200 | 6400 | 8000 |
| BTeV | 20 | 40 | 100 | 200 | 300 | 500 |
| DESY | 100 | 180 | 210 | 240 | 270 | 300 |
| CERN BW | 155-310 | 622 | 2500 | 5000 | 10000 | 20000 |

[*] See http://gate.hep.anl.gov/lprice/TAN.
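The growth factors in the US-CERN table can be recomputed directly from the link capacities. The sketch below is a minimal Python check, assuming (this is not from the slide) that Moore's Law means one doubling every 18 months; the names `STEPS`, `growth_factor` and `moore_factor` are illustrative only.

```python
"""Recompute the US-CERN bandwidth growth factors and compare with Moore's Law.

Assumption (not from the slide): Moore's Law is modelled as one doubling
every 18 months. Capacities are the upper end of each step, in bits/s.
"""

BASELINE_BPS = 9.6e3  # 1985: 9.6 kbps analog link

STEPS = [
    ("1989-1994", 256e3),  # 64-256 kbps digital
    ("1990-93", 1.5e6),    # 1.5 Mbps shared
    ("1996-1998", 4e6),    # 2-4 Mbps
    ("1999-2000", 20e6),   # 12-20 Mbps
    ("2001-02", 310e6),    # 155-310 Mbps
    ("2002-03", 622e6),
    ("2003-04", 2.5e9),
    ("2005", 10e9),
]


def growth_factor(bps: float, baseline: float = BASELINE_BPS) -> float:
    """Capacity relative to the 1985 baseline link."""
    return bps / baseline


def moore_factor(years: float, doubling_years: float = 1.5) -> float:
    """Growth expected from Moore's Law alone over `years`."""
    return 2 ** (years / doubling_years)


if __name__ == "__main__":
    for period, bps in STEPS:
        print(f"{period:>10}: x{growth_factor(bps):>12,.0f}")
    print(f"1985-2005 network growth: x{growth_factor(10e9):,.0f}")
    print(f"1985-2005 Moore's Law:    x{moore_factor(20):,.0f}")
    # 1995-2005: from ~2 Mbps to 10 Gbps
    print(f"1995-2005 network growth: x{10e9 / 2e6:,.0f}")
```

Under this assumption the network factor (~1.04M) exceeds the Moore's-Law factor (~10k) by roughly two orders of magnitude, which is the sense of "Rate of progress >> Moore's Law".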
The 2001 LHC requirements outlook now looks very conservative in 2003.

History: One Large Research Site (SLAC)

Much of the traffic: SLAC to IN2P3/RAL/INFN, via ESnet+France and Abilene+CERN. Current traffic ~400 Mbps; projections: 0.5 to 24 Tbps by ~2012.

LHC: Tier0-Tier1 Link Requirements Estimate for the Hoffmann Report 2000-1

1) Tier1-Tier0 data flow for analysis: 0.5-1.0 Gbps
2) Tier2-Tier0 data flow for analysis: 0.2-0.5 Gbps
3) Interactive collaborative sessions (30 peak): 0.1-0.3 Gbps
4) Remote interactive sessions (30 peak): 0.1-0.2 Gbps
5) Individual (Tier3 or Tier4) data transfers (limited to 10 flows of 5 MBytes/sec each): 0.8 Gbps

TOTAL per Tier0-Tier1 link: 1.7-2.8 Gbps

- Does not include more recent (e.g. ATLAS) data estimates: rates of 270-400 Hz, event size 2 MB/event.
- Does not allow fast download to Tier3+4 of many "small" object collections. Example: downloading 10^7 events of AODs (10^4 bytes each) means 100 GBytes; at 5 MBytes/sec per person that takes ~6 hours!
- This is still a rough, bottom-up, static, and hence conservative model. A dynamic Grid system may well require greater bandwidth.

2003 NSF ITRs: Globally Enabled Analysis Communities & Collaboratories
- Develop and build dynamic workspaces
- Construct autonomous communities operating within global collaborations
- Build private Grids to support scientific analysis communities, e.g. using agent-based peer-to-peer Web services
- Drive the democratization of science via the deployment of new technologies
- Empower small groups of scientists (teachers and students) to profit from and contribute to international big science

(Figure: Private Grids and Peer-to-Peer Sub-Communities in Global HEP)

SCIC in 2002-3: A Period of Intense Activity
- Formed WGs in March 2002; 9 meetings in 12 months
- Strong focus on the Digital Divide
- Presentations at meetings and workshops (e.g. LISHEP, APAN, AMPATH, ICTP and ICFA seminars)
- HENP more visible to governments: in the WSIS process
- Five reports, presented to ICFA on Feb. 13, 2003; see http://cern.ch/icfa-scic
  - Main Report: "Networking for HENP" [H. Newman et al.]
  - Monitoring WG Report [L. Cottrell]
  - Advanced Technologies WG Report [R. Hughes-Jones, O. Martin et al.]
  - Digital Divide Report [A. Santoro et al.]
  - Digital Divide in Russia Report [V. Ilyin]

SCIC in 2002-3: A Period of Intensive Activity (continued)

Web page: http://cern.ch/ICFA-SCIC/
- Monitoring: Les Cottrell (SLAC) (http://www.slac.stanford.edu/xorg/icfa/scic-netmon), with Richard Hughes-Jones (Manchester), Sergio Novaes (Sao Paulo), Sergei Berezhnev (RUHEP), Fukuko Yuasa (KEK), Daniel Davids (CERN), Sylvain Ravot (Caltech), Shawn McKee (Michigan)
- Advanced Technologies: R. Hughes-Jones, Olivier Martin (CERN), with Vladimir Korenkov (JINR, Dubna), H. Newman
- The Digital Divide: Alberto Santoro (Rio, Brazil), with V. Ilyin (MSU), Y. Karita (KEK), D.O. Williams (CERN); also V. White (FNAL), J. Ibarra and H. Alvarez (AMPATH), D. Son (Korea), H. Hoorani, S. Zaidi (Pakistan), S. Banerjee (India)
- Key Requirements: Harvey Newman and Charlie Young (SLAC)

HENP Networks: Status and Outlook: SCIC General Conclusions

The scale and capability of networks, their pervasiveness and range of applications in everyday life, and HENP's dependence on networks for its research, are all increasing rapidly. However, as the pace of network advances continues to accelerate, the gap between the economically "favored" regions and the rest of the world is in danger of widening. We must therefore work to close the Digital Divide, to make physicists from all world regions full partners in their experiments and in the process of discovery. This is essential for the health of our global experimental collaborations, our plans for future projects, and our field.
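The totals in the Tier0-Tier1 link estimate for the Hoffmann Report, and the AOD download example that follows it, are simple sums and divisions. A minimal Python sketch reproducing them is below; the component labels are paraphrased from the estimate, and the helper names `link_total` and `transfer_hours` are hypothetical.

```python
"""Reproduce the Tier0-Tier1 link total and the AOD download-time example."""

# (low, high) bandwidth per component of one Tier0-Tier1 link, in Gbps
COMPONENTS = {
    "Tier1-Tier0 data flow for analysis": (0.5, 1.0),
    "Tier2-Tier0 data flow for analysis": (0.2, 0.5),
    "Interactive collaborative sessions (30 peak)": (0.1, 0.3),
    "Remote interactive sessions (30 peak)": (0.1, 0.2),
    "Individual Tier3/Tier4 transfers": (0.8, 0.8),
}


def link_total(components: dict) -> tuple:
    """Sum the low and high ends separately; round away float noise."""
    low = sum(lo for lo, _ in components.values())
    high = sum(hi for _, hi in components.values())
    return round(low, 3), round(high, 3)


def transfer_hours(n_events: float, bytes_per_event: float, bytes_per_s: float) -> float:
    """Wall-clock hours to move n_events at a constant rate."""
    return n_events * bytes_per_event / bytes_per_s / 3600


if __name__ == "__main__":
    print("Per-link total (Gbps):", link_total(COMPONENTS))  # (1.7, 2.8)
    # 10^7 AOD events of 10^4 bytes each (100 GB) at 5 MBytes/s per person
    print(f"AOD download: {transfer_hours(1e7, 1e4, 5e6):.1f} hours")  # 5.6
```

The sum matches the 1.7-2.8 Gbps total, and the AOD case takes about 5.6 hours, which the estimate rounds to 6 hours.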
ICFA SCIC: R&E Backbone and International Link Progress
- GEANT pan-European backbone (http://www.dante.net/geant): now interconnects >31 countries; many trunks at 2.5 and 10 Gbps
- UK: SuperJANET core at 10 Gbps; 2.5 Gbps NY-London, with 622 Mbps to ESnet and Abilene
- France (IN2P3): 2.5 Gbps RENATER3 backbone from October 2002; Lyon-CERN link upgraded to 1 Gbps Ethernet; plan for dark fiber to CERN by end 2003
- SuperSINET (Japan): 10 Gbps IP and 10 Gbps wavelength core; Tokyo to NY links: 2 X 2.5 Gbps started
- CA*net4 (Canada): interconnects customer-owned dark fiber nets across Canada at 10 Gbps, started July 2002; "Lambda-Grids" by ~2004-5
- GWIN (Germany): 2.5 Gbps core; connects to the US at 2 X 2.5 Gbps; support for the Virtual SILK Highway project (satellite links to FSU republics)
- Russia: 155 Mbps links to Moscow (typically 30-45 Mbps for science); Moscow-Starlight link to 155 Mbps (US NSF + Russia support); Moscow-GEANT and Moscow-Stockholm links at 155 Mbps

R&E Backbone and Int'l Link Progress (continued)
- Abilene (Internet2): upgrade from 2.5 to 10 Gbps in 2002-3; encourages high-throughput use for targeted applications; FAST
- ESnet: upgrade to 2.5 and 10 Gbps links
- SLAC + IN2P3 (BaBar): typically ~400 Mbps throughput on US-CERN and Renater links; ~600 Mbps throughput is the BaBar target for the first half of 2003
- FNAL: ESnet link upgraded to 622 Mbps; plans for dark fiber to STARLIGHT, proceeding
- US-CERN: 622 Mbps in production from 8/02; 2.5G to 10G research triangle STARLIGHT-CERN-SURFnet (NL); [10 Gbps SNV-Starlight link on loan from Level(3), 10/02-2/03]
- IEEAF donation from Tyco: NY-Amsterdam completed 9/02; transpacific donation by mid-2003.
622 Mbps + 10G wavelength.
- US National Light Rail and USAWaves (10 Gbps DWDM-based fiber infrastructures): proceeding this year

SuperSINET Updated Map: October 2002 (figure: 10 GbE + 10 Gbps IP backbone; SuperSINET universities; many SINET nodes with 30-100 Mbps; GbE). 2003: OC192 and OC48 links coming into service; upgrade of links to US HENP labs.

Network Challenges and Requirements for High Throughput
- Low to extremely low packet loss (<< 0.01% for standard TCP); need to track down uncongested packet loss
- No local infrastructure bottlenecks or quality compromises: Gigabit Ethernet and eventually some 10 GbE "clear paths" between selected host pairs
- TCP/IP stack configuration and tuning absolutely required: large windows (~BW*RTT); possibly multiple streams; also need to consider fair sharing with other traffic
- Careful configuration of routers, servers and client systems
- Sufficient end-system CPU and disk I/O; NIC performance
- End-to-end monitoring and tracking of performance
- Close collaboration with local and "regional" network staffs
- New TCP protocol stacks engineered for stable, fair operation at 1-10 Gbps, eventually to 100 Gbps

HEP is Learning How to Use Gbps Networks Fully: Factor of 25-100 Gain in Max. Sustained TCP Throughput in 15 Months, on Some US+Transatlantic Routes
- 9/01: 105 Mbps with 30 streams SLAC-IN2P3; 102 Mbps with 1 stream CIT-CERN
- 1/09/02: 190 Mbps for one stream shared on two 155 Mbps links
- 3/11/02: 120 Mbps disk-to-disk with one stream on a 155 Mbps link (Chicago-CERN)
- 5/20/02: 450-600 Mbps SLAC-Manchester on OC12 with ~100 streams
- 6/1/02: 290 Mbps Chicago-CERN, one stream on OC12 (modified kernel)
- 9/02: 850, 1350, 1900 Mbps Chicago-CERN with 1, 2, 3 GbE streams on a 2.5G link
- 11-12/02 (FAST TCP): 930 Mbps in 1 stream California-CERN and California-AMS; 9.4 Gbps in 10 flows California-Chicago
- 2/03: 2.38 Gbps in 1 stream California-Geneva (99% link utilization)

FAST TCP: Baltimore/Sunnyvale
- RTT estimation: fine-grain timer
- Fast convergence to equilibrium
- Delay monitoring in equilibrium
- Pacing: reducing burstiness

Measurements (standard packet size; utilization averaged over > 1 hr; 3000 km path): average utilization of 88-95% with 1, 2, 7, 9 and 10 flows; fair sharing; fast recovery; 8.6 Gbps; 21.6 TB in 6 hours.

10GigE Data Transfer Trial

On Feb. 27-28, a terabyte of data was transferred in 3700 seconds by S. Ravot of Caltech between the Level3 PoP in Sunnyvale near SLAC and CERN, through the TeraGrid router at StarLight, from memory to memory, as a single TCP/IP stream at an average rate of 2.38 Gbps (using large windows and 9 kB "jumbo frames"). This beat the former record by a factor of ~2.5, and used the US-CERN link at 99% efficiency.

HENP Major Links: Bandwidth Roadmap (Scenario) in Gbps

| Year | Production | Experimental | Remarks |
|---|---|---|---|
| 2001 | 0.155 | 0.622-2.5 | SONET/SDH |
| 2002 | 0.622 | 2.5 | SONET/SDH; DWDM; GigE integration |
| 2003 | 2.5 | 10 | DWDM; 1 + 10 GigE integration |
| 2005 | 10 | 2-4 X 10 | λ switch; λ provisioning |
| 2007 | 2-4 X 10 | ~10 X 10; 40 Gbps | 1st gen. λ Grids |
| 2009 | ~10 X 10 or 1-2 X 40 | ~5 X 40 or ~20-50 X 10 | 40 Gbps λ switching |
| 2011 | ~5 X 40 or ~20 X 10 | ~25 X 40 or ~100 X 10 | 2nd gen. λ Grids; Terabit networks |
| 2013 | ~Terabit | ~MultiTbps | ~Fill one fiber |

Continuing the trend: ~1000 times bandwidth growth per decade. We are rapidly learning to use multi-Gbps networks dynamically.

HENP Lambda Grids: Fibers for Physics

Problem: extract "small" data subsets of 1 to 100 terabytes from 1 to 1000 petabyte data stores. Survivability of the HENP global Grid system, with hundreds of such transactions per day (circa 2007), requires that each transaction be completed in a relatively short time. Example: if each transaction takes 800 seconds, then:

| Transaction size (TB) | Net throughput (Gbps) |
|---|---|
| 1 | 10 |
| 10 | 100 |
| 100 | 1000 (capacity of fiber today) |

Summary: providing switching of 10 Gbps wavelengths within ~3-5 years, and terabit switching within 5-8 years, would enable "Petascale Grids with Terabyte transactions", to fully realize the discovery potential of major HENP programs, as well as other data-intensive fields.

National Light Rail Footprint (figure: 15808 terminal, regen or OADM sites and fiber routes at SEA, POR, SAC, NYC, CHI, OGD, DEN, SVL, CLE, FRE, PIT, BOS, WDC, KAN, NAS, STR, LAX, RAL, PHO, SDG, WAL, OLG, ATL, DAL, JAC)
- NLR buildout started November 2002
- Initially 4 10 Gb wavelengths; to 40 10 Gb waves in future
- Transition beginning now to optical, multi-wavelength R&E networks
- Also note: IEEAF/GEO plan for dark fiber in Europe

Optical Packet Routing Using Wavelength Conversion (D. Blumenthal, UC Santa Barbara)

(Figure labels: optical vs. electronic switching, microprocessor power and per-fiber capacity increases; a fast wavelength converter with a fast tunable laser and control signals, switching packets arriving at wavelengths 1 and 7 to wavelengths 5 and 2; circuit-switched, burst and packet modes. UCSB results: 80 Gbps optical packet routing with label swapping; incoming packets routed to 1542.0 nm, 1548.0 nm and 1556.0 nm over a 1st and 2nd hop.)

Rapid Network Advances and the Digital Divide
- The current generation of 2.5-10 Gbps network backbones arrived in the last 15 months in the US, Europe and Japan; major transoceanic links are also reaching 2.5-10 Gbps
- Capability increased ~4 times, i.e. 2-3 times Moore's Law
- This is a direct result of the continued precipitous fall of network prices for 2.5 or 10 Gbps in these regions; higher prices remain in the poorer regions
- There are strong prospects for further advances that will cause the Divide to become a Chasm, unless we act. For the rich regions:
  - 10GigE in campus and metro backbones; GigE/10GigE to desktops
  - Advances in protocols (TCP) to use networks at 1-10 Gbps+
  - DWDM: more 10G wavelengths and/or 40G speeds on a fiber
  - Owned or leased wavelengths: in the last mile, the region, and/or across the country

PingER Monitoring Sites (also IEPM-BW)
- Measurements from 38 monitors in 12 countries; 790 remote hosts in 70 countries; 3500 monitor-remote site pairs
- Measurements go back to Jan-95
- Reports on link reliability and quality
- Countries monitored contain 78% of the world population and 99% of Internet users
- Need to continue and strengthen the IEPM+ICTP monitoring efforts

Remote Sites History: Loss Quality (Cottrell)
- Fewer sites have very poor to dreadful performance
- More have good performance (< 1% loss)
- BUT < 20% of the world's population has good or acceptable performance

History: Throughput Quality Improvements from US

Bandwidth of TCP < MSS / (RTT × √Loss) (1)

80% annual improvement: a factor of ~100 in 8 years. Progress, but the Digital Divide is maintained.

(1) M. Mathis, J. Semke, J. Mahdavi, T. Ott, "The Macroscopic Behavior of the TCP Congestion Avoidance Algorithm", Computer Communication Review 27(3), July 1997.

NREN Core Network Size (Mbps-km): http://www.terena.nl/compendium/2002 (figure, logarithmic scale from 100 to 100M Mbps-km; categories Leading, Advanced, In Transition and Lagging; countries shown include It, Pl, Ch, Es, Fi, Nl, Cz, Gr, Ir, Ro, Ukr, Hu)

Work on the Digital Divide: Several Perspectives
- Identify & help solve technical problems: national, regional, last 10/1/0.1 km; peering
- SCIC questionnaire to experiment managements and lab directors
- Strong support for monitoring projects, such as IEPM
- Inter-regional proposals (example: Brazil): US NSF proposal (10/2002); EU @LIS proposal
- Work on policies and/or pricing: pk, in, br, cn, SE Europe, …; find ways to work with vendors, NRENs, and/or governments
- Use model cases: installation of new advanced fiber infrastructures; convince neighboring countries (Slovakia; Czech Republic; Poland, to 5k km of fiber)
- Exploit one-off solutions: e.g. the Virtual SILK Highway project (DESY/FSU satellite links); extend to a SE European site
- Work with other cognizant organizations: TERENA, Internet2, AMPATH, IEEAF, UN, GGF etc.; help with technical and/or political solutions

Digital Divide Sub-Committee: Questionnaire Response Extract

| Institution | Computing / networking needs related to HEP | Other |
|---|---|---|
| UERJ, Brazil | HEPGRID project presented for financial support to work on CMS; T3 then T1. Last-mile problem: need to reach RNP with good routing from UERJ | Waiting for approved funds to build a T3; in 2005/6 a T1 |
| Cinvestav, Mexico | Dedicated 2 Mbps link for HEP group needed (now 2 X 2 Mbps for the whole community; not enough) | |
| UNLP, Argentina | a) LAN upgrade to 100 Mbps; b) LAN-to-WAN upgrade to 4 Mbps | |
| JSI, Slovenia | Additional bandwidth needed for HEP; now 2 Mbps for > 1000 people | |
| QAU, Pakistan | In terms of bandwidth, need to upgrade, but no last-mile connection problem | High prices (telecom monopoly). Also plans for T2 at NUST |
| TIFR, India | Will have a Tier3 Grid node; need network bandwidth upgrades at a reasonable price | (Telecom monopoly) |
| IFT-UNESP, Brazil | Will maintain a farm for Monte Carlo studies and a Tier 3 Grid node; need more bandwidth | |
| University of Cyprus | HEP group intends to build a type T2 or T3 Grid node, and contribute to MC production. Need to upgrade network to Gigabit/sec. In principle there is no access limit to the network now, but daily traffic load is the real limitation | 34 Mbps link to GEANT. University pays for network |

International Connectivity Costs in the Different European Market Segments (Dai Davies, SERENATE Workshop, Feb. 5, 2003)

| Market segment | Number of countries | Cost range |
|---|---|---|
| Liberal market with transparent pricing | 8 | 1-1.4 |
| Liberal market with less transparent pricing structure | 7 | 1.8-3.3 |
| Emerging market without transparent pricing | 3 | 7.5-7.8 |
| Traditional monopolist market | 9 | 18-39 |

Telecom Monopolies Have Even Higher Prices in Low Income Countries (C. Casasus, CUDI (Mexico); W. St. Arnaud, CANARIE (Canada))
- Fewer market entrants; less competition
- Lower income; less penetration of new technologies
- Price-cap regulation creates cross-subsidies between customer groups: large customers (inelastic demand) subsidize small customers (elastic demand), so high-bandwidth services are very expensive
- Inefficient rights-of-way (ROW) regulation
- Inefficient spectrum allocation policies

Romania: 155 Mbps to GEANT and Bucharest; inter-city links of 2-6 Mbps, to 34 Mbps in 2003. Annual cost was > 1 MEuro. (Map labels: GEANT 155 Mbps; several 34 Mbps inter-city links; stranded intercity fiber assets.)

APAN Links in Asia, January 2003
- Typical intra-Asia international links: 0.5-45 Mbps
- Progress: Japan-Korea link from 8 Mbps to 1 Gbps in Jan. 2003; IEEAF 10G + 0.6G links by ~June 2003

Inhomogeneous Bandwidth Distribution in Latin America: CAESAR Report (6/02), J. Ibarra, AMPATH Workshop
- In progress: 622 Mbps Miami-Rio; CLARA project: Brazil, Mexico, Chile, Argentina
- Need to pay attention to end-point connections (e.g. UERJ Rio)
- International links: 4,236 Gbps fiber capacity into Latin America; only 0.071 Gbps used

Digital Divide Committee (figure): Gigabit Ethernet backbone; 100 Mbps link to GEANT.

Virtual Silk Highway Project, Managed by DESY and Partners
- Virtual SILK Highway project (from 11/01): NATO ($2.5M) and partners ($1.1M)
- Satellite links to 8 FSU republics in the South Caucasus and Central Asia
- In 2001-2 (pre-SILK), BW was 64-512 kbps; proposed VSAT to get 10-50 X BW for the same cost
- See www.silkproject.org
- [*] Partners: DESY, GEANT, CISCO, UNDP, US State Dept., Worldbank, UC London, Univ. Groningen
- SCIC: extend to a SE Europe site?
- NATO Science for Peace Program

IEEAF (http://www.ieeaf.org): "Cultivate and promote practical solutions to delivering scalable, universally available and equitable access to suitable bandwidth and necessary network resources in support of research and education collaborations." (Figure: Groningen Carrier Hotel; transatlantic, transpacific, intra-US and European initiatives; US-JP-KR-CN-SG Tokyo by ~6/03; NY-AMS done 9/02 (research).)

Global Medical Research Exchange Initiative: Bio-Medicine and Health Sciences (figure: layer 1, 2 and 3 spoke & hub sites, including St. Petersburg, Kazakhstan, Uzbekistan, NL, CA, MD, Barcelona, Greece, Ghana, Buenos Aires/Sao Paulo, Chennai, Navi Mumbai, CN, SG, Perth). Global Quilt Initiative - GMRE Initiative - 001. 2002-3: beginning a plan for a Global Research and Education Exchange for High Energy Physics.

Progress in Africa? Typically 0-7 bps per person. Limited by many external systemic factors: electricity; import duties; education; trade restrictions (Jensen, ICTP).

Networks, Grids and HENP
- The current generation of 2.5-10 Gbps backbones and international links arrived in the last 15 months in the US, Europe and Japan; capability increased ~4 times, i.e. 2-3 times Moore's Law
- Reliable high end-to-end performance of network applications is required (large transfers; Grids), and is achievable
- Achieving this more broadly for HENP requires: end-to-end monitoring; a coherent approach (IEPM project); getting high-performance (TCP) toolkits into users' hands; isolating and addressing specific problems
- Removing regional and last-mile bottlenecks and compromises in network quality is now on the critical path, in all world regions
- Digital Divide: network improvements are especially needed in SE Europe, South America, SE Asia, and Africa
- Work in concert with Internet2, TERENA, APAN, AMPATH; DataTAG, the Grid projects and the Global Grid Forum

SCIC Work in 2003
- Continue the Digital Divide focus:
  - Improve and systematize information in Europe, in cooperation with TERENA and SERENATE
  - More in-depth information on Asia, with APAN
  - More in-depth information on South America, with AMPATH
  - Begin work on Africa, with ICTP
- Set up an HENP networks web site and database: share information on problems, pricing, and example solutions
- Continue and if possible strengthen monitoring work (IEPM)
- Continue work on specific improvements: Brazil and South America; Romania; Russia; India; Pakistan; China
- An ICFA-sponsored statement at the World Summit on the Information Society (12/03 in Geneva), prepared by SCIC + CERN
- Watch requirements; the "Lambda" & "Grid Analysis" revolutions
- Discuss, and begin to create, a new "Culture of Collaboration"
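The TCP requirements listed under "Network Challenges and Requirements for High Throughput" (large windows of ~BW*RTT; very low packet loss) and the Mathis bound quoted in the throughput history can be made concrete with a few lines of Python. This is a sketch only: the 150 ms round-trip time and the MSS values (1460 bytes for a 1500-byte MTU, 8960 bytes for a 9000-byte jumbo frame) are illustrative assumptions, and the bound omits the constant of order one that appears in the Mathis et al. paper, in the same form as the slide.

```python
"""Window sizing and the Mathis et al. loss bound for one standard TCP flow.

Assumptions (illustrative, not from the slides): RTT = 150 ms; MSS = 1460 bytes
(1500-byte MTU) or 8960 bytes (9000-byte jumbo frames). The order-one constant
of the Mathis formula is omitted, matching BW < MSS/(RTT*sqrt(Loss)).
"""
import math


def window_bytes(bandwidth_bps: float, rtt_s: float) -> float:
    """Bandwidth-delay product: bytes in flight needed to keep the path full."""
    return bandwidth_bps * rtt_s / 8


def mathis_max_bps(mss_bytes: int, rtt_s: float, loss: float) -> float:
    """Upper bound on standard-TCP throughput, in bits/s."""
    return mss_bytes * 8 / (rtt_s * math.sqrt(loss))


def max_loss_for(target_bps: float, mss_bytes: int, rtt_s: float) -> float:
    """Largest loss rate at which the bound still permits target_bps."""
    return (mss_bytes * 8 / (rtt_s * target_bps)) ** 2


if __name__ == "__main__":
    rtt = 0.150  # assumed long-haul round-trip time, in seconds
    print(f"Window for 2.5 Gbps: {window_bytes(2.5e9, rtt) / 1e6:.1f} MB")
    print(f"Bound at 0.01% loss: {mathis_max_bps(1460, rtt, 1e-4) / 1e6:.1f} Mbps")
    for mss in (1460, 8960):
        print(f"MSS {mss}: loss must be <= {max_loss_for(1e9, mss, rtt):.1e} for 1 Gbps")
```

Under these assumptions a single standard TCP flow at 0.01% loss is limited to under 8 Mbps, and reaching 1 Gbps needs a loss rate of about 6 × 10^-9 with standard frames. Because the allowed loss scales as MSS squared, jumbo frames relax this by a factor of roughly 38, which is consistent with the emphasis on loss << 0.01%, large windows and jumbo frames.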
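Two pieces of transfer arithmetic in the slides, the Feb. 27-28 memory-to-memory record and the 800-second "Lambda Grid" transactions, can be checked with a short sketch. Reading "a terabyte" as 2^40 bytes is our assumption: it reproduces the quoted 2.38 Gbps, whereas 10^12 bytes would give about 2.16 Gbps. The transaction table, by contrast, matches decimal terabytes. The function names are hypothetical.

```python
"""Average-rate arithmetic for bulk transfers.

Assumption: "terabyte" may mean 10**12 bytes (decimal) or 2**40 bytes (binary);
both are computed so the quoted figures can be compared.
"""

TB_DECIMAL = 10**12
TB_BINARY = 2**40


def avg_rate_gbps(n_bytes: float, seconds: float) -> float:
    """Average throughput in Gbps for n_bytes moved in `seconds`."""
    return n_bytes * 8 / seconds / 1e9


def required_gbps(size_tb: float, seconds: float = 800, tb_bytes: int = TB_DECIMAL) -> float:
    """Throughput needed to complete a transaction of size_tb within `seconds`."""
    return avg_rate_gbps(size_tb * tb_bytes, seconds)


if __name__ == "__main__":
    # Feb. 27-28 record: one terabyte in 3700 s, single TCP stream
    print(f"2^40 bytes:  {avg_rate_gbps(TB_BINARY, 3700):.2f} Gbps")   # 2.38
    print(f"10^12 bytes: {avg_rate_gbps(TB_DECIMAL, 3700):.2f} Gbps")  # 2.16
    # Lambda Grid transactions completed in 800 s
    for size in (1, 10, 100):
        print(f"{size:>3} TB in 800 s -> {required_gbps(size):,.0f} Gbps")
```

The loop reproduces the 10, 100 and 1000 Gbps entries of the transaction table.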
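The growth-rate claims in the section ("~1000 times bandwidth growth per decade", "80% annual improvement, factor ~100 in 8 years", and a ~4 times capability increase in 15 months being "2-3 times Moore's Law") are consistent with simple compound growth. The sketch below checks them, assuming Moore's Law means a doubling every 18 to 24 months (our assumption; the slides do not define it).

```python
"""Compound-growth checks for the bandwidth trends quoted in the slides.

Assumption (not from the slides): Moore's Law doubles every 18-24 months.
"""


def annual_factor(total_factor: float, years: float) -> float:
    """Constant yearly multiplier that yields total_factor over `years`."""
    return total_factor ** (1 / years)


def compound(annual: float, years: float) -> float:
    """Total growth after `years` at a constant yearly multiplier."""
    return annual ** years


def moore(years: float, doubling_years: float) -> float:
    """Growth from Moore's Law alone over `years`."""
    return 2 ** (years / doubling_years)


if __name__ == "__main__":
    print(f"1000x per decade = x{annual_factor(1000, 10):.2f} per year")  # ~2.00
    print(f"80%/yr for 8 years = x{compound(1.8, 8):.0f}")               # ~110
    for doubling in (1.5, 2.0):
        ratio = 4 / moore(15 / 12, doubling)
        print(f"4x in 15 months vs {doubling}-yr doubling: {ratio:.1f}x Moore")
```

With an 18-month doubling the 15-month ratio is about 2.2, and with a 24-month doubling about 2.6, both inside the quoted 2-3 range.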