The Technopolis Group approach


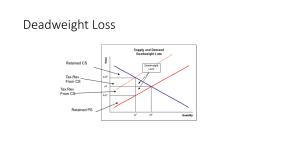

The Technopolis Group
Paul Simmonds, Director

Introduction
• Private limited company
• Founded in 1989
• A spinoff from SPRU (University of Sussex)
• In 2012, the Technopolis Group
  • €8 million net sales
  • 90 professional staff
  • 10 offices across EU
  • c. 50% relates to evaluation of research and innovation

Evaluation of research impact
• Research impact is not monolithic
  • Science or co-financed applied research
  • A research system, policy, institution or a programme
  • Policy objectives range from improved public services to incentives for increased effort by private sector
  • Societal impacts can be highly particular and not so readily quantified or monetised as with economic impacts
  • Our ability to characterise and measure these different phenomena is uneven
• Several ‘big’ methodological challenges
  • Diffuse and uncertain location / timing of outcomes and impacts
  • ‘Invisible’ key ingredient, knowledge spillovers
  • Small but concentrated interventions in dynamic open systems don’t lend themselves to experimental methodologies
  • Project fallacy
  • Deadweight and crowding out

Pragmatism
• Theory based evaluation (logframe) to deal with context and particularities
• Formal assessment of available data to devise practicable methodology
• Use of mixed methods, looking for convergence among data streams
• Co-production – not a legal audit – to add to texture and insight
• Always endeavour to add it up, to reveal the general AND specific
• Precautionary principle, test findings in semi-public domain

Solutions
• Tool-box has been improving with explosion in research related data and information services (scientometrics)
  • Bibliometrics and citation analyses
  • Social network analyses
  • Patent analyses
  • These work better at higher levels, but still useful at programme or institute level used with care
• Use of econometric techniques is increasing for industrial R&D
  • Control group surveys produce data to adjust for deadweight
  • Macro-economic models being developed to cope with technical change and estimate spillovers
• No magic bullet however
  • Bespoke, expert evaluators still dominate (tough to routinise and reach useful / credible conclusions)
  • Mixed methods with choice being tuned to the particular entity

Qualitative research remains key however
• For policies and larger programmes
  • Historical tracing of outcomes
  • Use of longitudinal case studies to capture full extent of influences and outcomes in selected areas
• For industrial programmes
  • Combine large-scale surveys to locate / weigh different types of attributable impacts with case studies of ‘known’ sample of outcomes
  • Use of factors established through very large and exhaustive studies, applied as ‘multipliers’ to very much simpler methodologies that content themselves with researching the more immediate and visible outcomes (misses the texture of particular outcomes of course)

Squaring circles
• Evaluation remains labour intensive, and costly
• Contain costs by being clear about purpose
• Don’t try to evaluate everything and capture all impacts
• LFA useful to deal with context, calibrate search and agree KPIs
• Ambition level – non-exhaustive: have we done enough to improve things to at least the minimum desired
• Recruit participants to the cause from the outset, co-production
• Much greater use of monitoring (self-reporting)
• Use of primary and secondary research (converging partial indicators, normalisation)
• Use of standard methodology / framework for consistency
• Exploit multipliers / facts from selected exhaustive studies

Applied research centres / competence centres
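
Worked illustration (not part of the original slides): the Solutions and Qualitative research slides mention using control group surveys to adjust for deadweight, and applying ‘multipliers’ taken from exhaustive studies to simpler measurements. The Python sketch below shows one simple way these two adjustments could be combined. The function names, the ratio-of-means definition of deadweight, the sample figures and the multiplier value are all assumptions made for illustration; they are not Technopolis's method.

```python
from statistics import mean


def deadweight_share(treated, control):
    """Assumed definition: share of the treated group's mean outcome
    that the control group achieved anyway, clamped to [0, 1]."""
    t = mean(treated)
    c = mean(control)
    if t <= 0:
        raise ValueError("treated mean must be positive")
    return min(max(c / t, 0.0), 1.0)


def net_impact(gross, deadweight, multiplier=1.0):
    """Gross observed outcome, reduced by deadweight, then scaled by a
    multiplier borrowed from a (hypothetical) exhaustive study."""
    return gross * (1.0 - deadweight) * multiplier


# Hypothetical survey responses (e.g. additional R&D spend per firm).
treated = [120.0, 80.0, 100.0]  # programme participants, mean 100
control = [30.0, 50.0, 40.0]    # comparable non-participants, mean 40

dw = deadweight_share(treated, control)
net = net_impact(gross=1_000_000.0, deadweight=dw, multiplier=1.5)
print(f"deadweight share: {dw:.2f}, net attributable impact: {net:,.0f}")
```

A ratio of group means is only one possible estimator. In practice the control group would need to be matched to participants, and the multiplier would only transfer between programmes of a similar kind.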