12 PACA, Assessing Creativity With Self

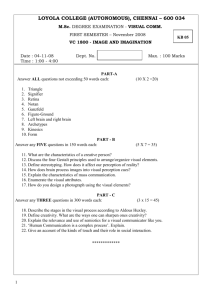
