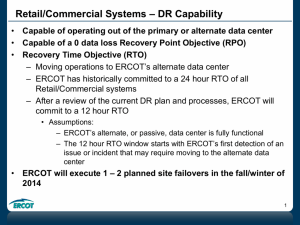

RMS Workshop
Retail Systems Disaster Recovery
ERCOT
May 6th, 2014

Addressing Retail DR Needs
Mandy Bauld

Addressing Retail DR Needs
1. Understand the Current State
2. Define & Understand the Requirement
3. Close the Gap (Next Steps)

ERCOT’s Primary Objective for Today
1. Understand the Current State
2. Define & Understand the Requirement
   • What is the RMS position on a Recovery Time Objective (RTO) for the retail systems in the event of retail system outages?
   • This information is required in order for ERCOT to effectively take any “next step” actions regarding DR for retail systems.
3. Close the Gap (Next Steps)

RMS Feedback Summarized (April 1, 2014 RMS Meeting)
1. Understand the Current State
   • Market needs education on what it takes for ERCOT to fail over, to level-set expectations of the current state
   • Market needs to understand dependencies and the decision-making process around a failover
   • Market needs information about ERCOT’s failover testing and planning for returning to the primary site
2. Define & Understand the Requirement
   • Various opinions expressed regarding downtime (need a limit on the downtime; need full operation within 24 hours; 24 hours downtime is unacceptable)
   • Even after systems were “back up” there were still configuration issues in the DR environment and still a backlog to work through… more than acceptable… it was not fully restored
   • Cleanup work was more than expected
   • The market’s manual work-around capability is limited, is not sustainable, and is not without risk
   • Incomplete or delayed communication has a direct impact on the market’s ability to make decisions and manage customer expectations through the event
3. Close the Gap (Next Steps)
   • Identify options that improve ERCOT’s and the market’s ability to meet the defined requirements

Agenda
• March 11th Outage Timeline
• Overview – Retail DR Capability & Process
• System Outage Communications
• System Outage Requirements
• Work-Around Processes
• Planned Failover Communications

March 11th Outage Timeline
Dave Pagliai

System Outage on March 11, 2014
Impacted Services
• Retail Transaction Processing
• MarkeTrak
• eService (service requests and settlement disputes)
• Retail Data Access & Transparency through MIS and ercot.com
• Settlement & Billing processes
• ercot.com
• Retail Flight Testing (CERT)
• MPIM
• Texas Renewables website (REC)
• NDCRC through the MIS

System Outage on March 11, 2014
Infrastructure Failure – Tuesday 03/11/14 @ 9:27 AM

Restoration of Market Facing Systems
Tuesday 03/11/14
• Texas Renewables (REC) – www.texasrenewables.com – 03/11/14 @ 4:01 PM
• Settlement & Billing processes – 03/11/14 @ 4:36 PM
• Ercot.com – 03/11/14 @ 5:20 PM
Wednesday 03/12/14
• Registration (Siebel) – 03/12/14 @ 1:15 PM
• MPIM – 03/12/14 @ 1:15 PM
• Retail Transaction Processing – 03/12/14 @ 2:10 PM
• MarkeTrak API – 03/12/14 @ 2:10 PM
• MarkeTrak GUI – 03/12/14 @ 2:10 PM
• MIS Retail Applications – 03/12/14 @ 2:10 PM
• NDCRC through the MIS – 03/12/14 @ 3:02 PM
• Retail Transaction Processing backlog complete – 03/12/14 @ 10:15 PM

System Outage on March 11, 2014
Restoration of Market Facing Systems (continued)
Thursday 03/13/14
• CERT – 03/13/14 @ 3:13 PM (this was actually unrelated to the infrastructure outage)
Friday 03/14/14
• eService (service requests and settlement disputes) – 03/14/14 @ 5:30 PM

Communications
• 1st Market Notice: Tuesday, 3/11 at 12:27 PM
• 1st Retail Market Conference Call: Tuesday, 3/11 at 1:00 PM
• Regular Market Notices and Retail Market Conference Calls through Friday, 3/14
• Final notice on Monday, 3/17

Challenges
• The outage impacted various internal ERCOT applications and tools required to communicate both internally and externally and to access procedural documentation
• ERCOT’s registration system failed to initialize in the alternate data center, requiring application servers to be rebuilt and delaying the restoration of several other Retail systems/services

Overview – Retail DR Capability & Process
Aaron Smallwood

Retail/Commercial Systems DR Background
• Pre-2011 – No DR environment or failover capability; the strategy was to utilize the iTest environment if an extended outage occurred
• December 2010 – Retail/Commercial Systems DR environment delivered and tested by the data center project
  – Evolving state of maturity
  – Strategy: failover in a major outage event when the primary system cannot be restored within the required timeframe
  – Recovery Time Objective (RTO): generally, 24 hour recovery of core systems and services
  – Recovery Point Objective (RPO): 0 data loss
  – Testing Strategy: recovery site operability tested annually

Retail/Commercial Systems – Current DR Capability
• Capable of operating out of the primary or alternate data center
• Capable of a 24 hour Recovery Time Objective (RTO)
  – Moving operations to an alternate data center within 24 hours
  – Historically have accomplished in less than 24 hours
• Capable of a 0 data loss Recovery Point Objective (RPO)
• Historical use of DR capability:
  – December 2012 – Unplanned failover
    • First “real” use of the DR environment
  – June 2013 – Planned failover
    • First transition back to the primary environment
  – March 2014 – Unplanned failover

ERCOT Retail DR – Context
For security reasons ERCOT cannot provide specific details regarding environments or business continuity plans.
• Outage events are like fingerprints… each is unique.
• Issues are initially handled as an IT Incident
  – First Priority – find the issue
  – Identify the scope of impacted systems and functions
  – Identify options and limitations for restoration and recovery
  – Make recommendations and coordinate restoration
  – Engage Business SMEs and Market Communications team
• Potential for an extended outage?
  – Mobilize the Disaster Management Team (DMT)
    • Executive management and Director-level leadership across the company
  – The DMT monitors the situation throughout the event to understand the scope of the problem and its impacts, and to make “the big decisions”
  – Examples: the 12/3/2012 and the 3/11/2014 outages

ERCOT Retail DR – Process
• The systems are not currently capable of “real-time” failover
• Once the failover decision is made, IT follows procedures to:
  – Verify readiness of the alternate environment
  – Control data replication streams during the transition
  – Configure integrated systems to point to the alternate environment
• Business follows procedures to:
  – Prioritize recovery efforts
  – Work with IT to determine where processes left off and where they should start after recovery
  – Determine and mitigate potential for data loss
  – Determine if/what work-arounds may be necessary upon recovery
  – Verify ability to use systems in the alternate environment
  – Support Market Communications

System Outage Communications
Ted Hailu

System Outage – Communications
• Initial and Subsequent Communications (when/how)
• Contingency Plans for email communication
• Communication outside the Market Notice process

System Outage Requirements
Dave Michelsen

System Outage Requirements
• What is the market’s priority for recovery of retail services?
  – What is the quantitative and/or qualitative impact of the unavailability of each service?
• What is the RTO for each? May need to consider:
  – Operating timelines
  – Importance of time of day (AM vs PM) or day of week and time of day
  – Tolerance level relative to invoking safety net procedures
  – Cost to support increases as the RTO decreases

System Outage Requirements

| Retail Service | Priority | RTO |
| --- | --- | --- |
| Retail Transaction Processing | | |
| Disputes & Issues | | |
| Flight Testing | | |
| Data Access & Transparency (reports/extracts) | | |
| Data Access & Transparency / Ad-Hoc Requests | | |

Work-Around Processes
Dave Michelsen

Workaround Process
• Are the current processes sufficient?
  – Move-In(s)
    • Switch Hold Removals
  – Move-Out(s)
  – Others?

Planned Failover Communications
Dave Michelsen

Planned Failover Communications
• Communication for a planned transition between sites
  – i.e., transition from the alternate data center back to the primary data center
  – Follow normal processes regarding outages/maintenance communication
  – Transition would be performed in a Sunday maintenance window

The Parking Lot

Parking Lot Items
• TBD
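
Worked example (not part of the original slides): the RTO discussion may be easier to ground with the elapsed downtime per service from the March 11th outage timeline. The sketch below is a minimal illustration in Python. It assumes downtime is measured from the infrastructure failure at 9:27 AM on 03/11/14 and compares each service against the general 24 hour RTO stated in the deck; the deck does not say where the RTO clock starts, so that choice is an assumption. The names `OUTAGE_START`, `RESTORED`, `downtime`, `exceeds_rto`, and `fmt` are hypothetical and chosen only for this example. CERT is left out because the deck states its restoration was unrelated to the infrastructure outage.

```python
from datetime import datetime, timedelta

# Infrastructure failure time, taken from the outage timeline slide.
OUTAGE_START = datetime(2014, 3, 11, 9, 27)

# General RTO from the DR background slide. Measuring it from the
# infrastructure failure is an assumption made for this illustration.
RTO = timedelta(hours=24)

# Restoration times copied from the timeline slides (CERT omitted).
RESTORED = {
    "Texas Renewables (REC)": datetime(2014, 3, 11, 16, 1),
    "Settlement & Billing processes": datetime(2014, 3, 11, 16, 36),
    "ercot.com": datetime(2014, 3, 11, 17, 20),
    "Registration (Siebel)": datetime(2014, 3, 12, 13, 15),
    "MPIM": datetime(2014, 3, 12, 13, 15),
    "Retail Transaction Processing": datetime(2014, 3, 12, 14, 10),
    "MarkeTrak API": datetime(2014, 3, 12, 14, 10),
    "MarkeTrak GUI": datetime(2014, 3, 12, 14, 10),
    "MIS Retail Applications": datetime(2014, 3, 12, 14, 10),
    "NDCRC through the MIS": datetime(2014, 3, 12, 15, 2),
    "eService": datetime(2014, 3, 14, 17, 30),
}


def downtime(restored_at: datetime) -> timedelta:
    """Elapsed time between the infrastructure failure and restoration."""
    return restored_at - OUTAGE_START


def exceeds_rto(service: str) -> bool:
    """True if the service was down longer than the assumed 24 hour RTO."""
    return downtime(RESTORED[service]) > RTO


def fmt(delta: timedelta) -> str:
    """Format a duration as hours and minutes, e.g. '28h 43m'."""
    hours, minutes = divmod(int(delta.total_seconds()) // 60, 60)
    return f"{hours}h {minutes:02d}m"


if __name__ == "__main__":
    for service, restored_at in RESTORED.items():
        flag = "over RTO" if exceeds_rto(service) else "within RTO"
        print(f"{service:35s} {fmt(downtime(restored_at)):>9s}  {flag}")
```

Under these assumptions, the three services restored on Tuesday fall within 24 hours, while everything restored on Wednesday or later exceeds it. A per-service RTO, as proposed in the requirements table, could replace the single `RTO` constant with a value for each service.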