Avoiding Communication in Sparse Matrix

Avoiding Communication in Sparse Matrix-Vector Multiply (SpMV)

• Sequential and shared-memory performance is dominated by off-chip communication

• Distributed-memory performance is dominated by network communication

The problem: SpMV has low arithmetic intensity

SpMV Arithmetic Intensity (1)

Example: dimension n = 5, tridiagonal A, number of nonzeros nnz = 3n-2 = 13

Cost of one SpMV:

• floating point operations: 2⋅nnz

• floating point words moved: nnz + 2⋅n

Assumption: A is invertible ⇒ nonzero in every row ⇒ nnz ≥ n

Note: 2⋅nnz overcounts flops by up to n (eg, diagonal A)
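The counting model above can be written down directly. This is a minimal sketch, assuming the slide's model of one multiply and one add per stored nonzero and one word each for every entry of A, x, and y; the function name `spmv_costs` is made up for illustration.

```python
def spmv_costs(n: int, nnz: int) -> dict:
    """Flop and word counts for one SpMV y = A*x under the slide's model."""
    flops = 2 * nnz        # one multiply and one add per stored nonzero
    words = nnz + 2 * n    # read the nnz values of A, read x, write y
    return {"flops": flops, "words": words, "intensity": flops / words}


n = 5
tridiag = spmv_costs(n, 3 * n - 2)  # tridiagonal A: nnz = 3n - 2
diag = spmv_costs(n, n)             # diagonal A: nnz = n, the smallest allowed

print(tridiag)
print(diag)
```

The intensity is measured in flops per word here; it stays below 2 for any n and nnz, because the vector traffic 2n never vanishes.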

SpMV Arithmetic Intensity (2)

[Figure: kernels ordered by arithmetic intensity, with more flops per byte toward the right.
O( 1 ): SpMV, BLAS1,2; Stencils (PDEs); Lattice Methods.
O( lg(n) ): FFTs.
O( n ): Dense Linear Algebra (BLAS3); Particle Methods.]

Arithmetic intensity := Total flops / Total DRAM bytes

• Upper bound: compulsory traffic
– further diminished by conflict or capacity misses

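For SpMV:

$$\frac{\text{flops}}{\text{words}} \le 2 \cdot \left( \frac{nnz}{nnz + 2n} \right) \xrightarrow{\; nnz = \omega(n) \;} 2$$

| | SpMV |
|---|---|
| flops | 2⋅nnz |
| words moved | nnz + 2⋅n |
| arith. intensity | 2 |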

SpMV Arithmetic Intensity (3)

[Figure: roofline plot for the Opteron 2356 (Barcelona). Vertical axis: attainable gflop/s, 0.5 to 256.0 (log scale). Horizontal axis: actual flop:byte ratio, 1/8 to 16 (log scale). Ceiling: peak double precision floating-point rate.]

• 2 flops per word of data
• 8 bytes per double
• flop:byte ratio ≤ ¼
• Can’t beat 1/16 of peak!

In practice, A requires at least nnz words:
• indexing data, zero padding
• depends on nonzero structure, eg, banded or dense blocks
• depends on data structure, eg,
  – CSR/C, COO, SKY, DIA, JDS, ELL, DCS/C, …
  – blocked generalizations
• depends on optimizations, eg, index compression or variable block splitting

How to do more flops per byte?

Reuse data (x, y, A) across multiple SpMVs
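The bound on this slide follows the usual roofline rule: attainable rate = min(peak rate, flop:byte ratio × memory bandwidth). Below is a minimal sketch of that rule. The peak rate (64 gflop/s) and bandwidth (16 GB/s) are illustrative assumptions chosen for round numbers, not measured values for the Opteron 2356.

```python
def attainable_gflops(flops_per_byte: float, peak_gflops: float,
                      bandwidth_gbytes: float) -> float:
    """Roofline bound: limited by compute or by memory bandwidth."""
    return min(peak_gflops, flops_per_byte * bandwidth_gbytes)


# SpMV: at most 2 flops per 8-byte double moved.
spmv_intensity = 2 / 8

# Hypothetical machine parameters (assumptions for illustration only).
peak = 64.0        # gflop/s
bandwidth = 16.0   # GB/s

bound = attainable_gflops(spmv_intensity, peak, bandwidth)
print(bound, bound / peak)
```

With these assumed numbers the bound is 4 gflop/s, one sixteenth of the assumed peak. Raising the flop:byte ratio by reusing data is the only way to move the bound upward under this model.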

Combining multiple SpMVs

(1) k independent SpMVs

$[y_0, y_1, \ldots, y_k] = A \cdot [x_0, x_1, \ldots, x_k]$

Used in:
• Block Krylov methods
• Krylov methods for multiple systems (AX = B)

(2) k dependent SpMVs

$[x_1, x_2, \ldots, x_k] = A \cdot [x_0, x_1, \ldots, x_{k-1}] = [A x_0, A^2 x_0, \ldots, A^k x_0]$

Used in:
• s-step Krylov methods
• Communication-avoiding Krylov methods
…to compute k Krylov basis vectors

Def. Krylov space (given A, x, s): $K_s(A, x) := \operatorname{span}(x, Ax, \ldots, A^s x)$

(3) k dependent SpMVs, in-place variant

$x = A^k x$

Used in:
• multigrid smoothers, power method
• Related to Streaming Matrix Powers optimization for CA-Krylov methods

What if we can amortize cost of reading A over k SpMVs?
• (k-fold reuse of A)

(1) k independent SpMVs (SpMM)

$[y_0, y_1, \ldots, y_k] = A \cdot [x_0, x_1, \ldots, x_k]$

SpMM optimization:
• Compute row-by-row
• Stream A only once

| | 1 SpMV | k independent SpMVs | k independent SpMVs (using SpMM) |
|---|---|---|---|
| flops | 2⋅nnz | 2k⋅nnz | 2k⋅nnz |
| words moved | nnz + 2n | k⋅nnz + 2kn | 1⋅nnz + 2kn |
| arith. intensity, nnz = ω(n) | 2 | 2 | 2k |
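The row-by-row SpMM idea can be sketched as follows. This is an illustrative pure-NumPy loop over a CSR matrix, not a tuned kernel; the function name `csr_spmm` and the small test matrix are assumptions made for the example. Each stored nonzero of A is read once and applied to all k source vectors.

```python
import numpy as np


def csr_spmm(indptr, indices, data, X):
    """Y = A @ X for a CSR matrix A, visiting each nonzero of A exactly once."""
    n_rows = len(indptr) - 1
    Y = np.zeros((n_rows, X.shape[1]))
    for i in range(n_rows):                        # compute row by row
        for q in range(indptr[i], indptr[i + 1]):  # nonzeros of row i
            # One nonzero A[i, j] updates all k right-hand sides at once.
            Y[i, :] += data[q] * X[indices[q], :]
    return Y


# A = [[2, 0, 1], [0, 3, 0], [4, 0, 5]] stored in CSR form.
indptr = np.array([0, 2, 3, 5])
indices = np.array([0, 2, 1, 0, 2])
data = np.array([2.0, 1.0, 3.0, 4.0, 5.0])
A_dense = np.array([[2.0, 0.0, 1.0], [0.0, 3.0, 0.0], [4.0, 0.0, 5.0]])

X = np.arange(6, dtype=float).reshape(3, 2)   # k = 2 source vectors
Y = csr_spmm(indptr, indices, data, X)
print(Y)
```

Storing X and Y row-major, as here, keeps the k values touched by one nonzero contiguous in memory.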

(2) k dependent SpMVs (Akx)

$[x_1, x_2, \ldots, x_k] = A \cdot [x_0, x_1, \ldots, x_{k-1}] = [A x_0, A^2 x_0, \ldots, A^k x_0]$

Naïve algorithm (no reuse)

$A^k x$ (Akx) optimization:
• Must satisfy data dependencies while keeping working set in cache

| | 1 SpMV | k dependent SpMVs | k dependent SpMVs (using Akx) |
|---|---|---|---|
| flops | 2⋅nnz | 2k⋅nnz | 2k⋅nnz |
| words moved | nnz + 2n | k⋅nnz + 2kn | 1⋅nnz + (k+1)n |
| arith. intensity, nnz = ω(n) | 2 | 2 | 2k |

(2) k dependent SpMVs (Akx)

Akx algorithm (reuse nonzeros of A):

[Figure: schematic of the Akx algorithm; the surviving labels are the entry indices 1, 8, 10, 13, 18, 20, 23, 28, 30, 33, 40.]

(3) k dependent SpMVs, in-place (Akx, last-vector-only)

$x = A^k x$

Last-vector-only Akx optimization:
• Reuses matrix and vector k times, instead of once.
• Overwrites intermediates without memory traffic
• Attains O(k) reuse, even when nnz < n
  – eg, A is a stencil (implicit values and structure)

| | 1 SpMV | k dependent SpMVs, in-place | Akx, last-vector-only |
|---|---|---|---|
| flops | 2⋅nnz | 2k⋅nnz | 2k⋅nnz |
| words moved | nnz + 2n | k⋅nnz + 2kn | 1⋅nnz + 2n |
| arith. intensity, nnz = anything | 2 | 2 | 2k |

Combining multiple SpMVs

(summary of sequential results)

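| Problem | flops | words moved | optimization | words moved (optimized) | savings, nnz = ω(n) | savings, nnz = c⋅n | savings, nnz = o(n) |
|---|---|---|---|---|---|---|---|
| SpMV | 2⋅nnz | nnz + 2n | - | - | - | - | - |
| k independent SpMVs | 2k⋅nnz | k⋅nnz + 2kn | SpMM | nnz + 2kn | k | ≤ min(c, k) | 1 |
| k dependent SpMVs | 2k⋅nnz | k⋅nnz + 2kn | Akx | nnz + (k+1)n | k | ≤ min(c, k) | 2 |
| k dependent SpMVs, in-place | 2k⋅nnz | k⋅nnz + 2kn | Akx, last-vector-only | nnz + 2n | k | k | k |

The savings columns give the relative bandwidth savings as n, nnz ⟶ ∞.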
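The words-moved formulas in this summary can be evaluated for concrete sizes. The sketch below simply encodes them; the function names and the sample values of n, nnz, and k are assumptions chosen for illustration.

```python
def words_moved(n: int, nnz: int, k: int) -> dict:
    """Words moved under the slide's model for k SpMVs with the same A."""
    return {
        "naive": k * nnz + 2 * k * n,   # k separate SpMVs
        "spmm": nnz + 2 * k * n,        # k independent vectors, A read once
        "akx": nnz + (k + 1) * n,       # k dependent vectors, A read once
        "akx_last": nnz + 2 * n,        # only the final vector is written
    }


def bandwidth_savings(n: int, nnz: int, k: int) -> dict:
    """Ratio of naive traffic to optimized traffic for each optimization."""
    w = words_moved(n, nnz, k)
    return {name: w["naive"] / w[name] for name in ("spmm", "akx", "akx_last")}


n, nnz, k = 10**6, 10**8, 8    # 100 nonzeros per row on average
savings = bandwidth_savings(n, nnz, k)
for name, ratio in savings.items():
    print(f"{name}: {ratio:.2f}x")
```

In this model the last-vector-only variant saves exactly a factor of k for any nnz, since k⋅nnz + 2kn = k⋅(nnz + 2n); the other two approach k only when the matrix traffic dominates the vector traffic.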

Avoiding Serial Communication

• Reduce compulsory misses by reusing data:
  – more efficient use of memory
  – decreased bandwidth cost (Akx, asymptotic)
• Must also consider latency cost
  – How many cachelines?
  – depends on contiguous accesses
• When k = 16 ⇒ compute-bound?
  – Fully utilize memory system
  – Avoid additional memory traffic like capacity and conflict misses
  – Fully utilize in-core parallelism
  – (Note: still assumes no indexing data)
• In practice, complex performance tradeoffs.
  – Autotune to find best k

[Figure: roofline plot for the Opteron 2356 (Barcelona). Vertical axis: attainable gflop/s, 0.5 to 256.0. Horizontal axis: actual flop:byte ratio, 1/8 to 16. Ceiling: peak DP.]

On being memory bound

• Assume that off-chip communication (cache to memory) is bottleneck,
  – eg, that we express sufficient ILP to hide hits in L3
• When your multicore performance is bound by memory operations, is it because of latency or bandwidth?
  – Latency-bound: expressed concurrency times the memory access rate does not fully utilize the memory bandwidth
    • Traversing a linked list, pointer-chasing benchmarks
  – Bandwidth-bound: expressed concurrency times the memory access rate exceeds the memory bandwidth
    • SpMV, stream benchmarks
  – Either way, manifests as pipeline stalls on loads/stores (suboptimal throughput)
• Caches can improve memory bottlenecks – exploit them whenever possible
  – Avoid memory traffic when you have temporal or spatial locality
  – Increase memory traffic when cache line entries are unused (no locality)
• Prefetchers can allow you to express more concurrency
  – Hide memory traffic when your access pattern has sequential locality (clustered or regularly strided access patterns)

Distributed-memory parallel SpMV

• Harder to make general statements about performance:
  – Many ways to partition x, y, and A to P processors
  – Communication, computation, and load-balance are partition-dependent
  – What fits in cache? (What is “cache”?!)
• A parallel SpMV involves 1 or 2 rounds of messages
  – (Sparse) collective communication, costly synchronization
  – Latency-bound (hard to saturate network bandwidth)
  – Scatter entries of x and/or gather entries of y across network
  – k SpMVs cost O(k) rounds of messages
• Can we do k SpMVs in one round of messages?
  – k independent vectors? SpMM generalizes
    • Distribute all source vectors in one round of messages
    • Avoid further synchronization
  – k dependent vectors? Akx generalizes
    • Distribute source vector plus additional ghost zone entries in one round of messages
    • Avoid further synchronization
  – Last-vector-only Akx ≈ standard Akx in parallel
    • No savings discarding intermediates

Distributed-memory parallel Akx

Example: tridiagonal matrix, k = 3, n = 40, p = 4

Naïve algorithm: k messages per neighbor

Akx optimization: 1 message per neighbor

[Figure, one panel per algorithm: the vector is split into four blocks starting at entries 0, 10, 20, and 30, owned by processors 1 to 4.]

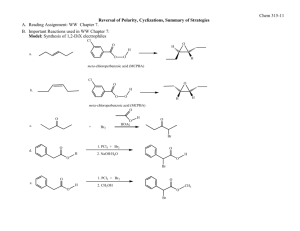
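The one-message-per-neighbor idea can be simulated in a single process. In the sketch below each "processor" copies its block of x plus k ghost entries on each side (standing in for the single message), then applies the tridiagonal operator k times with no further exchange. The constant-coefficient stencil (a, b, c), the zero boundary condition, and all function names are assumptions made for the example.

```python
import numpy as np


def tridiag_apply(x, a, b, c):
    """y = A x for tridiagonal A with constant sub/main/super diagonals a, b, c."""
    y = b * x
    y[1:] += a * x[:-1]
    y[:-1] += c * x[1:]
    return y


def akx_naive(x, k, a, b, c):
    """Reference: k global SpMVs, returning [A x, A^2 x, ..., A^k x]."""
    out = []
    for _ in range(k):
        x = tridiag_apply(x, a, b, c)
        out.append(x)
    return out


def akx_ghost(x, p, k, a, b, c):
    """Each block fetches k ghost entries per side once, then works locally."""
    n = len(x)
    size = n // p                      # assumes p divides n
    result = [np.empty(n) for _ in range(k)]
    for q in range(p):
        lo, hi = q * size, (q + 1) * size
        glo, ghi = max(lo - k, 0), min(hi + k, n)
        work = x[glo:ghi].copy()       # owned block plus ghost zone
        for j in range(k):
            # Entries near an artificial window edge become stale, one more
            # per step, but never reach the owned block within k steps.
            work = tridiag_apply(work, a, b, c)
            result[j][lo:hi] = work[lo - glo:hi - glo]
    return result


n, p, k = 40, 4, 3
x0 = np.linspace(1.0, 2.0, n)
reference = akx_naive(x0, k, -1.0, 2.0, -1.0)
blocked = akx_ghost(x0, p, k, -1.0, 2.0, -1.0)
print(max(np.max(np.abs(u - v)) for u, v in zip(reference, blocked)))
```

The price of the single exchange is redundant work: every block recomputes entries inside its ghost zone that a neighbor also computes.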

Polynomial Basis for Akx

• Today we considered the special case of the monomials: $[Ax, A^2 x, \ldots, A^k x]$
• Stability problems - tends to lose linear independence
  – Converges to principal eigenvector
• Given A, x, k > 0, compute $[p_1(A)x, p_2(A)x, \ldots, p_k(A)x]$, where $p_j(A)$ is a degree-j polynomial in A.
  – Choose p for stability.
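The loss of linear independence can be observed numerically by comparing the condition number of the monomial basis with that of another polynomial basis. The sketch below uses shifted and scaled Chebyshev polynomials as one possible choice of p_j; that choice, the diagonal test matrix, and the exact spectral bounds passed in are all assumptions for illustration.

```python
import numpy as np


def monomial_basis(A, x, k):
    """Columns [A x, A^2 x, ..., A^k x]."""
    cols = [x]
    for _ in range(k):
        cols.append(A @ cols[-1])
    return np.column_stack(cols[1:])


def chebyshev_basis(A, x, k, lmin, lmax):
    """Columns T_j(B) x for j = 1..k, with B = (2A - (lmax + lmin) I) / (lmax - lmin)."""
    def apply_B(v):
        return (2.0 * (A @ v) - (lmax + lmin) * v) / (lmax - lmin)

    cols = [x, apply_B(x)]
    for _ in range(k - 1):
        # Three-term recurrence T_{j+1}(t) = 2 t T_j(t) - T_{j-1}(t).
        cols.append(2.0 * apply_B(cols[-1]) - cols[-2])
    return np.column_stack(cols[1:])


n, k = 200, 10
A = np.diag(np.linspace(0.1, 1.0, n))   # spectrum known exactly: [0.1, 1.0]
x = np.ones(n) / np.sqrt(n)

cond_monomial = np.linalg.cond(monomial_basis(A, x, k))
cond_chebyshev = np.linalg.cond(chebyshev_basis(A, x, k, 0.1, 1.0))
print(f"monomial: {cond_monomial:.2e}, Chebyshev: {cond_chebyshev:.2e}")
```

In practice the spectral bounds are estimates, so the improvement depends on how good those estimates are.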

Tuning space for Akx

• DLP optimizations:
  – vectorization
• ILP optimizations:
  – Software pipelining
  – Loop unrolling
  – Eliminate branches, inline functions
• TLP optimizations:
  – Explicit SMT
• Memory system optimizations:
  – NUMA-aware affinity
  – Software prefetching
  – TLB blocking
• Memory traffic optimizations:
  – Streaming stores (cache bypass)
  – Array padding
  – Cache blocking
  – Index compression
  – Blocked sparse formats
  – Stanza encoding

•

•

– Topology-aware sparse collectives

– Hypergraph partitioning

– Dynamic load balancing

– Overlapped communication and computation

Algorithmic variants:

– Compositions of distributed-memory parallel,

shared memory parallel, sequential algorithms

– Streaming or explicitly buffered workspace

– Explicit or implicit cache blocks

– Avoiding redundant computation/storage/traffic

– Last-vector-only optimization

– Remove low-rank components (blocking covers)

– Different polynomial bases pj(A)

Other:

– Preprocessing optimizations

– Extended precision arithmetic

– Scalable data structures (sparse representations)

– Dynamic value and/or pattern updates

Krylov subspace methods (1)

Want to solve Ax = b (still assume A is invertible)

How accurately can you hope to compute x?

• Depends on condition number of A and the accuracy of your inputs A and b

• cond(A) := ‖A‖ ⋅ ‖A⁻¹‖, the condition number with respect to matrix inversion

• cond(A) – how much A distorts the unit sphere (in some norm)

• 1/cond(A) – how close A is to a singular matrix

• expect to lose log10(cond(A)) decimal digits relative to the relative accuracy of the inputs

• Idea: Make successive approximations, terminate when accuracy is sufficient

• How good is an approximation x0 to x?

• Error: e0 = x0 - x

• If you know e0, then compute x = x0 - e0 (and you’re done.)

• Finding e0 is as hard as finding x; assume you never have e0

• Residual: r0 = b – Ax0

• r0 = 0 ⇔ e0 = 0, but a small r0 does not by itself guarantee a small e0

$$\frac{\|e_0\|}{\|x_0\|} \le \operatorname{cond}(A) \cdot \frac{\|r_0\|}{\|A\| \cdot \|x_0\|}$$

cond(A) small ⇒ (r0 small ⇒ e0 small)
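The bound relating error and residual can be checked numerically. Since r0 = b - Ax0 = -A e0, we have ‖e0‖ ≤ ‖A⁻¹‖ ⋅ ‖r0‖, which is the stated inequality after dividing by ‖x0‖. The sketch below verifies it in the 2-norm on a random test problem; the matrix, the perturbation size, and the seed are arbitrary assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)
n = 50

A = rng.standard_normal((n, n)) + n * np.eye(n)   # safely invertible test matrix
x = rng.standard_normal(n)                        # exact solution
b = A @ x

x0 = x + 1e-3 * rng.standard_normal(n)            # approximate solution
e0 = x0 - x                                       # error (unknown in practice)
r0 = b - A @ x0                                   # residual (computable)

norm_A = np.linalg.norm(A, 2)
cond_A = np.linalg.cond(A, 2)

lhs = np.linalg.norm(e0) / np.linalg.norm(x0)
rhs = cond_A * np.linalg.norm(r0) / (norm_A * np.linalg.norm(x0))
print(f"relative error {lhs:.3e} <= bound {rhs:.3e}")
```

The bound uses only quantities available without knowing x, which is why the residual is the practical stopping criterion.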

Krylov subspace methods (2)

1. Given approximation xold, refine by adding a correction xnew = xold + v

• Pick v as the ‘best possible choice’ from search space V

• Krylov subspace methods: $V := K_s(A, r_0) := \operatorname{span}(r_0, A r_0, \ldots, A^s r_0)$

2. Expand V by one dimension

3. xold = xnew. Repeat.

• Once dim(V) = dim(A) = n, xnew should be exact

Why Krylov subspaces?

• Cheap to compute (via SpMV)

• Search spaces V coincide with the residual spaces

  – makes it cheaper to avoid repeating search directions

• K(A,z) = K(c1A - c2I, c3z) ⇒ invariant under scaling, translation

• Without loss, assume |λ(A)| ≤ 1

• As s increases, Ks gets closer to the dominant eigenvectors of A

• Intuitively, corrections v should target ‘largest-magnitude’ residual components

Convergence of Krylov methods

• Convergence = process by which residual goes to zero

– If A isn’t too poorly conditioned, error should be small.

• Convergence only governed by the angles θm between spaces Km and AKm

– How fast does sin(θm) go to zero?

– Not eigenvalues! You can construct a unitary system that results in the same sequence of residuals r0, r1, …

– If A is normal, λ(A) provides bounds on convergence.

• Preconditioning

– Transforming A with hopes of ‘improving’ λ(A) or cond(A)

Conjugate Gradient (CG) Method

Given starting approximation $x_0$ to $Ax = b$, let $p_0 := r_0 := b - A x_0$.

For m = 0, 1, 2, … until convergence, do:

$\alpha_m := \dfrac{\langle r_m, r_m \rangle}{\langle r_m, A p_m \rangle}$

$x_{m+1} := x_m + \alpha_m p_m$ (Correct candidate solution along search direction)

$r_{m+1} := r_m - \alpha_m A p_m$ (Update residual according to new candidate solution)

$\beta_m := \dfrac{\langle r_{m+1}, r_{m+1} \rangle}{\langle r_m, r_m \rangle}$

$p_{m+1} := r_{m+1} + \beta_m p_m$ (Expand search space)

Vector iterates:
• $x_m$ = candidate solution
• $r_m$ = residual
• $p_m$ = search direction

Communication-bound:
• 1 SpMV operation per iteration
• 2 dot products per iteration

1. Reformulate to use Akx
2. Do something about the dot products
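The CG loop above translates almost line for line into NumPy. This is a minimal dense sketch for a symmetric positive definite A; the stopping rule, the function name `cg`, and the test matrix are assumptions made for the example. The step length uses ⟨r, Ap⟩ as written on the slide, which equals the more common ⟨p, Ap⟩ in exact arithmetic.

```python
import numpy as np


def cg(A, b, x0, tol=1e-10, max_iter=1000):
    """Conjugate gradient for symmetric positive definite A."""
    x = x0.copy()
    r = b - A @ x
    p = r.copy()
    rr = r @ r
    b_norm = np.linalg.norm(b)
    for m in range(max_iter):
        if np.sqrt(rr) <= tol * b_norm:
            return x, m
        Ap = A @ p                  # the one SpMV per iteration
        alpha = rr / (r @ Ap)       # first dot product
        x = x + alpha * p           # correct candidate solution
        r = r - alpha * Ap          # update residual
        rr_new = r @ r              # second dot product
        beta = rr_new / rr
        p = r + beta * p            # expand search space
        rr = rr_new
    return x, max_iter


n = 100
A = 4.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)   # SPD tridiagonal
b = np.random.default_rng(0).standard_normal(n)

x, iterations = cg(A, b, np.zeros(n))
print(iterations, np.linalg.norm(b - A @ x) / np.linalg.norm(b))
```

Each pass through the loop needs the result of the previous dot product before the next SpMV can start, which is the dependency the Akx reformulation is meant to break.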

Applying Akx to CG (1)

1. Ignore x, α, and β, for now

2. Unroll the CG loop s times (in your head)

3. Observe that:

$$r_{m+s},\, p_{m+s} \in \operatorname{span}(p_m, A p_m, \ldots, A^s p_m) \oplus \operatorname{span}(r_m, A r_m, \ldots, A^{s-1} r_m)$$

ie, two Akx calls

4. This means we can represent $r_{m+j}$ and $p_{m+j}$ symbolically as linear combinations:

$$r_{m+j} =: [p_m, A p_m, \ldots, A^j p_m, r_m, A r_m, \ldots, A^{j-1} r_m]\, \hat r_j =: [P_j, R_{j-1}]\, \hat r_j$$

$$p_{m+j} =: [p_m, A p_m, \ldots, A^j p_m, r_m, A r_m, \ldots, A^{j-1} r_m]\, \hat p_j =: [P_j, R_{j-1}]\, \hat p_j$$

The columns of $[P_j, R_{j-1}]$ are vectors of length n; $\hat r_j$ and $\hat p_j$ are vectors of length 2j+1.

5. And perform SpMV operations symbolically (same holds for $R_{j-1}$):

$$A P_j = A\,[p_m, A p_m, \ldots, A^j p_m] = [A p_m, A^2 p_m, \ldots, A^{j+1} p_m] = P_{j+1} \begin{bmatrix} 0 & 0 & \cdots & 0 \\ 1 & & & \\ & 1 & & \\ & & \ddots & \\ & & & 1 \end{bmatrix} =: P_{j+1} S_j$$

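The identity A P_j = P_{j+1} S_j can be checked on a small example. In this sketch `shift_matrix` and `krylov_block` are hypothetical helper names, and the random matrix is only a test input.

```python
import numpy as np


def shift_matrix(j):
    """S_j: (j+2)-by-(j+1), a zero row stacked on top of the identity."""
    return np.vstack([np.zeros((1, j + 1)), np.eye(j + 1)])


def krylov_block(A, v, j):
    """P_j = [v, A v, ..., A^j v] as an n-by-(j+1) array."""
    cols = [v]
    for _ in range(j):
        cols.append(A @ cols[-1])
    return np.column_stack(cols)


rng = np.random.default_rng(0)
A = rng.standard_normal((8, 8))
v = rng.standard_normal(8)
j = 3

P_j = krylov_block(A, v, j)          # 8-by-4
P_next = krylov_block(A, v, j + 1)   # 8-by-5
S_j = shift_matrix(j)                # 5-by-4

print(np.allclose(A @ P_j, P_next @ S_j))
```

Multiplying by S_j only relabels columns, so once P_{j+1} is available the product with A costs no further SpMV.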

Applying Akx to CG (2)

6. Now substitute coefficient vectors for vector iterates (eg, for r)

$$r_{m+j+1} := r_{m+j} - \alpha_{m+j} A p_{m+j}$$

$$[P_{j+1}, R_j]\, \hat r_{j+1} := [P_j, R_{j-1}]\, \hat r_j - \alpha_{m+j} A\, [P_j, R_{j-1}]\, \hat p_j$$

The first term is re-expressed in the larger basis by padding with zeros:

$$[P_j, R_{j-1}]\, \hat r_j = [P_{j+1}, R_j] \begin{bmatrix} \hat r_j(1 : j+1) \\ 0 \\ \hat r_j(j+2 : 2j+1) \\ 0 \end{bmatrix}$$

SpMV performed symbolically by shifting coordinates:

$$A\, [P_j, R_{j-1}]\, \hat p_j = [P_{j+1}, R_j] \begin{bmatrix} S_j & \\ & S_{j-1} \end{bmatrix} \hat p_j = [P_{j+1}, R_j] \begin{bmatrix} 0 \\ \hat p_j(1 : j+1) \\ 0 \\ \hat p_j(j+2 : 2j+1) \end{bmatrix}$$

Combining the two:

$$\hat r_{j+1} := \begin{bmatrix} \hat r_j(1 : j+1) \\ 0 \\ \hat r_j(j+2 : 2j+1) \\ 0 \end{bmatrix} - \alpha_{m+j} \begin{bmatrix} 0 \\ \hat p_j(1 : j+1) \\ 0 \\ \hat p_j(j+2 : 2j+1) \end{bmatrix}$$


Blocking CG dot products

7. Let’s also compute the (2j+1)-by-(2j+1) Gram matrices:

$$G_j = [P_j, R_{j-1}]^H\, [P_j, R_{j-1}]$$

Now we can perform all dot products symbolically:

$$\langle r_{m+j}, r_{m+j} \rangle = r_{m+j}^H r_{m+j} = \left( [P_j, R_{j-1}]\, \hat r_j \right)^H \left( [P_j, R_{j-1}]\, \hat r_j \right) = \hat r_j^H G_j \hat r_j$$

$$\langle r_{m+j}, A p_{m+j} \rangle = r_{m+j}^H A p_{m+j} = \left( [P_j, R_{j-1}]\, \hat r_j \right)^H \left( A\, [P_j, R_{j-1}]\, \hat p_j \right) = \hat r_j^H G_j \begin{bmatrix} S_j & \\ & S_{j-1} \end{bmatrix} \hat p_j = \hat r_j^H G_j \begin{bmatrix} 0 \\ \hat p_j(1 : j+1) \\ 0 \\ \hat p_j(j+2 : 2j+1) \end{bmatrix}$$

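The first symbolic dot product can be verified directly: a length-n inner product of a vector expressed in the basis [P_j, R_{j-1}] equals a small quadratic form in the Gram matrix. The sketch below uses real arithmetic, so the Hermitian transpose is a plain transpose; the random inputs and the helper name `krylov_columns` are assumptions.

```python
import numpy as np


def krylov_columns(A, v, count):
    """[v, A v, ..., A^(count-1) v] as an n-by-count array."""
    cols = [v]
    for _ in range(count - 1):
        cols.append(A @ cols[-1])
    return np.column_stack(cols)


rng = np.random.default_rng(1)
n, j = 30, 3

A = rng.standard_normal((n, n))
A = A + A.T
A = A / np.linalg.norm(A, 2)      # scale so the basis columns do not grow
p = rng.standard_normal(n)
r = rng.standard_normal(n)

# V = [P_j, R_{j-1}]: j+1 columns from p and j columns from r.
V = np.column_stack([krylov_columns(A, p, j + 1), krylov_columns(A, r, j)])
G = V.T @ V                        # (2j+1)-by-(2j+1) Gram matrix

r_hat = rng.standard_normal(2 * j + 1)   # arbitrary coefficient vector
direct = (V @ r_hat) @ (V @ r_hat)       # inner product of length n
symbolic = r_hat @ G @ r_hat             # quadratic form of size 2j+1
print(direct, symbolic)
```

Forming G takes one pass over the basis vectors, after which every later dot product costs O(j²) work and no communication.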

CA-CG

Given approximation $x_0$ to $Ax = b$, let $p_0 := r_0 := b - A x_0$

For m = 0, s, 2s, …, until convergence, Do
{

Communication (sequential and parallel):

Expand Krylov basis, using SpMM and Akx optimizations:

$$[P_s, R_{s-1}] := [p_m, A p_m, \ldots, A^s p_m,\; r_m, A r_m, \ldots, A^{s-1} r_m]$$

Represent the 2s+1 inner products of length n with a (2s+1)-by-(2s+1) Gram matrix:

$$G := [P_s, R_{s-1}]^H\, [P_s, R_{s-1}]$$

Represent SpMV operation as a change of basis (here, a shift):

$$S := \begin{bmatrix} [S_{s-1},\, 0_{s+1,1}] & \\ & [S_{s-2},\, 0_{s,1}] \end{bmatrix}, \qquad S_j := \begin{bmatrix} 0 & 0 & \cdots & 0 \\ 1 & & & \\ & 1 & & \\ & & \ddots & \\ & & & 1 \end{bmatrix}$$

Represent vector iterates of length n with vectors of length 2s+1 and 2s+2:

$$\hat r_0 := \begin{bmatrix} 0_{s+1,1} \\ 1 \\ 0_{s-1,1} \end{bmatrix}, \qquad \hat p_0 := \begin{bmatrix} 1 \\ 0_{2s,1} \end{bmatrix}, \qquad \hat x_0 := \begin{bmatrix} 0_{2s+1,1} \\ 1 \end{bmatrix}$$

Take s steps of CG without communication:

For j = 0 to s - 1, Do
{

$\alpha_{m+j} := \dfrac{\langle \hat r_j, G \hat r_j \rangle}{\langle \hat r_j, G S \hat p_j \rangle}$

$\hat x_{j+1} := \hat x_j + \alpha_{m+j} \begin{bmatrix} \hat p_j \\ 0 \end{bmatrix}$

$\hat r_{j+1} := \hat r_j - \alpha_{m+j} S \hat p_j$

$\beta_{m+j} := \dfrac{\langle \hat r_{j+1}, G \hat r_{j+1} \rangle}{\langle \hat r_j, G \hat r_j \rangle}$

$\hat p_{j+1} := \hat r_{j+1} + \beta_{m+j} \hat p_j$

} End For

Communication (sequential only):

Recover vector iterates:

$p_{m+s} := [P_s, R_{s-1}]\, \hat p_s$

$r_{m+s} := [P_s, R_{s-1}]\, \hat r_s$

$x_{m+s} := [P_s, R_{s-1}, x_m]\, \hat x_s$

} End For

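The CA-CG outline above can be turned into a short dense prototype. The sketch below follows the slide's steps with a monomial basis and real arithmetic; it is meant to show the structure, not to be fast or robust for large s. The early exit inside the inner loop, the stopping rule, the function names, and the well-conditioned test matrix are assumptions added for the example.

```python
import numpy as np


def shift(s):
    """(2s+1)-by-(2s+1) matrix S: coefficient of A^i v moves to A^(i+1) v."""
    S = np.zeros((2 * s + 1, 2 * s + 1))
    for i in range(s):              # P block: columns p, Ap, ..., A^s p
        S[i + 1, i] = 1.0
    for i in range(s - 1):          # R block: columns r, Ar, ..., A^(s-1) r
        S[s + 2 + i, s + 1 + i] = 1.0
    return S


def ca_cg(A, b, x0, s, tol=1e-10, max_outer=200):
    x = x0.copy()
    r = b - A @ x
    p = r.copy()
    S = shift(s)
    threshold = tol * np.linalg.norm(b)
    outer = 0
    while outer < max_outer and np.linalg.norm(r) > threshold:
        # Expand the Krylov basis V = [P_s, R_{s-1}] (the Akx step).
        cols = [p]
        for _ in range(s):
            cols.append(A @ cols[-1])
        cols.append(r)
        for _ in range(s - 1):
            cols.append(A @ cols[-1])
        V = np.column_stack(cols)
        G = V.T @ V                                    # Gram matrix
        p_hat = np.zeros(2 * s + 1); p_hat[0] = 1.0
        r_hat = np.zeros(2 * s + 1); r_hat[s + 1] = 1.0
        x_hat = np.zeros(2 * s + 2); x_hat[-1] = 1.0
        for _ in range(s):                             # s communication-free steps
            rr = r_hat @ (G @ r_hat)
            if rr <= threshold ** 2:                   # converged mid-block
                break
            Sp = S @ p_hat
            alpha = rr / (r_hat @ (G @ Sp))
            x_hat[:-1] += alpha * p_hat
            r_hat = r_hat - alpha * Sp
            beta = (r_hat @ (G @ r_hat)) / rr
            p_hat = r_hat + beta * p_hat
        # Recover the length-n iterates from the coefficient vectors.
        x = np.column_stack([V, x]) @ x_hat
        p = V @ p_hat
        r = V @ r_hat
        outer += 1
    return x, outer


n, s = 100, 4
A = np.eye(n) - 0.25 * np.eye(n, k=1) - 0.25 * np.eye(n, k=-1)   # SPD, well conditioned
b = np.random.default_rng(0).standard_normal(n)
x, outer_iterations = ca_cg(A, b, np.zeros(n), s)
print(outer_iterations, np.linalg.norm(b - A @ x) / np.linalg.norm(b))
```

With the monomial basis the Gram matrix becomes ill-conditioned as s grows, so a prototype like this is only expected to behave well for small s and well-conditioned A.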

CA-CG complexity (1)

Kernel: s dependent SpMVs (1 source vector)

• Computation costs: 2s⋅nnz flops
• Communication costs, sequential:
  – Read s vectors of length n
  – Write s vectors of length n
  – Read A s times
  – bandwidth cost ≈ s⋅nnz + 2sn
• Communication costs, parallel:
  – Distribute 1 source vector s times

Kernel: Akx (2 source vectors)

• Computation costs: 4s⋅nnz flops
• Communication costs, sequential:
  – Read 2 vectors of length n
  – Write 2s-1 vectors of length n
  – Read A once (both Akx and SpMM optimizations)
  – bandwidth cost ≈ nnz + (2s+1)n
• Communication costs, parallel:
  – Distribute 2 source vectors once
  – Communication volume and number of messages increase with s (ghost zones)

CA-CG complexity (2)

Kernel: 2s+1 dot products

• Computation costs, sequential: 2(2s+1)n flops ≈ 4ns
• Computation costs, parallel: (2s+1)(2n+(p-1))/p ≈ 4ns/p
• Communication costs, sequential:
  – Read a vector of length n 2s+1 times
• Communication costs, parallel:
  – 2s+1 all-reduce collectives, each with lg(p) rounds of messages: latency cost ≈ 2s⋅lg(p)
  – 1 word to/from each proc.: bandwidth cost ≈ 2s

Kernel: Gram matrix

• Computation costs, sequential: (2s+1)²n flops ≈ 4ns²
• Computation costs, parallel: (2s+1)²(n/p + (p-1)/(2p)) ≈ 4ns²/p
• Symbolic dot products cost an additional (2s+1)²(2s+3) flops ≈ 8s³
• Communication costs, sequential:
  – Read a matrix of size (2s+1)×n once.
• Communication costs, parallel:
  – 1 all-reduce collective, with lg(p) rounds of messages: latency cost ≈ lg(p)
  – (2s+1)²/2 words to/from each proc.: bandwidth cost ≈ 4s²

CA-CG complexity (3)

Using the Gram matrix and coefficient vectors has additional costs for CA-CG:

• Dense work (besides dot products/Gram matrix) does not increase with s:
  – CG ≈ 6sn
  – CA-CG ≈ 3(2s+1)(2s+n) ≈ (6s+3)n
• Sequential memory traffic decreases (factor of 4.5)
  – CG ≈ 6sn reads, 3sn writes
  – CA-CG ≈ (2s+1)n reads, 3n writes

| Method | Sequential flops | Sequential bandwidth | Sequential latency | Parallel flops | Parallel bandwidth | Parallel latency |
|---|---|---|---|---|---|---|
| CG, s steps | 2s⋅nnz + 10sn | s⋅nnz + (13s+1)n | s⋅nnz/b + (13s+1)n/b | 2s⋅nnz/p + 10sn/p | s⋅Expand(A) + 2s | s⋅Expand(A) + (2s+1)lg(p) |
| CA-CG(s), 1 step | 4s⋅nnz + (4s+10)sn | nnz + (6s+6)n | nnz/b + (6s+6)n/b | 4s⋅nnz/p + (4s+10)sn/p | Expand(\|A\|^s) + Expand(\|A\|^(s-1)) + 4s² | Expand(\|A\|^s) + Expand(\|A\|^(s-1)) + lg(p) |

b = cacheline size, p = number of processors

CA-CG tuning

Performance optimizations:

• 3-term recurrence CA-CG formulation:
  – Avoid the auxiliary vector p.
  – 2x decrease in Akx flops, bandwidth cost and serial latency cost (vectors only - A already optimal)
  – 25% decrease in Gram matrix flops, bandwidth cost, and serial latency cost
  – Roughly equivalent dense flops
• Streaming Akx formulation:
  – Interleave Akx and Gram matrix construction, then interleave Akx and vector reconstruction
  – 2x increase in Akx flops
  – Factor of s decrease in Akx sequential bandwidth and latency costs (vectors only - A already optimal)
  – 2x increase in Akx parallel bandwidth, latency
  – Eliminate Gram matrix sequential bandwidth and latency costs
  – Eliminate dense bandwidth costs other than 3n writes
  – Decreases overall sequential bandwidth and latency by O(s), regardless of nnz.

Stability optimizations:

• Use scaled/shifted 2-term recurrence for Akx:
  – Increase Akx flops by (2s+1)n
  – Increase dense flops by an O(s²) term
• Use scaled/shifted 3-term recurrence for Akx:
  – Increase Akx flops by 4sn
  – Increase dense flops by an O(s²) term
• Extended precision:
  – Constant factor cost increases
• Restarting:
  – Constant factor increase in Akx cost
• Preconditioning:
  – Structure-dependent costs
• Rank-revealing, reorthogonalization, etc…

Can also interleave Akx and Gram matrix construction without the extra work:
• decreases sequential bandwidth and latency by 33% rather than a factor of s