HW2


CS316 – Autumn 2013 – 2014

C. Kozyrakis

CS316 Advanced Multi-Core Systems

HW2 – Superscalar Techniques and Cache Coherence

Due Tuesday 11/12/13 at 5PM

(online on Submission portal or in box in front of Gates 303)

Notes

Collaboration on this assignment is encouraged (groups of 3 students)

This HW set provides practice for the exam. Make sure you work on or review ALL problems in this assignment.

Group: Member1 - _______________ Member2 - ________________ Member3 - ________________

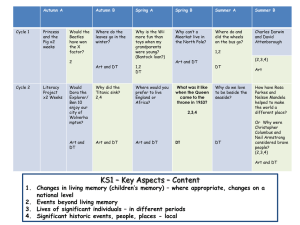

Problem 1: Branch Prediction [9 points]

The figure below shows the control flow of a simple program. The CFG is annotated with three different execution trace paths. For each execution trace, circle which branch predictor (bimodal, local, or gshare) will best predict the branching behavior of the given trace. More than one predictor may perform equally well on a particular trace. However, you are to use each of the three predictors exactly once in choosing the best predictors for the three traces. Assume each trace is executed many times and every node in the CFG is a conditional branch. The branch history register for the local and gshare predictors is limited to 4 bits. Bimodal is a common name for a simple branch history table (BHT). Provide a brief explanation for your answer.
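As a reminder of how the three predictors differ, the following is a minimal Python sketch, not a reference implementation. It assumes 2-bit saturating counters initialized to weakly not-taken, a gshare index formed by XOR-ing the branch address with the 4-bit global history, and a local predictor with a private pattern table per branch. The function names and the alternating test trace are hypothetical and are not one of the traces in the figure.

```python
HIST_BITS = 4                      # history length given in the problem
MASK = (1 << HIST_BITS) - 1


def _predict(counters, idx):
    """Predict taken when the 2-bit counter is 2 or 3 (assumed start value: 1)."""
    return counters.get(idx, 1) >= 2


def _update(counters, idx, taken):
    """Saturating increment on taken, saturating decrement on not taken."""
    c = counters.get(idx, 1)
    counters[idx] = min(3, c + 1) if taken else max(0, c - 1)


def simulate(trace, kind):
    """trace is a list of (pc, taken) pairs; returns the number of correct predictions."""
    counters = {}       # prediction counters, indexed differently per predictor
    global_hist = 0     # one shared history register (used by gshare)
    local_hist = {}     # one history register per branch (used by local)
    correct = 0
    for pc, taken in trace:
        if kind == "bimodal":
            idx = pc                              # branch address only
        elif kind == "gshare":
            idx = pc ^ global_hist                # address XOR global history
        elif kind == "local":
            idx = (pc, local_hist.get(pc, 0))     # per-branch history pattern
        else:
            raise ValueError(kind)
        correct += _predict(counters, idx) == taken
        _update(counters, idx, taken)
        global_hist = ((global_hist << 1) | int(taken)) & MASK
        local_hist[pc] = ((local_hist.get(pc, 0) << 1) | int(taken)) & MASK
    return correct


# Hypothetical trace: a single branch that alternates taken / not taken.
trace = [(0, i % 2 == 0) for i in range(100)]
results = {kind: simulate(trace, kind) for kind in ("bimodal", "local", "gshare")}
```

On this hypothetical alternating pattern the bimodal counter keeps flipping around its threshold, while the history-based predictors learn the pattern after a short warm-up.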

Circle one:   Bimodal   Local   gshare


Circle one:   Bimodal   Local   gshare

Circle one:   Bimodal   Local   gshare


Problem 2: Renaming [6 points]

Consider a MIPS-like instruction set. All instructions are of the form:

LD DST, offset(addr)

SD SRC, offset(addr)

OP DST, SRC1, SRC2

Part A: [3 points]

Computers spend most of their time in loops, so multiple loop iterations are great places to speculatively find more work to keep CPU resources busy. Nothing is ever easy, though; the compiler emitted only one copy of that loop’s code, so even though multiple iterations are handling distinct data, they will appear to use the same registers. To keep the register usage of multiple iterations from colliding, we rename their registers. The following code segment shows an example that we would like our hardware to rename.

    Loop: LD     F2,  0(Rx)
    I0:   MULTD  F5,  F0, F2
    I1:   DIVD   F8,  F0, F2
    I2:   LD     F4,  0(Ry)
    I3:   ADDD   F6,  F0, F4
    I4:   ADDD   F10, F8, F2
    I5:   SD     F4,  0(Ry)

A compiler could have simply unrolled the loop and used different registers to avoid conflicts, but if we expect our hardware to unroll the loop, it must also do the register renaming. How? Assume your hardware has a pool of temporary registers (call them T registers, and assume there are 64 of them, T0 through T63) that it can substitute for those registers designated by the compiler. This rename hardware is indexed by the source register designation, and the value in the table is the T register of the last destination that targeted that register. (Think of these table values as producers, and the src registers are the consumers; it doesn’t much matter where the producer puts its result as long as its consumers can find it.) Consider the code sequence. Every time you see a destination register in the code, substitute the next available T, beginning with T9. Then update all the src registers accordingly, so that true data dependences are maintained. Show the resulting code. (Hint: See following sample)

    I0:   LD     T9,  0(Rx)
    I1:   MULTD  T10, F0, T9
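The renaming rule described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the tuple encoding of instructions and the function name `rename` are hypothetical, and only the two-instruction sample from the hint is renamed here.

```python
def rename(instrs, first_free=9):
    """Rename a list of (op, dst, srcs) tuples.

    dst is None for instructions without a register destination (e.g. a store).
    Registers that have not been renamed yet keep their architectural name.
    """
    table = {}            # architectural register -> most recent T register
    next_t = first_free   # the problem says to start with T9
    renamed = []
    for op, dst, srcs in instrs:
        # Sources read the table before this instruction's destination is written,
        # so an instruction that reads and writes the same register sees the old mapping.
        new_srcs = [table.get(s, s) for s in srcs]
        new_dst = None
        if dst is not None:
            new_dst = f"T{next_t}"
            table[dst] = new_dst
            next_t += 1
        renamed.append((op, new_dst, new_srcs))
    return renamed


# The two instructions shown in the hint sample.
sample = [("LD", "F2", ["Rx"]), ("MULTD", "F5", ["F0", "F2"])]
renamed_sample = rename(sample)
```

For the sample, `renamed_sample` reproduces the two renamed lines in the hint: the load writes T9 and the multiply reads T9 in place of F2.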


Part B: [3 points]

Part A explored simple register renaming: when the hardware register renamer sees a source register, it substitutes the destination T register of the last instruction to have targeted that source register. When the rename table sees a destination register, it substitutes the next available T for it. But superscalar designs need to handle multiple instructions per clock cycle at every stage in the machine, including the register renaming. A simple scalar processor would therefore look up both src register mappings for each instruction, and allocate a new destination mapping per clock cycle. Superscalar processors must be able to do that as well, but they must also ensure that any dest-to-src relationships between the two concurrent instructions are handled correctly. Consider the following sample code sequence:

    I0:   MULTD  F5, F0, F2
    I1:   ADDD   F9, F5, F4
    I2:   ADDD   F5, F5, F2
    I3:   DIVD   F2, F9, F0

Assume that we would like to simultaneously rename the first two instructions (2-way superscalar).

Further assume that the next two available T registers to be used are known at the beginning of the

clock cycle in which these two instructions are being renamed. Conceptually, what we want is for the

first instruction to do its rename table lookups, and then update the table per its destination’s T register.

Then the second instruction would do exactly the same thing, and any inter-instruction dependency

would thereby be handled correctly. But there’s not enough time to write the T register designation into

the renaming table and then look it up again for the second instruction, all in the same clock cycle. That

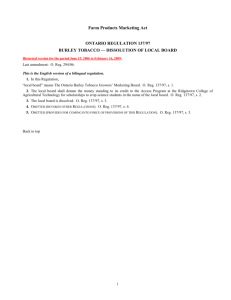

register substitution must instead be done live (in parallel with the register rename table update). Figure

2.1 shows a circuit diagram, using multiplexers and comparators, that will accomplish the necessary

on-the-fly register renaming. Your task is to show the cycle-by-cycle state of the rename table &

destination / sources register mappings for every instruction of the code. Assume the table starts out

with every entry equal to its index (T0 = 0; T1 = 1….).

Figure 2-1. Rename table and on-the-fly register substitution logic for superscalar machines. (Note:

“src” is source, “dst” is destination.)
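The comparator-and-multiplexer idea can be modeled in software as follows. This is a rough sketch under stated assumptions, not the circuit of Figure 2-1: the function name, the tuple encoding, the free registers T20/T21 and the two example instructions are all hypothetical and are deliberately not the instructions of this problem.

```python
def rename_pair(table, free, i0, i1):
    """Rename two instructions in the same cycle.

    table: dict mapping architectural register -> T register, as it stands
           at the start of the cycle (it is not modified).
    free:  the two T registers known to be available this cycle.
    i0/i1: (dst, src1, src2) for the older and younger instruction.
    """
    d0, a0, b0 = i0
    d1, a1, b1 = i1

    # The older instruction simply reads the start-of-cycle table.
    r0 = (free[0], table[a0], table[b0])

    def lookup_for_i1(src):
        # Comparator: does the younger instruction read the older one's destination?
        # If so, the mux selects the older instruction's new T register
        # instead of the stale table entry.
        return free[0] if src == d0 else table[src]

    r1 = (free[1], lookup_for_i1(a1), lookup_for_i1(b1))

    # Table update at the end of the cycle; the younger write is applied last,
    # so it wins if both instructions target the same register.
    new_table = dict(table)
    new_table[d0] = free[0]
    new_table[d1] = free[1]
    return r0, r1, new_table


# Hypothetical example: identity table, I1 reads I0's destination F1.
table = {f"F{i}": f"T{i}" for i in range(8)}
r0, r1, table_after = rename_pair(
    table, ("T20", "T21"), ("F1", "F2", "F3"), ("F4", "F1", "F2")
)
```

In this hypothetical pair, the younger instruction's first source is forwarded the older instruction's new register (T20) even though the table still held the old mapping when the lookups were made.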


You only need to fill in mappings for registers that have been renamed from their starting values (e.g. no need to write in F60=T60, but if F60=T3 that needs to be filled in). Not all fields may be used.

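Cycle 0:

Architectural:  F___  F___  F___  F___  F___  F___  F___

Machine:        T___  T___  T___  T___  T___  T___  T___

Instruction I0:  dst = ______  src1 = ______  src2 = ______

Instruction I1:  dst = ______  src1 = ______  src2 = ______

Cycle 1:

Architectural:  F___  F___  F___  F___  F___  F___  F___

Machine:        T___  T___  T___  T___  T___  T___  T___

Instruction I2:  dst = ______  src1 = ______  src2 = ______

Instruction I3:  dst = ______  src1 = ______  src2 = ______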


Problem 3: Coherence [30 points]

Part A: Single processor coherence [5 points]

A processor such as the PowerPC G3, widely deployed in Apple Macintosh systems, is primarily intended for use in uniprocessor systems, and hence has a very simple MEI cache coherence protocol. MEI is the same as MESI, except the Shared (S) state is omitted. Identify and discuss one reason why even a uniprocessor design should support cache coherence. Is the MEI protocol of the G3 adequate for this purpose? Why or why not? (Hint: think about Direct Memory Access (DMA))


Part B: MOESIF cache coherence protocol [10 points]

Many modern systems use cache-to-cache transfers to avoid the penalty of going off-chip for a memory access. The MOESIF cache coherence protocol extends the MESI protocol; the semantics of the additional states are as follows. The O state indicates that the line is shared-dirty: multiple copies may exist, but the other copies are in the S state, and the cache that has the line in the O state is responsible for writing the line back if it is evicted. The F state indicates that the line is shared-clean: multiple copies may exist in the S state, and this cache is responsible for supplying the line on a fill request. Fill in the table below with the actions for every event trigger. If nothing needs to be done, write in “Do nothing.” If an event is invalid for a given state, write in “Error.”

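Event and Local Coherence Controller Responses and Actions (s’ refers to next state)

| Current State s | Local Read | Local Write | Local Eviction | Bus Read | Bus Write | Bus Upgrade |
|---|---|---|---|---|---|---|
| Invalid (I) | | | | | | |
| Shared (S) | | | | | | |
| Forwarding (F) | | | | | | |
| Exclusive (E) | | | | | | |
| Owned (O) | | | | | | |
| Modified (M) | | | | | | |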


Part C: Snoopy Coherence [5 points]

Assuming a processor frequency of 1 GHz, a target CPI of 2, a level-2 cache miss rate of 1% per instruction, a snoop-based cache coherent system with 32 processors, and 8-byte address messages (including command and snoop addresses), compute the inbound and outbound snoop bandwidth required at each processor node.
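One way to organize this kind of calculation is sketched below. It rests on simplifying assumptions that the problem does not state explicitly: every level-2 miss produces exactly one address message, all nodes behave identically, and each node snoops the messages of every other node. The function name and the example parameters are hypothetical and intentionally differ from the values in this problem.

```python
def snoop_bandwidth(freq_hz, cpi, misses_per_instr, n_procs, msg_bytes):
    """Return (outbound, inbound) snoop bandwidth per node in bytes per second."""
    instr_per_s = freq_hz / cpi                        # instructions per second
    misses_per_s = instr_per_s * misses_per_instr      # one address message per miss (assumed)
    outbound = misses_per_s * msg_bytes                # what this node broadcasts
    inbound = outbound * (n_procs - 1)                 # what it receives from all other nodes
    return outbound, inbound


# Hypothetical machine: 2 GHz, CPI 1, 2% misses per instruction, 4 nodes, 16-byte messages.
out_bw, in_bw = snoop_bandwidth(2e9, 1, 0.02, 4, 16)
```

The inbound figure grows linearly with the number of processors, which is the scaling limit of snoop-based designs that this part is meant to expose.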


Part D: Memory Consistency [10 points]

Consider a simple multicore processor using a snoopy MSI cache coherence protocol. Each processor has a single, private cache that is direct-mapped with four blocks each holding two words. The initial cache state of the system is shown in the figure below. To simplify the illustration, the cache-address tag contains the full address.

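| Block | P0 coherency state | P0 address tag | P1 coherency state | P1 address tag | P2 coherency state | P2 address tag |
|---|---|---|---|---|---|---|
| B0 | I | 100 | I | 100 | S | 120 |
| B1 | S | 108 | M | 128 | S | 108 |
| B2 | M | 110 | I | 110 | I | 110 |
| B3 | I | 118 | S | 118 | I | 118 |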

Reads and writes will experience stall cycles depending on the state of the cache line:

• CPU read and write hits generate no stall cycles

• CPU read and write misses generate Nmemory and Ncache stall cycles if satisfied by memory and cache, respectively

• CPU write hits that generate an invalidate incur Ninvalidate stall cycles

• A write-back of a block, due to either a conflict or another processor’s request to an exclusive block, incurs an additional Nwriteback stall cycles

The exact cycle count for each event is given in the table below:

| Parameter | Cycles |
|---|---|
| Nmemory | 100 |
| Ncache | 40 |
| Ninvalidate | 15 |
| Nwriteback | 10 |

Sequential consistency (SC) requires that all reads and writes appear to have executed in some total order. This may require the processor to stall in certain cases before committing a read or write instruction. Consider the following code sequence:

    write A
    read B

where the write A results in a cache miss and the read B results in a cache hit. Under SC, the processor must stall read B until after it can order (and thus perform) write A. Simple implementations of SC will stall the processor until the cache receives the data and can perform the write. Weaker consistency models relax the ordering constraints on reads and writes, reducing the number of cases in which the processor must stall. The Total Store Order (TSO, or Processor Order) consistency model requires that all writes appear to occur in a total order but allows a processor’s reads to pass its own writes. This allows processors to implement write buffers that hold committed writes that have not yet been ordered with respect to other processors’ writes. Reads are allowed to pass (and potentially bypass) the write buffer in TSO (which they could not do under SC).

Assume that one memory operation can be performed per cycle and that operations that hit in the cache or that can be satisfied by the write buffer introduce no stall cycles. Operations that miss incur the latencies stated above. How many stall cycles occur prior to each operation for both the SC and TSO consistency models for the cases listed below? Show your work; a correct answer without any work shown will receive no credit.

| Instructions | Stall cycles (SC) | Stall cycles (TSO) |
|---|---|---|
| P0: write 110 <- 80 ; P0: read 108 | | |
| P0: write 100 <- 80 ; P0: read 108 | | |
| P0: write 110 <- 80 ; P0: write 100 <- 90 | | |
| P0: write 100 <- 80 ; P0: write 110 <- 90 | | |
| P0: read 118 ; P0: write 110 <- 80 | | |

Problem 4: Instruction Flow and Branch Prediction [30 points]

This problem investigates the effects of branches and control flow changes on program performance for a scalar pipeline (to keep the focus on branch prediction). Branch penalties increase as the number of pipeline stages increases between instruction fetch and branch resolution (or condition and target resolution). This effect of pipelined execution drives the need for branch prediction. This problem explores both static branch prediction in Part C and dynamic branch prediction in Part D. For this problem the base machine is a 5-Stage pipeline.

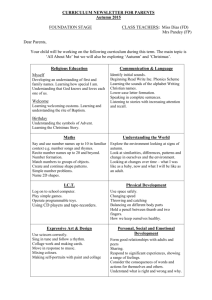

The 5-Stage Pipeline without Dynamic Branch Prediction

[Figure labels: Fetch Addr., Br. Corr. Address, Sequential Addr. Calc., Instruction Cache, Instructions; pipeline stages Instruction Fetch, Instruction Decode, Execute, Memory Access, Write Back.]

Execution Assumptions:

• unconditional branches execute in the decode stage

• conditional branches execute in the execute stage

• Effective address calculation is performed in the execute stage

• All memory access is performed in the memory access stage

• All necessary forwarding paths exist

• The register file is read after write

The fetch address is a choice between the sequential address generation logic and the branch correction logic. If a mispredicted branch is being corrected the correction address is chosen over the sequential address for the next fetch address.

Part A: Branch Penalties. [2 points]

What are the branch penalties for unconditional and conditional branches?

Unconditional ______________ Conditional _______________


Part B: No Branch Prediction. [4 points]

This problem will use the insertion sort program. An execution trace, or a sequence of executed basic blocks, is provided for this problem. A basic block is a group of consecutive instructions that are always executed together in sequence.

Example Code: Insertion Sort

| BB | Line# | Label | Assembly_Instruction | Comment |
|---|---|---|---|---|
| 1 | 1 | main: | addi r2, r0, ListArray | r2 <- ListArray |
| 1 | 2 | | addi r3, r0, ListLength | r3 <- ListLength |
| 1 | 3 | | add r4, r0, r0 | i = 0; |
| 2 | 4 | loop1: | bge r4, r3, end | while (i < Length) { |
| 3 | 5 | | add r5, r4, r0 | j=i; |
| 4 | 6 | loop2: | ble r5, r0, cont | while (j > 0) { |
| 5 | 7 | | addi r6, r5, -1 | k=j-1; |
| 5 | 8 | | lw r7, r5(r2) | temp1 = ListArray[j]; |
| 5 | 9 | | lw r8, r6(r2) | temp2 = ListArray[k]; |
| 5 | 10 | | bge r7, r8, cont | if (temp1 >= temp2) break; |
| 6 | 11 | | sw r8, r5(r2) | ListArray[j] <- temp2; |
| 6 | 12 | | sw r7, r6(r2) | ListArray[k] <- temp1; |
| 6 | 13 | | addi r5, r5, -1 | j--; |
| 6 | 14 | | ba loop2 | } |
| 7 | 15 | cont: | addi r4, r4, 1 | i++; |
| 7 | 16 | | ba loop1 | } |
| 8 | 17 | end: | lw r1, (sp) | r1 <- Return Pointer |
| 8 | 18 | | ba r1 | |

Execution Trace: Sequence of Basic Blocks Executed:

123 45723 456 456 4723 456 45723 456 456 45728

[Hint: An alternate way to represent the same execution trace above is to use the sequence of branch instructions, both conditional and unconditional (i.e. ba), executed.]


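1. Fill in the branch execution table with an N for not taken and a T for taken. This table is recording the execution pattern for each (static) branch instruction. Use the execution trace on the previous page.

Branch Execution - Assume No Branch Prediction:

Columns 1 through 10 are the dynamic executions of each branch instruction.

| Branch Instruction No. (i.e. Line#) | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|
| 4 | | | | | | | | | | |
| 6 | | | | | | | | | | |
| 10 | | | | | | | | | | |
| 14 | | | | | | | | | | |
| 16 | | | | | | | | | | |
| 18 | | | | | | | | | | |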

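Use the branch execution table above to calculate the statistics requested in the following table.

Branch Execution Statistics:

| Branch Instr. No. | Times Executed | Times Taken | Times Not Taken | % Taken | % Not Taken |
|---|---|---|---|---|---|
| 4 | | | | | |
| 6 | | | | | |
| 10 | | | | | |
| 14 | | | | | |
| 16 | | | | | |
| 18 | | | | | |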

2. How many cycles does the trace take to execute (include all pipeline fill and drain cycles)? [Hint: you don’t need to physically simulate the execution trace, just compute the cycle count.]

3. How many cycles are lost to control dependency stalls?


Part C: Static Branch Prediction. [8 points]

Static branch prediction is a compile-time technique of influencing branch execution in order to reduce control dependency stalls. Branch opcodes are supplemented with a static prediction bit that indicates a likely direction during execution of the branch. This is done based on profiling information, such as that gathered in Part B. For this part of Problem 4, new branch opcodes are introduced:

bget - branch greater than or equal with static predict taken

bgen - branch greater than or equal with static predict not-taken

blet - branch less than or equal with static predict taken

blen - branch less than or equal with static predict not-taken

Static branch prediction information is processed in the decode stage of the 5-stage pipeline. When a branch instruction with static predict taken (e.g. bget) is decoded, the machine predicts taken. Conversely, when a branch instruction with static predict not-taken (e.g. bgen) is decoded, the machine predicts not-taken.

1. [6 points] Pretend you are the compiler and rewrite each conditional branch instruction in the original code sequence using the new conditional branch instructions with static branch prediction encoded.

2. [2 points] Assuming the same execution trace, what is the new total cycle count of the modified code sequence incorporating static branch prediction instructions? Indicate the resultant IPC.


Part D: Dynamic Branch Prediction. [16 points]

This part examines the use of a Branch Target Buffer (BTB) for performing dynamic branch prediction on the 5-Stage scalar pipeline.

The 5-Stage Pipeline without Dynamic Branch Prediction

[Figure labels: BTB, Fetch Addr., Br. Corr. Address, Sequential Addr. Calc., Instruction Cache, Instructions; pipeline stages Instruction Fetch, Instruction Decode, Execute, Memory Access, Write Back.]

The branch target buffer (BTB) in the 5-Stage pipeline contains 4 entries and is direct mapped. The BTB caches all branch and jump instructions. It stores the branch fetch addresses along with the branch target addresses. If the branch fetch address “hits” in the BTB, the target address in the BTB is used if the branch is predicted taken.

The BTB is updated immediately after prediction in the instruction fetch pipe stage, i.e. assuming speculative update.

The BTB is accessed simultaneously with the instruction cache. If the BTB hits and returns a branch target address and the branch is predicted taken, then the next fetch address is the target address from the BTB. If the branch is predicted not taken or the BTB misses, then the sequential address is the next fetch address.


1. [6 points] Assume each entry of the BTB employs a 2-bit saturating up/down counter (initialized to the state 00) that maintains branch history. The BTB uses the following prediction algorithm: 00 - Not taken, 01 - Not taken, 10 - Taken, 11 - Taken. Fill in the table below with the state of the BTB after each dynamic branch instruction is executed. Use I[2:1] as the address of an instruction to determine which BTB entry is referenced, where I is the instruction number in its binary form (that is, the second and third least-significant bits).

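| Dynamic Branches Executed | Entry #0 Stat. Br # | Entry #0 Tar. Instr# | Entry #0 Hist. bits | Entry #1 Stat. Br # | Entry #1 Tar. Instr# | Entry #1 Hist. bits | Entry #2 Stat. Br # | Entry #2 Tar. Instr# | Entry #2 Hist. bits | Entry #3 Stat. Br # | Entry #3 Tar. Instr# | Entry #3 Hist. bits |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | | | | | |
| 2 | | | | | | | | | | | | |
| 3 | | | | | | | | | | | | |
| 4 | | | | | | | | | | | | |
| 5 | | | | | | | | | | | | |
| 6 | | | | | | | | | | | | |
| 7 | | | | | | | | | | | | |
| 8 | | | | | | | | | | | | |
| 9 | | | | | | | | | | | | |
| 10 | | | | | | | | | | | | |
| 11 | | | | | | | | | | | | |
| 12 | | | | | | | | | | | | |
| 13 | | | | | | | | | | | | |
| 14 | | | | | | | | | | | | |
| 15 | | | | | | | | | | | | |
| 16 | | | | | | | | | | | | |
| 17 | | | | | | | | | | | | |
| 18 | | | | | | | | | | | | |
| 19 | | | | | | | | | | | | |
| 20 | | | | | | | | | | | | |
| 21 | | | | | | | | | | | | |
| 22 | | | | | | | | | | | | |
| 23 | | | | | | | | | | | | |
| 24 | | | | | | | | | | | | |
| 25 | | | | | | | | | | | | |
| 26 | | | | | | | | | | | | |
| 27 | | | | | | | | | | | | |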
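The indexing and counter rules in question 1 can be sketched in Python as follows. This is a simplified illustration, assuming the counter is updated with the actual branch outcome and ignoring entry allocation and replacement; the function names, instruction number 12 and the outcome sequence are hypothetical and are not taken from the insertion-sort trace.

```python
def btb_index(instr_no):
    """Bits I[2:1] of the instruction number select one of the 4 BTB entries."""
    return (instr_no >> 1) & 0b11


def predict_taken(counter):
    """00 and 01 predict not taken; 10 and 11 predict taken."""
    return counter >= 0b10


def update(counter, taken):
    """2-bit saturating up/down counter: up on taken, down on not taken."""
    return min(counter + 1, 0b11) if taken else max(counter - 1, 0b00)


# Hypothetical branch at instruction number 12 (binary 1100 -> I[2:1] = 10 -> entry 2).
entry = btb_index(12)

counter = 0b00                      # initial state given in the problem
log = []                            # (predicted taken?, actually taken?)
for taken in [True, True, False, True]:
    log.append((predict_taken(counter), taken))
    counter = update(counter, taken)
```

With the counter starting at 00, two taken outcomes are needed before the entry starts predicting taken, which is why the first executions of a frequently taken branch are mispredicted.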


2. [4 points] Fill in the table below to evaluate how accurately each branch is predicted.

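Columns 1 through 10 are the dynamic executions of each branch instruction.

| Branch Instr. Number | Branch Instruction Execution | 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 4 | Taken or Not | | | | | | | | | | |
| | Pred. Direction | | | | | | | | | | |
| | Correct? (Y/N) | | | | | | | | | | |
| 6 | Taken or Not | | | | | | | | | | |
| | Pred. Direction | | | | | | | | | | |
| | Correct? (Y/N) | | | | | | | | | | |
| 10 | Taken or Not | | | | | | | | | | |
| | Pred. Direction | | | | | | | | | | |
| | Correct? (Y/N) | | | | | | | | | | |
| 14 | Taken or Not | | | | | | | | | | |
| | Pred. Direction | | | | | | | | | | |
| | Correct? (Y/N) | | | | | | | | | | |
| 16 | Taken or Not | | | | | | | | | | |
| | Pred. Direction | | | | | | | | | | |
| | Correct? (Y/N) | | | | | | | | | | |
| 18 | Taken or Not | | | | | | | | | | |
| | Pred. Direction | | | | | | | | | | |
| | Correct? (Y/N) | | | | | | | | | | |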

3. [2 points] Compare and contrast the effectiveness and efficiency of the dynamic branch predictor of Part D with the static branch predictor of Part C.

4. [4 points] Argue whether the Two-level Adaptive Branch Prediction Scheme [Yeh & Patt] can improve branch prediction accuracy for the given execution trace, or for any execution of the insertion-sort routine.


Problem 5: Instruction Scheduling in the Metaflow Processor [25 points]

This problem will help you understand the details of scheduling and management in a micro-dataflow example. The Metaflow architecture was first implemented in the Sparc Lightning processor to help exploit instruction-level parallelism [Popescu et al. IEEE Micro 1991]. The architecture utilizes an integrated instruction-shelving (DRIS) structure to execute instructions out of order. To exploit instruction-level parallelism while maintaining consistency, the DRIS forms a unified structure for register renaming, dependency checking, result forwarding, and ROB. In this problem, you will explore the details of the DRIS structure and understand how it functions by tracking the state of the DRIS through a sample code trace. The DRIS entry parameters and operations are explained in the IEEE Micro 1991 paper distributed along with this assignment.

To simplify the problem, we are going to trace the execution of the Metaflow processor abstractly. For step 1, show the state of the DRIS after you have issued the instruction trace (from PC=101 to PC=111) into the DRIS starting with entry 1. In step 2, show the state of the DRIS after all instructions that are ready to execute at the end of step 1 have been executed and their results forwarded to the appropriate locations in the DRIS. In step 3, show the state of the DRIS and register file after all instructions that are ready to complete at the end of step 2 have been retired. In the subsequent steps, repeat the operations in steps 2 and 3 until all instructions have retired out of the DRIS. You can attach extra tables if necessary.

Note: don’t forget to consider memory dataflow dependences of load and store instructions. Also, assume load and store addresses are in register indirect format and thus do not require separate computation. Branch instructions are predicted not taken at first, so execution proceeds down the sequential path speculatively until the branch is resolved. Assume no internal memory bypassing, i.e. loads and stores must go to memory.

    PC= 101:  mul  r1, r1, r3       ; r1 <- r1 * r3
        102:  add  r3, r1, r2       ; r3 <- r1 + r2
        103:  sub  r4, r4, r2       ; r4 <- r4 - r2
        104:  sw   (r4), r1         ; mem[r4] <- r1
        105:  mul  r1, r2, r4       ; r1 <- r2 * r4
        106:  sub  r3, r1, r3       ; r3 <- r1 - r3
        107:  lw   r1, (r4)         ; r1 <- mem[r4]
        108:  bne  r1, r4, (115)    ; branch to 115 if r1 != r4
        109:  sub  r2, r2, r4       ; r2 <- r2 - r4
        110:  mul  r3, r1, r3       ; r3 <- r1 * r3
        111:  add  r4, r3, r1       ; r4 <- r3 + r1
        …………..
        115:  halt
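Before filling in the DRIS tables it can help to list the register read-after-write dependences in the trace. The sketch below is a hypothetical helper, not part of the Metaflow design: it encodes each instruction from PC=101 to PC=111 as (PC, destination register, source registers), follows the sequential path through the branch as the note above specifies, and does not model the memory dependence between the store and the load.

```python
# (pc, destination register or None, source registers), transcribed from the listing above.
program = [
    (101, "r1", ["r1", "r3"]),   # mul
    (102, "r3", ["r1", "r2"]),   # add
    (103, "r4", ["r4", "r2"]),   # sub
    (104, None, ["r4", "r1"]),   # sw: address register r4, data register r1
    (105, "r1", ["r2", "r4"]),   # mul
    (106, "r3", ["r1", "r3"]),   # sub
    (107, "r1", ["r4"]),         # lw: address register r4
    (108, None, ["r1", "r4"]),   # bne
    (109, "r2", ["r2", "r4"]),   # sub
    (110, "r3", ["r1", "r3"]),   # mul
    (111, "r4", ["r3", "r1"]),   # add
]


def raw_deps(program):
    """Map each PC to the sorted PCs of the in-flight producers of its source registers."""
    last_writer = {}   # register -> PC of the most recent instruction that writes it
    deps = {}
    for pc, dst, srcs in program:
        # Sources with no earlier writer in the trace come from the register file.
        deps[pc] = sorted({last_writer[s] for s in srcs if s in last_writer})
        if dst is not None:
            last_writer[dst] = pc
    return deps


deps = raw_deps(program)
```

An instruction whose list in `deps` is empty reads all of its operands from the register file and has no register dependence on an earlier instruction in the trace.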


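Step 1

Register File

| r1 | r2 | r3 | r4 |
|---|---|---|---|
| 5 | 3 | 2 | 1 |

DRIS

| Tag | Source Operand 1: Locked | Source Operand 1: Reg. ID | Source Operand 2: Locked | Source Operand 2: Reg. ID | Destination: Latest | Destination: Reg. | Destination: Content | Dispatched | Func. Unit | Executed | Program Counter |
|---|---|---|---|---|---|---|---|---|---|---|---|
| 1 | | | | | | | | | | | |
| 2 | | | | | | | | | | | |
| 3 | | | | | | | | | | | |
| 4 | | | | | | | | | | | |
| 5 | | | | | | | | | | | |
| 6 | | | | | | | | | | | |
| 7 | | | | | | | | | | | |
| 8 | | | | | | | | | | | |
| 9 | | | | | | | | | | | |
| 10 | | | | | | | | | | | |
| 11 | | | | | | | | | | | |
| 12 | | | | | | | | | | | |
| 13 | | | | | | | | | | | |
| 14 | | | | | | | | | | | |
| 15 | | | | | | | | | | | |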

Note:

Please use different color pens to distinguish the state values that change from those that do not change from step to step. For each new step, first copy the unchanged state to the new sheet and then make the changes with a different color pen.

Use {add, sub, mul, br, lw, and sw} for the function unit column.

In this problem, the dispatched column is effectively the same as the executed column and does not need to be filled in.

Steps 2 through 10 each use a blank worksheet with the same layout as Step 1: a Register File row for r1 through r4 and a DRIS table with tags 1 through 15 and the columns Source Operand 1 (Locked, Reg. ID), Source Operand 2 (Locked, Reg. ID), Destination (Latest, Reg., Content), Dispatched, Func. Unit, Executed, and Program Counter.

Step 2

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 3

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 4

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 5

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 6

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 7

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 8

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 9

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)

Step 10

Register File:  r1 = ____  r2 = ____  r3 = ____  r4 = ____

DRIS: (blank table, tags 1 to 15)