Instruction-Level Parallelism (ILP) Presentation

Instruction-level Parallelism

• Instruction-Level Parallelism (ILP): overlapping of executions among

instructions that are mutually independent.

• Difficulties in exploiting ILP: various hazards that impose dependency among

instructions, as a result:

$$\mathrm{CPI}_{\mathrm{pipeline}} = \mathrm{CPI}_{\mathrm{ideal}} + \text{Structural stalls} + \text{RAW stalls} + \text{WAR stalls} + \text{WAW stalls} + \text{Control stalls}$$

– RAW(read after write): j tries to read a source before i writes to it

– WAW(write after write): j tries to write an operand before it is written by i

– WAR(write after read): j tries to write a destination before it is read by i
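The three hazard definitions above can be checked mechanically. The following is a minimal sketch, assuming each instruction is described only by the register it writes and the registers it reads; the `Instr` type and the `hazards` helper are hypothetical names introduced for illustration, and the four instructions are the DIV.D/ADD.D/SUB.D/MUL.D sequence that this deck uses in its later renaming example.

```python
from typing import NamedTuple, Optional, Set, Tuple


class Instr(NamedTuple):
    op: str
    dest: Optional[str]      # register written (None if nothing is written)
    srcs: Tuple[str, ...]    # registers read


def hazards(i: Instr, j: Instr) -> Set[str]:
    """Hazards that can arise when j follows i in program order."""
    found = set()
    if i.dest is not None and i.dest in j.srcs:
        found.add("RAW")     # j reads what i writes
    if i.dest is not None and i.dest == j.dest:
        found.add("WAW")     # both write the same register
    if j.dest is not None and j.dest in i.srcs:
        found.add("WAR")     # j overwrites what i still has to read
    return found


div = Instr("DIV.D", "F0", ("F2", "F4"))
add = Instr("ADD.D", "F6", ("F0", "F8"))
sub = Instr("SUB.D", "F8", ("F10", "F14"))
mul = Instr("MUL.D", "F6", ("F10", "F8"))
```

With these definitions, `hazards(div, add)` reports the true data dependence on F0, while `hazards(add, sub)` and `hazards(add, mul)` report the name dependences on F8 and F6.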

• Limited parallelism within a basic block: a straight-line code sequence with no branches in, except at the entry, and no branches out, except at the exit. For typical MIPS programs the average dynamic branch frequency is often between 15% and 25%, meaning that

– Basic block size is between 4 and 7 instructions, and

– Exploitable parallelism in a basic block is limited to those 4 to 7 instructions
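The block-size range follows from simple arithmetic: if one instruction in every 1/f is a branch, a basic block holds about 1/f instructions on average. A small sketch, where `average_basic_block_size` is a hypothetical helper name:

```python
def average_basic_block_size(branch_frequency: float) -> float:
    """Average number of instructions per basic block, branch included."""
    return 1.0 / branch_frequency


upper = average_basic_block_size(0.15)   # about 6.7 instructions
lower = average_basic_block_size(0.25)   # 4 instructions
```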


• Loop-Level Parallelism:

for (i=1; i<=1000; i=i+1)

x[i] = x[i] + y[i];

– Loop unrolling: static or dynamic, to increase ILP;

– Vector instructions: a vector instruction operates on a sequence of data

items (pipelining data streams):

Load V1, X; Load V2, Y; Add V3,V1,V2; Store V3, X

• Data Dependences: an instruction j is data dependent on instruction i if either

– instruction i produces a result that may be used by instruction j, or

– instruction j is data dependent on instruction k, and instruction k is data

dependent on instruction i.

• Dependences are a property of programs; whether a given dependence results in an actual hazard being detected, and whether that hazard actually causes a stall, are properties of the pipeline organization.

– Data Hazards: data dependence vs. name dependence

» RAW(real data dependence): j tries to read a source before i writes to it

» WAW(output dependence): j tries to write an operand before it is written by i

» WAR(antidependence): j tries to write a destination before it is read by i


• Control Dependence: determines the ordering of an instruction i with respect to a branch instruction so that the instruction is executed in the correct program order and only when it should be. There are two constraints imposed by control dependences:

1. An instruction that is control dependent on a branch cannot be moved before the branch so that its execution is no longer controlled by the branch:

    if p1 {                                  s1;
        s1;        cannot be changed to      if p1 {
    };                                       };

2. An instruction that is not control dependent on a branch cannot be moved after the branch so that its execution is controlled by the branch:

    s1;                                      if p1 {
    if p1 {        cannot be changed to          s1;
    };                                       };


•

Control Dependences: preservation of control dependence can

be achieved by ensuring that instructions execute in program

order and the detection of control hazards guarantees an

instruction that is control dependent on a branch delays

execution until the branch’s direction is known.

– Control dependence in itself is not the fundamental

performance limit;

– Control dependence is not the critical property that must be

preserved; instead, the two properties critical to program

correctness and normally preserved by maintaining both data

and control dependence are:

1. The exception behavior – any change in the ordering of

instruction execution must not change how exceptions are

raised in the program (or cause any new exceptions)

2. The data flow – the actual flow of data values among

instructions that produce results and those that consume them.


• Examples:

Example 1: no data dependence prevents exchanging BEQZ and LW, but a memory exception may result if LW is moved above the branch.

    Original:                  After the exchange:
    DADDU R2,R3,R4             DADDU R2,R3,R4
    BEQZ  R2,L1                LW    R1,0(R2)
    LW    R1,0(R2)             BEQZ  R2,L1
    L1:                        L1:

Example 2: preserving data dependence alone is not sufficient for program correctness, because OR has multiple predecessors (the value of R1 it reads comes from DADDU or from DSUBU, depending on the branch).

    DADDU R1,R2,R3
    BEQZ  R4,L
    DSUBU R1,R5,R6
    L: …….
    OR    R7,R1,R8

Example 3 (speculation):

    DADDU R1,R2,R3
    BEQZ  R12,SN
    DSUBU R4,R5,R6
    DADDU R5,R4,R9
    SN: OR R7,R8,R9

• Speculation, which helps with the exception problem, can lessen the impact of control dependence (to be elaborated later).

• Moving DSUBU before the branch is okay if R4 is dead after SN, i.e. if R4 is unused after SN, and DSUBU cannot generate an exception, because the data flow cannot be affected by this move.


• Dynamic Scheduling:

– Advantages

» Handles some cases where dependences are unknown at compile time

» Simplifies compiler

» Allows code that was compiled with one pipeline in mind to run

efficiently on a different pipeline

» Facilitates hardware speculation

– Basic idea:

» Out-of-order execution tries to avoid stalling in the presence of

detected dependences (that could generate hazards), by

scheduling otherwise independent instructions to idle functional

units on the pipeline;

» Out-of-order completion can result from out-of-order execution;

» The former introduces the possibility of WAR and WAW hazards (nonexistent in the five-stage pipeline), while the latter creates major complications in handling exceptions.


• Out-of-order execution introduces WAR, also called antidependence, and WAW, also called output dependence:

    DIV.D F0,F2,F4
    ADD.D F6,F0,F8
    SUB.D F8,F10,F14
    MUL.D F6,F10,F8

• These hazards are not caused by real data dependences, but by virtue of sharing names!

• Both of these hazards can be avoided by the use of register renaming

• Out-of-order completion in dynamic scheduling must preserve exception behavior

– Exactly those exceptions that would arise if the program were executed in strict program order actually do arise, also called precise exception;

• Dynamic scheduling may generate imprecise exceptions

– The processor state when an exception is raised does not look exactly as if the instructions were executed in the strict program order

– Two reasons for impreciseness:

1. The pipeline may have already completed instructions that are later in program order than the faulty one; and

2. The pipeline may have not yet completed some instructions that are earlier in program order than the faulty one.


• Out-of-order execution is made possible by splitting the ID stage into two stages, as seen in scoreboard:

1. Issue – Decode instructions, check for structural hazards.

2. Read operands – Wait until no data hazards, then read operands.

• Dynamic scheduling using Tomasulo’s Algorithm:

– Tracks when operands for instructions become available and allows pending instructions to execute immediately, thus minimizing RAW hazards;

– Introduces register renaming to minimize the WAW and WAR hazards.

    Original code:             Using temp registers S & T to rename F6 & F8:
    DIV.D F0,F2,F4             DIV.D F0,F2,F4
    ADD.D F6,F0,F8             ADD.D S,F0,F8
    S.D   F6,0(R1)             S.D   S,0(R1)
    SUB.D F8,F10,F14           SUB.D T,F10,F14
    MUL.D F6,F10,F8            MUL.D F6,F10,T
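Renaming of this kind can be sketched in a few lines. The sketch below is an illustration under stated assumptions, not the hardware mechanism: it gives every destination a fresh temporary name (`T0`, `T1`, ...), whereas the slide renames only F6 and F8, and the `rename` helper and tuple encoding are hypothetical.

```python
from itertools import count


def rename(program):
    """program: list of (op, dest, srcs); dest is None for stores."""
    fresh = count()
    current = {}                       # architectural name -> latest temp name
    renamed = []
    for op, dest, srcs in program:
        # Sources read the most recent producer's name, if there is one.
        new_srcs = tuple(current.get(s, s) for s in srcs)
        new_dest = None
        if dest is not None:
            new_dest = f"T{next(fresh)}"
            current[dest] = new_dest
        renamed.append((op, new_dest, new_srcs))
    return renamed


program = [
    ("DIV.D", "F0", ("F2", "F4")),
    ("ADD.D", "F6", ("F0", "F8")),
    ("S.D", None, ("F6", "R1")),
    ("SUB.D", "F8", ("F10", "F14")),
    ("MUL.D", "F6", ("F10", "F8")),
]
renamed = rename(program)
```

After renaming, no two instructions write the same name (no WAW), and SUB.D no longer writes the F8 that ADD.D reads (no WAR); only the true dependences remain.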


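• Dynamic scheduling using Tomasulo’s Algorithm:

– Register renaming in Tomasulo’s scheme is implemented by reservation stations

[Figure: basic structure of a Tomasulo-based FP unit. Instructions arrive from the instruction unit into the instruction queue; load/store operations go through the address unit to the load buffers and store buffers; FP operations go over the operation bus to the reservation stations in front of the FP adders and FP multipliers; the FP registers supply values over the operand buses; the memory unit, FP adders and FP multipliers deliver results on the common data bus (CDB).]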


• Distinguishing Features of Tomasulo’s Algorithm:

– It is a technique allowing execution to proceed in the presence of hazards by means of register renaming.

– It uses reservation stations to buffer/fetch operands whenever they become available, eliminating the need to use registers explicitly for holding (intermediate) results. When successive writes to a register take place, only the last one will actually update the register.

– Register specifiers (of pending operands) for an instruction are renamed to names of the (corresponding) reservation stations in which the producer instructions reside.

– Since there can be more “conceptual” (or logical) reservation stations than there are registers, a much larger “virtual register set” is effectively created.

– The Tomasulo method contrasts with the scoreboard method in that the former is decentralized while the latter is centralized.

– In the Tomasulo method, a newly generated result is immediately made known and available to all reservation stations (thus all issued instructions) simultaneously, hence avoiding any bottleneck.


•

Three Main Steps of Tomasulo’s Algorithm:

1. Issue:

If there are no structural hazards for the current instruction

Then issue the instruction

Else stall until structural hazards are cleared. (no WAW hazard

checking)

2. Execute:

If both operands are available (no RAW hazards)

Then execute the operation

Else monitor the Common Data Bus (CDB) until the relevant value

(operand) appears on CDB, and then read the value into the

reservation station. When both operands become available in the

reservation station, execute the operation. (RAW is cleared as

soon as the value appears on CDB!)

3. Write Result: When result is generated place it on CDB through

which register file and any functional unit waiting on it will be able

to read immediately.
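The three steps above can be sketched with a handful of fields per reservation station. This is a simplified illustration, assuming one result per CDB broadcast and using the Vj/Vk/Qj/Qk field names from the tables in this deck; the `Station` class and the `issue`, `ready` and `write_result` helpers are hypothetical names, and execution latency is not modeled.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Station:
    name: str
    busy: bool = False
    op: Optional[str] = None
    vj: Optional[float] = None     # operand values, once available
    vk: Optional[float] = None
    qj: Optional[str] = None       # tags of the stations producing the operands
    qk: Optional[str] = None


def issue(st: Station, op: str, src_j: str, src_k: str, dest: str,
          regs: Dict[str, float], reg_status: Dict[str, str]) -> None:
    """Issue: copy ready operands, otherwise record the producer's tag."""
    st.busy, st.op = True, op
    st.qj = reg_status.get(src_j)
    st.vj = None if st.qj else regs[src_j]
    st.qk = reg_status.get(src_k)
    st.vk = None if st.qk else regs[src_k]
    reg_status[dest] = st.name     # the destination is renamed to this station


def ready(st: Station) -> bool:
    """Execute may start once no operand is still pending (no RAW hazard)."""
    return st.busy and st.qj is None and st.qk is None


def write_result(tag: str, value: float, stations: List[Station],
                 regs: Dict[str, float], reg_status: Dict[str, str]) -> None:
    """Write result: broadcast (tag, value) on the CDB to every listener."""
    for st in stations:
        if st.qj == tag:
            st.vj, st.qj = value, None
        if st.qk == tag:
            st.vk, st.qk = value, None
        if st.name == tag:
            st.busy = False        # the producing station is freed
    for reg, owner in list(reg_status.items()):
        if owner == tag:           # only the latest writer updates the register
            regs[reg] = value
            del reg_status[reg]
```

For example, issuing a MUL into `Mult1` and a dependent DIV into `Mult2` leaves `Mult2` waiting with `qj == "Mult1"`; a later `write_result("Mult1", ...)` makes it ready in the same step that updates the register file.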


•

Main Content of A Reservation Station:


•

Updating Reservation Stations during the 3 Steps:


•

Updating Reservation Stations during the 3 Steps (cont’d):


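• An Example of Tomasulo’s Algorithm: when all instructions have issued but only one has completed

Instruction Status

| Instruction | Issue | Execute | Write Result |
|---|---|---|---|
| L.D F6,34(R2) | x | x | x |
| L.D F2,45(R3) | x | x | |
| MUL.D F0,F2,F4 | x | | |
| SUB.D F8,F2,F6 | x | | |
| DIV.D F10,F0,F6 | x | | |
| ADD.D F6,F8,F2 | x | | |

Reservation Station

| Name | Busy | Op | Vj | Vk | Qj | Qk | Address(M) |
|---|---|---|---|---|---|---|---|
| Load1 | No | | | | | | |
| Load2 | Yes | Load | | | | | 45+Regs[R3] |
| Add1 | Yes | SUB | | Mem[34+Regs[R2]] | Load2 | | |
| Add2 | Yes | ADD | | | Add1 | Load2 | |
| Add3 | No | | | | | | |
| Mult1 | Yes | MUL | | Regs[F4] | Load2 | | |
| Mult2 | Yes | DIV | | Mem[34+Regs[R2]] | Mult1 | | |

Register Status

| Field | F0 | F2 | F4 | F6 | F8 | F10 | F12 | …… | F30 |
|---|---|---|---|---|---|---|---|---|---|
| Qi | Mult1 | Load2 | | Add2 | Add1 | Mult2 | | | |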


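• An Example of Tomasulo’s Algorithm: when MUL.D is ready to write its result

Instruction Status

| Instruction | Issue | Execute | Write Result |
|---|---|---|---|
| L.D F6,34(R2) | x | x | x |
| L.D F2,45(R3) | x | x | x |
| MUL.D F0,F2,F4 | x | x | |
| SUB.D F8,F2,F6 | x | x | x |
| DIV.D F10,F0,F6 | x | | |
| ADD.D F6,F8,F2 | x | x | x |

Reservation Station

| Name | Busy | Op | Vj | Vk | Qj | Qk | Address(M) |
|---|---|---|---|---|---|---|---|
| Load1 | No | | | | | | |
| Load2 | No | | | | | | |
| Add1 | No | | | | | | |
| Add2 | No | | | | | | |
| Add3 | No | | | | | | |
| Mult1 | Yes | MUL | Mem[45+Regs[R3]] | Regs[F4] | | | |
| Mult2 | Yes | DIV | | Mem[34+Regs[R2]] | Mult1 | | |

Register Status

| Field | F0 | F2 | F4 | F6 | F8 | F10 | F12 | …… | F30 |
|---|---|---|---|---|---|---|---|---|---|
| Qi | Mult1 | | | | | Mult2 | | | |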


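• Another Example of Tomasulo’s Algorithm: multiplying an array by a scalar F2

Instruction Status

| Instruction | From Iteration | Issue | Execute | Write Result |
|---|---|---|---|---|
| L.D F0,0(R1) | 1 | x | x | |
| MUL.D F4,F0,F2 | 1 | x | | |
| S.D F4,0(R1) | 1 | x | | |
| L.D F0,0(R1) | 2 | x | x | |
| MUL.D F4,F0,F2 | 2 | x | | |
| S.D F4,0(R1) | 2 | x | | |

Reservation Station

| Name | Busy | Op | Vj | Vk | Qj | Qk | Address(M) |
|---|---|---|---|---|---|---|---|
| Load1 | Yes | Load | | | | | Regs[R1]+0 |
| Load2 | Yes | Load | | | | | Regs[R1]-8 |
| Add1 | No | | | | | | |
| Add2 | No | | | | | | |
| Add3 | No | | | | | | |
| Mult1 | Yes | MUL | | Regs[F2] | Load1 | | |
| Mult2 | Yes | MUL | | Regs[F2] | Load2 | | |
| Store1 | Yes | Store | Regs[R1] | | | Mult1 | |
| Store2 | Yes | Store | Regs[R1]-8 | | | Mult2 | |

Note: integer ALU ops (DADDUI R1,R1,-8 & BNE R1,R2,Loop) are ignored and branch is predicted taken.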


•

The Main Differences between Scoreboard & Tomasulo’s:

1. In Tomasulo, there is no need to check WAR and WAW (as must

be done in Scoreboard) due to renaming, in the form of reservation

station number (tag) and load/store buffer number (tag).

2. In Tomasulo pending result (source operand in case of RAW) is

obtained on CDB rather than from register file.

3. In Tomasulo loads and stores are treated as basic functional unit

operations.

4. In Tomasulo control is decentralized rather than centralized -- data

structures for hazard detection and resolution are attached to

reservation stations, register file, or load/store buffers, allowing

control decisions to be made locally at reservation stations, register

file, or load/store buffers.


•

Summary of the Tomasulo Method:

1. The order of load and store instructions is no longer important so

long as they do not refer to the same memory address. Otherwise

conflicts on memory locations are checked and resolved by the

store buffer for all items to be written.

2. There is a relatively high hardware cost:

a) associative search for matching in the reservation

stations

b) possible duplications of CDB to avoid bottleneck

3. Buffering source operands eliminates WAR hazards and implicit

renaming of registers in reservation stations eliminates both WAR

and WAW hazards.

4. Most attractive when compiler scheduling is hard or when there

are not enough registers.


• The Loop-Based Example:

    Loop: L.D    F0,0(R1)
          MUL.D  F4,F0,F2
          S.D    F4,0(R1)
          DADDUI R1,R1,-8
          L.D    F0,0(R1)
          MUL.D  F4,F0,F2
          S.D    F4,0(R1)
          BNE    R1,R2,Loop

– Once the system reaches the state

shown in the previous table, two

copies (iterations) of the loop could

be sustained with a CPI close to 1.0,

provided the multiplies could

complete in four clock cycles

• WAR and WAW hazards were

eliminated through dynamic renaming

of registers, via reservation stations

• A load and a store can safely be done

in a different order, provided they

access different addresses; otherwise

dynamic memory disambiguation is in

order:

– The processor must determine

whether a load can be executed at a

given time by matching addresses of

uncompleted, preceding stores;

– Similarly, a store must wait until

there are no unexecuted loads or

stores that are earlier in program

order and share the same address

• The single CDB in the Tomasulo

method can limit performance
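The dynamic memory disambiguation rule above (a load checks earlier uncompleted stores; a store checks earlier uncompleted loads and stores) can be sketched as an address comparison. This is a minimal illustration that assumes the effective addresses of all earlier pending operations are already known; `can_execute` is a hypothetical helper name.

```python
def can_execute(kind, addr, earlier_pending):
    """Decide whether a memory operation may access memory now.

    kind            -- "load" or "store"
    addr            -- effective address of this operation
    earlier_pending -- (kind, addr) pairs for uncompleted memory operations
                       that are earlier in program order
    """
    for earlier_kind, earlier_addr in earlier_pending:
        if earlier_addr != addr:
            continue                   # different address: no ordering needed
        if kind == "load" and earlier_kind == "store":
            return False               # the load would read a stale value
        if kind == "store":
            return False               # the store would pass a load or store
    return True


pending = [("store", 0x100), ("load", 0x200)]
```

Two loads to the same address may proceed in any order, which is why a load only compares against earlier stores.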


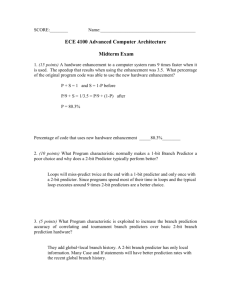

• Dynamic Hardware Branch Prediction: control dependences rapidly

become the limiting factor as the amount of ILP to be exploited increases,

which is particularly true when multiple instructions are to be issued per

cycle.

– Basic Branch Prediction and Branch-Prediction Buffers

» A small memory indexed by the lower portion of the address of the branch

instruction, containing a bit that says whether the branch was recently taken or

not – simple, and useful only when the branch delay is longer than the time to

calculate the target address

» The prediction bit is inverted each time there is a wrong prediction, which causes an accuracy problem (a loop branch is mispredicted twice); a remedy: the 2-bit predictor, a special case of the n-bit predictor (saturating counter), which performs well (accuracy: 82% to 99%)

[Figure: state diagram of the 2-bit prediction scheme with four states: 11 (predict taken), 10 (predict taken), 01 (predict not taken) and 00 (predict not taken); the edges between states are labeled Taken and Not taken.]
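The benefit of the second bit shows up on a loop branch. The sketch below compares a 1-bit predictor with a 2-bit saturating counter on a branch that is taken nine times and then falls through, repeated three times. It assumes a plain saturating counter (states 0 to 3, predict taken when the counter is 2 or 3), which may differ in its transitions from the diagram on the slide; both function names are hypothetical.

```python
def mispredictions_1bit(outcomes, last_taken=True):
    """1-bit scheme: predict whatever the branch did last time."""
    wrong = 0
    for taken in outcomes:
        if last_taken != taken:
            wrong += 1
        last_taken = taken
    return wrong


def mispredictions_2bit(outcomes, counter=3):
    """2-bit saturating counter: 0..3, predict taken when counter >= 2."""
    wrong = 0
    for taken in outcomes:
        if (counter >= 2) != taken:
            wrong += 1
        counter = min(3, counter + 1) if taken else max(0, counter - 1)
    return wrong


loop_branch = ([True] * 9 + [False]) * 3   # three executions of a 10-trip loop
one_bit = mispredictions_1bit(loop_branch)
two_bit = mispredictions_2bit(loop_branch)
```

The 1-bit scheme misses both the loop exit and the next loop entry, while the 2-bit counter misses only the exit.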


• Dynamic Hardware Branch Prediction:

– Correlating Branch Predictors

    if (aa==2)
        aa=0;
    if (bb==2)
        bb=0;
    if (aa!=bb){

Assign aa and bb to registers R1 and R2:

        DSUBUI R3,R1,#2
        BNEZ   R3,L1        ;branch b1 (aa!=2)
        DADD   R1,R0,R0     ;aa=0
    L1: DSUBUI R3,R2,#2
        BNEZ   R3,L2        ;branch b2 (bb!=2)
        DADD   R2,R0,R0     ;bb=0
    L2: DSUBU  R3,R1,R2     ;R3=aa-bb
        BEQZ   R3,L3        ;branch b3 (aa==bb)

» The behavior of branch b3 is correlated with the behavior of branches b1 and b2 (if b1 and b2 are both not taken, b3 will be taken); a predictor that uses only the behavior of a single branch to predict the outcome of that branch can never capture this behavior.

» Branch predictors that use the behavior of other branches to make

prediction are called correlating predictors or two-level predictors.


• Dynamic Hardware Branch Prediction:

– Correlating Branch Predictors

    if (d==0)
        d=1;
    if (d==1)

Assign d to register R1:

        BNEZ   R1,L1        ;branch b1 (d!=0)
        DADDIU R1,R0,#1     ;d==0, so d=1
    L1: DADDIU R3,R1,#-1
        BNEZ   R3,L2        ;branch b2 (d!=1)
        …
    L2:

| Initial value of d | d==0? | b1 | Value of d before b2 | d==1? | b2 |
|---|---|---|---|---|---|
| 0 | Yes | Not taken | 1 | Yes | Not taken |
| 1 | No | Taken | 1 | Yes | Not taken |
| 2 | No | Taken | 2 | No | Taken |

Behavior of a 1-bit Standard Predictor Initialized to Not Taken (100% wrong prediction)

| d=? | b1 prediction | b1 action | New b1 prediction | b2 prediction | b2 action | New b2 prediction |
|---|---|---|---|---|---|---|
| 2 | NT | T | T | NT | T | T |
| 0 | T | NT | NT | T | NT | NT |
| 2 | NT | T | T | NT | T | T |
| 0 | T | NT | NT | T | NT | NT |
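The 100% misprediction rate can be reproduced with a short simulation. This is a sketch under the slide's assumptions (d alternates between 2 and 0, both predictors start at not taken); `branch_outcomes` and `simulate_1bit` are hypothetical helper names.

```python
def branch_outcomes(d):
    """Return (b1_taken, b2_taken) for the code fragment above."""
    b1_taken = d != 0          # b1: BNEZ R1,L1
    if d == 0:
        d = 1
    b2_taken = d != 1          # b2: BNEZ R3,L2 with R3 = d - 1
    return b1_taken, b2_taken


def simulate_1bit(d_values):
    """One prediction bit per branch, initialized to not taken."""
    prediction = {"b1": False, "b2": False}
    wrong = total = 0
    for d in d_values:
        for name, taken in zip(("b1", "b2"), branch_outcomes(d)):
            total += 1
            if prediction[name] != taken:
                wrong += 1
            prediction[name] = taken   # 1-bit update: remember last outcome
    return wrong, total


wrong, total = simulate_1bit([2, 0, 2, 0])
```

Every one of the eight predictions is wrong, because each branch alternates and the single bit always reflects the previous, opposite outcome.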


• Dynamic Hardware Branch Prediction:

– Correlating Branch Predictors

» The standard predictor mispredicted all branches!

» A 1-bit correlation predictor uses two bits, one bit for the last branch being not

taken and the other bit for taken (in general the last branch executed is not the

same instruction as the branch being predicted).

| The 2 Prediction bits (p1/p2) | Prediction if last branch not taken (p1) | Prediction if last branch taken (p2) |
|---|---|---|
| NT/NT | NT | NT |
| NT/T | NT | T |
| T/NT | T | NT |
| T/T | T | T |

The Action of the 1-bit Predictor with 1-bit correlation, Initialized to Not Taken/Not Taken

| d=? | b1 prediction | b1 action | New b1 prediction | b2 prediction | b2 action | New b2 prediction |
|---|---|---|---|---|---|---|
| 2 | NT/NT | T | T/NT | NT/NT | T | NT/T |
| 0 | T/NT | NT | T/NT | NT/T | NT | NT/T |
| 2 | T/NT | T | T/NT | NT/T | T | NT/T |
| 0 | T/NT | NT | T/NT | NT/T | NT | NT/T |
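The table can be reproduced by giving each branch two prediction bits and selecting between them with the outcome of the most recently executed branch. This is a sketch that assumes the global "last branch" history starts as not taken, which is what the first row of the table implies; the helper names are hypothetical.

```python
def branch_outcomes(d):
    """Return (b1_taken, b2_taken) for the d==0 / d==1 code fragment."""
    b1_taken = d != 0
    if d == 0:
        d = 1
    b2_taken = d != 1
    return b1_taken, b2_taken


def simulate_1_1(d_values):
    # Per branch: [prediction if last branch not taken, prediction if taken]
    bits = {"b1": [False, False], "b2": [False, False]}
    last_taken = False                  # assumed initial global history
    wrong = 0
    for d in d_values:
        for name, taken in zip(("b1", "b2"), branch_outcomes(d)):
            index = int(last_taken)     # the history selects one of the bits
            if bits[name][index] != taken:
                wrong += 1
            bits[name][index] = taken   # only the selected bit is updated
            last_taken = taken
    return wrong, bits


wrong, bits = simulate_1_1([2, 0, 2, 0])
```

The only two mispredictions occur in the first iteration, and the final bits are T/NT for b1 and NT/T for b2, matching the last row of the table.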


• Dynamic Hardware Branch Prediction:

– Correlating Branch Predictors

» With the 1-bit correlation predictor, also called a (1,1) predictor, the only

misprediction is on the first iteration!

» In the general case an (m,n) predictor uses the behavior of the last m branches to choose from $2^m$ branch predictors, each of which is an n-bit predictor for a single branch.

» The number of bits in an (m,n) predictor is: $2^m \times n \times$ (number of prediction entries selected by the branch address)

[Figure: a (2,2) predictor. Four bits of the branch address select a row of 2-bit per-branch predictors, and the 2-bit global branch history selects one predictor within that row, which supplies the prediction.]
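The storage formula is easy to evaluate directly. In the sketch below `predictor_bits` is a hypothetical helper, and the two configurations are illustrative values chosen to show that a (2,2) predictor with 1K entries costs the same storage as a plain 2-bit predictor with 4K entries.

```python
def predictor_bits(m: int, n: int, entries: int) -> int:
    """Total state bits of an (m,n) predictor: 2^m * n * entries."""
    return (2 ** m) * n * entries


plain_2bit = predictor_bits(0, 2, 4096)    # no global history, 4K entries
correlating = predictor_bits(2, 2, 1024)   # 2 bits of history, 1K entries
```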


• Dynamic Hardware Branch Prediction:

– Performance of Correlating Branch Predictors


• Dynamic Hardware Branch Prediction:

– Tournament Predictors: Adaptively Combining Local and Global Predictors

» Takes the insight that adding global information to local predictors helps

improve performance to the next level, by

• Using multiple predictors, usually one based on global information and one based

on local information, and

• Combining them with a selector

» Better accuracy at medium sizes (8K bits – 32K bits) and more effective use of

very large numbers of prediction bits: the right predictor for the right branch

» Existing tournament predictors use a 2-bit saturating counter per branch to choose between two different predictors. The counter is incremented whenever the “predicted” predictor is correct and the other predictor is incorrect, and it is decremented in the reverse situation.

[Figure: state transition diagram of the 2-bit selector. It has four states, two labeled “Use predictor 1” and two labeled “Use predictor 2”; each edge is labeled with pairs such as 0/0, 0/1, 1/0 and 1/1 that indicate whether predictor 1 / predictor 2 was correct.]
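The selector update can be sketched as a saturating counter that moves only when the two predictors disagree in correctness. The encoding below (values 2 and 3 mean "use predictor 1", values 0 and 1 mean "use predictor 2") is an assumption made for illustration, as are the names `update_selector` and `chosen_predictor`.

```python
def update_selector(counter: int, p1_correct: bool, p2_correct: bool) -> int:
    """2-bit saturating selector; it moves toward the predictor that was right."""
    if p1_correct and not p2_correct:      # label 1/0
        return min(3, counter + 1)
    if p2_correct and not p1_correct:      # label 0/1
        return max(0, counter - 1)
    return counter                         # labels 0/0 and 1/1: no change


def chosen_predictor(counter: int) -> int:
    return 1 if counter >= 2 else 2


state = 3                                   # strongly "use predictor 1"
state = update_selector(state, False, True)
after_one_miss = chosen_predictor(state)    # still predictor 1
state = update_selector(state, False, True)
after_two_misses = chosen_predictor(state)  # now predictor 2
```

Two consecutive wins by the other predictor are needed before the selection flips, which mirrors the hysteresis of the 2-bit branch predictor.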


• Dynamic Hardware Branch Prediction:

– Performance of Tournament Predictors:

Prediction due to local predictor

Misprediction rate of 3 different predictors


• Dynamic Hardware Branch Prediction:

– The Alpha 21264 Branch Predictor:

» 4K 2-bit saturating counters indexed by the local branch address to choose

from among:

• A Global Predictor that has

– 4K entries that are indexed by the history of the last 12 branches;

– Each entry is a standard 2-bit predictor

• A Local Predictor that consists of a two-level predictor

– At the top level is a local history table consisting of 1024 10-bit entries, with

each entry corresponding to the most recent 10 branch outcomes for the entry;

– At the bottom level is a table of 1K entries, indexed by the 10-bit entry of the

top level, consisting of 3-bit saturating counters which provide the local

prediction

» It uses a total of 29K bits for branch prediction, resulting in very high

accuracy: 1 misprediction in 1000 for SPECfp95 and 11.5 in 1000 for

SPECint95
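The 29K-bit total can be checked by adding up the four tables described above. The variable names in this sketch are hypothetical; the sizes are the ones listed on the slide.

```python
K = 1024

selector_bits = 4 * K * 2          # 4K 2-bit selector counters
global_bits = 4 * K * 2            # 4K entries, each a 2-bit predictor
local_history_bits = 1024 * 10     # 1024 local history entries of 10 bits
local_counter_bits = 1 * K * 3     # 1K 3-bit saturating counters

total_bits = (selector_bits + global_bits
              + local_history_bits + local_counter_bits)
```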


• High-Performance Instruction Delivery:

– Branch-Target Buffers

» Branch-prediction cache that stores the predicted address for the next

instruction after a branch:

• Predicting the next instruction address before decoding the current instruction!

• Accessing the target buffer during the IF stage using the instruction address of the

fetched instruction (a possible branch) to index the buffer.

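[Figure: a branch-target buffer. The PC of the instruction to fetch is looked up against the stored instruction addresses (the table height is the number of entries in the branch-target buffer); each entry holds a predicted PC and, optionally, whether the branch is predicted taken or untaken. If the lookup matches (Yes), the instruction is a taken branch and the predicted PC should be used as the next PC; if it does not match (No), the instruction is not predicted to be a branch and fetch proceeds normally.]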
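The lookup and update behavior of a branch-target buffer can be sketched with a dictionary keyed by instruction address. This is a simplified model: a real buffer has a fixed number of entries indexed by part of the PC, while this hypothetical `BranchTargetBuffer` class is unbounded and assumes fixed 4-byte instructions.

```python
class BranchTargetBuffer:
    """Maps the address of a taken branch to its predicted target."""

    def __init__(self):
        self.entries = {}

    def predict_next_pc(self, pc, instruction_size=4):
        # Hit: treat as a taken branch and fetch from the stored target.
        # Miss: not predicted to be a branch, so fetch falls through.
        return self.entries.get(pc, pc + instruction_size)

    def update(self, pc, taken, target=None):
        if taken:
            self.entries[pc] = target      # enter or refresh the entry
        else:
            self.entries.pop(pc, None)     # a not-taken branch loses its entry


btb = BranchTargetBuffer()
first_guess = btb.predict_next_pc(0x1000)          # miss: sequential fetch
btb.update(0x1000, taken=True, target=0x2000)
second_guess = btb.predict_next_pc(0x1000)         # hit: predicted target
btb.update(0x1000, taken=False)
third_guess = btb.predict_next_pc(0x1000)          # entry deleted again
```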


• Handling branch-target buffers:

[Figure: flowchart of the steps in handling an instruction with a branch-target buffer.
IF: send PC to memory and branch-target buffer; is an entry found in the branch-target buffer?
– No entry found: in ID, is the instruction a taken branch? If no, normal instruction execution (0 cycle penalty). If yes, in EX enter the branch instruction address and next PC into the branch-target buffer (2 cycle penalty).
– Entry found: in ID, send out the predicted PC; in EX, is the branch taken? If yes, the branch is correctly predicted; continue execution with no stalls (0 cycle penalty). If no, the branch is mispredicted; kill the fetched instruction, restart fetch at the other target, and delete the entry from the target buffer (2 cycle penalty).]

• Integrated Instruction Fetch Units: to meet the demands of multiple-issue processors, recent designs have used an integrated instruction fetch unit that integrates several functions:

– Integrated branch prediction – the branch predictor becomes part of the instruction fetch unit and is constantly predicting branches, so as to drive the fetch pipeline

– Instruction prefetch – to deliver multiple instructions per clock, the instruction fetch unit will likely need to fetch ahead, autonomously managing the prefetching of instructions and integrating it with branch prediction

– Instruction memory access and buffering – encapsulates the complexity of fetching multiple instructions per clock, trying to hide the cost of crossing cache blocks, and provides buffering, acting as an on-demand unit to provide instructions to the issue stage as needed and in the quantity needed


• Taking Advantage of More ILP with Multiple Issue

– Superscalar: issue varying numbers of instructions per cycle that are either statically scheduled (using compiler techniques, thus in-order execution) or dynamically scheduled (using techniques based on Tomasulo’s algorithm, thus out-of-order execution);

– VLIW (very long instruction word): issue a fixed number of instructions formatted

either as one large instruction or as a fixed instruction packet with the parallelism

among instructions explicitly indicated by the instruction (hence, they are also

known as EPIC, explicitly parallel instruction computers). VLIW and EPIC

processors are inherently statically scheduled by the compiler.

| Common Name | Issue Structure | Hazard Detection | Scheduling | Distinguishing Characteristics | Examples |
|---|---|---|---|---|---|
| Superscalar (static) | Dynamic (IS packet <= 8) | Hardware | Static | In-order execution | Sun UltraSPARC II/III |
| Superscalar (dynamic) | Dynamic (split&piped) | Hardware | Dynamic | Some out-of-order execution | IBM Power2 |
| Superscalar (speculative) | Dynamic | Hardware | Dynamic with speculation | Out-of-order execution with speculation | Pentium III/4, MIPS R10K, Alpha 21264, HP PA 8500, IBM RS64III |
| VLIW/LIW | Static | Software | Static | No hazards between issue packets | Trimedia, i860 |
| EPIC | Mostly static | Mostly software | Mostly static | Explicit dependences marked by compiler | Itanium |


• Taking Advantage of More ILP with Multiple Issue

– Multiple Instruction Issue with Dynamic Scheduling: dual-issue with Tomasulo’s

| Iteration No. | Instructions | Issues at | Executes | Mem Access | Write CDB | Comments |
|---|---|---|---|---|---|---|
| 1 | L.D F0,0(R1) | 1 | 2 | 3 | 4 | First issue |
| 1 | ADD.D F4,F0,F2 | 1 | 5 | | 8 | Wait for L.D |
| 1 | S.D F4,0(R1) | 2 | 3 | 9 | | Wait for ADD.D |
| 1 | DADDIU R1,R1,#-8 | 2 | 4 | | 5 | Wait for ALU |
| 1 | BNE R1,R2,Loop | 3 | 6 | | | Wait for DADDIU |
| 2 | L.D F0,0(R1) | 4 | 7 | 8 | 9 | Wait for BNE complete |
| 2 | ADD.D F4,F0,F2 | 4 | 10 | | 13 | Wait for L.D |
| 2 | S.D F4,0(R1) | 5 | 8 | 14 | | Wait for ADD.D |
| 2 | DADDIU R1,R1,#-8 | 5 | 9 | | 10 | Wait for ALU |
| 2 | BNE R1,R2,Loop | 6 | 11 | | | Wait for DADDIU |
| 3 | L.D F0,0(R1) | 7 | 12 | 13 | 14 | Wait for BNE complete |
| 3 | ADD.D F4,F0,F2 | 7 | 15 | | 18 | Wait for L.D |
| 3 | S.D F4,0(R1) | 8 | 13 | 19 | | Wait for ADD.D |
| 3 | DADDIU R1,R1,#-8 | 8 | 14 | | 15 | Wait for ALU |
| 3 | BNE R1,R2,Loop | 9 | 16 | | | Wait for DADDIU |


• Taking Advantage of More ILP with Multiple Issue: resource usage

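| Clock number | Integer ALU | FP ALU | Data cache | CDB | Comments |
|---|---|---|---|---|---|
| 2 | 1/L.D | | | | |
| 3 | 1/S.D | | 1/L.D | | |
| 4 | 1/DADDIU | | | 1/L.D | |
| 5 | | 1/ADD.D | | 1/DADDIU | |
| 6 | | | | | |
| 7 | 2/L.D | | | | |
| 8 | 2/S.D | | 2/L.D | 1/ADD.D | |
| 9 | 2/DADDIU | | 1/S.D | 2/L.D | |
| 10 | | 2/ADD.D | | 2/DADDIU | |
| 11 | | | | | |
| 12 | 3/L.D | | | | |
| 13 | 3/S.D | | 3/L.D | 2/ADD.D | |
| 14 | 3/DADDIU | | 2/S.D | 3/L.D | |
| 15 | | 3/ADD.D | | 3/DADDIU | |
| 16 | | | | | |
| 17 | | | | | |
| 18 | | | | 3/ADD.D | |
| 19 | | | 3/S.D | | |
| 20 | | | | | |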


• Taking Advantage of More ILP with Multiple Issue

– Multiple Instruction Issue with Dynamic Scheduling: + an adder and a CDB

| Iteration No. | Instructions | Issues at | Executes | Mem Access | Write CDB | Comments |
|---|---|---|---|---|---|---|
| 1 | L.D F0,0(R1) | 1 | 2 | 3 | 4 | First issue |
| 1 | ADD.D F4,F0,F2 | 1 | 5 | | 8 | Wait for L.D |
| 1 | S.D F4,0(R1) | 2 | 3 | 9 | | Wait for ADD.D |
| 1 | DADDIU R1,R1,#-8 | 2 | 3 | | 4 | Executes earlier |
| 1 | BNE R1,R2,Loop | 3 | 5 | | | Wait for DADDIU |
| 2 | L.D F0,0(R1) | 4 | 6 | 7 | 8 | Wait for BNE complete |
| 2 | ADD.D F4,F0,F2 | 4 | 9 | | 12 | Wait for L.D |
| 2 | S.D F4,0(R1) | 5 | 7 | 13 | | Wait for ADD.D |
| 2 | DADDIU R1,R1,#-8 | 5 | 6 | | 7 | Executes earlier |
| 2 | BNE R1,R2,Loop | 6 | 8 | | | Wait for DADDIU |
| 3 | L.D F0,0(R1) | 7 | 9 | 10 | 11 | Wait for BNE complete |
| 3 | ADD.D F4,F0,F2 | 7 | 12 | | 15 | Wait for L.D |
| 3 | S.D F4,0(R1) | 8 | 10 | 16 | | Wait for ADD.D |
| 3 | DADDIU R1,R1,#-8 | 8 | 9 | | 10 | Executes earlier |
| 3 | BNE R1,R2,Loop | 9 | 11 | | | Wait for DADDIU |


• Taking Advantage of More ILP with Multiple Issue: more resource

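| Clock number | Integer ALU | Address adder | FP ALU | Data cache | CDB#1 | CDB#2 |
|---|---|---|---|---|---|---|
| 2 | | 1/L.D | | | | |
| 3 | 1/DADDIU | 1/S.D | | 1/L.D | | |
| 4 | | | | | 1/L.D | 1/DADDIU |
| 5 | | | 1/ADD.D | | | |
| 6 | 2/DADDIU | 2/L.D | | | | |
| 7 | | 2/S.D | | 2/L.D | 2/DADDIU | |
| 8 | | | | | 1/ADD.D | 2/L.D |
| 9 | 3/DADDIU | 3/L.D | 2/ADD.D | 1/S.D | | |
| 10 | | 3/S.D | | 3/L.D | 3/DADDIU | |
| 11 | | | | | 3/L.D | |
| 12 | | | 3/ADD.D | | 2/ADD.D | |
| 13 | | | | 2/S.D | | |
| 14 | | | | | | |
| 15 | | | | | 3/ADD.D | |
| 16 | | | | 3/S.D | | |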


• Hardware-Based Speculation

– One of the main factors limiting the performance of the previous two-issue

dynamically scheduled pipeline is the control hazard which prevented the

instruction following a branch from starting, causing one-cycle penalty on every

loop iteration.

– Branch prediction, while it reduces the direct stalls attributable to branches, may not be sufficient to generate the desired amount of instruction-level parallelism for a multi-issue pipeline.

– Hardware speculation extends branch prediction with dynamic scheduling by

speculating on the outcome of branches and executing the program as if our guesses

were correct, that is, we fetch, issue and execute instructions in hardware

speculation. Dynamic scheduling only fetches and issues such instructions.

– Hardware-based speculation combines three key ideas:

» Dynamic branch prediction to choose which instructions to execute;

» Speculation to allow the execution of instructions before the control

dependences are resolved (with the ability to undo the effects of an incorrect

speculated sequence); and

» Dynamic scheduling to deal with the scheduling of different combinations of

basic blocks.

– Data flow execution – follows the predicted flow of data values to choose when to

execute instructions – operations execute as soon as their operands are available!


• Hardware-Based Speculation:

– A speculated execution allows an instruction to complete execution and bypass its results to other instructions, without allowing the instruction to perform any updates that cannot be undone until the instruction is no longer speculative, at which point the instruction commits by updating registers and memory. Pre-commit values are stored in the reorder buffer (ROB).

MIPS FP Unit using Tomasulo’s Algorithm and Extended to Handle Speculation

1. Issue/Dispatch: Get an instruction from the queue. Issue if a reservation station (R.S.) and a ROB slot are available; send operands to the R.S. from either registers or ROB; otherwise stall.

2. Execute: when both operands’ values are available in the R.S., execute; otherwise monitor the CDB, checking for RAW hazards.

3. Write Result: write the result on the CDB, and from the CDB into the ROB and any R.S. waiting for this result.

4. Commit: 3 sequences of actions –

» Normal – instruction reaches the head of ROB and result is present in ROB, updates the register and removes the instruction

» Store – similar to normal except memory is updated

» Incorrect branch prediction – ROB is flushed and execution is restarted at the correct successor of the branch.
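The in-order commit step can be sketched as a queue whose head is the only entry allowed to update architectural state. This is a simplified illustration that omits reservation stations, the CDB and fetch redirection; the `ReorderBuffer` class and its method names are hypothetical.

```python
from collections import deque


class ReorderBuffer:
    def __init__(self):
        self.entries = deque()         # oldest instruction at the left

    def issue(self, dest, kind="alu"):
        entry = {"kind": kind, "dest": dest, "value": None,
                 "ready": False, "mispredicted": False}
        self.entries.append(entry)
        return entry

    def write_result(self, entry, value, mispredicted=False):
        entry.update(value=value, ready=True, mispredicted=mispredicted)

    def commit(self, regs, memory):
        """Commit at most one instruction from the head, in program order."""
        if not self.entries or not self.entries[0]["ready"]:
            return False               # head not finished: nothing may commit
        head = self.entries.popleft()
        if head["kind"] == "branch":
            if head["mispredicted"]:
                self.entries.clear()   # flush all speculative work
        elif head["kind"] == "store":
            memory[head["dest"]] = head["value"]
        else:
            regs[head["dest"]] = head["value"]
        return True
```

Because results leave the buffer only from the head, an instruction that finishes early cannot change registers or memory before every earlier instruction has committed, which is what keeps exceptions precise.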


• An Example of Hardware-Based Speculation: status as Mult is about to commit

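Reservation Station

| Name | Busy | Op | Vj | Vk | Qj | Qk | Dest | A |
|---|---|---|---|---|---|---|---|---|
| Load1 | No | | | | | | | |
| Load2 | No | | | | | | | |
| Add1 | No | | | | | | | |
| Add2 | No | | | | | | | |
| Add3 | No | | | | | | | |
| Mult1 | No | MUL.D | Mem[45+Regs[R3]] | Regs[F4] | | | #3 | |
| Mult2 | Yes | DIV.D | | Mem[34+Regs[R2]] | #3 | | #5 | |

Reorder Buffer

| Entry | Busy | Instruction | State | Destination | Value |
|---|---|---|---|---|---|
| 1 | No | L.D F6,34(R2) | Commit | F6 | Mem[34+Regs[R2]] |
| 2 | No | L.D F2,45(R3) | Commit | F2 | Mem[45+Regs[R3]] |
| 3 | Yes | MUL.D F0,F2,F4 | Write result | F0 | #2 x Regs[F4] |
| 4 | Yes | SUB.D F8,F6,F2 | Write result | F8 | #1 - #2 |
| 5 | Yes | DIV.D F10,F0,F6 | Execute | F10 | |
| 6 | Yes | ADD.D F6,F8,F2 | Write result | F6 | #4 + #2 |

FP Register Status

| Field | F0 | F1 | F2 | …… | F5 | F6 | F7 | F8 | F10 |
|---|---|---|---|---|---|---|---|---|---|
| Reorder # | 3 | | | | | 6 | | 4 | 5 |
| Busy | Yes | No | No | No | No | Yes | … | Yes | Yes |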


• Another Example of Hardware-Based Speculation: dynamic loop unrolling

    Loop: L.D    F0,0(R1)
          MUL.D  F4,F0,F2
          S.D    F4,0(R1)
          DADDIU R1,R1,#-8
          BNE    R1,R2,Loop

Reorder Buffer

| Entry | Busy | Instruction | State | Destination | Value |
|---|---|---|---|---|---|
| 1 | No | L.D F0,0(R1) | Commit | F0 | Mem[0+Regs[R1]] |
| 2 | No | MUL.D F4,F0,F2 | Commit | F4 | #1 x Regs[F2] |
| 3 | Yes | S.D F4,0(R1) | Write result | 0 + Regs[R1] | #2 |
| 4 | Yes | DADDIU R1,R1,#-8 | Write result | R1 | Regs[R1] - 8 |
| 5 | Yes | BNE R1,R2,Loop | Write result | | |
| 6 | Yes | L.D F0,0(R1) | Write result | F0 | Mem[#4] |
| 7 | Yes | MUL.D F4,F0,F2 | Write result | F4 | #6 x Regs[F2] |
| 8 | Yes | S.D F4,0(R1) | Write result | 0 + #4 | #7 |
| 9 | Yes | DADDIU R1,R1,#-8 | Write result | R1 | #4 - 8 |
| 10 | Yes | BNE R1,R2,Loop | Write result | | |

FP Register Status

| Field | F0 | F1 | F2 | F3 | F4 | F5 | F6 | F7 | F8 |
|---|---|---|---|---|---|---|---|---|---|
| Reorder # | 6 | | | | 7 | | | | |
| Busy | Yes | No | No | No | Yes | No | No | … | No |


• Multiple Issue with Speculation: without speculation

    Loop: L.D    R2,0(R1)       ;R2=array element
          DADDIU R2,R2,#1       ;increment R2
          S.D    R2,0(R1)       ;store result
          DADDIU R1,R1,#4       ;increment pointer
          BNE    R2,R3,Loop     ;branch if not last element

| Iteration No. | Instructions | Issues at | Executes | Mem Access | Write CDB | Comments |
|---|---|---|---|---|---|---|
| 1 | L.D R2,0(R1) | 1 | 2 | 3 | 4 | First issue |
| 1 | DADDIU R2,R2,#1 | 1 | 5 | | 6 | Wait for L.D |
| 1 | S.D R2,0(R1) | 2 | 3 | 7 | | Wait for DADDIU |
| 1 | DADDIU R1,R1,#4 | 2 | 3 | | 4 | Executes directly |
| 1 | BNE R2,R3,Loop | 3 | 7 | | | Wait for DADDIU |
| 2 | L.D R2,0(R1) | 4 | 8 | 9 | 10 | Wait for BNE |
| 2 | DADDIU R2,R2,#1 | 4 | 11 | | 12 | Wait for L.D |
| 2 | S.D R2,0(R1) | 5 | 9 | 13 | | Wait for DADDIU |
| 2 | DADDIU R1,R1,#4 | 5 | 8 | | 9 | Wait for BNE |
| 2 | BNE R2,R3,Loop | 6 | 13 | | | Wait for DADDIU |
| 3 | L.D R2,0(R1) | 7 | 14 | 15 | 16 | Wait for BNE |
| 3 | DADDIU R2,R2,#1 | 7 | 17 | | 18 | Wait for L.D |
| 3 | S.D R2,0(R1) | 8 | 15 | 19 | | Wait for DADDIU |
| 3 | DADDIU R1,R1,#4 | 8 | 14 | | 15 | Wait for BNE |
| 3 | BNE R2,R3,Loop | 9 | 19 | | | Wait for DADDIU |


• Multiple Issue with Speculation: with speculation

    Loop: L.D    R2,0(R1)       ;R2=array element
          DADDIU R2,R2,#1       ;increment R2
          S.D    R2,0(R1)       ;store result
          DADDIU R1,R1,#4       ;increment pointer
          BNE    R2,R3,Loop     ;branch if not last element

| Iteration No. | Instructions | Issues at | Executes | Read Access | Write CDB | Commit | Comments |
|---|---|---|---|---|---|---|---|
| 1 | L.D R2,0(R1) | 1 | 2 | 3 | 4 | 5 | First issue |
| 1 | DADDIU R2,R2,#1 | 1 | 5 | | 6 | 7 | Wait for L.D |
| 1 | S.D R2,0(R1) | 2 | 3 | | | 7 | Wait for DADDIU |
| 1 | DADDIU R1,R1,#4 | 2 | 3 | | 4 | 8 | Commit in order |
| 1 | BNE R2,R3,Loop | 3 | 7 | | | 8 | Wait for DADDIU |
| 2 | L.D R2,0(R1) | 4 | 5 | 6 | 7 | 9 | No execute delay |
| 2 | DADDIU R2,R2,#1 | 4 | 8 | | 9 | 10 | Wait for L.D |
| 2 | S.D R2,0(R1) | 5 | 6 | | | 10 | Wait for DADDIU |
| 2 | DADDIU R1,R1,#4 | 5 | 6 | | 7 | 11 | Commit in order |
| 2 | BNE R2,R3,Loop | 6 | 10 | | | 11 | Wait for DADDIU |
| 3 | L.D R2,0(R1) | 7 | 8 | 9 | 10 | 12 | Earliest possible |
| 3 | DADDIU R2,R2,#1 | 7 | 11 | | 12 | 13 | Wait for L.D |
| 3 | S.D R2,0(R1) | 8 | 9 | | | 13 | Wait for DADDIU |
| 3 | DADDIU R1,R1,#4 | 8 | 9 | | 10 | 14 | Execute earlier |
| 3 | BNE R2,R3,Loop | 9 | 13 | | | 14 | Wait for DADDIU |

Scoreboard Snapshot 1

Scoreboard Snapshot 2

Scoreboard Snapshot 3

Tomasulo Snapshot 1

Tomasulo Snapshot 2

Scoreboard – Centralized Control

Prediction Accuracy of a 4096-entry 2-bit Prediction Buffer for a SPEC89 Benchmark

Prediction Accuracy of a 4096-entry 2-bit Prediction Buffer vs. an Infinite Buffer for a SPEC89 Benchmark